实验一:决策树算法实验

实验一:决策树算法实验

姓名:许珂

学号:201613344

【实验目的】

- 理解决策树算法原理,掌握决策树算法框架;

- 理解决策树学习算法的特征选择、树的生成和树的剪枝;

- 能根据不同的数据类型,选择不同的决策树算法;

- 针对特定应用场景及数据,能应用决策树算法解决实际问题。

【实验内容】

- 设计算法实现熵、经验条件熵、信息增益等方法。

- 针对给定的房贷数据集(数据集表格见附录1)实现ID3算法。

- 熟悉sklearn库中的决策树算法;

- 针对iris数据集,应用sklearn的决策树算法进行类别预测。

【实验报告要求】

- 对照实验内容,撰写实验过程、算法及测试结果;

- 代码规范化:命名规则、注释;

- 查阅文献,讨论ID3、5算法的应用场景;

- 查询文献,分析决策树剪枝策略。

实验代码

1.设计算法实现熵、经验条件熵、信息增益等办法:

1 #导入本次实验所需要的包

2 import numpy as np

3 import 4 import matplotlib.pyplot as plt

5 %matplotlib inline

6 from sklearn.datasets import load_iris

7 from sklearn.model_selection import train_test_split

8 from collections import Counter

9 import math

10 from math import log

11 import pprint

#数据集和分类属性

def create_data():

datasets = [['青年', '否', '否', '一般', '否'],

['青年', '否', '否', '好', '否'],

['青年', '是', '否', '好', '是'],

['青年', '是', '是', '一般', '是'],

['青年', '否', '否', '一般', '否'],

['中年', '否', '否', '一般', '否'],

['中年', '否', '否', '好', '否'],

['中年', '是', '是', '好', '是'],

['中年', '否', '是', '非常好', '是'],

['中年', '否', '是', '非常好', '是'],

['老年', '否', '是', '非常好', '是'],

['老年', '否', '是', '好', '是'],

['老年', '是', '否', '好', '是'],

['老年', '是', '否', '非常好', '是'],

['老年', '否', '否', '一般', '否'],]

labels = [u'年龄', u'有工作', u'有自己的房子', u'信贷情况', u'类别']

# 返回数据集和每个维度的名称

return datasets, labels

1 datasets, labels = create_data()

2 train_data = pd.DataFrame(datasets, columns=labels)

3 train_data

运行结果:

计算数据集的熵

1 def calc_ent(datasets):

2 data_length = len(datasets)

3 label_count = {}

4 for i in range(data_length):

5 label = datasets[i][-1]

6 if label not in label_count:

7 label_count[label] = 0

8 label_count[label] += 1

9 ent = -sum([(p / data_length) * log(p / data_length, 2)

10 for p in label_count.values()])

11 return ent

12

13

14 ent = calc_ent(datasets)

15 ent

16 #def entropy(y):

17 #Entropy of a label sequence

18 #"""

19 #hist = np.bincount(y)

20 #ps = hist / np.sum(hist)

21 #return -np.sum([p * np.log2(p) for p in ps if p > 0])

运行结果:

计算经验条件熵

1 def cond_ent(datasets, axis=0):

2 data_length = len(datasets)

3 feature_sets = {}

4 for i in range(data_length):

5 feature = datasets[i][axis]

6 if feature not in feature_sets:

7 feature_sets[feature] = []

8 feature_sets[feature].append(datasets[i])

9 cond_ent = sum([(len(p) / data_length) * calc_ent(p) for p in feature_sets.values()])

10 return cond_ent

11

12 cond_ent = cond_ent(datasets)

13 cond_ent

运行结果:

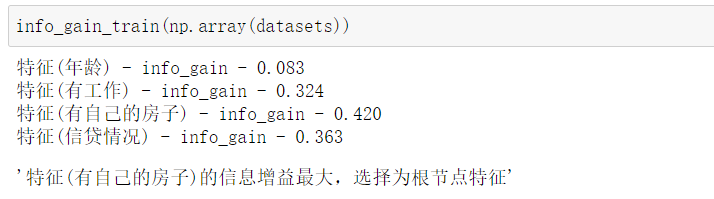

计算数据集的信息增益:

1 def info_gain(ent, cond_ent):

2 return ent - cond_ent

3 def info_gain_train(datasets):

4 count = len(datasets[0]) - 1

5 ent = calc_ent(datasets)

6

7 #ent = entropy(datasets)

8 best_feature = []

9 for c in range(count):

10 c_info_gain = info_gain(ent, cond_ent(datasets, axis=c))

11 best_feature.append((c, c_info_gain))

12 print('特征({}) - info_gain - {:.3f}'.format(labels[c], c_info_gain))

13 # 比较大小

14 best_ = max(best_feature, key=lambda x: x[-1])

15 return '特征({})的信息增益最大,选择为根节点特征'.format(labels[best_[0]])

info_gain_train(np.array(datasets))

运行结果:

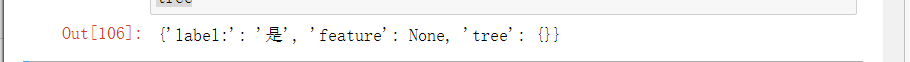

2.针对给定的房贷数据集实现ID3算法:

1 #使用ID3算法生成决策树

2 class Node:

3 def __init__(self, root=True, label=None, feature_name=None, feature=None):

4 self.root = root

5 self.label = label

6 self.feature_name = feature_name

7 self.feature = feature

8 self.tree = {}

9 self.result = {

10 'label:': self.label,

11 'feature': self.feature,

12 'tree': self.tree}

13 def __repr__(self):

14 return '{}'.format(self.result)

15 def add_node(self, val, node):

16 self.tree[val] = node

17 def predict(self, features):

18 if self.root is True:

19 return self.label

20 return self.tree[features[self.feature]].predict(features)

21

22 class DTree:

23 def __init__(self, epsilon=0.1):

24 self.epsilon = epsilon

25 self._tree = {}

26 # 熵

27 @staticmethod

28 def calc_ent(datasets):

29 data_length = len(datasets)

30 label_count = {}

31 for i in range(data_length):

32 label = datasets[i][-1]

33 if label not in label_count:

34 label_count[label] = 0

35 label_count[label] += 1

36 ent = -sum([(p / data_length) * log(p / data_length, 2)

37 for p in label_count.values()])

38 return ent

39 # 经验条件熵

40 def cond_ent(self, datasets, axis=0):

41 data_length = len(datasets)

42 feature_sets = {}

43 for i in range(data_length):

44 feature = datasets[i][axis]

45 if feature not in feature_sets:

46 feature_sets[feature] = []

47 feature_sets[feature].append(datasets[i])

48 cond_ent = sum([(len(p) / data_length) * self.calc_ent(p)

49 for p in feature_sets.values()])

50 return cond_ent

51 # 信息增益

52 @staticmethod

53 def info_gain(ent, cond_ent):

54 return ent - cond_ent

55 def info_gain_train(self, datasets):

56 count = len(datasets[0]) - 1

57 ent = self.calc_ent(datasets)

58 best_feature = []

59 for c in range(count):

60 c_info_gain = self.info_gain(ent, self.cond_ent(datasets, axis=c))

61 best_feature.append((c, c_info_gain))

62 # 比较大小

63 best_ = max(best_feature, key=lambda x: x[-1])

64 return best_

65 def train(self, train_data):

66 """

67 input:数据集D(DataFrame格式),特征集A,阈值eta

68 output:决策树T

69 """

70 _, y_train, features = train_data.iloc[:, :

71 -1], train_data.iloc[:,-1], train_data.columns[:-1]

72 # 1,若D中实例属于同一类Ck,则T为单节点树,并将类Ck作为结点的类标记,返回T

73 if len(y_train.value_counts()) == 1:

74 return Node(root=True, label=y_train.iloc[0])

75 # 2, 若A为空,则T为单节点树,将D中实例树最大的类Ck作为该节点的类标记,返回T

76 if len(features) == 0:

77 return Node(

78 root=True,

79 label=y_train.value_counts().sort_values(

80 ascending=False).index[0])

81 # 3,计算最大信息增益 同5.1,Ag为信息增益最大的特征

82 max_feature, max_info_gain = self.info_gain_train(np.array(train_data))

83 max_feature_name = features[max_feature]

84 # 4,Ag的信息增益小于阈值eta,则置T为单节点树,并将D中是实例数最大的类Ck作为该节点的类标记,返

85 if max_info_gain < self.epsilon:

86 return Node(

87 root=True,

88 label=y_train.value_counts().sort_values(ascending=False).index[0])

89 # 5,构建Ag子集

90 node_tree = Node(

91 root=False, feature_name=max_feature_name, feature=max_feature)

92 feature_list = train_data[max_feature_name].value_counts().index

93 for f in feature_list:

94 sub_train_df = train_data.loc[train_data[max_feature_name] ==f].drop([max_feature_name], axis=1)

95 # 6, 递归生成树

96 sub_tree = self.train(sub_train_df)

97 node_tree.add_node(f, sub_tree)

98 # pprint.pprint(node_tree.tree)

99 return node_tree

100 def fit(self, train_data):

101 self._tree = self.train(train_data)

102 return self._tree

103 def predict(self, X_test):

104 return self._tree.predict(X_test)

1 #创建决策树

2 datasets, labels = create_data()

3 data_df = pd.DataFrame(datasets, columns=labels)

4 dt = DTree()

5 tree = dt.fit(data_df)

6 tree

运行结果:

1 dt.predict(['老年', '否', '否', '一般'])

运行结果:

3.针对iris数据集,应用sklearn的决策树算法进行类别预测:

1 import numpy as np

2 import random

3 from sklearn import tree

4 from graphviz import Source

5 import pandas as pd

6 import re

7

8 def origalData():

9 dataSet =[['青年', '否', '否', '一般', '否'],

10 ['青年', '否', '否', '好', '否'],

11 ['青年', '是', '否', '好', '是'],

12 ['青年', '是', '是', '一般', '是'],

13 ['青年', '否', '否', '一般', '否'],

14 ['中年', '否', '否', '一般', '否'],

15 ['中年', '否', '否', '好', '否'],

16 ['中年', '是', '是', '好', '是'],

17 ['中年', '否', '是', '非常好', '是'],

18 ['中年', '否', '是', '非常好', '是'],

19 ['老年', '否', '是', '非常好', '是'],

20 ['老年', '否', '是', '好', '是'],

21 ['老年', '是', '否', '好', '是'],

22 ['老年', '是', '否', '非常好', '是'],

23 ['老年', '否', '否', '一般', '否'],]

24 labels = [u'年龄', u'有工作', u'有自己的房子', u'信贷情况', u'类别'] # 分类属性

25 return dataSet, labels # 返回数据集和分类属性

26

27

28 if __name__ == '__main__':

29 dataset,labels = origalData()

30 datasetFrame = pd.DataFrame(dataset)

31 print("datasetFrame:{}".format(datasetFrame))

32 X_train = datasetFrame.iloc[:,:-1]

33 Y_train = datasetFrame.iloc[:,4:]

34 a = np.column_stack((Y_train,X_train))

35 clf = tree.DecisionTreeClassifier(criterion='gini',max_depth=4)

36 clf =clf.fit(X_train,Y_train)

37 graph = Source(tree.export_graphviz(clf,out_file=None))

38 graph.format='png'

39 graph.render('dtYesNo',view=True)

40 print('X_train:{}\nY_train:{}'.format(X_train,Y_train))

41 # print("dataset:{}\nlabels:{}".format(dataset,labels))

运行结果:

1 import numpy as np

2 import pandas as pd

3 import matplotlib.pyplot as plt

4 from graphviz import Source

5 from sklearn.datasets import load_iris

6 from sklearn.metrics import accuracy_score

7 from sklearn.model_selection import train_test_split

8 from _collections import _count_elements

9 import math

10 from sklearn import tree

11 from math import log

12 import pprint

13 from sklearn.tree import DecisionTreeClassifier

14 from sklearn.tree import export_graphviz

15 import graphviz

16

17 def create_data():

18 iris = load_iris()

19 df = pd.DataFrame(iris.data,columns=iris.feature_names)

20 df['label']=iris.target

21 df.columns = ['speal length','speal width','petal length','petal width','label']

22 data = np.array(df.iloc[:100,[0,1,-1]])

23 print('data:')

24 print(data)

25

26 return data[:,:2],data[:,-1]

27 if __name__ == '__main__':

28 iris = load_iris()

29 X,y = create_data()

30 X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.3)

31 #print(X_train,X_test,y_train,y_test)

32 clf = DecisionTreeClassifier(max_depth=4)

33 print(clf.fit(X_train,y_train,))

34 print(clf.score(X_test,y_test))

35 predict_results = clf.predict(X_test) # 使用模型对测试集进行预测

36 print(predict_results)

37 print(accuracy_score(predict_results, y_test))

38 graph = Source(tree.export_graphviz(clf, out_file=None))

39 graph.format = 'png'

40 graph.render('dt', view=True)

运行结果:

实验小结:

讨论ID3算法的应用场景

ID3算法应用场景:

通过本次实验,我对决策树算法实验和ID3算法有了更近一步的掌握,它的基础理论清晰,算法比较简单,学习能力较强,适合处理大规模的学习问题,是数据挖掘和知识发现领域中的一个很好的范例,为后来各学者提出优化算法奠定了理论基础,ID3算法特别在机器学、知识发现和数据挖掘等领域得到了极大地发展。

分析决策树剪枝策略:

- 如何进行决策树剪枝

先对数据集划分成训练集和验证集,训练集用来决定书生成过程中每个节点划分选择的属性,验证集在预剪枝中用于决定该节点是否有必要一句改属性进行展开,在后剪枝中用于判断该节点是否需要进行剪枝。先剪枝(pruning)的目的是为了避免决策树模型的过拟合。因为决策树算法在学习的过程中为了尽可能的正确的分类训练样本,不停地对结点进行划分,因此这会导致整棵树的分支过多,也就导致了过拟合。决策树的剪枝策略最基本的有两种:预剪枝(pre-pruning)和后剪枝(post-pruning): - 预剪枝(pre-pruning):预剪枝就是在构造决策树的过程中,先对每个结点在划分前进行估计,若果当前结点的划分不能带来决策树模型泛华性能的提升,则不对当前结点进行划分并且将当前结点标记为叶结点

预剪枝

通过提前停止树的构建而对树剪枝,一旦停止,节点就是树叶,该树叶持有子集元祖最频繁的类。

浙公网安备 33010602011771号

浙公网安备 33010602011771号