Horizontally Scaling a Kafka 3.7.0 Cluster (KRaft Mode): Adding Broker and Controller Nodes

Background

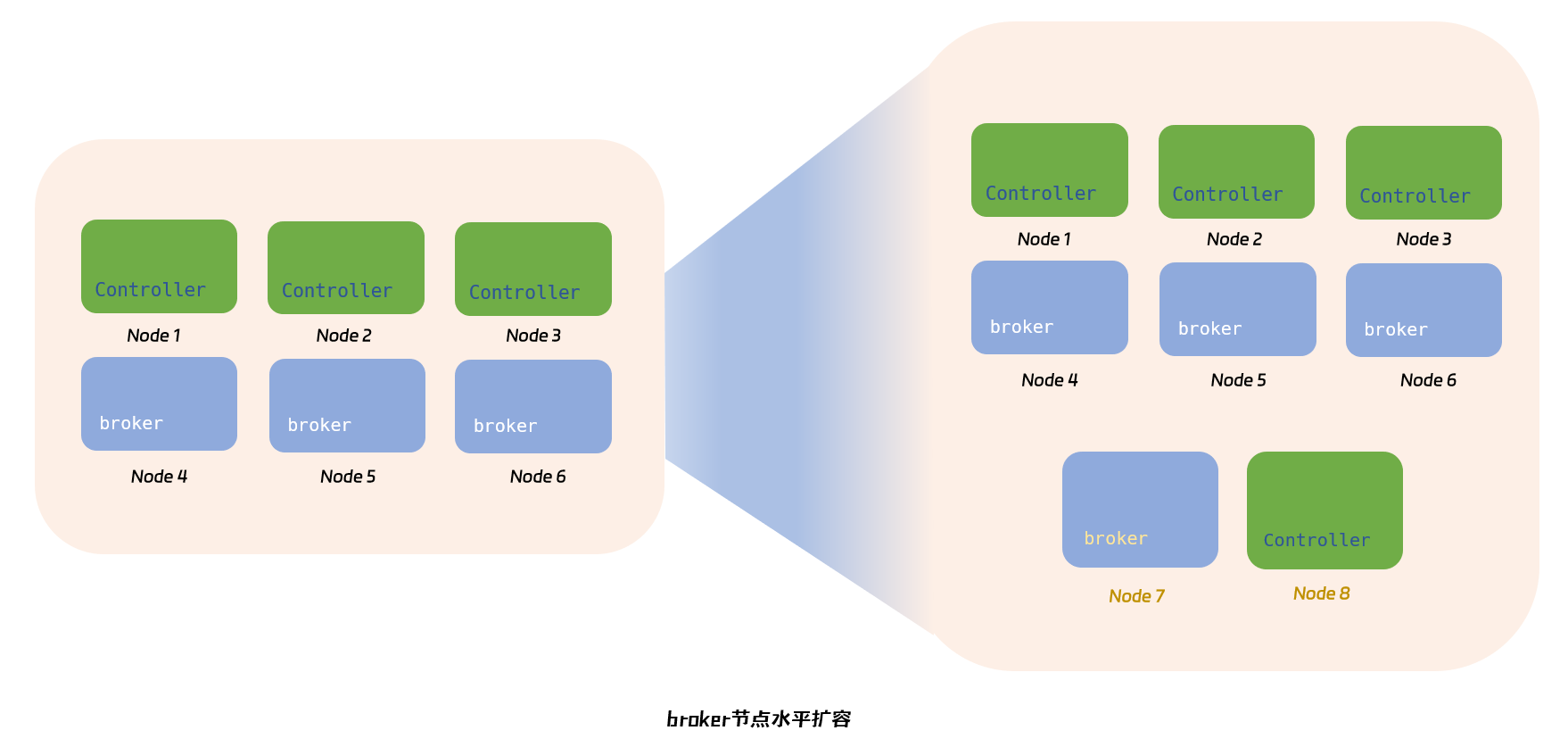

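Business load has been climbing recently. Kafka, a core component of our backend, has been raising load warnings and running at consistently high resource utilization, so we plan to scale the existing 3-node Kafka cluster out horizontally to increase messaging throughput.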

- Kafka version: 3.7.0
- Kafka node roles: controller, broker
- ZooKeeper nodes: none (KRaft mode)

We plan to add two nodes (for this demo, one broker and one controller) and first rehearse the horizontal scale-out in a local environment.

1. Setting up the initial cluster

The local test environment runs on Docker, with the following topology:

| Name | IP | Role |
|---|---|---|
| controller-1 | 172.23.1.11 | controller |
| controller-2 | 172.23.1.12 | controller |
| controller-3 | 172.23.1.13 | controller |
| broker-1 | 172.23.1.14 | broker |
| broker-2 | 172.23.1.15 | broker |
| broker-3 | 172.23.1.16 | broker |
| kafka-ui | 172.23.1.20 | web-ui |

1.1 docker-compose definition

docker-compose.yaml is defined as follows:

services:
  controller-1:
    image: apache/kafka:3.7.0
    container_name: controller-1
    privileged: true
    user: root
    environment:
      KAFKA_NODE_ID: 1
      KAFKA_PROCESS_ROLES: controller
      KAFKA_LISTENERS: CONTROLLER://:9093
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_CONTROLLER_LISTENER_NAMES: CONTROLLER
      KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      CLUSTER_ID: gQ3YBuwyQsiCIbN3Ilq1cQ
    volumes:
      - ./volumes/controller-1:/tmp/kafka-logs
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.11
  controller-2:
    image: apache/kafka:3.7.0
    container_name: controller-2
    privileged: true
    user: root
    environment:
      KAFKA_NODE_ID: 2
      KAFKA_PROCESS_ROLES: controller
      KAFKA_LISTENERS: CONTROLLER://:9093
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_CONTROLLER_LISTENER_NAMES: CONTROLLER
      KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      CLUSTER_ID: gQ3YBuwyQsiCIbN3Ilq1cQ
    volumes:
      - ./volumes/controller-2:/tmp/kafka-logs
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.12
  controller-3:
    image: apache/kafka:3.7.0
    container_name: controller-3
    privileged: true
    user: root
    environment:
      KAFKA_NODE_ID: 3
      KAFKA_PROCESS_ROLES: controller
      KAFKA_LISTENERS: CONTROLLER://:9093
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_CONTROLLER_LISTENER_NAMES: CONTROLLER
      KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      CLUSTER_ID: gQ3YBuwyQsiCIbN3Ilq1cQ
    volumes:
      - ./volumes/controller-3:/tmp/kafka-logs
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.13
  broker-1:
    image: apache/kafka:3.7.0
    container_name: broker-1
    ports:
      - 29092:9092
    environment:
      KAFKA_NODE_ID: 4
      KAFKA_PROCESS_ROLES: broker
      KAFKA_LISTENERS: 'PLAINTEXT://:19092,PLAINTEXT_HOST://:9092'
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://broker-1:19092,PLAINTEXT_HOST://localhost:29092'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_CONTROLLER_LISTENER_NAMES: CONTROLLER
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_NUM_PARTITIONS: 3
      CLUSTER_ID: gQ3YBuwyQsiCIbN3Ilq1cQ
    volumes:
      - ./volumes/broker-1:/tmp/kafka-logs
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.14
    depends_on:
      - controller-1
      - controller-2
      - controller-3
  broker-2:
    image: apache/kafka:3.7.0
    container_name: broker-2
    ports:
      - 39092:9092
    environment:
      KAFKA_NODE_ID: 5
      KAFKA_PROCESS_ROLES: broker
      KAFKA_LISTENERS: 'PLAINTEXT://:19092,PLAINTEXT_HOST://:9092'
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://broker-2:19092,PLAINTEXT_HOST://localhost:39092'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_CONTROLLER_LISTENER_NAMES: CONTROLLER
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_NUM_PARTITIONS: 3
      CLUSTER_ID: gQ3YBuwyQsiCIbN3Ilq1cQ
    volumes:
      - ./volumes/broker-2:/tmp/kafka-logs
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.15
    depends_on:
      - controller-1
      - controller-2
      - controller-3
  broker-3:
    image: apache/kafka:3.7.0
    container_name: broker-3
    ports:
      - 49092:9092
    environment:
      KAFKA_NODE_ID: 6
      KAFKA_PROCESS_ROLES: broker
      KAFKA_LISTENERS: 'PLAINTEXT://:19092,PLAINTEXT_HOST://:9092'
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://broker-3:19092,PLAINTEXT_HOST://localhost:49092'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_CONTROLLER_LISTENER_NAMES: CONTROLLER
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_NUM_PARTITIONS: 3
      CLUSTER_ID: gQ3YBuwyQsiCIbN3Ilq1cQ
    volumes:
      - ./volumes/broker-3:/tmp/kafka-logs
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.16
    depends_on:
      - controller-1
      - controller-2
      - controller-3
  kafka-ui:
    image: docker.io/provectuslabs/kafka-ui:v0.7.2
    container_name: kafka-ui
    ports:
      - 8080:8080
    environment:
      DYNAMIC_CONFIG_ENABLED: 'true'
      SPRING_CONFIG_ADDITIONAL-LOCATION: /kafka-ui/config.yaml
    volumes:
      - /root/kafka/kafka-ui/config.yaml:/kafka-ui/config.yaml
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.20
    depends_on:
      - broker-1
      - broker-2
      - broker-3
networks:
  kafka_horizontal_upscale_network:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 172.23.1.0/24

The file also defines a kafka-ui container, a web-based management tool for viewing and managing topics and consumers.

kafka-ui needs the following config.yaml:

auth:
  type: LOGIN_FORM
kafka:
  clusters:
    - bootstrapServers: broker-1:19092,broker-2:19092,broker-3:19092
      name: local
      metrics:
        port: 9081
        type: JMX
management:
  health:
    ldap:
      enabled: false
spring:
  security:
    oauth2: null
    user:
      name: admin
      password: admin
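Every node in the compose file above repeats the same KAFKA_CONTROLLER_QUORUM_VOTERS string in the `id@host:port` form, and that string has to change on every node once a controller is added. A minimal sketch, assuming only that comma-separated format, for generating and parsing the value instead of editing it by hand; `build_voters` and `parse_voters` are hypothetical helpers, not part of Kafka:

```python
from __future__ import annotations


def build_voters(controllers: dict[int, str], port: int = 9093) -> str:
    """Render {node_id: hostname} as 'id@host:port,...', ordered by node id."""
    return ",".join(
        f"{node_id}@{host}:{port}" for node_id, host in sorted(controllers.items())
    )


def parse_voters(value: str) -> dict[int, tuple[str, int]]:
    """Parse 'id@host:port,...' back into {node_id: (host, port)}."""
    voters: dict[int, tuple[str, int]] = {}
    for entry in value.split(","):
        node_id, endpoint = entry.strip().split("@", 1)
        host, port = endpoint.rsplit(":", 1)
        voters[int(node_id)] = (host, int(port))
    return voters


if __name__ == "__main__":
    initial = build_voters({1: "controller-1", 2: "controller-2", 3: "controller-3"})
    print(initial)
    # Adding a controller with node id 8 on host controller-4:
    hosts = {node_id: host for node_id, (host, _port) in parse_voters(initial).items()}
    hosts[8] = "controller-4"
    print(build_voters(hosts))
```

Generating the value from one list keeps controllers and brokers from drifting apart, which is exactly the kind of mismatch that later makes a controller refuse to start.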

1.2 Starting the initial cluster

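First, create all the directories the containers will mount in the local working directory (the -4 directories are for the nodes added later):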

>_ mkdir -pv ./volumes/{controller,broker}-{1..4}

mkdir: created directory './volumes'

mkdir: created directory './volumes/broker-1'

mkdir: created directory './volumes/broker-2'

mkdir: created directory './volumes/broker-3'

mkdir: created directory './volumes/broker-4'

mkdir: created directory './volumes/controller-1'

mkdir: created directory './volumes/controller-2'

mkdir: created directory './volumes/controller-3'

mkdir: created directory './volumes/controller-4'

Then start the docker-compose services:

>_ docker-compose up -d

After about two minutes, check the container logs to confirm that everything is running normally.

1.3 Checking that the cluster is running

# docker exec -it broker-1 bash

fd7bdb2e5ab1:/$ /opt/kafka/bin/kafka-topics.sh --bootstrap-server localhost:19092 --list

fd7bdb2e5ab1:/$

Here we enter the broker-1 container and list the topics with the bundled tool.

Since no topic exists yet, the output is empty.

1.4 Producing and consuming test messages

We use the bundled console producer to write to a test topic, test123, which does not exist yet:

# docker exec -it broker-1 bash

fd7bdb2e5ab1:/$ /opt/kafka/bin/kafka-console-producer.sh --bootstrap-server broker-1:19092 --topic test123

>abcd

[2025-03-05 06:40:44,170] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 7 : {test123=UNKNOWN_TOPIC_OR_PARTITION} (org.apache.kafka.clients.NetworkClient)

[2025-03-05 06:40:44,284] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 8 : {test123=UNKNOWN_TOPIC_OR_PARTITION} (org.apache.kafka.clients.NetworkClient)

[2025-03-05 06:40:44,519] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 9 : {test123=UNKNOWN_TOPIC_OR_PARTITION} (org.apache.kafka.clients.NetworkClient)

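Because automatic topic creation is enabled by default, the first write creates the topic; the UNKNOWN_TOPIC_OR_PARTITION warnings above are expected while it is being created. After writing one message, press Ctrl-D to exit. The topic is created with 3 partitions, as set by KAFKA_NUM_PARTITIONS: 3 in the compose file.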

# docker exec -it broker-1 bash

fd7bdb2e5ab1:/$ /opt/kafka/bin/kafka-topics.sh --bootstrap-server broker-1:19092 --list

test123

Listing the topics again shows the newly created test123.

Next, we use kafka-console-consumer.sh to consume the topic from the beginning:

# docker exec -it broker-1 bash

fd7bdb2e5ab1:/$ /opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server broker-2:19092 --topic test123 --from-beginning

abcd

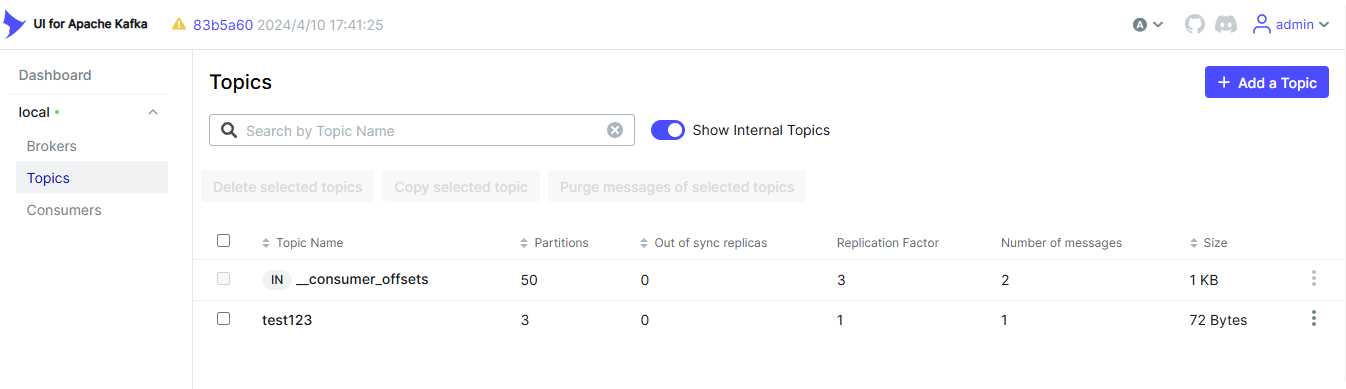

Next, we check the cluster in kafka-ui, which is mapped to port 8080 on the host:

Because a consumer has now connected, Kafka has automatically created the __consumer_offsets topic, which has 50 partitions by default.

1.5 Producing messages from the external host

We install the Kafka tools on the host machine and test reading and writing from there:

# cd ~/kafka

# wget https://archive.apache.org/dist/kafka/3.7.0/kafka_2.13-3.7.0.tgz

# tar -xf kafka_2.13-3.7.0.tgz

# ln -s kafka_2.13-3.7.0 kafka

# yum install java-17-openjdk-devel java-17-openjdk -y

# cd ~/kafka/kafka

# ./bin/kafka-console-producer.sh --bootstrap-server localhost:29092,localhost:39092,localhost:49092 --topic test123

>123456

>7890

Again, press Ctrl-D to exit.

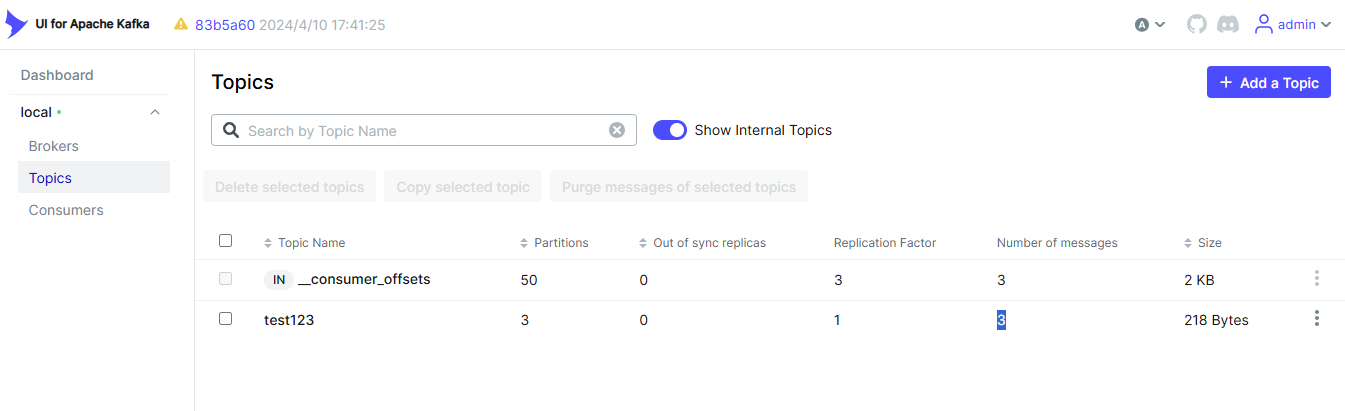

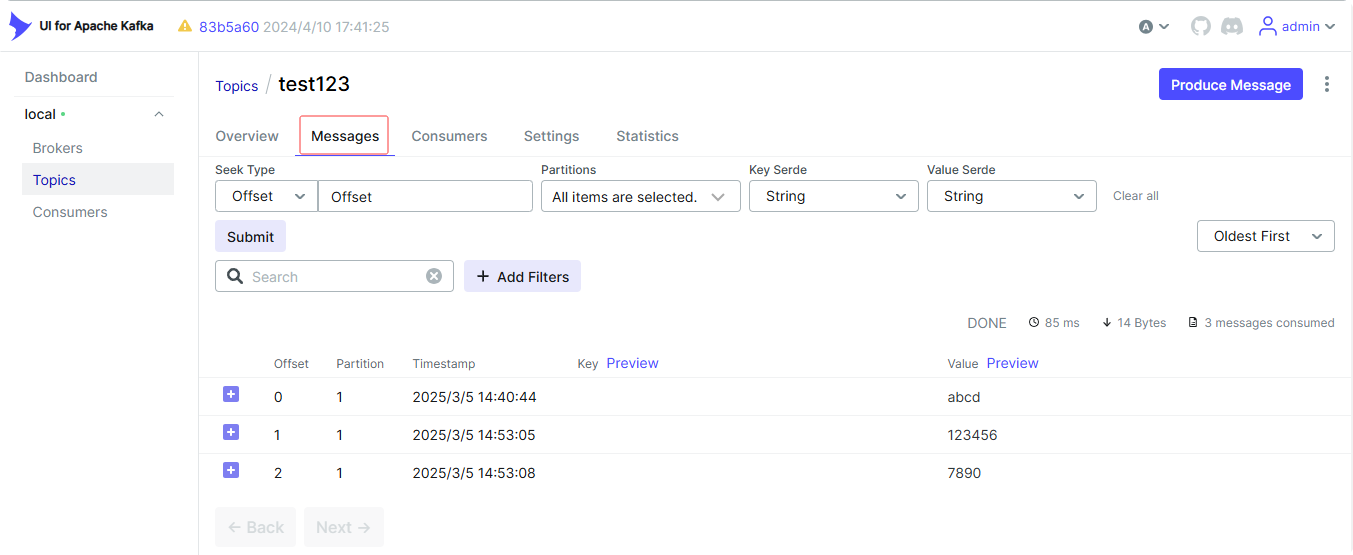

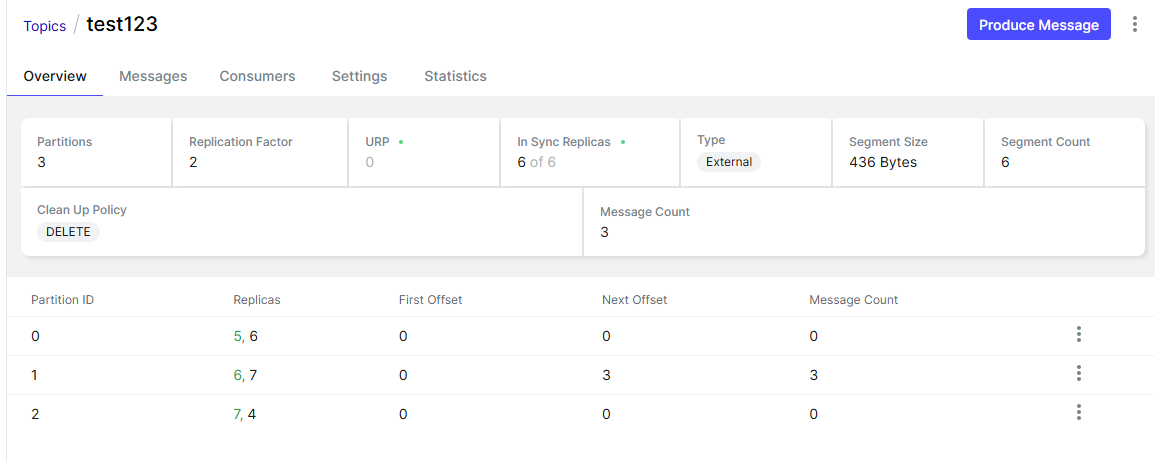

The test123 topic now holds 3 messages.

This confirms that writing to the Kafka cluster from outside works.

kafka-ui can also be used to browse the messages in a topic.

2. Scaling out the broker nodes

The scripts bundled in the apache/kafka image already configure a default ["CLUSTER_ID"]="5L6g3nShT-eMCtK--X86sw"; when a node is first initialized, this default can be overridden with the CLUSTER_ID environment variable.

Source: https://github.com/apache/kafka/blob/18eca0229dc9f33c3253c705170a25b7f78eac40/docker/resources/common-scripts/configureDefaults#L20

Because our initial cluster sets CLUSTER_ID explicitly, the new nodes must set the same variable.

The new "node"'s startup script uses CLUSTER_ID=gQ3YBuwyQsiCIbN3Ilq1cQ to write the meta.properties file in its data directory.

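Tip: in a real environment, when a new node joins an existing cluster, take cluster.id from the data directory of any existing node, then run ./bin/kafka-storage.sh format -t "${CLUSTER_ID}" -c config/server.properties to generate meta.properties in the new node's data directory.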
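Before formatting a new node it can help to sanity-check the ID being passed to `-t`. A minimal sketch, assuming that a KRaft cluster ID is 16 random bytes encoded as URL-safe base64 with the `=` padding stripped (22 characters), which both IDs in this article match and which we believe is the format printed by `kafka-storage.sh random-uuid`. `is_valid_cluster_id` and `new_cluster_id` are hypothetical helpers, not Kafka APIs:

```python
from __future__ import annotations

import base64
import re
import uuid

# Assumed format: 16 bytes -> URL-safe base64 without '=' padding -> 22 characters.
_CLUSTER_ID_RE = re.compile(r"[A-Za-z0-9_-]{22}")


def is_valid_cluster_id(cluster_id: str) -> bool:
    """True if cluster_id is a canonical 22-char URL-safe base64 encoding of 16 bytes."""
    if not _CLUSTER_ID_RE.fullmatch(cluster_id):
        return False
    raw = base64.urlsafe_b64decode(cluster_id + "==")
    # Re-encoding must reproduce the input; this rejects non-zero trailing bits.
    return len(raw) == 16 and base64.urlsafe_b64encode(raw).rstrip(b"=").decode() == cluster_id


def new_cluster_id() -> str:
    """Generate an ID in the same assumed format (illustration only)."""
    while True:
        candidate = base64.urlsafe_b64encode(uuid.uuid4().bytes).rstrip(b"=").decode()
        # Assumption: skip IDs starting with '-' so a CLI never mistakes one for a flag.
        if not candidate.startswith("-"):
            return candidate


if __name__ == "__main__":
    print(is_valid_cluster_id("gQ3YBuwyQsiCIbN3Ilq1cQ"))
    print(new_cluster_id())
```

In practice, use the ID taken verbatim from an existing node; the check only guards against copy-paste damage.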

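For the new node we start a container manually with docker run, passing --network so that it joins the same network as the cluster started by docker-compose. docker-compose prefixes network names with the project name (kafka here), so the network is called kafka_kafka_horizontal_upscale_network.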

# docker run --name broker-4 -d \
  -e KAFKA_NODE_ID=7 \
  -e KAFKA_PROCESS_ROLES=broker \
  -e KAFKA_LISTENERS="PLAINTEXT://:19092,PLAINTEXT_HOST://:9092" \
  -e KAFKA_ADVERTISED_LISTENERS='PLAINTEXT://broker-4:19092,PLAINTEXT_HOST://localhost:59092' \
  -e KAFKA_INTER_BROKER_LISTENER_NAME=PLAINTEXT \
  -e KAFKA_CONTROLLER_LISTENER_NAMES=CONTROLLER \
  -e KAFKA_LISTENER_SECURITY_PROTOCOL_MAP='CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT' \
  -e KAFKA_CONTROLLER_QUORUM_VOTERS='1@controller-1:9093,2@controller-2:9093,3@controller-3:9093' \
  -e KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS=0 \
  -e CLUSTER_ID=gQ3YBuwyQsiCIbN3Ilq1cQ \
  -p 59092:9092 \
  -u root \
  --network kafka_kafka_horizontal_upscale_network \
  -v ./volumes/broker-4:/tmp/kafka-logs \
  apache/kafka:3.7.0

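Check the new node's cluster metadata file, meta.properties (the path below is inside the broker-4 container; on the host it is ./volumes/broker-4/meta.properties):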

>_ cat /tmp/kafka-logs/meta.properties
#
#Thu Mar 06 03:22:03 GMT 2025
cluster.id=Some(gQ3YBuwyQsiCIbN3Ilq1cQ)
directory.id=AGAMAiENnqOPETmsPTv6tw
node.id=7
version=1
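Per the Tip above, a joining node's cluster.id must match the existing nodes' value exactly, while node.id must be unique. A minimal sketch that compares two meta.properties files, assuming a simplified properties syntax (one `key=value` per line, `#`/`!` comments, no escapes or continuations). The values are compared verbatim because the file above literally stores `Some(...)`. `parse_meta_properties` and `join_problems` are hypothetical helpers:

```python
from __future__ import annotations


def parse_meta_properties(text: str) -> dict[str, str]:
    """Parse simple 'key=value' lines, skipping blank lines and comments."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def join_problems(existing: str, new: str) -> list[str]:
    """List reasons why the node owning `new` would not fit the existing cluster."""
    old, cur = parse_meta_properties(existing), parse_meta_properties(new)
    problems: list[str] = []
    # Compare cluster.id exactly as stored, without normalizing wrappers.
    if old.get("cluster.id") != cur.get("cluster.id"):
        problems.append(
            f"cluster.id mismatch: new {cur.get('cluster.id')!r} vs existing {old.get('cluster.id')!r}"
        )
    if old.get("node.id") == cur.get("node.id"):
        problems.append(f"duplicate node.id {cur.get('node.id')}")
    return problems
```

An empty list means the two files are consistent for joining; the sample existing-node content in the checks is hypothetical.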

Check the logs of the new node broker-4:

# docker logs -f broker-4

....

zookeeper.ssl.truststore.location = null

zookeeper.ssl.truststore.password = null

zookeeper.ssl.truststore.type = null

(kafka.server.KafkaConfig)

[2025-03-06 03:22:06,448] INFO [BrokerLifecycleManager id=7] The broker is in RECOVERY. (kafka.server.BrokerLifecycleManager)

[2025-03-06 03:22:06,449] INFO [BrokerServer id=7] Waiting for the broker to be unfenced (kafka.server.BrokerServer)

[2025-03-06 03:22:06,489] INFO [BrokerLifecycleManager id=7] The broker has been unfenced. Transitioning from RECOVERY to RUNNING. (kafka.server.BrokerLifecycleManager)

[2025-03-06 03:22:06,490] INFO [BrokerServer id=7] Finished waiting for the broker to be unfenced (kafka.server.BrokerServer)

[2025-03-06 03:22:06,493] INFO authorizerStart completed for endpoint PLAINTEXT_HOST. Endpoint is now READY. (org.apache.kafka.server.network.EndpointReadyFutures)

[2025-03-06 03:22:06,494] INFO authorizerStart completed for endpoint PLAINTEXT. Endpoint is now READY. (org.apache.kafka.server.network.EndpointReadyFutures)

[2025-03-06 03:22:06,494] INFO [SocketServer listenerType=BROKER, nodeId=7] Enabling request processing. (kafka.network.SocketServer)

[2025-03-06 03:22:06,499] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.DataPlaneAcceptor)

[2025-03-06 03:22:06,504] INFO Awaiting socket connections on 0.0.0.0:19092. (kafka.network.DataPlaneAcceptor)

[2025-03-06 03:22:06,508] INFO [BrokerServer id=7] Waiting for all of the authorizer futures to be completed (kafka.server.BrokerServer)

[2025-03-06 03:22:06,508] INFO [BrokerServer id=7] Finished waiting for all of the authorizer futures to be completed (kafka.server.BrokerServer)

[2025-03-06 03:22:06,508] INFO [BrokerServer id=7] Waiting for all of the SocketServer Acceptors to be started (kafka.server.BrokerServer)

[2025-03-06 03:22:06,509] INFO [BrokerServer id=7] Finished waiting for all of the SocketServer Acceptors to be started (kafka.server.BrokerServer)

[2025-03-06 03:22:06,509] INFO [BrokerServer id=7] Transition from STARTING to STARTED (kafka.server.BrokerServer)

[2025-03-06 03:22:06,509] INFO Kafka version: 3.7.0 (org.apache.kafka.common.utils.AppInfoParser)

[2025-03-06 03:22:06,509] INFO Kafka commitId: 2ae524ed625438c5 (org.apache.kafka.common.utils.AppInfoParser)

[2025-03-06 03:22:06,510] INFO Kafka startTimeMs: 1741231326509 (org.apache.kafka.common.utils.AppInfoParser)

[2025-03-06 03:22:06,511] INFO [KafkaRaftServer nodeId=7] Kafka Server started (kafka.server.KafkaRaftServer)

[2025-03-06 03:32:05,608] INFO [RaftManager id=7] Node 2 disconnected. (org.apache.kafka.clients.NetworkClient)

The cluster topology has changed:

| Name | IP | Role |
|---|---|---|
| controller-1 | 172.23.1.11 | controller |
| controller-2 | 172.23.1.12 | controller |
| controller-3 | 172.23.1.13 | controller |
| broker-1 | 172.23.1.14 | broker |
| broker-2 | 172.23.1.15 | broker |
| broker-3 | 172.23.1.16 | broker |
| kafka-ui | 172.23.1.20 | web-ui |
| broker-4 | 172.23.1.17 | broker (new) |

2.1 Consuming through the new node with kafka-console-consumer.sh

On the host, consume through the advertised port of the new node broker-4:

# ./bin/kafka-console-consumer.sh --bootstrap-server localhost:59092 --topic test123 --from-beginning

abcd

123456

7890

With --bootstrap-server localhost:59092 pointing at the new node, the consumer is routed correctly and consumes all messages normally.
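The bootstrap address is only used for the first metadata request; afterwards the client connects to the addresses the brokers advertise for the listener the request arrived on. For broker-4, host port 59092 is published to container port 9092 (the PLAINTEXT_HOST listener), which advertises localhost:59092, so the whole path stays reachable from the host. A small sketch, assuming listener strings of the form `NAME://host:port` as used in this article, that checks this consistency; the function names are hypothetical:

```python
from __future__ import annotations


def parse_listeners(value: str) -> dict[str, tuple[str, int]]:
    """Parse 'NAME://host:port,...' into {NAME: (host, port)}; an empty host means all interfaces."""
    result: dict[str, tuple[str, int]] = {}
    for entry in value.split(","):
        name, _, address = entry.strip().partition("://")
        host, _, port = address.rpartition(":")
        result[name] = (host, int(port))
    return result


def host_listener_ok(listeners: str, advertised: str, publish: str, name: str = "PLAINTEXT_HOST") -> bool:
    """Check a docker '-p HOST:CONTAINER' mapping against one listener's bind and advertised ports."""
    host_port, container_port = (int(p) for p in publish.split(":"))
    return (
        parse_listeners(listeners)[name][1] == container_port
        and parse_listeners(advertised)[name][1] == host_port
    )


if __name__ == "__main__":
    listeners = "PLAINTEXT://:19092,PLAINTEXT_HOST://:9092"
    advertised = "PLAINTEXT://broker-4:19092,PLAINTEXT_HOST://localhost:59092"
    print(parse_listeners(advertised))
    print(host_listener_ok(listeners, advertised, "59092:9092"))
```

If the advertised port did not match the published host port, the bootstrap connection would succeed but the follow-up connections from the host would fail.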

2.2 Assigning partitions to the new node

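A newly added broker does not take over any existing partitions on its own. There are several ways to give it partitions:

- Move existing topics manually with the bundled script
- Increase the partition count of existing topics
- Recreate the topic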

2.2.1 Generating a reassignment plan with the bundled kafka-reassign-partitions.sh

First, prepare a JSON file listing the topics whose partitions should be reassigned:

# cat 01-topics.json
{
  "topics": [
    {
      "topic": "test123"
    }
  ],
  "version": 1
}
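When many topics have to move, writing this file by hand gets tedious. A tiny sketch that generates the same structure (a `topics` list of `{"topic": ...}` objects plus `"version": 1`, exactly as in the file above); `topics_to_move` and the script name in the comment are hypothetical:

```python
from __future__ import annotations

import json


def topics_to_move(topics: list[str]) -> dict:
    """Build the document expected by --topics-to-move-json-file."""
    return {"topics": [{"topic": name} for name in topics], "version": 1}


if __name__ == "__main__":
    # Redirect to a file, e.g.: python3 topics_json.py > 01-topics.json
    print(json.dumps(topics_to_move(["test123"]), indent=2))
```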

Next, run the script to generate the reassignment plan:

>_ ./bin/kafka-reassign-partitions.sh --bootstrap-server localhost:29092 --generate --topics-to-move-json-file 01-topics.json --broker-list 4,5,6,7 | tee 02-plan.json

Current partition replica assignment

{"version":1,"partitions":[{"topic":"test123","partition":0,"replicas":[4,7],"log_dirs":["any","any"]},{"topic":"test123","partition":1,"replicas":[5,4],"log_dirs":["any","any"]},{"topic":"test123","partition":2,"replicas":[6,5],"log_dirs":["any","any"]}]}

Proposed partition reassignment configuration

{"version":1,"partitions":[{"topic":"test123","partition":0,"replicas":[5,6],"log_dirs":["any","any"]},{"topic":"test123","partition":1,"replicas":[6,7],"log_dirs":["any","any"]},{"topic":"test123","partition":2,"replicas":[7,4],"log_dirs":["any","any"]}]}

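Note: before this step, the replication factor of test123 had been raised in kafka-ui. The topic originally had no extra replicas; after replication-factor was changed from 1 to 2, Kafka added one more replica for every partition of the topic, which is why each partition in the current assignment already lists two brokers.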

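The plan printed under Proposed partition reassignment configuration automatically redistributes the existing partitions and their replicas across the brokers given in --broker-list (4, 5, 6 and 7).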

We split this output into two JSON files, 02-current-assign.json and 02-plan-assign.json (line 2 of the output holds the current assignment and line 5 the proposed one):

>_ sed -n '2p' 02-plan.json > 02-current-assign.json

>_ sed -n '5p' 02-plan.json > 02-plan-assign.json

02-current-assign.json can be used to roll the reassignment back.

02-plan-assign.json is used to execute the reassignment.
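The `sed -n '2p'` / `sed -n '5p'` split depends on the exact line positions of the tool's output. A slightly sturdier sketch, assuming only that each JSON document sits on the first non-empty line after its header text as printed above; `split_plan` and the script name are hypothetical:

```python
from __future__ import annotations

import json
import sys

CURRENT_HEADER = "Current partition replica assignment"
PROPOSED_HEADER = "Proposed partition reassignment configuration"


def json_after(text: str, header: str) -> dict:
    """Return the JSON object on the first non-empty line after `header`."""
    lines = [line.strip() for line in text.splitlines()]
    if header not in lines:
        raise ValueError(f"header not found: {header!r}")
    for line in lines[lines.index(header) + 1:]:
        if line:
            return json.loads(line)
    raise ValueError(f"no JSON found after {header!r}")


def split_plan(text: str) -> tuple[dict, dict]:
    """Split --generate output into (current assignment, proposed assignment)."""
    return json_after(text, CURRENT_HEADER), json_after(text, PROPOSED_HEADER)


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python3 split_plan.py 02-plan.json
    with open(sys.argv[1], encoding="utf-8") as f:
        current, proposed = split_plan(f.read())
    for name, doc in (("02-current-assign.json", current), ("02-plan-assign.json", proposed)):
        with open(name, "w", encoding="utf-8") as out:
            json.dump(doc, out)
```

Parsing the JSON also fails loudly if the output format ever changes, instead of silently writing the wrong line.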

Finally, execute the reassignment according to the proposed plan:

>_ ./bin/kafka-reassign-partitions.sh --bootstrap-server localhost:29092 --execute --reassignment-json-file 02-plan-assign.json

Current partition replica assignment

{"version":1,"partitions":[{"topic":"test123","partition":0,"replicas":[4,7],"log_dirs":["any","any"]},{"topic":"test123","partition":1,"replicas":[5,4],"log_dirs":["any","any"]},{"topic":"test123","partition":2,"replicas":[6,5],"log_dirs":["any","any"]}]}

Save this to use as the --reassignment-json-file option during rollback

Successfully started partition reassignments for test123-0,test123-1,test123-2

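The reassignment has now been started. The tool only reports that the reassignments were started; running it again with --verify instead of --execute, using the same JSON file, shows whether they have completed.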

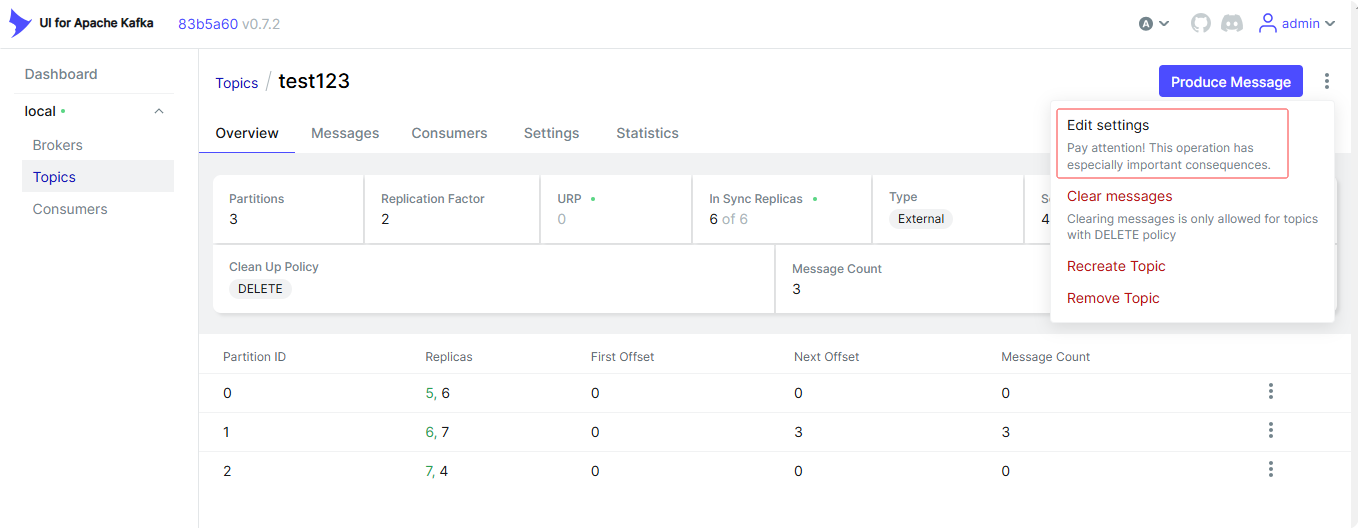

Check the resulting distribution in kafka-ui.
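The same distribution can be checked from the JSON files of the previous step. A sketch, assuming the reassignment JSON layout printed above, that counts replicas per broker and preferred leaders per broker (in Kafka the first broker in a replica list is the preferred leader); the helper names and script name are hypothetical:

```python
from __future__ import annotations

import json
import sys
from collections import Counter


def replicas_per_broker(assignment: dict) -> Counter:
    """Count how many partition replicas each broker id holds."""
    counts: Counter = Counter()
    for partition in assignment["partitions"]:
        counts.update(partition["replicas"])
    return counts


def preferred_leaders(assignment: dict) -> Counter:
    """Count preferred leaders per broker: the first id in each replica list."""
    return Counter(partition["replicas"][0] for partition in assignment["partitions"])


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python3 distribution.py 02-plan-assign.json
    with open(sys.argv[1], encoding="utf-8") as f:
        plan = json.load(f)
    print("replicas:", dict(sorted(replicas_per_broker(plan).items())))
    print("preferred leaders:", dict(sorted(preferred_leaders(plan).items())))
```

For the proposed plan above, brokers 6 and 7 each end up with two replicas and brokers 4 and 5 with one, so the new broker 7 is now carrying load.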

2.2.2 Increasing the partition count of an existing topic

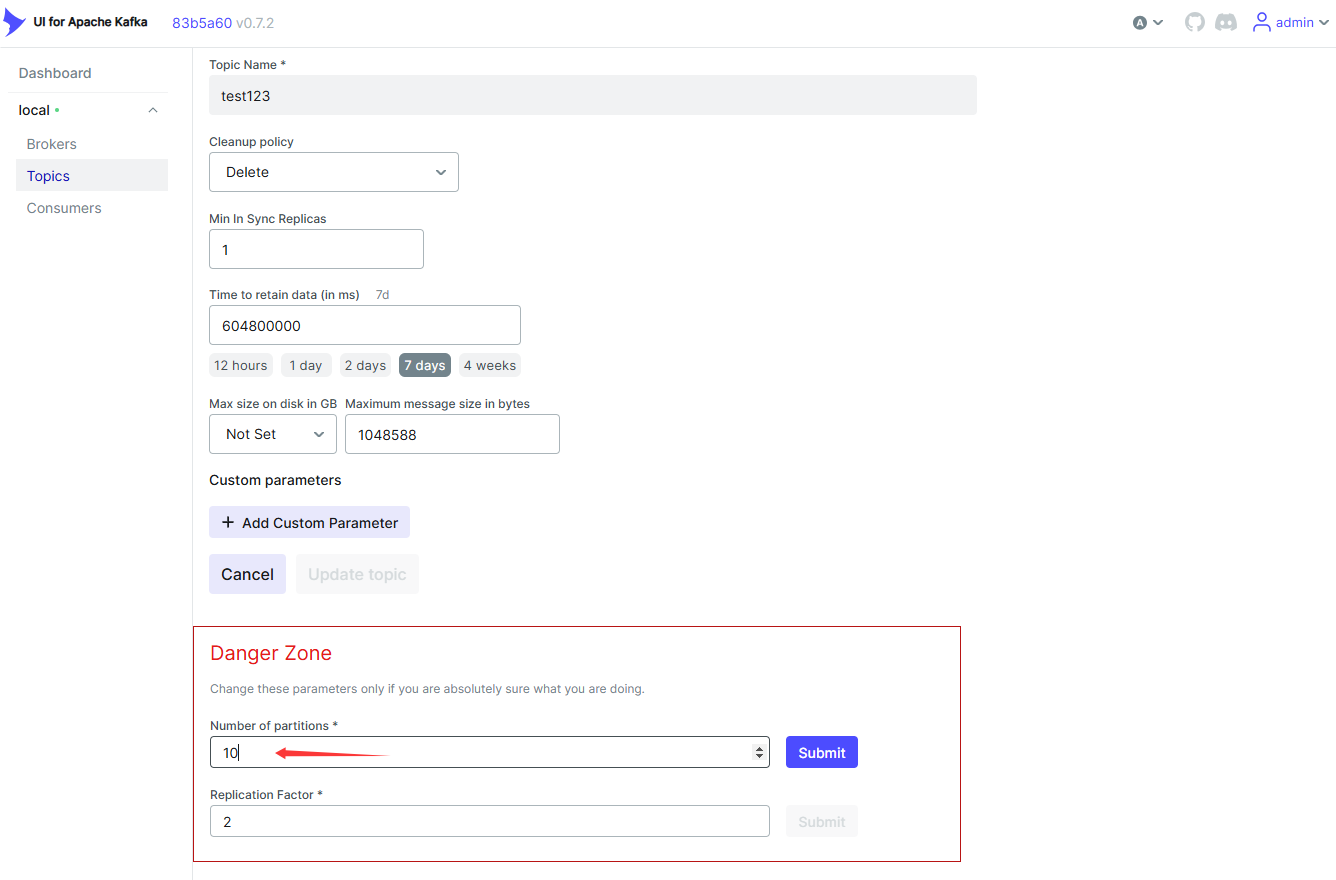

For convenience, we do this through the kafka-ui web interface:

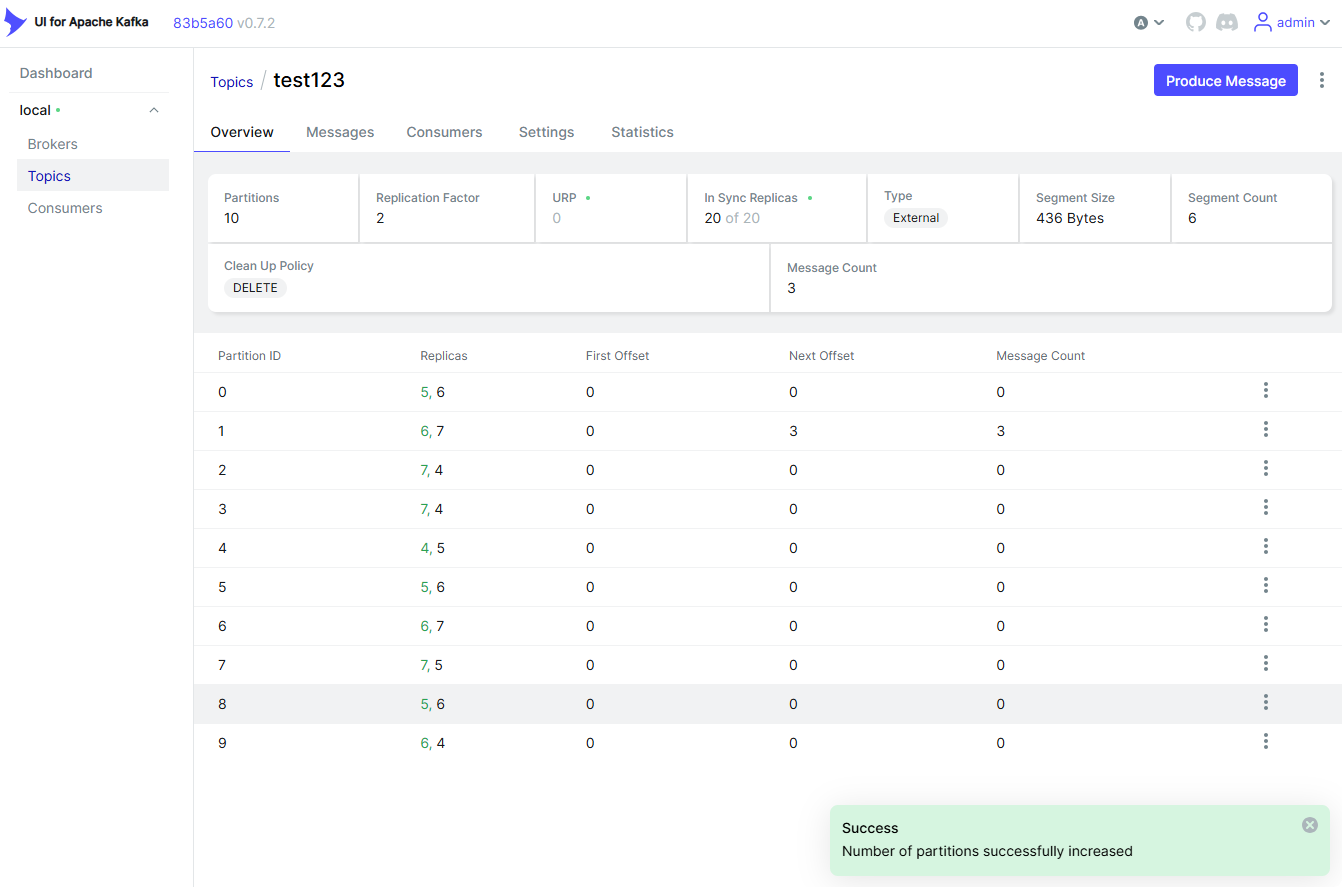

Increase the partition count from 3 to 10.

The expansion succeeds.

3. Scaling out the controller nodes (optional)

Again, we use docker run to start a new controller "node" manually.

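Keep in mind that in a real production environment the number of controller nodes should be odd and kept small. (This demo ends up with four controllers, which is for illustration only.)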
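The odd-number advice follows from majority voting in the KRaft (Raft-based) quorum: with n voters a leader needs floor(n/2) + 1 votes, so the quorum survives n minus that many controller failures. A short sketch of that arithmetic (plain majority math, nothing Kafka-specific; `quorum_stats` is a hypothetical name):

```python
from __future__ import annotations


def quorum_stats(voters: int) -> tuple[int, int]:
    """Return (votes needed for a majority, controller failures tolerated)."""
    if voters < 1:
        raise ValueError("need at least one voter")
    majority = voters // 2 + 1
    return majority, voters - majority


if __name__ == "__main__":
    for n in range(1, 6):
        majority, tolerated = quorum_stats(n)
        print(f"{n} voters: majority {majority}, tolerates {tolerated} failure(s)")
```

Four voters need three votes and still tolerate only one failure, the same as three voters, while adding one more node that must stay in sync.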

3.1 Starting the new controller-4 node

docker run --name controller-4 -d \
  -e KAFKA_NODE_ID=8 \
  -e KAFKA_PROCESS_ROLES=controller \
  -e KAFKA_LISTENERS="CONTROLLER://:9093" \
  -e KAFKA_INTER_BROKER_LISTENER_NAME=PLAINTEXT \
  -e KAFKA_CONTROLLER_LISTENER_NAMES=CONTROLLER \
  -e KAFKA_CONTROLLER_QUORUM_VOTERS='1@controller-1:9093,2@controller-2:9093,3@controller-3:9093,8@controller-4:9093' \
  -e KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS=0 \
  -e CLUSTER_ID=gQ3YBuwyQsiCIbN3Ilq1cQ \
  -u root \
  --network kafka_kafka_horizontal_upscale_network \
  --ip 172.23.1.18 \
  -v ./volumes/controller-4:/tmp/kafka-logs \
  apache/kafka:3.7.0

3.2 Checking the node roles in the cluster

Log in to any container and use kafka-metadata-quorum.sh to check the status of each node in the cluster:

>_ docker exec -it broker-1 bash

e058011f15d8:/# cd /opt/kafka

e058011f15d8:/opt/kafka# ./bin/kafka-metadata-quorum.sh --bootstrap-controller 172.23.1.18:9093 describe --replication

NodeId LogEndOffset Lag LastFetchTimestamp LastCaughtUpTimestamp Status
2 4339 0 1741241147141 1741241147141 Leader
1 4339 0 1741241147132 1741241147132 Follower
3 4339 0 1741241147132 1741241147132 Follower
4 4339 0 1741241147119 1741241147119 Observer
5 4339 0 1741241147119 1741241147119 Observer
6 4339 0 1741241147119 1741241147119 Observer
7 4339 0 1741241147119 1741241147119 Observer
8 4339 0 1741241147119 1741241147119 Observer

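Nodes 1, 2, 3 and 8 should all show Leader or Follower, but the new node 8 shows Observer, just like the broker nodes. In Kafka 3.7 the KRaft voter set is static and comes from each node's controller.quorum.voters setting (KAFKA_CONTROLLER_QUORUM_VOTERS here); controllers 1 to 3 are still configured with the old three-node list, so they do not treat node 8 as a voter.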
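This check is easy to automate. A sketch, assuming the whitespace-separated six-column layout of `kafka-metadata-quorum.sh ... describe --replication` shown above, that reports which expected voters are not currently Leader or Follower; `parse_replication` and `non_voting` are hypothetical names:

```python
from __future__ import annotations


def parse_replication(text: str) -> dict[int, str]:
    """Map NodeId -> Status from `describe --replication` output."""
    status: dict[int, str] = {}
    for line in text.splitlines():
        fields = line.split()
        # Data rows have six columns and a numeric NodeId; the header row does not.
        if len(fields) == 6 and fields[0].isdigit():
            status[int(fields[0])] = fields[5]
    return status


def non_voting(text: str, expected_voters: set[int]) -> list[int]:
    """Return the expected voters whose status is neither Leader nor Follower."""
    status = parse_replication(text)
    return sorted(n for n in expected_voters if status.get(n) not in ("Leader", "Follower"))
```

Against the output above, `non_voting(output, {1, 2, 3, 8})` returns `[8]`; once the quorum has been reconfigured it should return an empty list.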

3.3 Updating the controller configuration of the existing cluster

Update the KAFKA_CONTROLLER_QUORUM_VOTERS environment variable of controller-1 in docker-compose.yaml.

Old value: KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093

New value: KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093,8@controller-4:9093

That is, add the entry 8@controller-4:9093 for the new controller-4 node.

Then recreate the controller-1 container:

>_ docker-compose down controller-1

>_ docker-compose up controller-1 -d

Check how controller-1 starts with the new configuration:

>_ docker logs -f controller-1

....

[2025-03-06 06:02:26,210] INFO Deleted producer state snapshot /tmp/kafka-logs/__cluster_metadata-0/00000000000000003912.snapshot (org.apache.kafka.storage.internals.log.SnapshotFile)

[2025-03-06 06:02:26,210] INFO [LogLoader partition=__cluster_metadata-0, dir=/tmp/kafka-logs] Producer state recovery took 3ms for snapshot load and 0ms for segment recovery from offset 0 (kafka.log.UnifiedLog$)

[2025-03-06 06:02:26,733] INFO [ProducerStateManager partition=__cluster_metadata-0] Wrote producer snapshot at offset 3912 with 0 producer ids in 24 ms. (org.apache.kafka.storage.internals.log.ProducerStateManager)

[2025-03-06 06:02:26,758] INFO [LogLoader partition=__cluster_metadata-0, dir=/tmp/kafka-logs] Loading producer state till offset 3912 with message format version 2 (kafka.log.UnifiedLog$)

[2025-03-06 06:02:26,758] INFO [LogLoader partition=__cluster_metadata-0, dir=/tmp/kafka-logs] Reloading from producer snapshot and rebuilding producer state from offset 3912 (kafka.log.UnifiedLog$)

[2025-03-06 06:02:26,758] INFO [ProducerStateManager partition=__cluster_metadata-0] Loading producer state from snapshot file 'SnapshotFile(offset=3912, file=/tmp/kafka-logs/__cluster_metadata-0/00000000000000003912.snapshot)' (org.apache.kafka.storage.internals.log.ProducerStateManager)

[2025-03-06 06:02:26,760] INFO [LogLoader partition=__cluster_metadata-0, dir=/tmp/kafka-logs] Producer state recovery took 2ms for snapshot load and 0ms for segment recovery from offset 3912 (kafka.log.UnifiedLog$)

[2025-03-06 06:02:26,772] INFO Initialized snapshots with IDs SortedSet() from /tmp/kafka-logs/__cluster_metadata-0 (kafka.raft.KafkaMetadataLog$)

[2025-03-06 06:02:26,788] INFO [raft-expiration-reaper]: Starting (kafka.raft.TimingWheelExpirationService$ExpiredOperationReaper)

[2025-03-06 06:02:26,805] ERROR [SharedServer id=1] Got exception while starting SharedServer (kafka.server.SharedServer)

java.lang.IllegalStateException: Configured voter set: [1, 2, 3, 8] is different from the voter set read from the state file: [1, 2, 3]. Check if the quorum configuration is up to date, or wipe out the local state file if necessary

at org.apache.kafka.raft.QuorumState.initialize(QuorumState.java:132)

at org.apache.kafka.raft.KafkaRaftClient.initialize(KafkaRaftClient.java:375)

at kafka.raft.KafkaRaftManager.buildRaftClient(RaftManager.scala:248)

at kafka.raft.KafkaRaftManager.<init>(RaftManager.scala:174)

at kafka.server.SharedServer.start(SharedServer.scala:266)

at kafka.server.SharedServer.startForController(SharedServer.scala:138)

at kafka.server.ControllerServer.startup(ControllerServer.scala:206)

at kafka.server.KafkaRaftServer.$anonfun$startup$1(KafkaRaftServer.scala:98)

at kafka.server.KafkaRaftServer.$anonfun$startup$1$adapted(KafkaRaftServer.scala:98)

at scala.Option.foreach(Option.scala:437)

at kafka.server.KafkaRaftServer.startup(KafkaRaftServer.scala:98)

at kafka.Kafka$.main(Kafka.scala:112)

at kafka.Kafka.main(Kafka.scala)

[2025-03-06 06:02:26,807] ERROR Encountered fatal fault: caught exception (org.apache.kafka.server.fault.ProcessTerminatingFaultHandler)

java.lang.IllegalStateException: Configured voter set: [1, 2, 3, 8] is different from the voter set read from the state file: [1, 2, 3]. Check if the quorum configuration is up to date, or wipe out the local state file if necessary

at org.apache.kafka.raft.QuorumState.initialize(QuorumState.java:132)

at org.apache.kafka.raft.KafkaRaftClient.initialize(KafkaRaftClient.java:375)

at kafka.raft.KafkaRaftManager.buildRaftClient(RaftManager.scala:248)

at kafka.raft.KafkaRaftManager.<init>(RaftManager.scala:174)

at kafka.server.SharedServer.start(SharedServer.scala:266)

at kafka.server.SharedServer.startForController(SharedServer.scala:138)

at kafka.server.ControllerServer.startup(ControllerServer.scala:206)

at kafka.server.KafkaRaftServer.$anonfun$startup$1(KafkaRaftServer.scala:98)

at kafka.server.KafkaRaftServer.$anonfun$startup$1$adapted(KafkaRaftServer.scala:98)

at scala.Option.foreach(Option.scala:437)

at kafka.server.KafkaRaftServer.startup(KafkaRaftServer.scala:98)

at kafka.Kafka$.main(Kafka.scala:112)

at kafka.Kafka.main(Kafka.scala)

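The process fails to start and the container exits.

The log states the cause clearly: on startup, controller-1 reads its persisted state under /tmp/kafka-logs/__cluster_metadata-0, where the recorded voter IDs are [1, 2, 3]. This does not match the new configuration ([1, 2, 3, 8]), so startup fails.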

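There are two ways to resolve this error:

- Check that the quorum configuration is correct, or roll back to the old configuration
- Wipe the local state file and try starting again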
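The startup check compares two voter sets: the one in KAFKA_CONTROLLER_QUORUM_VOTERS and the one persisted in the quorum-state file under __cluster_metadata-0. The same comparison can be made offline before restarting a node. A sketch, assuming that quorum-state is a small JSON document whose `currentVoters` list holds `{"voterId": N}` entries (our understanding of what Kafka 3.7 writes; verify against your own file before relying on it). The helper names and the sample content in the checks are hypothetical:

```python
from __future__ import annotations

import json


def voters_from_config(value: str) -> set[int]:
    """Node ids from an 'id@host:port,...' KAFKA_CONTROLLER_QUORUM_VOTERS value."""
    return {int(entry.split("@", 1)[0]) for entry in value.split(",") if entry.strip()}


def voters_from_quorum_state(text: str) -> set[int]:
    """Node ids from the (assumed) currentVoters field of a quorum-state file."""
    data = json.loads(text)
    return {voter["voterId"] for voter in data.get("currentVoters") or []}


def voter_mismatch(config_value: str, state_text: str) -> tuple[set[int], set[int]] | None:
    """Return (configured, persisted) when they differ, otherwise None."""
    configured = voters_from_config(config_value)
    persisted = voters_from_quorum_state(state_text)
    return None if configured == persisted else (configured, persisted)
```

A non-None result corresponds to the IllegalStateException in the log above.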

3.4 Fixing the startup failure of the existing controller

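We wipe the local data so that controller-1 rebuilds its local metadata state from the KAFKA_CONTROLLER_QUORUM_VOTERS configuration and catches up on the metadata log from the current leader.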

>_ rm -rf ./volumes/controller-1/*

>_ docker-compose down controller-1

>_ docker-compose up controller-1 -d

>_ docker logs -f controller-1

....

[2025-03-06 06:03:44,325] INFO [RaftManager id=1] Completed transition to Voted(epoch=191, votedId=2, voters=[1, 2, 3, 8], electionTimeoutMs=1837) from Unattached(epoch=191, voters=[1, 2, 3, 8], electionTimeoutMs=1564) (org.apache.kafka.raft.QuorumState)

[2025-03-06 06:03:44,325] INFO [RaftManager id=1] Vote request VoteRequestData(clusterId='Some(gQ3YBuwyQsiCIbN3Ilq1cQ)', topics=[TopicData(topicName='__cluster_metadata', partitions=[PartitionData(partitionIndex=0, candidateEpoch=191, candidateId=2, lastOffsetEpoch=2, lastOffset=4092)])]) with epoch 191 is granted (org.apache.kafka.raft.KafkaRaftClient)

[2025-03-06 06:03:44,413] INFO [RaftManager id=1] Completed transition to FollowerState(fetchTimeoutMs=2000, epoch=191, leaderId=2, voters=[1, 2, 3, 8], highWatermark=Optional[LogOffsetMetadata(offset=4087, metadata=Optional.empty)], fetchingSnapshot=Optional.empty) from Voted(epoch=191, votedId=2, voters=[1, 2, 3, 8], electionTimeoutMs=1837) (org.apache.kafka.raft.QuorumState)

[2025-03-06 06:03:45,992] INFO [RaftManager id=1] Vote request VoteRequestData(clusterId='Some(gQ3YBuwyQsiCIbN3Ilq1cQ)', topics=[TopicData(topicName='__cluster_metadata', partitions=[PartitionData(partitionIndex=0, candidateEpoch=191, candidateId=8, lastOffsetEpoch=1, lastOffset=3912)])]) with epoch 191 is rejected (org.apache.kafka.raft.KafkaRaftClient)

....

controller-1 now correctly recognizes the voter IDs (voters=[1, 2, 3, 8]).

3.5 Applying the same change to controller-2 and controller-3

First, update docker-compose.yaml:

...
  controller-2:
    image: apache/kafka:3.7.0
    container_name: controller-2
    privileged: true
    user: root
    environment:
      KAFKA_NODE_ID: 2
      KAFKA_PROCESS_ROLES: controller
      KAFKA_LISTENERS: CONTROLLER://:9093
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_CONTROLLER_LISTENER_NAMES: CONTROLLER
      KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093,8@controller-4:9093
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      CLUSTER_ID: gQ3YBuwyQsiCIbN3Ilq1cQ
    volumes:
      - ./volumes/controller-2:/tmp/kafka-logs
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.12
  controller-3:
    image: apache/kafka:3.7.0
    container_name: controller-3
    privileged: true
    user: root
    environment:
      KAFKA_NODE_ID: 3
      KAFKA_PROCESS_ROLES: controller
      KAFKA_LISTENERS: CONTROLLER://:9093
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_CONTROLLER_LISTENER_NAMES: CONTROLLER
      KAFKA_CONTROLLER_QUORUM_VOTERS: 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093,8@controller-4:9093
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      CLUSTER_ID: gQ3YBuwyQsiCIbN3Ilq1cQ
    volumes:
      - ./volumes/controller-3:/tmp/kafka-logs
    networks:
      kafka_horizontal_upscale_network:
        ipv4_address: 172.23.1.13
...

Then stop the containers, delete their local data, and start them again:

>_ docker-compose down controller-2 controller-3

[+] Running 3/3

✔ Container controller-2 Removed

✔ Container controller-3 Removed

! Network kafka_kafka_horizontal_upscale_network Resource is still in use

>_ rm -rf ./volumes/controller-{2..3}/*

>_ docker-compose up controller-2 controller-3 -d

[+] Running 2/2

✔ Container controller-2 Started

✔ Container controller-3 Started

3.6 Checking the node roles again

>_ docker exec -it broker-1 bash

e058011f15d8:/# cd /opt/kafka

e058011f15d8:/opt/kafka# ./bin/kafka-metadata-quorum.sh --bootstrap-controller 172.23.1.11:9093 describe --replication

NodeId LogEndOffset Lag LastFetchTimestamp LastCaughtUpTimestamp Status
1 7479 0 1741242734997 1741242734997 Leader
2 7479 0 1741242734648 1741242734648 Follower
3 7479 0 1741242734646 1741242734646 Follower
8 7479 0 1741242734646 1741242734646 Follower
4 7479 0 1741242734643 1741242734643 Observer
5 7479 0 1741242734643 1741242734643 Observer
6 7479 0 1741242734643 1741242734643 Observer
7 7479 0 1741242734638 1741242734638 Observer

Once a majority of the controllers have picked up the new configuration, the new controller-4 (node ID 8) becomes a voter and its status changes to Follower.

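Finally, update the broker nodes of the existing cluster as well, changing KAFKA_CONTROLLER_QUORUM_VOTERS on each of them to 1@controller-1:9093,2@controller-2:9093,3@controller-3:9093,8@controller-4:9093. The same applies to broker-4, which was started with docker run using the old three-voter list.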

Because the broker data directories hold topic data, we cannot simply wipe them.

In fact, only one file needs to be deleted:

>_ docker-compose down broker-{1..3}

[+] Running 4/4

✔ Container broker-2 Removed

✔ Container broker-1 Removed

✔ Container broker-3 Removed

! Network kafka_kafka_horizontal_upscale_network Resource is still in use

## Only the __cluster_metadata-0/quorum-state file needs to be deleted

>_ rm ./volumes/broker-{1..3}/__cluster_metadata-0/quorum-state

rm: remove regular file './volumes/broker-1/__cluster_metadata-0/quorum-state'? y

rm: remove regular file './volumes/broker-2/__cluster_metadata-0/quorum-state'? y

rm: remove regular file './volumes/broker-3/__cluster_metadata-0/quorum-state'? y

>_ docker-compose up broker-{1..3} -d

[+] Running 6/6

✔ Container controller-2 Running

✔ Container controller-3 Running

✔ Container controller-1 Running

✔ Container broker-1 Started

✔ Container broker-2 Started

✔ Container broker-3 Started

>_ docker logs -f broker-1

[2025-03-06 06:52:28,226] INFO [SocketServer listenerType=BROKER, nodeId=4] Enabling request processing. (kafka.network.SocketServer)

[2025-03-06 06:52:28,231] INFO Awaiting socket connections on 0.0.0.0:19092. (kafka.network.DataPlaneAcceptor)

[2025-03-06 06:52:28,240] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.DataPlaneAcceptor)

[2025-03-06 06:52:28,250] INFO [BrokerServer id=4] Waiting for all of the authorizer futures to be completed (kafka.server.BrokerServer)

[2025-03-06 06:52:28,250] INFO [BrokerServer id=4] Finished waiting for all of the authorizer futures to be completed (kafka.server.BrokerServer)

[2025-03-06 06:52:28,251] INFO [BrokerServer id=4] Waiting for all of the SocketServer Acceptors to be started (kafka.server.BrokerServer)

[2025-03-06 06:52:28,251] INFO [BrokerServer id=4] Finished waiting for all of the SocketServer Acceptors to be started (kafka.server.BrokerServer)

[2025-03-06 06:52:28,251] INFO [BrokerServer id=4] Transition from STARTING to STARTED (kafka.server.BrokerServer)

[2025-03-06 06:52:28,252] INFO Kafka version: 3.7.0 (org.apache.kafka.common.utils.AppInfoParser)

[2025-03-06 06:52:28,252] INFO Kafka commitId: 2ae524ed625438c5 (org.apache.kafka.common.utils.AppInfoParser)

[2025-03-06 06:52:28,252] INFO Kafka startTimeMs: 1741243948251 (org.apache.kafka.common.utils.AppInfoParser)

[2025-03-06 06:52:28,256] INFO [KafkaRaftServer nodeId=4] Kafka Server started (kafka.server.KafkaRaftServer)

....

OK, broker-1 is back online.
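Deleting quorum-state by hand works, but the step can also be scripted so that the old file is kept for inspection. A minimal sketch, assuming the host-side layout used in this article (`./volumes/<node>/__cluster_metadata-0/quorum-state`) and that the containers are already stopped. As a deliberately more cautious variant of the `rm` above, it moves each file next to (not inside) the data directory; `move_aside_quorum_state` and the script name are hypothetical:

```python
from __future__ import annotations

import sys
from pathlib import Path


def move_aside_quorum_state(volume_dirs: list[str], suffix: str = ".quorum-state.bak") -> list[Path]:
    """Move <dir>/__cluster_metadata-0/quorum-state to <dir-name><suffix> beside <dir>.

    The backup lives outside the data directory so Kafka never sees it.
    Returns the paths of the backups that were created.
    """
    moved: list[Path] = []
    for volume in volume_dirs:
        volume_path = Path(volume).resolve()
        state = volume_path / "__cluster_metadata-0" / "quorum-state"
        if state.is_file():
            target = volume_path.with_name(volume_path.name + suffix)
            state.rename(target)
            moved.append(target)
    return moved


if __name__ == "__main__":
    # Usage (containers stopped):
    #   python3 move_aside.py ./volumes/broker-1 ./volumes/broker-2 ./volumes/broker-3
    for path in move_aside_quorum_state(sys.argv[1:]):
        print(f"moved aside: {path}")
```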

The cluster topology has changed again:

| Name | IP | Role |
|---|---|---|
| controller-1 | 172.23.1.11 | controller |
| controller-2 | 172.23.1.12 | controller |
| controller-3 | 172.23.1.13 | controller |
| broker-1 | 172.23.1.14 | broker |
| broker-2 | 172.23.1.15 | broker |
| broker-3 | 172.23.1.16 | broker |
| kafka-ui | 172.23.1.20 | web-ui |
| broker-4 | 172.23.1.17 | broker (new) |
| controller-4 | 172.23.1.18 | controller (new) |

4. Summary

At this point both the broker and the controller nodes of the Kafka KRaft cluster have been scaled out.

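Note: in production, keep the number of controller nodes odd. A leader needs the votes of a majority of controllers, and an even count adds no fault tolerance: four controllers tolerate one failure, the same as three.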

Of course, in real production environments it is far more common to scale out broker nodes.

浙公网安备 33010602011771号
