Kubernetes log collection with EFK (Elasticsearch + Fluentd + Kibana)

简介

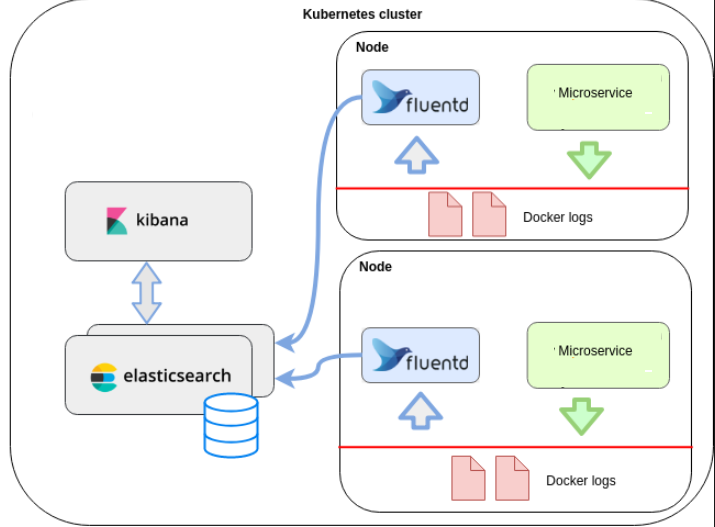

EFK工作示意

-

Elasticsearch

一个开源的分布式、Restful 风格的搜索和数据分析引擎,它的底层是开源库Apache Lucene。它可以被下面这样准确地形容:- 一个分布式的实时文档存储,每个字段可以被索引与搜索;

- 一个分布式实时分析搜索引擎;

- 能胜任上百个服务节点的扩展,并支持 PB 级别的结构化或者非结构化数据。

-

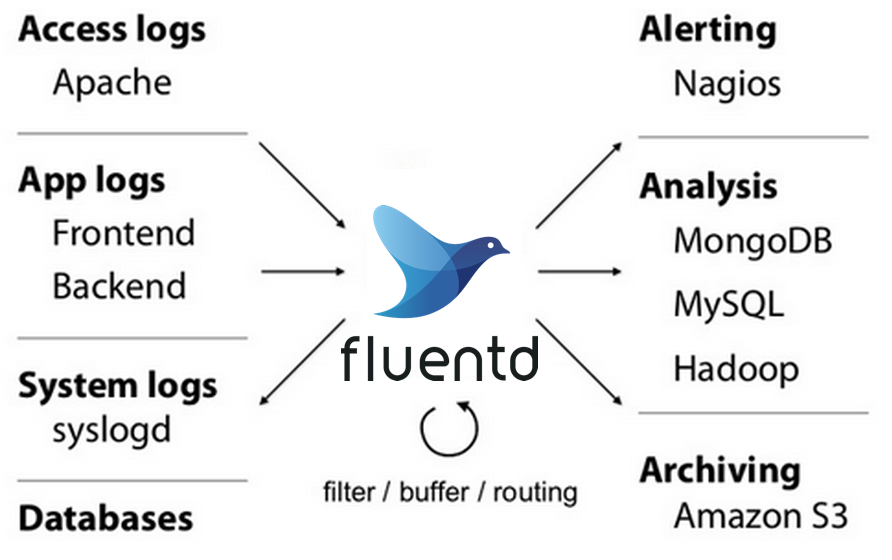

Fluentd

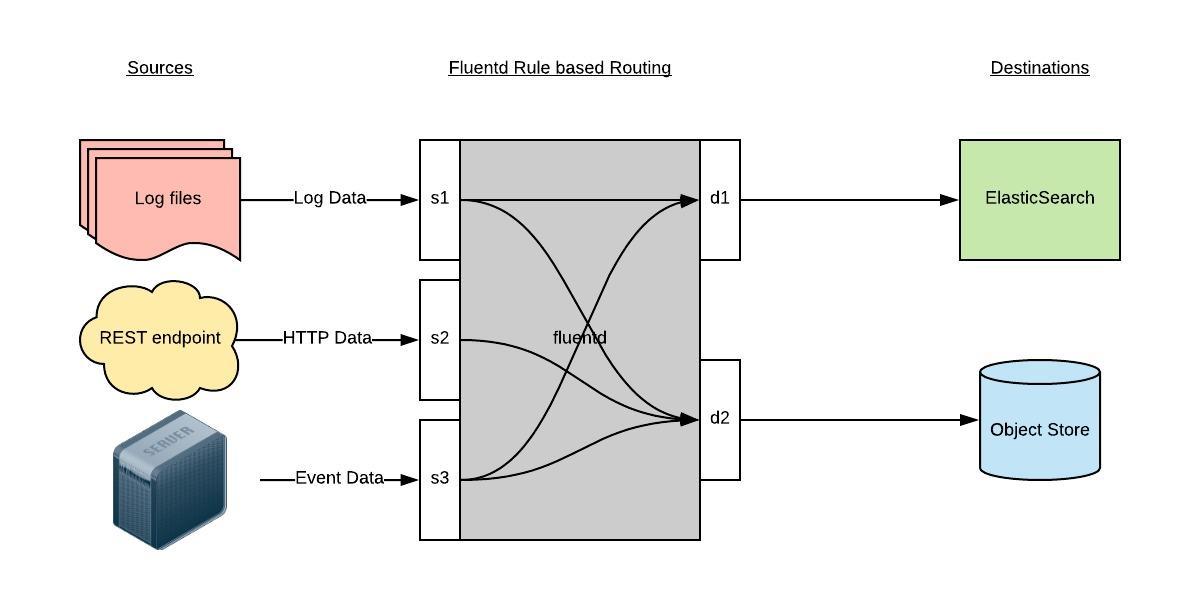

一个针对日志的收集、处理、转发系统。通过丰富的插件系统,可以收集来自于各种系统或应用的日志,转化为用户指定的格式后,转发到用户所指定的日志存储系统之中。

Fluentd 通过一组给定的数据源抓取日志数据,处理后(转换成结构化的数据格式)将它们转发给其他服务,比如 Elasticsearch、对象存储、kafka等等。Fluentd 支持超过300个日志存储和分析服务,所以在这方面是非常灵活的。主要运行步骤如下- 首先 Fluentd 从多个日志源获取数据

- 结构化并且标记这些数据

- 然后根据匹配的标签将数据发送到多个目标服务
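
The tag-based routing in the last step can be sketched in a few lines of Python. This is only an illustration of how dot-separated tags are matched against patterns, where `*` stands for exactly one tag part and `**` for zero or more parts; it is not Fluentd's own implementation. The patterns are borrowed from the configuration used later in this post, while the destination names and the `route` helper are made up for the example.

```python
from typing import Dict, List


def tag_matches(pattern: str, tag: str) -> bool:
    """Return True if a dot-separated tag matches a Fluentd-style pattern.

    `*` stands for exactly one tag part, `**` for zero or more parts.
    """
    def match(p: List[str], t: List[str]) -> bool:
        if not p:
            return not t
        head, rest = p[0], p[1:]
        if head == "**":
            # Try consuming 0, 1, 2, ... tag parts with the double wildcard.
            return any(match(rest, t[i:]) for i in range(len(t) + 1))
        if not t:
            return False
        if head == "*" or head == t[0]:
            return match(rest, t[1:])
        return False

    return match(pattern.split("."), tag.split("."))


def route(tag: str, routes: Dict[str, str]) -> str:
    """Pick the destination of the first pattern that matches the tag."""
    for pattern, destination in routes.items():
        if tag_matches(pattern, tag):
            return destination
    return "unmatched"


# Hypothetical routing table: real patterns, made-up destination names.
ROUTES = {
    "fluent.**": "discard",
    "raw.kubernetes.**": "detect_exceptions",
    "**": "elasticsearch",
}

print(route("raw.kubernetes.var.log.containers.counter.log", ROUTES))
print(route("fluent.info", ROUTES))
print(route("kubernetes.var.log.containers.counter.log", ROUTES))
```

The order of the table matters: an event goes to the first pattern that matches, which is why the catch-all `**` entry comes last.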

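- Kibana

Kibana is an open-source analytics and visualization platform designed to work with Elasticsearch. You can use Kibana to search, view, and interact with the data stored in Elasticsearch indices. You can also easily perform advanced data analysis and visualize the data as charts, tables, and maps.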

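Deploying the es service

Deployment analysis

- In production, es runs as a cluster and is usually deployed with a StatefulSet. A single node is acceptable for a demo environment, but production must use a cluster.

- Data is stored on a mounted host path.

- es starts its process as the elasticsearch user by default. Because the es data directory is mounted from a path on the host, its ownership and permissions are those of the host directory. An init container can therefore change the ownership of the directory before the es process starts; note that this init container must run in privileged mode.

Deploying the es cluster

Three es nodes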
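
Two values in the manifest that follows are derived from the node count: the peer list (`es-0.elasticsearch,es-1.elasticsearch,es-2.elasticsearch`) and the master quorum (`"2"`). The small sketch below shows where they come from, assuming the usual StatefulSet naming scheme `<statefulset>-<ordinal>.<service>` and a simple majority rule; the helper names are invented for this example.

```python
def seed_hosts(statefulset: str, service: str, replicas: int) -> str:
    """Build the comma-separated peer list from the StatefulSet pod names."""
    return ",".join(f"{statefulset}-{i}.{service}" for i in range(replicas))


def quorum(master_eligible_nodes: int) -> int:
    """Majority of the master-eligible nodes: floor(n / 2) + 1."""
    return master_eligible_nodes // 2 + 1


print(seed_hosts("es", "elasticsearch", 3))
print(quorum(3))
```

Note that per-pod names of the form `es-0.elasticsearch` are normally resolvable only when the governing Service is headless, so this is worth checking if the nodes cannot find each other.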

efk/elasticsearch.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    k8s-app: es
    version: v7.4.2
  name: es
  namespace: monitoring
spec:
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: es
      version: v7.4.2
  serviceName: elasticsearch
  template:
    metadata:
      labels:
        k8s-app: es
        version: v7.4.2
    spec:
      nodeSelector:
        log: es # nodes to deploy on; adjust for your environment
      containers:
      - env:
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: cluster.name
          value: es-cluster
        # Legacy zen discovery settings; Elasticsearch 7.x prefers
        # discovery.seed_hosts and cluster.initial_master_nodes
        - name: discovery.zen.ping.unicast.hosts
          value: es-0.elasticsearch,es-1.elasticsearch,es-2.elasticsearch
        - name: discovery.zen.minimum_master_nodes
          value: "2"
        - name: network.host
          value: "0.0.0.0"
        - name: ES_JAVA_OPTS
          value: "-Xms5g -Xmx5g"
        name: es
        image: mrliulei/elasticsearch:v7.4.2
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - mountPath: /usr/share/elasticsearch/data
          name: elasticsearch-logging
      dnsConfig:
        options:
        - name: single-request-reopen
      initContainers:
      - command:
        - /sbin/sysctl
        - -w
        - vm.max_map_count=262144
        image: alpine:3.6
        imagePullPolicy: IfNotPresent
        name: elasticsearch-logging-init
        resources: {}
        securityContext:
          privileged: true
      - name: fix-permissions
        image: alpine:3.6
        command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /usr/share/elasticsearch/data
      volumes:
      - name: elasticsearch-logging
        hostPath:
          path: /esdata
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: es
  name: elasticsearch
  namespace: monitoring
spec:
  ports:
  - port: 9200
    protocol: TCP
    name: db
  - port: 9300
    protocol: TCP
    name: transport
  selector:
    k8s-app: es
  type: ClusterIP
  # Uncomment for a headless Service; per-pod DNS names such as
  # es-0.elasticsearch depend on the governing Service being headless
  # clusterIP: None

# Check the cluster status
# Open a shell in an es pod (the StatefulSet is named es, so the first pod is es-0)
kubectl -n monitoring exec -it es-0 -- bash
curl 'http://elasticsearch:9200/_cat/health?v'
epoch      timestamp cluster    status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent
1658396628 09:43:48  es-cluster green           3         3     10   5    0    0        0             0                  -                100.0%
curl 'http://localhost:9200/_cat/health?v'
curl 'http://elasticsearch:9200/_cluster/state?pretty'
curl 'http://localhost:9200/_cluster/state?pretty'
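
If you want to check the health output from a script rather than by eye, one option is to pair the header row with the value row. The sketch below does this for the sample output shown above; `parse_cat_health` and `is_healthy` are names invented for this example, and the parsing assumes whitespace-separated columns without embedded spaces.

```python
from typing import Dict


def parse_cat_health(output: str) -> Dict[str, str]:
    """Map the header row of `_cat/health?v` output onto the value row."""
    lines = [line for line in output.strip().splitlines() if line.strip()]
    header, values = lines[0].split(), lines[1].split()
    return dict(zip(header, values))


def is_healthy(health: Dict[str, str], expected_nodes: int) -> bool:
    """Green status and the expected number of nodes in the cluster."""
    return health["status"] == "green" and int(health["node.total"]) == expected_nodes


SAMPLE = """\
epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent
1658396628 09:43:48 es-cluster green 3 3 10 5 0 0 0 0 - 100.0%
"""

health = parse_cat_health(SAMPLE)
print(health["cluster"], health["status"], health["node.total"])
print(is_healthy(health, expected_nodes=3))
```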

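Deploying Kibana

Deployment analysis

- Kibana needs to expose its web UI to users, so an Ingress with a domain name is used to provide access to Kibana.

- Kibana is a stateless application, so it is started with a Deployment.

- Kibana needs to reach es; it can do so through Kubernetes service discovery at http://elasticsearch:9200.

Deploy and verify

Resource file efk/kibana.yaml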

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: monitoring
  labels:
    app: kibana
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: mrliulei/kibana:v7.4.2
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        env:
        # Kibana 7.x reads ELASTICSEARCH_HOSTS; ELASTICSEARCH_URL was the 6.x variable
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch:9200
        ports:
        - containerPort: 5601
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: monitoring
  labels:
    app: kibana
spec:
  ports:
  - port: 5601
    protocol: TCP
    targetPort: 5601
  type: ClusterIP
  selector:
    app: kibana
---
# I am reusing an Ingress that was created earlier, so the Ingress below is commented out.
# apiVersion: extensions/v1beta1
# kind: Ingress
# metadata:
#   name: kibana
#   namespace: monitoring
# spec:
#   rules:
#   - host: kibana.devops.cn
#     http:
#       paths:
#       - path: /
#         backend:
#           serviceName: kibana
#           servicePort: 5601

Deploying Fluentd

Overview of the Fluentd workflow:

https://docs.fluentd.org/quickstart

Life cycle of an event: https://docs.fluentd.org/quickstart/life-of-a-fluentd-event

Input -> filter 1 -> ... -> filter N -> Buffer -> Output
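
The life cycle above can be pictured as a small loop: each event passes through the filters in order, lands in a buffer, and is handed to the output one chunk at a time. The Python sketch below is a simplified model of that flow, not Fluentd code; the filter functions, the `node` field, and the count-based flush threshold are assumptions made for the example (Fluentd itself flushes by chunk size and time).

```python
from typing import Callable, Dict, Iterable, List, Optional

Record = Dict[str, str]
Filter = Callable[[Record], Optional[Record]]


def run_pipeline(events: Iterable[Record], filters: List[Filter],
                 chunk_records: int) -> List[List[Record]]:
    """Pass each event through the filters, buffer it, and flush full chunks."""
    buffer: List[Record] = []
    flushed: List[List[Record]] = []
    for event in events:                      # Input
        record: Optional[Record] = dict(event)
        for apply_filter in filters:          # filter 1 .. filter N
            record = apply_filter(record)
            if record is None:                # a filter may drop the event
                break
        if record is None:
            continue
        buffer.append(record)                 # Buffer
        if len(buffer) >= chunk_records:
            flushed.append(buffer)            # Output receives a full chunk
            buffer = []
    if buffer:
        flushed.append(buffer)                # final flush of the partial chunk
    return flushed


def drop_blank(record: Record) -> Optional[Record]:
    """Drop records whose log line is empty or whitespace only."""
    return record if record.get("log", "").strip() else None


def add_node(record: Record) -> Optional[Record]:
    """Enrich the record with a hypothetical node name."""
    return {**record, "node": "example-node"}


events = [{"log": "0: a"}, {"log": "  "}, {"log": "1: b"}, {"log": "2: c"}]
chunks = run_pipeline(events, [drop_blank, add_node], chunk_records=2)
print([len(chunk) for chunk in chunks])
print(chunks[0][0])
```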

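Deployment analysis

- Fluentd is the log collection service. Every workload node in the Kubernetes cluster produces logs, so Fluentd is deployed as a DaemonSet.

- To control resource usage further, the DaemonSet is given a node selector label, fluentd=true; only nodes that carry this label run Fluentd.

- Log collection requires specifying which directories to collect from and sending the results to es, so there is a fair amount of configuration. We therefore mount the complete set of configuration files through a ConfigMap.

Deploying the service

Configuration file, efk/fluentd-es-main.yaml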

apiVersion: v1
data:
  fluent.conf: |-
    # This is the root config file, which only includes components of the actual configuration
    #
    # Do not collect fluentd's own logs to avoid infinite loops.
    <match fluent.**>
      @type null
    </match>

    @include /fluentd/etc/config.d/*.conf
kind: ConfigMap
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
  name: fluentd-es-config-main
  namespace: logging

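Configuration file fluentd-configmap.yaml; points to note:

- The source configuration: by default, Kubernetes redirects each container's standard output and standard error to log files on the host.

- The kubernetes_metadata_filter plugin is bundled by default; it parses the log records and enriches them with Kubernetes metadata (the records arrive tagged raw.kubernetes).

- The flush settings of the match block that outputs to es.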
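
To make the first two points concrete, the sketch below shows the kind of information that can be recovered from a container log file on the host: the file name carries the pod, namespace, and container, and each line is a JSON object. The regular expression is a simplified pattern written for this example, not the plugin's own, and the sample path and log line are made up.

```python
import json
import re
from typing import Dict, Optional

# Simplified pattern for
# /var/log/containers/<pod>_<namespace>_<container>-<64-hex container id>.log
CONTAINER_LOG = re.compile(
    r"(?P<pod_name>[^_/]+)_(?P<namespace>[^_]+)_"
    r"(?P<container_name>.+)-(?P<container_id>[0-9a-f]{64})\.log$"
)


def metadata_from_path(path: str) -> Optional[Dict[str, str]]:
    """Extract pod, namespace, container name, and id from a log file path."""
    found = CONTAINER_LOG.search(path)
    return found.groupdict() if found else None


def parse_line(line: str) -> Dict[str, str]:
    """Parse one JSON-formatted log line into a record."""
    return json.loads(line)


# Hypothetical sample data for the counter pod used later in this post.
SAMPLE_PATH = "/var/log/containers/counter_default_count-" + "ab12" * 16 + ".log"
SAMPLE_LINE = ('{"log":"0: Thu Jul 21 09:43:48 UTC 2022\\n",'
               '"stream":"stdout","time":"2022-07-21T09:43:48.000000000Z"}')

meta = metadata_from_path(SAMPLE_PATH)
record = parse_line(SAMPLE_LINE)
print(meta["pod_name"], meta["namespace"], meta["container_name"])
print(record["stream"], record["log"].rstrip("\n"))
```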

efk/fluentd-configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-config
  namespace: logging
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  system.conf: |-
    <system>
      root_dir /tmp/fluentd-buffers/
    </system>
  containers.input.conf: |-
    <source>
      @id fluentd-containers.log
      # Log files are appended to, so the tail input type is used
      @type tail
      # Path of the logs to collect (a directory on the host)
      path /var/log/containers/*.log
      pos_file /var/log/es-containers.log.pos
      time_format %Y-%m-%dT%H:%M:%S.%NZ
      localtime
      tag raw.kubernetes.*
      format json
      read_from_head true
    </source>
    # Detect exceptions in the log output and forward them as one log entry.
    # https://github.com/googlecloudplatform/fluent-plugin-detect-exceptions
    <match raw.kubernetes.**>
      @id raw.kubernetes
      # Merges a multi-line exception into one entry; see the GitHub link above for details
      @type detect_exceptions
      remove_tag_prefix raw
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>
  forward.input.conf: |-
    # Takes the messages sent over TCP
    <source>
      @type forward
    </source>
  output.conf: |-
    # Enriches records with Kubernetes metadata
    <filter kubernetes.**>
      # Uses the Kubernetes metadata plugin to attach pod and namespace information to each record
      @type kubernetes_metadata
    </filter>
    <match **>
      @id elasticsearch
      # Output to es
      @type elasticsearch
      @log_level info
      include_tag_key true
      # Service name of es. Fluentd runs in the logging namespace here while the
      # Service is in monitoring, so a cross-namespace name such as
      # elasticsearch.monitoring may be needed
      host elasticsearch
      # Port of es
      port 9200
      logstash_format true
      request_timeout 30s
      # Buffer settings. These matter: tuning mostly means adjusting the buffer values
      <buffer>
        # Buffer type: file keeps chunks in local files; the alternative is memory
        @type file
        # Buffer path
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        # Flush every 5s
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        # Each chunk holds at most 2M
        chunk_limit_size 2M
        # At most 8 chunks of 2M each (16M) are queued locally; a chunk is flushed
        # when it fills up or after 5s, whichever comes first
        queue_limit_length 8
        overflow_action block
      </buffer>
    </match>

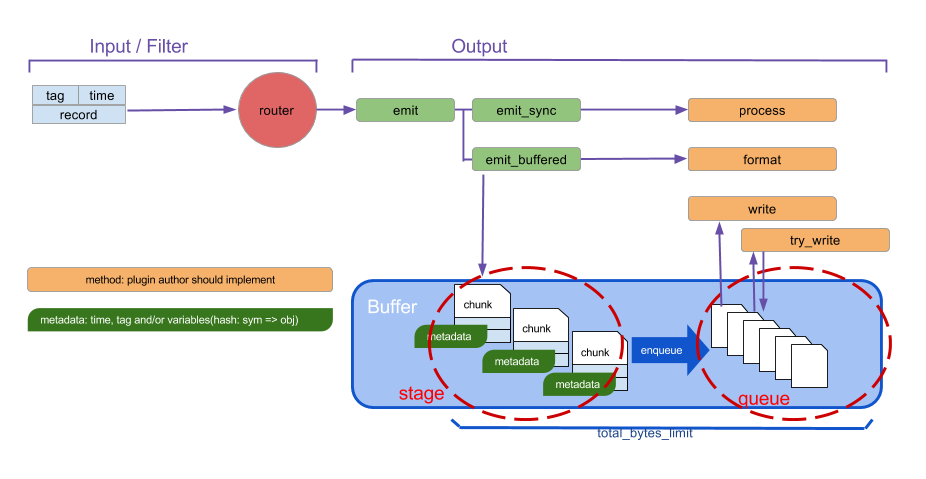

Buffer flow diagram:

Data is first buffered locally.

This reduces network I/O, because records are not sent one at a time.
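
The buffer settings in the configuration translate into two numbers that are useful when tuning: how much data can pile up locally, and how long Fluentd waits between retries. The sketch below works them out for `chunk_limit_size 2M`, `queue_limit_length 8`, and `retry_max_interval 30`. It assumes binary size suffixes, an initial retry wait of 1 second, and a backoff base of 2, and it ignores the random jitter that Fluentd can add; treat those as assumptions to verify against your Fluentd version.

```python
from typing import List

UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_size(text: str) -> int:
    """Convert a size such as '2M' into bytes (binary multiples)."""
    suffix = text[-1].lower()
    if suffix in UNITS:
        return int(text[:-1]) * UNITS[suffix]
    return int(text)


def max_buffered_bytes(chunk_limit_size: str, queue_limit_length: int) -> int:
    """Upper bound on queued data: chunk size times the number of queued chunks."""
    return parse_size(chunk_limit_size) * queue_limit_length


def retry_waits(attempts: int, max_interval: float,
                initial: float = 1.0, base: float = 2.0) -> List[float]:
    """Exponential backoff waits in seconds, capped at max_interval."""
    return [min(initial * base ** n, max_interval) for n in range(attempts)]


print(max_buffered_bytes("2M", 8) // 1024 ** 2, "MiB")
print(retry_waits(7, 30.0))
```

With `overflow_action block`, input is paused once that upper bound is reached, so a long Elasticsearch outage slows log reading down instead of dropping records.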

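DaemonSet definition file fluentd.yaml; points to note:

- RBAC rules are required, because Fluentd queries the Kubernetes API for the metadata that belongs to each log record.

- The /var/log/containers/ directory must be mounted into the container.

- The configuration files in the Fluentd ConfigMaps must be mounted into the container.

- Nodes that should run Fluentd must be given the fluentd=true label.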
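
The effect of the node label can be modelled as a simple filter: a node is eligible only if it carries every label in the selector, and an empty selector accepts every node. The sketch below illustrates that rule only; it ignores taints, tolerations, and affinity, and the node inventory (including the `k8s-master` name) is hypothetical.

```python
from typing import Dict, List


def nodes_for_daemonset(nodes: Dict[str, Dict[str, str]],
                        node_selector: Dict[str, str]) -> List[str]:
    """Return the nodes whose labels contain every selector key/value pair."""
    return sorted(
        name
        for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in node_selector.items())
    )


# Hypothetical node inventory with labels.
NODES = {
    "k8s-master": {},
    "k8s-slave1": {"fluentd": "true"},
    "k8s-slave2": {"fluentd": "true", "log": "es"},
}

print(nodes_for_daemonset(NODES, {"fluentd": "true"}))
print(nodes_for_daemonset(NODES, {}))
```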

efk/fluentd.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd-es
  namespace: logging
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "namespaces"
  - "pods"
  verbs:
  - "get"
  - "watch"
  - "list"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: fluentd-es
  namespace: logging
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd-es
  apiGroup: ""
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    k8s-app: fluentd-es
  name: fluentd-es
  namespace: logging
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-es
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
    spec:
      containers:
      - env:
        - name: FLUENTD_ARGS
          value: --no-supervisor -q
        image: mrliulei/fluentd-es-root:v1.6.2-1.0
        imagePullPolicy: IfNotPresent
        name: fluentd-es
        resources:
          limits:
            memory: 500Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - mountPath: /var/log
          name: varlog
        - mountPath: /var/lib/docker/containers
          name: varlibdockercontainers
          readOnly: true
        - mountPath: /home/docker/containers
          name: varlibdockercontainershome
          readOnly: true
        - mountPath: /fluentd/etc/config.d
          name: config-volume
        - mountPath: /fluentd/etc/fluent.conf
          name: config-volume-main
          subPath: fluent.conf
      nodeSelector:
        # fluentd: "true"
      securityContext: {}
      serviceAccount: fluentd-es
      serviceAccountName: fluentd-es
      volumes:
      - hostPath:
          path: /var/log
          type: ""
        name: varlog
      - hostPath:
          path: /var/lib/docker/containers
          type: ""
        name: varlibdockercontainers
      - hostPath:
          path: /home/docker/containers
          type: ""
        name: varlibdockercontainershome
      - configMap:
          defaultMode: 420
          name: fluentd-config
        name: config-volume
      - configMap:
          defaultMode: 420
          items:
          - key: fluent.conf
            path: fluent.conf
          name: fluentd-es-config-main
        name: config-volume-main

# Create the resources
$ kubectl create -f fluentd-es-main.yaml
$ kubectl create -f fluentd-configmap.yaml
$ kubectl create -f fluentd.yaml

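Verifying EFK

Verification approach

Start a service on k8s-slave1 and k8s-slave2 that prints test logs to standard output, then check in Kibana whether the logs are collected.

Create a test container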

apiVersion: v1
kind: Pod
metadata:
  name: counter
spec:
  nodeSelector:
    # fluentd: "true"
  containers:
  - name: count
    image: alpine:3.6
    args: [/bin/sh, -c,
           'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done']

$ kubectl get po
NAME      READY   STATUS    RESTARTS   AGE
counter   1/1     Running   0          6s

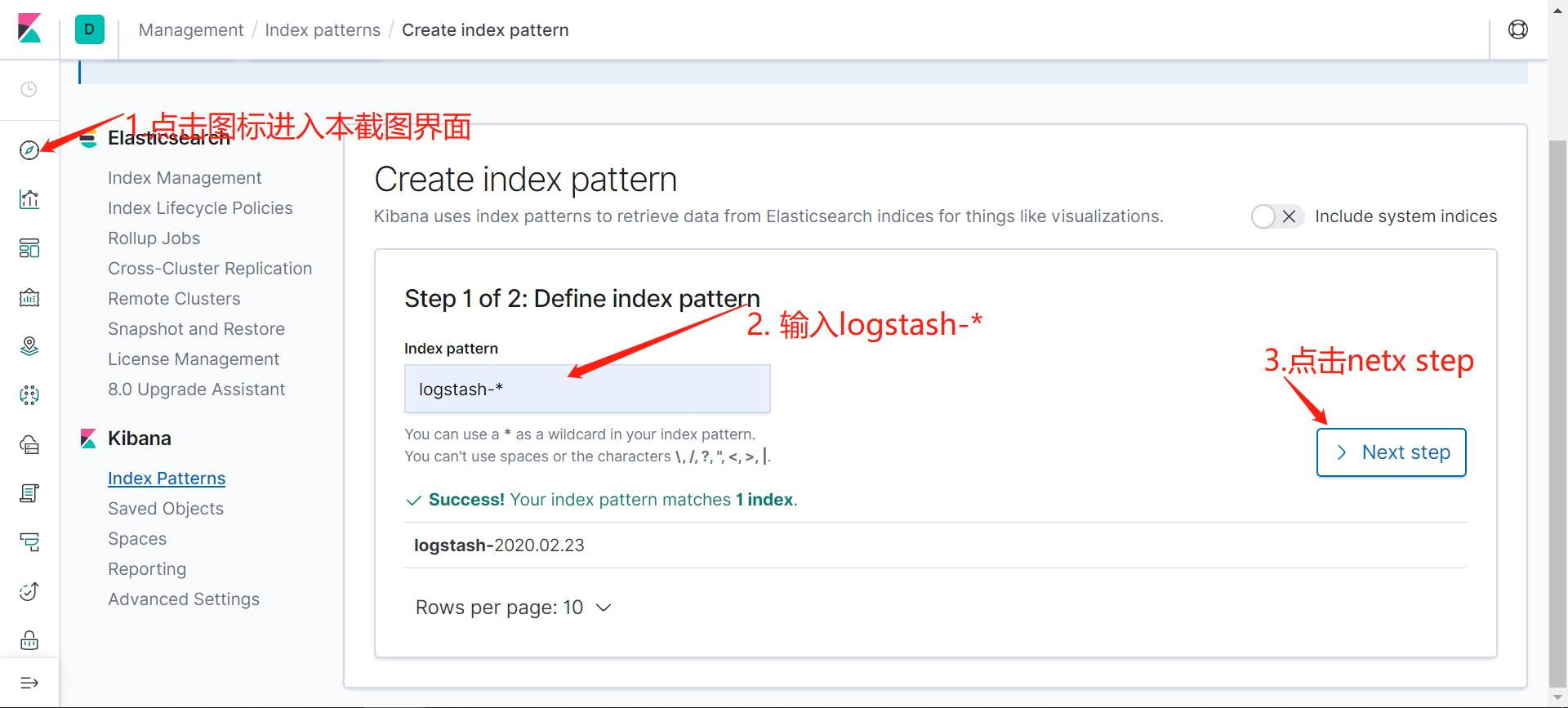

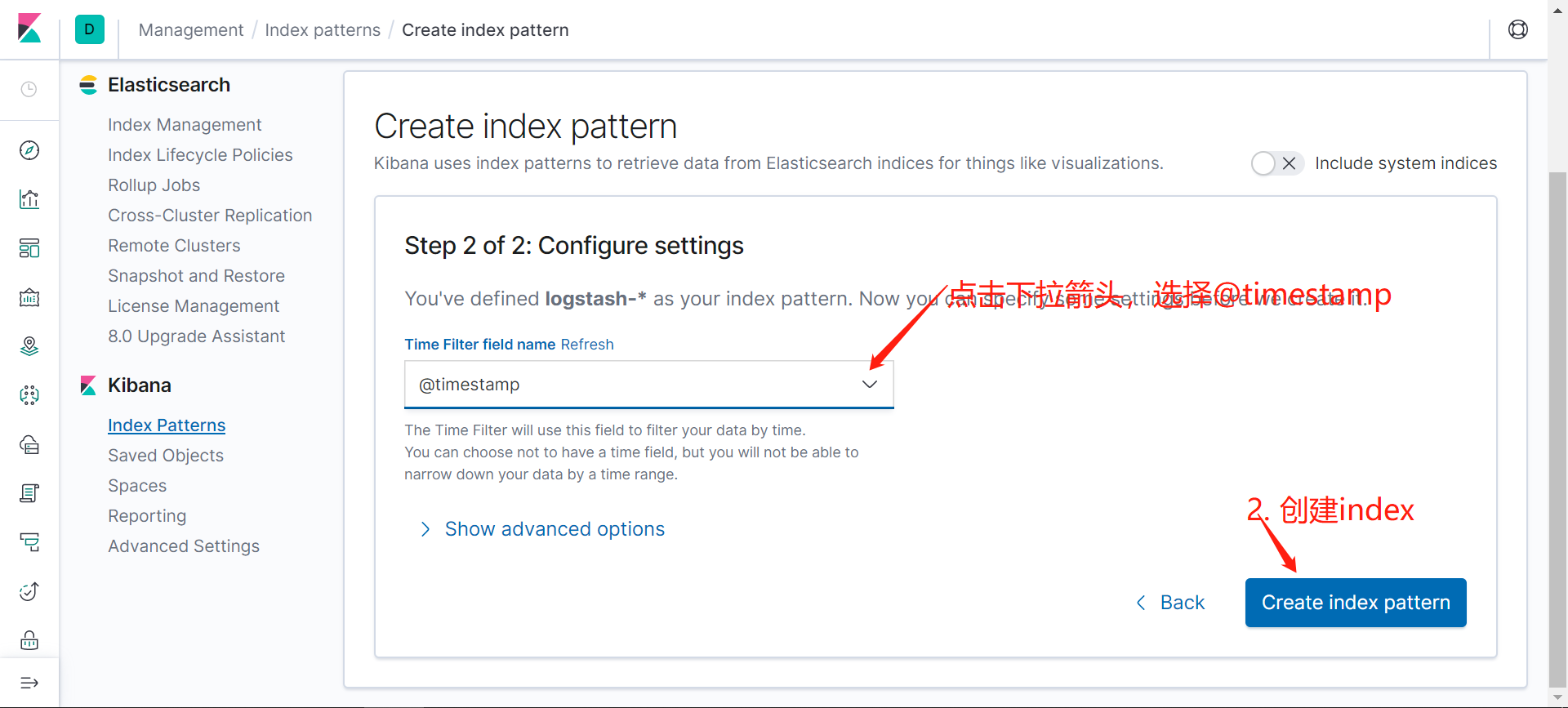

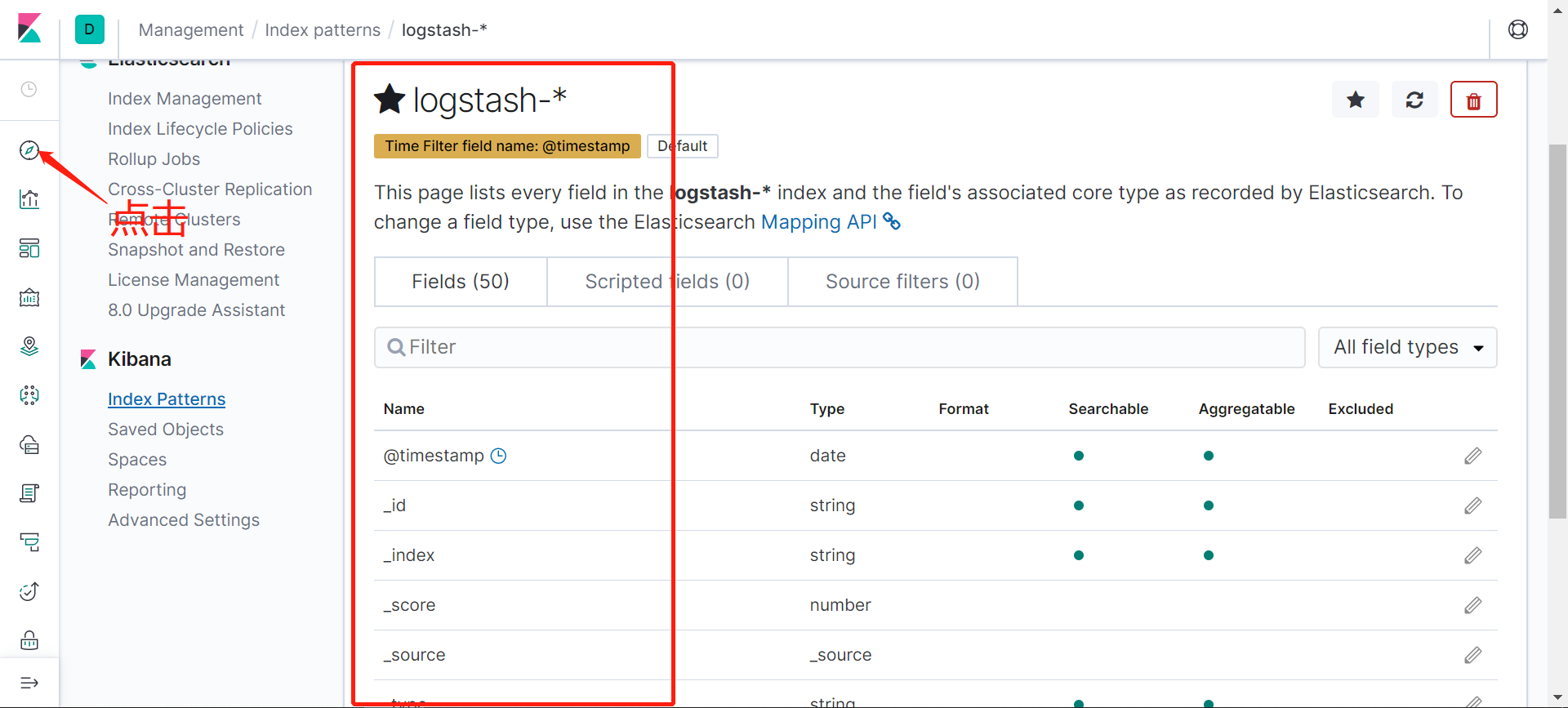

Configuring Kibana

Log in to the Kibana UI and follow the steps in the screenshot in order:

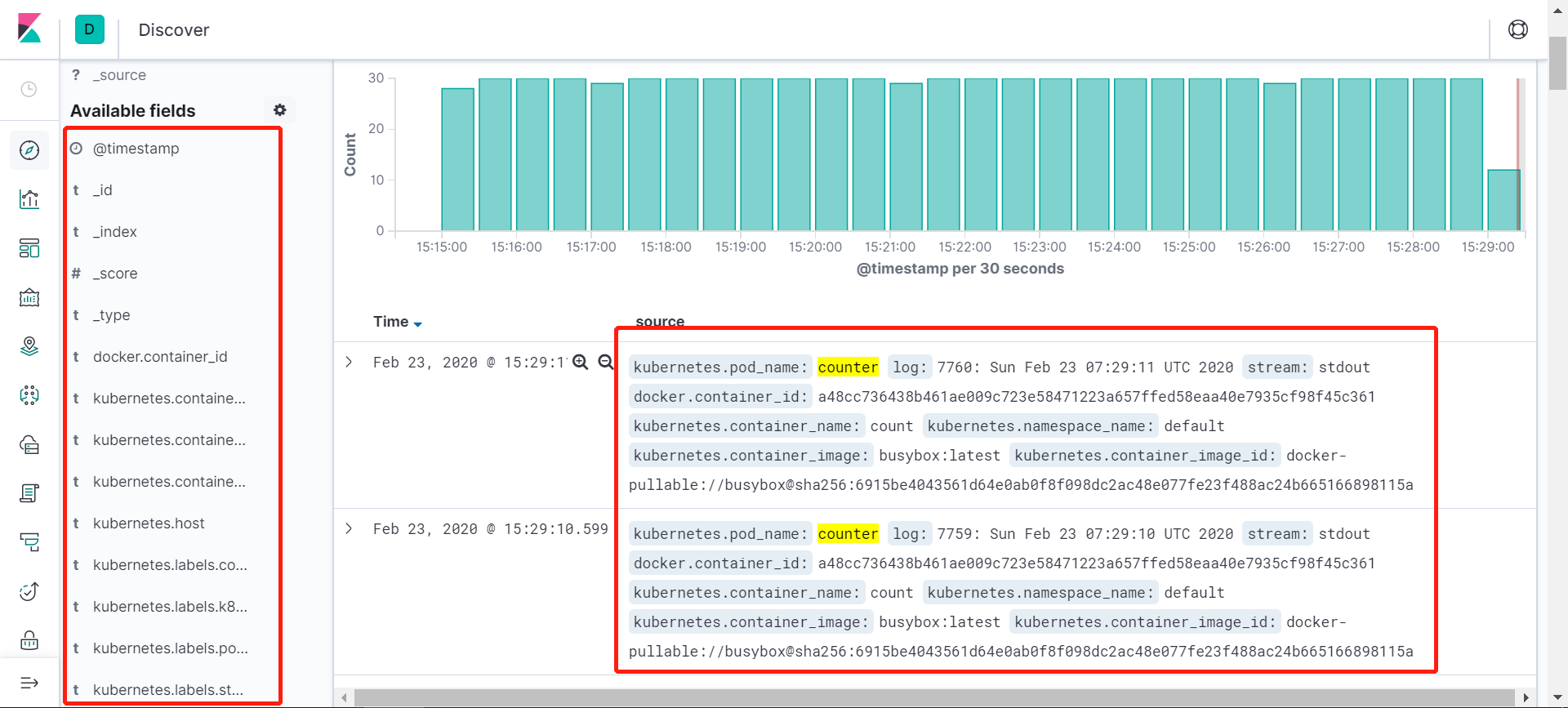

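You can also filter the log data by other metadata. For example, click any log entry to see its additional metadata, such as the container name, Kubernetes node, and namespace, and then filter with a query such as kubernetes.pod_name : counter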
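
Two details behind that query can be sketched in Python. First, with `logstash_format true` the records go into daily indices; the sketch assumes the plugin's default `logstash` prefix and `%Y.%m.%d` date format, which is why an index pattern such as `logstash-*` would match them. Second, a query like `kubernetes.pod_name : counter` compares a nested field addressed by a dotted path. The documents and helper names below are invented for the example.

```python
from datetime import date
from typing import Any, Dict, List


def logstash_index(day: date, prefix: str = "logstash") -> str:
    """Daily index name in the form <prefix>-YYYY.MM.DD."""
    return f"{prefix}-{day:%Y.%m.%d}"


def get_field(doc: Dict[str, Any], dotted: str) -> Any:
    """Follow a dotted path such as 'kubernetes.pod_name' into nested dicts."""
    value: Any = doc
    for key in dotted.split("."):
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def filter_docs(docs: List[Dict[str, Any]], field: str,
                expected: str) -> List[Dict[str, Any]]:
    """Keep the documents whose field equals the expected value."""
    return [doc for doc in docs if get_field(doc, field) == expected]


# Hypothetical documents as they might look after metadata enrichment.
DOCS = [
    {"log": "0: Thu Jul 21", "kubernetes": {"pod_name": "counter", "namespace_name": "default"}},
    {"log": "GET /", "kubernetes": {"pod_name": "kibana-abc", "namespace_name": "monitoring"}},
    {"log": "no metadata here"},
]

print(logstash_index(date(2022, 7, 21)))
print(filter_docs(DOCS, "kubernetes.pod_name", "counter"))
```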

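At this point we have successfully deployed EFK on the Kubernetes cluster. To learn how to use Kibana for log data analysis, see the Kibana user guide: https://www.elastic.co/guide/en/kibana/current/index.html

This article comes from cnblogs (博客园). Author: Star-Hitian. When reposting, please cite the original link: https://www.cnblogs.com/Star-Haitian/p/16502875.html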
