有一些数据需要实时的往 StarRocks 写,最开始想得很简单,直接用 insert into values 写入,后来遇到版本号超过 1000 问题。查官网才知道,每个 insert 都会生成一个版本,短时间往后台insert 大量数据是不可行的,才开始用 Stream Load 方式

java.sql.SQLSyntaxErrorException: Too many versions. tablet_id: 18398, version_count: 1001, limit: 1000

insert into values 方式写数据

insert into values 方式写数据很简单,就是 jdbc, 直接 insert 就可以。

样例:

INSERT INTO source_wiki_edit

WITH LABEL insert_load_wikipedia

VALUES

("2015-09-12 00:00:00","#en.wikipedia","AustinFF",0,0,0,0,0,21,5,0),

("2015-09-12 00:00:00","#ca.wikipedia","helloSR",0,1,0,1,0,3,23,0);

一切都如预想的一样,很快就把 insert into values 的程序写好了,上线运行才是噩梦的开始。

程序执行的时候,但凡数据量大一点(几万条),就经常触发 “Too many versions” 报错,只能降低写入频率,这样一来写入速度又慢了很多。

在看官网文档的时候,在 通过 INSERT INTO 语句导入数据 看到这样的描述:

频繁使用 INSERT INTO 语句导入小批量数据会产生过多的数据版本,从而影响查询性能,因此不建议您频繁使用 INSERT INTO 语句导入数据或将其作为生产环境的日常例行导入任务。如果您的业务场景需要流式导入或者小批量多次导入数据,建议使用 Apache Kafka® 作为数据源并通过 Routine Load 方式进行导入作业。

INSERT INTO VALUES 语句导入方式仅适用于导入少量数据作为验证 DEMO 用途,不适用于大规模测试或生产环境。如需大规模导入数据,请选择其他导入方式。

我们的业务场景是 流式导入,又不方便使用 flink,就决定“借鉴一下” StarRocks 提供 flink-connector-starrocks 的代码,通过缓存并批量由 stream load 导入

Stream Load 导入

官网介绍:

StarRocks 提供基于 HTTP 协议的 Stream Load 导入方式,帮助您从本地文件系统或流式数据源导入数据。

Stream Load 是一种同步的导入方式。您提交导入作业以后,StarRocks 会同步地执行导入作业,并返回导入作业的结果信息。您可以通过返回的结果信息来判断导入作业是否成功。

导入本地数据文件:

一般可采用 curl 命令直接提交一个导入作业,将本地数据文件的数据导入到 StarRocks 中。

导入实时产生的数据流:

一般可采用 Apache Flink® 等程序提交一个导入作业,持续生成一系列导入任务,将实时产生的数据流持续不断地导入到 StarRocks 中。

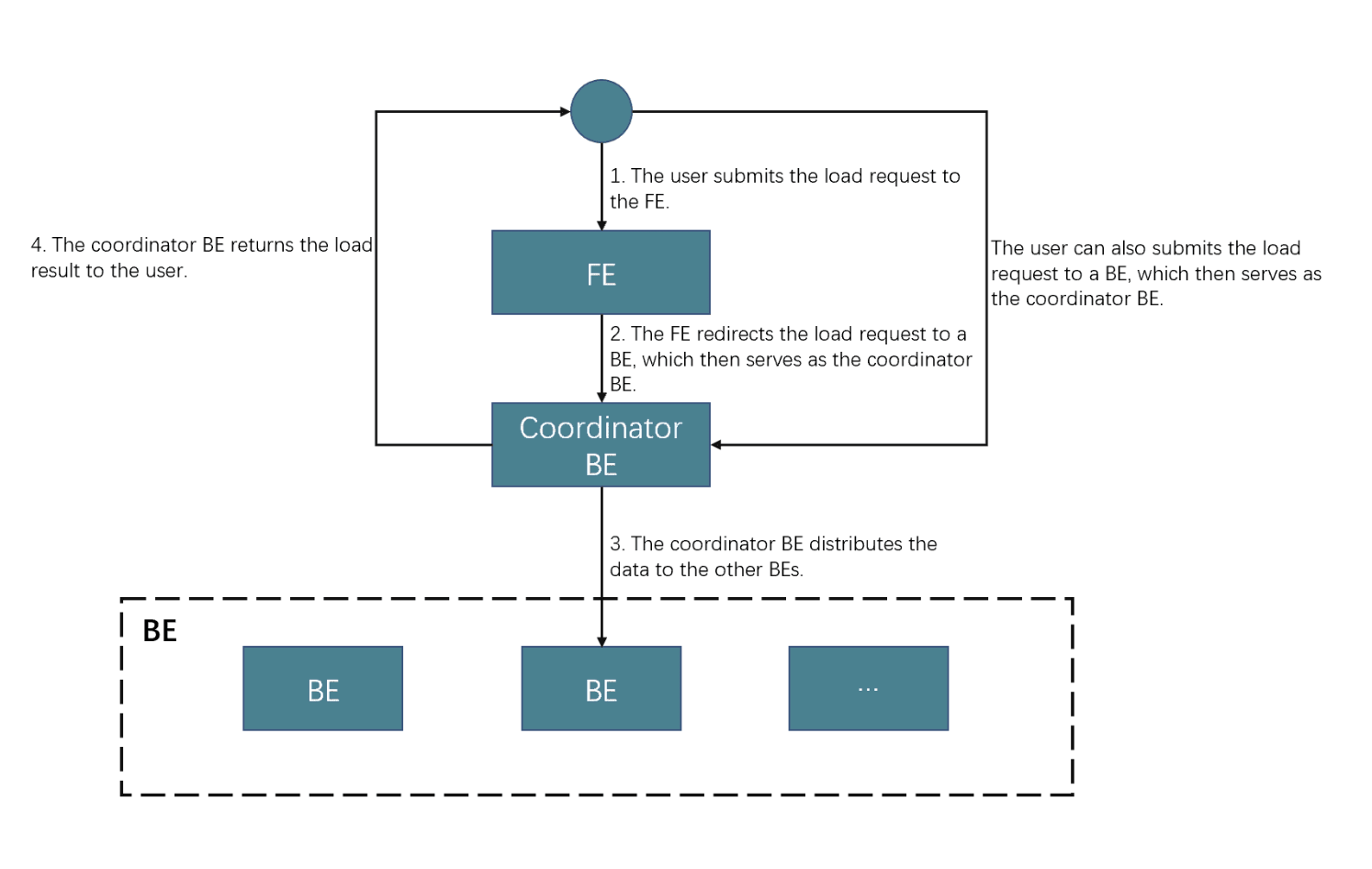

导入原理

Stream Load 需要您在客户端上通过 HTTP 发送导入作业请求给 FE 节点,FE 节点会通过 HTTP 重定向 (Redirect) 指令将请求转发给某一个 BE 节点。

接收导入作业请求的 BE 节点作为 Coordinator BE 节点,将数据按表结构划分、并分发数据到相关的 BE 节点。导入作业的结果信息由 Coordinator BE 节点返回给客户端。

主要流程:

注:以上内容来自官网

官网样例

StarRocks 的 github 提供了一个 java 集成 Stream Load 的样例:

public class StarRocksStreamLoad {

private final static String STARROCKS_HOST = "xxx.com";

private final static String STARROCKS_DB = "test";

private final static String STARROCKS_TABLE = "stream_test";

private final static String STARROCKS_USER = "root";

private final static String STARROCKS_PASSWORD = "xxx";

private final static int STARROCKS_HTTP_PORT = 8030;

private void sendData(String content) throws Exception {

final String loadUrl = String.format("http://%s:%s/api/%s/%s/_stream_load",

STARROCKS_HOST,

STARROCKS_HTTP_PORT,

STARROCKS_DB,

STARROCKS_TABLE);

final HttpClientBuilder httpClientBuilder = HttpClients

.custom()

.setRedirectStrategy(new DefaultRedirectStrategy() {

@Override

protected boolean isRedirectable(String method) {

return true;

}

});

try (CloseableHttpClient client = httpClientBuilder.build()) {

HttpPut put = new HttpPut(loadUrl);

StringEntity entity = new StringEntity(content, "UTF-8");

put.setHeader(HttpHeaders.EXPECT, "100-continue");

put.setHeader(HttpHeaders.AUTHORIZATION, basicAuthHeader(STARROCKS_USER, STARROCKS_PASSWORD));

// the label header is optional, not necessary

// use label header can ensure at most once semantics

put.setHeader("label", "39c25a5c-7000-496e-a98e-348a264c81de");

put.setEntity(entity);

try (CloseableHttpResponse response = client.execute(put)) {

String loadResult = "";

if (response.getEntity() != null) {

loadResult = EntityUtils.toString(response.getEntity());

}

final int statusCode = response.getStatusLine().getStatusCode();

// statusCode 200 just indicates that starrocks be service is ok, not stream load

// you should see the output content to find whether stream load is success

if (statusCode != 200) {

throw new IOException(

String.format("Stream load failed, statusCode=%s load result=%s", statusCode, loadResult));

}

System.out.println(loadResult);

}

}

}

private String basicAuthHeader(String username, String password) {

final String tobeEncode = username + ":" + password;

byte[] encoded = Base64.encodeBase64(tobeEncode.getBytes(StandardCharsets.UTF_8));

return "Basic " + new String(encoded);

}

public static void main(String[] args) throws Exception {

int id1 = 1;

int id2 = 10;

String id3 = "Simon";

int rowNumber = 10;

String oneRow = id1 + "\t" + id2 + "\t" + id3 + "\n";

StringBuilder stringBuilder = new StringBuilder();

for (int i = 0; i < rowNumber; i++) {

stringBuilder.append(oneRow);

}

stringBuilder.deleteCharAt(stringBuilder.length() - 1);

String loadData = stringBuilder.toString();

StarRocksStreamLoad starrocksStreamLoad = new StarRocksStreamLoad();

starrocksStreamLoad.sendData(loadData);

}

}

注: 代码来源 https://github.com/StarRocks/demo/blob/master/MiscDemo/stream_load/java/StarRocksStreamLoad.java

代码逻辑:

1、main 函数里面生产了 10 条数据,用 "\n" 做分隔符,拼接在一起

2、使用 http 的方式把数据发给 StarRocks

实际使用

实际使用的时候,参考了 flink-connector-starrocks 的代码

http 创建的时候添加 ContentLengthHeaderRemover 拦截器,移除 http request 中的 Content-Length 参数,否则可能遇到报错: Content-Length header already present

header 参数:

1. columns 设置数据中列的顺序

2. row_delimiter 设置行分隔符

3. column_separator 设置列分隔符

核心代码如下:

private static void write(String database, String table, List<String> records) throws Exception {

// 转 byte

StarRocksSinkBufferEntity bufferEntity = new StarRocksSinkBufferEntity(database, table, labelPrefix);

for (String record : records) {

byte[] bts = record.getBytes(StandardCharsets.UTF_8);

bufferEntity.addToBuffer(bts);

}

// 多行合并

byte[] data = joinRows(bufferEntity.getBuffer(), (int) bufferEntity.getBatchSize());

// 导入的 url

String loadUrl = new StringBuilder(url)

.append("/api/")

.append(bufferEntity.getDatabase())

.append("/")

.append(bufferEntity.getTable())

.append("/_stream_load")

.toString();

// random load label

String label = createBatchLabel();

// do http

Map<String, Object> map = doHttpPut(loadUrl, label, data);

for (Map.Entry entry : map.entrySet()) {

System.out.println(entry.getKey() + " : " + entry.getValue().toString());

}

}

public static String createBatchLabel() {

StringBuilder sb = new StringBuilder();

if (!Strings.isNullOrEmpty(labelPrefix)) {

sb.append(labelPrefix);

}

return sb.append(UUID.randomUUID().toString()).toString();

}

private static Map<String, Object> doHttpPut(String loadUrl, String label, byte[] data) throws IOException {

LOG.info(String.format("Executing stream load to: '%s', size: '%s', thread: %d", loadUrl, data.length, Thread.currentThread().getId()));

final HttpClientBuilder httpClientBuilder = HttpClients.custom()

.setRedirectStrategy(new DefaultRedirectStrategy() {

@Override

protected boolean isRedirectable(String method) {

return true;

}

})

// 解决 Content-Length bug : Caused by: org.apache.http.ProtocolException: Content-Length header already present at

.addInterceptorFirst(new ContentLengthHeaderRemover());

try (CloseableHttpClient httpclient = httpClientBuilder.build()) {

HttpPut httpPut = new HttpPut(loadUrl);

// 指定列名

httpPut.setHeader("columns", "`col1`,`col2`,`col3`,`col4`");

if (!httpPut.containsHeader("timeout")) {

httpPut.setHeader("timeout", "60");

}

if ("json".equals(format)) {

httpPut.setHeader("strip_outer_array", "true");

httpPut.setHeader("format", "json");

} else {

// 行、列分隔符

httpPut.setHeader("row_delimiter", "\\\\x02");

httpPut.setHeader("column_separator", "\\\\x01");

}

httpPut.setHeader("Expect", "100-continue");

httpPut.setHeader("label", label);

// 用户名、密码

httpPut.setHeader("Authorization", getBasicAuthHeader(user, pass));

httpPut.setEntity(new ByteArrayEntity(data));

httpPut.setConfig(RequestConfig.custom().setRedirectsEnabled(true).build());

try (CloseableHttpResponse resp = httpclient.execute(httpPut)) {

HttpEntity respEntity = getHttpEntity(resp);

if (respEntity == null)

return null;

return (Map<String, Object>) JSON.parse(EntityUtils.toString(respEntity));

}

}

}

private static String getBasicAuthHeader(String username, String password) {

String auth = username + ":" + password;

byte[] encodedAuth = Base64.encodeBase64(auth.getBytes(StandardCharsets.UTF_8));

return new StringBuilder("Basic ").append(new String(encodedAuth)).toString();

}

private static HttpEntity getHttpEntity(CloseableHttpResponse resp) {

int code = resp.getStatusLine().getStatusCode();

if (200 != code) {

LOG.warn("Request failed with code:{}", code);

return null;

}

HttpEntity respEntity = resp.getEntity();

if (null == respEntity) {

LOG.warn("Request failed with empty response.");

return null;

}

return respEntity;

}

private static class ContentLengthHeaderRemover implements HttpRequestInterceptor {

@Override

public void process(HttpRequest request, HttpContext context) throws HttpException, IOException {

request.removeHeaders(HTTP.CONTENT_LEN);// fighting org.apache.http.protocol.RequestContent's ProtocolException("Content-Length header already present");

}

}

private static byte[] joinRows(List<byte[]> rows, int totalBytes) {

if ("csv".equals(format)) {

// csv

byte[] lineDelimiter = ROW_SEP.getBytes(StandardCharsets.UTF_8);

ByteBuffer bos = ByteBuffer.allocate(totalBytes + rows.size() * lineDelimiter.length);

Iterator var5 = rows.iterator();

while (var5.hasNext()) {

byte[] row = (byte[]) var5.next();

bos.put(row);

bos.put(lineDelimiter);

}

return bos.array();

} else {

ByteBuffer bos = ByteBuffer.allocate(totalBytes + (rows.isEmpty() ? 2 : rows.size() + 1));

bos.put("[".getBytes(StandardCharsets.UTF_8));

byte[] jsonDelimiter = ",".getBytes(StandardCharsets.UTF_8);

boolean isFirstElement = true;

for (byte[] row : rows) {

if (!isFirstElement) {

bos.put(jsonDelimiter);

}

bos.put(row);

isFirstElement = false;

}

bos.put("]".getBytes(StandardCharsets.UTF_8));

return bos.array();

}

}

StarRocksSinkBufferEntity 如下(借鉴过来的):

public class StarRocksSinkBufferEntity implements Serializable {

private static final long serialVersionUID = 1L;

private ArrayList<byte[]> buffer = new ArrayList<>();

private int batchCount = 0;

private long batchSize = 0;

private String label;

private String database;

private String table;

private boolean EOF;

private String labelPrefix;

public StarRocksSinkBufferEntity(String database, String table, String labelPrefix) {

this.database = database;

this.table = table;

this.labelPrefix = labelPrefix;

label = createBatchLabel();

}

public StarRocksSinkBufferEntity asEOF() {

EOF = true;

return this;

}

public boolean EOF() {

return EOF;

}

public String getDatabase() {

return database;

}

public void setDatabase(String database) {

this.database = database;

}

public String getTable() {

return table;

}

public void setTable(String table) {

this.table = table;

}

public String getLabel() {

return label;

}

public ArrayList<byte[]> getBuffer() {

return buffer;

}

public void addToBuffer(byte[] bts) {

incBatchCount();

incBatchSize(bts.length);

buffer.add(bts);

}

public int getBatchCount() {

return batchCount;

}

private void incBatchCount() {

this.batchCount += 1;

}

public long getBatchSize() {

return batchSize;

}

private void incBatchSize(long batchSize) {

this.batchSize += batchSize;

}

public synchronized void clear() {

buffer.clear();

batchCount = 0;

batchSize = 0;

label = createBatchLabel();

}

public String reGenerateLabel() {

return label = createBatchLabel();

}

public String createBatchLabel() {

StringBuilder sb = new StringBuilder();

if (!Strings.isNullOrEmpty(labelPrefix)) {

sb.append(labelPrefix);

}

return sb.append(UUID.randomUUID().toString()).toString();

}

}

总结

改成 stream load 设置的是:每分钟或者每 1000 条往 StarRocks 写一次,暂时没遇到什么问题

需要注意的是,字符串类型的 Null ,需要同 "\N" 替换,不然写到 StarRocks 会变成 字符串 ‘null’,或者 空字符串

参考:

github starrocks-connector-for-apache-flink

github starrocks demo

欢迎关注Flink菜鸟公众号,会不定期更新Flink(开发技术)相关的推文

浙公网安备 33010602011771号

浙公网安备 33010602011771号