Building the Flink CDC connector against Flink 1.14

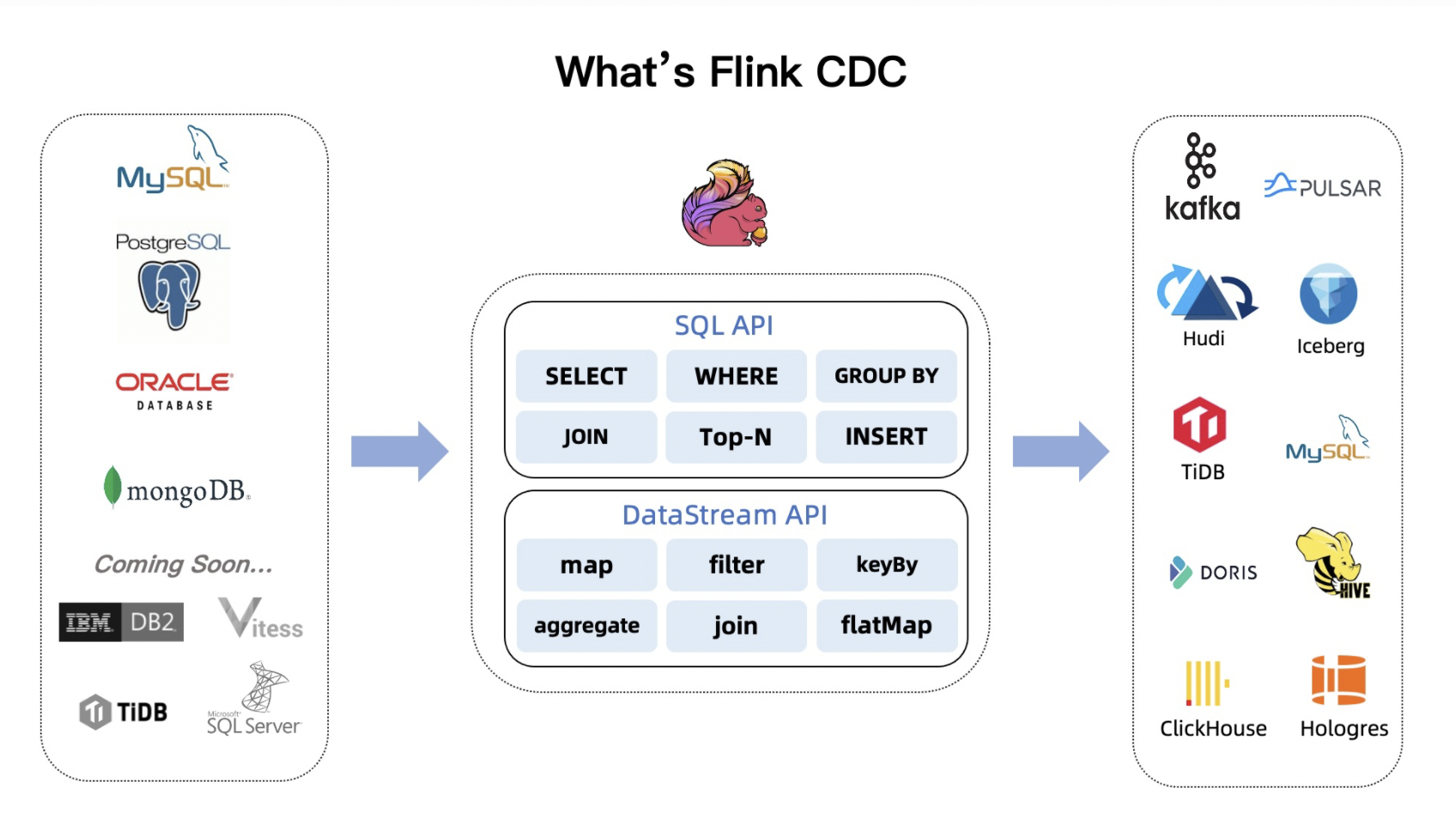

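Flink CDC Connectors is a set of source connectors for Apache Flink that ingest changes from different databases using change data capture (CDC). The connectors integrate Debezium as the engine that captures data changes, so they can take full advantage of Debezium's capabilities.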

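As one of the most popular Flink connectors, it has drawn a lot of attention ever since it was open-sourced. Support has grown from the original MySQL and PostgreSQL to MongoDB, Oracle, and SQL Server, covering synchronization from a wide range of data sources.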

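The Flink CDC connector 2.0 release in particular brought a big stability improvement (dynamic chunk splitting, checkpoint support during the initial snapshot phase) and a feature enhancement (lock-free snapshot).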

What's Flink CDC:

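Flink 1.14 added support for checkpoints while some operators are already in the finished state, so I upgraded my Flink to 1.14 (I often run stream-table join jobs in which the table side practically never changes).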

The problem is that the Flink CDC connector only supports Flink 1.13.x, so my jobs had to switch between two Flink versions, which is a bit annoying.

I happened to be reworking my CDC jobs recently, so I tried changing the Flink version used by the Flink CDC connector to 1.14.3.

Preparation

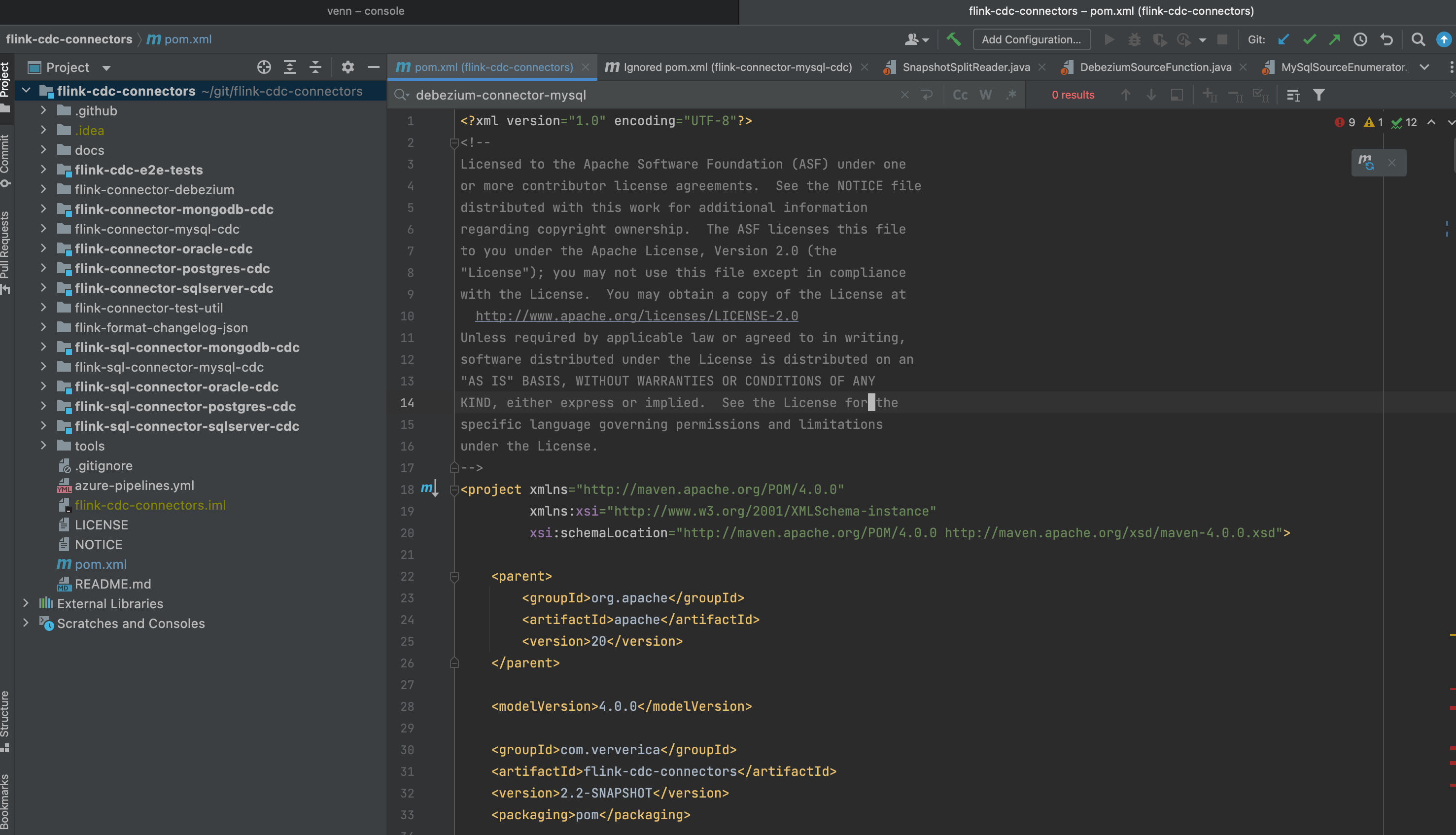

Fork https://github.com/ververica/flink-cdc-connectors on GitHub into your own account, clone the fork, and change into the directory:

venn@venn git % git clone git@github.com:springMoon/flink-cdc-connectors.git

Cloning into 'flink-cdc-connectors'...

remote: Enumerating objects: 6717, done.

remote: Counting objects: 100% (1056/1056), done.

remote: Compressing objects: 100% (220/220), done.

remote: Total 6717 (delta 855), reused 892 (delta 795), pack-reused 5661

Receiving objects: 100% (6717/6717), 10.21 MiB | 3.36 MiB/s, done.

Resolving deltas: 100% (2502/2502), done.

venn@venn git % cd flink-cdc-connectors

venn@venn flink-cdc-connectors % ls

LICENSE flink-connector-debezium flink-connector-test-util flink-sql-connector-sqlserver-cdc

NOTICE flink-connector-mongodb-cdc flink-format-changelog-json pom.xml

README.md flink-connector-mysql-cdc flink-sql-connector-mongodb-cdc tools

azure-pipelines.yml flink-connector-oracle-cdc flink-sql-connector-mysql-cdc

docs flink-connector-postgres-cdc flink-sql-connector-oracle-cdc

flink-cdc-e2e-tests flink-connector-sqlserver-cdc flink-sql-connector-postgres-cdc

venn@venn flink-cdc-connectors %

Import the project into IntelliJ IDEA

Change the Flink and Scala versions

<flink.version>1.14.3</flink.version>

<scala.binary.version>2.12</scala.binary.version>

- Scala 2.12 is the version chosen here

Update the blink dependencies

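Starting with Flink 1.14, the former blink planner became Flink's only planner, and the corresponding artifacts were renamed: flink-table-planner-blink -> flink-table-planner, flink-table-runtime-blink -> flink-table-runtime

So every dependency on flink-table-planner-blink and flink-table-runtime-blink in the pom files has to be changed to the new artifact names.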

- Note: I only need the MySQL CDC connector, so I deleted the pg, Oracle, MongoDB, and SQL Server modules, and flink-cdc-e2e-tests as well. This is what is left:

venn@venn flink-cdc-connectors % ls

LICENSE azure-pipelines.yml flink-connector-debezium flink-format-changelog-json tools

NOTICE docs flink-connector-mysql-cdc flink-sql-connector-mysql-cdc

README.md flink-cdc-connectors.iml flink-connector-test-util pom.xml

venn@venn flink-cdc-connectors %

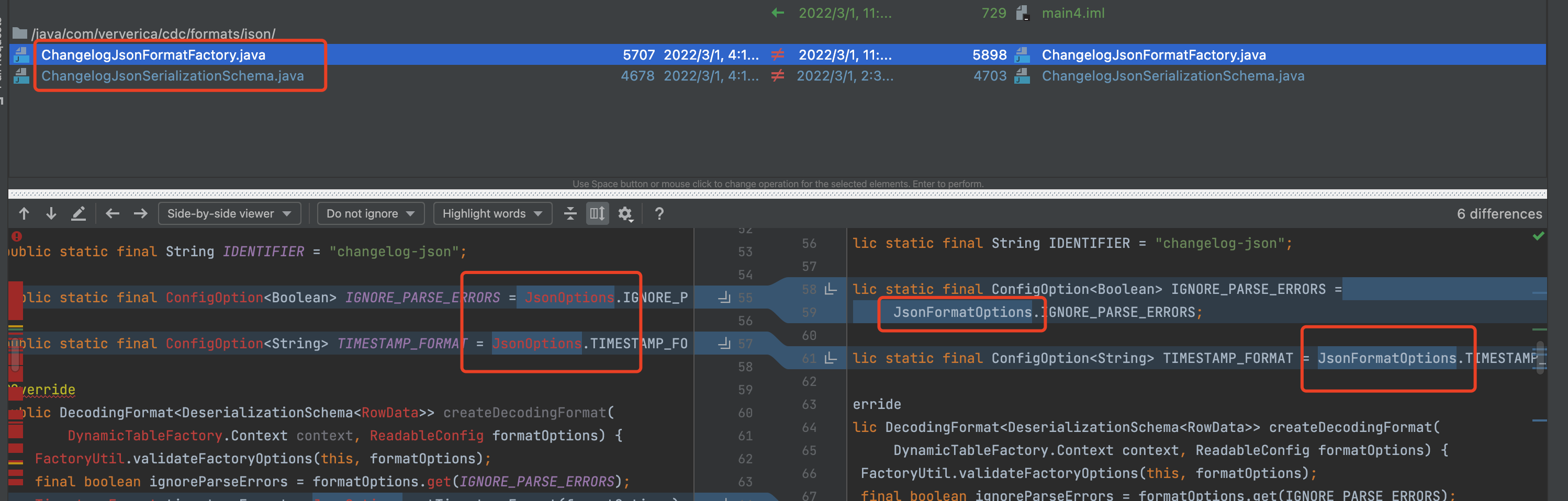

Adapt to the flink-json changes

In Flink 1.14 the former org.apache.flink.formats.json.JsonOptions was replaced by org.apache.flink.formats.json.JsonFormatOptions and JsonFormatOptionsUtil, so the references to it have to be updated.

- Note: there were also two checkstyle issues, which I won't go into here

Change the flink-shaded-guava version

With the changes so far the project compiles, but at runtime the job fails with a flink-shaded-guava conflict:

java.lang.RuntimeException: One or more fetchers have encountered exception

at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)

at org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)

at org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)

at org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:350)

at org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)

at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)

at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:496)

at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:203)

at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:809)

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:761)

at org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:958)

at org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:937)

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:766)

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:575)

at java.lang.Thread.run(Thread.java:750)

Caused by: java.lang.NoClassDefFoundError: org/apache/flink/shaded/guava18/com/google/common/util/concurrent/ThreadFactoryBuilder

at com.ververica.cdc.connectors.mysql.debezium.reader.SnapshotSplitReader.<init>(SnapshotSplitReader.java:81)

at com.ververica.cdc.connectors.mysql.source.reader.MySqlSplitReader.checkSplitOrStartNext(MySqlSplitReader.java:128)

at com.ververica.cdc.connectors.mysql.source.reader.MySqlSplitReader.fetch(MySqlSplitReader.java:69)

at org.apache.flink.connector.base.source.reader.fetcher.FetchTask.run(FetchTask.java:58)

at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:142)

at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

... 1 more

Flink 1.14 and 1.13 use different, mutually incompatible versions of flink-shaded-guava, so the flink-shaded-guava version in the CDC project has to be changed to the one Flink 1.14 depends on:

<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-shaded-guava</artifactId>
    <version>18.0-13.0</version>
</dependency>

change it to:

<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-shaded-guava</artifactId>
    <version>30.1.1-jre-14.0</version>
</dependency>
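Because a NoClassDefFoundError like the one in the stack trace above only surfaces at runtime, it can help to check which relocated Guava package a given classpath actually provides before submitting a job. The following is a minimal JDK-only sketch, not part of the connector: the class name ShadedGuavaProbe is made up for illustration, the guava18 prefix is taken from the stack trace, and the guava30 prefix is the one used in the import change later in this post.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Reports which relocated Guava packages are loadable on the current classpath. */
final class ShadedGuavaProbe {

    // Relocation prefixes: guava18 from the stack trace, guava30 from the import fix.
    static final String[] PREFIXES = {
        "org.apache.flink.shaded.guava18",
        "org.apache.flink.shaded.guava30"
    };

    // The class named in the NoClassDefFoundError, relative to the prefix.
    static final String SUFFIX =
        ".com.google.common.util.concurrent.ThreadFactoryBuilder";

    /** Returns true if the class can be found; static initializers are not run. */
    static boolean isLoadable(String className) {
        try {
            Class.forName(className, false, ShadedGuavaProbe.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    /** Maps each relocation prefix to whether its ThreadFactoryBuilder is loadable. */
    static Map<String, Boolean> probe() {
        Map<String, Boolean> result = new LinkedHashMap<>();
        for (String prefix : PREFIXES) {
            result.put(prefix, isLoadable(prefix + SUFFIX));
        }
        return result;
    }

    public static void main(String[] args) {
        probe().forEach((prefix, found) ->
            System.out.println(prefix + " -> " + (found ? "present" : "missing")));
    }
}
```

Run it with the same classpath as the job (for example with the jars from Flink's lib directory). If only the guava30 line reports "present" while the connector jar still references guava18, the failure above is to be expected.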

Update the references to flink-shaded-guava classes

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.7.0:compile (default-compile) on project flink-connector-debezium: Compilation failure: Compilation failure:

[ERROR] /Users/venn/git/flink-cdc-connectors/flink-connector-debezium/src/main/java/com/ververica/cdc/debezium/DebeziumSourceFunction.java:[41,73] package org.apache.flink.shaded.guava18.com.google.common.util.concurrent does not exist

[ERROR] /Users/venn/git/flink-cdc-connectors/flink-connector-debezium/src/main/java/com/ververica/cdc/debezium/DebeziumSourceFunction.java:[219,21] cannot find symbol

[ERROR] symbol: class ThreadFactoryBuilder

[ERROR] location: class com.ververica.cdc.debezium.DebeziumSourceFunction<T>

Remove the import of org.apache.flink.shaded.guava18.com.google.common.util.concurrent.ThreadFactoryBuilder and replace it with: import org.apache.flink.shaded.guava30.com.google.common.util.concurrent.ThreadFactoryBuilder;
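The import change above is what this post actually does. As an aside, a different way to avoid being tied to the relocation prefix at all is a small thread factory built only on the JDK. This is a sketch of that alternative, not the connector's code: NamedThreadFactory and the "cdc-worker" prefix are hypothetical names chosen for the example.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

/** JDK-only thread factory that names threads "<prefix>-<n>" and sets the daemon flag. */
final class NamedThreadFactory implements ThreadFactory {

    private final String prefix;
    private final boolean daemon;
    private final AtomicInteger counter = new AtomicInteger();

    NamedThreadFactory(String prefix, boolean daemon) {
        this.prefix = prefix;
        this.daemon = daemon;
    }

    @Override
    public Thread newThread(Runnable task) {
        // Threads are numbered from 0 in creation order.
        Thread thread = new Thread(task, prefix + "-" + counter.getAndIncrement());
        thread.setDaemon(daemon);
        return thread;
    }

    public static void main(String[] args) throws Exception {
        ExecutorService executor =
            Executors.newSingleThreadExecutor(new NamedThreadFactory("cdc-worker", true));
        Callable<String> whoAmI = () -> Thread.currentThread().getName();
        System.out.println(executor.submit(whoAmI).get()); // prints "cdc-worker-0"
        executor.shutdown();
    }
}
```

The trade-off is a few extra lines of code in exchange for not having to touch imports again the next time the shaded Guava version changes.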

Build

venn@venn flink-cdc-connectors % mvn clean install -DskipTests

[INFO] Scanning for projects...

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Build Order:

[INFO]

[INFO] flink-cdc-connectors

[INFO] flink-connector-debezium

[INFO] flink-connector-test-util

[INFO] flink-connector-mysql-cdc

[INFO] flink-sql-connector-mysql-cdc

[INFO] flink-format-changelog-json

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building flink-cdc-connectors 2.2-SNAPSHOT

[INFO] ------------------------------------------------------------------------

[INFO]

.... (thousands of lines omitted)

tory/com/ververica/flink-format-changelog-json/2.2-SNAPSHOT/flink-format-changelog-json-2.2-SNAPSHOT-tests.jar

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] flink-cdc-connectors ............................... SUCCESS [ 7.295 s]

[INFO] flink-connector-debezium ........................... SUCCESS [ 17.599 s]

[INFO] flink-connector-test-util .......................... SUCCESS [ 3.390 s]

[INFO] flink-connector-mysql-cdc .......................... SUCCESS [ 10.641 s]

[INFO] flink-sql-connector-mysql-cdc ...................... SUCCESS [ 7.478 s]

[INFO] flink-format-changelog-json ........................ SUCCESS [ 0.761 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 47.450 s

[INFO] Finished at: 2022-03-01T17:09:57+08:00

[INFO] Final Memory: 124M/2872M

[INFO] ------------------------------------------------------------------------

venn@venn flink-cdc-connectors %

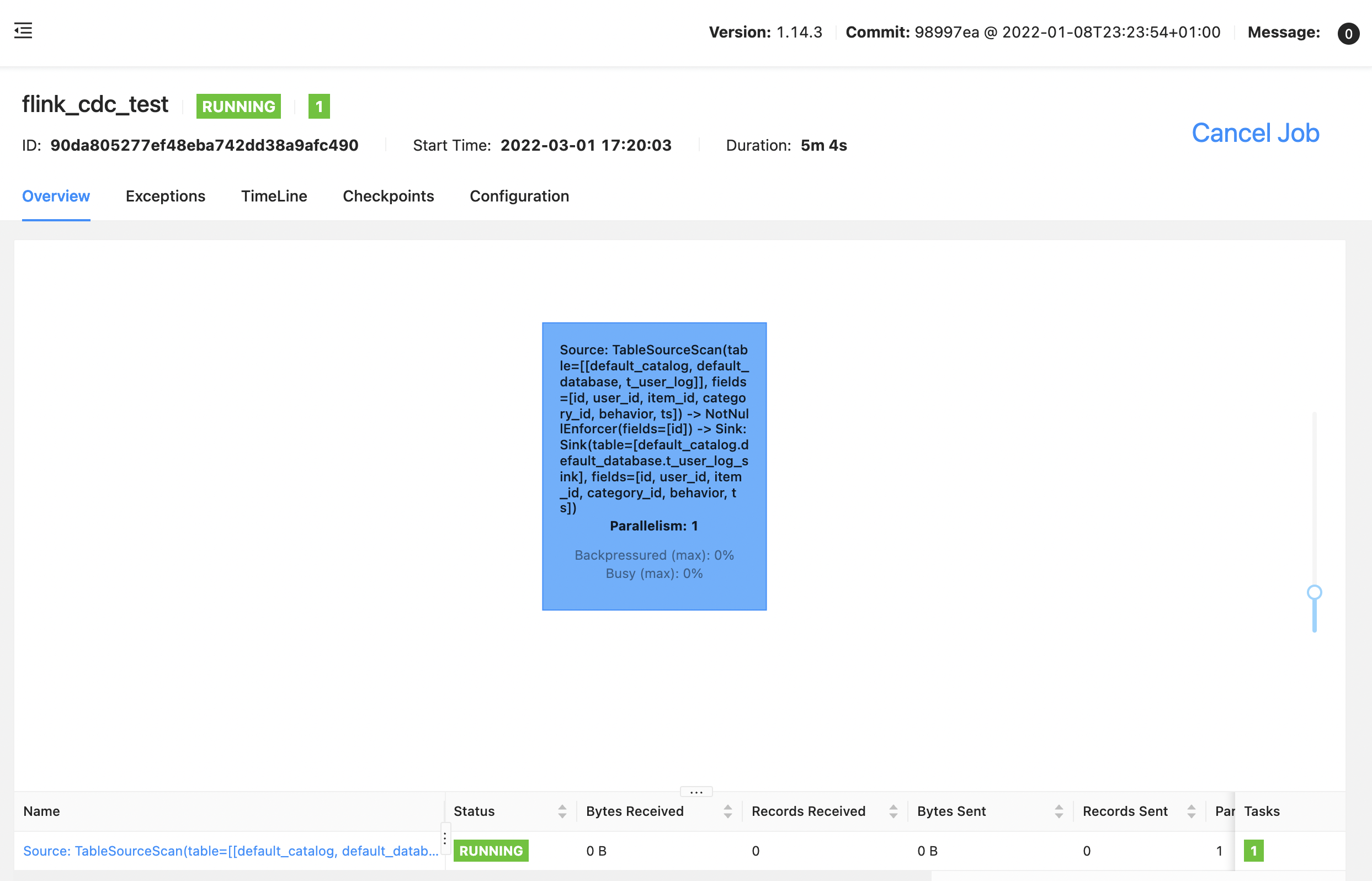

Using it in sqlSubmit

Change the Flink CDC connector version in sqlSubmit's pom.xml

<flink.cdc.version>2.2-SNAPSHOT</flink.cdc.version>

Then write a CDC SQL job:

-- mysql cdc to print
-- creates a mysql table source
CREATE TABLE t_user_log (
  id bigint
  ,user_id bigint
  ,item_id bigint
  ,category_id bigint
  ,behavior varchar
  ,ts timestamp(3)
  ,proc_time as PROCTIME()
  ,PRIMARY KEY (id) NOT ENFORCED
) WITH (
  'connector' = 'mysql-cdc'
  ,'hostname' = 'localhost'
  ,'port' = '3306'
  ,'username' = 'root'
  ,'password' = '123456'
  ,'database-name' = 'venn'
  ,'table-name' = 'user_log'
  ,'server-id' = '123456789'
  ,'scan.startup.mode' = 'initial'
--  ,'scan.startup.mode' = 'specific-offset'
--  ,'scan.startup.specific-offset.file' = 'mysql-bin.000001'
--  ,'scan.startup.specific-offset.pos' = '1'
);

-- print sink
drop table if exists t_user_log_sink;
CREATE TABLE t_user_log_sink (
  id bigint
  ,user_id bigint
  ,item_id bigint
  ,category_id bigint
  ,behavior varchar
  ,ts timestamp(3)
  ,PRIMARY KEY (id) NOT ENFORCED
) WITH (
  'connector' = 'print'
);

insert into t_user_log_sink
select id, user_id, item_id, category_id, behavior, ts
from t_user_log;

Start the job

With 'scan.startup.mode' = 'initial', mysql-cdc first takes a full snapshot of the existing data when the job starts, and subsequent changes are read from the binlog:

+I[2, 2, 100, 10, pv, 2022-03-01T09:19:43]

+I[3, 3, 100, 10, pv, 2022-03-01T09:19:45]

+I[1, 1, 100, 10, pv, 2022-03-01T09:19:40]

+I[4, 4, 100, 10, pv, 2022-03-01T09:19:46]

+I[5, 5, 100, 10, pv, 2022-03-01T09:19:48]

MySQL driver version conflict

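At first I was using version 8.0.27 of the MySQL driver and got the error below. It turned out to be a MySQL driver conflict; switching to 8.0.21, the version the Flink CDC connector depends on, fixed it.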

2022-03-01 17:28:51,970 WARN - Source: TableSourceScan(table=[[default_catalog, default_database, t_user_log]], fields=[id, user_id, item_id, category_id, behavior, ts]) -> NotNullEnforcer(fields=[id]) -> Sink: Sink(table=[default_catalog.default_database.t_user_log_sink], fields=[id, user_id, item_id, category_id, behavior, ts]) (1/1)#2 (5d66100fdfcd37d688545fc52d60f9e5) switched from RUNNING to FAILED with failure cause: java.lang.RuntimeException: One or more fetchers have encountered exception

at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)

at org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)

at org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)

at org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:350)

at org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)

at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)

at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:496)

at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:203)

at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:809)

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:761)

at org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:958)

at org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:937)

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:766)

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:575)

at java.lang.Thread.run(Thread.java:750)

Caused by: java.lang.NoSuchMethodError: com.mysql.cj.CharsetMapping.getJavaEncodingForMysqlCharset(Ljava/lang/String;)Ljava/lang/String;

at io.debezium.connector.mysql.MySqlValueConverters.charsetFor(MySqlValueConverters.java:331)

at io.debezium.connector.mysql.MySqlValueConverters.converter(MySqlValueConverters.java:298)

at io.debezium.relational.TableSchemaBuilder.createValueConverterFor(TableSchemaBuilder.java:400)

at io.debezium.relational.TableSchemaBuilder.convertersForColumns(TableSchemaBuilder.java:321)

at io.debezium.relational.TableSchemaBuilder.createValueGenerator(TableSchemaBuilder.java:246)

at io.debezium.relational.TableSchemaBuilder.create(TableSchemaBuilder.java:138)

at io.debezium.relational.RelationalDatabaseSchema.buildAndRegisterSchema(RelationalDatabaseSchema.java:130)

at io.debezium.relational.HistorizedRelationalDatabaseSchema.recover(HistorizedRelationalDatabaseSchema.java:52)

at com.ververica.cdc.connectors.mysql.debezium.task.context.StatefulTaskContext.validateAndLoadDatabaseHistory(StatefulTaskContext.java:162)

at com.ververica.cdc.connectors.mysql.debezium.task.context.StatefulTaskContext.configure(StatefulTaskContext.java:114)

at com.ververica.cdc.connectors.mysql.debezium.reader.SnapshotSplitReader.submitSplit(SnapshotSplitReader.java:92)

at com.ververica.cdc.connectors.mysql.debezium.reader.SnapshotSplitReader.submitSplit(SnapshotSplitReader.java:63)

at com.ververica.cdc.connectors.mysql.source.reader.MySqlSplitReader.checkSplitOrStartNext(MySqlSplitReader.java:163)

at com.ververica.cdc.connectors.mysql.source.reader.MySqlSplitReader.fetch(MySqlSplitReader.java:73)

at org.apache.flink.connector.base.source.reader.fetcher.FetchTask.run(FetchTask.java:58)

at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:142)

at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

... 1 more
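The NoSuchMethodError above means the driver class on the classpath no longer exposes the method with the signature Debezium was compiled against. A quick way to check a candidate driver jar before running the job is a reflection probe. This is a JDK-only sketch under stated assumptions: DriverMethodProbe is a made-up helper name, and the class, method, and signature are copied from the "Caused by" line of the trace.

```java
import java.lang.reflect.Method;

/** Checks via reflection whether a public method with a given signature exists. */
final class DriverMethodProbe {

    /**
     * Returns true if className is loadable and has a public method with the given
     * name, parameter types, and return type. The JVM links on the full descriptor,
     * so the return type is compared as well.
     */
    static boolean hasMethod(String className, String methodName,
                             Class<?> returnType, Class<?>... parameterTypes) {
        try {
            Class<?> type =
                Class.forName(className, false, DriverMethodProbe.class.getClassLoader());
            Method method = type.getMethod(methodName, parameterTypes);
            return method.getReturnType().equals(returnType);
        } catch (ClassNotFoundException | NoSuchMethodException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Signature from the trace: (Ljava/lang/String;)Ljava/lang/String;
        boolean compatible = hasMethod(
            "com.mysql.cj.CharsetMapping",
            "getJavaEncodingForMysqlCharset",
            String.class,
            String.class);
        System.out.println(compatible
            ? "driver exposes the method Debezium calls"
            : "method or class missing: expect NoSuchMethodError or a similar failure");
    }
}
```

Run it with the MySQL driver jar under test on the classpath. Without any driver jar the probe reports the class as missing, which is also a useful signal.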

Jar: flink-connector-mysql-cdc-2.2-SNAPSHOT.jar

For the complete example, see sqlSubmit on GitHub

Feel free to follow the Flink菜鸟 WeChat official account, which posts about Flink development from time to time

