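Checkpoint source code walkthrough:

Flink CheckpointCoordinator startup flow

Recently I have been reading the Flink Checkpoint source code. Starting from the creation of the CheckpointCoordinator, I debugged my way through the MiniCluster startup, the creation of the JobMaster/TaskManager, the creation of the CheckpointCoordinator, and the triggering of a Checkpoint. This post describes the MiniCluster startup first; the Checkpoint flow will follow in a later post.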

-------------------

Flink jobs can be executed in three modes:

* yarn cluster

* yarn session

* Application

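The focus here is running a job directly from IDEA, referred to below as "local mode". In local mode the job runs in an in-process MiniCluster started through LocalExecutor. This is easy to confuse with the Application mode in the list above, but the two differ: in Application mode main() runs on the JobManager of a cluster, while in local mode everything runs inside the IDE's JVM.

For background on the deployment modes, the following is translated from the official documentation: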

```

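In all the other modes, the application's main() method is executed on the client side.
This process includes downloading the application's dependencies locally, executing main()
to extract a representation of the application that Flink's runtime can understand (i.e. the
JobGraph), and shipping the dependencies and the JobGraph to the cluster. This makes the
client a heavy resource consumer, as it may need substantial network bandwidth to download
dependencies and ship binaries to the cluster, and CPU cycles to execute main(). The problem
can be more pronounced when the client is shared across users.

Building on this observation, Application Mode creates a cluster per submitted application,
but this time the application's main() method is executed on the JobManager. Creating a
cluster per application can be seen as creating a session cluster shared only among the jobs
of a particular application, and torn down when the application finishes. With this
architecture, Application Mode provides the same resource isolation and load balancing
guarantees as Per-Job mode, but at the granularity of a whole application. Executing main()
on the JobManager saves the CPU cycles required, and also the bandwidth required for
downloading the dependencies locally. Furthermore, since there is one JobManager per
application, the network load for downloading the applications' dependencies is spread more
evenly across the cluster.

Compared to Per-Job mode, Application Mode allows the submission of applications consisting
of multiple jobs. The order of job execution is not affected by the deployment mode but by
the call used to launch the job. Using execute(), which is blocking, establishes an order:
the execution of the "next" job is postponed until "this" job finishes. Using executeAsync(),
which is non-blocking, lets the "next" job start before "this" job finishes.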

```

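Official documentation: https://ci.apache.org/projects/flink/flink-docs-release-1.11/ops/deployment/#deployment-modes

What that page does not describe is local execution, where the job runs in an in-process MiniCluster. That is the mode used for development and debugging.

1. User code calls execute

Local mode starts from StreamExecutionEnvironment.execute in the user code.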

There are four entry points, two execute overloads and two executeAsync overloads:

def execute()

def execute(jobName: String)

def executeAsync()

def executeAsync(jobName: String)

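They fall into two groups, synchronous and asynchronous. The official documentation describes the difference, which mainly concerns when each job runs if one program launches several jobs.

The synchronous execute delegates to javaEnv.execute: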
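To make the ordering difference concrete, here is a minimal sketch in plain Java. It is a toy model and not Flink code: `ExecuteOrderDemo`, its `execute`/`executeAsync` stand-ins and the latch are all assumptions made for illustration. A "job" only logs when it starts and when it finishes.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;

// Toy model, not Flink code: a "job" logs when it starts and when it finishes.
class ExecuteOrderDemo {

    // Stand-in for executeAsync(): starts the job and returns a handle immediately.
    // The job finishes only once the mayFinish latch has been opened.
    static CompletableFuture<Void> executeAsync(String name, List<String> log, CountDownLatch mayFinish) {
        log.add(name + " started");
        return CompletableFuture.runAsync(() -> {
            try {
                mayFinish.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            log.add(name + " finished");
        });
    }

    // Stand-in for execute(): same launch, but the caller blocks until the job is done.
    static void execute(String name, List<String> log) {
        executeAsync(name, log, new CountDownLatch(0)).join();
    }

    // Two blocking calls: B cannot start before A has finished.
    static List<String> sequential() {
        List<String> log = new CopyOnWriteArrayList<>();
        execute("A", log);
        execute("B", log);
        return log;
    }

    // Two non-blocking calls: B starts while A is still running.
    static List<String> overlapping() {
        List<String> log = new CopyOnWriteArrayList<>();
        CountDownLatch mayFinish = new CountDownLatch(1);
        CompletableFuture<Void> a = executeAsync("A", log, mayFinish);
        CompletableFuture<Void> b = executeAsync("B", log, mayFinish);
        mayFinish.countDown();
        CompletableFuture.allOf(a, b).join();
        return log;
    }

    public static void main(String[] args) {
        System.out.println(sequential());
        System.out.println(overlapping());
    }
}
```

In the sequential case the log reads "A started, A finished, B started, B finished". In the overlapping case both "started" entries come first, and the order of the two "finished" entries is not fixed.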

def execute(jobName: String) = javaEnv.execute(jobName)

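2. javaEnv.execute generates the StreamGraph

This step builds the job's StreamGraph. Flink has three layers of graph abstraction (the physical execution graph is the structure that actually runs):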

StreamGraph ---> JobGraph ---> ExecutionGraph ---> 物理执行图

Further reading on the three-layer graph structure: https://www.cnblogs.com/bethunebtj/p/9168274.html#21-flink%E7%9A%84%E4%B8%89%E5%B1%82%E5%9B%BE%E7%BB%93%E6%9E%84

Source of javaEnv.execute:
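As a rough intuition for what each layer adds, the sketch below uses a deliberately simplified toy model. None of the types are Flink classes. It assumes that neighbouring operators with equal parallelism are chained into one job vertex, although real chaining checks more conditions than that. It also assumes that each job vertex is expanded into one subtask per unit of parallelism.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy model of the graph layers; none of these types are Flink classes.
class GraphLayersSketch {

    // A node of the toy "StreamGraph": one operator and its parallelism.
    static final class Op {
        final String name;
        final int parallelism;

        Op(String name, int parallelism) {
            this.name = name;
            this.parallelism = parallelism;
        }
    }

    // "JobGraph" step: merge neighbouring operators of equal parallelism into one vertex.
    // Simplification: real chaining depends on more than parallelism.
    static List<List<Op>> toJobGraph(List<Op> streamGraph) {
        List<List<Op>> vertices = new ArrayList<>();
        for (Op op : streamGraph) {
            List<Op> last = vertices.isEmpty() ? null : vertices.get(vertices.size() - 1);
            if (last != null && last.get(0).parallelism == op.parallelism) {
                last.add(op);
            } else {
                List<Op> vertex = new ArrayList<>();
                vertex.add(op);
                vertices.add(vertex);
            }
        }
        return vertices;
    }

    // "ExecutionGraph" step: one subtask per unit of parallelism of each vertex.
    static List<String> toExecutionGraph(List<List<Op>> jobGraph) {
        List<String> subtasks = new ArrayList<>();
        for (List<Op> vertex : jobGraph) {
            List<String> names = new ArrayList<>();
            for (Op op : vertex) {
                names.add(op.name);
            }
            String chained = String.join(" -> ", names);
            int p = vertex.get(0).parallelism;
            for (int i = 1; i <= p; i++) {
                subtasks.add(chained + " (" + i + "/" + p + ")");
            }
        }
        return subtasks;
    }

    static List<Op> examplePipeline() {
        return Arrays.asList(
                new Op("source", 1), new Op("map", 2), new Op("filter", 2), new Op("sink", 2));
    }

    public static void main(String[] args) {
        List<List<Op>> jobGraph = toJobGraph(examplePipeline());
        System.out.println(jobGraph.size() + " job vertices");
        toExecutionGraph(jobGraph).forEach(System.out::println);
    }
}
```

For the example pipeline, four operators collapse into two job vertices, which expand into three subtasks.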

    /**
     * Triggers the program execution. The environment will execute all parts of
     * the program that have resulted in a "sink" operation. Sink operations are
     * for example printing results or forwarding them to a message queue.
     *
     * <p>The program execution will be logged and displayed with the provided name
     *
     * @param jobName
     *        Desired name of the job
     * @return The result of the job execution, containing elapsed time and accumulators.
     * @throws Exception which occurs during job execution.
     */
    public JobExecutionResult execute(String jobName) throws Exception {
        Preconditions.checkNotNull(jobName, "Streaming Job name should not be null.");
        // Build the StreamGraph, then pass it on to execute(StreamGraph)
        return execute(getStreamGraph(jobName));
    }

The related code:

    public StreamGraph getStreamGraph(String jobName) {
        // clearTransformations = true: once the graph is built, clear the operators held by the env
        return getStreamGraph(jobName, true);
    }

    public StreamGraph getStreamGraph(String jobName, boolean clearTransformations) {
        // generate() is where the StreamGraph is actually built
        StreamGraph streamGraph = getStreamGraphGenerator().setJobName(jobName).generate();
        if (clearTransformations) {
            this.transformations.clear();
        }
        return streamGraph;
    }

    private StreamGraphGenerator getStreamGraphGenerator() {
        if (transformations.size() <= 0) {
            throw new IllegalStateException("No operators defined in streaming topology. Cannot execute.");
        }
        // Create the StreamGraphGenerator
        return new StreamGraphGenerator(transformations, config, checkpointCfg)
            .setStateBackend(defaultStateBackend)
            .setChaining(isChainingEnabled)
            .setUserArtifacts(cacheFile)
            .setTimeCharacteristic(timeCharacteristic)
            .setDefaultBufferTimeout(bufferTimeout);
    }

The generated StreamGraph is then passed to the execute method of LocalStreamEnvironment:

    public JobExecutionResult execute(StreamGraph streamGraph) throws Exception {
        return super.execute(streamGraph);
    }

Note: operator transformations are registered in the addOperator method; sources are registered separately in the addSource method.

Control then returns to StreamExecutionEnvironment.execute:
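One consequence of the clear-after-generate behaviour shown in getStreamGraph is that a second call with no newly added operators fails. The sketch below reproduces that with a toy stand-in. `ToyEnvironment` is hypothetical and represents the graph as a plain string; only the method names and the exception message are borrowed from the snippet above.

```java
import java.util.ArrayList;
import java.util.List;

// Toy stand-in for the environment; this is not Flink code.
class ToyEnvironment {
    private final List<String> transformations = new ArrayList<>();

    // Registers an operator, as addOperator does for real transformations.
    void addOperator(String name) {
        transformations.add(name);
    }

    // Builds a string "graph" and optionally clears the registered operators.
    String getStreamGraph(String jobName, boolean clearTransformations) {
        if (transformations.isEmpty()) {
            throw new IllegalStateException("No operators defined in streaming topology. Cannot execute.");
        }
        String graph = jobName + ": " + String.join(" -> ", transformations);
        if (clearTransformations) {
            transformations.clear();
        }
        return graph;
    }

    public static void main(String[] args) {
        ToyEnvironment env = new ToyEnvironment();
        env.addOperator("source");
        env.addOperator("map");
        System.out.println(env.getStreamGraph("demo", true));
        try {
            // Nothing was added since the last call, so this throws.
            env.getStreamGraph("demo", true);
        } catch (IllegalStateException e) {
            System.out.println("second call failed: " + e.getMessage());
        }
    }
}
```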

    @Internal
    public JobExecutionResult execute(StreamGraph streamGraph) throws Exception {
        // Delegate to the asynchronous variant
        final JobClient jobClient = executeAsync(streamGraph);

        try {
            final JobExecutionResult jobExecutionResult;

            if (configuration.getBoolean(DeploymentOptions.ATTACHED)) {
                jobExecutionResult = jobClient.getJobExecutionResult(userClassloader).get();
            } else {
                jobExecutionResult = new DetachedJobExecutionResult(jobClient.getJobID());
            }

            jobListeners.forEach(jobListener -> jobListener.onJobExecuted(jobExecutionResult, null));

            return jobExecutionResult;
        } catch (Throwable t) {
            jobListeners.forEach(jobListener -> {
                jobListener.onJobExecuted(null, ExceptionUtils.stripExecutionException(t));
            });
            ExceptionUtils.rethrowException(t);

            // never reached, only make javac happy
            return null;
        }
    }

executeAsync

    @Internal
    public JobClient executeAsync(StreamGraph streamGraph) throws Exception {
        checkNotNull(streamGraph, "StreamGraph cannot be null.");
        checkNotNull(configuration.get(DeploymentOptions.TARGET), "No execution.target specified in your configuration file.");

        final PipelineExecutorFactory executorFactory =
            executorServiceLoader.getExecutorFactory(configuration);

        checkNotNull(
            executorFactory,
            "Cannot find compatible factory for specified execution.target (=%s)",
            configuration.get(DeploymentOptions.TARGET));

        CompletableFuture<JobClient> jobClientFuture = executorFactory
            .getExecutor(configuration)
            // In local mode this calls LocalExecutor.execute
            .execute(streamGraph, configuration);

        try {
            JobClient jobClient = jobClientFuture.get();
            jobListeners.forEach(jobListener -> jobListener.onJobSubmitted(jobClient, null));
            return jobClient;
        } catch (ExecutionException executionException) {
            final Throwable strippedException = ExceptionUtils.stripExecutionException(executionException);
            jobListeners.forEach(jobListener -> jobListener.onJobSubmitted(null, strippedException));

            throw new FlinkException(
                String.format("Failed to execute job '%s'.", streamGraph.getJobName()),
                strippedException);
        }
    }

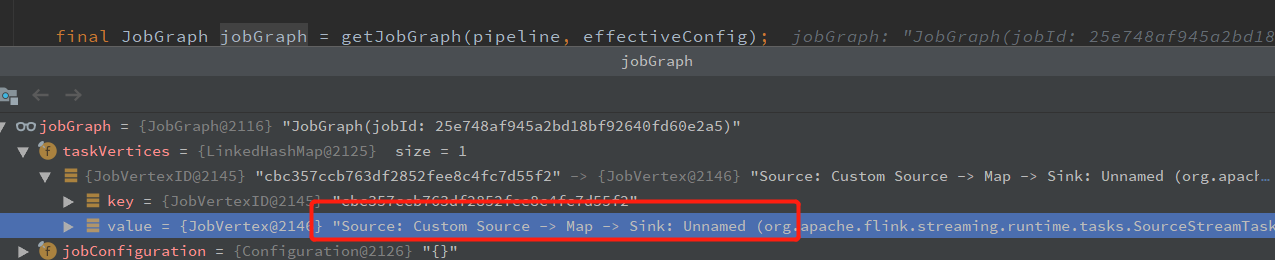
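The lookup in executeAsync can be pictured as "ask each known factory whether it accepts this configuration". The sketch below is a toy model of that idea only. `ToyExecutorFactory`, the hard-coded factory list and the string-map config are assumptions for illustration, and the real loader may discover factories differently. The key `execution.target` is the one named in the error message above.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of picking an executor factory by execution.target; not the Flink classes.
class ExecutorLookupSketch {

    // Hypothetical factory: knows its own target name and whether a config selects it.
    static final class ToyExecutorFactory {
        final String target;

        ToyExecutorFactory(String target) {
            this.target = target;
        }

        boolean isCompatibleWith(Map<String, String> config) {
            return target.equals(config.get("execution.target"));
        }
    }

    // Illustrative, hard-coded list of known factories.
    static final List<ToyExecutorFactory> FACTORIES = Arrays.asList(
            new ToyExecutorFactory("local"),
            new ToyExecutorFactory("remote"));

    // Returns the first factory that accepts the config, or null if none does.
    static ToyExecutorFactory getExecutorFactory(Map<String, String> config) {
        for (ToyExecutorFactory factory : FACTORIES) {
            if (factory.isCompatibleWith(config)) {
                return factory;
            }
        }
        return null;
    }

    static Map<String, String> configFor(String target) {
        Map<String, String> config = new HashMap<>();
        config.put("execution.target", target);
        return config;
    }

    public static void main(String[] args) {
        System.out.println(getExecutorFactory(configFor("local")).target);
        System.out.println(getExecutorFactory(configFor("unknown")));
    }
}
```

Returning null for an unknown target mirrors the checkNotNull on executorFactory in the snippet above, which turns that case into an error.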

3. Generate the JobGraph

    public CompletableFuture<JobClient> execute(Pipeline pipeline, Configuration configuration) throws Exception {
        checkNotNull(pipeline);
        checkNotNull(configuration);

        Configuration effectiveConfig = new Configuration();
        effectiveConfig.addAll(this.configuration);
        effectiveConfig.addAll(configuration);

        // we only support attached execution with the local executor.
        checkState(configuration.getBoolean(DeploymentOptions.ATTACHED));

        // Generate the JobGraph
        final JobGraph jobGraph = getJobGraph(pipeline, effectiveConfig);

        // Submit the job
        return PerJobMiniClusterFactory.createWithFactory(effectiveConfig, miniClusterFactory).submitJob(jobGraph);
    }

    // Generate the JobGraph
    private JobGraph getJobGraph(Pipeline pipeline, Configuration configuration) throws MalformedURLException {
        // This is a quirk in how LocalEnvironment used to work. It sets the default parallelism
        // to <num taskmanagers> * <num task slots>. Might be questionable but we keep the behaviour
        // for now.
        if (pipeline instanceof Plan) {
            Plan plan = (Plan) pipeline;
            final int slotsPerTaskManager = configuration.getInteger(
                TaskManagerOptions.NUM_TASK_SLOTS, plan.getMaximumParallelism());
            final int numTaskManagers = configuration.getInteger(
                ConfigConstants.LOCAL_NUMBER_TASK_MANAGER, 1);

            plan.setDefaultParallelism(slotsPerTaskManager * numTaskManagers);
        }

        return PipelineExecutorUtils.getJobGraph(pipeline, configuration);
    }
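The default-parallelism quirk in getJobGraph is simple arithmetic, worked through below. Note that in the snippet it only applies when the pipeline is a Plan. The sketch uses a plain map in place of Flink's Configuration, and the two key strings are assumptions chosen for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Toy calculation of the local default parallelism; the config is a plain map, not Flink's Configuration.
class LocalParallelismSketch {

    // Key names are assumptions for this sketch.
    static final String NUM_TASK_SLOTS = "taskmanager.numberOfTaskSlots";
    static final String NUM_TASK_MANAGERS = "local.number-taskmanager";

    // slots per TaskManager (falls back to the plan's maximum parallelism)
    // times the number of TaskManagers (falls back to 1).
    static int defaultParallelism(Map<String, Integer> config, int planMaximumParallelism) {
        int slotsPerTaskManager = config.getOrDefault(NUM_TASK_SLOTS, planMaximumParallelism);
        int numTaskManagers = config.getOrDefault(NUM_TASK_MANAGERS, 1);
        return slotsPerTaskManager * numTaskManagers;
    }

    public static void main(String[] args) {
        // Nothing configured: 4 slots (from the plan) * 1 TaskManager
        System.out.println(defaultParallelism(new HashMap<>(), 4));

        // Explicit settings: 2 slots * 3 TaskManagers
        Map<String, Integer> config = new HashMap<>();
        config.put(NUM_TASK_SLOTS, 2);
        config.put(NUM_TASK_MANAGERS, 3);
        System.out.println(defaultParallelism(config, 4));
    }
}
```

With nothing configured and a plan whose maximum parallelism is 4, the result is 4 * 1 = 4. With 2 slots and 3 TaskManagers it is 2 * 3 = 6.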

4. Start the MiniCluster

    public CompletableFuture<JobClient> submitJob(JobGraph jobGraph) throws Exception {
        MiniClusterConfiguration miniClusterConfig = getMiniClusterConfig(jobGraph.getMaximumParallelism());
        MiniCluster miniCluster = miniClusterFactory.apply(miniClusterConfig);
        // Start the MiniCluster, which brings up a whole set of services: RPC, metrics, the TaskManagers, etc.
        miniCluster.start();

        return miniCluster
            // Submit the job
            .submitJob(jobGraph)
            .thenApply(result -> new PerJobMiniClusterJobClient(result.getJobID(), miniCluster))
            .whenComplete((ignored, throwable) -> {
                if (throwable != null) {
                    // We failed to create the JobClient and must shutdown to ensure cleanup.
                    shutDownCluster(miniCluster);
                }
            })
            .thenApply(Function.identity());
    }

At this point the local MiniCluster is up and the job has been submitted for execution.

The call chain:
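The start, submit, clean-up-on-failure pattern in submitJob can be tried in isolation. The sketch below is a toy model. `ToyCluster` and the string job client are assumptions for illustration; only the CompletableFuture chaining mirrors the snippet above.

```java
import java.util.concurrent.CompletableFuture;

// Toy model of "start, submit, shut down if submission fails"; not Flink code.
class SubmitWithCleanupSketch {

    // Hypothetical cluster that only tracks whether it is running.
    static final class ToyCluster {
        volatile boolean running;

        void start() {
            running = true;
        }

        void shutDown() {
            running = false;
        }

        // Completes with the job id, or exceptionally when told to fail.
        CompletableFuture<String> submitJob(String jobId, boolean fail) {
            CompletableFuture<String> result = new CompletableFuture<>();
            if (fail) {
                result.completeExceptionally(new IllegalStateException("submission failed"));
            } else {
                result.complete(jobId);
            }
            return result;
        }
    }

    static CompletableFuture<String> submit(ToyCluster cluster, String jobId, boolean fail) {
        cluster.start();
        return cluster
            .submitJob(jobId, fail)
            .thenApply(id -> "client-for-" + id)
            .whenComplete((ignored, throwable) -> {
                if (throwable != null) {
                    // No client was created, so release the cluster.
                    cluster.shutDown();
                }
            });
    }

    public static void main(String[] args) {
        ToyCluster ok = new ToyCluster();
        System.out.println(submit(ok, "job-1", false).join() + ", running=" + ok.running);

        ToyCluster broken = new ToyCluster();
        CompletableFuture<String> failed = submit(broken, "job-2", true);
        System.out.println("failed=" + failed.isCompletedExceptionally() + ", running=" + broken.running);
    }
}
```

On success the cluster stays up because the returned client still needs it. On failure `whenComplete` sees the exception and shuts the cluster down.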

```java

KafkaToKafka.execute
StreamExecutionEnvironment.execute              (Scala API)
StreamExecutionEnvironment.execute(jobName)     (Java API, builds the StreamGraph)
LocalStreamEnvironment.execute(streamGraph)
StreamExecutionEnvironment.execute(streamGraph)
StreamExecutionEnvironment.executeAsync
LocalExecutor.execute
the Pipeline is turned into a JobGraph
PerJobMiniClusterFactory.submitJob
miniCluster.start()                             (includes startTaskManagers)
MiniCluster.submitJob
at this point the MiniCluster for local execution is up

```

You are welcome to follow the Flink菜鸟 WeChat official account, which posts about Flink development from time to time.
