Kubernetes Cluster Reset and Redeployment (Part 4)


1 Cleaning Up the Cluster Environment

1.1 Cleanup Steps

1) rm -rf $HOME/.kube

2) rm -rf /var/lib/etcd

3) rm -rf /etc/kubernetes/manifests/

4) pkill 'kube*'

5) pkill kube-apiserver

6) rm -rf /etc/cni/net.d

7) mv /etc/kubernetes/kubelet.conf /etc/kubernetes/kubelet.confbak

8) rm -rf /etc/kubernetes/pki/ca.crt

9) rm -rf /var/lib/kubelet

1.2 Cleanup Procedure

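On all master nodes, run:

pkill kube-apiserver; pkill 'kube*'; rm -rf /var/lib/etcd && rm -rf /etc/kubernetes/manifests/ && rm -rf $HOME/.kube && rm -rf /etc/cni/net.d && rm -rf /var/lib/kubelet

The pkill commands are separated with ";" because pkill exits with status 1 when no process matches, and "&&" would then skip the remaining rm commands. Quoting 'kube*' stops the shell from expanding it against file names in the current directory.

On all nodes:

mv /etc/kubernetes/kubelet.conf /etc/kubernetes/kubelet.confbak

On all worker nodes:

rm -rf /etc/kubernetes/pki/ca.crt

Once the environment has been cleaned up as described above, you can proceed with the reset.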
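The cleanup above can be wrapped in a small function so that each node runs only the steps for its role. This is a minimal sketch, not the procedure the post was tested with: the master/worker role argument and the DRY_RUN switch are hypothetical conveniences. By default it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: run the section 1 cleanup for one node role.
# DRY_RUN=1 (default) only prints each command; DRY_RUN=0 executes it.
# The "master"/"worker" role argument is a hypothetical convenience.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

cleanup_node() {
  local role="${1:-}"
  case "$role" in
    master)
      # pkill exits 1 when nothing matches, so do not let that abort the cleanup
      run pkill kube-apiserver || true
      run pkill 'kube*' || true
      run rm -rf /var/lib/etcd /etc/kubernetes/manifests/ "$HOME/.kube" \
        /etc/cni/net.d /var/lib/kubelet
      ;;
    worker)
      run rm -rf /etc/kubernetes/pki/ca.crt
      ;;
    *)
      echo "usage: cleanup_node master|worker" >&2
      return 1
      ;;
  esac
  # Step for every node: keep a backup of the old kubelet.conf
  run mv /etc/kubernetes/kubelet.conf /etc/kubernetes/kubelet.confbak
}
```

Run `cleanup_node master` or `cleanup_node worker` first to review the printed commands, then `DRY_RUN=0 cleanup_node worker` (for example) to actually execute them.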

2 Resetting the K8s Cluster

Run on all nodes:

First stop kubelet on every node, then run the reset.

systemctl stop kubelet

# With kubelet stopped, run the kubeadm reset command:

kubeadm reset

[root@k8s-master01 ~]# kubeadm reset

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0908 22:22:06.321324 14127 reset.go:120] [reset] Unable to fetch the kubeadm-config ConfigMap from cluster: failed to get config map: Get "https://192.168.21.6:6443/api/v1/namespaces/kube-system/configmaps/kubeadm-config?timeout=10s": dial tcp 192.168.21.6:6443: connect: connection refused

W0908 22:22:06.321871 14127 preflight.go:56] [reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0908 22:22:09.907698 14127 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] Deleted contents of the etcd data directory: /var/lib/etcd

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

W0908 22:23:11.600939 14127 cleanupnode.go:99] [reset] Failed to remove containers: [failed to stop running pod 1bc5470e95147ccc07db3d11ac4838c708562e80c6f2f55ecf5fbecf55d2aeb9: output: E0908 22:22:20.286794 14204 remote_runtime.go:205] "StopPodSandbox from runtime service failed" err="rpc error: code = DeadlineExceeded desc = context deadline exceeded" podSandboxID="1bc5470e95147ccc07db3d11ac4838c708562e80c6f2f55ecf5fbecf55d2aeb9"

time="2025-09-08T22:22:20+08:00" level=fatal msg="stopping the pod sandbox \"1bc5470e95147ccc07db3d11ac4838c708562e80c6f2f55ecf5fbecf55d2aeb9\": rpc error: code = DeadlineExceeded desc = context deadline exceeded"

: exit status 1, failed to stop running pod ab2a5d2f0f4154fd5e1943647ea7195ce945ff32d52d99924fda3fe9d18c5c97: output: E0908 22:22:30.446271 14268 remote_runtime.go:205] "StopPodSandbox from runtime service failed" err="rpc error: code = DeadlineExceeded desc = context deadline exceeded" podSandboxID="ab2a5d2f0f4154fd5e1943647ea7195ce945ff32d52d99924fda3fe9d18c5c97"

time="2025-09-08T22:22:30+08:00" level=fatal msg="stopping the pod sandbox \"ab2a5d2f0f4154fd5e1943647ea7195ce945ff32d52d99924fda3fe9d18c5c97\": rpc error: code = DeadlineExceeded desc = context deadline exceeded"

: exit status 1, failed to stop running pod 009b91e1f63268bbaee4c884d5332a769f9c7095e07e33dde2a90e6b636b79ad: output: E0908 22:22:40.576116 14351 remote_runtime.go:205] "StopPodSandbox from runtime service failed" err="rpc error: code = DeadlineExceeded desc = context deadline exceeded" podSandboxID="009b91e1f63268bbaee4c884d5332a769f9c7095e07e33dde2a90e6b636b79ad"

time="2025-09-08T22:22:40+08:00" level=fatal msg="stopping the pod sandbox \"009b91e1f63268bbaee4c884d5332a769f9c7095e07e33dde2a90e6b636b79ad\": rpc error: code = DeadlineExceeded desc = context deadline exceeded"

: exit status 1, failed to stop running pod 6486aa6b13bf826ba7ec43d7b9408fdcb254b4b45b2be23a49307ac18469b8eb: output: E0908 22:22:51.058361 14474 remote_runtime.go:205] "StopPodSandbox from runtime service failed" err="rpc error: code = DeadlineExceeded desc = context deadline exceeded" podSandboxID="6486aa6b13bf826ba7ec43d7b9408fdcb254b4b45b2be23a49307ac18469b8eb"

time="2025-09-08T22:22:51+08:00" level=fatal msg="stopping the pod sandbox \"6486aa6b13bf826ba7ec43d7b9408fdcb254b4b45b2be23a49307ac18469b8eb\": rpc error: code = DeadlineExceeded desc = context deadline exceeded"

: exit status 1, failed to stop running pod 0eb3fa777585704f2e7341894b3cdea10f97ec99635e954377b3e42e9a7b216f: output: E0908 22:23:01.200915 14557 remote_runtime.go:205] "StopPodSandbox from runtime service failed" err="rpc error: code = DeadlineExceeded desc = context deadline exceeded" podSandboxID="0eb3fa777585704f2e7341894b3cdea10f97ec99635e954377b3e42e9a7b216f"

time="2025-09-08T22:23:01+08:00" level=fatal msg="stopping the pod sandbox \"0eb3fa777585704f2e7341894b3cdea10f97ec99635e954377b3e42e9a7b216f\": rpc error: code = DeadlineExceeded desc = context deadline exceeded"

: exit status 1, failed to stop running pod 5963858c46864825ccc06d3de4cae7ab4478ba98a8593c49334596fe676957a6: output: E0908 22:23:11.393534 14621 remote_runtime.go:205] "StopPodSandbox from runtime service failed" err="rpc error: code = DeadlineExceeded desc = context deadline exceeded" podSandboxID="5963858c46864825ccc06d3de4cae7ab4478ba98a8593c49334596fe676957a6"

time="2025-09-08T22:23:11+08:00" level=fatal msg="stopping the pod sandbox \"5963858c46864825ccc06d3de4cae7ab4478ba98a8593c49334596fe676957a6\": rpc error: code = DeadlineExceeded desc = context deadline exceeded"

: exit status 1]

[reset] Deleting contents of directories: [/etc/kubernetes/manifests /var/lib/kubelet /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

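Please, check the contents of the $HOME/.kube/config file.

Note: output like the above indicates that the cluster reset has completed. The "Failed to remove containers" warning means kubeadm timed out stopping some pod sandboxes; it still went on to delete the manifests, PKI and kubeconfig files listed afterwards.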
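The reset output lists what kubeadm reset leaves behind: CNI configuration, iptables/IPVS state and kubeconfig files. The sketch below collects those manual steps, together with a non-interactive reset (`-f` skips the [y/N] prompt), into one function. It assumes the cri-dockerd socket used elsewhere in this post. Flushing iptables removes every rule on the host, including firewall rules unrelated to Kubernetes, so review the printed commands before setting DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: a non-interactive reset plus the manual cleanup that the kubeadm reset
# output says it does not perform. DRY_RUN=1 (default) only prints commands.
# Assumes the cri-dockerd socket used elsewhere in this post.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

reset_node() {
  run systemctl stop kubelet
  # -f skips the [y/N] prompt; --cri-socket selects cri-dockerd explicitly
  run kubeadm reset -f --cri-socket=unix:///var/run/cri-dockerd.sock
  # kubeadm reset leaves the CNI configuration in place
  run rm -rf /etc/cni/net.d
  # Flush the filter, nat and mangle tables, then delete user-defined filter chains.
  # WARNING: this also removes any non-Kubernetes firewall rules on the host.
  run iptables -F
  run iptables -t nat -F
  run iptables -t mangle -F
  run iptables -X
  # Clear IPVS tables only when ipvsadm is installed (kube-proxy in IPVS mode)
  if command -v ipvsadm >/dev/null 2>&1; then
    run ipvsadm --clear
  fi
  # kubeadm reset does not remove kubeconfig files either
  run rm -rf "$HOME/.kube"
}
```

Calling `reset_node` prints the plan; `DRY_RUN=0 reset_node` executes it as root on one node.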

3 Re-initializing the Cluster

3.1 Run kubeadm init

# Before re-initializing, restart Docker, cri-dockerd and kubelet. Run this on all nodes.

systemctl restart docker

systemctl restart cri-dockerd

systemctl restart kubelet
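Before kubeadm init, kubelet is expected to restart in a loop because it has no configuration yet, so checking it with `systemctl is-active` would raise a false alarm. The sketch below restarts the three units in the order above and verifies only docker and cri-dockerd. The SYSTEMCTL variable is a hypothetical hook so the function can be exercised without systemd.

```shell
#!/usr/bin/env bash
# Sketch: restart the runtime stack in the order above and verify that the
# container runtime units came back. kubelet is restarted but not checked,
# because it crash-loops until kubeadm init/join gives it a configuration.
# SYSTEMCTL is a hypothetical override (default: systemctl) for testing.

restart_stack() {
  local sc="${SYSTEMCTL:-systemctl}" unit failed=0
  for unit in docker cri-dockerd kubelet; do
    "$sc" restart "$unit" || failed=1
  done
  for unit in docker cri-dockerd; do
    if ! "$sc" is-active --quiet "$unit"; then
      echo "not active: $unit" >&2
      failed=1
    fi
  done
  return "$failed"
}
```

On a real node, `restart_stack && echo "runtime ready"` is enough; a non-zero return means one of the restarts or checks failed.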

# Then run the initialization command on the master node (k8s-master01) only:

kubeadm init --kubernetes-version=1.28.2 \
  --node-name=k8s-master01 \
  --apiserver-advertise-address=192.168.21.6 \
  --image-repository registry.k8s.io \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16 \
  --cri-socket=unix:///var/run/cri-dockerd.sock --v=5
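The same settings can also be kept in a kubeadm configuration file, which makes repeating the initialization after a future reset less error-prone. This sketch writes such a file with values copied from the flags above; the default file name is an assumption, and the kubeadm.k8s.io/v1beta3 API is the config version that kubeadm 1.28 uses. bindPort 6443 is the kubeadm default and matches the port in the join command.

```shell
#!/usr/bin/env bash
# Sketch: write a kubeadm config file equivalent to the kubeadm init flags above.
# The default output path "kubeadm-config.yaml" is an assumption.

write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  # Quoted 'EOF' keeps the YAML literal (no variable expansion)
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.21.6
  bindPort: 6443
nodeRegistration:
  name: k8s-master01
  criSocket: unix:///var/run/cri-dockerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.28.2
imageRepository: registry.k8s.io
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}
```

After `write_kubeadm_config kubeadm-config.yaml`, `kubeadm init --config kubeadm-config.yaml --dry-run` previews the result, and dropping `--dry-run` performs the init. kubeadm refuses to mix `--config` with most of the individual flags used above, so pass the settings one way or the other.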

# The end of the output looks like this:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.21.6:6443 --token b1dfcg.7rp8gdvzrreyv0pd \
        --discovery-token-ca-cert-hash sha256:d15cdbfbbae4211c49cc0f0157abd7414da256601c28eb0901ca05866d8b27b4

[root@k8s-master01 ~]#

3.2 Configure kubeconfig on the Master Node

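Copy the admin kubeconfig into the home directory on the master node so that kubectl can authenticate against the re-initialized cluster (the old $HOME/.kube directory was deleted during cleanup).

Run on the master node: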

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

3.3 Run kubeadm join

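Add the nodes back into the cluster. The test environment in this chapter consists of one master node and two worker nodes, so only the two worker nodes need to be joined. Run the following on each worker node: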

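kubeadm join 192.168.21.6:6443 --token b1dfcg.7rp8gdvzrreyv0pd \
  --discovery-token-ca-cert-hash sha256:d15cdbfbbae4211c49cc0f0157abd7414da256601c28eb0901ca05866d8b27b4 \
  --cri-socket=unix:///var/run/cri-dockerd.sock --v=5

Compared with the join command printed by kubeadm init, this one adds --cri-socket so that kubeadm uses cri-dockerd on the worker. Once the join succeeds, the next step is to check from the master node that the worker nodes have joined.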
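The bootstrap token in the join command is valid for 24 hours by default. If it has expired before a worker is re-joined, `kubeadm token create --print-join-command` on the master prints a fresh join command which, like the one printed by kubeadm init, does not include --cri-socket. The helper below is a hypothetical convenience that appends it; its default socket is the one used in this post.

```shell
#!/usr/bin/env bash
# Sketch: append the CRI socket to a join command printed by kubeadm.
# CRI_SOCKET is a hypothetical override; the default is the cri-dockerd
# socket used throughout this post.

with_cri_socket() {
  local join_cmd="${1:-}"
  local socket="${CRI_SOCKET:-unix:///var/run/cri-dockerd.sock}"
  printf '%s --cri-socket=%s\n' "$join_cmd" "$socket"
}
```

On the master, `with_cri_socket "$(kubeadm token create --print-join-command)"` prints the complete command to paste on each worker; `kubeadm token list` shows the existing tokens and their remaining TTL.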

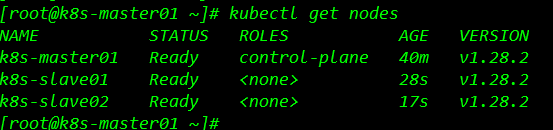

4 Checking the Cluster Status
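To check node status from the command line, the helper below reads `kubectl get nodes --no-headers` output and prints every node whose STATUS column is not exactly Ready. It is a sketch, not a complete health check. Nodes stay NotReady until a pod network add-on is deployed again, as the kubeadm init output says, because /etc/cni/net.d was removed during cleanup.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get nodes --no-headers` output on stdin and print the
# name of each node whose STATUS (2nd column) is not exactly "Ready".
# Returns 1 if any such node is found, 0 otherwise (including empty input).

not_ready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad ? 1 : 0 }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes && echo "all nodes Ready"`. `kubectl get pods -n kube-system` is a useful follow-up to confirm the control-plane and CNI pods are running.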

At this point, the cluster reset is complete.

5 Post-Reset Tuning

1) Adjust the service port range.

2) Extend the certificate validity period.

3) Optimize the Metrics-Server service, and so on.

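This post is intended only as a reference for test environments. Be careful when changing or resetting nodes in production, and adapt the process to the needs of your own environment. If you spot shortcomings in these tests, your feedback is very welcome; thank you for your guidance and support.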

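Is it not a pleasure to learn and to practice what one has learned? Is it not a joy to have friends come from afar? Is it not the mark of a gentleman to feel no resentment when others do not know him?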

Zhejiang Public Security Network Filing No. 33010602011771
