U-Net分割目标骨骼

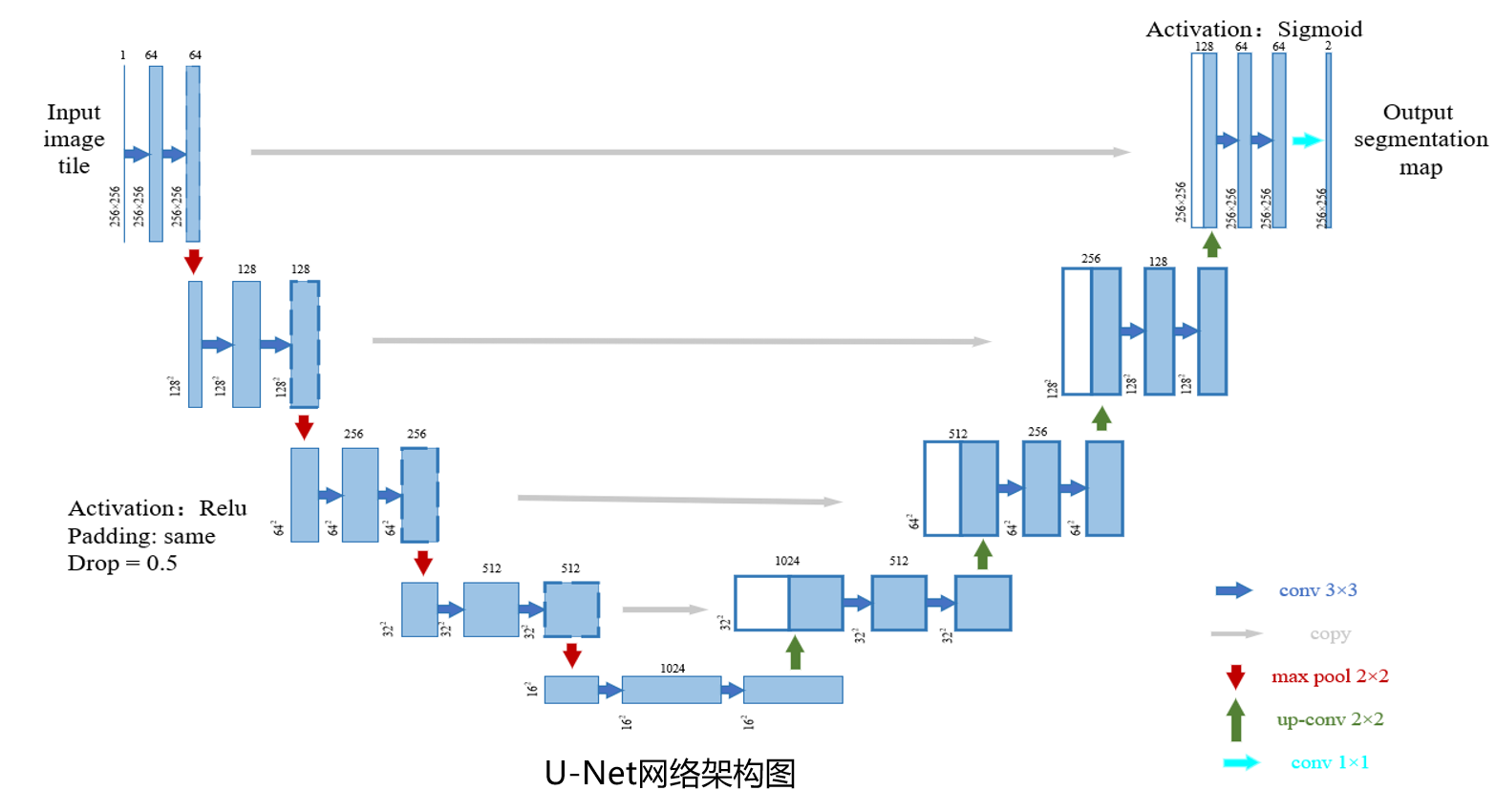

上采样中,上采样部分先上采样放大,再进行卷积操作,相当于转置卷积,上采样的卷积核大小为22,每次上采样完成后将前面下采样中拼接操作。在最后一步中采用1一个11的卷积核。

Accuracy 采用的是dice系数 X Y 分别表示预测的结果和真实的结果,{%asset_img 1.png This%}损失函数则就就采用1-dice 系数 dice系数越高 loss 就降得越低 {%asset_img 2.png This%},网络架构图图下图所示:

数据集

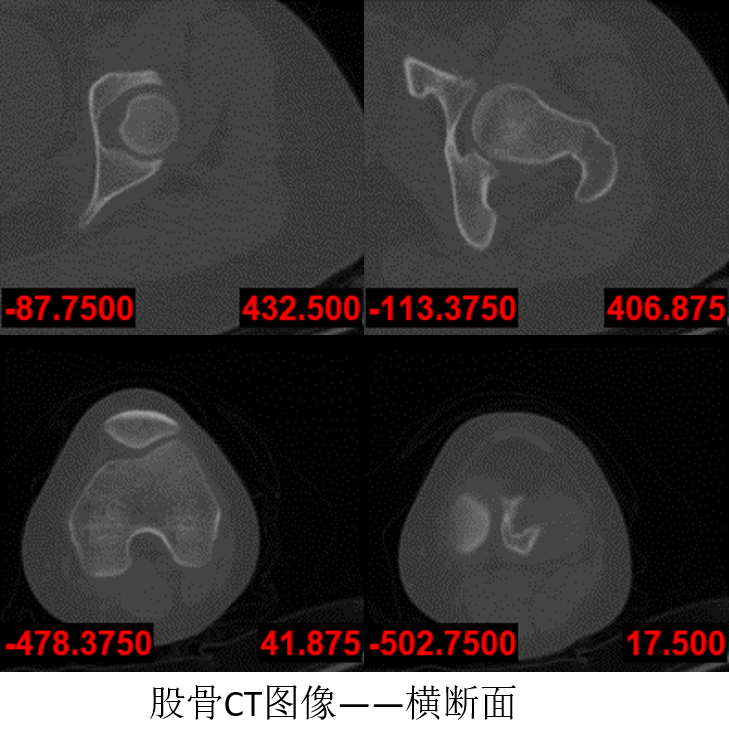

本实验是对髋骨近端的股骨进行分割,下图是股骨CT图像的部分图像,目前股骨分割所面临的问题主要有以下几点:

-

人工分割股骨耗时耗力,且需要一定的专业知识;

-

部分股骨CT图像对比度不高,骨与骨之间连接紧密,分割难度大;

-

分割算法复杂,分割结果精确度不高

数据集

本实验是对髋骨近端的股骨进行分割,下图是股骨CT图像的部分图像,目前股骨分割所面临的问题主要有以下几点:

-

人工分割股骨耗时耗力,且需要一定的专业知识;

-

部分股骨CT图像对比度不高,骨与骨之间连接紧密,分割难度大;

-

分割算法复杂,分割结果精确度不高

-

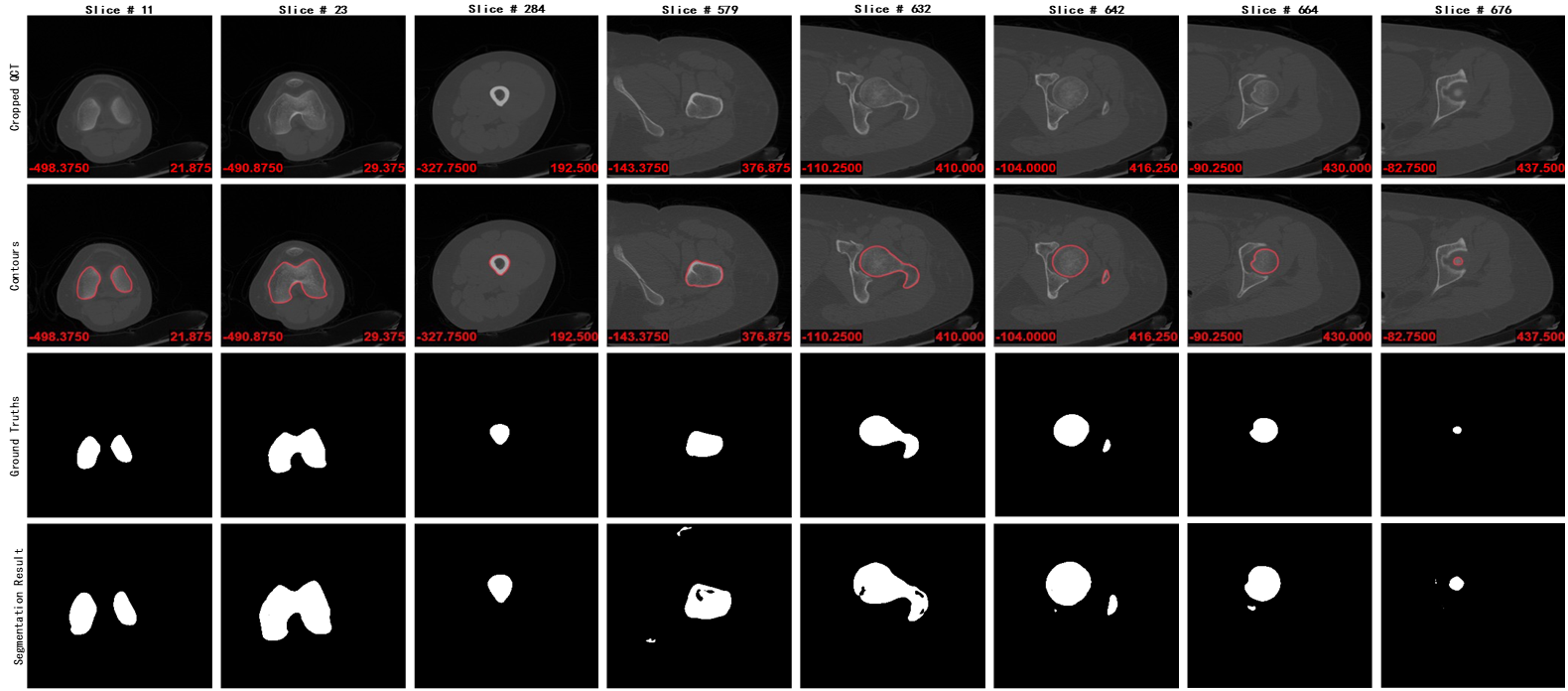

我们标注了来自20名不同患者的左股骨的作为数据集进行训练,并用一组左股骨序列图片作为测试集,得出最终结果如下图所示:

从上至下每一行分别为股骨CT图片、股骨真实轮廓线、人工标注股骨区域、模型预测结果,最终模型预测的精度能够达到98.56±0.02,为了提高模型精度,后期会股骨远端和近端分别训练,以达到更好的分割效果。

代码部分

数据处理部分

#data.py from __future__ import print_function from keras.preprocessing.image import ImageDataGenerator import numpy as np import os import glob import skimage.io as io import skimage.transform as trans from skimage import img_as_uint Sky = [128,128,128] Building = [128,0,0] Pole = [192,192,128] Road = [128,64,128] Pavement = [60,40,222] Tree = [128,128,0] SignSymbol = [192,128,128] Fence = [64,64,128] Car = [64,0,128] Pedestrian = [64,64,0] Bicyclist = [0,128,192] Unlabelled = [0,0,0] COLOR_DICT = np.array([Sky, Building, Pole, Road, Pavement, Tree, SignSymbol, Fence, Car, Pedestrian, Bicyclist, Unlabelled]) def adjustData(img,mask,flag_multi_class,num_class): # 将图片归一化 if(flag_multi_class): # 此程序不是多类情况,所以不考虑这个 img = img / 255 mask = mask[:, :, :, 0] if(len(mask.shape) == 4) else mask[:, :, 0] new_mask = np.zeros(mask.shape + (num_class,)) # np.zeros里面是shape元组,此目的是将数据厚度扩展num_class层,以在层的方向实现one-hot结构 for i in range(num_class): #for one pixel in the image, find the class in mask and convert it into one-hot vector #index = np.where(mask == i) #index_mask = (index[0],index[1],index[2],np.zeros(len(index[0]),dtype = np.int64) + i) # if (len(mask.shape) == 4) else (index[0],index[1],np.zeros(len(index[0]),dtype = np.int64) + i) #new_mask[index_mask] = 1 new_mask[mask == i, i] = 1 #将平面的mask的每类,都单独变成一层 new_mask = np.reshape(new_mask, (new_mask.shape[0], new_mask.shape[1]*new_mask.shape[2], new_mask.shape[3])) if\ flag_multi_class else np.reshape(new_mask, (new_mask.shape[0]*new_mask.shape[1], new_mask.shape[2])) mask = new_mask elif(np.max(img) > 1): img = img / 255 mask = mask /255 mask[mask > 0.5] = 1 # 二值化 mask[mask <= 0.5] = 0 return (img, mask) # 生成训练所需要的图片和标签 def trainGenerator(batch_size, train_path, image_folder, mask_folder, aug_dict, image_color_mode = "grayscale", mask_color_mode = "grayscale", image_save_prefix = "image", mask_save_prefix = "mask", flag_multi_class = False, num_class = 2, save_to_dir = None, target_size = (256,256),seed = 1): ''' can generate image and mask at the same time use the same seed for image_datagen and mask_datagen to ensure the transformation for image and mask is the same if you want to visualize the results of generator, set save_to_dir = "your path" ''' image_datagen = ImageDataGenerator(**aug_dict) # 图片生成器对数据进行增强,扩充数据集大小,增强模型的泛化能力。比如进行旋转 mask_datagen = ImageDataGenerator(**aug_dict) image_generator = image_datagen.flow_from_directory( # 根据文件路径,增强数据 train_path, # 训练数据文件夹路径 classes = [image_folder], # 类别文件夹,对哪一个类进行增强 class_mode = None, # 不返回标签 color_mode = image_color_mode, # 灰度,单通道模式 target_size = target_size, # 转换后的目标图片大小 batch_size = batch_size, # 每次产生的(进行转换)图片张数 save_to_dir = save_to_dir, # 保存图片的路径 save_prefix = image_save_prefix, # 生成图片的前缀,仅当提供save_to_dir时有效 seed = seed) # flow_from_directory(): # directory: 目标文件夹路径 # classes: 子文件夹列表 # class_mode: 确定返回的标签数组的类型 # color_mode: 颜色模式,grayscale为单通道,rgb三通道 # target_size: 目标图像的尺寸 # batch_size: 每批数据的大小 # save to dir: 保存图片 # save_prefix: 保存提升后图片时使用的前缀,仅当设置了save_to_dir时生效 # seed: 随机种子数,用于shuffle mask_generator = mask_datagen.flow_from_directory( train_path, classes = [mask_folder], class_mode = None, color_mode = mask_color_mode, target_size = target_size, batch_size = batch_size, save_to_dir = save_to_dir, save_prefix = mask_save_prefix, seed = seed) train_generator = zip(image_generator, mask_generator) # 将image和mask打包成元组的列表[(iamge1,masek1),(image2,mask2),...] for (img,mask) in train_generator: # 由于batch是2,所以一次返回两张,即img是一个2张灰度图片的数组,[2,256,256] img, mask = adjustData(img,mask, flag_multi_class, num_class) yield (img, mask) # 每次分别产出两张图片和标签 # 上面这个函数主要是产生一个数据增强的图片生成器,方便后面使用这个生成器不断生成图片 def testGenerator(test_path, num_image = 328, target_size = (256,256), flag_multi_class = False, as_gray = False): for i in range(num_image): img = io.imread(os.path.join(test_path,"%d.png"%i),as_gray = as_gray) img = img / 255.0 # 归一化 img = trans.resize(img, target_size) img = np.reshape(img, img.shape+(1,)) if (not flag_multi_class) else img img = np.reshape(img, (1,)+img.shape) # (1,width,height) # 将测试图片扩展一个维度,与训练时的输入[2,256,256]保持一致 yield img # 上面这个函数主要是对测试图片进行规范,使其尺寸和维度上和训练图片一致 def geneTrainNpy(image_path,mask_path,flag_multi_class = False,num_class = 2,image_prefix = "image",mask_prefix = "mask",image_as_gray = True,mask_as_gray = True): image_name_arr = glob.glob(os.path.join(image_path,"%s*.png"%image_prefix)) # 相当于文件搜索,搜索某路径下与字符相匹配的文件 image_arr = [] mask_arr = [] for index, item in enumerate(image_name_arr): # enumerate是枚举,输出[(0,item0),(1,item1),(2,item2)] img = io.imread(item, as_gray = image_as_gray) img = np.reshape(img, img.shape + (1,)) if image_as_gray else img mask = io.imread(item.replace(image_path, mask_path).replace(image_prefix, mask_prefix), as_gray = mask_as_gray) # 重新在mask_path文件夹下搜索带有mask字符的图片(标签图片) mask = np.reshape(mask, mask.shape + (1,)) if mask_as_gray else mask img, mask = adjustData(img, mask, flag_multi_class, num_class) image_arr.append(img) mask_arr.append(mask) image_arr = np.array(image_arr) mask_arr = np.array(mask_arr) return image_arr, mask_arr # 该函数主要是分别在训练集文件夹下和标签文件夹下搜索图片,然后扩展一个维度后以array的形式返回, # 是为了在没用数据增强时的读取文件夹内自带的数据 def labelVisualize(num_class,color_dict,img): img = img[:,:,0] if len(img.shape) == 3 else img img_out = np.zeros(img.shape + (3,)) # 变成RGB空间,因为其他颜色只能在RGB空间才会显示 for i in range(num_class): img_out[img == i,:] = color_dict[i] # 上面函数是给出测试后的输出之后,为输出涂上不同的颜色,多类情况下才起作用,两类的话无用 return img_out / 255.0 def saveResult(save_path, npyfile, flag_multi_class = False, num_class = 2): for i, item in enumerate(npyfile): img = labelVisualize(num_class, COLOR_DICT, item) if flag_multi_class else item[:, :, 0] # 多类的话就图成彩色,非多类(两类)的话就是黑白色 io.imsave(os.path.join(save_path, "%d.png"%(i)), img_as_uint(img))

from tensorflow import keras import numpy as np import os import skimage.io as io import skimage.transform as trans from keras.models import * from keras.layers import * from keras.optimizers import * from keras.layers.merge import concatenate from keras.callbacks import ModelCheckpoint, LearningRateScheduler from keras import backend as keras # 多分类dice def dice_coef(y_true, y_pred): # flatten是numpy.ndarray.flatten的一个函数,即返回一个折叠成一维的数组。但是该函数只能适用于numpy对象,即array或者mat # ,普通的list列表是不行的。 smooth = 1. y_true_f = K.flatten(y_true) y_pred_f = K.flatten(y_pred) intersection = K.sum(y_true_f * y_pred_f) return (2. * intersection + smooth) / (K.sum(y_true_f) + K.sum(y_pred_f) + smooth) def dice_coef_loss(y_true, y_pred): return 1 - dice_coef(y_true, y_pred) def unet(pretrained_weights=None, input_size=(256, 256, 1)): # 长宽256 通道为1(灰度图) inputs = Input(input_size) # 初始化keras张量 conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(inputs) # filters: 输出的维度 # kernel_size:卷积核的尺寸 # activation: 激活函数 # padding: 边缘填充 # kernel_initializer: kenerl权值初始化 conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv1) # 64 he_normal( # ):初始loss约为150,训练0.5个epoch后loss下降到3.5,一个epoch就看达到97.82%的Test Error pool1 = MaxPooling2D(pool_size=(2, 2))(conv1) conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool1) conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv2) pool2 = MaxPooling2D(pool_size=(2, 2))(conv2) conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool2) conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv3) pool3 = MaxPooling2D(pool_size=(2, 2))(conv3) conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool3) conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv4) drop4 = Dropout(0.5)(conv4) # 每次训练时随机忽略50%的神经元,减少过拟合 pool4 = MaxPooling2D(pool_size=(2, 2))(drop4) conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool4) conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv5) drop5 = Dropout(0.4)(conv5) up6 = Conv2D(512, 2, activation='relu', padding='same', kernel_initializer='he_normal')( UpSampling2D(size=(2, 2))(drop5)) # 先上采样放大,再进行卷积操作,相当于转置卷积 merge6 = concatenate([drop4, up6], axis=3) # (width,height,channels) 拼接通道数 conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge6) conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv6) up7 = Conv2D(256, 2, activation='relu', padding='same', kernel_initializer='he_normal')( UpSampling2D(size=(2, 2))(conv6)) merge7 = concatenate([conv3, up7], axis=3) conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge7) conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv7) up8 = Conv2D(128, 2, activation='relu', padding='same', kernel_initializer='he_normal')( UpSampling2D(size=(2, 2))(conv7)) merge8 = concatenate([conv2, up8], axis=3) conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge8) conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv8) up9 = Conv2D(64, 2, activation='relu', padding='same', kernel_initializer='he_normal')( UpSampling2D(size=(2, 2))(conv8)) merge9 = concatenate([conv1, up9], axis=3) conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge9) conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9) conv10 = Conv2D(1, 1, activation='sigmoid')(conv9) model = Model(input=inputs, output=conv10) model.compile(optimizer=Adam(lr=1e-5), loss=dice_coef_loss, metrics=[dice_coef]) # optimizer: 优化器 # binary_crossentropy: 与sigmoid相对应的损失函数 # metrics: 评估模型在训练和测试时的性能指标 # model.summary() if (pretrained_weights): model.load_weights(pretrained_weights) return model

from model import * from data import * # 导入这两个文件中所有函数 gpuNo='1' print("gpuNo=", gpuNo) os.environ['CUDA_VISIBLE_DEVICES'] = gpuNo # os.environ["CUDA_VISIBLE_DEVICES"] = "0" data_gen_args = dict(rotation_range=0.2, # 旋转 width_shift_range=0.05, # 宽度变化 height_shift_range=0.05, # 高度变化 shear_range=0.05, # 错切变换 zoom_range=0.05, # 缩放 horizontal_flip=True, # 水平翻转 fill_mode='nearest') # 数据增强时的变换方式的字典 myGene = trainGenerator(20, '0', 'image', 'label', data_gen_args, save_to_dir=None) # 得到一个生成器,以batch=2的速率无限生成增强后的数据 model = unet() model_checkpoint = ModelCheckpoint('rgbfemur1.hdf5', monitor='loss', verbose=1, save_best_only=True) # 回调函数,在每个epoch后保存模型到filepath # filename: 保存模型 # monitor:需要监视的值,检测loss使他最小 # verbose: 信息展示模式,1展示,0不展示; # save_best_only: 保存在验证集上性能最好的模型 model.fit_generator(myGene, steps_per_epoch=100, epochs=300, callbacks=[model_checkpoint]) # 上面一行是利用生成器进行batch_size数量的训练,样本和标签通过myGene传入 # generator: 生成器(image,mask) # steps_per_epoch: 训练steps_per_epoch个数据时记一个epoch结束, # epoch: 数据迭代轮数 # callbacks: 回调函数 # # testGene = testGenerator("data/membrane/test") # results = model.predict_generator(testGene, 30, verbose=1) # # 30是step,steps:在停止之前,来自generator的总步数(样本批次)。可选参数Sequence:如果未指定,则将使用len(generator)作为步数 # # 上面返回值是:预测值的Numpy数组 # # 为来自数据生成器的输入样本生成预测 # # generator: 生成器 # # verbose = 0 为不在标准输出流输出日志信息 # # verbose = 1 为输出进度条记录 # # verbose = 2 为每个epoch输出一行记录 # # steps: 在声明一个epoch完成,并开始下一个epoch之前从生成器产生的总步数 # # workers: 最大进程数 # # use_multiprocessing: 多线程 # # saveResult("data/membrane/test", results)

from model import * from data import * # 导入这两个文件中所有函数 gpuNo='1' print("gpuNo=", gpuNo) os.environ['CUDA_VISIBLE_DEVICES'] = gpuNo model = unet(pretrained_weights='rgbfemur1.hdf5') testGene = testGenerator("test/") results = model.predict_generator(testGene, 69, verbose=1) # 30是step,steps:在停止之前,来自generator的总步数(样本批次)。可选参数Sequence:如果未指定,则将使用len(generator)作为步数 # 上面返回值是:预测值的Numpy数组 # 为来自数据生成器的输入样本生成预测 # generator: 生成器 # verbose = 0 为不在标准输出流输出日志信息 # verbose = 1 为输出进度条记录 # verbose = 2 为每个epoch输出一行记录 # steps: 在声明一个epoch完成,并开始下一个epoch之前从生成器产生的总步数 # workers: 最大进程数 # use_multiprocessing: 多线程 saveResult("test/gray/", results)

浙公网安备 33010602011771号

浙公网安备 33010602011771号