P21_Neural Networks: A Small Hands-on Build and Using Sequential

21.1 Open the PyTorch website

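1. Neural networks: a small hands-on build and using Sequential

(1) Using Sequential: pass the network layers to it in order and it runs them in that order, which simplifies the code.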

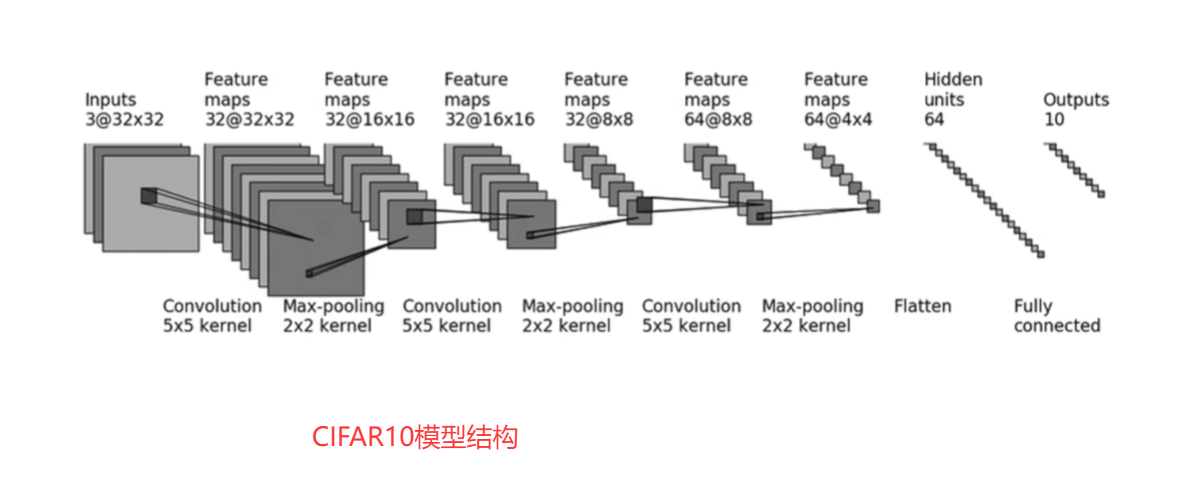

(2) A model that classifies CIFAR10

(3) Implementation of the model (below)
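The chaining idea behind Sequential can be illustrated in plain Python. This is only a conceptual sketch: `TinySequential`, `double`, and `add_one` are hypothetical names made up for illustration, and this is not how torch.nn.Sequential is actually implemented.

```python
# Conceptual sketch only: TinySequential is a hypothetical stand-in,
# not the implementation of torch.nn.Sequential.
class TinySequential:
    def __init__(self, *layers):
        # Keep the layers in the order they were passed in.
        self.layers = list(layers)

    def __call__(self, x):
        # Feed the output of each layer into the next one.
        for layer in self.layers:
            x = layer(x)
        return x


# Plain functions stand in for network layers here.
def double(x):
    return x * 2


def add_one(x):
    return x + 1


pipeline = TinySequential(double, add_one)
result = pipeline(3)  # computes add_one(double(3))
print(result)
```

The order of the arguments matters: swapping `double` and `add_one` gives a different result, just as reordering layers changes a network.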

21.2 Open PyCharm and implement the model

1. Step 1: Convolution

(1) In the first Convolution step, in_channels is 3, out_channels is 32, and kernel_size is 5×5

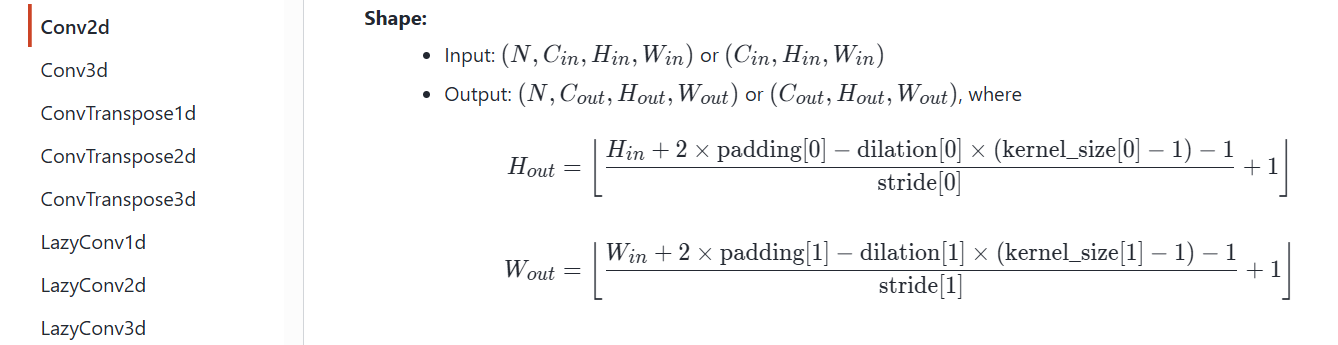

(2) Conv2d parameters:


class torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

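Here in_channels, out_channels, and kernel_size are already known. Whether stride, padding, and dilation need to be set or can stay at their defaults has to be worked out by calculation.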

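(3) Work it out from the Conv2d output-shape formula and the CIFAR10 classification model in the figure above:

① NCHW is a tensor storage layout: N (batch size), C (channels), H (height), W (width).

② The formula is Hout = floor((Hin + 2×padding - dilation×(kernel_size-1) - 1)/stride + 1). From the figure:

Hin=32, Hout=32. The defaults are dilation=1, stride=1, padding=0.

③ So 32 = (32 + 2×padding - dilation×(5-1) - 1)/stride + 1

Keeping dilation and stride at their default value of 1 and substituting gives 32 = (32 + 2×padding - 1×(5-1) - 1)/1 + 1, which simplifies to 32 = 28 + 2×padding, so padding=2. With the default padding=0 the output would be 28 rather than 32, so padding has to be set explicitly.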

④ That is:

self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2)  # stride and dilation keep their defaults
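The padding calculation above can be checked with a small helper. This is a minimal sketch that assumes the standard Conv2d output-shape formula; `conv2d_out_size` and `find_padding` are hypothetical helper names, not PyTorch functions, and `max_padding=16` is an arbitrary search limit.

```python
def conv2d_out_size(size_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output height (or width) of a Conv2d layer.

    Integer floor division implements the floor() in the shape formula.
    """
    return (size_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def find_padding(size_in, size_out, kernel_size, stride=1, dilation=1, max_padding=16):
    """Return the smallest padding that yields size_out, or None if none is found."""
    for padding in range(max_padding + 1):
        if conv2d_out_size(size_in, kernel_size, stride, padding, dilation) == size_out:
            return padding
    return None


default_out = conv2d_out_size(32, kernel_size=5)      # padding left at its default of 0
needed_padding = find_padding(32, 32, kernel_size=5)  # padding that keeps 32 -> 32
print(default_out, needed_padding)
```

With the default padding the 32×32 input shrinks to 28×28, which is why padding=2 is passed explicitly to keep the size at 32×32.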

2. Step 2: Max pooling

self.maxpool1 = MaxPool2d(kernel_size=2)

3. Step 3: Convolution

self.conv2 = Conv2d(32, 32, 5, padding=2)

4. Step 4: Max pooling

self.maxpool2 = MaxPool2d(kernel_size=2)

5. Step 5: Convolution

self.conv3 = Conv2d(32, 64, 5, padding=2)

6. Step 6: Max pooling

self.maxpool3 = MaxPool2d(kernel_size=2)

7. Step 7: Flatten

self.flatten = Flatten()

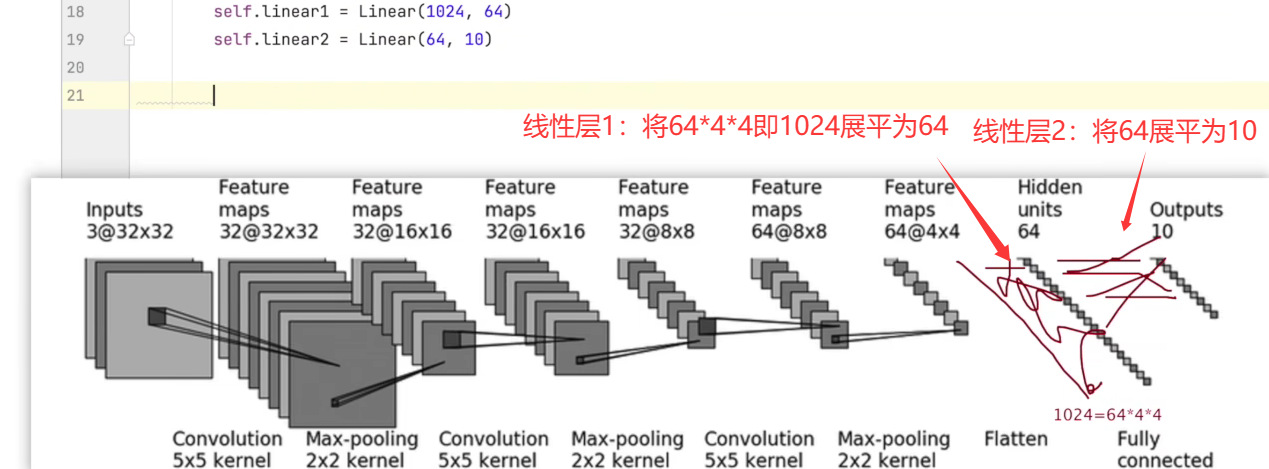

8. Step 8: Linear

self.linear1 = Linear(1024, 64)

9. Step 9: Linear

self.linear2 = Linear(64, 10)
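The 1024 input features of the first Linear layer follow from the layer sizes above. The sketch below traces the shape of a single image through the three convolution and pooling pairs in plain Python. It assumes the same output-shape formula as Conv2d applies to MaxPool2d, and that the pooling stride equals kernel_size (the printed model shows stride=2); `out_size` and the `layers` table are hypothetical names used only for this illustration.

```python
def out_size(size_in, kernel_size, stride, padding=0, dilation=1):
    """Output height (or width) of a conv or pooling layer."""
    return (size_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# (kind, out_channels, kernel_size, stride, padding), mirroring steps 1 to 6.
layers = [
    ("conv", 32, 5, 1, 2),
    ("pool", None, 2, 2, 0),
    ("conv", 32, 5, 1, 2),
    ("pool", None, 2, 2, 0),
    ("conv", 64, 5, 1, 2),
    ("pool", None, 2, 2, 0),
]

channels, height, width = 3, 32, 32  # one CIFAR10 image: 3 channels, 32×32
for kind, out_channels, k, s, p in layers:
    if kind == "conv":
        channels = out_channels  # pooling leaves the channel count unchanged
    height = out_size(height, k, s, p)
    width = out_size(width, k, s, p)
    print(kind, (channels, height, width))

# Flatten merges channels, height, and width into one dimension per image.
flat_features = channels * height * width
print(flat_features)
```

Each convolution keeps the spatial size and each pooling halves it (32, 16, 8, 4), so Flatten produces 64×4×4 = 1024 values per image.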

Diagram of the linear layers:

10. Define forward


def forward(self, x):
    x = self.conv1(x)
    x = self.maxpool1(x)
    x = self.conv2(x)
    x = self.maxpool2(x)
    x = self.conv3(x)
    x = self.maxpool3(x)
    x = self.flatten(x)
    x = self.linear1(x)
    x = self.linear2(x)
    return x

11. Build the network, instantiate it, and print it


dyl_seq = DYL_seq()

print(dyl_seq)

Output:


DYL_seq(
  (conv1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (flatten): Flatten(start_dim=1, end_dim=-1)
  (linear1): Linear(in_features=1024, out_features=64, bias=True)
  (linear2): Linear(in_features=64, out_features=10, bias=True)
)

12. Create an input, using torch.ones to specify the shape we want

Create a dummy input:

torch.ones(64, 3, 32, 32)  # batch size 64, 3 channels, 32×32 images


# torch.ones lets us specify the shape of the tensor to create.
input = torch.ones(64, 3, 32, 32)  # batch size 64, 3 channels, 32×32 images
output = dyl_seq(input)
print(output.shape)

Here 64 is the batch size; think of it as a batch of 64 images.

21.3 Implement the model with Sequential

1. The original, verbose code:


import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear


class DYL_seq(nn.Module):
    def __init__(self):
        super(DYL_seq, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2)  # stride and dilation keep their defaults
        self.maxpool1 = MaxPool2d(kernel_size=2)
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = MaxPool2d(kernel_size=2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = MaxPool2d(kernel_size=2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


dyl_seq = DYL_seq()
print(dyl_seq)

# Create a dummy input.
# torch.ones lets us specify the shape of the tensor to create.
input = torch.ones(64, 3, 32, 32)  # batch size 64, 3 channels, 32×32 images
output = dyl_seq(input)
print(output.shape)

The output is:


DYL_seq(
  (conv1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (flatten): Flatten(start_dim=1, end_dim=-1)
  (linear1): Linear(in_features=1024, out_features=64, bias=True)
  (linear2): Linear(in_features=64, out_features=10, bias=True)
)
torch.Size([64, 10])

2. Re-implement the model with Sequential


import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential


class DYL_seq(nn.Module):
    def __init__(self):
        super(DYL_seq, self).__init__()
        self.model1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


dyl_seq = DYL_seq()
print(dyl_seq)

# Create a dummy input.
# torch.ones lets us specify the shape of the tensor to create.
input = torch.ones(64, 3, 32, 32)  # batch size 64, 3 channels, 32×32 images
output = dyl_seq(input)
print(output.shape)

This produces the same result as above:


DYL_seq(
  (model1): Sequential(
    (0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Flatten(start_dim=1, end_dim=-1)
    (7): Linear(in_features=1024, out_features=64, bias=True)
    (8): Linear(in_features=64, out_features=10, bias=True)
  )
)
torch.Size([64, 10])

As you can see, the code is much more concise.

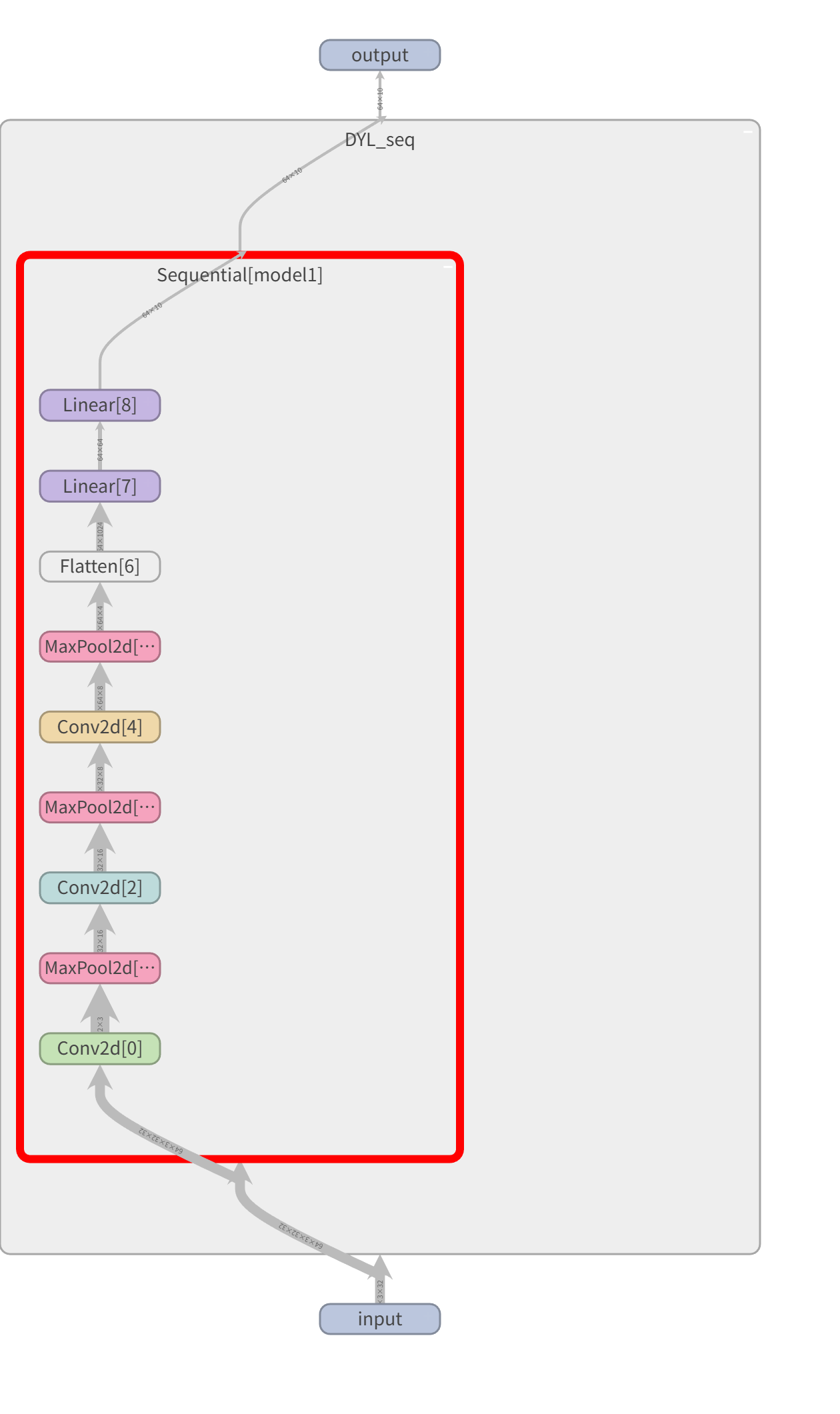

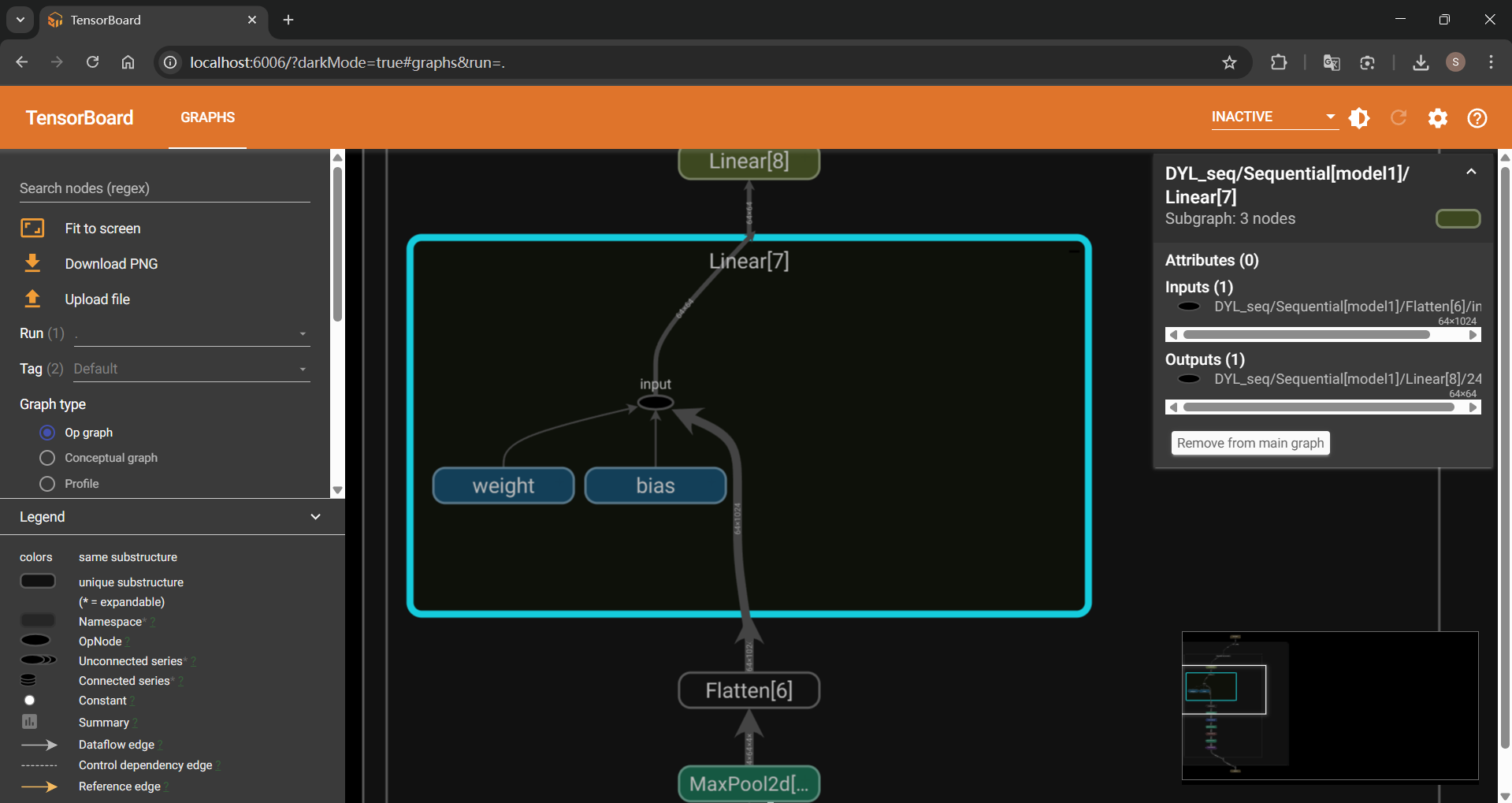

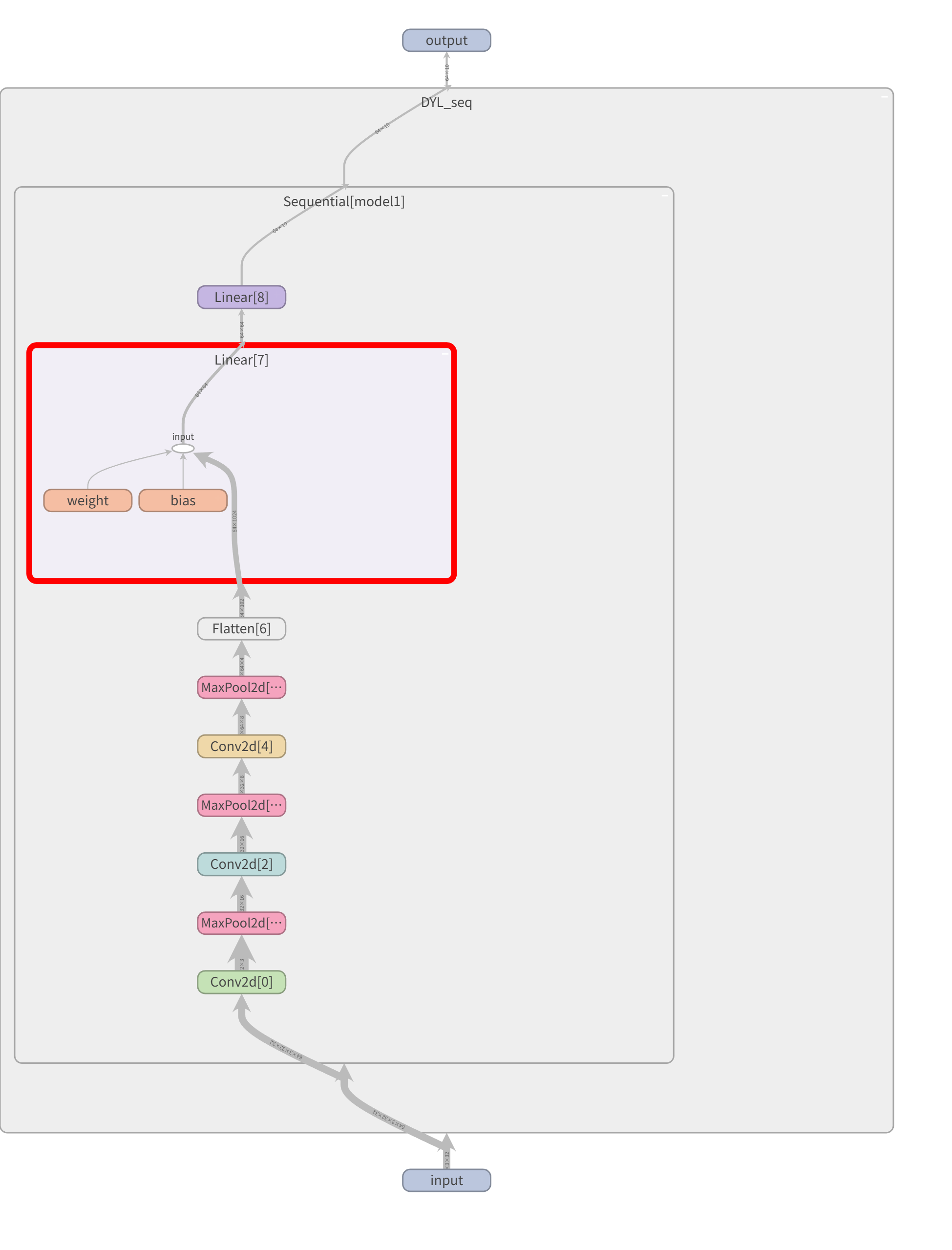

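21.4 Visualize with TensorBoard

This step assumes the model graph has already been written to a log directory named dyl_seq, for example with SummaryWriter.add_graph from torch.utils.tensorboard. Then start TensorBoard: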

tensorboard --logdir=dyl_seq

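Double-click dyl_seq in the graph and a Sequential node appears in the middle; double-click Sequential to see the individual layers:

Keep double-clicking on a layer to expand it further:

Each arrow shows the size of the tensor passed into that layer, along with the parameters that were set: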

