Complete notes on the regression part of Hung-yi Lee's (李宏毅) course

1. where does the error come from?

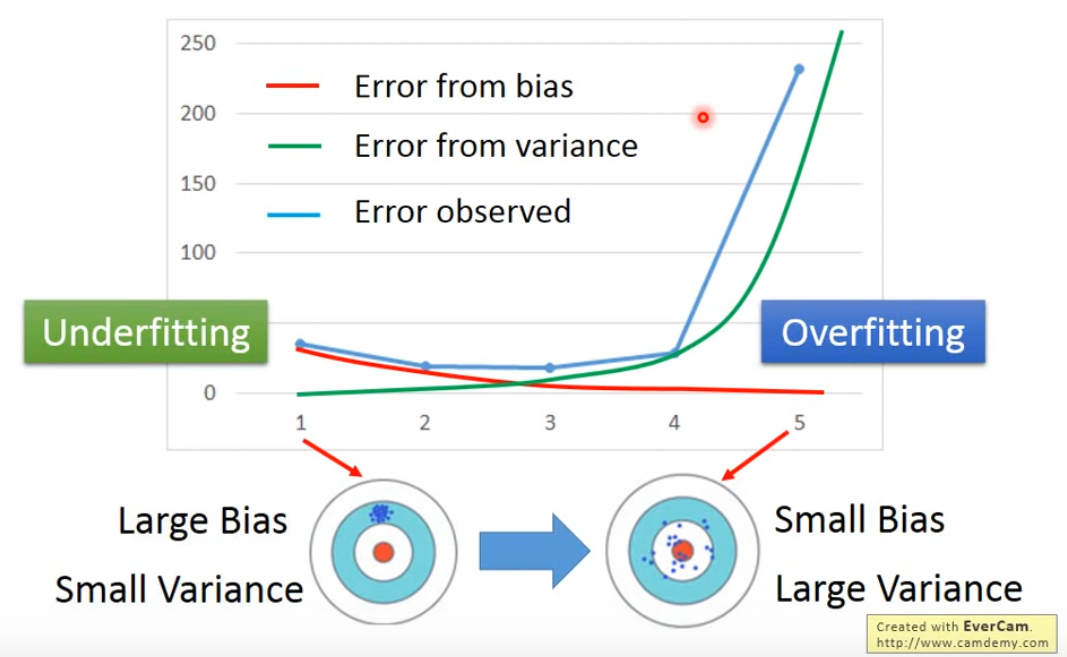

error due to "bias" and "variance"

variance:

simple model : small variance

complex model : large variance

simple model is less influenced by the sampled data

bias:

if we average all the $f^*$ (the best function found on each sampled training set), is the average close to $\hat{f}$ (the true function)?

simple model : large bias

complex model : small bias
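The bias and variance claims above can be checked with a small simulation. This is only a sketch under stated assumptions: the "true" function `true_f`, the noise level, and the polynomial degrees are made up for illustration, and the inputs are held fixed so that only the noise differs between sampled training sets.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_f(x):
    # Hypothetical stand-in for the true function (unknown in practice).
    return np.sin(2 * np.pi * x)

def bias_variance(degree, n_datasets=200, n_points=20, noise=0.3):
    """Estimate squared bias and variance of a polynomial model of the given degree."""
    x = np.linspace(0, 1, n_points)       # fixed inputs; only the noise is resampled
    preds = np.empty((n_datasets, n_points))
    for k in range(n_datasets):
        y = true_f(x) + rng.normal(0, noise, n_points)   # one sampled training set
        coeffs = np.polyfit(x, y, degree)                # best function for this set
        preds[k] = np.polyval(coeffs, x)
    mean_pred = preds.mean(axis=0)                       # average of all fitted functions
    bias_sq = np.mean((mean_pred - true_f(x)) ** 2)      # distance of the average from the truth
    variance = np.mean(preds.var(axis=0))                # spread of the fits around their average
    return bias_sq, variance

simple_bias, simple_var = bias_variance(degree=1)
complex_bias, complex_var = bias_variance(degree=5)
print(f"degree 1: bias^2={simple_bias:.4f}, variance={simple_var:.4f}")
print(f"degree 5: bias^2={complex_bias:.4f}, variance={complex_var:.4f}")
```

In this setup the degree-1 model should show the larger bias and the degree-5 model the larger variance, matching the notes.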

diagnosis:

if your model cannot even fit the training examples, then you have large bias (underfitting)

if your model fits the training data but has large error on the testing data, then you probably have large variance (overfitting)

for bias, redesign your model:

•add more features as input

•use a more complex model

for variance:

•collect more data

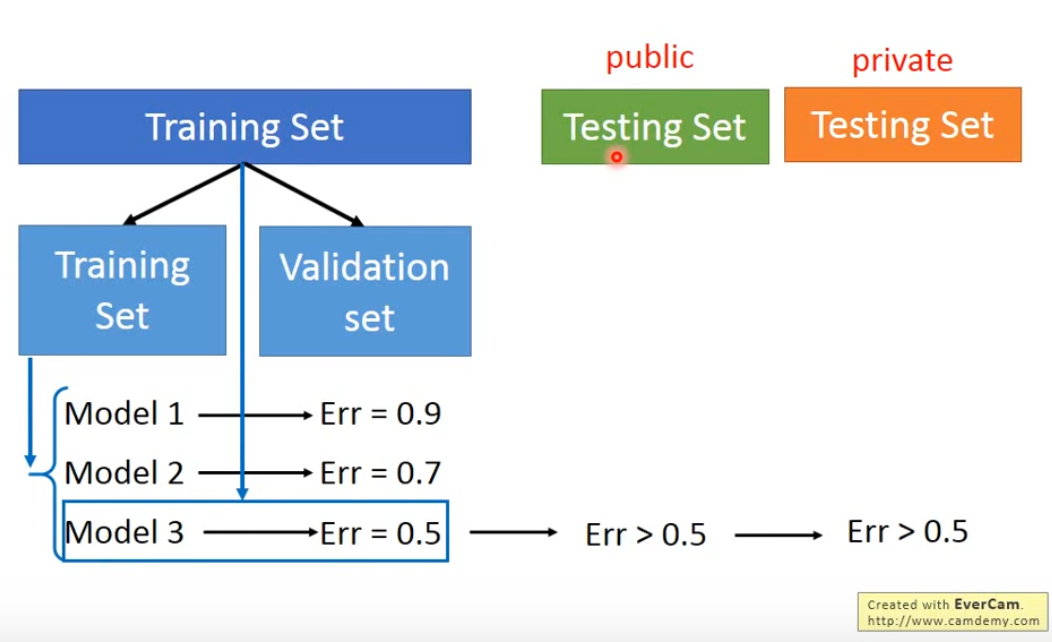

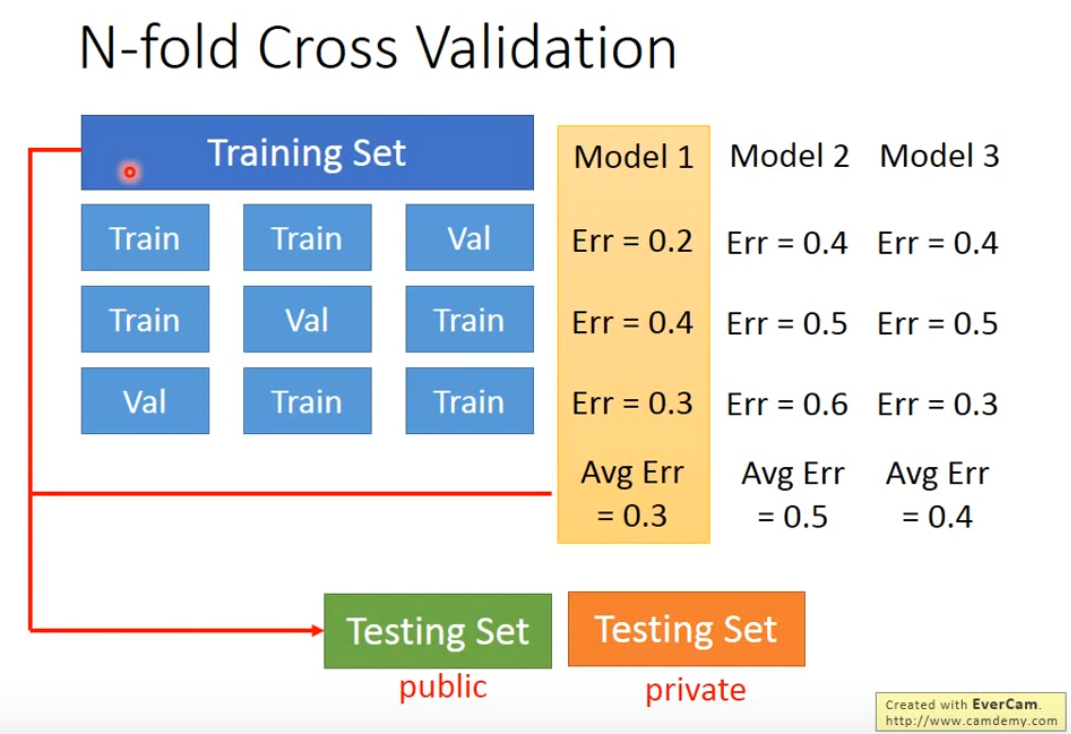

2. cross validation & N-fold cross validation

N-fold cross validation
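The notes give only the heading here. As a rough sketch of the usual procedure: split the training set into N folds, train on N-1 of them, validate on the remaining one, rotate, and compare models by their average validation error. The function name `n_fold_cv_error`, the polynomial models, and the toy data are assumptions made for this illustration.

```python
import numpy as np

def n_fold_cv_error(x, y, degree, n_folds=3, seed=0):
    """Average validation MSE of a polynomial model over n_folds splits."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(x))          # shuffle before splitting
    folds = np.array_split(indices, n_folds)
    errors = []
    for i in range(n_folds):
        val_idx = folds[i]                     # fold i is held out for validation
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != i])
        coeffs = np.polyfit(x[train_idx], y[train_idx], degree)
        val_pred = np.polyval(coeffs, x[val_idx])
        errors.append(np.mean((val_pred - y[val_idx]) ** 2))
    return float(np.mean(errors))

# Toy data (made up for illustration): a noisy quadratic.
rng = np.random.default_rng(1)
x = rng.uniform(-1, 1, 60)
y = 1.0 + 2.0 * x - 3.0 * x**2 + rng.normal(0, 0.1, 60)

# Compare candidate models by average validation error, then pick the best.
cv_errors = {d: n_fold_cv_error(x, y, d) for d in (1, 2, 3)}
best_degree = min(cv_errors, key=cv_errors.get)
print(cv_errors, best_degree)
```

The chosen model would then be retrained on the whole training set; the testing set is not used for the selection.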

3. gradient descent

1. tuning your learning rate

2. adaptive learning rate

•popular & simple idea: reduce the learning rate by some factor every few epochs

•at the beginning, we are far from the destination, so we use a larger learning rate

•after several epochs, we are close to the destination, so we reduce the learning rate

•e.g. $\eta^t = \eta / \sqrt{t+1}$

•learning rate cannot be one-size-fits-all

•give different parameters different learning rates

•Adagrad

divide the learning rate of each parameter by the root mean square of its previous derivatives

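$w^{t+1} = w^t - \frac{\eta^t}{\sigma^t} g^t$

where $\eta^t = \frac{\eta}{\sqrt{t+1}}$, $g^t = \frac{\partial L(\theta^t)}{\partial w}$, $\sigma^t = \sqrt{\frac{1}{t+1} \sum_{i=0}^{t} (g^i)^2}$

the $\sqrt{t+1}$ factors in $\eta^t$ and $\sigma^t$ cancel, which gives

$w^{t+1} = w^t - \frac{\eta}{\sqrt{\sum_{i=0}^{t} (g^i)^2}} g^t$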
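The decay schedule and the Adagrad update above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the toy loss, the starting point, and the small `eps` added to the denominator (to avoid division by zero) are assumptions that do not appear in the notes.

```python
import numpy as np

def decayed_lr(eta, t):
    """Time-dependent learning rate: eta / sqrt(t + 1)."""
    return eta / np.sqrt(t + 1)

def adagrad(grad_fn, w0, eta=1.0, steps=200, eps=1e-8):
    """Adagrad in its simplified form: w <- w - eta * g / sqrt(sum of past g^2)."""
    w = np.asarray(w0, dtype=float).copy()
    sum_sq = np.zeros_like(w)                   # per-parameter sum of squared gradients
    for _ in range(steps):
        g = grad_fn(w)
        sum_sq += g ** 2
        w -= eta * g / (np.sqrt(sum_sq) + eps)  # eps is an added safeguard (assumption)
    return w

# Toy loss (made up): L(w) = (w[0] - 3)^2 + 10 * (w[1] + 1)^2,
# whose two parameters have very different gradient scales.
def grad(w):
    return np.array([2 * (w[0] - 3), 20 * (w[1] + 1)])

w_final = adagrad(grad, w0=[0.0, 0.0])
print(decayed_lr(1.0, 3), w_final)
```

Because each parameter is divided by its own gradient history, the steep and the shallow direction both make progress with the same base learning rate `eta`.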

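3. stochastic gradient descent

pick an example $x^n$

$L^n = \left(\hat{y}^n - \left(b + \sum_i w_i x_i^n\right)\right)^2$

the loss is computed for only one example, so the parameters are updated after seeing each example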

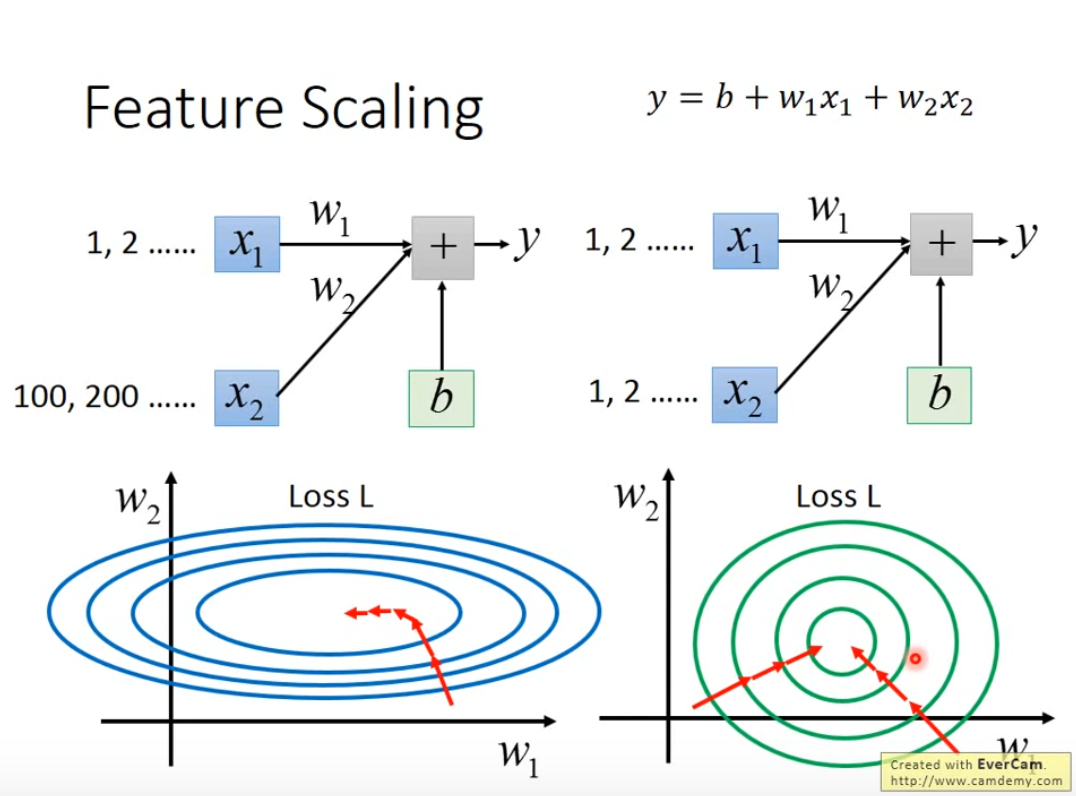
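A minimal sketch of stochastic gradient descent for the linear model above, updating after every single example with the gradient of $L^n$. The function name `sgd_linear_regression`, the learning rate, the per-epoch shuffling, and the noise-free toy data are assumptions chosen for illustration.

```python
import numpy as np

def sgd_linear_regression(X, y, eta=0.01, epochs=50, seed=0):
    """Fit y ~ b + X @ w by updating on one example at a time."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(epochs):
        for n in rng.permutation(n_samples):      # visit the examples in random order
            error = y[n] - (b + X[n] @ w)         # residual for example n only
            # Gradient of L^n = error^2: dL/dw = -2 * error * x^n, dL/db = -2 * error
            w += eta * 2 * error * X[n]
            b += eta * 2 * error
    return w, b

# Toy data (made up): noise-free targets from known parameters.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2))
y = 1.5 + X @ np.array([2.0, -3.0])

w, b = sgd_linear_regression(X, y)
print(w, b)
```

With 200 examples, one pass over the data makes 200 updates, where full-batch gradient descent would make one.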

4. feature scaling

make different features have the same scale
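One common way to do this (an assumption here, since the notes do not name a method) is standardization: subtract each feature's mean and divide by its standard deviation. The helper name `standardize` and the toy matrix are hypothetical.

```python
import numpy as np

def standardize(X):
    """Scale each feature (column) to zero mean and unit standard deviation."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std = np.where(std == 0, 1.0, std)   # leave constant features unscaled
    return (X - mean) / std, mean, std

# Toy data (made up): the second feature is 100 times larger than the first.
X = np.array([[1.0, 100.0],
              [2.0, 200.0],
              [3.0, 300.0]])

X_scaled, mean, std = standardize(X)
print(X_scaled)
```

The returned `mean` and `std` should be reused to scale the testing data, so that both sets go through the same transformation.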
