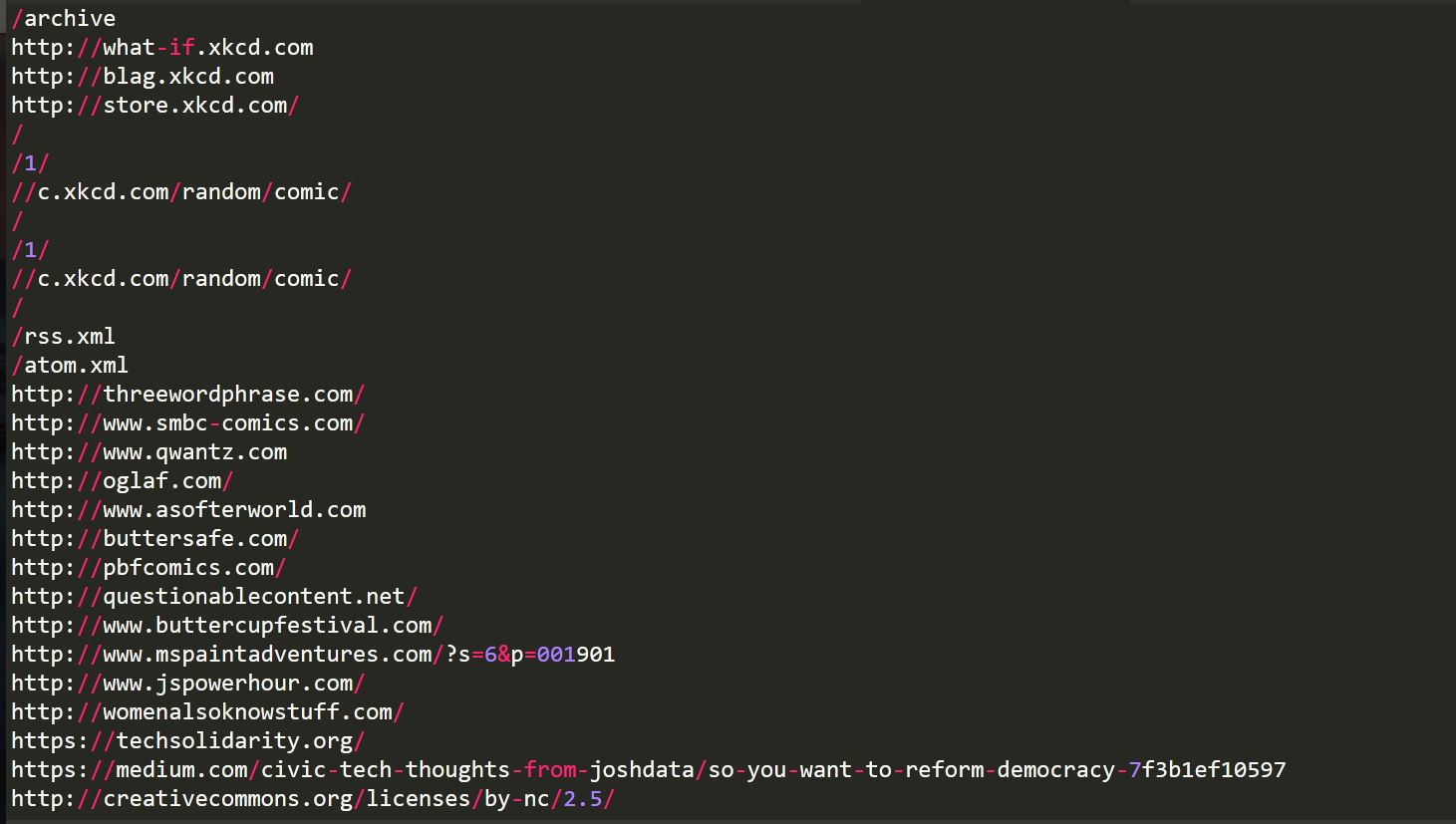

【Web crawler】print_all_links

- How to repeat Procedures&Control

CS重要概念

1.1 过程procedures

封装代码,代码重用

1.2 控制Control

DEMO

# -*- coding: UTF-8 -*-

# procedures过程

def get_next_target(page):

start_link = page.find('<a href=')

if start_link == -1: # not found

return None,0

start_quote = page.find('"',start_link)

end_quote = page.find('"',start_quote+1)

url = page[start_quote+1:end_quote]

return url,end_quote

# 循环

def print_all_links(page):

while True:

url,endpos = get_next_target(page)

if url:

print url

page = page[endpos:]

else:

break

# 获取网页源代码

def get_page(url):

try:

import urllib

return urllib.urlopen(url).read()

except:

return ''

# print_all_links('this <a href="test1">link 1</a> is <a href="test2"link 2</a> a <a href="test3">link3</a>')

# >>>test1

# >>>test2

# >>>test3

# content = get_page('http://xkcd.com/353/')

# print_all_links(content)

print_all_links(get_page('http://xkcd.com/353/'))

#print_all_links(get_page('https://www.baidu.com/'))

浙公网安备 33010602011771号

浙公网安备 33010602011771号