Logistic Regression in Practice

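1. How does logistic regression prevent overfitting? Why does regularization prevent overfitting? (Explain in your own words.)

Answer: Through regularization. When a model overfits, the coefficients of the fitted function tend to be very large, because the function has to change sharply to follow the noise in the training data. Regularization adds a penalty on the norm of the parameters to the loss, which keeps that norm from growing too large and so limits overfitting.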
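The effect described above can be checked with a minimal sketch. It assumes scikit-learn's `LogisticRegression` with its default L2 penalty, where `C` is the inverse of the regularization strength (a smaller `C` means a stronger penalty). The helper name `coef_norm` and the two `C` values are illustrative choices only, and the features are standardized here just so the solver converges cleanly.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X = StandardScaler().fit_transform(X)  # standardize so the solver converges cleanly


def coef_norm(C):
    """Fit an L2-regularized model (hypothetical helper) and return the L2 norm of its weights."""
    model = LogisticRegression(C=C, max_iter=5000)
    model.fit(X, y)
    return float(np.linalg.norm(model.coef_))


norm_strong = coef_norm(0.01)   # small C: strong regularization
norm_weak = coef_norm(100.0)    # large C: weak regularization

print('coefficient norm with C=0.01:', norm_strong)
print('coefficient norm with C=100: ', norm_weak)
```

With the stronger penalty the weight vector should come out with a smaller norm, which is the constraint the answer refers to. Whether that also improves test accuracy depends on the data, so `C` is usually chosen by cross-validation.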

2. Practice with logistic regression on a dataset of your choice.

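    from sklearn.datasets import load_breast_cancer
    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import classification_report
    import numpy as np

    data = load_breast_cancer()  # load the breast cancer dataset
    x = data['data']
    y = data['target']
    # hold out 30% of the samples for testing
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3)

    # build the logistic regression model; max_iter is raised because the
    # default of 100 often triggers a ConvergenceWarning on unscaled features
    LR_model = LogisticRegression(max_iter=10000)
    LR_model.fit(x_train, y_train)   # train the model
    pre = LR_model.predict(x_test)   # predict on the test set

    print('Training set accuracy:', LR_model.score(x_train, y_train))
    print('Test set accuracy:', LR_model.score(x_test, y_test))
    print('Number of test samples:', x_test.shape[0])
    # count correct predictions directly instead of multiplying by the accuracy
    print('Correctly predicted test samples:', int(np.sum(pre == y_test)))
    # the report contains precision, recall, and F1 for each class
    print('Classification report:\n', classification_report(y_test, pre))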

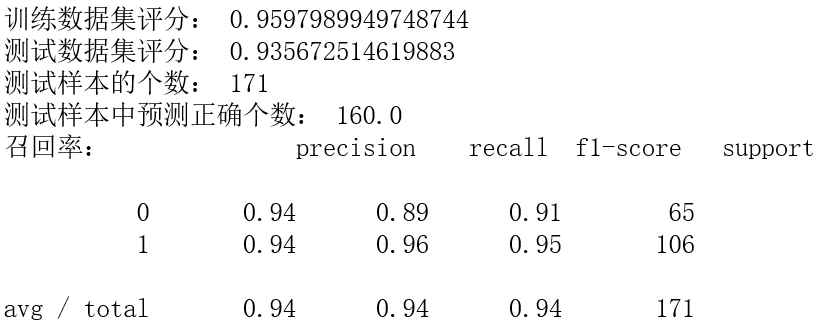

运行结果如图:

