k8s Basics and Resources

k8s basics

I. Common k8s resources

k8s uses resources to manage Docker containers more effectively.

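1. Create a pod resource

A pod is the smallest resource unit.

Every k8s resource can be created from a YAML file.

1) Main parts of a k8s YAML file:

apiVersion: v1 (API version)

kind: Pod (resource type)

metadata: (attributes such as name and labels)

spec: (detailed specification)

2) Edit the k8s_pod.yaml file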

[root@k8s-master ~]# mkdir k8s_yaml

[root@k8s-master ~]# cd k8s_yaml/

[root@k8s-master ~/k8s_yaml]# mkdir pod

[root@k8s-master ~/k8s_yaml]# cd pod/

[root@k8s-master ~/k8s_yaml/pod]# vim k8s_pod.yaml

apiVersion: v1          # API version
kind: Pod               # resource type
metadata:
  name: nginx
  labels:
    app: web            # label
spec:
  containers:
    - name: nginx
      image: 10.0.0.5:5000/nginx:1.13
      ports:
        - containerPort: 80

3) Create the resource

[root@k8s-master ~/k8s_yaml/pod]# kubectl create -f k8s_pod.yaml

pod "nginx" created

4) View the resource

# The pod stays stuck in ContainerCreating

[root@k8s-master ~/k8s_yaml/pod]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 1m

# The wide output shows that no IP was assigned; the pod was scheduled to 10.0.0.7 but never came up

[root@k8s-master ~/k8s_yaml/pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 0/1 ContainerCreating 0 2m <none> 10.0.0.7

# docker shows that the container was never started either

[root@k8s-master ~/k8s_yaml/pod]# docker ps -a -l

5) Delete the resource

[root@k8s-master ~/k8s_yaml/pod]# kubectl delete pod nginx --force --grace-period=0

6) View the detailed description

# This reveals the error message

[root@k8s-master ~/k8s_yaml/pod]# kubectl describe pod nginx

# Certificate problem

details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

# On 10.0.0.7 the file exists, but it is a symlink that points to the wrong (missing) target

[root@k8s-node-2 ~]# ll /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt

/etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt -> /etc/rhsm/ca/redhat-uep.pem

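7) Pull from the local registry to work around the Red Hat certificate problem

# 1. Upload the image archive that was downloaded beforehand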

[root@k8s-master ~]# ll

-rw-r--r-- 1 root root 214888960 Aug 15 09:36 pod-infrastructure-latest.tar.gz

# 2. Where the image archive came from

[root@k8s-master ~]# docker search pod-infrastructure

# 3. Load the image into docker

[root@k8s-master ~]# docker load -i pod-infrastructure-latest.tar.gz

Loaded image: docker.io/tianyebj/pod-infrastructure:latest

# 4. Push it to the private registry

[root@k8s-master ~]# docker tag docker.io/tianyebj/pod-infrastructure:latest 10.0.0.5:5000/pod-infrastructure:latest

[root@k8s-master ~]# docker push 10.0.0.5:5000/pod-infrastructure:latest

# 5. Change the infrastructure image address on 10.0.0.7

[root@k8s-node-2 ~]# vim /etc/kubernetes/kubelet

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.5:5000/pod-infrastructure:latest"

# 6. Restart the service

[root@k8s-node-2 ~]# systemctl restart kubelet.service

# 7. Describe the pod again; the error has changed to a missing nginx image

[root@k8s-master ~/k8s_yaml/pod]# kubectl describe pod nginx

Warning Failed Failed to pull image "10.0.0.5:5000/nginx:1.13": Error: image nginx:1.13 not found

# 8. Upload the image archive and load it

[root@k8s-master ~]# ll

-rw-r--r-- 1 root root 112689664 Aug 15 09:35 docker_nginx1.13.tar.gz

[root@k8s-master ~]# docker load -i docker_nginx1.13.tar.gz

# 9. Push the image to the private registry

[root@k8s-master ~]# docker tag docker.io/nginx:1.13 10.0.0.5:5000/nginx:1.13

[root@k8s-master ~]# docker push 10.0.0.5:5000/nginx:1.13

# 10. View the details

[root@k8s-master ~]# kubectl describe pod nginx

Successfully

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 33m 172.21.14.2 10.0.0.7

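2. What is a pod resource?

A pod consists of at least two containers: the pod infrastructure container and a business container. These notes treat 1+4 (one infrastructure container plus four business containers) as the practical maximum.

# Accessing the newly assigned IP works, so the container started successfully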

[root@k8s-master ~]# curl -I 172.21.14.2

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Sat, 04 Jan 2020 12:46:28 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

# On 10.0.0.7 two containers are running: the pod infrastructure container and the nginx business container

[root@k8s-node-2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7ed840e93ff5 10.0.0.5:5000/nginx:1.13 "nginx -g 'daemon ..." 7 minutes ago Up 7 minutes k8s_nginx.8060363_nginx_default_54794ebc-2eeb-11ea-8118-000c293eb8e2_1d6ce124

d2b309dfd7c1 10.0.0.5:5000/pod-infrastructure:latest "/pod" 18 minutes ago Up 18 minutes k8s_POD.905f0183_nginx_default_54794ebc-2eeb-11ea-8118-000c293eb8e2_0cf0653d

# Inspect the IP addresses of the two containers: they share a single IP address

pod:

[root@k8s-node-2 ~]# docker inspect d2b309dfd7c1 |tail -20

172.21.14.2

nginx:

[root@k8s-node-2 ~]# docker inspect 7ed840e93ff5 |tail -20

null

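# Containers that share one IP address cannot use conflicting ports, so the pod infrastructure container only runs and does not occupy any port

The pod infrastructure container provides the k8s-level features, nginx serves the traffic, and both share one network. That is why two containers are started.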
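Because every container in a pod shares one network namespace, two containers that declare the same port would collide. The Python sketch below illustrates that check. It is only a model, not something Kubernetes runs; the function name `conflicting_ports` and the dict layout are assumptions made for this example.

```python
from collections import Counter


def conflicting_ports(containers):
    """Return the ports declared by more than one container of the same pod.

    `containers` is a hypothetical list of dicts such as
    {"name": "web", "ports": [80]}; it only loosely mirrors spec.containers.
    """
    counts = Counter(port for c in containers for port in c.get("ports", []))
    return sorted(port for port, n in counts.items() if n > 1)


# One container listens on 80, the other declares no port: nothing collides.
pod = [{"name": "web", "ports": [80]}, {"name": "sidecar", "ports": []}]
print(conflicting_ports(pod))
```

A non-empty result would mean the containers cannot coexist in one pod as declared.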

Note: a pod can run several business containers

# 1. Edit the configuration file

[root@k8s-master ~]# vim k8s_yaml/pod/k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: 10.0.0.5:5000/nginx:1.13
      ports:
        - containerPort: 80
    - name: busybox
      image: 10.0.0.5:5000/busybox:latest
      command: ["sleep","3600"]

# 2. Create the resource

[root@k8s-master ~/k8s_yaml/pod]# kubectl create -f k8s_pod.yaml

pod "test" created

# 3. Check where the pods were scheduled

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 1h 172.21.14.3 10.0.0.7

test 2/2 Running 0 30m 172.21.14.2 10.0.0.7

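# I hit ImagePullBackOff earlier because the image name in the configuration file did not match the name in the private registry (note the mistyped latse tag)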

[root@k8s-node-2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED

10.0.0.5:5000/busybox latest 6d5fcfe5ff17 8 days ago

10.0.0.5:5000/busybox latse 6d5fcfe5ff17 8 days ago
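The mismatch above comes down to comparing repository and tag exactly. As a rough illustration, the Python sketch below splits an image reference and checks it against a list of available images. The helper names `split_image` and `is_available` are invented for this example, and digest references (`@sha256:...`) are deliberately not handled.

```python
def split_image(ref):
    """Split 'registry:port/repo:tag' into (repository, tag).

    A colon counts as the tag separator only if it comes after the last
    slash; otherwise it belongs to the registry port. A missing tag is
    treated as 'latest'.
    """
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        return ref[:colon], ref[colon + 1:]
    return ref, "latest"


def is_available(wanted, images):
    """True if the wanted reference matches one of the listed images exactly."""
    return split_image(wanted) in {split_image(image) for image in images}


pushed = ["10.0.0.5:5000/busybox:latse"]  # the mistyped tag from the listing
print(is_available("10.0.0.5:5000/busybox:latest", pushed))
```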

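3. ReplicationController resource (RC)

Replication controller

rc: keeps the specified number of pods alive at all times. An rc finds its pods through a label selector and acts whenever there are too few or too many: it creates a new pod, or deletes the youngest one.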
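The behaviour described above can be sketched as a single pass of a control loop. The Python model below follows the notes' description (match pods by label, create the missing ones, delete the youngest surplus). The `Pod` class, `matches` and `reconcile` are hypothetical names for this sketch, and the real controller may rank pods for deletion by more criteria than age.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    labels: dict
    age_seconds: int  # time since the pod was created


def matches(selector, labels):
    """A pod is selected when every selector key/value appears in its labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(pods, selector, replicas):
    """Return (number_of_pods_to_create, pods_to_delete) for one pass."""
    owned = [pod for pod in pods if matches(selector, pod.labels)]
    missing = replicas - len(owned)
    if missing >= 0:
        return missing, []
    # Too many pods: pick the youngest ones for deletion.
    youngest_first = sorted(owned, key=lambda pod: pod.age_seconds)
    return 0, youngest_first[:-missing]
```

Giving an unrelated pod the label that the selector expects raises the owned count, which is why relabeling a pod can cause the rc to delete one of its own.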

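3.1. Why introduce RC?

To strengthen high availability: pods must not die just because their node went down.

The pods are moved to another node.

3.2. Common operations on k8s resources

# Create a resource

kubectl create -f xxx.yaml

# View resources

kubectl get pod

kubectl get rc

kubectl describe pod nginx

# Delete

kubectl delete pod nginx

kubectl delete -f xxx.yaml

# Edit a resource that already exists

kubectl edit pod nginx

3.3. RC: k8s self-healing

1) Create an RC resource

# 1. Edit the configuration file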

[root@k8s-master ~/k8s_yaml]# mkdir RC

[root@k8s-master ~/k8s_yaml]# cd RC/

[root@k8s-master ~/k8s_yaml/RC]# vim k8s_rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 5            # replicas: run 5 identical pods
  selector:
    app: myweb           # decides which pods belong to this rc; keep labels unique per rc so it never deletes pods it should not
  template:
    metadata:
      labels:
        app: myweb       # label carried by the pods
    spec:
      containers:
        - name: myweb
          image: 10.0.0.5:5000/nginx:1.13
          ports:
            - containerPort: 80

# 2. Create the resource

[root@k8s-master ~/k8s_yaml/RC]# kubectl create -f k8s_rc.yaml

replicationcontroller "nginx" created

# 3. View the resource: 5 replicas

[root@k8s-master ~/k8s_yaml/RC]# kubectl get rc

NAME DESIRED CURRENT READY AGE

nginx 5 5 5 1m

# 4. Check where the pods were scheduled

[root@k8s-master ~/k8s_yaml/RC]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 2h 172.21.14.3 10.0.0.7

nginx-0v9t1 1/1 Running 0 1m 172.21.30.2 10.0.0.6

nginx-b36v3 1/1 Running 0 1m 172.21.30.4 10.0.0.6

nginx-gbtcd 1/1 Running 0 1m 172.21.14.4 10.0.0.7

nginx-rvv98 1/1 Running 0 1m 172.21.14.5 10.0.0.7

nginx-t8mvs 1/1 Running 0 1m 172.21.30.3 10.0.0.6

test 2/2 Running 0 1h 172.21.14.2 10.0.0.7

2) Test deleting a pod

# 1. Delete one pod

[root@k8s-master ~/k8s_yaml/RC]# kubectl delete pod nginx-0v9t1

pod "nginx-0v9t1" deleted

# 2. A replacement pod with a new random name starts immediately

[root@k8s-master ~/k8s_yaml/RC]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 2h 172.21.14.3 10.0.0.7

nginx-b36v3 1/1 Running 0 5m 172.21.30.4 10.0.0.6

nginx-gbtcd 1/1 Running 0 5m 172.21.14.4 10.0.0.7

nginx-q380j 1/1 Running 0 2s 172.21.30.5 10.0.0.6

nginx-rvv98 1/1 Running 0 5m 172.21.14.5 10.0.0.7

nginx-t8mvs 1/1 Running 0 5m 172.21.30.3 10.0.0.6

test 2/2 Running 0 1h 172.21.14.2 10.0.0.7

3) Test deleting a node

# 1. Delete one node

[root@k8s-master ~/k8s_yaml/RC]# kubectl delete node 10.0.0.7

node "10.0.0.7" deleted

# 2. Pods managed by the RC were all recreated on 10.0.0.6; pods without an RC were simply deleted

[root@k8s-master ~/k8s_yaml/RC]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-b36v3 1/1 Running 0 8m 172.21.30.4 10.0.0.6

nginx-lbhv5 1/1 Running 0 5s 172.21.30.6 10.0.0.6

nginx-q380j 1/1 Running 0 3m 172.21.30.5 10.0.0.6

nginx-t8mvs 1/1 Running 0 8m 172.21.30.3 10.0.0.6

nginx-wr30w 1/1 Running 0 5s 172.21.30.2 10.0.0.6

4) Restore the node and check scheduling

# 1. Restore the node

[root@k8s-node-2 ~]# systemctl restart kubelet.service

[root@k8s-master ~]# kubectl get nodes

NAME STATUS AGE

10.0.0.6 Ready 1d

10.0.0.7 Ready 19s

# 2. Create a pod; it is scheduled to 10.0.0.7

[root@k8s-master ~/k8s_yaml/pod]# kubectl create -f k8s_pod.yaml

pod "test" created

[root@k8s-master ~/k8s_yaml/pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-b36v3 1/1 Running 0 16m 172.21.30.4 10.0.0.6

nginx-lbhv5 1/1 Running 0 8m 172.21.30.6 10.0.0.6

nginx-q380j 1/1 Running 0 11m 172.21.30.5 10.0.0.6

nginx-t8mvs 1/1 Running 0 16m 172.21.30.3 10.0.0.6

nginx-wr30w 1/1 Running 0 8m 172.21.30.2 10.0.0.6

test 2/2 Running 0 8s 172.21.14.2 10.0.0.7

# 3. Delete one RC-managed pod; its replacement, with a new random name, starts on 10.0.0.7

[root@k8s-master ~/k8s_yaml/pod]# kubectl delete pod nginx-b36v3

pod "nginx-b36v3" deleted

[root@k8s-master ~/k8s_yaml/pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-lbhv5 1/1 Running 0 10m 172.21.30.6 10.0.0.6

nginx-q380j 1/1 Running 0 13m 172.21.30.5 10.0.0.6

nginx-s2bln 1/1 Running 0 3s 172.21.14.3 10.0.0.7

nginx-t8mvs 1/1 Running 0 18m 172.21.30.3 10.0.0.6

nginx-wr30w 1/1 Running 0 10m 172.21.30.2 10.0.0.6

test 2/2 Running 0 1m 172.21.14.2 10.0.0.7

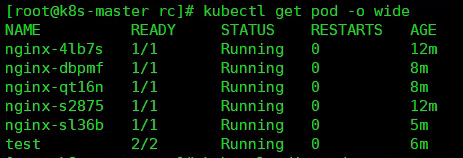

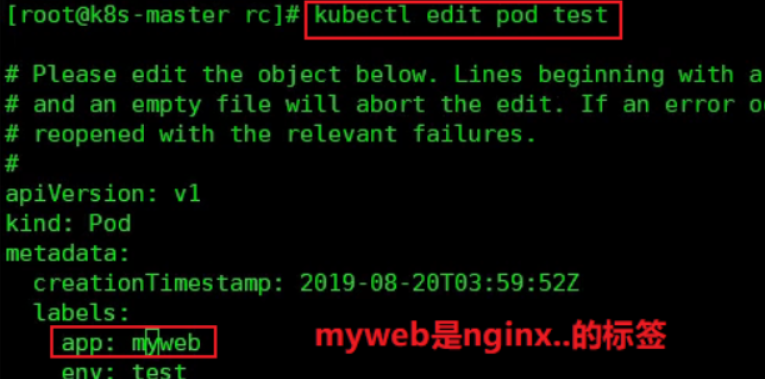

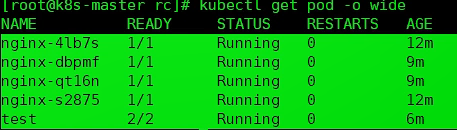

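5) Change a label so that the rc sees 6 pods instead of 5

All pods before the change

Change the label of the test pod

The rc deleted my youngest nginx pod, the one showing 5m in the figure above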

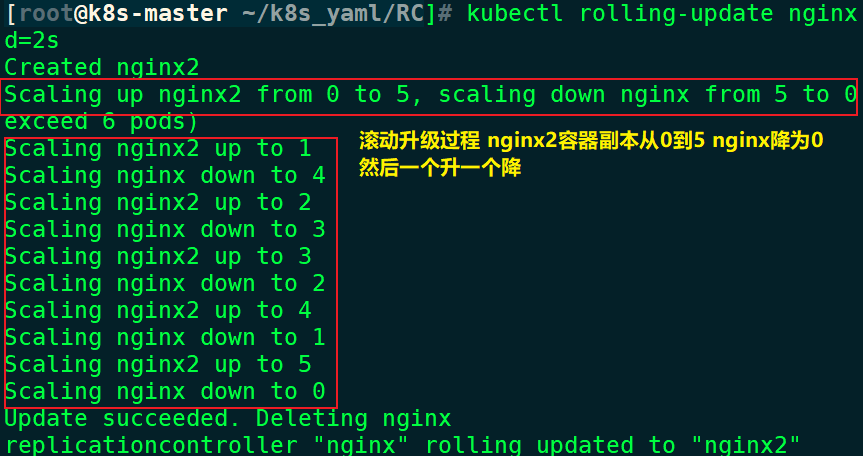

3.4. RC: k8s rolling upgrade and one-step rollback

1) Rolling upgrade

# 1. Edit the configuration file: upgrade nginx:1.13 to nginx:1.15

[root@k8s-master ~/k8s_yaml/RC]# ll

-rw-r--r-- 1 root root 304 Jan 4 22:14 k8s_rc.yaml

[root@k8s-master ~/k8s_yaml/RC]# cp k8s_rc.yaml k8s_rc2.yaml

[root@k8s-master ~/k8s_yaml/RC]# vim k8s_rc2.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx2           # both RCs exist at the same time during a rolling upgrade, so the name and the label must differ
spec:
  replicas: 5
  selector:
    app: myweb2
  template:
    metadata:
      labels:
        app: myweb2
    spec:
      containers:
        - name: myweb
          image: 10.0.0.5:5000/nginx:1.15
          ports:
            - containerPort: 80

# 2. Upload the nginx:1.15 image and push it to the private registry

[root@k8s-master ~]# ll

-rw-r--r-- 1 root root 112811008 Aug 15 09:35 docker_nginx1.15.tar.gz

[root@k8s-master ~]# docker load -i docker_nginx1.15.tar.gz

Loaded image: docker.io/nginx:latest

[root@k8s-master ~]# docker tag docker.io/nginx:latest 10.0.0.5:5000/nginx:1.15

[root@k8s-master ~]# docker push 10.0.0.5:5000/nginx:1.15

# 3. Check the current version

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-lbhv5 1/1 Running 0 42m 172.21.30.6 10.0.0.6

nginx-q380j 1/1 Running 0 45m 172.21.30.5 10.0.0.6

nginx-s2bln 1/1 Running 0 32m 172.21.14.3 10.0.0.7

nginx-t8mvs 1/1 Running 0 50m 172.21.30.3 10.0.0.6

nginx-wr30w 1/1 Running 0 42m 172.21.30.2 10.0.0.6

test 2/2 Running 0 34m 172.21.14.2 10.0.0.7

[root@k8s-master ~]# curl -I 172.21.30.2

HTTP/1.1 200 OK

Server: nginx/1.13.12 [1.13, as expected]

# 4. Rolling upgrade (--update-period defaults to 1 minute)

[root@k8s-master ~/k8s_yaml/RC]# kubectl rolling-update nginx -f k8s_rc2.yaml --update-period=2s
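A rolling upgrade between two RCs can be pictured as the new RC growing by one pod while the old RC shrinks by one, with a pause of `--update-period` between steps. The Python sketch below only models that counting. The one-up, one-down order is an assumption about the default behaviour, and `rolling_update_steps` is a name invented for this illustration.

```python
def rolling_update_steps(replicas):
    """Return the (old_count, new_count) pair after every scaling step.

    Assumed order: scale the new RC up by one, then the old RC down by one,
    until the new RC holds all replicas.
    """
    old, new = replicas, 0
    steps = []
    while new < replicas:
        new += 1
        steps.append((old, new))
        old -= 1
        steps.append((old, new))
    return steps


for old, new in rolling_update_steps(5):
    print(f"old RC: {old} pods, new RC: {new} pods")
```

Under this assumption the total never drops below the desired count and never exceeds it by more than one pod.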

# 5. Check the version; pod IP addresses change after the upgrade

[root@k8s-master ~/k8s_yaml/RC]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx2-46kkp 1/1 Running 0 3m 172.21.14.5 10.0.0.7

nginx2-kfhf1 1/1 Running 0 3m 172.21.30.4 10.0.0.6

nginx2-tsbj3 1/1 Running 0 3m 172.21.30.6 10.0.0.6

nginx2-v6t6n 1/1 Running 0 4m 172.21.14.4 10.0.0.7

nginx2-xdx9r 1/1 Running 0 3m 172.21.14.3 10.0.0.7

test 2/2 Running 0 41m 172.21.14.2 10.0.0.7

[root@k8s-master ~/k8s_yaml/RC]# curl -I 172.21.30.6

HTTP/1.1 200 OK

Server: nginx/1.15.5 [upgraded to 1.15]

2) One-step rollback

# Just run the upgrade the other way round

[root@k8s-master ~/k8s_yaml/RC]# kubectl rolling-update nginx2 -f k8s_rc.yaml --update-period=2s

3.5. Change the replica count (number of running pods)

# Method 1:

[root@k8s-master ~/k8s_yaml/svc]# kubectl edit rc nginx2

replicas: 3 [change the number here]

# Method 2:

[root@k8s-master ~/k8s_yaml/svc]# kubectl scale rc nginx2 --replicas=2

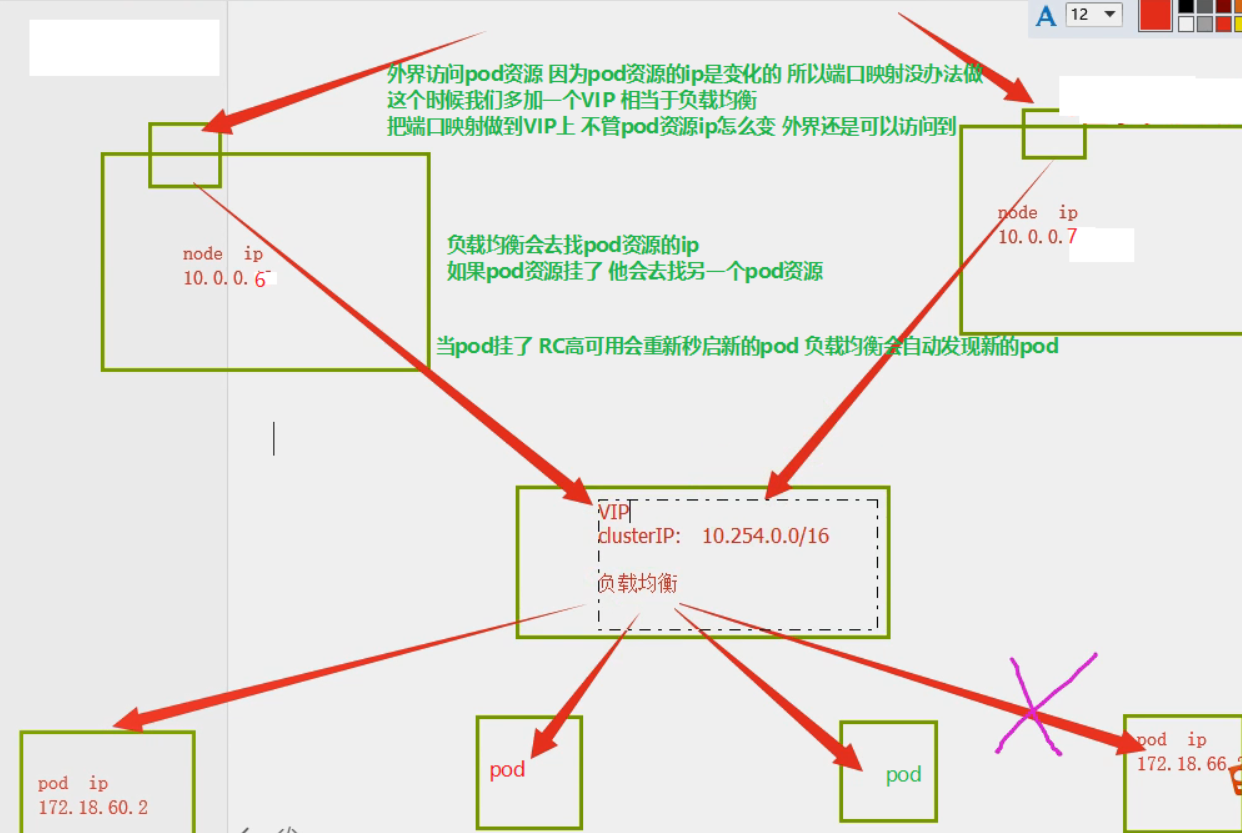

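4. service resource

A service exposes ports for pods and finds its pods through a label selector.

4.1. Why is the service resource needed?

The pods created so far all have IP addresses and can reach each other across hosts, but those subnets are not reachable from outside the cluster.

Docker solves this with port mapping. k8s, however, moves pods between nodes, so mapping a port on one host is not enough; something fixed and stable has to carry the port mapping.

4.2. service: k8s automatic service discovery and load balancing

4.3. Create a service

# 1. Create the configuration file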

[root@k8s-master ~/k8s_yaml]# mkdir svc

[root@k8s-master ~/k8s_yaml]# cd svc

[root@k8s-master ~/k8s_yaml/svc]# vim k8s_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort          # or ClusterIP
  ports:
    - port: 80            # port on the cluster IP
      nodePort: 30000     # port on every node (default range 30000-32767)
      targetPort: 80      # port on the pod
  selector:
    app: myweb2

# 2. Create the resource

[root@k8s-master ~/k8s_yaml/svc]# kubectl create -f k8s_svc.yaml

service "myweb" created

# 3. View the resource

[root@k8s-master ~/k8s_yaml/svc]# kubectl get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.254.0.1 <none> 443/TCP 1d <none>

myweb 10.254.44.139 <nodes> 80:30000/TCP 56s app=myweb2

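List all resources and compare the selectors of the rc and the svc. Every pod here is managed by the rc; for the svc to select those pods, its selector must match their labels.

SELECTOR: app=myweb2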

[root@k8s-master ~/k8s_yaml/svc]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/nginx2 5 5 5 1h myweb 10.0.0.5:5000/nginx:1.15 app=myweb2

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d <none>

svc/myweb 10.254.44.139 <nodes> 80:30000/TCP 6m app=myweb2

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx2-46kkp 1/1 Running 0 1h 172.21.14.5 10.0.0.7

po/nginx2-kfhf1 1/1 Running 0 1h 172.21.30.4 10.0.0.6

po/nginx2-tsbj3 1/1 Running 0 1h 172.21.30.6 10.0.0.6

po/nginx2-v6t6n 1/1 Running 0 1h 172.21.14.4 10.0.0.7

po/nginx2-xdx9r 1/1 Running 0 1h 172.21.14.3 10.0.0.7

po/test 2/2 Running 2 2h 172.21.14.2 10.0.0.7

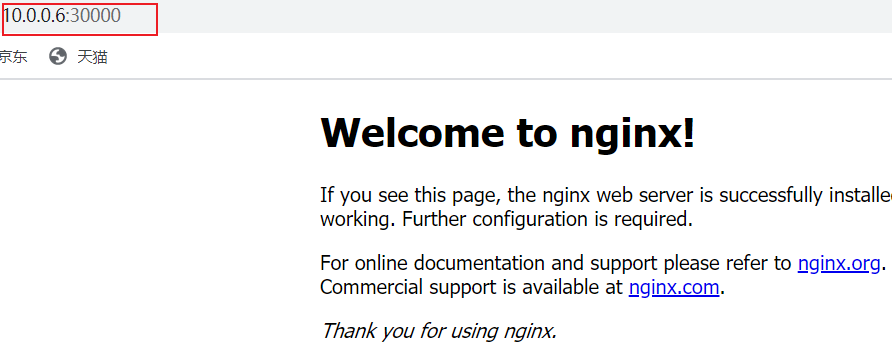

Access the web page

Enter a container and change the page to test

First delete some pods to make testing easier, leaving two

[root@k8s-master ~/k8s_yaml/svc]# kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx2-kfhf1 1/1 Running 0 1h 172.21.30.4 10.0.0.6

po/nginx2-v6t6n 1/1 Running 0 1h 172.21.14.4 10.0.0.7

# Enter the container

[root@k8s-master ~/k8s_yaml/svc]# kubectl exec -it nginx2-kfhf1 /bin/bash

root@nginx2-kfhf1:/# echo 'web01' >/usr/share/nginx/html/index.html

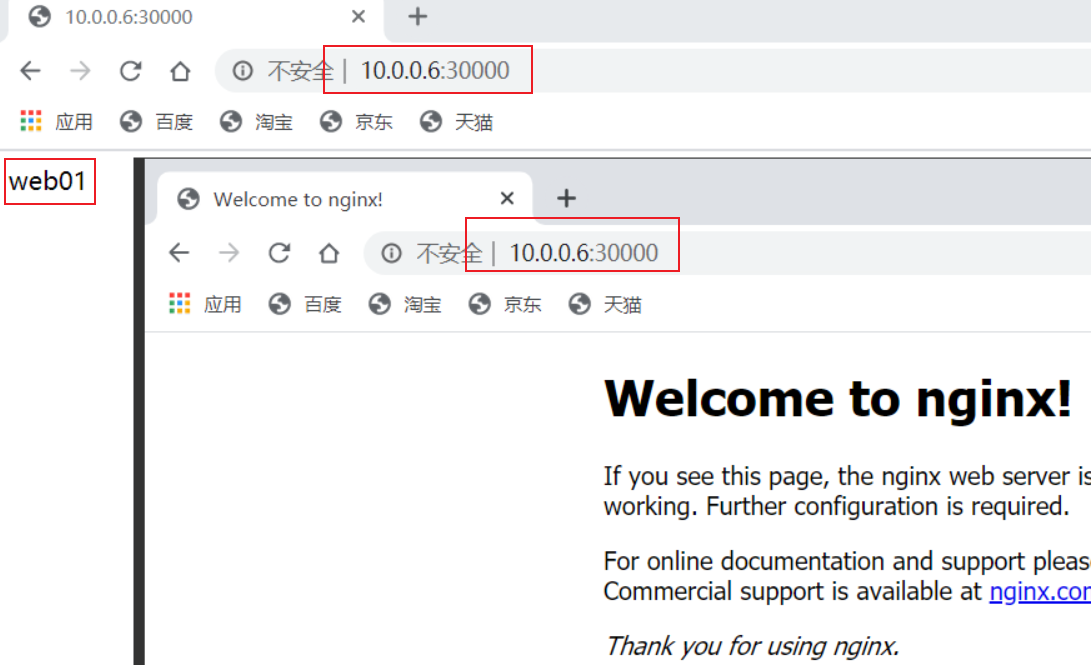

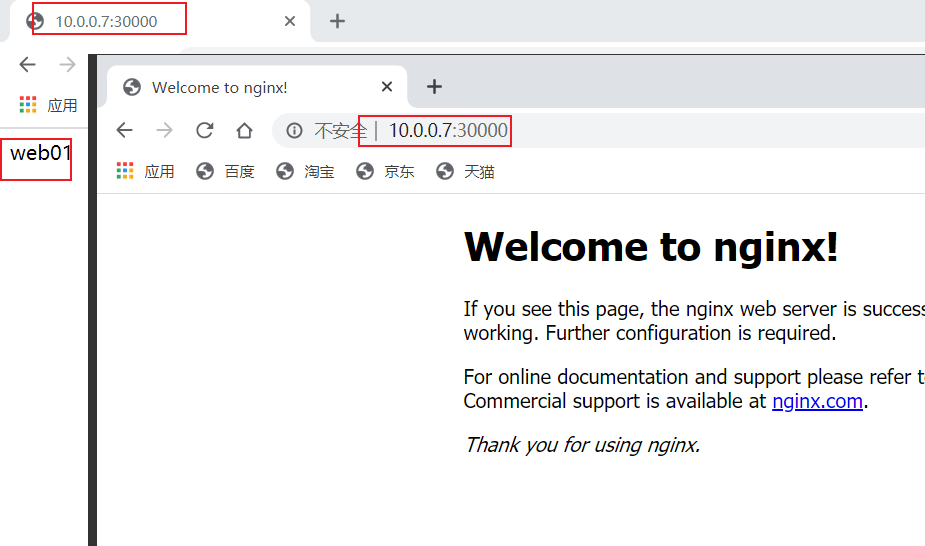

View the web page

Requests are load-balanced across the pods

View the details

Automatic service discovery

[root@k8s-master ~/k8s_yaml/svc]# kubectl describe svc myweb

Name: myweb

Namespace: default

Labels: <none>

Selector: app=myweb2

Type: NodePort

IP: 10.254.44.139

Port: <unset> 80/TCP

NodePort: <unset> 30000/TCP

Endpoints: 172.21.14.4:80,172.21.30.4:80 [a pod that comes up is added here automatically; a pod that dies is removed automatically]

Session Affinity: None

No events.
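The Endpoints line is what makes the service discovery automatic: it is recomputed from the pods that currently match the selector. The Python sketch below models that computation under simplifying assumptions. The dict layout with `ip`, `labels` and `ready` keys and the function name `endpoints` are hypothetical; Kubernetes itself tracks readiness in more detail.

```python
def endpoints(pods, selector, target_port):
    """Return 'ip:port' for every ready pod whose labels match the selector."""
    selected = [
        pod for pod in pods
        if pod["ready"]
        and all(pod["labels"].get(k) == v for k, v in selector.items())
    ]
    return sorted(f"{pod['ip']}:{target_port}" for pod in selected)


pods = [
    {"ip": "172.21.30.4", "labels": {"app": "myweb2"}, "ready": True},
    {"ip": "172.21.14.4", "labels": {"app": "myweb2"}, "ready": True},
    {"ip": "172.21.14.2", "labels": {"app": "web"}, "ready": True},
]
print(",".join(endpoints(pods, {"app": "myweb2"}, 80)))
```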

4.4. More on the svc resource

# Until now the node port range was the default 30000-32767

[root@k8s-master ~]# vim /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=3000-50000"

[root@k8s-master ~]# systemctl restart kube-apiserver.service
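To see what widening the range changes, the Python sketch below checks a candidate nodePort against a range written in the same `low-high` form as the `--service-node-port-range` value. It is an illustration only; `parse_range` and `node_port_allowed` are names invented here, and both bounds are treated as inclusive.

```python
def parse_range(spec):
    """Turn a 'low-high' string such as '30000-32767' into two integers."""
    low, high = spec.split("-")
    return int(low), int(high)


def node_port_allowed(port, spec="30000-32767"):
    """True if the port lies inside the range (both bounds inclusive)."""
    low, high = parse_range(spec)
    return low <= port <= high


print(node_port_allowed(8080))                 # against the default range
print(node_port_allowed(8080, "3000-50000"))   # against the widened range
```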

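By default a service implements load balancing with iptables (layer 4). From k8s 1.8 on, newer versions can use LVS (IPVS mode, also layer 4) instead, which is the recommended choice there.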

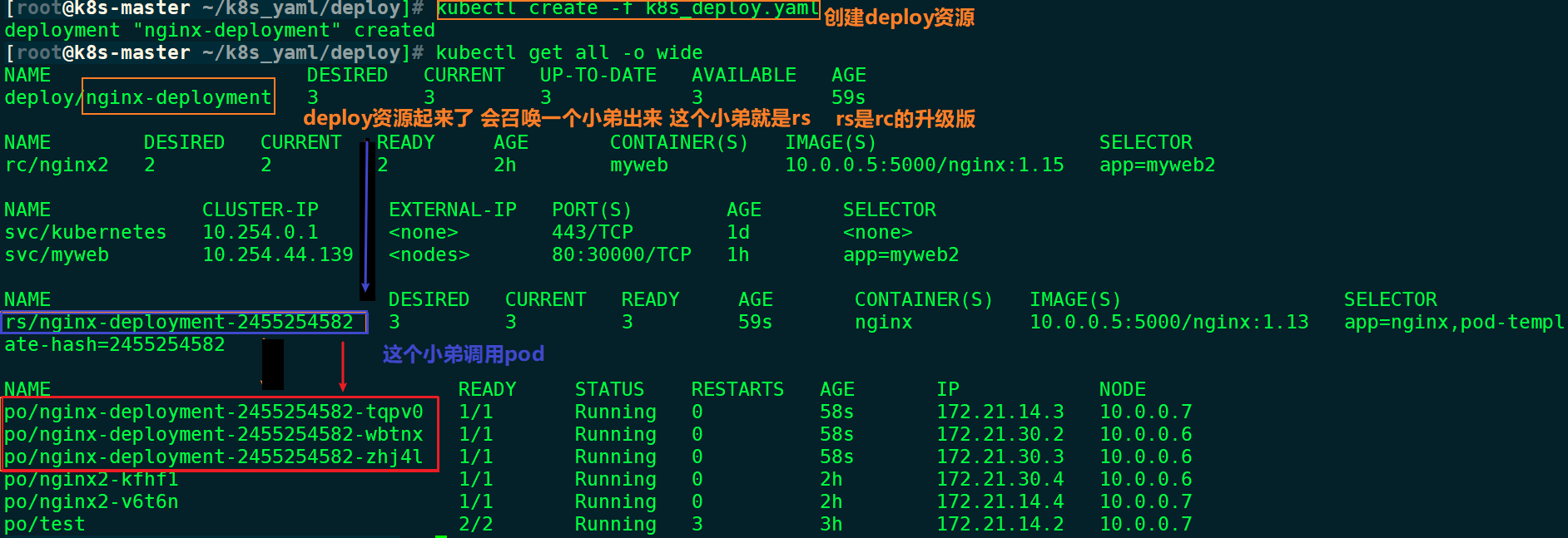

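5. deployment resource

After an rc rolling upgrade, access through the service is interrupted: the old and new rc must use different labels during upgrade and rollback, and once the labels differ the svc no longer selects the pods. k8s introduced the deployment resource to solve this.

5.1. Create a deployment

# 1. Create the configuration file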

[root@k8s-master ~/k8s_yaml]# mkdir deploy

[root@k8s-master ~/k8s_yaml]# cd deploy/

[root@k8s-master ~/k8s_yaml/deploy]# vim k8s_deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: 10.0.0.5:5000/nginx:1.13
          ports:
            - containerPort: 80
          resources:
            limits:
              cpu: 100m
            requests:
              cpu: 100m

# 2. Create the resource

[root@k8s-master ~/k8s_yaml/deploy]# kubectl create -f k8s_deploy.yaml

deployment "nginx-deployment" created

# 3. View all resources

# 4. Create a new svc

[root@k8s-master ~/k8s_yaml]# cp svc/k8s_svc.yaml deploy/

[root@k8s-master ~/k8s_yaml/deploy]# vim k8s_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-deployment
spec:
  type: NodePort          # or ClusterIP
  ports:
    - port: 80            # port on the cluster IP
      nodePort: 30001     # port on every node
      targetPort: 80      # port on the pod
  selector:
    app: nginx

# 5. View the svc

[root@k8s-master ~/k8s_yaml/deploy]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 1d

myweb 10.254.44.139 <nodes> 80:30000/TCP 1h

nginx-deployment 10.254.134.5 <nodes> 80:30001/TCP 38s

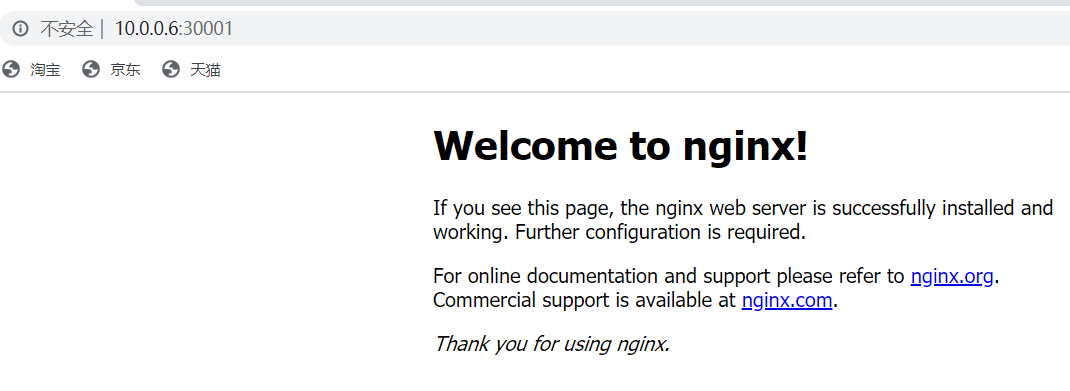

5.2. Access the web page

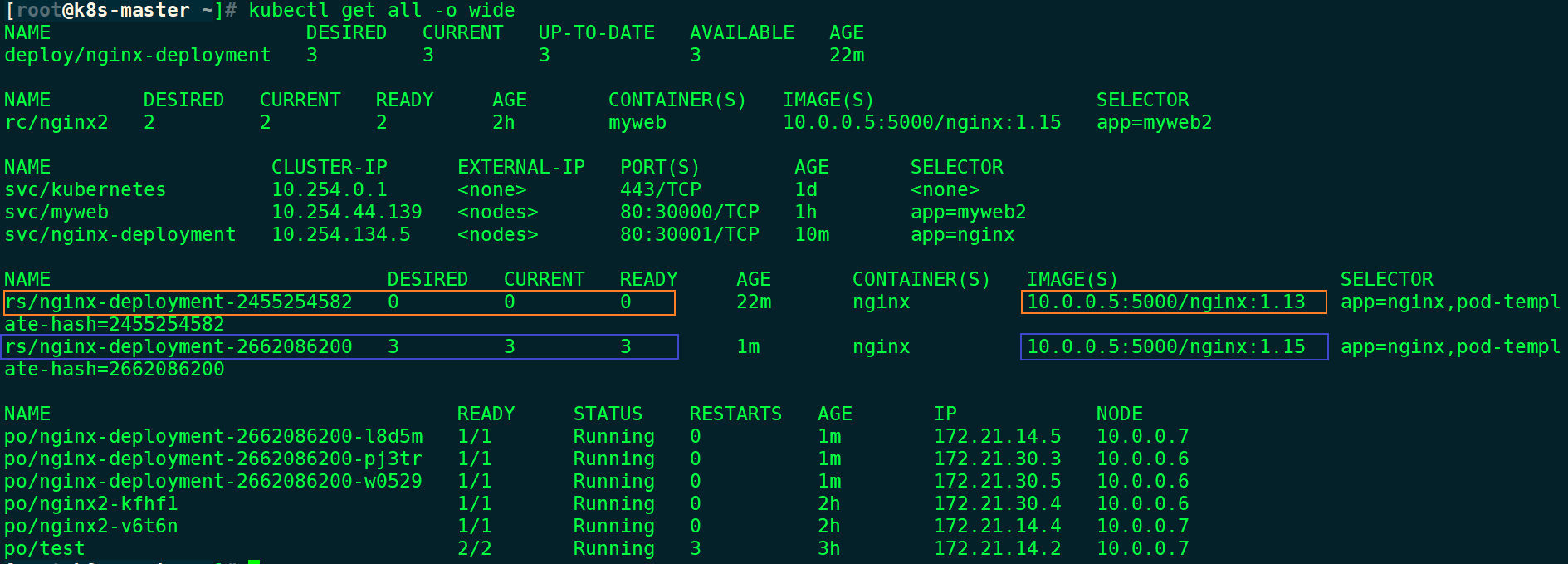

5.3. deployment: k8s rolling upgrade and rollback

Upgrade

[root@k8s-master ~]# kubectl edit deployment nginx-deployment

containers:

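- image: 10.0.0.5:5000/nginx:1.15 [change the tag to 1.15 right here]

The service stays available throughout, and the upgrade does not depend on any configuration file.

Rollback

# Method 1:

Edit the deployment again, the same way as for the upgrade

# Method 2:

Roll back from the command line

# List the existing revisions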

[root@k8s-master ~]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

1 <none>

2 <none>

# Roll back to the previous revision

[root@k8s-master ~]# kubectl rollout undo deployment nginx-deployment

# Roll back to a specific revision

[root@k8s-master ~]# kubectl rollout undo deployment nginx-deployment --to-revision=1

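5.4. Create a deployment from the command line, then upgrade and roll back

Creating, upgrading and rolling back from the command line leaves a record of the commands that were run

# Create a deployment from the command line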

[root@k8s-master ~]# kubectl run nginx --image=10.0.0.5:5000/nginx:1.13 --replicas=3 --record

# Upgrade the image from the command line

[root@k8s-master ~]# kubectl set image deploy nginx nginx=10.0.0.5:5000/nginx:1.15

# View the full revision history of the deployment, including the recorded commands

[root@k8s-master ~]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.5:5000/nginx:1.13 --replicas=3 --record

2 kubectl set image deploy nginx nginx=10.0.0.5:5000/nginx:1.15

# Roll the deployment back to the previous revision

[root@k8s-master ~]# kubectl rollout undo deployment nginx

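# View the full revision history of the deployment

# After a rollback, the revision that was rolled back to disappears from its old slot and is appended as the newest revision number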

[root@k8s-master ~]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

2 kubectl set image deploy nginx nginx=10.0.0.5:5000/nginx:1.15

3 kubectl run nginx --image=10.0.0.5:5000/nginx:1.13 --replicas=3 --record
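The renumbering seen in the two history listings can be modelled in a few lines. The Python sketch below is an illustration of that bookkeeping only, not kubectl's implementation. `undo` is a hypothetical helper, the history is represented as a list of `(revision, change_cause)` pairs in ascending order, and at least two revisions are assumed to exist.

```python
def undo(history, to_revision=None):
    """Model `rollout undo`: the target revision moves to a new, highest number.

    `history` is a list of (revision, change_cause) pairs in ascending order,
    with the last entry being the current revision.
    """
    revisions = dict(history)
    if to_revision is None:
        to_revision = history[-2][0]      # the revision before the current one
    cause = revisions.pop(to_revision)    # the old slot disappears
    revisions[history[-1][0] + 1] = cause  # and reappears as the newest one
    return sorted(revisions.items())


history = [(1, "kubectl run ... nginx:1.13"), (2, "kubectl set image ... nginx:1.15")]
for revision, cause in undo(history):
    print(revision, cause)
```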

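6. tomcat + mysql exercise

So far every example ran a single service, with no interaction between services.

If one VIP fronts the web pods and another VIP fronts the mysql pods, the web pod IPs can change and so can the mysql pod IPs. How do the two find each other?

6.1. Set up the test environment

# 1. Upload the test files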

[root@k8s-master ~/k8s_yaml]# mkdir tomcat_demo

[root@k8s-master ~/k8s_yaml]# cd tomcat_demo/

[root@k8s-master ~/k8s_yaml/tomcat_demo]# ll

-rw-r--r-- 1 root root 416 Aug 15 09:31 mysql-rc.yml

-rw-r--r-- 1 root root 145 Aug 15 09:31 mysql-svc.yml

-rw-r--r-- 1 root root 483 Aug 15 09:31 tomcat-rc.yml

-rw-r--r-- 1 root root 162 Aug 15 09:31 tomcat-svc.yml

# 2. Wipe all resources (test environment only: this deletes the etcd data)

[root@k8s-master ~]# rm -rf /var/lib/etcd/default.etcd/*

# 3. Restart all services

[root@k8s-master ~]# systemctl restart etcd.service

[root@k8s-master ~]# systemctl restart kube-apiserver.service

[root@k8s-master ~]# systemctl restart kube-controller-manager.service

[root@k8s-master ~]# systemctl restart kube-scheduler.service

[root@k8s-node-1 ~]# systemctl restart kubelet.service

[root@k8s-node-1 ~]# systemctl restart kube-proxy.service

[root@k8s-node-2 ~]# systemctl restart kubelet.service

[root@k8s-node-2 ~]# systemctl restart kube-proxy.service

# 3. View the resources

[root@k8s-master ~]# kubectl get all -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 2m <none>

[root@k8s-master ~]# kubectl get nodes

NAME STATUS AGE

10.0.0.6 Ready 2m

10.0.0.7 Ready 1m

[root@k8s-master ~]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true"}

controller-manager Healthy ok

scheduler Healthy ok

# 4. Restore the flannel network [flannel also stores its data in the etcd database]

[root@k8s-master ~]# etcdctl mk /atomic.io/network/config '{ "Network": "172.16.0.0/16" }'

## Run these restarts in every terminal window (all hosts)

systemctl restart flanneld

systemctl restart docker

# 5. Edit the configuration files

[root@k8s-master ~/k8s_yaml/tomcat_demo]# vim mysql-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1            # one replica is enough, so the data stays in a single instance
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: 10.0.0.5:5000/mysql:5.7
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: '123456'

[root@k8s-master ~/k8s_yaml/tomcat_demo]# vim tomcat-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 1
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
        - name: myweb
          image: 10.0.0.5:5000/tomcat-app:v2
          ports:
            - containerPort: 8080
          env:
            - name: MYSQL_SERVICE_HOST
              value: 'mysql'
            - name: MYSQL_SERVICE_PORT
              value: '3306'

# 6. Push the images to the private registry

[root@k8s-master ~]# ll

-rw-r--r-- 1 root root 392823296 Aug 15 09:36 docker-mysql-5.7.tar.gz

-rw-r--r-- 1 root root 369691136 Aug 15 09:35 tomcat-app-v2.tar.gz

[root@k8s-master ~]# docker load -i docker-mysql-5.7.tar.gz

Loaded image: docker.io/mysql:5.7

[root@k8s-master ~]# docker tag docker.io/mysql:5.7 10.0.0.5:5000/mysql:5.7

[root@k8s-master ~]# docker push 10.0.0.5:5000/mysql:5.7

[root@k8s-master ~]# docker load -i tomcat-app-v2.tar.gz

Loaded image: docker.io/kubeguide/tomcat-app:v2

[root@k8s-master ~]# docker tag docker.io/kubeguide/tomcat-app:v2 10.0.0.5:5000/tomcat-app:v2

[root@k8s-master ~]# docker push 10.0.0.5:5000/tomcat-app:v2

# 7. Create the resource

[root@k8s-master ~/k8s_yaml/tomcat_demo]# kubectl create -f mysql-rc.yml

# 8. Expose MySQL through a service: write the mysql svc file

[root@k8s-master ~/k8s_yaml/tomcat_demo]# vim mysql-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
    - port: 3306          # port on the VIP (cluster IP)
      targetPort: 3306    # port on the pod
  selector:
    app: mysql

# 9. Create the resource

[root@k8s-master ~/k8s_yaml/tomcat_demo]# kubectl create -f mysql-svc.yml

# 10. View all resources

[root@k8s-master ~/k8s_yaml/tomcat_demo]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 5m mysql 10.0.0.5:5000/mysql:5.7 app=mysql

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 38m <none>

svc/mysql 10.254.137.230 <none> 3306/TCP 1m app=mysql

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-lnhjq 1/1 Running 0 5m 172.16.66.2 10.0.0.7

# 11. Edit the tomcat configuration file; set MYSQL_SERVICE_HOST to the cluster IP of the mysql svc

[root@k8s-master ~/k8s_yaml/tomcat_demo]# vim tomcat-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 1
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
        - name: myweb
          image: 10.0.0.5:5000/tomcat-app:v2
          ports:
            - containerPort: 8080
          env:
            - name: MYSQL_SERVICE_HOST
              value: '10.254.137.230'
            - name: MYSQL_SERVICE_PORT
              value: '3306'
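The two variable names in this manifest follow the naming scheme Kubernetes itself uses when it injects service environment variables into pods created after a service exists: the service name in upper case, with dashes turned into underscores, followed by `_SERVICE_HOST` and `_SERVICE_PORT`. The Python sketch below derives those names; `service_env` is a hypothetical helper written for this illustration, and the manifest above sets the values by hand rather than relying on injection.

```python
def service_env(name, cluster_ip, port):
    """Build the {NAME}_SERVICE_HOST / {NAME}_SERVICE_PORT pair for a service."""
    prefix = name.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


# Values taken from the mysql svc listed earlier in this section.
for key, value in service_env("mysql", "10.254.137.230", 3306).items():
    print(f"{key}={value}")
```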

# 12. Create the resource

[root@k8s-master ~/k8s_yaml/tomcat_demo]# kubectl create -f tomcat-rc.yml

# 13. Write the tomcat svc file and create the resource

[root@k8s-master ~/k8s_yaml/tomcat_demo]# vim tomcat-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
    - port: 8080
      nodePort: 30008
  selector:
    app: myweb

[root@k8s-master ~/k8s_yaml/tomcat_demo]# kubectl create -f tomcat-svc.yml

# 14. View all resources

[root@k8s-master ~/k8s_yaml/tomcat_demo]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 14m mysql 10.0.0.5:5000/mysql:5.7 app=mysql

rc/myweb 1 1 1 2m myweb 10.0.0.5:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 46m <none>

svc/mysql 10.254.137.230 <none> 3306/TCP 10m app=mysql

svc/myweb 10.254.168.24 <nodes> 8080:30008/TCP 49s app=myweb

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-lnhjq 1/1 Running 0 14m 172.16.66.2 10.0.0.7

po/myweb-d66r1 1/1 Running 0 2m 172.16.65.2 10.0.0.6

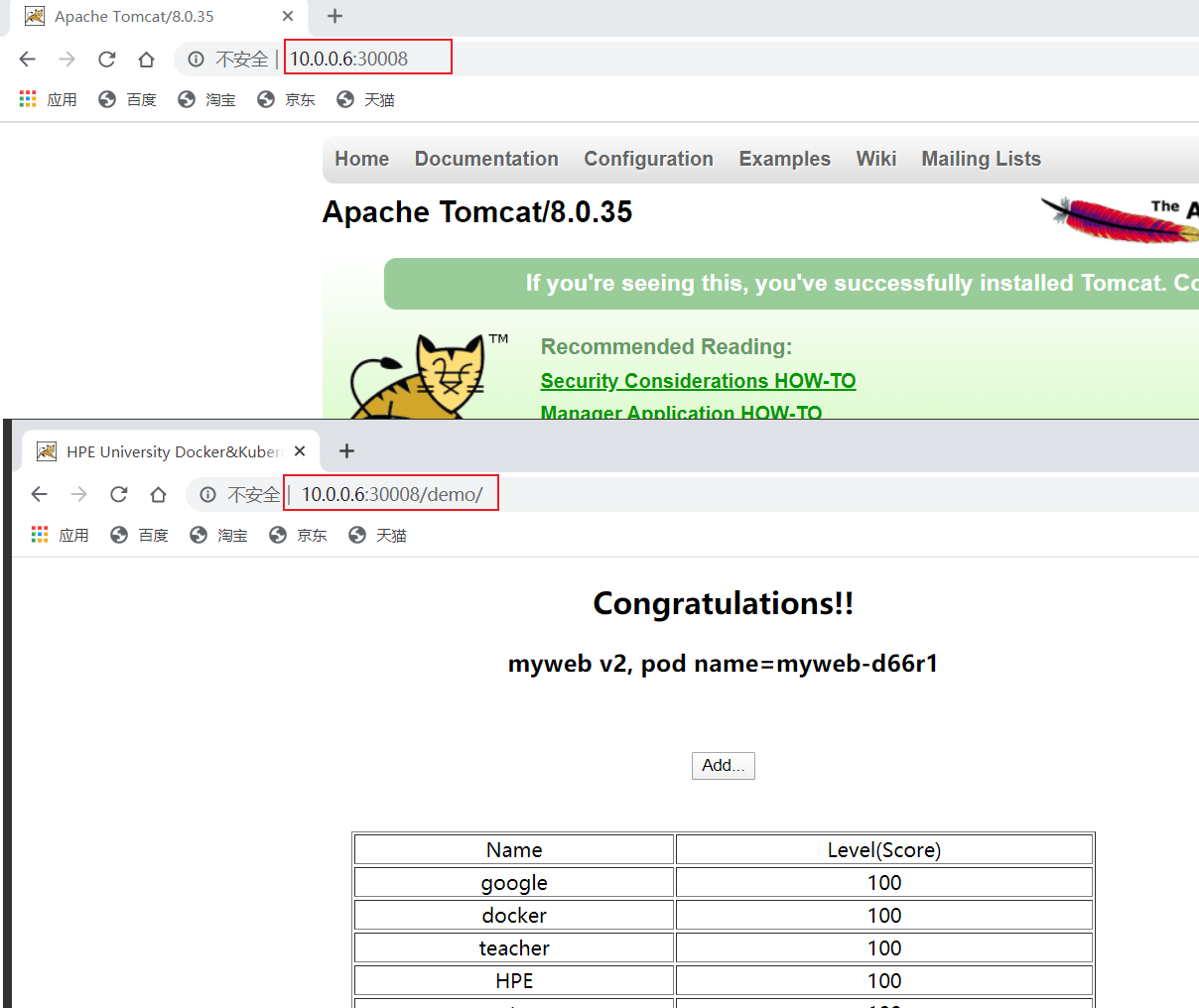

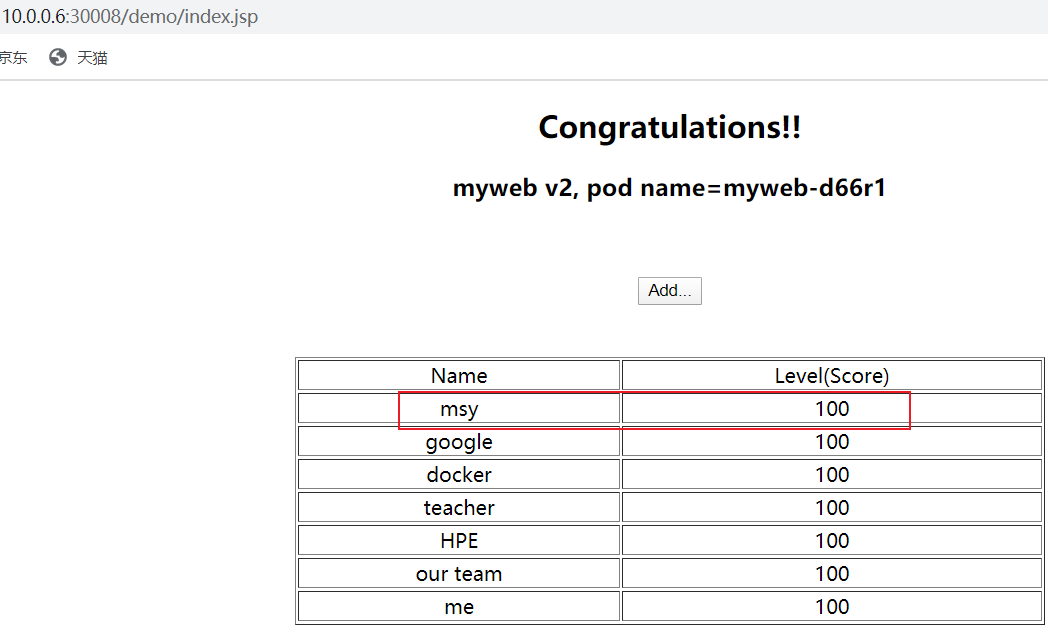

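6.2. Access the web page

6.3. Insert data through the web page and check it from the VM

Note: myweb-d66r1 shown on the web page is the pod name that appears when listing all resources on the VM

# Enter the container and check whether the inserted row is there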

[root@k8s-master ~/k8s_yaml/tomcat_demo]# kubectl exec -it mysql-lnhjq /bin/bash

root@mysql-lnhjq:/# mysql -uroot -p123456

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| HPE_APP |

| mysql |

| performance_schema |

| sys |

+--------------------+

mysql> use HPE_APP

mysql> show tables;

+-------------------+

| Tables_in_HPE_APP |

+-------------------+

| T_USERS |

+-------------------+

mysql> select * from T_USERS;

+----+-----------+-------+

| ID | USER_NAME | LEVEL |

+----+-----------+-------+

| 1 | me | 100 |

| 2 | our team | 100 |

| 3 | HPE | 100 |

| 4 | teacher | 100 |

| 5 | docker | 100 |

| 6 | google | 100 |

| 7 | msy | 100 | #the msy / 100 row I added on the web page earlier

+----+-----------+-------+

This experiment shows that the web page and the database communicate correctly, and that access from outside the cluster works.
