Hadoop Usage Examples

I. Word Count

1. Download an e-book you like, or any large body of text, and save it to a local text file

wget http://www.gutenberg.org/files/1342/1342-0.txt

2. Write the map and reduce functions

#mapper.py

#!/usr/bin/env python
import sys

# Read lines from standard input and emit one "word<TAB>1" pair per word.
for line in sys.stdin:
    line = line.strip()
    words = line.split()
    for word in words:
        print("%s\t%s" % (word, 1))

#reducer.py

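#!/usr/bin/env python
import sys

current_word = None
current_count = 0
word = None

# Input must arrive sorted by key so that all counts for a word are adjacent.
for line in sys.stdin:
    line = line.strip()
    # Each input line is "word<TAB>count", as emitted by mapper.py.
    word, count = line.split('\t', 1)
    try:
        count = int(count)
    except ValueError:
        # Skip lines whose count is not a number.
        continue
    if current_word == word:
        current_count += count
    else:
        if current_word:
            # The word changed, so emit the finished total.
            print("%s\t%s" % (current_word, current_count))
        current_count = count
        current_word = word

# Emit the total for the last word (nothing is printed for empty input).
if current_word:
    print("%s\t%s" % (current_word, current_count))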

3. Test map and reduce locally

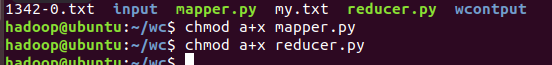

# Grant execute permission
ls -l
chmod a+x mapper.py
chmod a+x reducer.py
ls -l

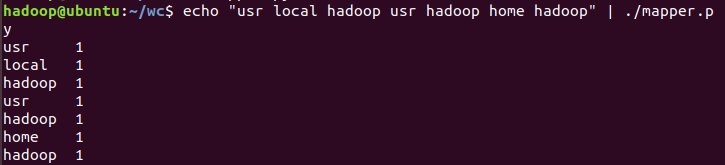

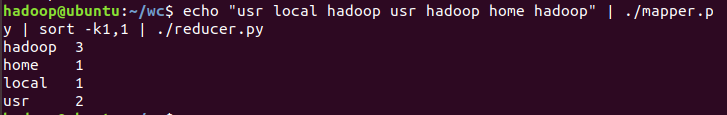

echo "usr local hadoop user hadoop home hadoop" | ./mapper.py
echo "usr local hadoop user hadoop home hadoop" | ./mapper.py | ./reducer.py
echo "usr local hadoop user hadoop home hadoop" | ./mapper.py | sort -k1,1 | ./reducer.py

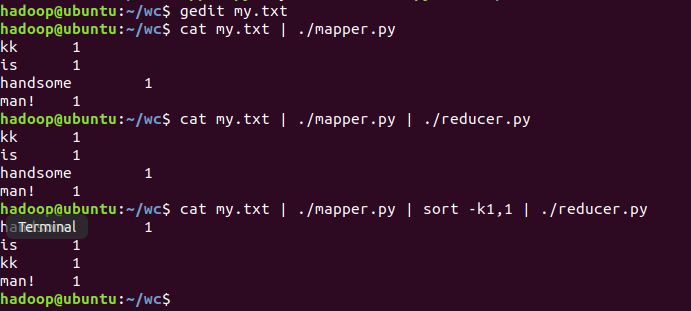

cat my.txt | ./mapper.py
cat my.txt | ./mapper.py | ./reducer.py
cat my.txt | ./mapper.py | sort -k1,1 | ./reducer.py
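The pipelines with and without `sort -k1,1` give different results because reducer.py only merges counts for adjacent identical words. The following is a minimal in-memory sketch of the same map, sort, reduce flow; the function names `map_words` and `reduce_counts` are illustrative and do not appear in the original scripts.

```python
from itertools import groupby
from operator import itemgetter


def map_words(lines):
    """Emit (word, 1) pairs, mirroring what mapper.py prints."""
    for line in lines:
        for word in line.split():
            yield word, 1


def reduce_counts(pairs):
    """Sum counts over runs of adjacent identical words, like reducer.py."""
    for word, group in groupby(pairs, key=itemgetter(0)):
        yield word, sum(count for _, count in group)


text = ["usr local hadoop user hadoop home hadoop"]

# Without sorting, "hadoop" forms three separate runs and is emitted three times.
print(list(reduce_counts(map_words(text))))

# Sorting by key plays the role of `sort -k1,1` locally and of the shuffle on Hadoop.
print(list(reduce_counts(sorted(map_words(text), key=itemgetter(0)))))
```

On a cluster, Hadoop sorts the mapper output by key before it reaches the reducer, which is why the explicit `sort` is only needed for local testing.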

4. Upload the text data to HDFS

start-dfs.sh
start-yarn.sh
jps
hdfs dfs -mkdir -p /input
hdfs dfs -put *.txt /input
hdfs dfs -ls /input
hdfs dfs -du /input

5. Submit the job with Hadoop Streaming

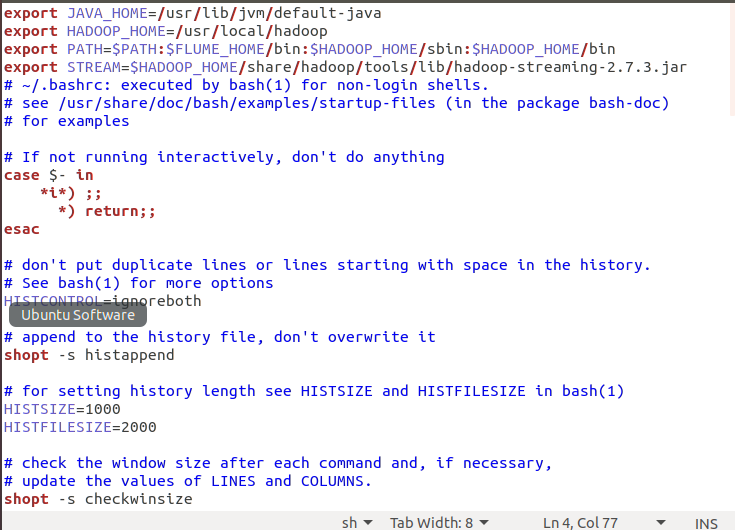

Configure ~/.bashrc

export STREAM=$HADOOP_HOME/share/hadoop/tools/lib/hadoop-streaming-2.7.3.jar
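With `STREAM` pointing at the streaming jar, a job is typically submitted with `hadoop jar $STREAM ...`. The exact command used in this walkthrough is only visible in the screenshot, so the following is a sketch under assumptions: the `/output` directory and the helper name `build_streaming_command` are hypothetical, while `/input` is the directory from step 4.

```python
import os


def build_streaming_command(stream_jar, input_path, output_path):
    """Build the argument list for a Hadoop Streaming word-count job."""
    return [
        "hadoop", "jar", stream_jar,
        # -file ships a local script to the cluster nodes
        # (newer releases prefer the generic -files option).
        "-file", "mapper.py", "-mapper", "mapper.py",
        "-file", "reducer.py", "-reducer", "reducer.py",
        "-input", input_path,
        "-output", output_path,  # hypothetical path; must not exist yet
    ]


# STREAM is the variable exported in ~/.bashrc; the fallback is only a placeholder.
jar = os.environ.get("STREAM", "hadoop-streaming-2.7.3.jar")
cmd = build_streaming_command(jar, "/input", "/output")
print(" ".join(cmd))

# To actually submit the job:
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Building the command as a list keeps each argument separate, so paths containing spaces do not need shell quoting.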

II. Weather Data Analysis

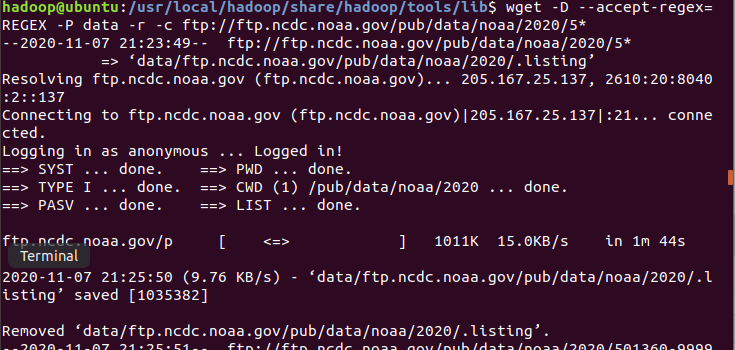

1. Batch-download the weather data

wget -r -c -P data ftp://ftp.ncdc.noaa.gov/pub/data/noaa/2020/5*

2. Decompress the dataset and save it to a local text file

ls -l data/ftp.ncdc.noaa.gov/pub/data/noaa/2020
zcat data/ftp.ncdc.noaa.gov/pub/data/noaa/2020/5*.gz > qxdata.txt

3. Upload the weather data to HDFS

hdfs dfs -mkdir /wether
hdfs dfs -put data /wether
hdfs dfs -ls /wether
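Once the records are in HDFS, the analysis can reuse the streaming pattern from Part I. This walkthrough stops at the upload step, so the following is only a sketch under assumptions: it assumes the files use NOAA's fixed-width ISD record layout, with the year at characters 16-19, the air temperature in tenths of a degree Celsius at characters 88-92 (`+9999` meaning missing), and its quality code at character 93. The names `parse_record` and `map_lines` are hypothetical.

```python
import re

MISSING = "+9999"  # assumed ISD marker for a missing temperature


def parse_record(line):
    """Return (year, temperature in tenths of a degree C), or None if unusable."""
    if len(line) < 93:
        return None
    year, temp, quality = line[15:19], line[87:92], line[92:93]
    # Keep only readings whose quality code marks them as usable.
    if temp == MISSING or not re.match("[01459]", quality):
        return None
    return year, int(temp)


def map_lines(lines):
    """Yield "year<TAB>temperature" strings for every valid record."""
    for raw in lines:
        parsed = parse_record(raw.rstrip("\n"))
        if parsed:
            yield "%s\t%d" % parsed


# In a streaming mapper script this would be driven by standard input:
#   import sys
#   for out in map_lines(sys.stdin):
#       print(out)
```

A matching reducer would keep the maximum (or average) temperature per year instead of summing counts, in the same way reducer.py aggregates per word.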

