AI in Practice 2019, Assignment 5, Jiao Yuheng (焦宇恒), 16721088

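| Item | Content |
|---|---|
| Course this assignment belongs to | AI in Practice 2019 |
| Assignment requirements | Logic AND and OR gates |
| How this assignment helps me reach my goals | Binary classification with a neural network |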

Training samples for the logic AND gate

| X1 | X2 | Y |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 0 |
| 1 | 0 | 0 |
| 1 | 1 | 1 |

Training samples for the logic OR gate

| X1 | X2 | Y |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 1 |
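
The two truth tables above can be written as NumPy arrays with one column per sample, which is the (features, samples) layout that the program's `XData` uses. The following is a minimal sketch; the names `X`, `Y_and` and `Y_or` are chosen here for illustration and do not appear in the original code.

```python
import numpy as np

# Truth-table inputs, one column per sample: (0,0), (0,1), (1,0), (1,1)
X = np.array([[0, 0, 1, 1],   # X1
              [0, 1, 0, 1]])  # X2

# Labels derived from the inputs, shaped (1, 4) like the program's YData
Y_and = np.logical_and(X[0], X[1]).astype(int).reshape(1, 4)
Y_or = np.logical_or(X[0], X[1]).astype(int).reshape(1, 4)

print(Y_and)  # [[0 0 0 1]]
print(Y_or)   # [[0 1 1 1]]
```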

Experiment code

To keep this post readable, the code of some functions is omitted.

    import numpy as np
    import matplotlib.pyplot as plt
    from pathlib import Path
    import math

    # Logistic (sigmoid) function
    def Sigmoid(x):
        s = 1 / (1 + np.exp(-x))
        return s

    # Forward calculation
    def ForwardCalculationBatch(W, B, batch_X):
        Z = np.dot(W, batch_X) + B
        A = Sigmoid(Z)
        return A

    # Compute the loss value
    def CheckLoss(W, B, X, Y):
        m = X.shape[1]
        A = ForwardCalculationBatch(W, B, X)
        p1 = 1 - Y
        p2 = np.log(1 - A)
        p3 = np.log(A)
        p4 = np.multiply(p1, p2)
        p5 = np.multiply(Y, p3)
        LOSS = np.sum(-(p4 + p5))  # binary cross-entropy
        loss = LOSS / m
        return loss
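
`CheckLoss` evaluates `np.log(A)` and `np.log(1 - A)` directly, so a fully saturated output (exactly 0 or 1) can produce `inf` or `nan`, which is the case the training loop guards against with `math.isnan`. One common way to avoid this is to clip the activations before taking the logarithm. The sketch below is not part of the original program; the function name `safe_cross_entropy` and the `eps` value are assumptions made for illustration.

```python
import numpy as np

def safe_cross_entropy(A, Y, eps=1e-12):
    """Binary cross-entropy averaged over samples (columns).

    A is clipped into [eps, 1 - eps] so that log() never sees 0.
    eps is an illustrative choice, not a value from the original code.
    """
    A = np.clip(A, eps, 1.0 - eps)
    m = Y.shape[1]
    return float(-np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A)) / m)

# Two saturated predictions and one uncertain prediction
A = np.array([[0.0, 1.0, 0.5]])
Y = np.array([[0, 1, 1]])
loss = safe_cross_entropy(A, Y)
print(loss)
```

Only the third sample contributes noticeably here, so the result is close to `ln(2) / 3`.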

    # Backward calculation
    # batch_X: input samples, batch_Y: label samples, A: predicted values
    def BackPropagationBatch(batch_X, batch_Y, A):
        m = batch_X.shape[1]
        dZ = A - batch_Y
        # Sum dZ across columns, i.e. add up all elements within each row
        dB = dZ.sum(axis=1, keepdims=True) / m
        dW = np.dot(dZ, batch_X.T) / m
        return dW, dB
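
The expression `dZ = A - batch_Y` follows from combining the cross-entropy loss in `CheckLoss` with the derivative of the sigmoid. A short derivation is given below, written per sample; the lowercase symbols $x_i$, $z_i$, $a_i$, $y_i$ and the loss symbol $J$ are notation introduced here for illustration.

$$
\begin{aligned}
z_i &= W x_i + B, \qquad a_i = \sigma(z_i) = \frac{1}{1 + e^{-z_i}} \\
J &= -\frac{1}{m} \sum_{i=1}^{m} \left[ y_i \ln a_i + (1 - y_i) \ln (1 - a_i) \right] \\
\frac{\partial J}{\partial a_i} &= \frac{1}{m} \cdot \frac{a_i - y_i}{a_i (1 - a_i)}, \qquad \frac{\partial a_i}{\partial z_i} = a_i (1 - a_i) \\
\frac{\partial J}{\partial z_i} &= \frac{1}{m} (a_i - y_i) \\
\frac{\partial J}{\partial W} &= \frac{1}{m} \sum_{i=1}^{m} (a_i - y_i)\, x_i^{\top}, \qquad \frac{\partial J}{\partial B} = \frac{1}{m} \sum_{i=1}^{m} (a_i - y_i)
\end{aligned}
$$

In the code, `dZ` holds $a_i - y_i$ without the $1/m$ factor, and the division by `m` is applied when `dW` and `dB` are formed.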

    # Update the weight parameters
    def UpdateWeights(W, B, dW, dB, eta):
        W = W - eta * dW
        B = B - eta * dB
        return W, B

    # Inference function
    def Inference(W, B, X_norm, xt):
        xt_normalized = NormalizePredicateData(xt, X_norm)
        A = ForwardCalculationBatch(W, B, xt_normalized)
        return A, xt_normalized

    def train(method, X, Y, ForwardCalculationBatch, CheckLoss):
        num_example = X.shape[1]
        num_feature = X.shape[0]
        num_category = Y.shape[0]
        # hyper parameters
        eta, max_epoch, batch_size = InitializeHyperParameters(method, num_example)
        # W(num_category, num_feature), B(num_category, 1)
        W, B = InitialWeights(num_feature, num_category, "zero")
        # calculate loss to decide the stop condition
        loss = 5        # initialize loss (larger than 0)
        error = 1e-3    # stop condition
        dict_loss = {}  # loss history
        # if num_example=200, batch_size=10, then iteration=200/10=20
        max_iteration = num_example // batch_size
        for epoch in range(max_epoch):
            print("epoch=%d" % epoch)
            for iteration in range(max_iteration):
                # get x and y values for one batch
                batch_x, batch_y = GetBatchSamples(X, Y, batch_size, iteration)
                # get the activation a from x
                batch_a = ForwardCalculationBatch(W, B, batch_x)
                # calculate gradient of w and b
                dW, dB = BackPropagationBatch(batch_x, batch_y, batch_a)
                # update w,b
                W, B = UpdateWeights(W, B, dW, dB, eta)
                # calculate loss over the whole training set
                loss = CheckLoss(W, B, X, Y)
                if method == "SGD":
                    if iteration % 10 == 0:
                        print(epoch, iteration, loss, W, B)
                    # end if
                else:
                    print(epoch, iteration, loss, W, B)
                # end if
                dict_loss[loss] = CData(loss, W, B, epoch, iteration)
            # end for
            if math.isnan(loss):
                break
            if loss < error:
                break
        # end for
        # Assumption: the tail of this function is not shown in the post.
        # The main program unpacks two values from train(), so the final
        # W and B are returned here.
        return W, B

    # Main program
    if __name__ == '__main__':
        # SGD, MiniBatch, FullBatch
        method = "SGD"
        # Which gate to train: "and" or "or".
        # Assumption: `logic` is never assigned in the original listing.
        logic = "and"
        # read data
        XData = np.array([0, 0, 1, 1, 0, 1, 0, 1]).reshape(2, 4)
        if logic == "and":
            YData = np.array([0, 0, 0, 1]).reshape(1, 4)
        elif logic == "or":
            YData = np.array([0, 1, 1, 1]).reshape(1, 4)
        X, X_norm = NormalizeData(XData)
        ShowData(XData, YData)
        Y = ToBool(YData)
        W, B = train(method, X, Y, ForwardCalculationBatch, CheckLoss)
        print("W=", W)
        print("B=", B)
        xt = np.array([5, 1, 6, 9, 5, 5]).reshape(2, 3, order='F')
        result, xt_norm = Inference(W, B, X_norm, xt)
        print("result=", result)
        print(np.around(result))
        ShowResult(X, YData, W, B, xt_norm)
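
Because several helper functions are omitted from the listing, it cannot be run as shown. The following is a self-contained sketch of the same idea: a single sigmoid unit trained on the four truth-table samples. It is not the author's program. It uses plain full-batch gradient descent without normalization, and the function name `train_gate`, the learning rate `eta=0.5` and `max_epoch=5000` are assumptions chosen for illustration, since the values returned by `InitializeHyperParameters` are not shown.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_gate(X, Y, eta=0.5, max_epoch=5000):
    """Full-batch gradient descent for one sigmoid unit.

    X has shape (features, samples), Y has shape (1, samples).
    eta and max_epoch are illustrative values, not the original ones.
    """
    m = X.shape[1]
    W = np.zeros((Y.shape[0], X.shape[0]))  # zero initialization
    B = np.zeros((Y.shape[0], 1))
    for _ in range(max_epoch):
        A = sigmoid(np.dot(W, X) + B)                     # forward pass
        dZ = A - Y                                        # cross-entropy gradient w.r.t. Z
        W = W - eta * np.dot(dZ, X.T) / m                 # update weights
        B = B - eta * dZ.sum(axis=1, keepdims=True) / m   # update bias
    return W, B

X = np.array([0, 0, 1, 1, 0, 1, 0, 1]).reshape(2, 4)
Y_and = np.array([0, 0, 0, 1]).reshape(1, 4)
Y_or = np.array([0, 1, 1, 1]).reshape(1, 4)

W_and, B_and = train_gate(X, Y_and)
W_or, B_or = train_gate(X, Y_or)

# Round the sigmoid outputs to get 0/1 predictions for the four inputs
pred_and = np.around(sigmoid(np.dot(W_and, X) + B_and))
pred_or = np.around(sigmoid(np.dot(W_or, X) + B_or))
print("AND:", pred_and)
print("OR: ", pred_or)
```

Both gates are linearly separable, so a single unit is enough; the learned weights differ from run settings to run settings, but the rounded predictions should reproduce the two truth tables.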

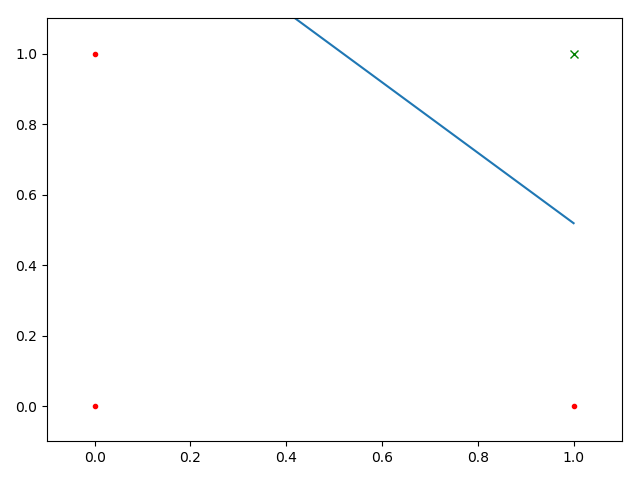

Results for the logic AND gate

    epoch=4999, iteration=3, loss=0.008636
    W= [[8.83112606 8.82986228]]
    B= [[-13.41376608]]
    result= [[1. 1. 1.]]
    [[1. 1. 1.]]

Results for the logic OR gate

    epoch=4999, iteration=3, loss=0.004591
    W= [[10.07943175 10.08022559]]
    B= [[-4.57788412]]
    result= [[1. 1. 1.]]
    [[1. 1. 1.]]
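
The test points passed to `Inference` in the program are not truth-table inputs, so the printed `result` lines do not show directly whether the gates were learned. A quick check is to apply the reported `W` and `B` to the four truth-table inputs. The sketch below does that; it assumes the omitted `NormalizeData` is a min-max scaling, which would leave inputs that already span 0 to 1 unchanged.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Truth-table inputs, one column per sample: (0,0), (0,1), (1,0), (1,1)
X = np.array([0, 0, 1, 1, 0, 1, 0, 1]).reshape(2, 4)

# Weights and biases copied from the reported results
W_and = np.array([[8.83112606, 8.82986228]])
B_and = np.array([[-13.41376608]])
W_or = np.array([[10.07943175, 10.08022559]])
B_or = np.array([[-4.57788412]])

out_and = np.around(sigmoid(np.dot(W_and, X) + B_and))
out_or = np.around(sigmoid(np.dot(W_or, X) + B_or))
print(out_and)  # [[0. 0. 0. 1.]]
print(out_or)   # [[0. 1. 1. 1.]]
```

Under that assumption, the reported parameters reproduce both truth tables.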