Fixing the onnxruntime error FAIL : Failed to load library libonnxruntime_providers_cuda.so with error: libcublasLt.so.12: cannot open shared object file: No such file or directory

2024-09-07 14:23:35.189200391 [E:onnxruntime:Default, provider_bridge_ort.cc:1978 TryGetProviderInfo_TensorRT] /onnxruntime_src/onnxruntime/core/session/provider_bridge_ort.cc:1637 onnxruntime::Provider& onnxruntime::ProviderLibrary::Get() [ONNXRuntimeError] : 1 : FAIL : Failed to load library libonnxruntime_providers_tensorrt.so with error: libcublas.so.12: cannot open shared object file: No such file or directory

*************** EP Error ***************

EP Error /onnxruntime_src/onnxruntime/python/onnxruntime_pybind_state.cc:490 void onnxruntime::python::RegisterTensorRTPluginsAsCustomOps(PySessionOptions&, const onnxruntime::ProviderOptions&) Please install TensorRT libraries as mentioned in the GPU requirements page, make sure they're in the PATH or LD_LIBRARY_PATH, and that your GPU is supported.

when using ['TensorrtExecutionProvider', 'CUDAExecutionProvider', 'CPUExecutionProvider']

Falling back to ['CUDAExecutionProvider', 'CPUExecutionProvider'] and retrying.

2024-09-07 14:23:35.282705908 [E:onnxruntime:Default, provider_bridge_ort.cc:1992 TryGetProviderInfo_CUDA] /onnxruntime_src/onnxruntime/core/session/provider_bridge_ort.cc:1637 onnxruntime::Provider& onnxruntime::ProviderLibrary::Get() [ONNXRuntimeError] : 1 : FAIL : Failed to load library libonnxruntime_providers_cuda.so with error: libcublasLt.so.12: cannot open shared object file: No such file or directory
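Both log entries name a shared object that the dynamic loader could not open (libcublas.so.12 and libcublasLt.so.12). As a quick diagnostic, the sketch below asks the loader for each library directly from Python. The helper names `can_load` and `missing_libraries` are made up for this example, and including `libcudnn.so.9` in the list is an assumption based on the cuDNN 9.4 archive used later in this post.

```python
import ctypes


def can_load(library_name: str) -> bool:
    """Return True if the dynamic loader can open the named shared library."""
    try:
        ctypes.CDLL(library_name)
        return True
    except OSError:
        # Raised when the file (or one of its own dependencies) cannot be found.
        return False


def missing_libraries(names):
    """Return the subset of names that the loader fails to open."""
    return [name for name in names if not can_load(name)]


if __name__ == "__main__":
    # The first two names come from the log above; the third is an assumption.
    wanted = ["libcublas.so.12", "libcublasLt.so.12", "libcudnn.so.9"]
    for name in missing_libraries(wanted):
        print(f"cannot load: {name}")
```

Running it in the same shell and conda environment as the failing program matters, because the result depends on that process's LD_LIBRARY_PATH.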

Solution:

Put the cuDNN 9.4 libraries (DLLs on Windows, .so files on Linux) into the onnxruntime capi folder and add that capi folder to the user path.

2025-04-25 11:01:32

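I downloaded cudnn-linux-x86_64-9.4.0.58_cuda12-archive.tar.xz from the NVIDIA website.

After extracting it, the plan is to copy the .so files from its lib directory into onnxruntime/capi.

To be safe, I first backed up the files in the onnxruntime/capi directory to this location: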

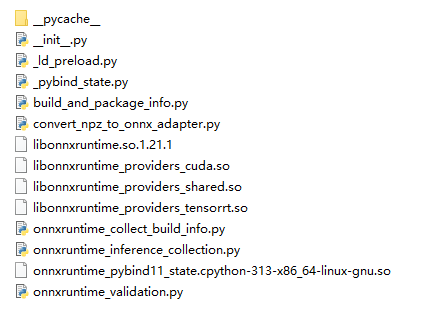
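The backup step can also be scripted. This is a minimal sketch, not the exact procedure used in this post: `backup_directory` and the `.bak` suffix are made up for the example, and the paths in the usage comment are placeholders.

```python
import shutil
from pathlib import Path


def backup_directory(source: Path, backup_root: Path) -> Path:
    """Copy `source` to `backup_root/<name>.bak`, keeping symlinks as symlinks."""
    target = backup_root / (source.name + ".bak")
    if target.exists():
        # Refuse to overwrite an earlier backup.
        raise FileExistsError(f"backup already exists: {target}")
    shutil.copytree(source, target, symlinks=True)
    return target


# Example usage (placeholder paths, adjust to your environment):
# capi = Path.home() / ".conda/envs/onnxruntime-gpu-env/lib/python3.13/site-packages/onnxruntime/capi"
# backup_directory(capi, Path.home() / "backups")
```

Refusing to overwrite an existing backup is deliberate: running the script a second time, after capi has been modified, will not replace the pristine copy.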

Files in the original capi directory:

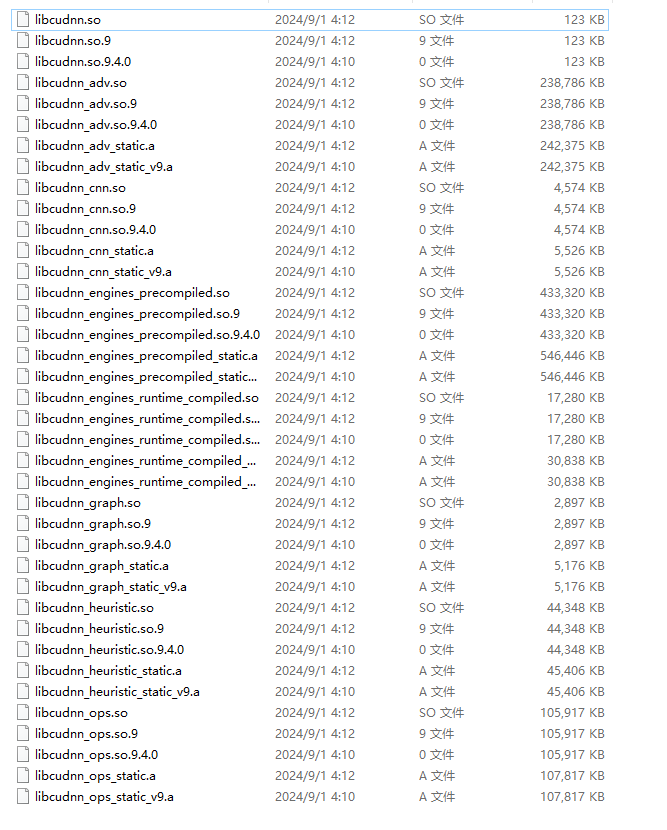

The lib directory after extracting cudnn-linux-x86_64-9.4.0.58_cuda12-archive.tar.xz:

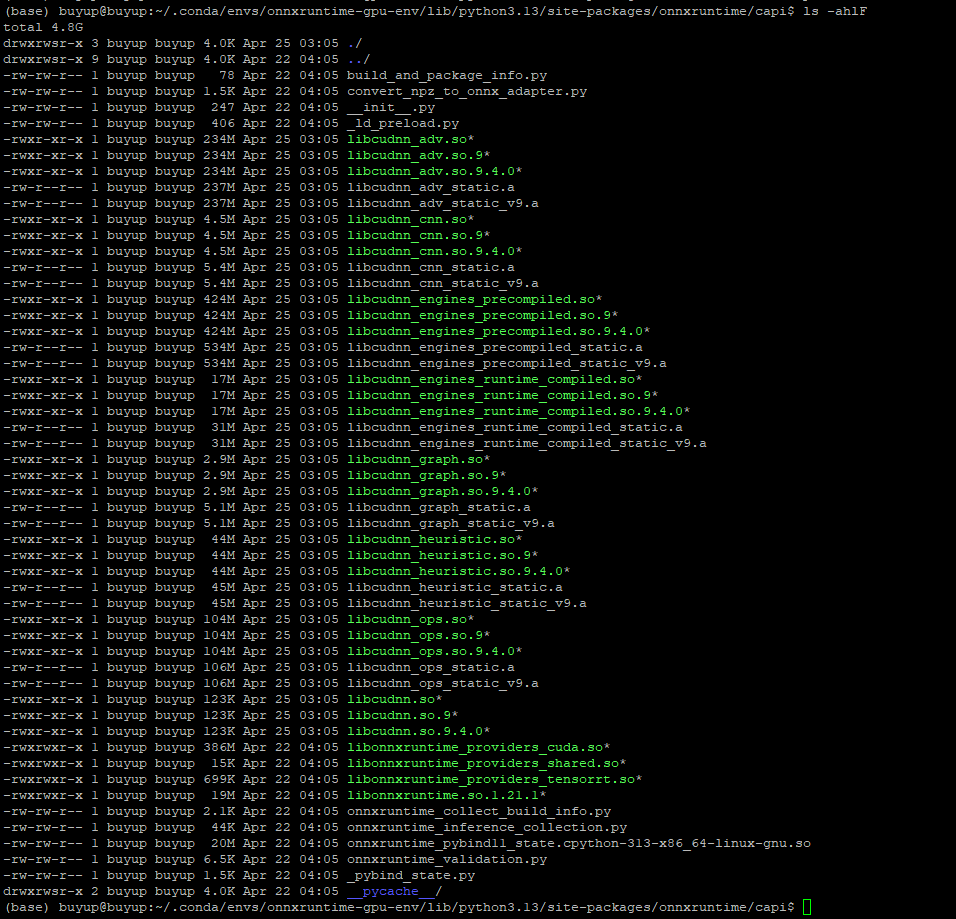

Copy the files from the lib directory of the extracted cudnn-linux-x86_64-9.4.0.58_cuda12-archive.tar.xz into

~/.conda/envs/onnxruntime-gpu-env/lib/python3.13/site-packages/onnxruntime/capi
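The copy step could look roughly like the sketch below. It assumes the archive's lib directory holds versioned .so files plus symlinks that point at them, which is why symlinks are recreated rather than followed. `copy_shared_libs` is a hypothetical helper, and the paths in the usage comment are placeholders.

```python
import os
import shutil
from pathlib import Path


def copy_shared_libs(src_lib: Path, dest: Path) -> list:
    """Copy every *.so* entry from src_lib into dest, recreating symlinks as symlinks."""
    copied = []
    for entry in sorted(src_lib.glob("*.so*")):
        target = dest / entry.name
        if target.is_symlink() or target.exists():
            target.unlink()  # replace a previous copy of the same file
        # follow_symlinks=False copies a symlink as a symlink, not as its target.
        shutil.copy2(entry, target, follow_symlinks=False)
        copied.append(entry.name)
    return copied


# Example usage (placeholder paths, adjust to your environment):
# src = Path("cudnn-linux-x86_64-9.4.0.58_cuda12-archive/lib")
# dst = Path.home() / ".conda/envs/onnxruntime-gpu-env/lib/python3.13/site-packages/onnxruntime/capi"
# print(copy_shared_libs(src, dst))
```

Keeping the symlinks avoids storing the same large library several times under different names.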

Final step: add /home/buyup/.conda/envs/onnxruntime-gpu-env/lib/python3.13/site-packages/onnxruntime/capi to the .bashrc file:

export PATH=$PATH:/usr/local/cuda-12.4/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda-12.4/lib64

export PATH=$PATH:/home/buyup/.conda/envs/onnxruntime-gpu-env/lib/python3.13/site-packages/onnxruntime/capi
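One point worth checking after these exports: on Linux the dynamic loader searches LD_LIBRARY_PATH for shared objects, not PATH. Of the three lines, the LD_LIBRARY_PATH entry is therefore the one that lets the loader find libcublasLt.so.12, assuming a standard CUDA 12.4 install keeps it under /usr/local/cuda-12.4/lib64. Whether the cuDNN files copied into capi are found through some other mechanism was not verified here. The sketch below, with a hypothetical helper `dirs_providing`, lists which LD_LIBRARY_PATH directories contain a given library file.

```python
import os
from pathlib import Path


def dirs_providing(library_name, search_path=None):
    """Return the directories on search_path that contain library_name.

    search_path is a colon-separated string; it defaults to LD_LIBRARY_PATH.
    """
    if search_path is None:
        search_path = os.environ.get("LD_LIBRARY_PATH", "")
    hits = []
    for directory in search_path.split(os.pathsep):
        if directory and (Path(directory) / library_name).exists():
            hits.append(directory)
    return hits


if __name__ == "__main__":
    # libcublasLt.so.12 is from the error message; libcudnn.so.9 is an assumption.
    for name in ("libcublasLt.so.12", "libcudnn.so.9"):
        print(name, "->", dirs_providing(name) or "not on LD_LIBRARY_PATH")
```

If a library shows up as "not on LD_LIBRARY_PATH", adding its directory to LD_LIBRARY_PATH in .bashrc is the usual remedy.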

After reloading the shell (for example with source ~/.bashrc), onnxruntime runs on the GPU without reporting the error.
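To confirm that a session really ends up on the GPU, one option is to create an `InferenceSession` and inspect `get_providers()`, which reports the providers the session is using. The sketch below assumes onnxruntime-gpu is installed. `active_providers` and `uses_cuda` are made-up helper names, and `model.onnx` is a placeholder for any model file you have.

```python
def active_providers(model_path,
                     requested=("CUDAExecutionProvider", "CPUExecutionProvider")):
    """Create a session with the requested providers and return those in use."""
    # Imported lazily so this file can be loaded on machines without onnxruntime.
    import onnxruntime as ort

    session = ort.InferenceSession(model_path, providers=list(requested))
    return session.get_providers()


def uses_cuda(providers) -> bool:
    """Return True if the CUDA execution provider is among the given providers."""
    return "CUDAExecutionProvider" in providers


# Example usage (placeholder model path):
# providers = active_providers("model.onnx")
# print(providers, "GPU in use" if uses_cuda(providers) else "CPU only")
```

If the CUDA provider still fails to load, the returned list would be expected to contain only `CPUExecutionProvider`, together with log output like the one at the top of this post.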

浙公网安备 33010602011771号
