Deploying Hadoop + MySQL + Hive on CentOS: Installing MySQL and Hive

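By default, Hive stores its metadata in Derby. Derby is an embedded database that allows only single-user access: only one session at a time can connect to the metastore, so it cannot meet the needs of multiple users. For example, when several users run queries or other operations concurrently, Derby cannot handle them, which leads to a performance bottleneck or outright errors. It is therefore recommended to store the metadata in MySQL instead.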

MySQL installation:

1. Download and install the MySQL yum repository configuration

yum install https://dev.mysql.com/get/mysql80-community-release-el7-7.noarch.rpm

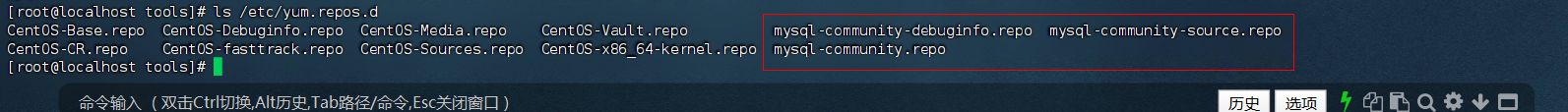

Once it is installed, the MySQL yum repository files appear in the /etc/yum.repos.d/ directory.

2. Install the MySQL server with yum

yum install mysql-community-server -y

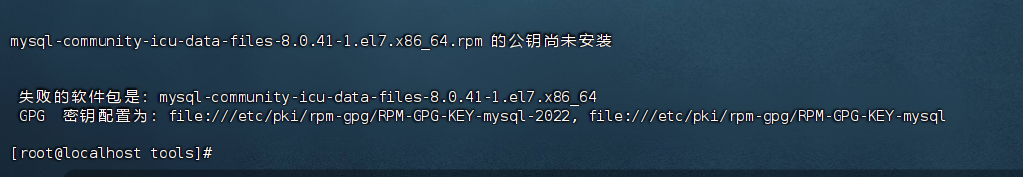

If the installation fails with this error:

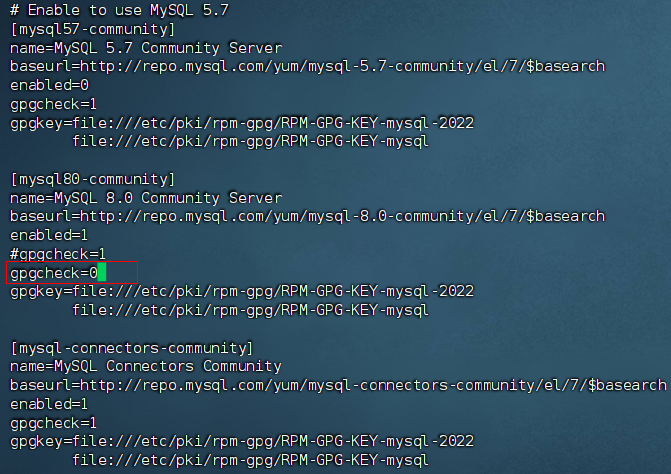

edit /etc/yum.repos.d/mysql-community.repo, set gpgcheck to 0 in the [mysql80-community] section so that the GPG key is not checked, and run the installation again.

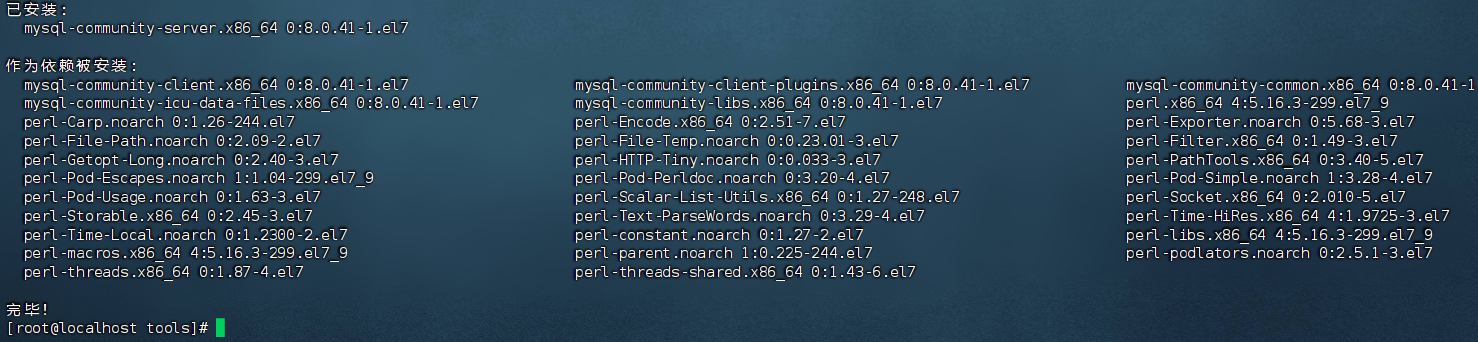
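The same edit can be scripted. The sketch below is one way to do it with GNU sed (as shipped with CentOS), assuming the repository file contains a `[mysql80-community]` section with a `gpgcheck=1` line; the function name `disable_mysql80_gpgcheck` is hypothetical. The sed address range confines the change to that one section, so the other MySQL repositories in the file keep their key check, and a `.bak` copy of the file is saved first.

```shell
# Hypothetical helper: set gpgcheck=0 only inside the [mysql80-community] section.
# Assumes GNU sed (for -i) and the "gpgcheck=1" spelling used in the MySQL repo file.
disable_mysql80_gpgcheck() {
  local repo="${1:-/etc/yum.repos.d/mysql-community.repo}"
  # Keep a copy so the change is easy to revert
  cp "$repo" "$repo.bak" || return 1
  # Address range: from the [mysql80-community] header up to the next "[" header
  sed -i '/^\[mysql80-community\]/,/^\[/ s/^gpgcheck=1/gpgcheck=0/' "$repo"
}
```

Run `disable_mysql80_gpgcheck` as root, then repeat `yum install mysql-community-server -y`.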

After MySQL is installed, the output looks like this:

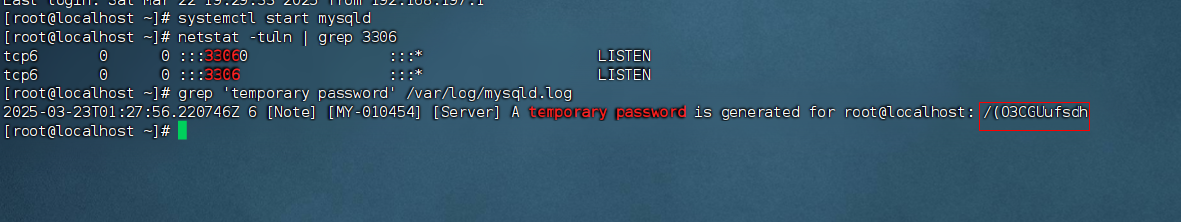

3. Start the mysqld service

systemctl start mysqld

4. Get the temporary root password generated by MySQL

grep 'temporary password' /var/log/mysqld.log
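The grep command prints the whole log line. A small helper like the one below (the name `mysql_temp_password` is hypothetical) keeps only the password itself. It is a sketch that assumes the password is the last whitespace-separated field of the line, and it uses the most recent entry in case mysqld was initialized more than once.

```shell
# Hypothetical helper: print only the temporary root password from the MySQL log.
# Assumes the password is the last whitespace-separated field of the log line.
mysql_temp_password() {
  local log="${1:-/var/log/mysqld.log}"
  # Take the most recent entry in case mysqld was initialized more than once
  grep 'temporary password' "$log" | tail -n 1 | awk '{print $NF}'
}
```

Run `mysql_temp_password` as root; the printed value is what to enter at the `mysql -u root -p` prompt.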

5. Log in to MySQL with the temporary password

mysql -u root -p

6. Change the root password, then log in again

alter user "root"@"localhost" identified by "Test@123456";

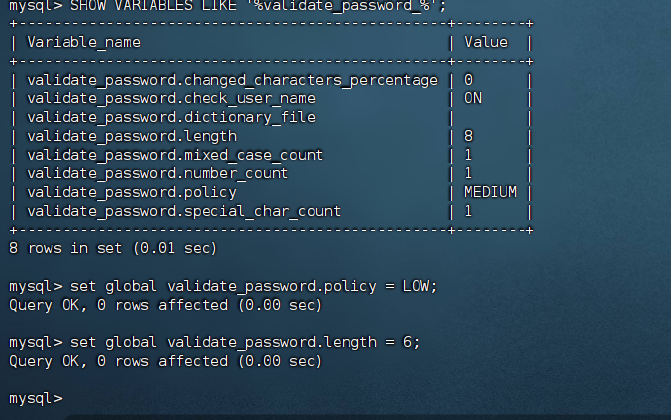

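7. Relax the MySQL password policy (the first password change must use a complex password; lowering the policy afterwards allows shorter, simpler passwords)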

Check the current password policy:

SHOW VARIABLES LIKE '%validate_password_%';

Set validate_password.policy = LOW and validate_password.length = 6:

set global validate_password.policy = LOW;

set global validate_password.length = 6;

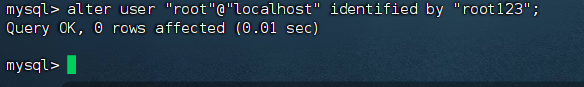

Verify that the new policy is in effect by setting a short password:

alter user "root"@"localhost" identified by "root123";

8. Create a MySQL user named hive

create user "hive"@"%" identified by "hive123";

grant all privileges on *.* to "hive"@"%" with grant option;

flush privileges;

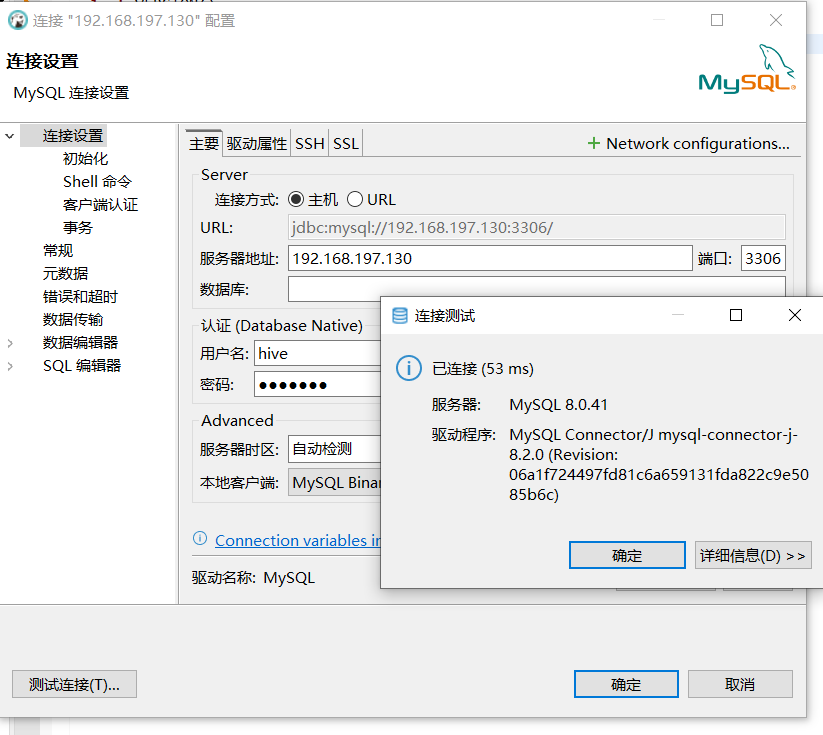

Verify that the hive user can connect to MySQL:

mysql -u hive -p

Hive 4.0.0 installation and configuration:

Hive download mirror in China: https://mirrors.huaweicloud.com/apache/hive/

wget https://mirrors.huaweicloud.com/apache/hive/hive-4.0.0/apache-hive-4.0.0-bin.tar.gz

1. Extract the Hive package

tar zxvf apache-hive-4.0.0-bin.tar.gz

Move Hive to /usr/local/hive:

mv apache-hive-4.0.0-bin /usr/local/hive

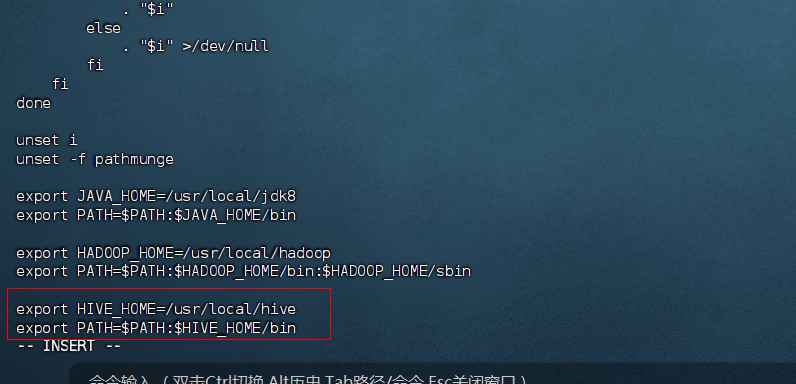

2. Add Hive to the environment variables in /etc/profile

Reload /etc/profile:

source /etc/profile
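The exact lines added to /etc/profile are not listed above. A common setup is sketched here, assuming HIVE_HOME is /usr/local/hive and that $HIVE_HOME/bin is appended to PATH (the PATH printed by hiveserver2 later in this article does include /usr/local/hive/bin). The helper name `add_hive_to_profile` is hypothetical; it skips the edit if a HIVE_HOME export already exists, so running it twice does not duplicate the lines.

```shell
# Hypothetical helper: append HIVE_HOME and PATH exports to a profile file once.
# Assumes Hive lives in /usr/local/hive, as in step 1.
add_hive_to_profile() {
  local profile="${1:-/etc/profile}" hive_home="${2:-/usr/local/hive}"
  # Skip if a HIVE_HOME export is already present, so reruns do not duplicate lines
  if grep -q '^export HIVE_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  # Single quotes keep $PATH and $HIVE_HOME literal; they expand when the profile is sourced
  printf '%s\n' "export HIVE_HOME=$hive_home" 'export PATH=$PATH:$HIVE_HOME/bin' >> "$profile"
}
```

After running `add_hive_to_profile` as root and `source /etc/profile`, `which hive` should print `/usr/local/hive/bin/hive`.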

3. Configure Hive

cd /usr/local/hive/conf

cp hive-default.xml.template hive-site.xml

cp hive-env.sh.template hive-env.sh

Edit hive-site.xml with vi and replace the following settings:

<configuration>

<!-- Hive metastore database location -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive_01?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<!-- Database driver -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<!-- Database user name -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

<!-- Database password -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive123</value>

<description>Password to use against metastore database</description>

</property>

<!-- Hive data warehouse directory -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/local/hive/warehouse</value>

<description>Location of default database for the warehouse</description>

</property>

</configuration>
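Because hive-site.xml is a copy of the full hive-default.xml.template, it can be hard to confirm by eye which value a property ended up with. The awk helper below is a line-based sketch for that check (the name `hive_conf_get` is hypothetical). It is not an XML parser: it assumes each `<name>` and `<value>` element sits on its own line and the value fits on one line, as in the template.

```shell
# Hypothetical helper: print the <value> of a property in hive-site.xml.
# Line-based sketch, not an XML parser: assumes <name> and <value> each sit on
# their own line and the value fits on one line, as in hive-default.xml.template.
hive_conf_get() {
  local name="$1" site="${2:-/usr/local/hive/conf/hive-site.xml}"
  awk -v n="<name>$name</name>" '
    # Remember that we are inside the wanted property
    index($0, n) { found = 1; next }
    # First one-line <value>...</value>: strip the 7-char <value> and 8-char </value>
    found && match($0, /<value>[^<]*<\/value>/) {
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
    # Property ended without a one-line value: stop looking
    found && /<\/property>/ { found = 0 }
  ' "$site"
}
```

For example, `hive_conf_get javax.jdo.option.ConnectionURL` should print `jdbc:mysql://localhost:3306/hive_01?createDatabaseIfNotExist=true` after the edit above.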

Configure hive-env.sh as follows:

Hadoop installation path:

HADOOP_HOME=/usr/local/hadoop

Hive configuration directory:

export HIVE_CONF_DIR=/usr/local/hive/conf

4. Download the MySQL JDBC driver (Connector/J) jar

wget https://mirrors.huaweicloud.com/mysql/Downloads/Connector-J/mysql-connector-java-8.0.24.zip

Unzip the mysql-connector-java archive:

unzip mysql-connector-java-8.0.24.zip

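The jar is inside the extracted mysql-connector-java-8.0.24 directory. Move it into Hive's lib directory so that Hive can connect to MySQL:

mv mysql-connector-java-8.0.24/mysql-connector-java-8.0.24.jar /usr/local/hive/lib/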

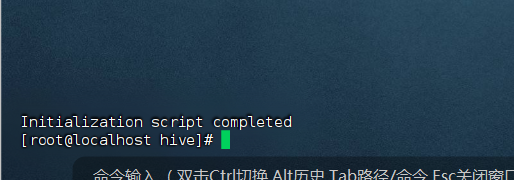
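Hive cannot reach MySQL if the driver jar is missing from lib, and several driver versions side by side may lead to confusing class conflicts. The sketch below (the name `check_mysql_connector` is hypothetical) checks that exactly one connector jar is present. Its pattern `mysql-connector-*.jar` also matches the `mysql-connector-j-*.jar` file name used by later Connector/J releases.

```shell
# Hypothetical helper: succeed only if exactly one MySQL connector jar is in Hive's lib.
check_mysql_connector() {
  local lib="${1:-/usr/local/hive/lib}" count
  # Matches both mysql-connector-java-*.jar and mysql-connector-j-*.jar
  count=$(find "$lib" -maxdepth 1 -name 'mysql-connector-*.jar' | wc -l)
  if [ "$count" -eq 1 ]; then
    return 0
  fi
  echo "expected exactly one MySQL connector jar in $lib, found $count" >&2
  return 1
}
```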

5. Initialize the metastore schema

schematool -dbType mysql -initSchema

A successful initialization looks like this:

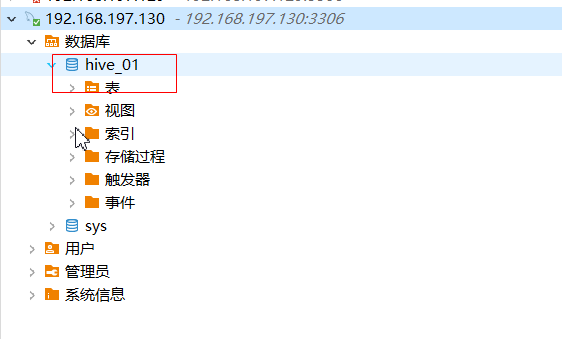

Refresh the database list in MySQL; the new database hive_01 has been created:

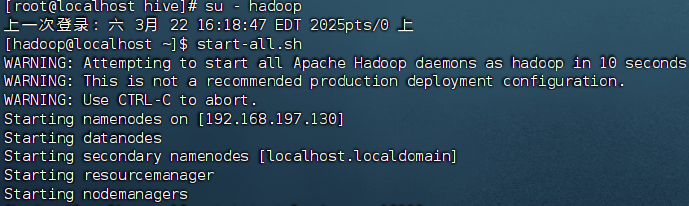

6. Start the Hadoop services

Switch to the hadoop user, then start the Hadoop services:

su - hadoop

start-all.sh

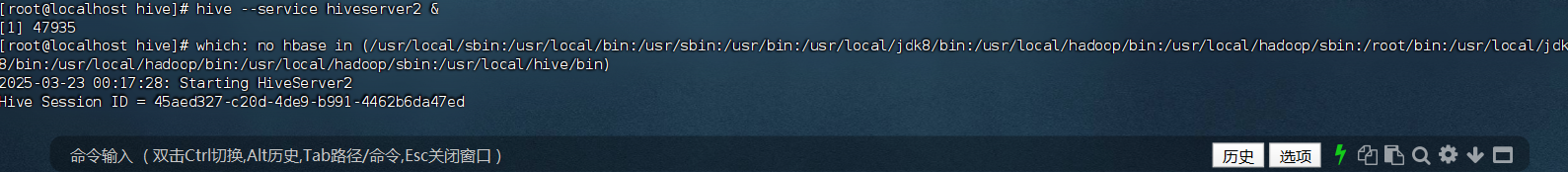
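Before starting HiveServer2 it helps to confirm that the Hadoop daemons are actually up. The sketch below (the name `missing_hadoop_daemons` is hypothetical) takes the output of `jps` and prints every expected daemon that does not appear in it. It assumes a single-node setup where NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager all run on this host; on a multi-node cluster, DataNode and NodeManager run on the worker nodes instead.

```shell
# Hypothetical helper: list expected Hadoop daemons missing from `jps` output.
# Assumes a single-node (pseudo-distributed) setup where all five daemons run locally.
missing_hadoop_daemons() {
  local jps_output="$1" d
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClass>"; compare the second field exactly,
    # so "SecondaryNameNode" is not mistaken for "NameNode"
    printf '%s\n' "$jps_output" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
      || echo "$d"
  done
}
```

Run it as the hadoop user, since jps generally lists only the current user's JVMs: `missing_hadoop_daemons "$(jps)"` prints nothing when all five daemons are running.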

7. Start Hive's HiveServer2 service

hive --service hiveserver2 &

Issue 1: the following output is printed:

which: no hbase in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/jdk8/bin:/usr/local/hadoop/bin:/usr/local/hadoop/sbin:/root/bin:/usr/local/jdk8/bin:/usr/local/hadoop/bin:/usr/local/hadoop/sbin:/usr/local/hive/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/lib/log4j-slf4j-impl-2.18.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Cause: Hive and Hadoop each ship an SLF4J logging binding, so the classpath contains two of them.

Fix: back up Hive's logging binding jar, then delete it.

Check that the jar path is correct:

ls /usr/local/hive/lib/log4j-slf4j-impl-2.18.0.jar

Back up the jar:

cp /usr/local/hive/lib/log4j-slf4j-impl-2.18.0.jar /usr/local/hive/lib/log4j-slf4j-impl-2.18.0.jar.backup

Delete Hive's SLF4J binding jar so that only Hadoop's binding remains:

rm /usr/local/hive/lib/log4j-slf4j-impl-2.18.0.jar
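The backup-and-delete steps above can also be done as a single rename, which keeps the jar around under a name that no longer ends in `.jar`, so Hive's launcher (which collects `lib/*.jar`) should no longer load it. The sketch below uses a hypothetical function name and matches any `log4j-slf4j-impl-*.jar`, so it does not depend on the 2.18.0 version number.

```shell
# Hypothetical helper: rename Hive's log4j-slf4j-impl binding(s) to *.jar.backup.
disable_hive_slf4j_binding() {
  local lib="${1:-/usr/local/hive/lib}" jar
  for jar in "$lib"/log4j-slf4j-impl-*.jar; do
    # If the glob matched nothing, the literal pattern is left; skip it
    [ -e "$jar" ] || continue
    mv "$jar" "$jar.backup"
    echo "moved $jar -> $jar.backup"
  done
}
```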

Start hiveserver2 again:

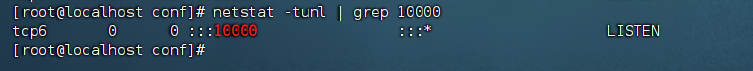

Once HiveServer2 has started successfully, port 10000 (its default port) is listening:

netstat -tunl | grep 10000
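On a minimal CentOS install, netstat may be missing (it comes from the net-tools package), while `ss -tln` from iproute gives similar output. HiveServer2 can also take a while before port 10000 opens. The sketch below uses hypothetical function names: `is_listening` checks a `ss`/`netstat` listing for a port, and `wait_for_port` polls `ss -tln` until the port appears or the retry limit is reached.

```shell
# Hypothetical helper: succeed if a `ss -tln` / `netstat -tln` listing shows PORT.
is_listening() {
  local port="$1" listing="$2"
  printf '%s\n' "$listing" | awk -v suffix=":$port" '
    {
      # Match fields ending in ":PORT", e.g. 0.0.0.0:10000, [::]:10000, *:10000
      for (i = 1; i <= NF; i++)
        if (length($i) >= length(suffix) && substr($i, length($i) - length(suffix) + 1) == suffix)
          found = 1
    }
    END { exit !found }'
}

# Hypothetical helper: poll `ss -tln` every 2 seconds, up to TRIES times.
wait_for_port() {
  local port="${1:-10000}" tries="${2:-60}" i=0
  while [ "$i" -lt "$tries" ]; do
    if is_listening "$port" "$(ss -tln 2>/dev/null)"; then
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  return 1
}
```

For example, `wait_for_port 10000 && beeline` waits up to about two minutes before opening beeline.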

HiveServer2 startup errors:

1. java.lang.RuntimeException: Error applying authorization policy on hive configuration: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

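In hive-site.xml, search for system:java.io.tmpdir and replace it with an absolute path such as /usr/local/hive/tmp. Then create that directory and give the user running HiveServer2 write access to it:

mkdir -p /usr/local/hive/tmp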

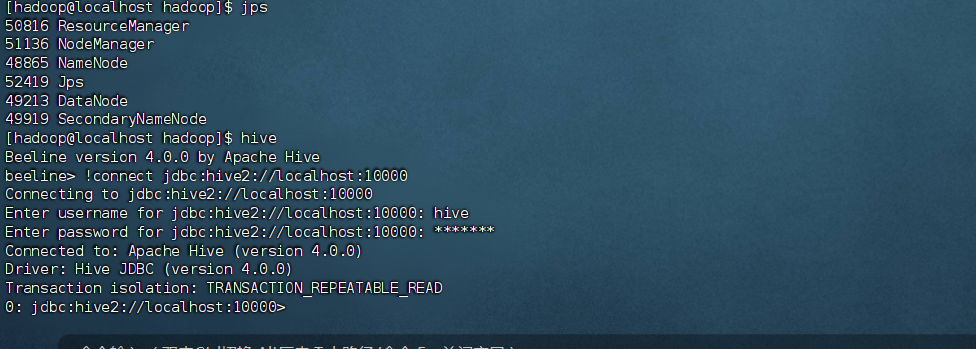
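Replacing the placeholder by hand is tedious because the template uses `${system:java.io.tmpdir}` in several properties. A GNU sed sketch follows; the function name `replace_hive_tmpdir` is hypothetical and the default paths are the ones used in this article. It creates the target directory, keeps a `.bak` copy of hive-site.xml, and replaces every occurrence.

```shell
# Hypothetical helper: replace every ${system:java.io.tmpdir} in hive-site.xml
# with an absolute directory. Assumes GNU sed and a target path without '#' or '&'.
replace_hive_tmpdir() {
  local site="${1:-/usr/local/hive/conf/hive-site.xml}" tmp="${2:-/usr/local/hive/tmp}"
  mkdir -p "$tmp" || return 1
  cp "$site" "$site.bak" || return 1
  # '#' is the s-command delimiter so the path's slashes need no escaping
  sed -i 's#\${system:java\.io\.tmpdir}#'"$tmp"'#g' "$site"
}
```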

Beeline connection error:

beeline> !connect jdbc:hive2://localhost:10000

Connecting to jdbc:hive2://localhost:10000

Enter username for jdbc:hive2://localhost:10000: hive

Enter password for jdbc:hive2://localhost:10000: *******

25/03/23 02:43:28 [main]: WARN jdbc.HiveConnection: Failed to connect to localhost:10000

Error: Could not open client transport with JDBC Uri: jdbc:hive2://localhost:10000: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate hive (state=08S01,code=0)

Fix: add proxy-user host and group settings for root to Hadoop's core-site.xml, then restart the Hadoop services:

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

Connect with beeline again; the connection now succeeds.

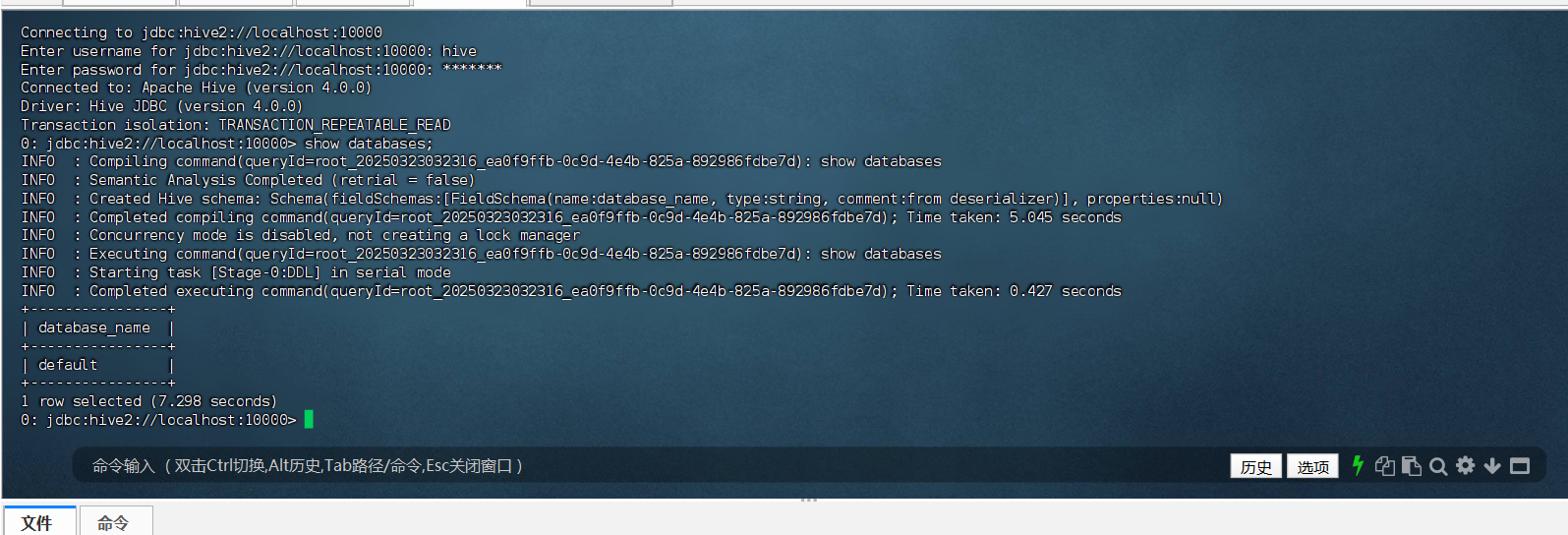
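The proxy-user property names embed the name of the user that HiveServer2 runs as (root in the error above, hence hadoop.proxyuser.root.*). If HiveServer2 runs as a different user, for example hadoop, the names change accordingly. The hypothetical helper below prints both properties for a given user, ready to paste inside `<configuration>` in core-site.xml. The `*` values match the ones above and allow impersonation from any host and of users in any group, which you may want to narrow.

```shell
# Hypothetical helper: print the two hadoop.proxyuser.<user>.* properties for core-site.xml.
proxyuser_properties() {
  local user="${1:-root}"
  # Unquoted EOF so $user is expanded inside the here-document
  cat <<EOF
<property>
  <name>hadoop.proxyuser.$user.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.$user.groups</name>
  <value>*</value>
</property>
EOF
}
```

After editing core-site.xml, restart Hadoop as described above; on a running cluster, `hdfs dfsadmin -refreshSuperUserGroupsConfiguration` and `yarn rmadmin -refreshSuperUserGroupsConfiguration` reload the proxy-user settings without a full restart.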

Running show databases; in Hive fails with:

Error: Unable to get the next row set with exception: java.io.IOException: java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:user.name%7D (state=,code=0)

Fix: in Hive's configuration file hive-site.xml, find the hive.exec.local.scratchdir property and change ${system:user.name} to ${user.name}:

<property>

<name>hive.exec.local.scratchdir</name>

<value>/usr/local/hive/tmp/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

Change it to:

<property>

<name>hive.exec.local.scratchdir</name>

<value>/usr/local/hive/tmp/${user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>
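The same change can be scripted with sed. This sketch (hypothetical function name, GNU sed assumed) restricts the substitution to the hive.exec.local.scratchdir property, matching the edit above, and leaves other properties that also use `${system:user.name}` untouched; a `.bak` copy is kept.

```shell
# Hypothetical helper: in hive.exec.local.scratchdir only, turn ${system:user.name}
# into ${user.name}. Assumes GNU sed and the one-element-per-line template layout.
fix_scratchdir_user() {
  local site="${1:-/usr/local/hive/conf/hive-site.xml}"
  cp "$site" "$site.bak" || return 1
  # Address range: from the scratchdir <name> line to the closing </property>
  sed -i '/<name>hive\.exec\.local\.scratchdir<\/name>/,/<\/property>/ s#\${system:user\.name}#${user.name}#' "$site"
}
```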

Then restart hiveserver2 for the change to take effect.

浙公网安备 33010602011771号
