Deploying Dubbo microservices on k8s and integrating them with the ELK stack

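A log collection and analysis system with the following capabilities is needed:

- Collection -- able to gather log data from many sources (streaming log collector)

- Transport -- able to deliver log data reliably to the central system (message queue)

- Storage -- able to store logs as structured data (search engine)

- Analysis -- supports convenient analysis and search, ideally with a GUI (front end)

- Alerting -- able to provide error reports and a monitoring mechanism (monitoring tool)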

A mature open-source solution from the community: ELK Stack

- E ----- ElasticSearch

- L ----- LogStash

- K ----- Kibana

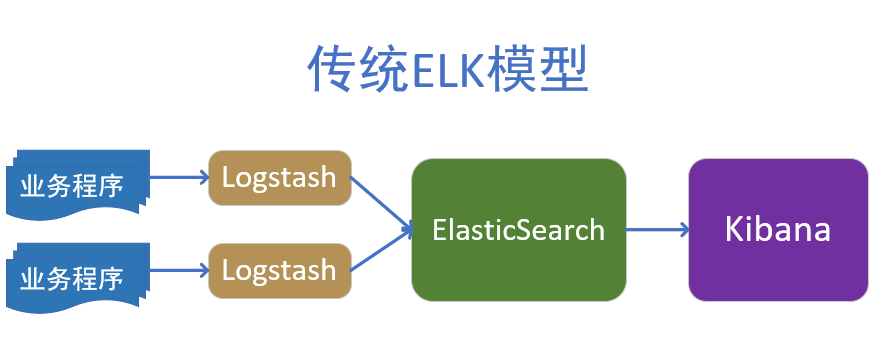

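1. The traditional ELK model

Drawbacks:

- Logstash is written in JRuby and is resource-hungry; deploying it at scale is very costly

- Business applications are coupled too loosely to Logstash, which makes service migration harder

- Log collection is coupled too tightly to ES, so ES is easily overwhelmed and data is lost

- In a container-cloud environment, the traditional ELK model can hardly do the job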

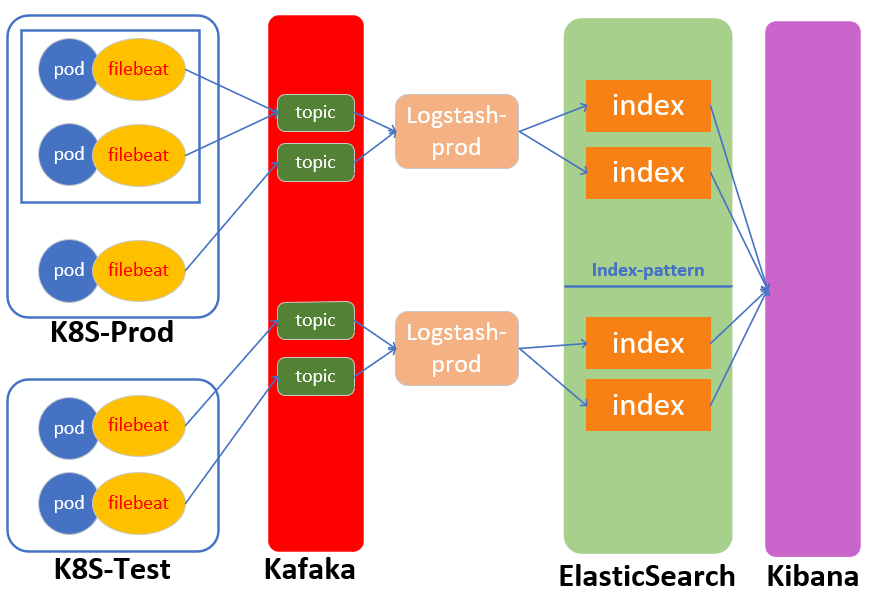

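2. ELK architecture diagram

3. Building the base image for the Tomcat container

Tomcat website: https://tomcat.apache.org/

(1) Download the Tomcat binary package

Run on the ops host (mfyxw50)

Tomcat 8.5.57 download URL: https://mirror.bit.edu.cn/apache/tomcat/tomcat-8/v8.5.57/bin/apache-tomcat-8.5.57.tar.gz

Download the Tomcat binary package and save it to /opt/src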

[root@mfyxw50 ~]# cd /opt/src

[root@mfyxw50 src]#

[root@mfyxw50 src]# wget https://mirror.bit.edu.cn/apache/tomcat/tomcat-8/v8.5.57/bin/apache-tomcat-8.5.57.tar.gz

--2020-08-17 22:52:23-- https://mirror.bit.edu.cn/apache/tomcat/tomcat-8/v8.5.57/bin/apache-tomcat-8.5.57.tar.gz

Resolving mirror.bit.edu.cn (mirror.bit.edu.cn)... 219.143.204.117, 202.204.80.77, 2001:da8:204:1205::22

Connecting to mirror.bit.edu.cn (mirror.bit.edu.cn)|219.143.204.117|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 10379806 (9.9M) [application/octet-stream]

Saving to: ‘apache-tomcat-8.5.57.tar.gz’

100%[==================================================================================================>] 10,379,806 153KB/s in 70s

2020-08-17 22:53:33 (144 KB/s) - ‘apache-tomcat-8.5.57.tar.gz’ saved [10379806/10379806]

Create a directory for Tomcat and extract the archive into it

[root@mfyxw50 ~]# mkdir -p /data/dockerfile/tomcat8

[root@mfyxw50 ~]# tar -xf /opt/src/apache-tomcat-8.5.57.tar.gz -C /data/dockerfile/tomcat8

(2) Basic Tomcat configuration

Disable the AJP port

In /data/dockerfile/tomcat8/apache-tomcat-8.5.57/conf/server.xml, find the AJP connector and comment it out to close the AJP port:

<!-- <Connector protocol="AJP/1.3"

address="::1"

port="8009"

redirectPort="8443" />

-->
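To confirm that the connector really is commented out, the check below may help. It is a minimal sketch: `strip_xml_comments` and `ajp_connector_active` are hypothetical helper names, not part of Tomcat, and the comment stripping is a simple line scanner rather than a full XML parser.

```shell
# Hypothetical helper: print an XML file with <!-- ... --> comments removed
# (comments may span several lines).
strip_xml_comments() {
    awk '
    {
        line = $0; out = ""
        while (length(line) > 0) {
            if (in_comment) {
                i = index(line, "-->")
                if (i == 0) { line = "" }
                else { line = substr(line, i + 3); in_comment = 0 }
            } else {
                i = index(line, "<!--")
                if (i == 0) { out = out line; line = "" }
                else { out = out substr(line, 1, i - 1); line = substr(line, i + 4); in_comment = 1 }
            }
        }
        print out
    }' "$1"
}

# Exit status 0 if an AJP connector is still active in the given server.xml,
# non-zero if it is commented out or absent.
ajp_connector_active() {
    strip_xml_comments "$1" | grep -q 'protocol="AJP/1.3"'
}
```

For example, `ajp_connector_active /data/dockerfile/tomcat8/apache-tomcat-8.5.57/conf/server.xml && echo "AJP is still enabled"`.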

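(3) Configure logging

Remove the 3manager and 4host-manager handlers and comment out their related settings

File path: /data/dockerfile/tomcat8/apache-tomcat-8.5.57/conf/logging.properties

After the change, the handlers line looks like this: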

handlers = 1catalina.org.apache.juli.AsyncFileHandler, 2localhost.org.apache.juli.AsyncFileHandler, java.util.logging.ConsoleHandler

Comment out the log settings of 3manager and 4host-manager

#3manager.org.apache.juli.AsyncFileHandler.level = FINE

#3manager.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs

#3manager.org.apache.juli.AsyncFileHandler.prefix = manager.

#3manager.org.apache.juli.AsyncFileHandler.encoding = UTF-8

#4host-manager.org.apache.juli.AsyncFileHandler.level = FINE

#4host-manager.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs

#4host-manager.org.apache.juli.AsyncFileHandler.prefix = host-manager.

#4host-manager.org.apache.juli.AsyncFileHandler.encoding = UTF-8

Set the level of the remaining log handlers to INFO

1catalina.org.apache.juli.AsyncFileHandler.level = INFO

2localhost.org.apache.juli.AsyncFileHandler.level = INFO

java.util.logging.ConsoleHandler.level = INFO
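The three edits above can also be scripted. The following is a sketch only: `tune_tomcat_logging` is a hypothetical helper, and it assumes the stock layout of logging.properties, where handler settings start at the beginning of a line. Any other lines that mention 3manager or 4host-manager would still need to be reviewed by hand.

```shell
# Hypothetical helper: apply the logging.properties changes in one pass.
#   1. drop 3manager and 4host-manager from the "handlers = ..." line
#   2. comment out every setting that starts with 3manager. or 4host-manager.
#   3. set the remaining handler levels to INFO
tune_tomcat_logging() (
    conf=$1
    tmp="$conf.tmp"
    sed -E \
        -e '/^handlers[[:space:]]*=/ s/ (3manager|4host-manager)\.org\.apache\.juli\.AsyncFileHandler,//g' \
        -e 's/^(3manager|4host-manager)\./#&/' \
        -e 's/^((1catalina|2localhost)\.org\.apache\.juli\.AsyncFileHandler|java\.util\.logging\.ConsoleHandler)\.level[[:space:]]*=.*/\1.level = INFO/' \
        "$conf" > "$tmp" && mv "$tmp" "$conf"
)
```

Usage: `tune_tomcat_logging /data/dockerfile/tomcat8/apache-tomcat-8.5.57/conf/logging.properties`, then compare the result with the listing above.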

(4) Prepare the Dockerfile

The Dockerfile contents are as follows:

[root@mfyxw50 ~]# cat > /data/dockerfile/tomcat8/Dockerfile << EOF

From harbor.od.com/base/jre8:8u112

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' >/etc/timezone

ENV CATALINA_HOME /opt/tomcat

ENV LANG zh_CN.UTF-8

ADD apache-tomcat-8.5.57/ /opt/tomcat

ADD config.yml /opt/prom/config.yml

ADD jmx_javaagent-0.3.1.jar /opt/prom/jmx_javaagent-0.3.1.jar

WORKDIR /opt/tomcat

ADD entrypoint.sh /entrypoint.sh

CMD ["/entrypoint.sh"]

EOF

The config.yml contents are as follows:

[root@mfyxw50 ~]# cat > /data/dockerfile/tomcat8/config.yml << EOF

---

rules:

- pattern: '.*'

EOF

Download the jmx_javaagent-0.3.1.jar file

wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O /data/dockerfile/tomcat8/jmx_javaagent-0.3.1.jar

The entrypoint.sh contents are as follows:

[root@mfyxw50 ~]# cat > /data/dockerfile/tomcat8/entrypoint.sh << EOF

#!/bin/bash

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=\$(hostname -i):\${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=\${C_OPTS}

MIN_HEAP=\${MIN_HEAP:-"128m"}

MAX_HEAP=\${MAX_HEAP:-"128m"}

JAVA_OPTS=\${JAVA_OPTS:-"-Xmn384m -Xss256k -Duser.timezone=GMT+08 -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:CMSFullGCsBeforeCompaction=0 -XX:+CMSClassUnloadingEnabled -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+PrintClassHistogram -Dfile.encoding=UTF8 -Dsun.jnu.encoding=UTF8"}

CATALINA_OPTS="\${CATALINA_OPTS}"

JAVA_OPTS="\${M_OPTS} \${C_OPTS} -Xms\${MIN_HEAP} -Xmx\${MAX_HEAP} \${JAVA_OPTS}"

sed -i -e "1a\JAVA_OPTS=\"\$JAVA_OPTS\"" -e "1a\CATALINA_OPTS=\"\$CATALINA_OPTS\"" /opt/tomcat/bin/catalina.sh

cd /opt/tomcat && /opt/tomcat/bin/catalina.sh run >> /opt/tomcat/logs/stdout.log 2>&1

EOF
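Redirection order matters in a command such as the final `catalina.sh run` line of the script. The shell processes redirections from left to right, so `2>&1` only sends stderr to the log file if it comes after the file redirection. The sketch below illustrates this with throwaway functions; all names in it are illustrative.

```shell
# Emit one line on stdout and one on stderr.
emit_both() { echo "to stdout"; echo "to stderr" >&2; }

# "2>&1" first: stderr is pointed at the current stdout (for example the terminal),
# and only afterwards is stdout moved to the file, so the file receives stdout only.
capture_stderr_first() { emit_both 2>&1 >> "$1"; }

# File redirection first: stdout goes to the file, then stderr is pointed at the same
# place, so the file receives both streams.
capture_file_first() { emit_both >> "$1" 2>&1; }
```

Calling `capture_stderr_first a.log` leaves only the stdout line in a.log, whereas `capture_file_first b.log` records both lines.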

Make entrypoint.sh executable

[root@mfyxw50 ~]# ls -l /data/dockerfile/tomcat8/entrypoint.sh

-rw-r--r-- 1 root root 827 Aug 17 23:47 /data/dockerfile/tomcat8/entrypoint.sh

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# chmod u+x /data/dockerfile/tomcat8/entrypoint.sh

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# ls -l /data/dockerfile/tomcat8/entrypoint.sh

-rwxr--r-- 1 root root 827 Aug 17 23:47 /data/dockerfile/tomcat8/entrypoint.sh

(5) Build the Tomcat base image

[root@mfyxw50 ~]# cd /data/dockerfile/tomcat8/

[root@mfyxw50 tomcat8]#

[root@mfyxw50 tomcat8]# docker build . -t harbor.od.com/base/tomcat:v8.5.57

Sending build context to Docker daemon 10.35MB

Step 1/10 : From harbor.od.com/base/jre8:8u112

---> 1237758f0be9

Step 2/10 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone

---> Running in dd43a1973ae6

Removing intermediate container dd43a1973ae6

---> 7d376f75a369

Step 3/10 : ENV CATALINA_HOME /opt/tomcat

---> Running in 5d1a8302488f

Removing intermediate container 5d1a8302488f

---> e6d8a5069f4b

Step 4/10 : ENV LANG zh_CN.UTF-8

---> Running in 9ab19ad646de

Removing intermediate container 9ab19ad646de

---> c61931622aae

Step 5/10 : ADD apache-tomcat-8.5.57/ /opt/tomcat

---> 6953dffb9b11

Step 6/10 : ADD config.yml /opt/prom/config.yml

---> 4d67798f76f5

Step 7/10 : ADD jmx_javaagent-0.3.1.jar /opt/prom/jmx_javaagent-0.3.1.jar

---> 2ff30950c856

Step 8/10 : WORKDIR /opt/tomcat

---> Running in f0692b96c235

Removing intermediate container f0692b96c235

---> 00847c31b601

Step 9/10 : ADD entrypoint.sh /entrypoint.sh

---> 6a44a6205708

Step 10/10 : CMD ["/entrypoint.sh"]

---> Running in d2e6b80af0af

Removing intermediate container d2e6b80af0af

---> c3c4fcdbe8fd

Successfully built c3c4fcdbe8fd

Successfully tagged harbor.od.com/base/tomcat:v8.5.57

(6) Push the Tomcat base image to the private registry

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/base/tomcat:v8.5.57

The push refers to repository [harbor.od.com/base/tomcat]

1adedb0df456: Pushed

c456d7815bdc: Pushed

adbd684689e5: Pushed

fc2e7ea50383: Pushed

1d6b6320a33e: Pushed

c012afdfa38e: Mounted from base/jre8

30934063c5fd: Mounted from base/jre8

0378026a5ac0: Mounted from base/jre8

12ac448620a2: Mounted from base/jre8

78c3079c29e7: Mounted from base/jre8

0690f10a63a5: Mounted from base/jre8

c843b2cf4e12: Mounted from base/jre8

fddd8887b725: Mounted from base/jre8

42052a19230c: Mounted from base/jre8

8d4d1ab5ff74: Mounted from base/jre8

v8.5.57: digest: sha256:83098849296b452d1f4886f9c84db8978c3d8d16b12224f8b76f20ba79abd8d6 size: 3448

4.实战交付tomcat形式的dubbo服务消费者到K8S集群

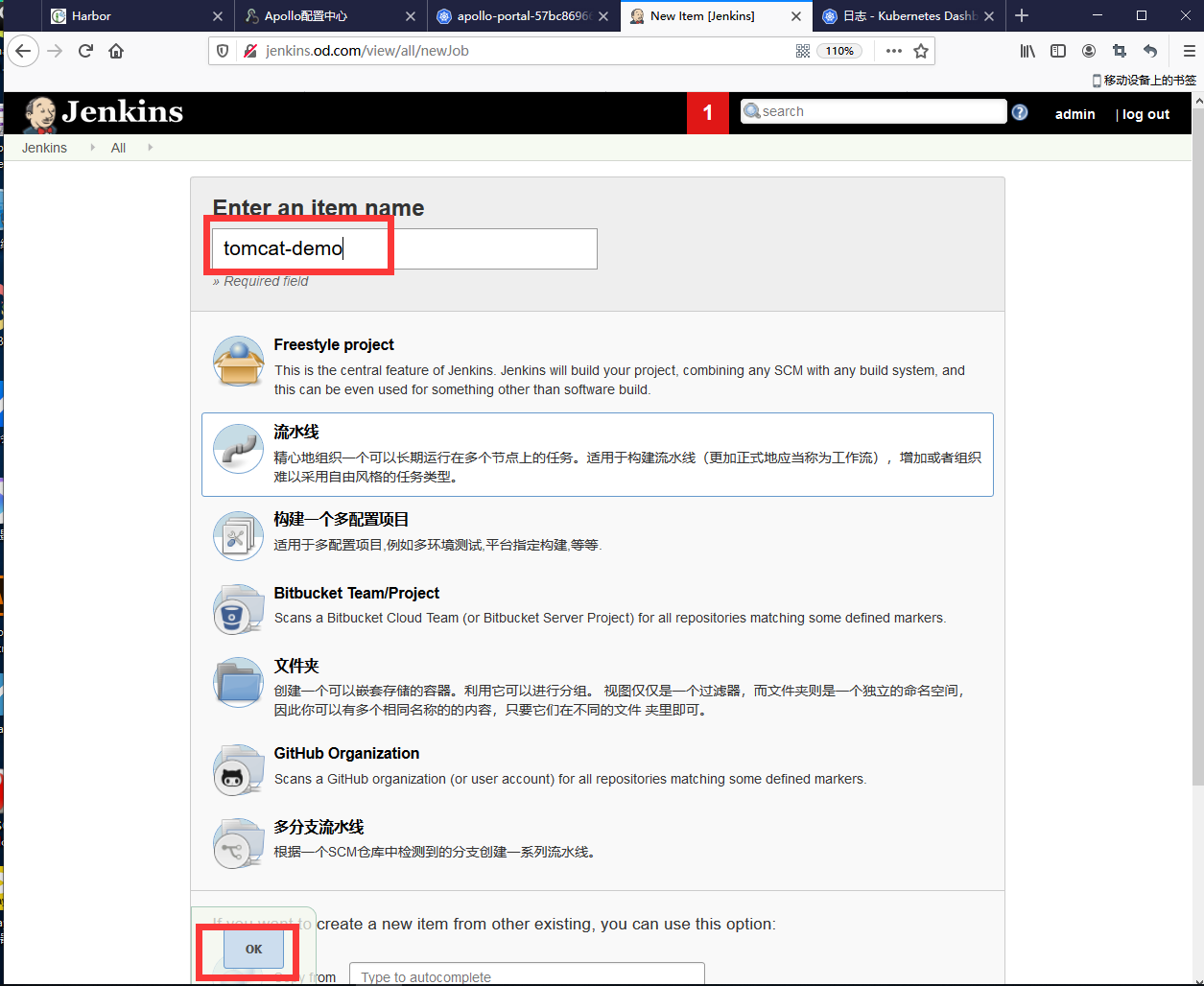

(1)创建Tomcat的jenkins流水线

给予tomcat创建流水线

使用admin用户登录,New Item ----> Create new jobs ----> Enter an item name,输入流水线的名字,比如:tomcat-demo

下面是设置流水线的参数:

-

Discard old builds

Days to keep builds: 3

Max # of builds to keep: 30

-

This project is parameterized

-

Add Parameter ---> String Parameter

Name: app_name

Default value:

Description: project name. e.g: dubbo-demo-web

- [√] Trim the string

-

Add Parameter ---> String Parameter

Name: image_name

Default Value:

Description :project docker image name. e.g: app/dubbo-demo-web

- [√] Trim the string

-

Add Parameter ---> String Parameter

Name: git_repo

Default Value:

Description : project git repository. e.g: git@gitee.com:****/dubbo-demo-web.git

- [√] Trim the string

-

Add Parameter ---> String Parameter

Name: git_ver

Default Value: tomcat

Description : git commit id of the project.

- [√] Trim the string

-

Add Parameter ---> String Parameter

Name: add_tag

Default Value:

Description: project docker image tag, date_timestamp recommended. e.g: 20200818_1508

- [√] Trim the string

-

Add Parameter ---> String Parameter

Name: mvn_dir

Default Value: ./

Description: project maven directory. e.g: ./

- [√] Trim the string

-

Add Parameter ---> String Parameter

Name: target_dir

Default Value: ./dubbo-client/target

Description : the relative path of target file such as .jar or .war package. e.g: ./dubbo-client/target

- [√] Trim the string

-

Add Parameter ---> String Parameter

Name: mvn_cmd

Default Value: mvn clean package -Dmaven.test.skip=true

Description : maven command. e.g: mvn clean package -e -q -Dmaven.test.skip=true

- [√] Trim the string

-

Add Parameter -> Choice Parameter

Name: base_image

Default Value:

- base/tomcat:v8.5.57

- base/tomcat:v9.0.17

- base/tomcat:v7.0.94

Description: project base image list in harbor.od.com.

-

Add Parameter -> Choice Parameter

Name: maven

Default Value:

- 3.6.1-8u232

- 3.2.5-6u025

- 2.2.1-6u025

Description: different maven edition.

-

Add Parameter ---> String Parameter

Name: root_url

Default Value: ROOT

Description: webapp dir.

- [√] Trim the string

-

Pipeline Script

pipeline {

agent any

stages {

stage('pull') { //get project code from repo

steps {

sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"

}

}

stage('build') { //exec mvn cmd

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"

}

}

stage('unzip') { //unzip target/*.war -d target/project_dir

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && unzip *.war -d ./project_dir"

}

}

stage('image') { //build image and push to registry

steps {

writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.od.com/${params.base_image}

ADD ${params.target_dir}/project_dir /opt/tomcat/webapps/${params.root_url}"""

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag}"

}

}

}

}
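The image stage tags the image as `<git_ver>_<add_tag>`, and the add_tag parameter recommends a date_timestamp value. The sketch below shows one way to generate such a value and to preview the resulting image reference before starting a build. `make_add_tag` and `image_ref` are hypothetical helper names used only for illustration.

```shell
# Hypothetical helper: build a date_timestamp tag such as 20200818_1508.
make_add_tag() { date +%Y%m%d_%H%M; }

# Compose the full image reference the same way the pipeline's image stage does.
# Arguments: image_name, git_ver, add_tag
image_ref() { printf 'harbor.od.com/%s:%s_%s\n' "$1" "$2" "$3"; }
```

For example, `image_ref app/dubbo-demo-web tomcat "$(make_add_tag)"` prints the reference that the pipeline would build and push for those parameter values.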

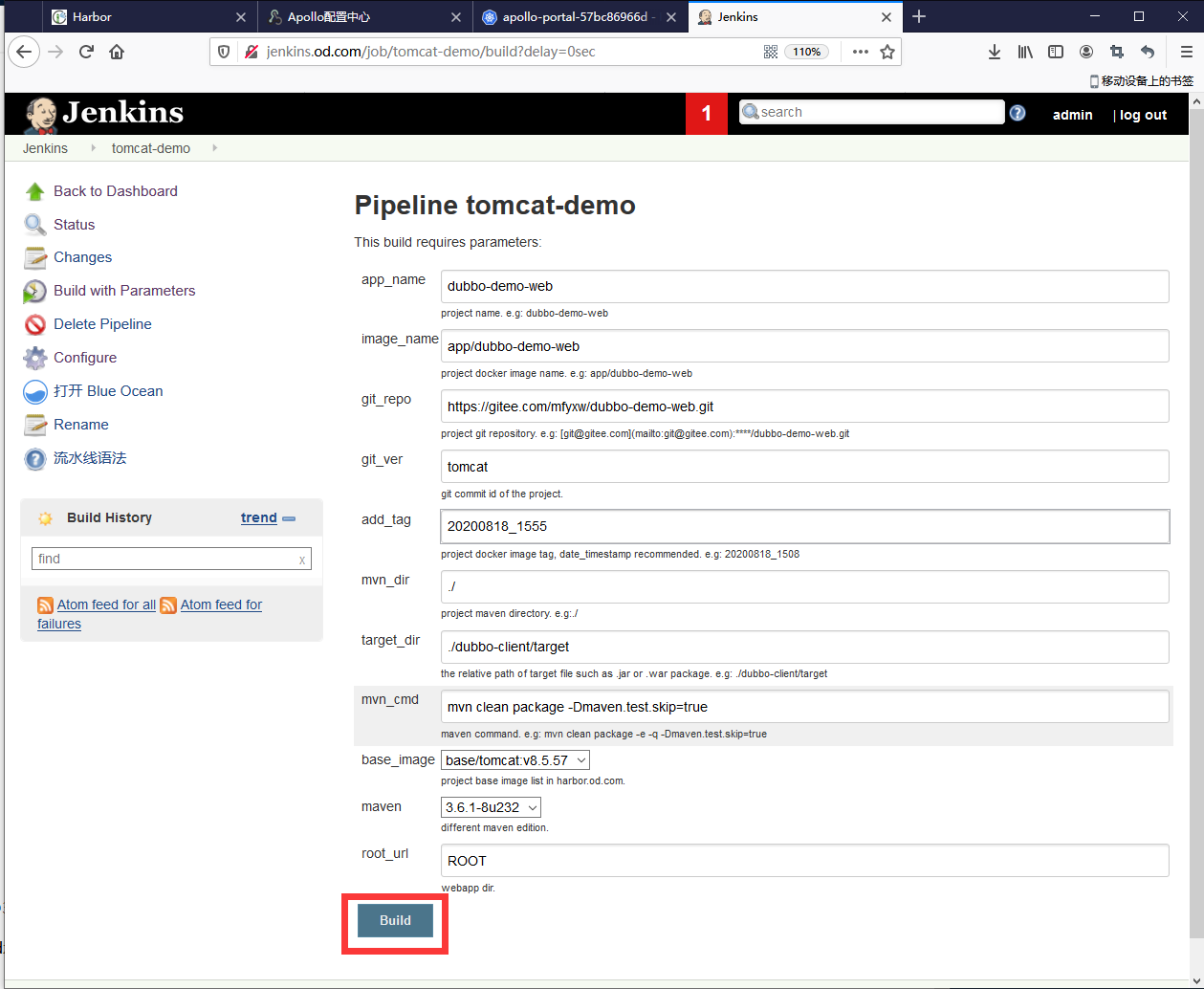

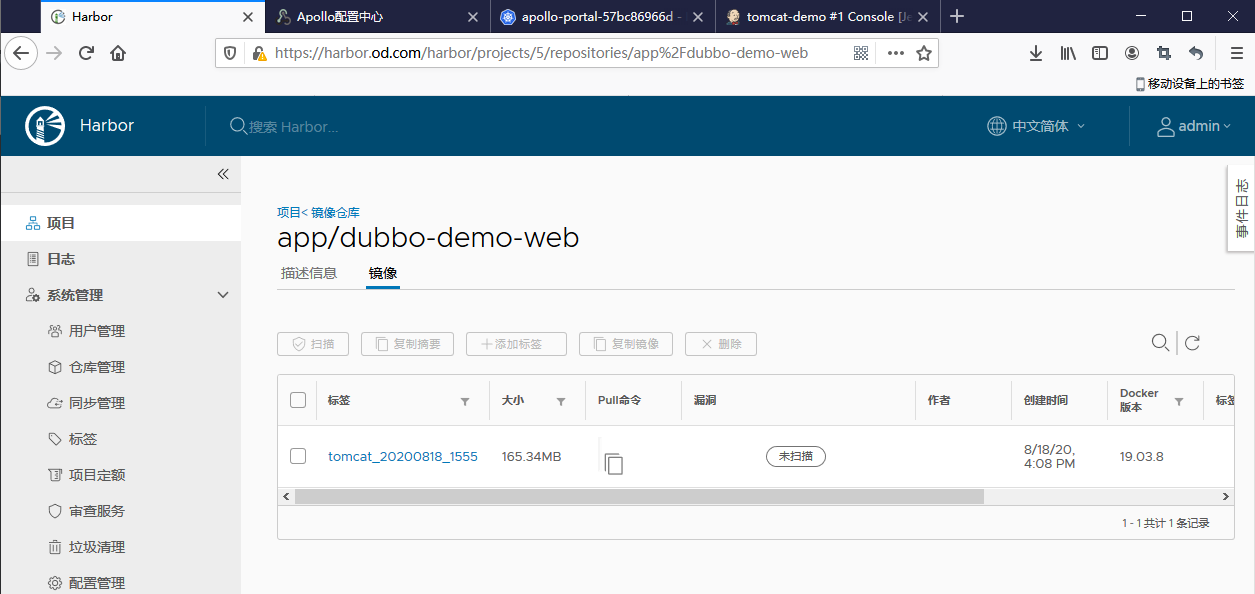

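(2) Build the application image

dubbo-demo-web builds successfully

(3) Log in to harbor.od.com and check that the image was pushed

(4) Using the Apollo test environment as an example

Start from the deployment.yaml of dubbo-demo-consumer in the test environment of the Apollo lab; only deployment.yaml needs to be modified

The deployment.yaml contents are as follows:

Run on the ops host (mfyxw50)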

[root@mfyxw50 ~]# cat > /data/k8s-yaml/test/dubbo-demo-consumer/deployment.yaml << EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: test
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.od.com/app/dubbo-demo-web:tomcat_20200818_1555
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        - name: C_OPTS
          value: -Denv=fat -Dapollo.meta=http://config-test.od.com
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
EOF

First scale the running dubbo-demo-consumer deployment in the Apollo test environment down to 0 replicas

Run on either master node (mfyxw30 or mfyxw40)

[root@mfyxw30 ~]# kubectl get deployment -n test

NAME READY UP-TO-DATE AVAILABLE AGE

apollo-adminservice 1/1 1 1 166m

apollo-configservice 1/1 1 1 168m

dubbo-demo-consumer 1/1 1 1 87m

dubbo-demo-service 1/1 1 1 123m

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl scale --replicas=0 deployment/dubbo-demo-consumer -n test

deployment.extensions/dubbo-demo-consumer scaled

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get deployment -n test

NAME READY UP-TO-DATE AVAILABLE AGE

apollo-adminservice 1/1 1 1 166m

apollo-configservice 1/1 1 1 168m

dubbo-demo-consumer 0/0 0 0 87m

dubbo-demo-service 1/1 1 1 123m

(5) Apply the deployment manifest for dubbo-demo-consumer in the test environment

Run on either master node (mfyxw30 or mfyxw40)

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/test/dubbo-demo-consumer/deployment.yaml

deployment.extensions/dubbo-demo-consumer configured

Scale the dubbo-demo-consumer deployment in the test environment back to 1 replica

[root@mfyxw30 ~]# kubectl get deployment -n test

NAME READY UP-TO-DATE AVAILABLE AGE

apollo-adminservice 1/1 1 1 166m

apollo-configservice 1/1 1 1 168m

dubbo-demo-consumer 0/0 0 0 87m

dubbo-demo-service 1/1 1 1 123m

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl scale --replicas=1 deployment/dubbo-demo-consumer -n test

deployment.extensions/dubbo-demo-consumer scaled

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get deployment -n test

NAME READY UP-TO-DATE AVAILABLE AGE

apollo-adminservice 1/1 1 1 166m

apollo-configservice 1/1 1 1 168m

dubbo-demo-consumer 1/1 1 1 87m

dubbo-demo-service 1/1 1 1 123m
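The scale-down, apply, scale-up sequence can be wrapped in a small function. This is a sketch under stated assumptions: `redeploy` is a hypothetical helper, and the `KUBECTL` variable is a convention of this sketch (kubectl itself does not read it) so that the sequence can be dry-run with `KUBECTL=echo`. The final `rollout status` call waits until the new pod is ready.

```shell
# KUBECTL may be overridden (for example KUBECTL=echo) to print the commands
# instead of running them; this variable is an assumption of the sketch.
KUBECTL=${KUBECTL:-kubectl}

# redeploy NAMESPACE DEPLOYMENT MANIFEST_URL
redeploy() {
    $KUBECTL scale --replicas=0 "deployment/$2" -n "$1" &&
    $KUBECTL apply -f "$3" &&
    $KUBECTL scale --replicas=1 "deployment/$2" -n "$1" &&
    $KUBECTL rollout status "deployment/$2" -n "$1"
}
```

Usage: `redeploy test dubbo-demo-consumer http://k8s-yaml.od.com/test/dubbo-demo-consumer/deployment.yaml`.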

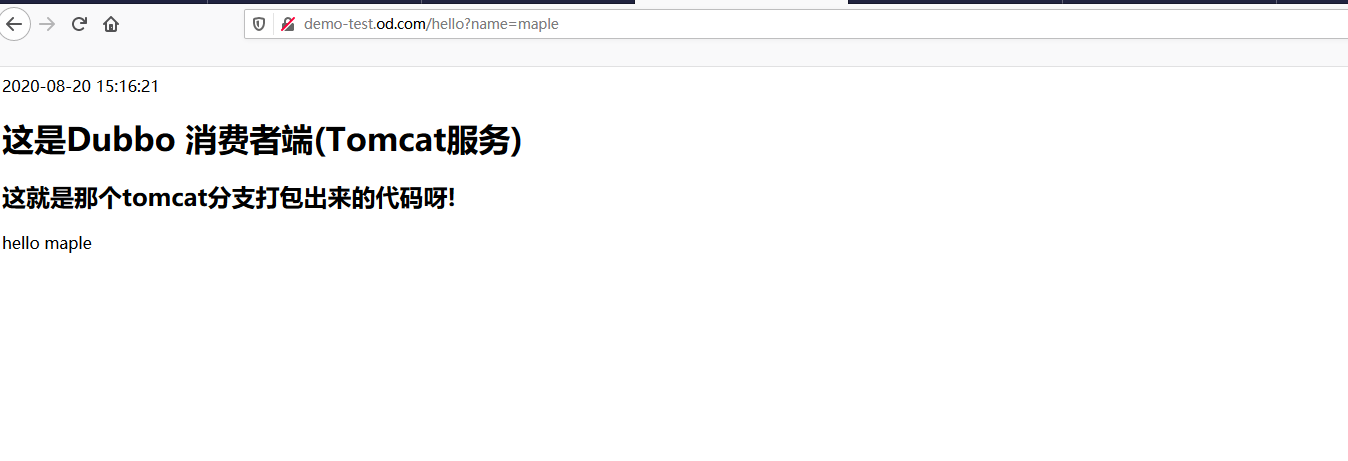

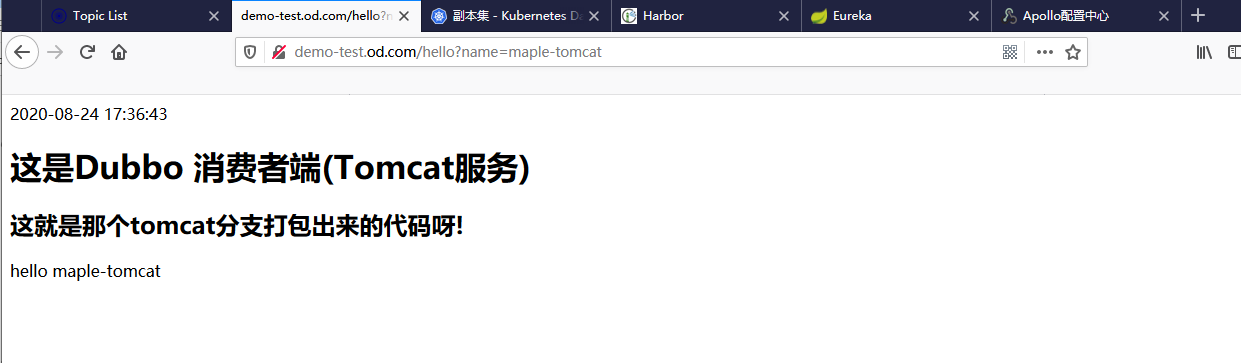

(6) Visit http://demo-test.od.com/hello?name=maple

5. Installing Elasticsearch from the binary tarball

Elasticsearch website: https://www.elastic.co/cn/

Elasticsearch on GitHub: https://github.com/elastic/elasticsearch

Install Elasticsearch on the host mfyxw20.mfyxw.com. Elasticsearch 6.8.6 requires Java JDK 1.8.0 or later

(1) Download elasticsearch-6.8.6, extract it, and create a symlink

[root@mfyxw20 ~]# mkdir -p /opt/src

[root@mfyxw20 ~]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.8.6.tar.gz -O /opt/src/elasticsearch-6.8.6.tar.gz

[root@mfyxw20 ~]# tar xf /opt/src/elasticsearch-6.8.6.tar.gz -C /opt/

[root@mfyxw20 ~]# ln -s /opt/elasticsearch-6.8.6/ /opt/elasticsearch

(2) Create the data and log directories

[root@mfyxw20 ~]# mkdir -p /data/elasticsearch/{data,logs}

(3) Configure Elasticsearch

Edit /opt/elasticsearch/config/elasticsearch.yml and set the corresponding entries to the following values

cluster.name: es.od.com

node.name: mfyxw20.mfyxw.com

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

bootstrap.memory_lock: true

network.host: 192.168.80.20

http.port: 9200

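Edit /opt/elasticsearch/config/jvm.options and change the corresponding entries as follows. Adjust the values to your actual environment; a heap of no more than 32G is recommended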

-Xms512m

-Xmx512m
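As a rough guide for choosing the heap size, a common rule of thumb is to give Elasticsearch about half of the host's RAM while staying below the 32G limit mentioned above. The sketch below encodes that rule; `es_heap_mb` is a hypothetical helper, and the 31 GB cap is an assumed conservative value rather than a figure taken from this setup.

```shell
# es_heap_mb TOTAL_RAM_MB
# Prints a suggested heap size in MB: half of the RAM, capped at 31 GB.
es_heap_mb() {
    half=$(( $1 / 2 ))
    cap=$(( 31 * 1024 ))
    if [ "$half" -gt "$cap" ]; then
        echo "$cap"
    else
        echo "$half"
    fi
}
```

On Linux the total RAM in MB can be read with `awk '/MemTotal/ {print int($2 / 1024)}' /proc/meminfo`; pass that number to `es_heap_mb` and use the result for both -Xms and -Xmx.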

(4) Create an unprivileged user for Elasticsearch so that it can be started under that account

[root@mfyxw20 ~]# useradd -s /bin/bash -M es

[root@mfyxw20 ~]# chown -R es:es /opt/elasticsearch-6.8.6

[root@mfyxw20 ~]# chown -R es:es /data/elasticsearch

(5) Raise the file-descriptor and memory-lock limits for the es user

[root@mfyxw20 ~]# cat > /etc/security/limits.d/es.conf << EOF

es hard nofile 65536

es soft fsize unlimited

es hard memlock unlimited

es soft memlock unlimited

EOF

(6) Adjust kernel parameters

[root@mfyxw20 ~]# sysctl -w vm.max_map_count=262144

vm.max_map_count = 262144

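# Or, to make the setting persistent (append with >> so that existing entries in /etc/sysctl.conf are kept)

[root@mfyxw20 ~]# echo "vm.max_map_count=262144" >> /etc/sysctl.conf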

[root@mfyxw20 ~]# sysctl -p

vm.max_map_count = 262144

[root@mfyxw20 ~]#
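When a setting is written to /etc/sysctl.conf from a script that may run more than once, it helps to append the line only if it is not already present. A minimal sketch, with `ensure_line` as a hypothetical helper name:

```shell
# ensure_line FILE LINE
# Append LINE to FILE only if no identical line exists yet, so repeated runs
# neither overwrite the file nor add duplicates.
ensure_line() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}
```

Usage: `ensure_line /etc/sysctl.conf "vm.max_map_count=262144" && sysctl -p`.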

(7) Start ES

[root@mfyxw20 ~]# su -c "/opt/elasticsearch/bin/elasticsearch -d" es

[root@mfyxw20 ~]#

[root@mfyxw20 ~]# ps aux | grep elasticsearch

es 9492 78.7 40.6 3135340 757876 ? Sl 22:08 0:19 /usr/java/jdk/bin/java -Xms512m -Xmx512m -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.io.tmpdir=/tmp/elasticsearch-605583263416383431 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:logs/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -Des.path.home=/opt/elasticsearch -Des.path.conf=/opt/elasticsearch/config -Des.distribution.flavor=default -Des.distribution.type=tar -cp /opt/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d

es 9515 0.0 0.2 72140 5096 ? Sl 22:08 0:00 /opt/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

root 9622 0.0 0.0 112712 968 pts/0 S+ 22:08 0:00 grep --color=auto elasticsearch

(8) Create a systemd unit so that Elasticsearch starts automatically

[root@mfyxw20 ~]# cat > /etc/systemd/system/es.service << EOF

[Unit]

Description=ElasticSearch

Requires=network.service

After=network.service

[Service]

User=es

Group=es

LimitNOFILE=65536

LimitMEMLOCK=infinity

Environment=JAVA_HOME=/usr/java/jdk

ExecStart=/opt/elasticsearch/bin/elasticsearch

SuccessExitStatus=143

[Install]

WantedBy=multi-user.target

EOF

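(9) Make es.service executable (optional: systemd does not require unit files to be executable)

[root@mfyxw20 ~]# chmod +x /etc/systemd/system/es.service

(10) Enable the service at boot and start it

[root@mfyxw20 ~]# systemctl daemon-reload

[root@mfyxw20 ~]# systemctl enable es

[root@mfyxw20 ~]# systemctl start es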

(11) Adjust the ES index template for logs

[root@mfyxw20 ~]# curl -H "Content-Type:application/json" -XPUT http://192.168.80.20:9200/_template/k8s -d '{

"template" : "k8s*",

"index_patterns": ["k8s*"],

"settings": {

"number_of_shards": 5,

"number_of_replicas": 0

}

}'
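The template body can also be generated from parameters, which is convenient when the shard count differs between environments. This is a sketch: `es_template_json` is a hypothetical helper, and it emits only `index_patterns` and `settings`, on the assumption that the extra `template` key in the request above is kept for backward compatibility and is not required.

```shell
# es_template_json PATTERN SHARDS REPLICAS
# Print an index-template body on a single line.
es_template_json() {
    printf '{"index_patterns":["%s"],"settings":{"number_of_shards":%d,"number_of_replicas":%d}}\n' "$1" "$2" "$3"
}
```

Usage: `curl -H "Content-Type: application/json" -XPUT http://192.168.80.20:9200/_template/k8s -d "$(es_template_json 'k8s*' 5 0)"`, then `curl 'http://192.168.80.20:9200/_template/k8s?pretty'` to read the stored template back.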

6. Installing Kafka

Kafka website: http://kafka.apache.org/

Kafka on GitHub: https://github.com/apache/kafka

Install Kafka on the host mfyxw10

(1) Download kafka-2.2.2, extract it, and create a symlink

[root@mfyxw10 ~]# mkdir -p /opt/src

[root@mfyxw10 ~]#

[root@mfyxw10 ~]# wget https://mirrors.tuna.tsinghua.edu.cn/apache/kafka/2.2.2/kafka_2.12-2.2.2.tgz -O /opt/src/kafka_2.12-2.2.2.tgz

[root@mfyxw10 ~]#

[root@mfyxw10 ~]# tar xf /opt/src/kafka_2.12-2.2.2.tgz -C /opt

[root@mfyxw10 ~]#

[root@mfyxw10 ~]# ln -s /opt/kafka_2.12-2.2.2/ /opt/kafka

(2) Create the Kafka log directory

[root@mfyxw10 ~]# mkdir -p /data/kafka/logs

(3) Edit the Kafka configuration file /opt/kafka/config/server.properties and set the following entries

log.dirs=/data/kafka/logs

zookeeper.connect=localhost:2181

log.flush.interval.messages=10000

log.flush.interval.ms=1000

delete.topic.enable=true

host.name=mfyxw10.mfyxw.com
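These entries can be applied without opening an editor. The sketch below replaces an existing `key=value` line or appends one if the key is missing; `set_prop` is a hypothetical helper, and it only looks at uncommented lines that start with the key.

```shell
# set_prop FILE KEY VALUE
# Replace the first-column "KEY=..." line in FILE, or append "KEY=VALUE"
# when no such line exists.
set_prop() (
    file=$1
    awk -v k="$2" -v v="$3" '
        index($0, k "=") == 1 { print k "=" v; found = 1; next }
        { print }
        END { if (!found) print k "=" v }
    ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
)
```

Usage: `set_prop /opt/kafka/config/server.properties log.dirs /data/kafka/logs`, and likewise for the other keys listed above.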

(4) Enable start at boot

Create the systemd unit file

[root@mfyxw10 ~]# cat > /etc/systemd/system/kafka.service << EOF

[Unit]

Description=Kafka

After=network.target zookeeper.service

[Service]

Type=simple

Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/java/jdk/bin"

User=root

Group=root

ExecStart=/opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

ExecStop=/opt/kafka/bin/kafka-server-stop.sh

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

Start Kafka

[root@mfyxw10 ~]# chmod +x /etc/systemd/system/kafka.service

[root@mfyxw10 ~]#

[root@mfyxw10 ~]# systemctl daemon-reload

[root@mfyxw10 ~]#

[root@mfyxw10 ~]# systemctl enable kafka

[root@mfyxw10 ~]#

[root@mfyxw10 ~]# systemctl start kafka

[root@mfyxw10 ~]#

[root@mfyxw10 ~]# netstat -luntp|grep 9092

tcp6 0 0 192.168.80.10:9092 :::* LISTEN 37926/java

7. Deploying kafka-manager

kafka-manager on GitHub: https://github.com/yahoo/CMAK

Run on the ops host (mfyxw50.mfyxw.com)

(1) Create the directory that holds the kafka-manager Dockerfile

[root@mfyxw50 ~]# mkdir -p /data/dockerfile/kafka-manager

(2) Prepare the Dockerfile

Note: CMAK 3.0.0.4 and later require ZooKeeper 3.5.x or later; otherwise, saving a newly added cluster fails with the following error:

Yikes! KeeperErrorCode = Unimplemented for /kafka-manager/mutex Try again.

[root@mfyxw50 ~]# cat > /data/dockerfile/kafka-manager/Dockerfile << EOF

FROM hseeberger/scala-sbt:11.0.2-oraclelinux7_1.3.13_2.13.3

ENV ZK_HOSTS=192.168.80.10:2181 KM_VERSION=3.0.0.5

RUN yum -y install wget unzip && mkdir -p /tmp && cd /tmp && wget https://github.com/yahoo/kafka-manager/archive/\${KM_VERSION}.tar.gz && tar xzf \${KM_VERSION}.tar.gz && cd /tmp/CMAK-\${KM_VERSION} && sbt clean dist && unzip -d / /tmp/CMAK-\${KM_VERSION}/target/universal/cmak-\${KM_VERSION} && rm -fr /tmp/\${KM_VERSION} /tmp/CMAK-\${KM_VERSION}

WORKDIR /cmak-\${KM_VERSION}

EXPOSE 9000

ENTRYPOINT ["./bin/cmak","-Dconfig.file=conf/application.conf"]

EOF

(2) Build the kafka-manager Docker image

[root@mfyxw50 ~]# cd /data/dockerfile/kafka-manager/

[root@mfyxw50 kafka-manager]# docker build . -t harbor.od.com/infra/kafka-manager:v3.0.0.5

[root@mfyxw50 ~]# docker login harbor.od.com

[root@mfyxw50 ~]# docker push harbor.od.com/infra/kafka-manager:v3.0.0.5

(3) Prepare the kafka-manager resource manifests

Create the directory that holds the kafka-manager YAML files

[root@mfyxw50 ~]# mkdir -p /data/k8s-yaml/kafka-manager

Create the resource manifests

The deployment.yaml contents are as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/kafka-manager/deployment.yaml << EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: kafka-manager
  namespace: infra
  labels:
    name: kafka-manager
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kafka-manager
  template:
    metadata:
      labels:
        app: kafka-manager
        name: kafka-manager
    spec:
      containers:
      - name: kafka-manager
        image: harbor.od.com/infra/kafka-manager:v3.0.0.5
        ports:
        - containerPort: 9000
          protocol: TCP
        env:
        - name: ZK_HOSTS
          value: zk1.od.com:2181
        - name: APPLICATION_SECRET
          value: letmein
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
EOF

The service.yaml contents are as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/kafka-manager/service.yaml << EOF
kind: Service
apiVersion: v1
metadata:
  name: kafka-manager
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 9000
    targetPort: 9000
  selector:
    app: kafka-manager
  clusterIP: None
  type: ClusterIP
  sessionAffinity: None
EOF

The Ingress.yaml contents are as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/kafka-manager/Ingress.yaml << EOF
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: kafka-manager
  namespace: infra
spec:
  rules:
  - host: km.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kafka-manager
          servicePort: 9000
EOF

(4) Apply the kafka-manager resource manifests

Run on either master node (mfyxw30 or mfyxw40)

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/deployment.yaml

deployment.extensions/kafka-manager created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/service.yaml

service/kafka-manager created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/Ingress.yaml

ingress.extensions/kafka-manager created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get pod -n infra | grep kafka

kafka-manager-69b7585d95-w7ch5 1/1 Running 0 80s

(5) Add the DNS record

Run on the host mfyxw10

[root@mfyxw10 ~]# cat > /var/named/od.com.zone << EOF

\$ORIGIN od.com.

\$TTL 600 ; 10 minutes

@               IN SOA  dns.od.com. dnsadmin.od.com. (
                                ; increment the serial by 1 so that this version is newer than the previous one
                                2020031317 ; serial
                                10800      ; refresh (3 hours)
                                900        ; retry (15 minutes)
                                604800     ; expire (1 week)
                                86400      ; minimum (1 day)
                                )
                                NS   dns.od.com.

\$TTL 60 ; 1 minute

dns A 192.168.80.10

harbor A 192.168.80.50 ; harbor record

k8s-yaml A 192.168.80.50

traefik A 192.168.80.100

dashboard A 192.168.80.100

zk1 A 192.168.80.10

zk2 A 192.168.80.20

zk3 A 192.168.80.30

jenkins A 192.168.80.100

dubbo-monitor A 192.168.80.100

demo A 192.168.80.100

mysql A 192.168.80.10

config A 192.168.80.100

portal A 192.168.80.100

zk-test A 192.168.80.10

zk-prod A 192.168.80.20

config-test A 192.168.80.100

config-prod A 192.168.80.100

demo-test A 192.168.80.100

demo-prod A 192.168.80.100

blackbox A 192.168.80.100

prometheus A 192.168.80.100

grafana A 192.168.80.100

km A 192.168.80.100

EOF

Restart the DNS service

[root@mfyxw10 ~]# systemctl restart named

Test name resolution

[root@mfyxw10 ~]# dig -t A km.od.com @192.168.80.10 +short

192.168.80.100
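Each time a record is added, the zone serial has to be increased so that the new version counts as newer. A sketch of automating that step follows; `bump_zone_serial` is a hypothetical helper, and it assumes the serial sits on a line that carries a `; serial` comment, as in the zone file above.

```shell
# bump_zone_serial FILE
# Add 1 to the first number found on the first line tagged "; serial".
bump_zone_serial() (
    file=$1
    awk '
        /; *serial/ && !bumped {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^[0-9]+$/) {
                    sub($i, sprintf("%d", $i + 1))
                    bumped = 1
                    break
                }
            }
        }
        { print }
    ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
)
```

Usage: `bump_zone_serial /var/named/od.com.zone && systemctl restart named`.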

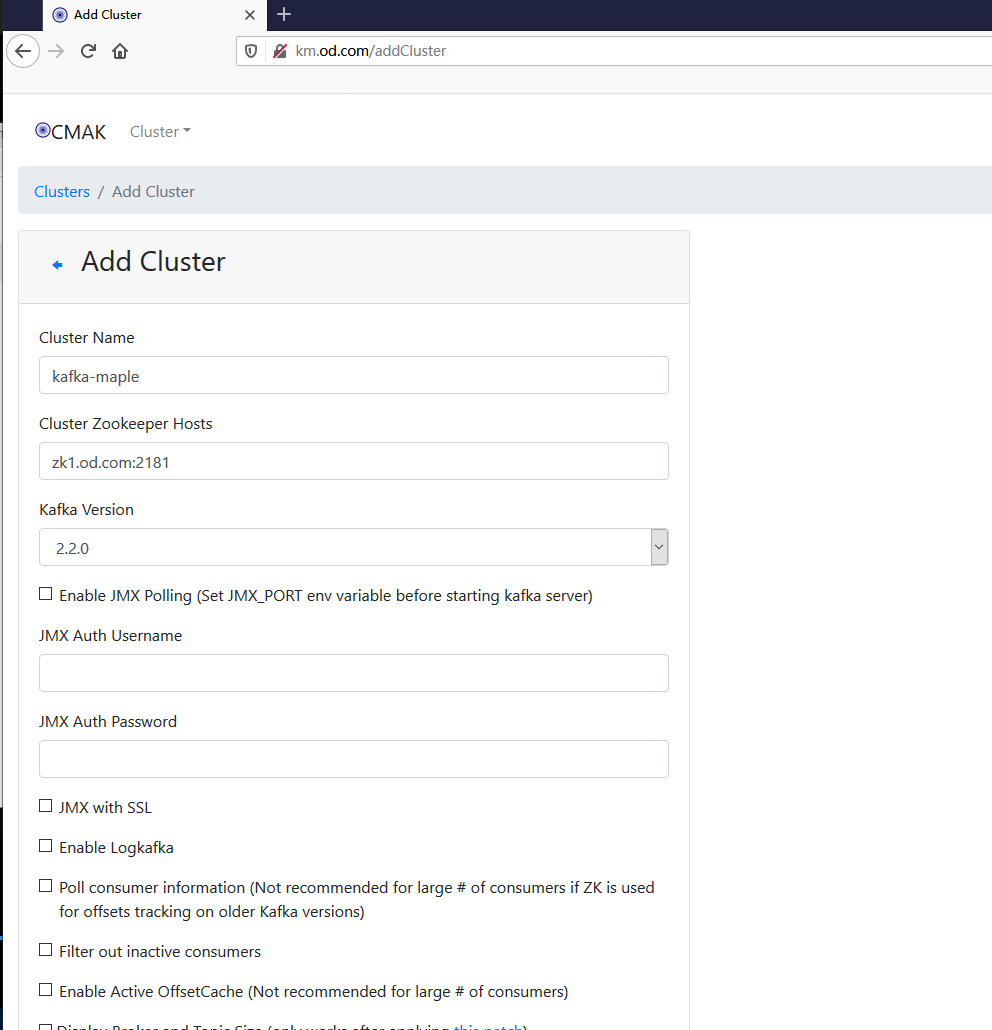

(6) Add a cluster in kafka-manager (CMAK)

Fill in Cluster Name and Cluster Zookeeper Hosts and select the matching Kafka Version; set brokerViewThreadPoolSize, offsetCacheThreadPoolSize, and kafkaAdminClientThreadPoolSize to 2; leave everything else at its default

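8. Building the Filebeat base image and attaching it to the Dubbo service consumer

Filebeat official download page: https://www.elastic.co/cn/downloads/beats/filebeat

(1) Build the Docker image

Create the directory that holds the Filebeat Dockerfile

[root@mfyxw50 src]# mkdir -p /data/dockerfile/filebeat

Prepare the Dockerfile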

[root@mfyxw50 ~]# cat > /data/dockerfile/filebeat/Dockerfile << EOF

FROM debian:jessie

ENV FILEBEAT_VERSION=7.5.1 \

FILEBEAT_SHA1=daf1a5e905c415daf68a8192a069f913a1d48e2c79e270da118385ba12a93aaa91bda4953c3402a6f0abf1c177f7bcc916a70bcac41977f69a6566565a8fae9c

RUN set -x && \

apt-get update && \

apt-get install -y wget && \

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-\${FILEBEAT_VERSION}-linux-x86_64.tar.gz -O /opt/filebeat.tar.gz && \

cd /opt && \

echo "\${FILEBEAT_SHA1} filebeat.tar.gz" | sha512sum -c - && \

tar xzvf filebeat.tar.gz && \

cd filebeat-* && \

cp filebeat /bin && \

cd /opt && \

rm -rf filebeat* && \

apt-get purge -y wget && \

apt-get autoremove -y && \

apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

COPY docker-entrypoint.sh /

ENTRYPOINT ["/docker-entrypoint.sh"]

EOF

Prepare the docker-entrypoint.sh file

[root@mfyxw50 ~]# cat > /data/dockerfile/filebeat/docker-entrypoint.sh << EOFF
#!/bin/bash

ENV=\${ENV:-"test"}
PROJ_NAME=\${PROJ_NAME:-"no-define"}
MULTILINE=\${MULTILINE:-"^\d{2}"}

cat > /etc/filebeat.yaml << EOF
filebeat.inputs:
- type: log
  fields_under_root: true
  fields:
    topic: logm-\${PROJ_NAME}
  paths:
    - /logm/*.log
    - /logm/*/*.log
    - /logm/*/*/*.log
    - /logm/*/*/*/*.log
    - /logm/*/*/*/*/*.log
  scan_frequency: 120s
  max_bytes: 10485760
  multiline.pattern: '\$MULTILINE'
  multiline.negate: true
  multiline.match: after
  multiline.max_lines: 100
- type: log
  fields_under_root: true
  fields:
    topic: logu-\${PROJ_NAME}
  paths:
    - /logu/*.log
    - /logu/*/*.log
    - /logu/*/*/*.log
    - /logu/*/*/*/*.log
    - /logu/*/*/*/*/*.log
    - /logu/*/*/*/*/*/*.log
output.kafka:
  hosts: ["192.168.80.10:9092"]
  topic: k8s-fb-\$ENV-%{[topic]}
  version: 2.0.0
  required_acks: 0
  max_message_bytes: 10485760
EOF

set -xe

# If the user does not provide a command, run filebeat
if [[ "\$1" == "" ]]; then
    exec filebeat -c /etc/filebeat.yaml
else
    # Otherwise let the user run an arbitrary command such as bash
    exec "\$@"
fi
EOFF
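The multiline settings in the first input (`multiline.negate: true`, `multiline.match: after`, default pattern `^\d{2}`) mean that a line starting with two digits opens a new event and every other line is appended to the event before it. That keeps a Java stack trace attached to the log line that produced it. The sketch below imitates this grouping with awk purely as an illustration; `group_multiline` is a hypothetical name, `[0-9][0-9]` stands in for `\d{2}`, and Filebeat's own implementation is not shown here.

```shell
# group_multiline: read log lines on stdin and print one event per output line.
# A line starting with two digits starts a new event; any other line is appended
# to the current event, joined with a literal "\n" marker.
group_multiline() {
    awk '
        /^[0-9][0-9]/ { if (have) print event; event = $0; have = 1; next }
        { if (have) event = event "\\n" $0; else { event = $0; have = 1 } }
        END { if (have) print event }
    '
}
```

Feeding it an ERROR line followed by two stack-trace lines and then an INFO line yields two events: the first contains the ERROR line together with its stack trace, the second is the INFO line.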

Make docker-entrypoint.sh executable

[root@mfyxw50 ~]# chmod +x /data/dockerfile/filebeat/docker-entrypoint.sh

(2) Build the Filebeat image

[root@mfyxw50 ~]# cd /data/dockerfile/filebeat/

[root@mfyxw50 filebeat]# docker build . -t harbor.od.com/infra/filebeat:v7.5.1

(3) Push the Filebeat image to the private registry

[root@mfyxw50 ~]# docker login harbor.od.com

[root@mfyxw50 ~]# docker push harbor.od.com/infra/filebeat:v7.5.1

The push refers to repository [harbor.od.com/infra/filebeat]

8e2236b85988: Pushed

c2d8da074e58: Pushed

a126e19b0447: Pushed

v7.5.1: digest: sha256:cee0803ee83a326663b50839ac63981985b672b4579625beca5f2bc1182df4c1 size: 948

(4) Modify the Tomcat Deployment manifest (deployment.yaml) of dubbo-demo-consumer

The modified deployment.yaml for Tomcat is as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/test/dubbo-demo-consumer/deployment.yaml << EOF
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: test
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.od.com/app/dubbo-demo-web:tomcat_20200818_1555
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: C_OPTS
          value: -Denv=fat -Dapollo.meta=http://config-test.od.com
        volumeMounts:
        - mountPath: /opt/tomcat/logs
          name: logm
      - name: filebeat
        image: harbor.od.com/infra/filebeat:v7.5.1
        imagePullPolicy: IfNotPresent
        env:
        - name: ENV
          value: test
        - name: PROJ_NAME
          value: dubbo-demo-web
        volumeMounts:
        - mountPath: /logm
          name: logm
      volumes:
      - emptyDir: {}
        name: logm
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
EOF

Apply the modified manifest. Before applying it, remove the existing deployment (and its pod) with kubectl delete -f

Run on either master node (mfyxw30 or mfyxw40)

[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/test/dubbo-demo-consumer/deployment.yaml

deployment.extensions "dubbo-demo-consumer" deleted

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/test/dubbo-demo-consumer/deployment.yaml

deployment.extensions/dubbo-demo-consumer created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

apollo-adminservice-5cccf97c64-6448z 1/1 Running 6 5d3h

apollo-configservice-5f6555448-dnrts 1/1 Running 6 5d3h

dubbo-demo-consumer-7488576d88-ghlt5 2/2 Running 0 49s

dubbo-demo-service-cc6b9d8c7-wfkgh 1/1 Running 0 6s

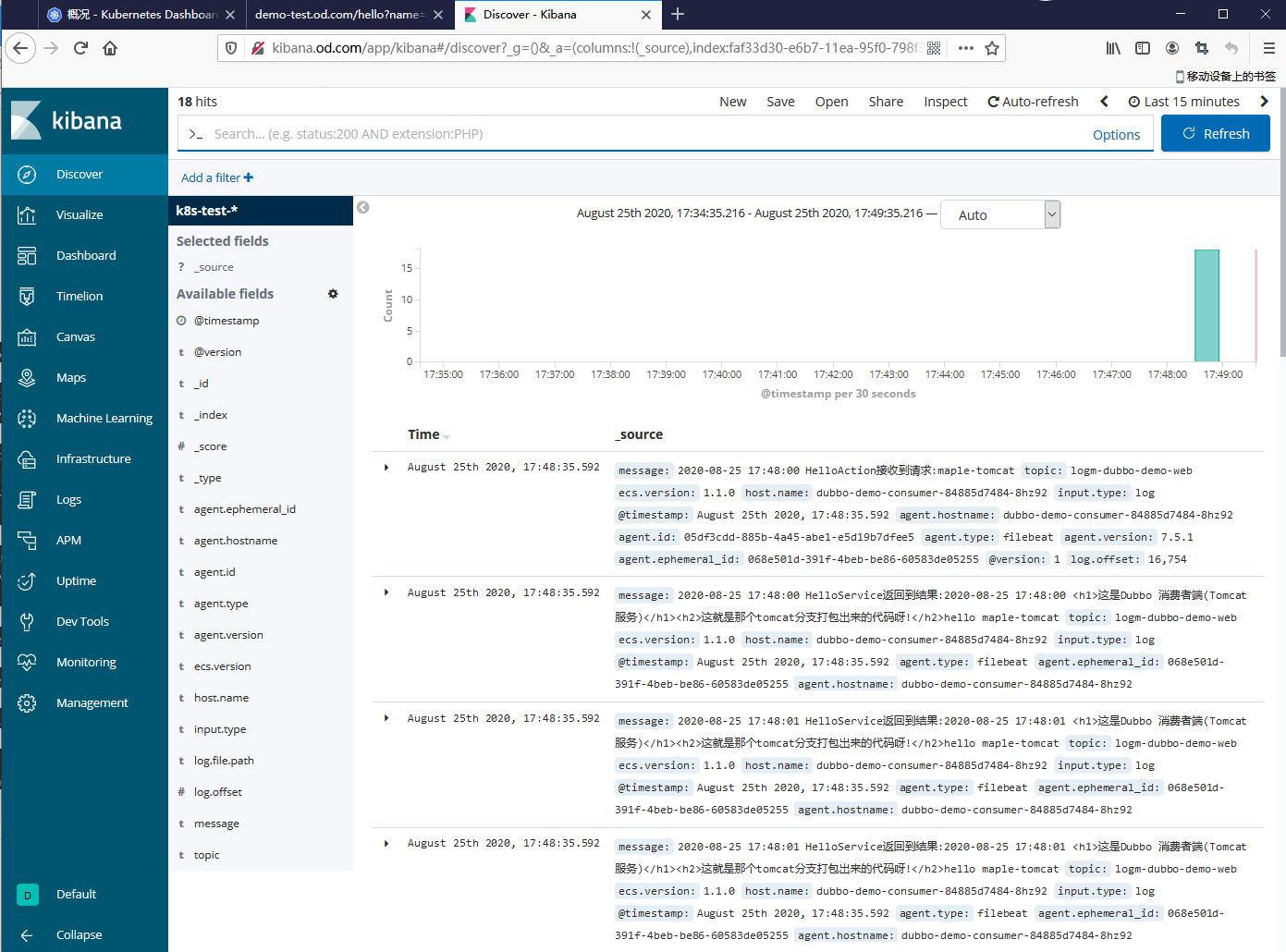

(5) Visit http://demo-test.od.com/hello?name=maple-tomcat in a browser

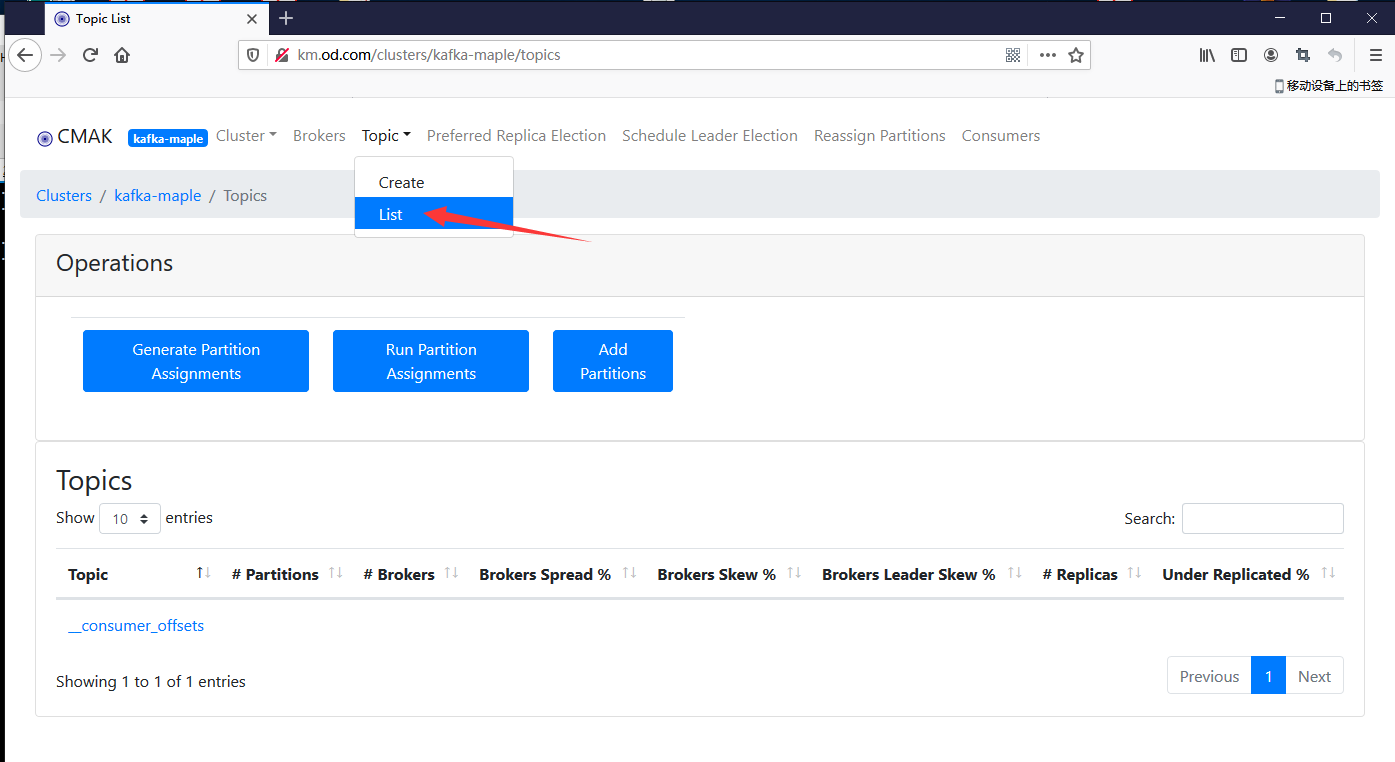

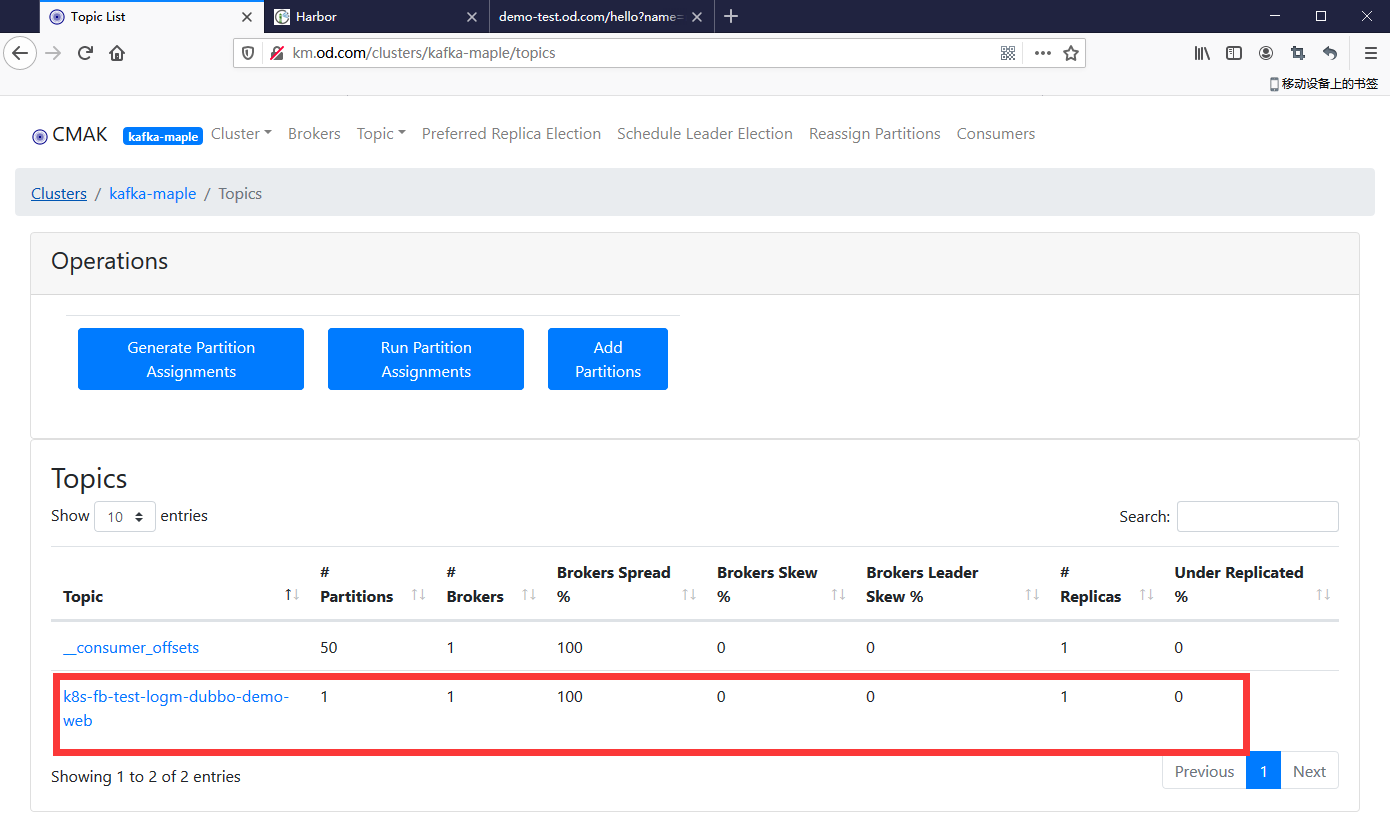

(6) Open kafka-manager in a browser

9. Deploying the Logstash image

Logstash page: https://www.elastic.co/cn/logstash

Run on the ops host mfyxw50

(1) Pull the logstash:6.8.6 image

[root@mfyxw50 ~]# docker pull logstash:6.8.6

6.8.6: Pulling from library/logstash

ab5ef0e58194: Pull complete

c2bdff85c0ef: Pull complete

ea4021eabbe3: Pull complete

04880b09f62d: Pull complete

495c57fa2867: Pull complete

b1226a129846: Pull complete

a368341d0685: Pull complete

93bfc3cf8fb8: Pull complete

89333ddd001c: Pull complete

289ecf0bfa6d: Pull complete

b388674055dd: Pull complete

Digest: sha256:0ae81d624d8791c37c8919453fb3efe144ae665ad921222da97bc761d2a002fe

Status: Downloaded newer image for logstash:6.8.6

docker.io/library/logstash:6.8.6

(2) Retag the Logstash image and push it to the private registry

[root@mfyxw50 ~]# docker tag docker.io/library/logstash:6.8.6 harbor.od.com/infra/logstash:6.8.6

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/infra/logstash:6.8.6

The push refers to repository [harbor.od.com/infra/logstash]

666f2e4c4af9: Pushed

5c6cd1f13da3: Pushed

d6dd7f93ab29: Pushed

30dad013eb8c: Pushed

ee5cfcf3cf84: Pushed

8c3a9cdf8d67: Pushed

34f741ce8747: Pushed

4ba9ea58780c: Pushed

f2528af7ad89: Pushed

c1e731026d5a: Pushed

77b174a6a187: Pushed

6.8.6: digest: sha256:0ed0f4605e6848b9ae2df7edf6092abf475c4cdf0f591e00d5e15e4b1e5e1961 size: 2824

(3) Create the custom image files

Create the directory that holds the Logstash Dockerfile

[root@mfyxw50 ~]# mkdir -p /data/dockerfile/logstash

Custom Dockerfile

[root@mfyxw50 ~]# cat > /data/dockerfile/logstash/dockerfile << EOF

From harbor.od.com/infra/logstash:6.8.6

ADD logstash.yml /usr/share/logstash/config

EOF

Custom logstash.yml file

[root@mfyxw50 ~]# cat > /data/dockerfile/logstash/logstash.yml << EOF

http.host: "0.0.0.0"

path.config: /etc/logstash

xpack.monitoring.enabled: false

EOF

(4) Build the custom image

[root@mfyxw50 ~]# cd /data/dockerfile/logstash/

[root@mfyxw50 logstash]#

[root@mfyxw50 logstash]# docker build . -t harbor.od.com/infra/logstash:v6.8.6

Sending build context to Docker daemon 3.072kB

Step 1/2 : From harbor.od.com/infra/logstash:6.8.6

---> d0a2dac51fcb

Step 2/2 : ADD logstash.yml /usr/share/logstash/config

---> 3deaf4ee882c

Successfully built 3deaf4ee882c

Successfully tagged harbor.od.com/infra/logstash:v6.8.6

(5) Push the custom image to the private registry

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/infra/logstash:v6.8.6

The push refers to repository [harbor.od.com/infra/logstash]

b111e0ee0d68: Pushed

666f2e4c4af9: Layer already exists

5c6cd1f13da3: Layer already exists

d6dd7f93ab29: Layer already exists

30dad013eb8c: Layer already exists

ee5cfcf3cf84: Layer already exists

8c3a9cdf8d67: Layer already exists

34f741ce8747: Layer already exists

4ba9ea58780c: Layer already exists

f2528af7ad89: Layer already exists

c1e731026d5a: Layer already exists

77b174a6a187: Layer already exists

v6.8.6: digest: sha256:4cfa2ca033aa577dfd77c5ed79cfdf73950137cd8c2d01e52befe4cb6da208a5 size: 3031

(6) Start a container from the custom Logstash image

Create a directory to hold the Logstash configuration files

[root@mfyxw50 ~]# mkdir -p /etc/logstash

Create the Logstash pipeline configuration file

[root@mfyxw50 ~]# cat > /etc/logstash/logstash-test.conf << EOF

input {
  kafka {
    bootstrap_servers => "192.168.80.10:9092"
    client_id => "192.168.80.50"
    consumer_threads => 4
    group_id => "k8s_test"
    topics_pattern => "k8s-fb-test-.*"
  }
}

filter {
  json {
    source => "message"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.80.20:9200"]
    index => "k8s-test-%{+YYYY.MM}"
  }
}

EOF
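The `topics_pattern` setting subscribes this pipeline to every Kafka topic whose full name matches the regular expression. The sketch below is a rough way to preview which topic names a pattern would select. It assumes that Filebeat names its topics `k8s-fb-<ENV>-<PROJ_NAME>` (inferred from the pattern, not shown in this section), and `topic_matches` is a hypothetical helper. `grep -E` with anchors only approximates the Java regex matching done by the Kafka consumer.

```shell
#!/bin/sh
# topic_matches PATTERN TOPIC
# Succeeds when TOPIC matches PATTERN as a whole, similar to how the
# Kafka consumer applies topics_pattern to complete topic names.
topic_matches() {
  printf '%s\n' "$2" | grep -Eq "^(${1})\$"
}

# Preview a few candidate topic names (the names are assumptions).
for topic in k8s-fb-test-dubbo-demo-web k8s-fb-prod-dubbo-demo-web; do
  if topic_matches 'k8s-fb-test-.*' "$topic"; then
    echo "match:    $topic"
  else
    echo "no match: $topic"
  fi
done
```

Because each environment uses its own prefix, one pipeline per environment keeps test and production logs in separate indices.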

Start the Logstash container

[root@mfyxw50 ~]# docker run -d --restart=always --name logstash-test -v /etc/logstash:/etc/logstash harbor.od.com/infra/logstash:v6.8.6 -f /etc/logstash/logstash-test.conf

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker ps -a|grep logstash

de684cbfe00c harbor.od.com/infra/logstash:v6.8.6 "/usr/local/bin/dock…" 28 seconds ago Up 27 seconds 5044/tcp, 9600/tcp logstash-test

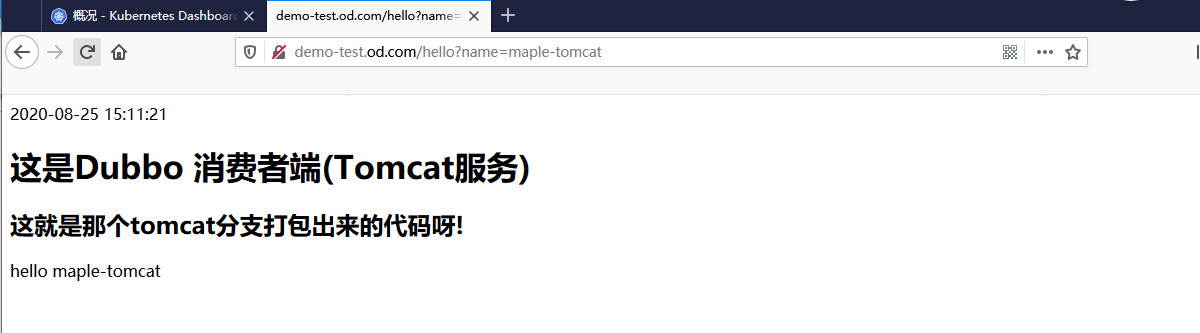

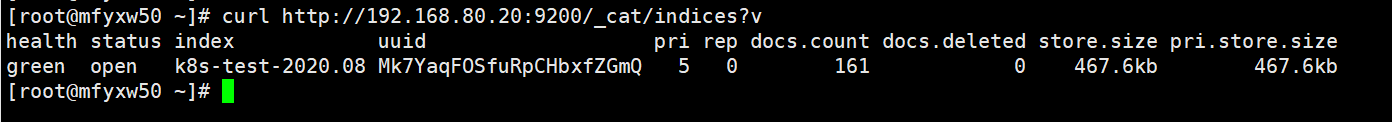

(7) Visit http://demo-test.od.com/hello?name=maple-tomcat again and refresh several times to generate more log entries

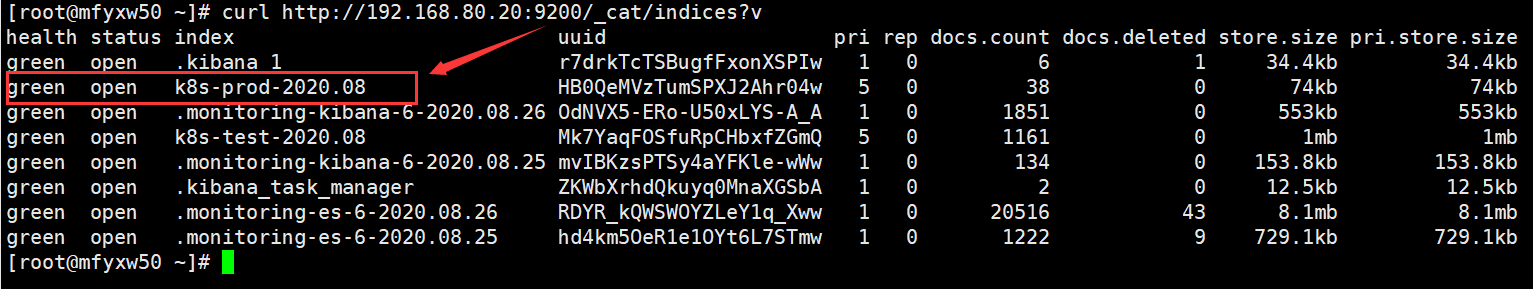

(8) Verify the indices in ElasticSearch

Run: curl http://192.168.80.20:9200/_cat/indices?v

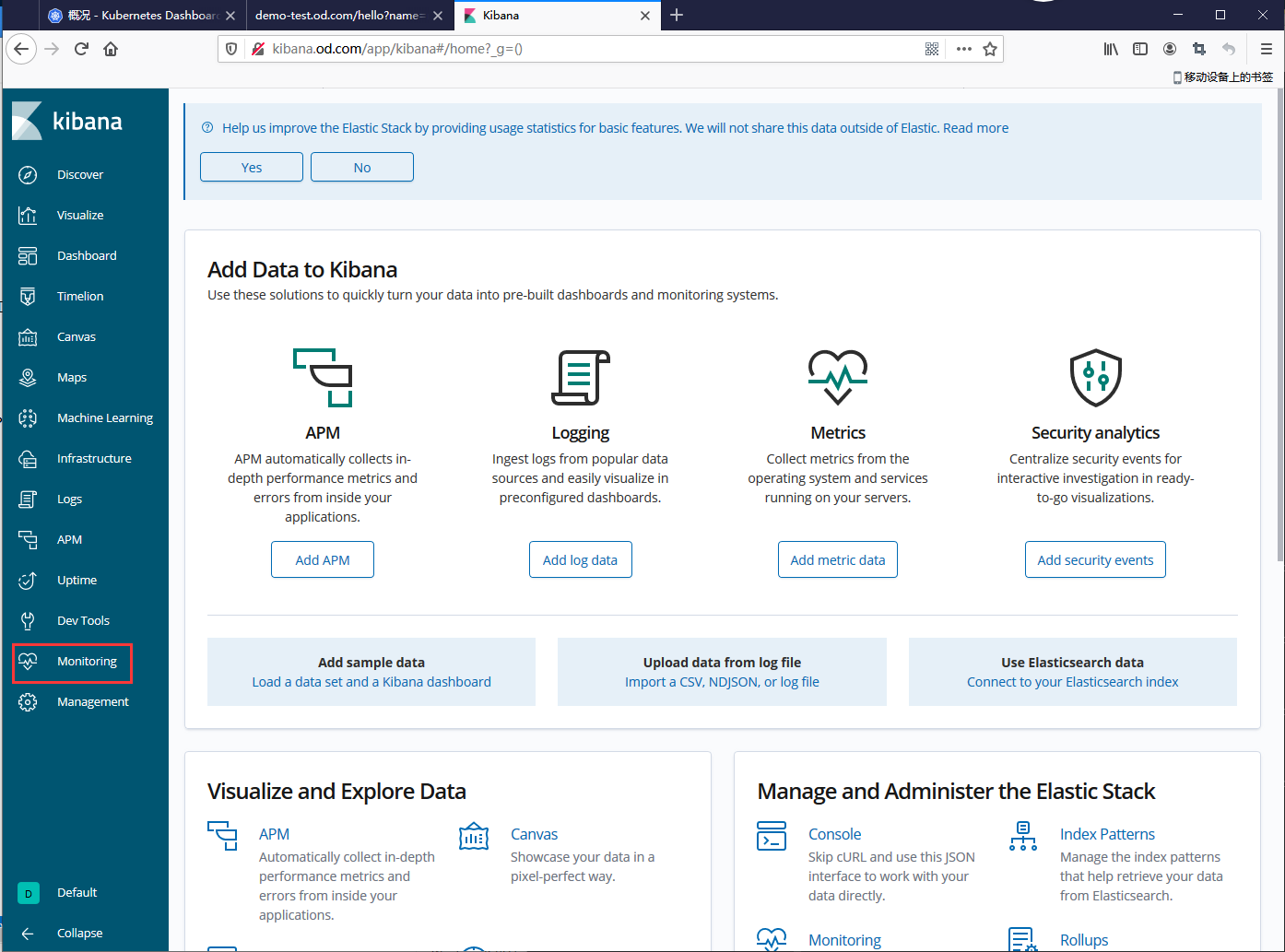

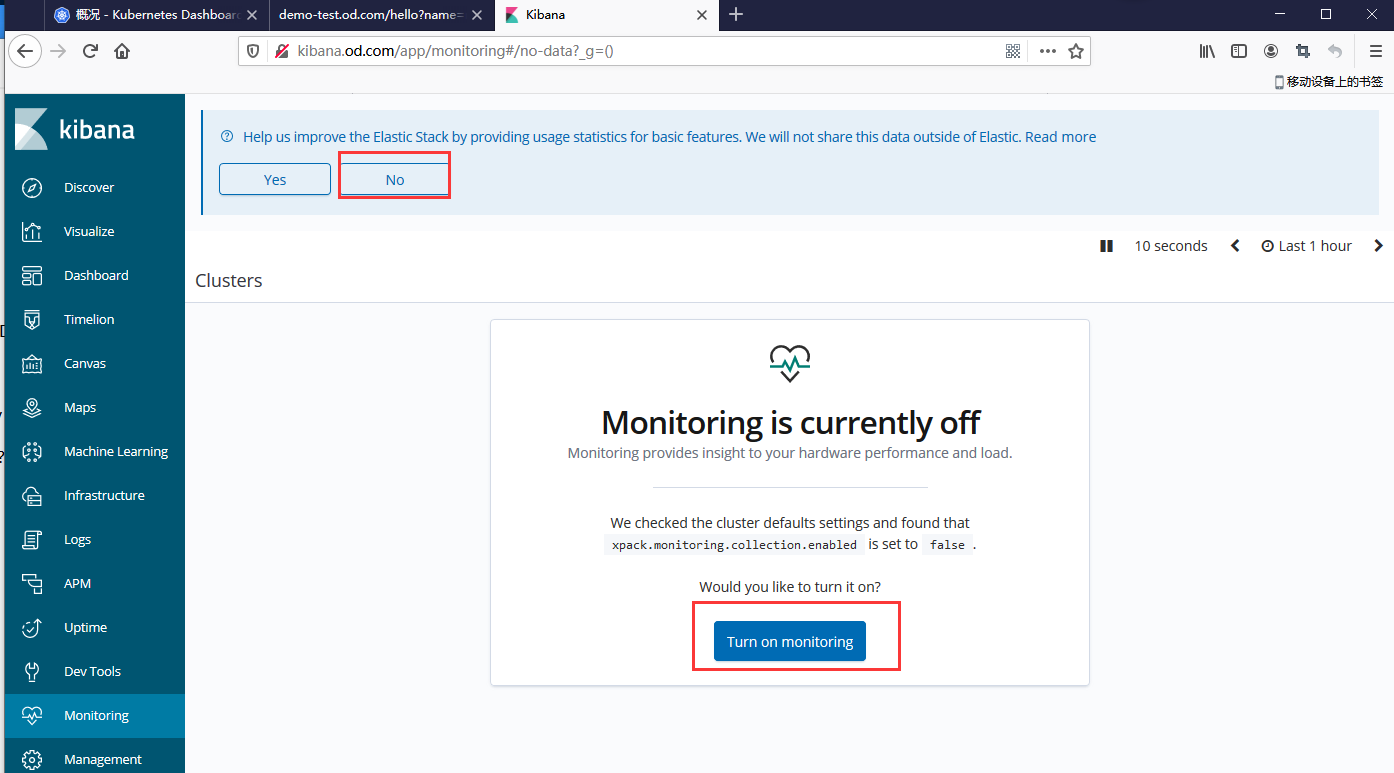
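The `index => "k8s-test-%{+YYYY.MM}"` setting makes Logstash write to one index per month, derived from each event's `@timestamp` in UTC. A small sketch, assuming GNU `date`, that computes the index name to look for in the `_cat/indices` output; `expected_index` is a hypothetical helper.

```shell
#!/bin/sh
# expected_index PREFIX [DATE]
# Prints the monthly index name Logstash would use for events stamped on
# DATE (default: now), in the form PREFIX-YYYY.MM. Requires GNU date for -d.
expected_index() {
  printf '%s-%s\n' "$1" "$(date -u -d "${2:-now}" +%Y.%m)"
}

# Usage (not run here, needs access to Elasticsearch):
#   curl -s 'http://192.168.80.20:9200/_cat/indices?v' | grep "$(expected_index k8s-test)"
```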

10. Deliver Kibana to the K8S cluster

Run on the ops host (mfyxw50)

(1) Pull the kibana 6.8.6 image

Official Kibana image on Docker Hub: https://hub.docker.com/_/kibana?tab=tags

[root@mfyxw50 ~]# docker pull kibana:6.8.6

6.8.6: Pulling from library/kibana

ab5ef0e58194: Already exists

40057de213d2: Pull complete

434b62b0636c: Pull complete

7370972a467b: Pull complete

7e1767103506: Pull complete

83c66d66f930: Pull complete

590781aeb7f4: Pull complete

f1efa205edea: Pull complete

Digest: sha256:f773d11d6a4304d1795d63b6877cf7e21bbcb4703d57444325f0a906163a408c

Status: Downloaded newer image for kibana:6.8.6

docker.io/library/kibana:6.8.6

(2) Tag the image and push it to the private registry

[root@mfyxw50 ~]# docker tag docker.io/library/kibana:6.8.6 harbor.od.com/infra/kibana:v6.8.6

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker login harbor.od.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker push harbor.od.com/infra/kibana:v6.8.6

The push refers to repository [harbor.od.com/infra/kibana]

3573fe0cfde1: Pushed

000b2462f403: Pushed

c5a5a030b050: Pushed

b43f0089a699: Pushed

8597b2101ff5: Pushed

7be703b4643c: Pushed

70cd85eb5312: Pushed

77b174a6a187: Mounted from infra/logstash

v6.8.6: digest: sha256:0a831b9e3251e777615aed743ba46ebd2b0253cd6e39c7636246515710f78379 size: 1991
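The pull, tag, and push steps follow the same pattern for every upstream image. A hedged sketch of that pattern as a function; `private_ref` and `mirror_image` are hypothetical helper names, and the `v` prefix on the private tag simply mirrors the naming used above.

```shell
#!/bin/sh
REGISTRY=harbor.od.com   # private Harbor registry used in this guide
PROJECT=infra            # Harbor project that holds infrastructure images

# private_ref NAME TAG -> harbor.od.com/infra/NAME:vTAG
private_ref() {
  printf '%s/%s/%s:v%s\n' "$REGISTRY" "$PROJECT" "$1" "$2"
}

# mirror_image NAME TAG: pull from Docker Hub, retag, push to Harbor.
# Assumes `docker login harbor.od.com` has already succeeded.
mirror_image() {
  docker pull "$1:$2" &&
  docker tag "$1:$2" "$(private_ref "$1" "$2")" &&
  docker push "$(private_ref "$1" "$2")"
}

# Usage: mirror_image kibana 6.8.6
```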

(3) Add the DNS record

Run on host mfyxw10

[root@mfyxw10 ~]# cat > /var/named/od.com.zone << EOF

\$ORIGIN od.com.
\$TTL 600 ; 10 minutes
@ IN SOA dns.od.com. dnsadmin.od.com. (
        ; increase this number by 1 on every edit so the zone is newer than the previous version
        2020031318 ; serial
        10800      ; refresh (3 hours)
        900        ; retry (15 minutes)
        604800     ; expire (1 week)
        86400      ; minimum (1 day)
        )
        NS dns.od.com.
\$TTL 60 ; 1 minute
dns A 192.168.80.10
harbor A 192.168.80.50 ; add the harbor record

k8s-yaml A 192.168.80.50

traefik A 192.168.80.100

dashboard A 192.168.80.100

zk1 A 192.168.80.10

zk2 A 192.168.80.20

zk3 A 192.168.80.30

jenkins A 192.168.80.100

dubbo-monitor A 192.168.80.100

demo A 192.168.80.100

mysql A 192.168.80.10

config A 192.168.80.100

portal A 192.168.80.100

zk-test A 192.168.80.10

zk-prod A 192.168.80.20

config-test A 192.168.80.100

config-prod A 192.168.80.100

demo-test A 192.168.80.100

demo-prod A 192.168.80.100

blackbox A 192.168.80.100

prometheus A 192.168.80.100

grafana A 192.168.80.100

km A 192.168.80.100

kibana A 192.168.80.100

EOF

Restart the DNS service

[root@mfyxw10 ~]# systemctl restart named

Test the name resolution

[root@mfyxw10 ~]# dig -t A kibana.od.com @192.168.80.10 +short

192.168.80.100
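The comment in the zone file asks for the SOA serial to be increased by 1 on every edit. A minimal sketch of that bookkeeping; `next_serial` and `current_serial` are hypothetical helpers, and `current_serial` assumes the serial sits on a line tagged `; serial` as in the zone file above.

```shell
#!/bin/sh
# next_serial SERIAL -> SERIAL + 1
next_serial() {
  echo $(( $1 + 1 ))
}

# current_serial ZONEFILE: print the number on the line tagged "; serial".
current_serial() {
  awk '/; *serial/ { print $1; exit }' "$1"
}

# Usage on the DNS host (paths as used above):
#   next_serial "$(current_serial /var/named/od.com.zone)"
#   named-checkzone od.com /var/named/od.com.zone   # validate before restarting named
```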

(4) Prepare the Kibana resource manifests

Create a directory to hold the Kibana resource manifests

[root@mfyxw50 ~]# mkdir -p /data/k8s-yaml/kibana

The content of deployment.yaml is as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/kibana/deployment.yaml << EOF

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: kibana
  namespace: infra
  labels:
    name: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kibana
  template:
    metadata:
      labels:
        app: kibana
        name: kibana
    spec:
      containers:
      - name: kibana
        image: harbor.od.com/infra/kibana:v6.8.6
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 5601
          protocol: TCP
        env:
        - name: ELASTICSEARCH_URL
          value: http://192.168.80.20:9200
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

EOF

The content of service.yaml is as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/kibana/service.yaml << EOF

kind: Service
apiVersion: v1
metadata:
  name: kibana
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 5601
    targetPort: 5601
  selector:
    app: kibana

EOF

The content of Ingress.yaml is as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/kibana/Ingress.yaml << EOF

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: kibana
  namespace: infra
spec:
  rules:
  - host: kibana.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kibana
          servicePort: 5601

EOF

(5) Apply the Kibana resource manifests

Run on either master node (mfyxw30 or mfyxw40)

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/kibana/deployment.yaml

deployment.extensions/kibana created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/kibana/service.yaml

service/kibana created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/kibana/Ingress.yaml

ingress.extensions/kibana created

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get pod -n infra | grep kibana

kibana-ff5c68bbc-vdl76 1/1 Running 0 78s
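Before opening the browser, it can help to confirm that the Deployment has finished rolling out and that the Service actually selects the pod (the Service selector `app: kibana` has to match the pod template labels). A minimal sketch using standard `kubectl` subcommands; the function name `check_kibana` is hypothetical.

```shell
#!/bin/sh
# check_kibana: wait for the rollout, then show the endpoints behind the Service.
# An empty ENDPOINTS column would mean the Service selector matches no pod.
check_kibana() {
  kubectl rollout status deployment/kibana -n infra &&
  kubectl get endpoints kibana -n infra
}

# Usage on a master node: check_kibana
```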

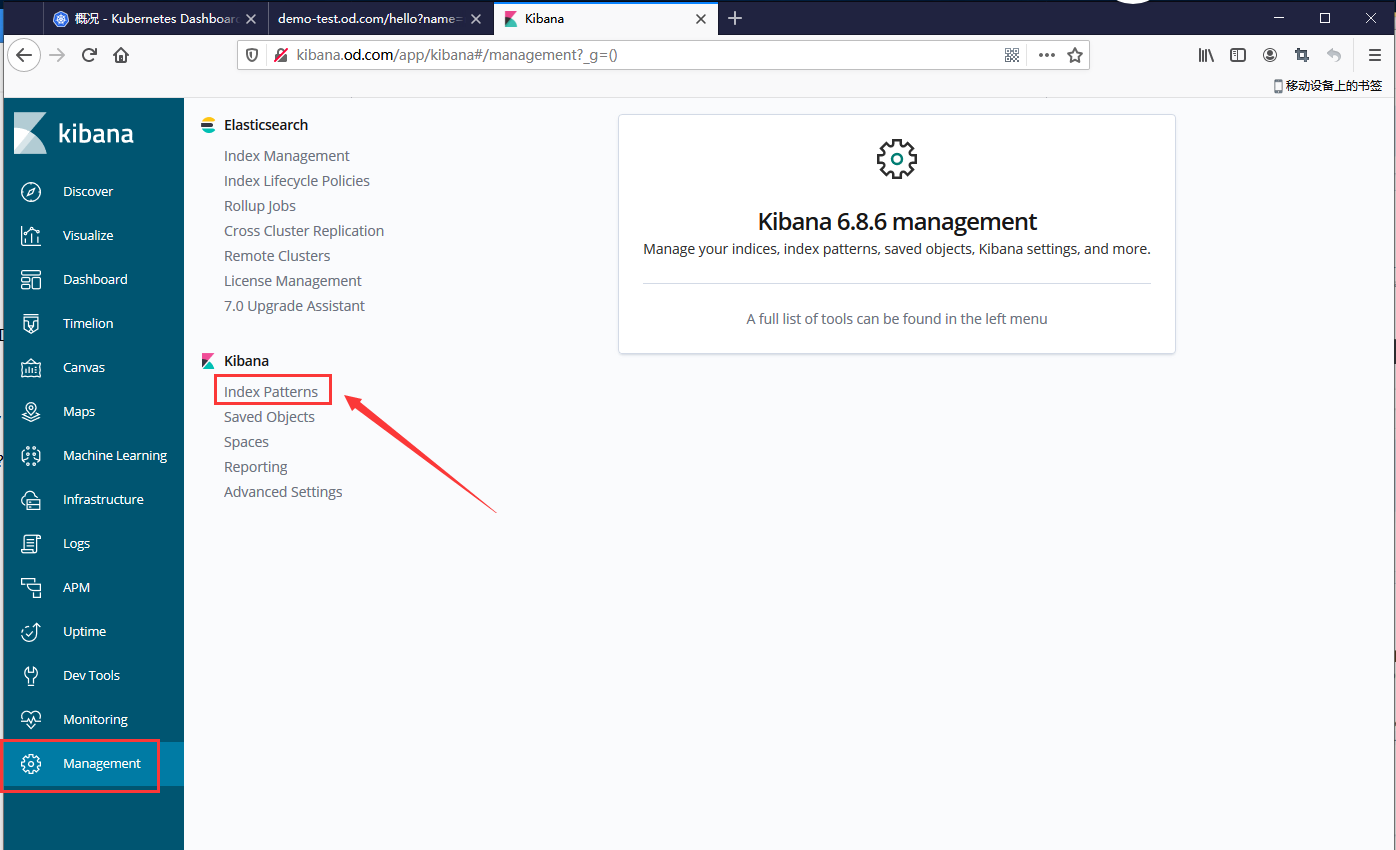

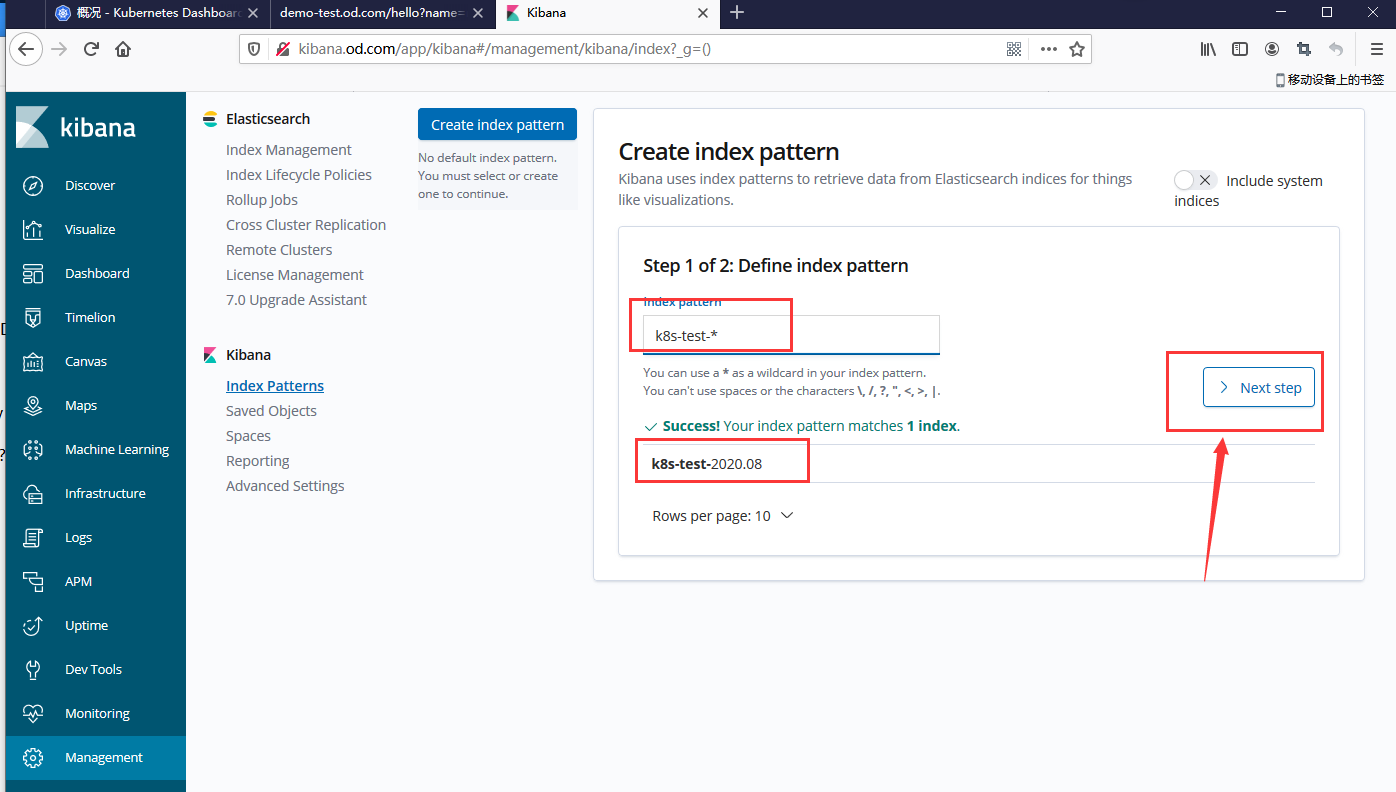

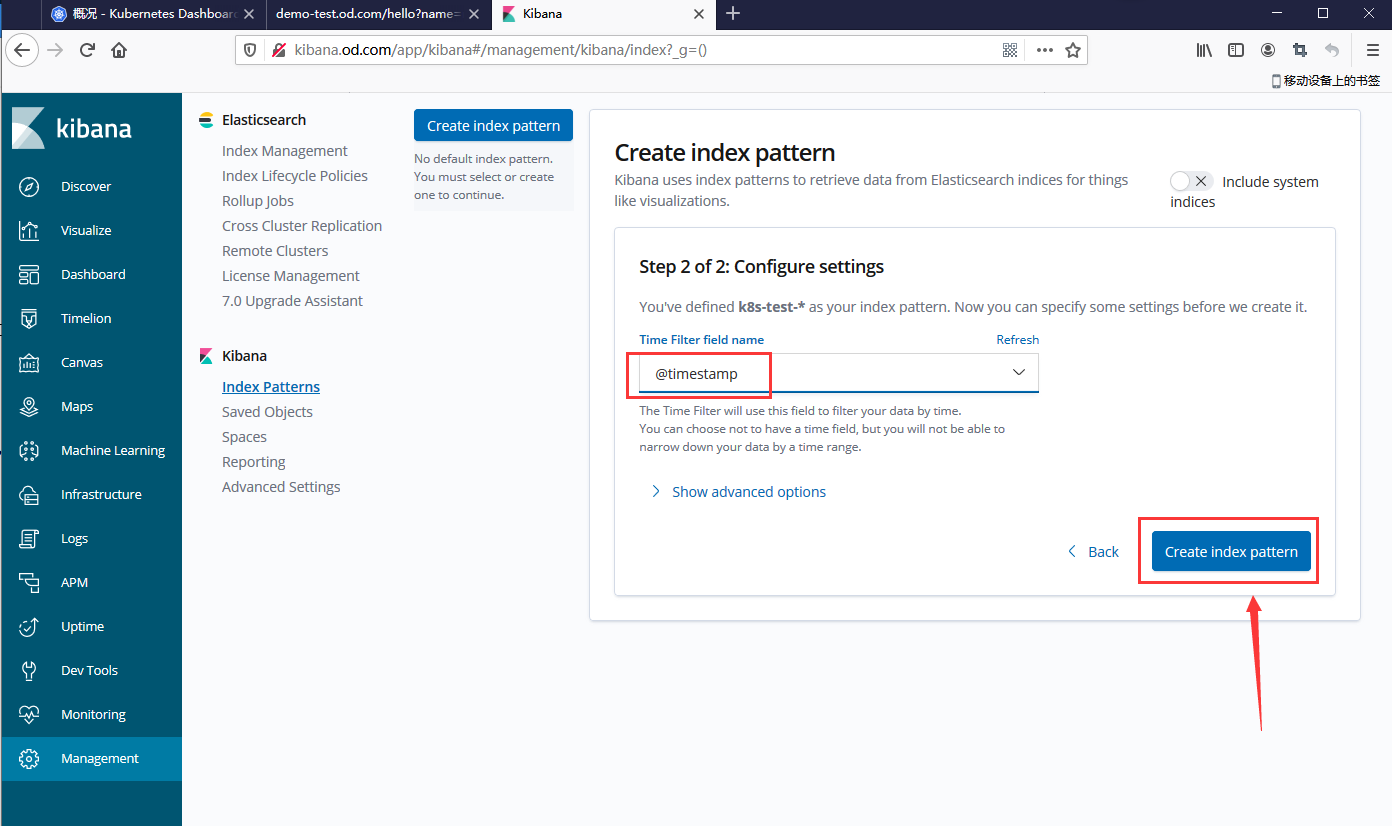

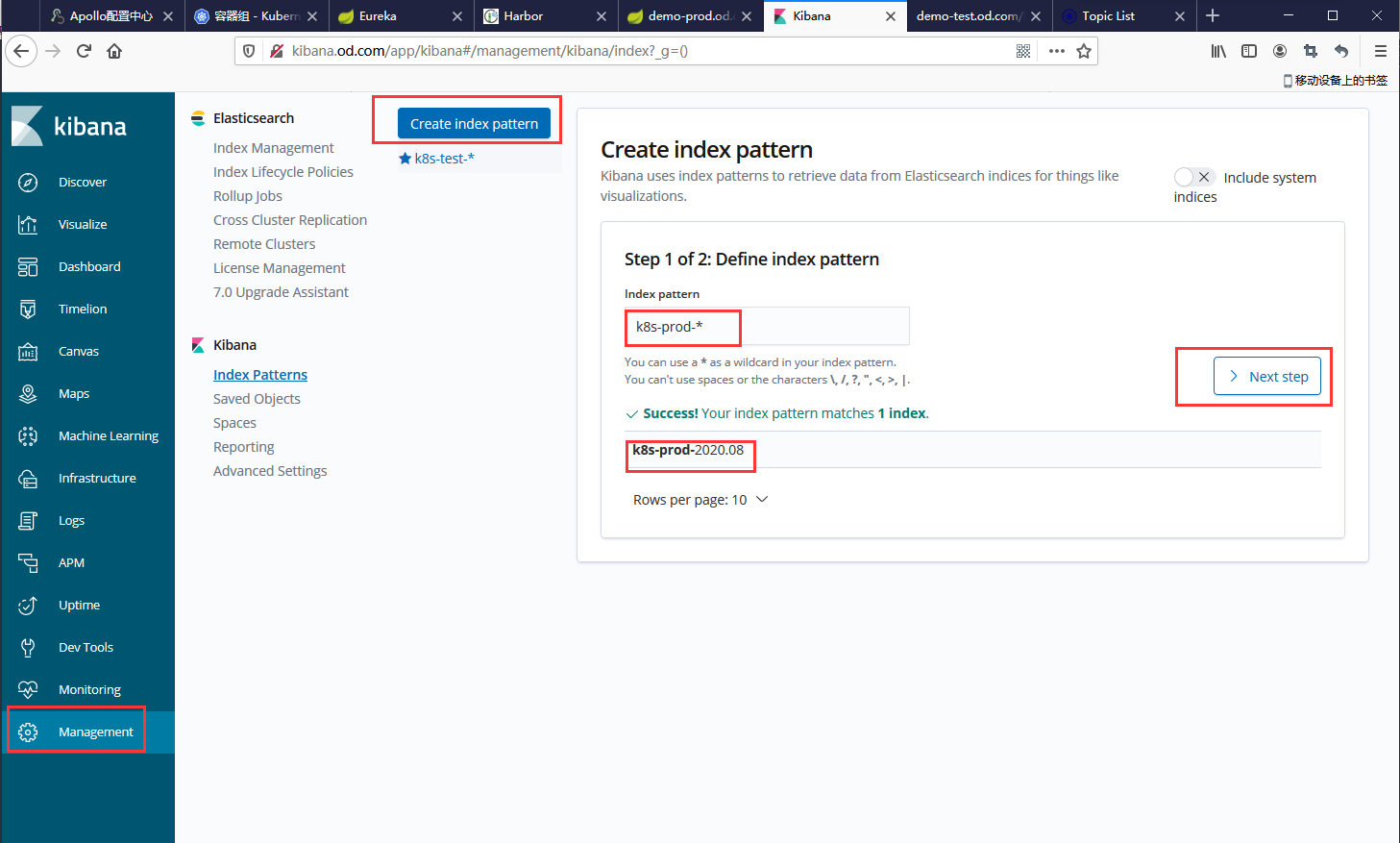

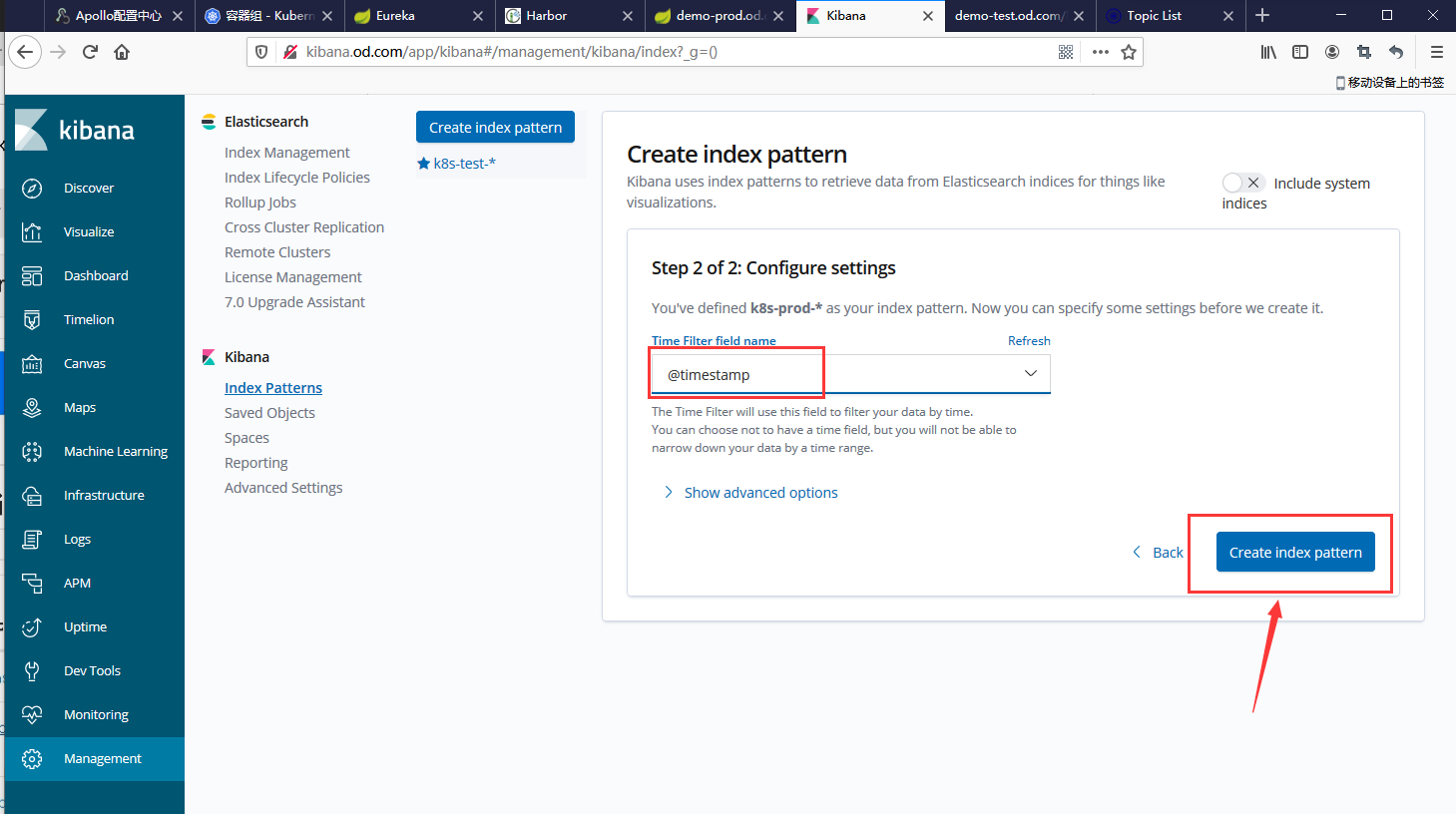

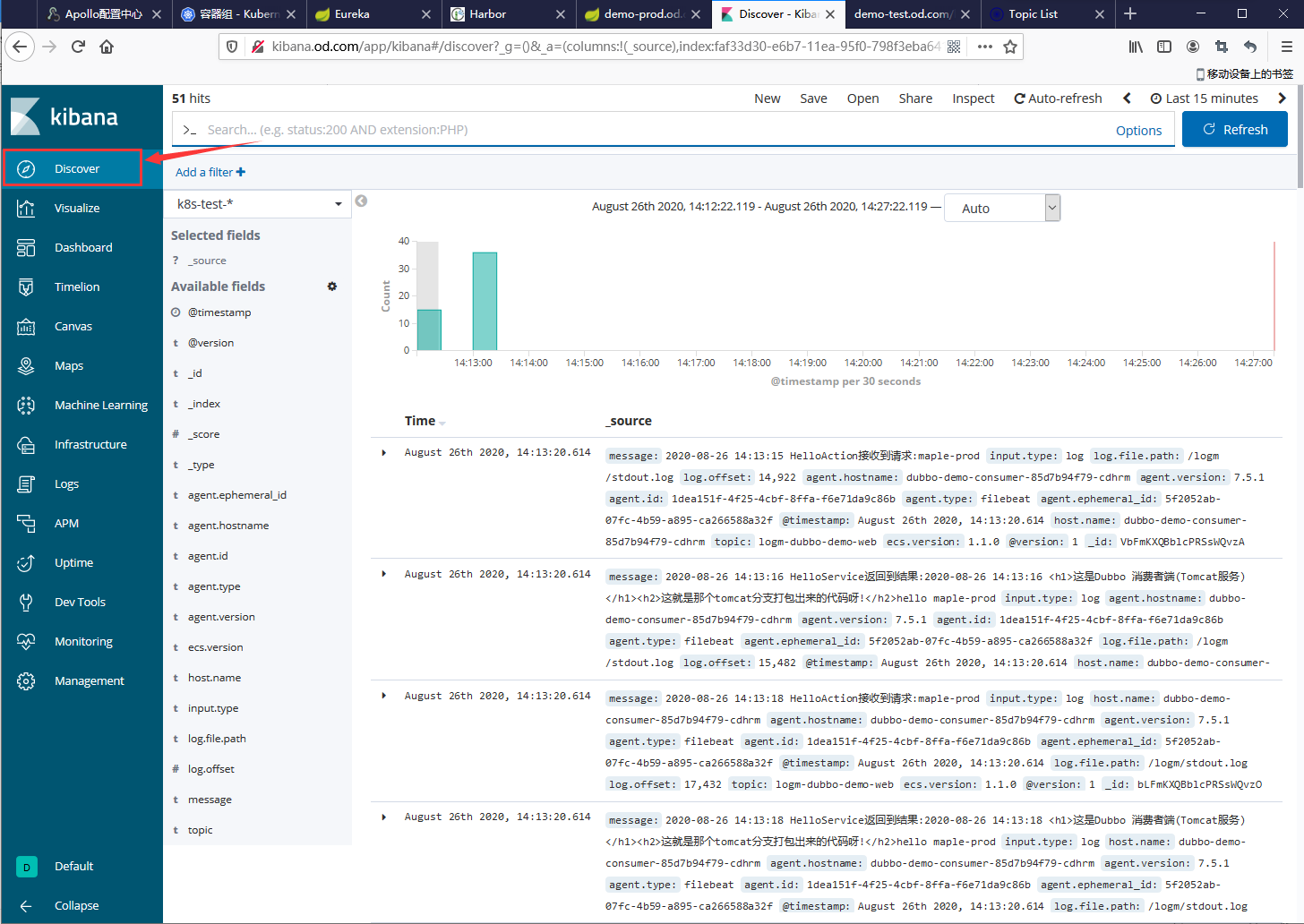

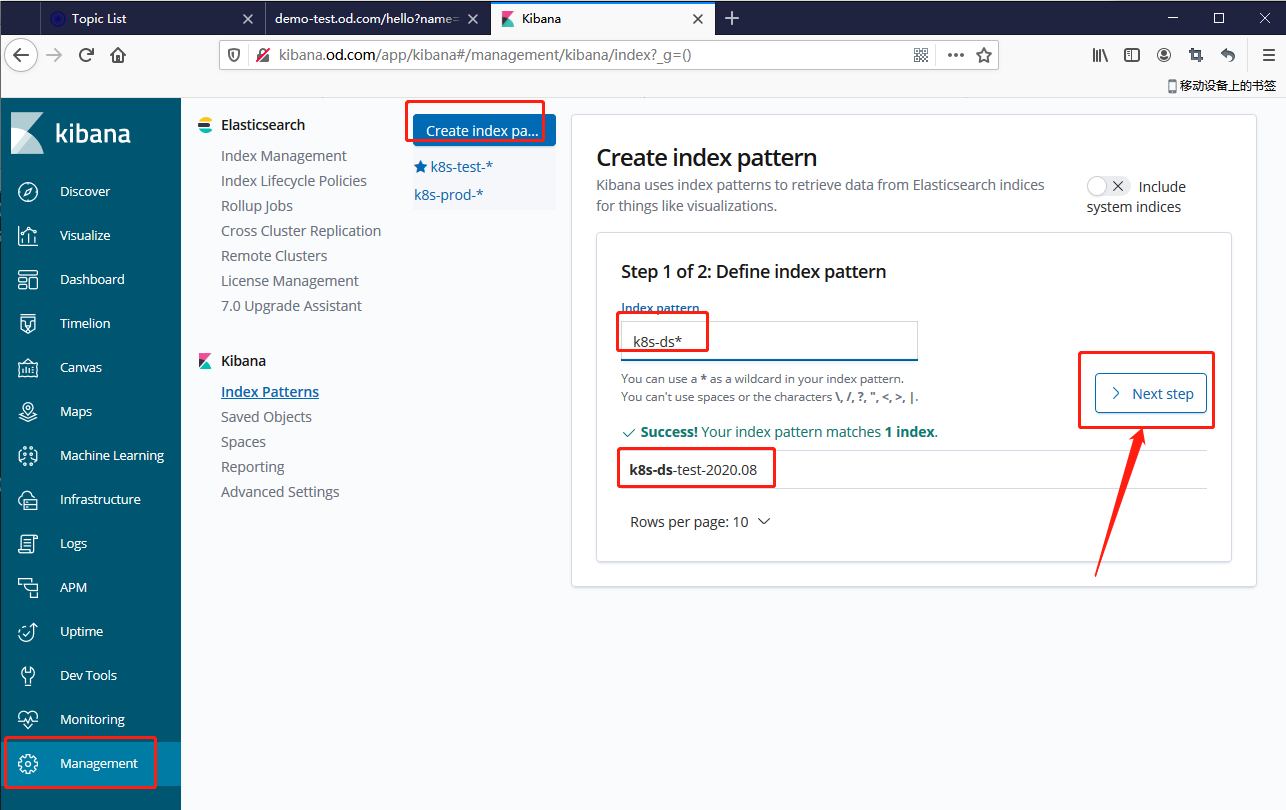

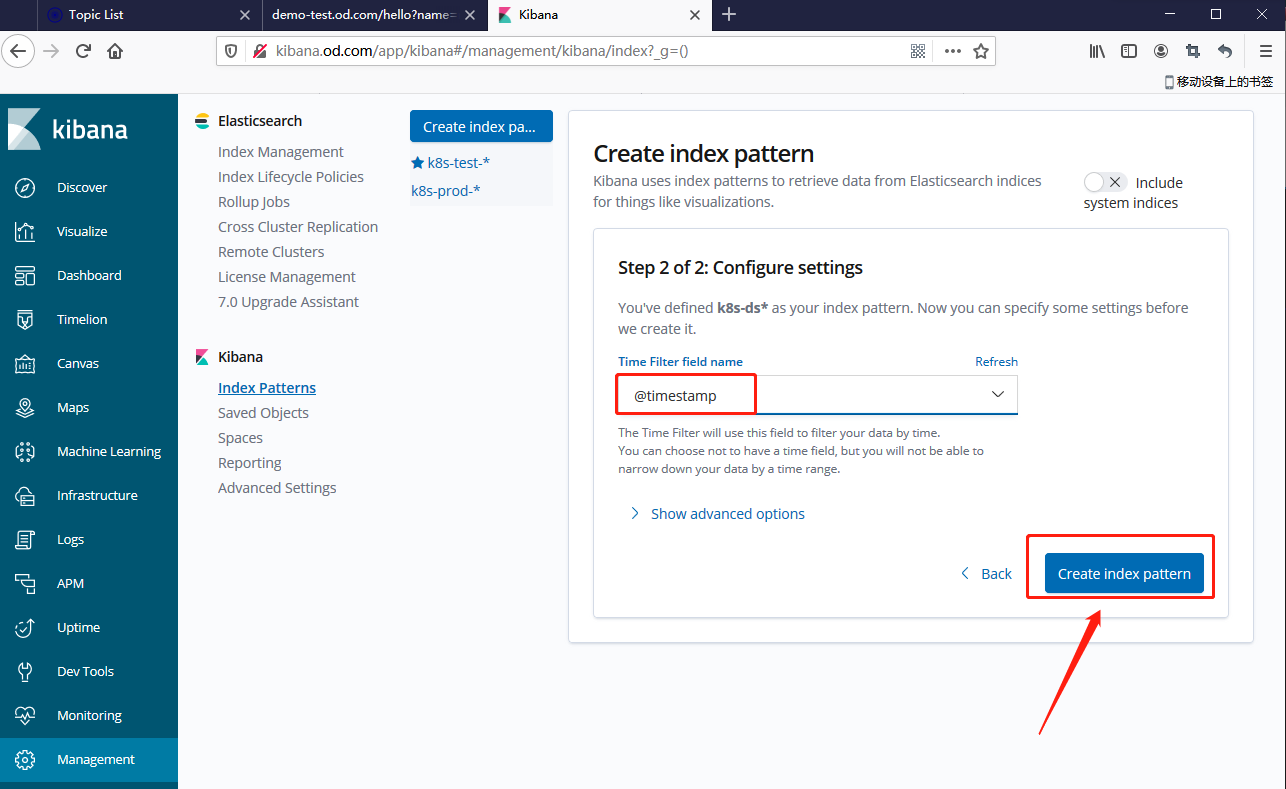

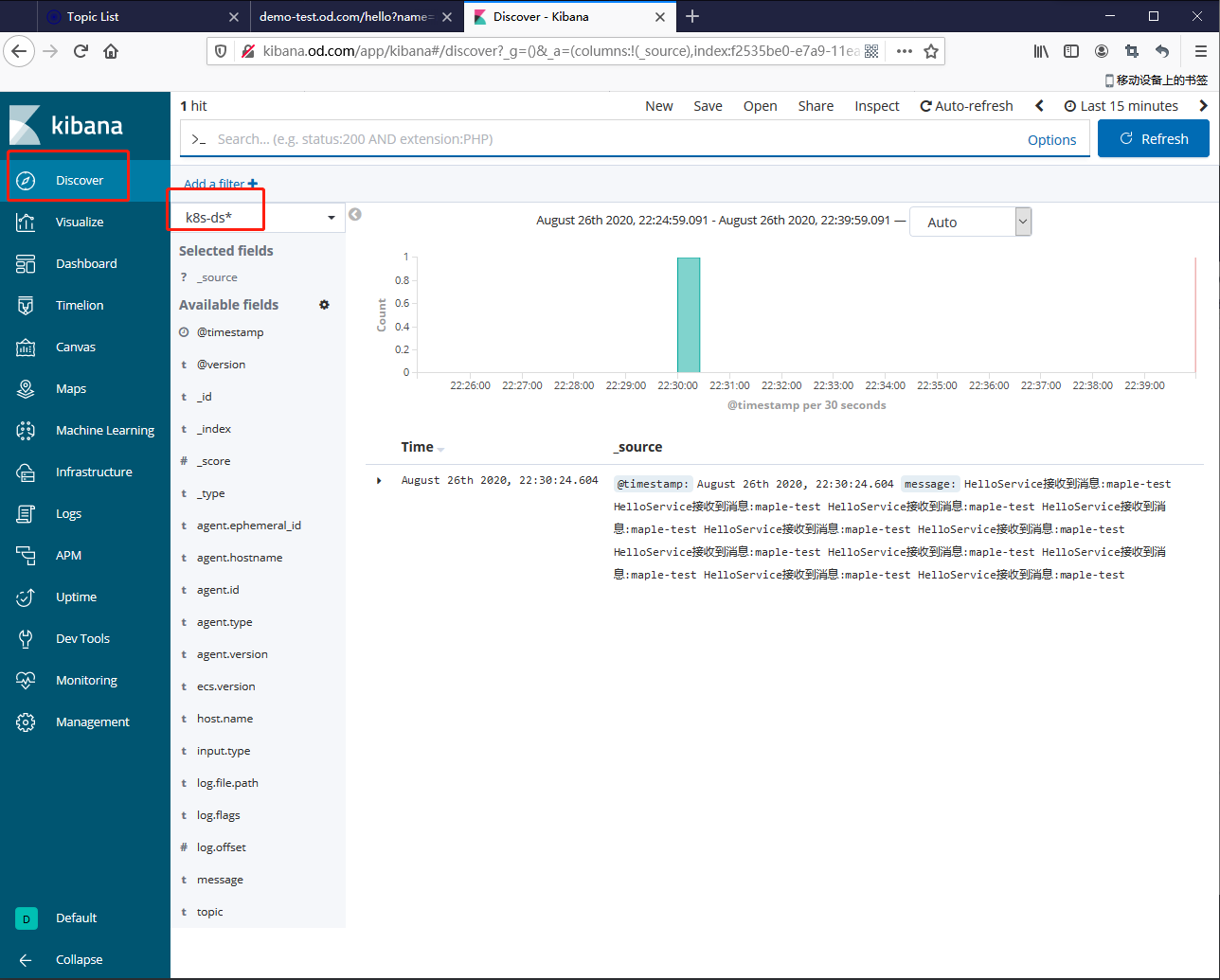

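(6) Open kibana.od.com in a browser

Create an Index pattern

(7) Start both the provider and the consumer in the production environment

Start the production provider first; the replicas of deployment/dubbo-demo-service were set to 0 earlier.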

[root@mfyxw30 ~]# kubectl scale --replicas=1 deployment/dubbo-demo-service -n prod

deployment.extensions/dubbo-demo-service scaled

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl get pod -n prod | grep dubbo-demo-service

dubbo-demo-service-bf45dcbbb-zpbj6 1/1 Running 0 3m44s

Modify the deployment.yaml of the production consumer

The content of deployment.yaml is as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/prod/dubbo-demo-consumer/deployment.yaml << EOF

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: prod
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.od.com/app/dubbo-demo-web:tomcat_20200818_1555
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: C_OPTS
          value: -Denv=pro -Dapollo.meta=http://config-prod.od.com
        volumeMounts:
        - mountPath: /opt/tomcat/logs
          name: logm
      - name: filebeat
        image: harbor.od.com/infra/filebeat:v7.5.1
        imagePullPolicy: IfNotPresent
        env:
        - name: ENV
          value: prod
        - name: PROJ_NAME
          value: dubbo-demo-web
        volumeMounts:
        - mountPath: /logm
          name: logm
      volumes:
      - emptyDir: {}
        name: logm
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

EOF

Start the consumer in the production environment

[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/prod/dubbo-demo-consumer/deployment.yaml

deployment.extensions "dubbo-demo-consumer" deleted

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/prod/dubbo-demo-consumer/deployment.yaml

[root@mfyxw30 ~]# kubectl get pod -n prod

NAME READY STATUS RESTARTS AGE

apollo-adminservice-5cccf97c64-d2k5q 1/1 Running 1 17h

apollo-configservice-5f6555448-j282v 1/1 Running 1 17h

dubbo-demo-consumer-85d7b94f79-vwrhp 2/2 Running 0 2s

dubbo-demo-service-bf45dcbbb-zpbj6 1/1 Running 0 23m

(8) Start the Logstash container for the production environment (prod)

Create the Logstash pipeline configuration file

[root@mfyxw50 ~]# cat > /etc/logstash/logstash-prod.conf << EOF

input {
  kafka {
    bootstrap_servers => "192.168.80.10:9092"
    client_id => "192.168.80.50"
    consumer_threads => 4
    group_id => "k8s_prod"
    topics_pattern => "k8s-fb-prod-.*"
  }
}

filter {
  json {
    source => "message"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.80.20:9200"]
    index => "k8s-prod-%{+YYYY.MM}"
  }
}

EOF

Start the production Logstash container

[root@mfyxw50 ~]# docker run -d --restart=always --name logstash-prod -v /etc/logstash:/etc/logstash harbor.od.com/infra/logstash:v6.8.6 -f /etc/logstash/logstash-prod.conf

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker ps -a |grep logstash-prod

ba9b95d98eb7 harbor.od.com/infra/logstash:v6.8.6 "/usr/local/bin/dock…" 39 seconds ago Up 37 seconds 5044/tcp, 9600/tcp logstash-prod

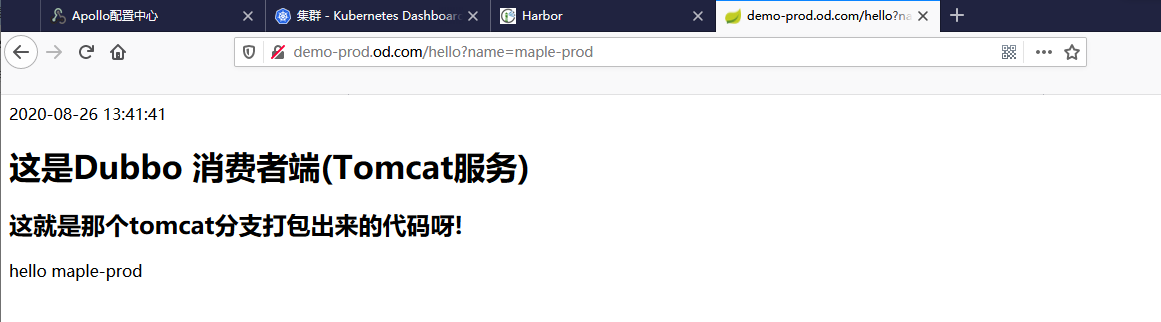

(9) Visit http://demo-prod.od.com/hello?name=maple-prod and refresh several times

(10) Verify the indices in ElasticSearch

Run: curl http://192.168.80.20:9200/_cat/indices?v

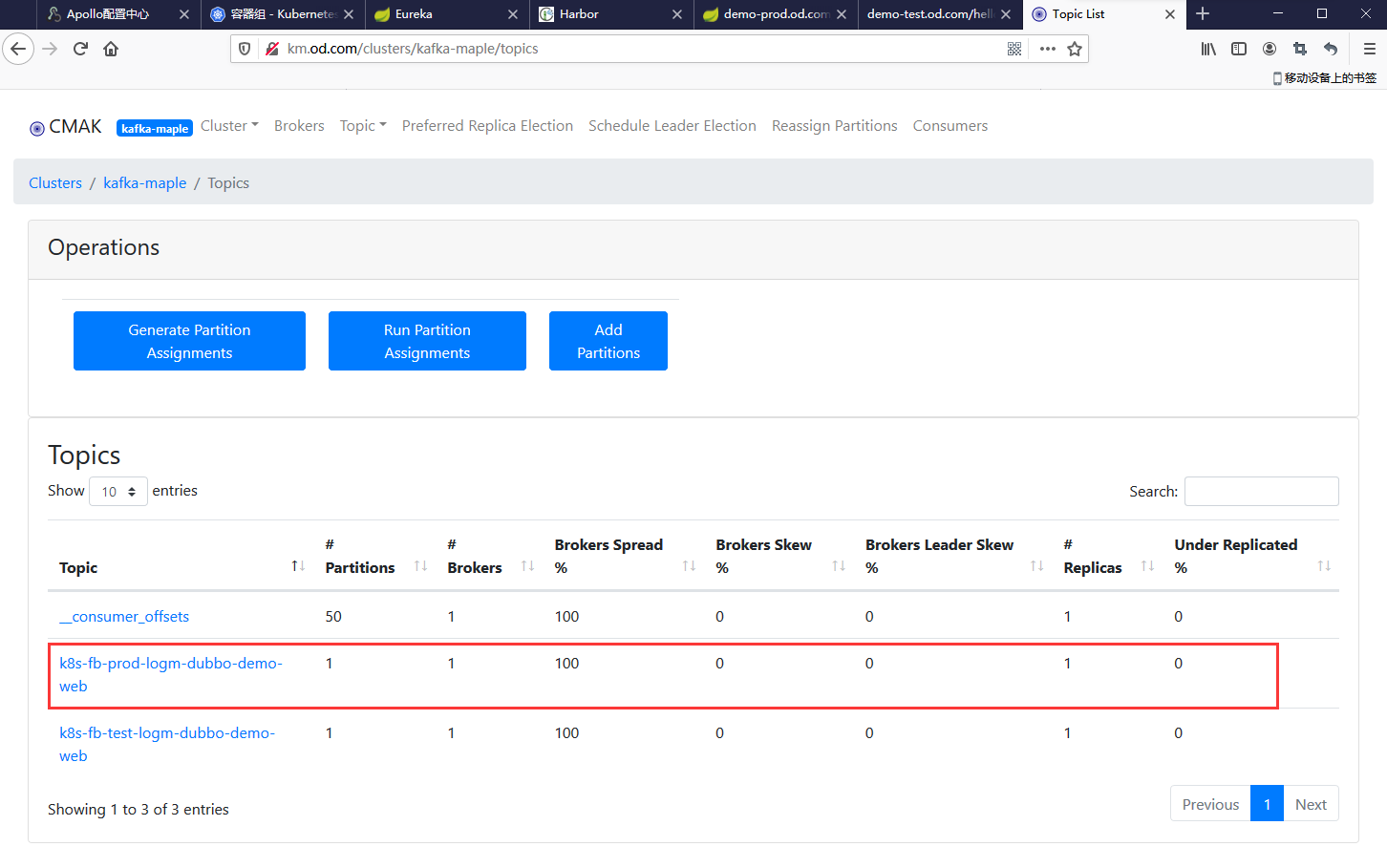
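With several environments writing to the same ElasticSearch node, the `_cat/indices` listing also contains indices that are not part of this pipeline. A small sketch of a filter that keeps only the `k8s-` indices and their document counts; `k8s_indices` is a hypothetical helper, and the column positions assume the default `_cat/indices?v` layout (index in column 3, docs.count in column 7).

```shell
#!/bin/sh
# k8s_indices: read `_cat/indices?v` output on stdin and print the name and
# document count of every index whose name starts with "k8s-".
k8s_indices() {
  awk 'NR > 1 && $3 ~ /^k8s-/ { print $3, $7 }'
}

# Usage (the URL is quoted so the shell does not treat "?" as a glob):
#   curl -s 'http://192.168.80.20:9200/_cat/indices?v' | k8s_indices
```

A document count that grows after refreshing the demo page suggests that the whole Filebeat, Kafka, Logstash chain is delivering events.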

(11) Visit km.od.com to check

(12) Visit kibana.od.com

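11. Collecting the logs of dubbo-demo-service

The dubbo-demo-service (provider) deployed earlier does not write its logs to a file; it only prints them to the console, so they cannot be collected.

This can be solved by modifying the jre8 base image and then updating the pipeline to rebuild the application image.

(1) Modify the jre base image

Create a log directory to hold the log file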

[root@mfyxw50 ~]# mkdir -p /data/dockerfile/jre8/log

Modify the entrypoint.sh of the jre base image

[root@mfyxw50 ~]# cat > /data/dockerfile/jre8/entrypoint.sh << EOF

#!/bin/sh

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=\$(hostname -i):\${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=\${C_OPTS}

JAR_BALL=\${JAR_BALL}

exec java -jar \${M_OPTS} \${C_OPTS} \${JAR_BALL} >>/opt/log/stdout.log 2>&1

EOF
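The entrypoint sends the output of the Java process to /opt/log/stdout.log so that Filebeat can pick it up. Whether stderr also ends up in that file depends on the order of the redirection operators, which the shell processes from left to right. A small self-contained sketch of the difference (the function and file names are arbitrary):

```shell
#!/bin/sh
# emit writes one line to stdout and one line to stderr.
emit() {
  echo "line on stdout"
  echo "line on stderr" >&2
}

workdir=$(mktemp -d)

# stderr is duplicated onto the current stdout first, then stdout alone is
# pointed at the file: the stderr line does not reach the file.
emit 2>&1 >>"$workdir/stderr_lost.log"

# stdout is pointed at the file first, then stderr follows it:
# both lines reach the file.
emit >>"$workdir/both.log" 2>&1

echo "stderr_lost.log: $(grep -c '' "$workdir/stderr_lost.log") line(s)"
echo "both.log:        $(grep -c '' "$workdir/both.log") line(s)"
```

For a Java service, stack traces usually go to stderr, so the second form is the one that gets them into the collected log file.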

Modify the Dockerfile as follows:

[root@mfyxw50 ~]# cat > /data/dockerfile/jre8/Dockerfile << EOF

FROM harbor.od.com/public/jre8:8u112

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone

ADD config.yml /opt/prom/config.yml

ADD log /opt/log

ADD jmx_javaagent-0.3.1.jar /opt/prom/

WORKDIR /opt/project_dir

ADD entrypoint.sh /entrypoint.sh

CMD ["/entrypoint.sh"]

EOF

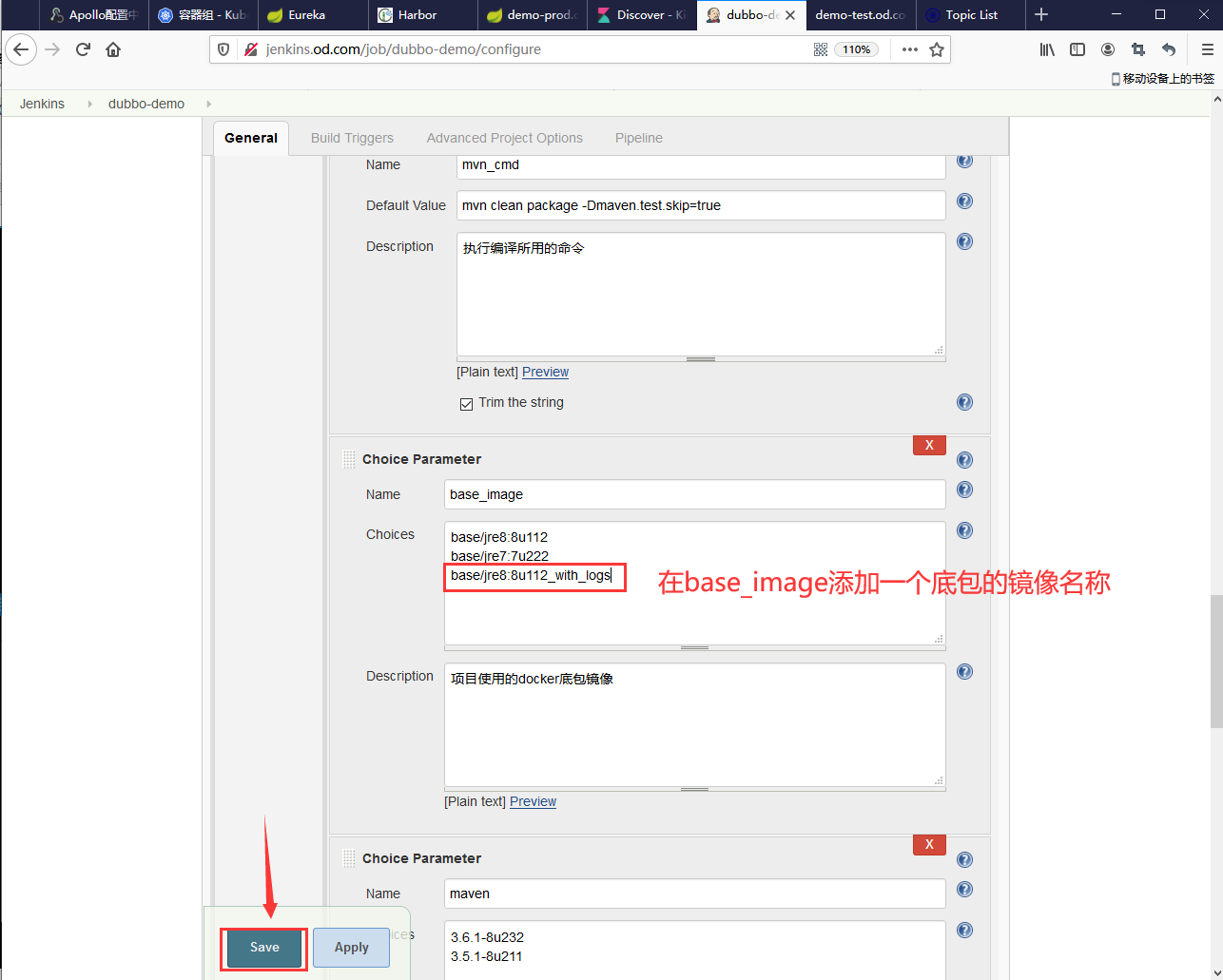

(2) Rebuild the base image and push it to the private registry

[root@mfyxw50 ~]# cd /data/dockerfile/jre8/

[root@mfyxw50 ~]#

[root@mfyxw50 jre8]# docker build . -t harbor.od.com/base/jre8:8u112_with_logs

Sending build context to Docker daemon 372.2kB

Step 1/7 : FROM harbor.od.com/public/jre8:8u112

---> fa3a085d6ef1

Step 2/7 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone

---> Using cache

---> 79f2d4acf366

Step 3/7 : ADD config.yml /opt/prom/config.yml

---> Using cache

---> 5461575f9379

Step 4/7 : ADD jmx_javaagent-0.3.1.jar /opt/prom/

---> Using cache

---> 04b3f1aaf297

Step 5/7 : WORKDIR /opt/project_dir

---> Using cache

---> d3cb2ca7eda0

Step 6/7 : ADD entrypoint.sh /entrypoint.sh

---> 3dc576367bcb

Step 7/7 : CMD ["/entrypoint.sh"]

---> Running in 6a46e733679c

Removing intermediate container 6a46e733679c

---> 6d096aad92ca

Successfully built 6d096aad92ca

Successfully tagged harbor.od.com/base/jre8:8u112_with_logs

[root@mfyxw50 ~]#

[root@mfyxw50 jre8]# docker push harbor.od.com/base/jre8:8u112_with_logs

The push refers to repository [harbor.od.com/base/jre8]

086308230233: Pushed

30934063c5fd: Layer already exists

0378026a5ac0: Layer already exists

12ac448620a2: Layer already exists

78c3079c29e7: Layer already exists

0690f10a63a5: Layer already exists

c843b2cf4e12: Layer already exists

fddd8887b725: Layer already exists

42052a19230c: Layer already exists

8d4d1ab5ff74: Layer already exists

8u112_with_logs: digest: sha256:2b3dc55b17c2562565ce3f2bdb2981eec15198a44bbcdcfd5915ab73862fd7e2 size: 2405

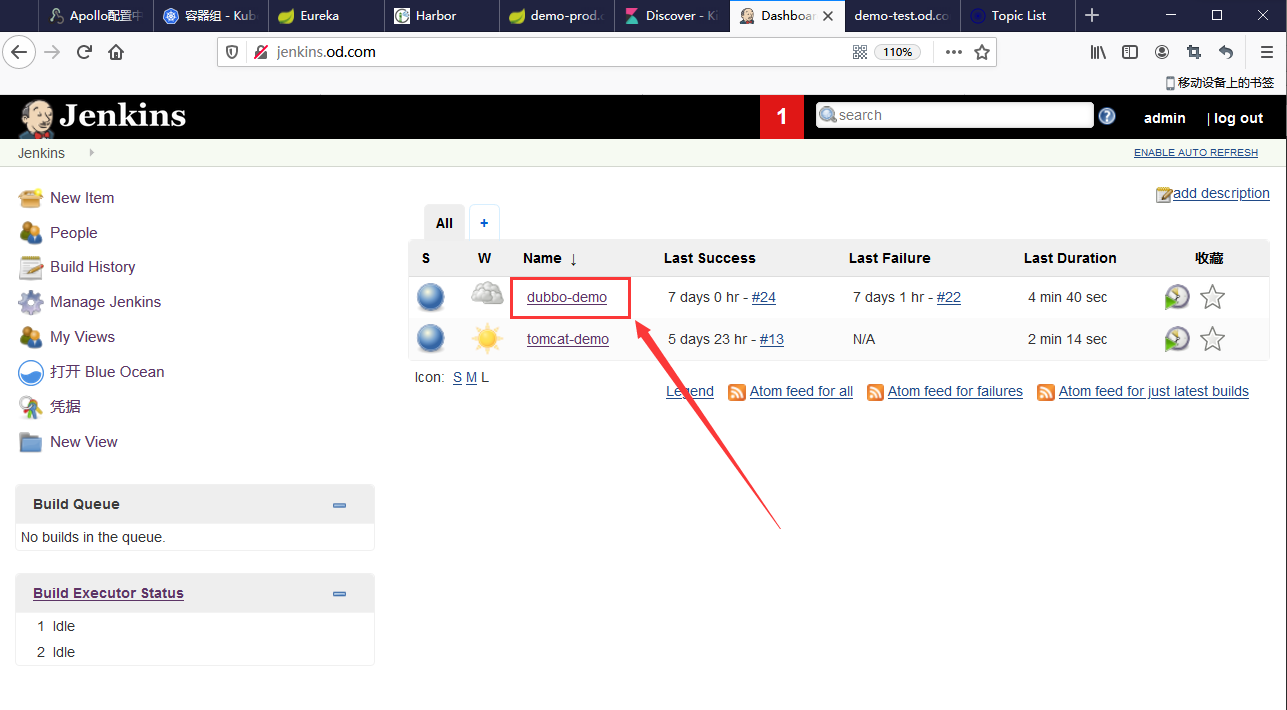

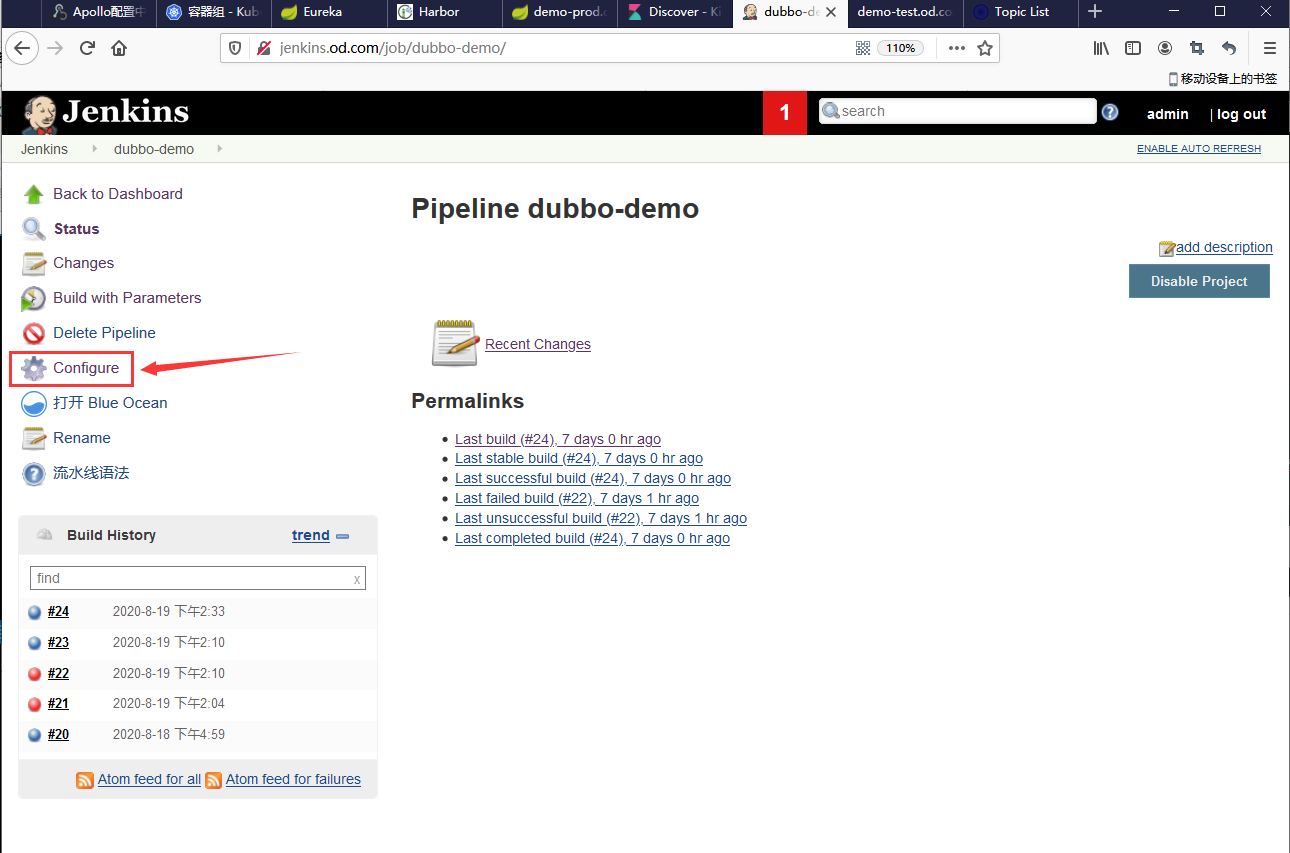

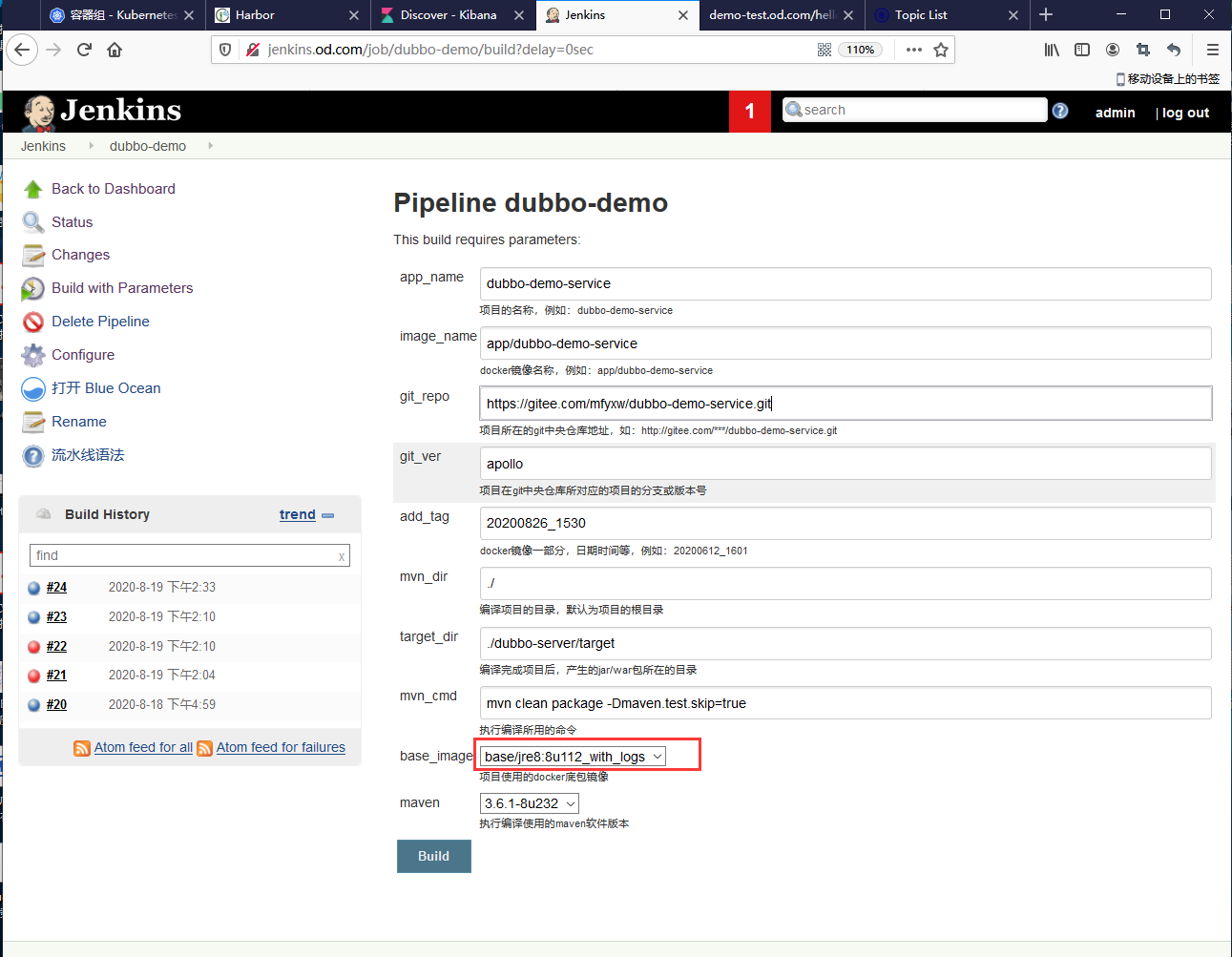

(3) Log in to jenkins.od.com and modify the pipeline

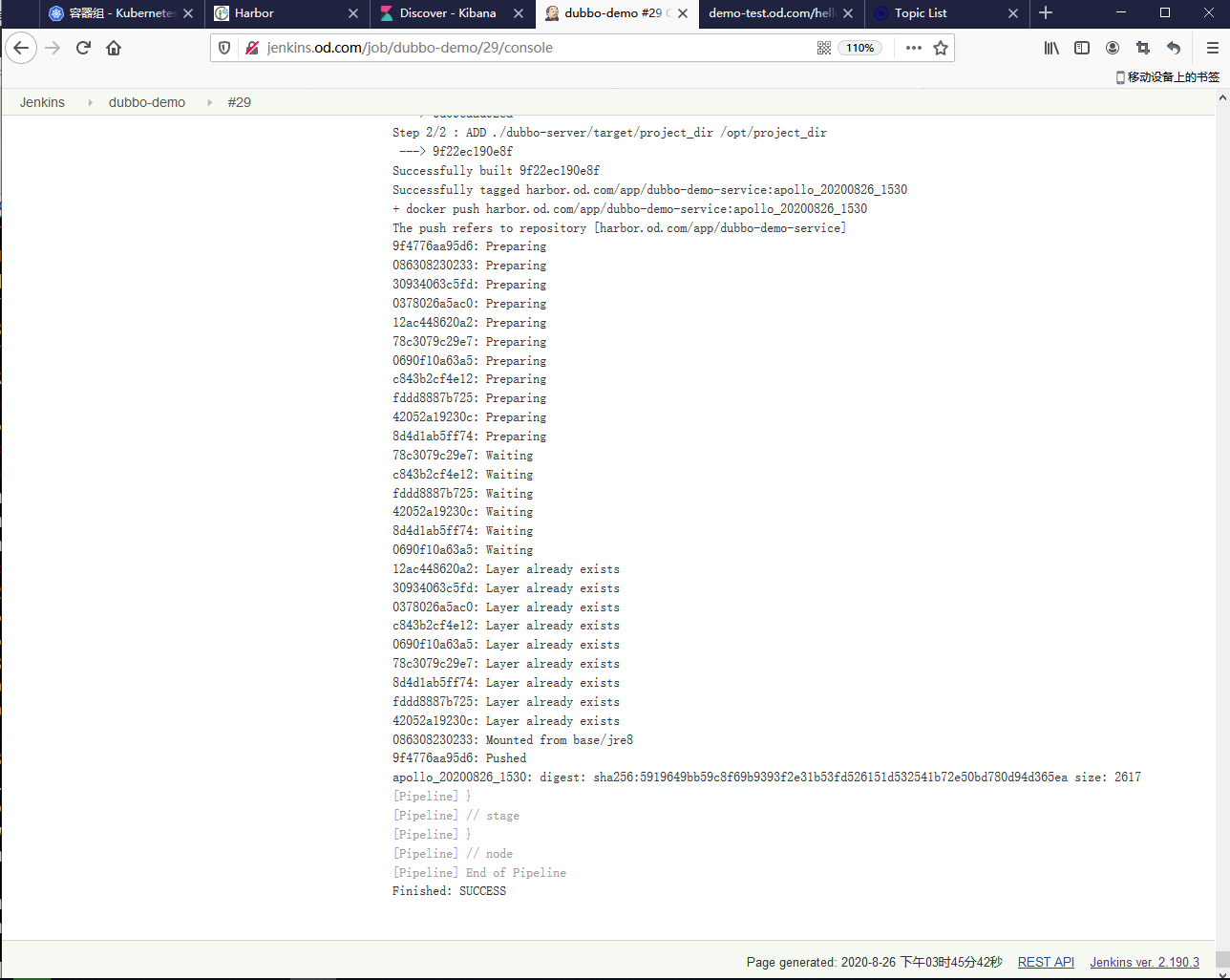

(4) Build the image

(5) Modify the deployment.yaml of dubbo-demo-service in the test environment

The content of deployment.yaml is as follows:

[root@mfyxw50 ~]# cat > /data/k8s-yaml/test/dubbo-demo-service/deployment.yaml << EOF

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-service
  namespace: test
  labels:
    name: dubbo-demo-service
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-service
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
    spec:
      containers:
      - name: dubbo-demo-service
        image: harbor.od.com/app/dubbo-demo-service:apollo_20200826_1530
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-server.jar
        - name: C_OPTS
          value: -Denv=fat -Dapollo.meta=http://config-test.od.com
        volumeMounts:
        - mountPath: /opt/log
          name: logm
      - name: filebeat-service
        image: harbor.od.com/infra/filebeat:v7.5.1
        imagePullPolicy: IfNotPresent
        env:
        - name: ENV
          value: ds-test
        - name: PROJ_NAME
          value: dubbo-demo-service
        volumeMounts:
        - mountPath: /logm
          name: logm
      volumes:
      - emptyDir: {}
        name: logm
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30

EOF

Delete the existing dubbo-demo-service deployment (and its pod), then apply the manifest again

[root@mfyxw30 ~]# kubectl delete -f http://k8s-yaml.od.com/test/dubbo-demo-service/deployment.yaml

deployment.extensions "dubbo-demo-service" deleted

[root@mfyxw30 ~]#

[root@mfyxw30 ~]# kubectl apply -f http://k8s-yaml.od.com/test/dubbo-demo-service/deployment.yaml

deployment.extensions/dubbo-demo-service created
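Both containers mount the same `emptyDir` volume: the application writes to `/opt/log`, and the Filebeat sidecar sees the same files under `/logm`. A minimal sketch for checking that the log file is visible from the sidecar; `tail_sidecar_log` is a hypothetical helper, the file name `stdout.log` is taken from the entrypoint script above, and the sketch assumes the Filebeat image provides `tail`.

```shell
#!/bin/sh
# tail_sidecar_log NAMESPACE POD [LINES]
# Shows the last lines of the application log as seen by the filebeat-service
# container, which mounts the shared volume at /logm.
tail_sidecar_log() {
  kubectl exec "$2" -n "$1" -c filebeat-service -- tail -n "${3:-5}" /logm/stdout.log
}

# Usage (take the pod name from `kubectl get pod -n test`):
#   tail_sidecar_log test <pod-name>
```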

(6) Start the Logstash container for the provider in the test environment

Add the configuration file

[root@mfyxw50 ~]# cat > /etc/logstash/logstash-ds-test.conf << EOF

input {
  kafka {
    bootstrap_servers => "192.168.80.10:9092"
    client_id => "192.168.80.50"
    consumer_threads => 4
    group_id => "k8s_ds-test"
    topics_pattern => "k8s-fb-ds-test-.*"
  }
}

filter {
  json {
    source => "message"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.80.20:9200"]
    index => "k8s-ds-test-%{+YYYY.MM}"
  }
}

EOF

Start the Logstash container

[root@mfyxw50 ~]# docker run -d --restart=always --name logstash-ds-test -v /etc/logstash:/etc/logstash harbor.od.com/infra/logstash:v6.8.6 -f /etc/logstash/logstash-ds-test.conf

[root@mfyxw50 ~]#

[root@mfyxw50 ~]# docker ps | grep logstash-

6fb42f324ba2 harbor.od.com/infra/logstash:v6.8.6 "/usr/local/bin/dock…" About an hour ago Up About an hour 5044/tcp, 9600/tcp logstash-ds-test

3b4fe8262224 harbor.od.com/infra/logstash:v6.8.6 "/usr/local/bin/dock…" 8 hours ago Up 2 hours 5044/tcp, 9600/tcp logstash-prod

7c1716db7381 harbor.od.com/infra/logstash:v6.8.6 "/usr/local/bin/dock…" 9 hours ago Up 2 hours 5044/tcp, 9600/tcp logstash-test
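The three pipeline files used in this guide (test, prod, ds-test) differ only in the environment name. A hedged sketch of generating them from one template; `render_logstash_conf` is a hypothetical helper, and the addresses are the Kafka and ElasticSearch hosts used above.

```shell
#!/bin/sh
# render_logstash_conf ENV
# Prints a pipeline definition for one environment (test, prod, ds-test, ...).
render_logstash_conf() {
  cat <<EOF
input {
  kafka {
    bootstrap_servers => "192.168.80.10:9092"
    client_id => "192.168.80.50"
    consumer_threads => 4
    group_id => "k8s_$1"
    topics_pattern => "k8s-fb-$1-.*"
  }
}
filter {
  json {
    source => "message"
  }
}
output {
  elasticsearch {
    hosts => ["192.168.80.20:9200"]
    index => "k8s-$1-%{+YYYY.MM}"
  }
}
EOF
}

# Usage: render_logstash_conf ds-test > /etc/logstash/logstash-ds-test.conf
```

Keeping a separate `group_id` per environment lets each Logstash container track its own Kafka offsets.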

(7) Visit http://demo-test.od.com/hello?name=maple-test and refresh several times to generate more requests

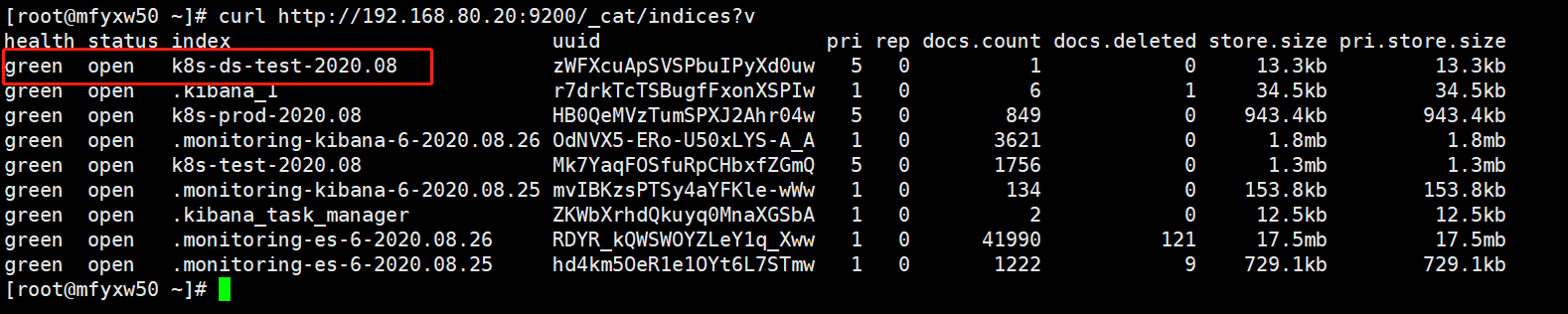

(8) Verify the indices in ElasticSearch

Run: curl http://192.168.80.20:9200/_cat/indices?v

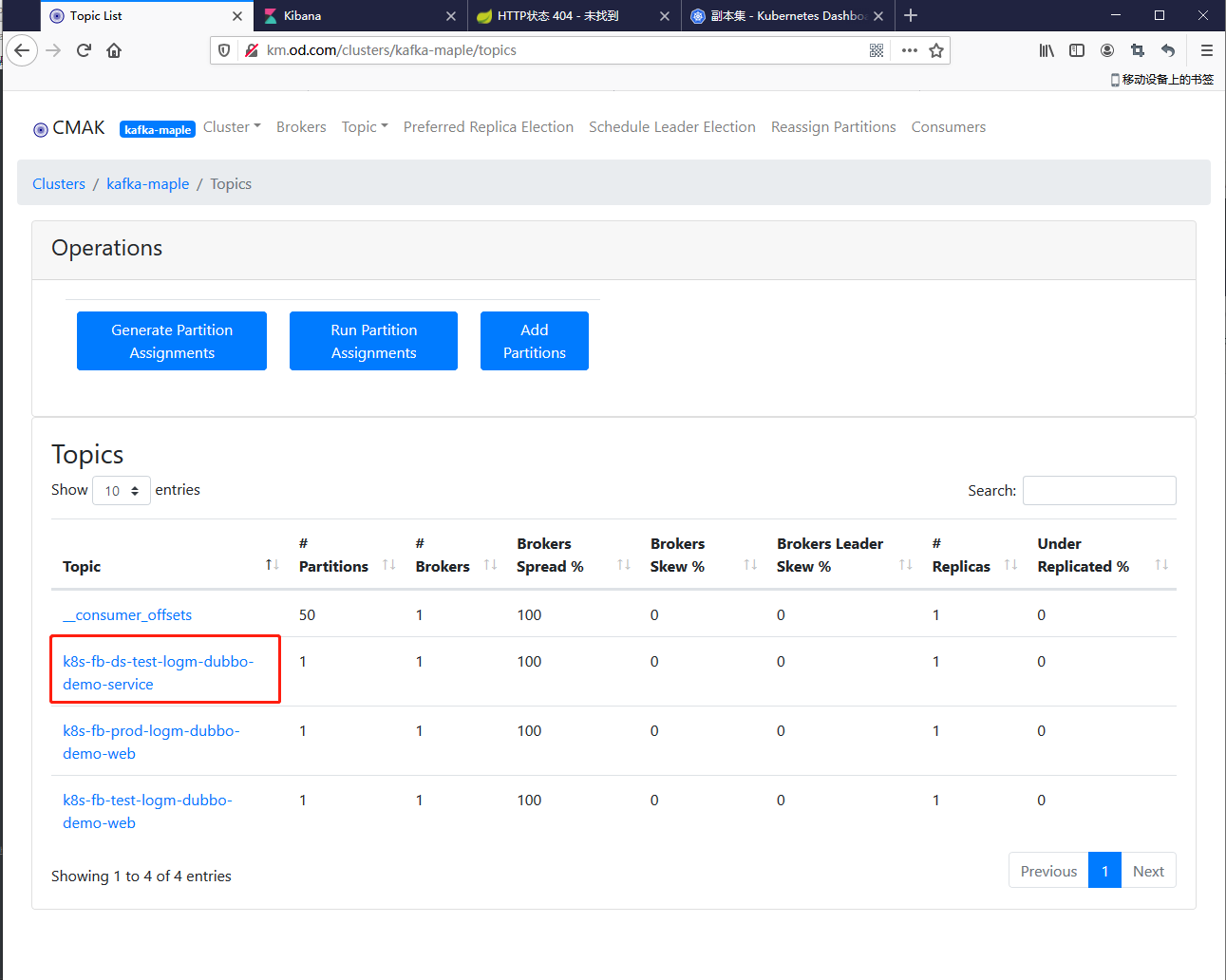

(9) Log in to km.od.com to check

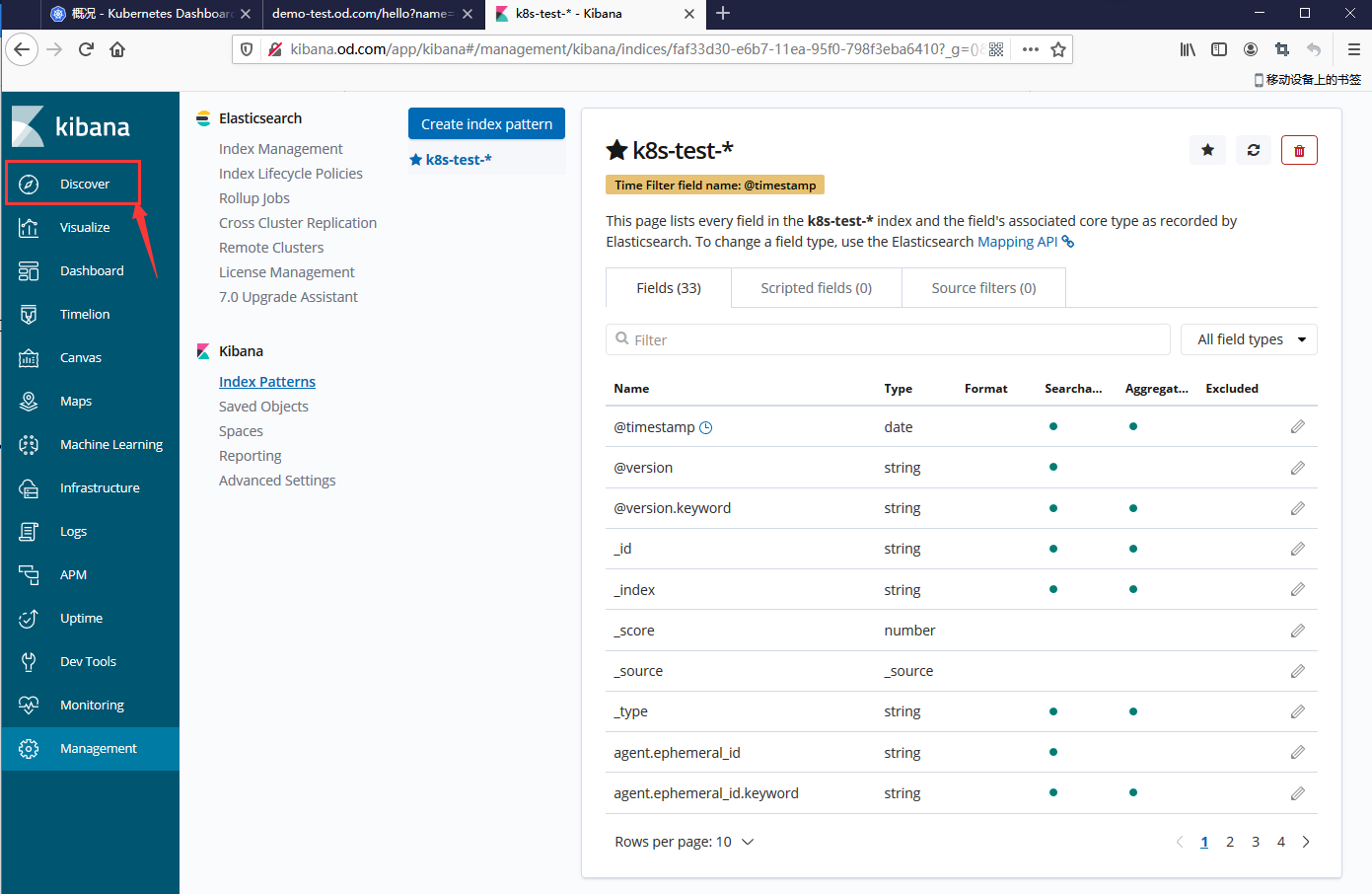

(10) Visit kibana.od.com and add an index pattern

Add the index pattern

Add the timestamp field
