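Setting up a k8s environment with kubeadm

This document covers:

- Basic environment preparation

- Docker installation

- k8s component installation

- k8s-master initialization

- Adding k8s nodes (master/worker)

- Replacing k8s certificates

- Environment preparation

I used a few minimum-spec Alibaba Cloud servers for testing. The instances I provisioned are 2 vCPU / 8 GB and cost roughly 5 CNY a day, which is good value; setting up several machines locally is more hassle.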

- Configure the /etc/hosts file on all hosts

172.24.150.121 k8s-master

172.24.210.132 k8s-master2

172.24.210.131 k8s-master3

172.30.24.149 k8s-node1

172.24.210.130 k8s-node2
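Since these entries have to be added on every host, a small helper can make the step repeatable. The following is a minimal sketch, not part of the original procedure; the function name `add_hosts_entries` is hypothetical, and it assumes each entry is given as a single "IP hostname" string.

```shell
# Append "IP hostname" pairs to a hosts file, skipping hostnames that are
# already mapped. Hypothetical helper for illustration only.
# Usage: add_hosts_entries FILE "IP NAME" ["IP NAME" ...]
add_hosts_entries() {
  local file=$1; shift
  local entry ip name
  for entry in "$@"; do
    ip=${entry%% *}      # text before the first space
    name=${entry##* }    # text after the last space
    # Whole-word match on the hostname so k8s-master does not match k8s-master2.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done
}
```

For example, `add_hosts_entries /etc/hosts "172.24.150.121 k8s-master" "172.24.210.132 k8s-master2"` could be run as root on each host; running it a second time adds nothing.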

- Synchronize time on all hosts

## Set the time zone

# timedatectl set-timezone Asia/Shanghai

## Install the chrony time synchronization tool

# yum makecache fast

# yum -y install chrony

# systemctl start chronyd

# systemctl enable --now chronyd

## Force a time synchronization

# chronyc -a makestep

# date

- Disable the firewall

## firewalld

# systemctl stop firewalld

# systemctl disable firewalld

## iptables

# systemctl stop iptables

# systemctl disable iptables

- Disable SELinux

# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# setenforce 0

- Disable swap

sed -i '/swap/s/^\(.*\)$/#\1/g' /etc/fstab # disable automatic mounting of swap

swapoff -a # turn off swap now

sysctl -w vm.swappiness=0
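The sed command above comments out every line containing "swap", including lines that are already commented, so running it twice produces `##` prefixes. A minimal sketch of an idempotent variant follows; the function name `disable_swap_entries` is hypothetical, and it assumes GNU sed and an fstab where "swap" appears as a whitespace-separated field.

```shell
# Comment out active swap entries in an fstab-style file and leave lines
# that are already commented untouched. FILE defaults to /etc/fstab.
# Hypothetical helper for illustration only (GNU sed assumed).
disable_swap_entries() {
  local file=${1:-/etc/fstab}
  # Skip comment lines; on the rest, prefix "#" when "swap" is a whole field.
  sed -i -E '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}
```

Trying it on a copy first, for example `cp /etc/fstab /tmp/fstab.test && disable_swap_entries /tmp/fstab.test`, is a safe way to check the result before touching the real file.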

- Configure bridge forwarding

Pass bridged IPv4 traffic to the iptables chains

# cat <<EOF >/etc/sysctl.d/kubernetes.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

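# modprobe br_netfilter # load the bridge netfilter module first; the net.bridge.* keys only exist once it is loaded

# lsmod | grep br_netfilter # confirm the module is loaded

# sysctl --system # reload the sysctl settings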

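- Configure ipvs

kube-proxy in K8s has two proxy modes, iptables and ipvs. ipvs performs better, but the ipvs kernel modules have to be loaded manually; ipset and ipvsadm are the userspace tools installed alongside them.

- If the kernel is 4.19 or later, do the following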

# yum install -y ipset ipvsadm

# cat <<EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

# chmod 755 /etc/sysconfig/modules/ipvs.modules

# bash /etc/sysconfig/modules/ipvs.modules

# lsmod|grep -e ip_vs -e nf_conntrack

- If the kernel is older than 4.19, do the following

# yum install -y ipset ipvsadm

# cat <<EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# chmod 755 /etc/sysconfig/modules/ipvs.modules

# bash /etc/sysconfig/modules/ipvs.modules

# lsmod|grep -e ip_vs -e nf_conntrack_ipv4
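The two variants above differ only in the conntrack module name, so the choice can be derived from the kernel release. The sketch below is one way to do that; the function name `conntrack_module_for` is hypothetical, and it simply applies the 4.19 threshold stated in this document (distribution kernels with backports may behave differently).

```shell
# Print the conntrack module name for a kernel release string, using the
# 4.19 threshold from the text. Defaults to the running kernel.
# Hypothetical helper for illustration only.
conntrack_module_for() {
  local release=${1:-$(uname -r)}
  local major minor
  major=${release%%.*}                              # "3" from "3.10.0-1160.el7"
  minor=${release#*.}; minor=${minor%%[!0-9]*}      # "10" from "10.0-1160.el7"
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}
```

With this, the last modprobe line of the script could be written as `modprobe -- "$(conntrack_module_for)"` so that a single script serves both cases.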

- Reboot the server

# reboot

- Install Docker

Docker's default cgroup driver is cgroupfs, while K8s recommends systemd, so change Docker's cgroup driver

# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# yum -y install docker-ce-20.10.12 docker-ce-cli-20.10.12 containerd.io

# systemctl start docker.service

# systemctl enable docker.service

# cat << EOF > /etc/docker/daemon.json

> {

> "exec-opts": ["native.cgroupdriver=systemd"]

> }

> EOF

# systemctl daemon-reload

# systemctl restart docker

# systemctl status docker.service

# docker info|grep Cgroup
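To check the result in a script rather than by eye, the driver value can be pulled out of the `docker info` text. This is a small sketch under the assumption that the output contains a line of the form `Cgroup Driver: <value>`; the function name `cgroup_driver_from_info` is hypothetical.

```shell
# Read `docker info` text on stdin and print the value of the
# "Cgroup Driver:" line. Hypothetical helper for illustration only.
cgroup_driver_from_info() {
  awk -F': *' '/^[ \t]*Cgroup Driver:/ {print $2; exit}'
}
```

A possible use is `[ "$(docker info 2>/dev/null | cgroup_driver_from_info)" = "systemd" ] || echo "cgroup driver is not systemd"`.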

- Install the k8s components (kubeadm, kubelet, and kubectl)

- Add the kubernetes package repository

# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

- Choose a supported kubernetes version and install it

# yum list kubelet --showduplicates

# yum -y install kubeadm-1.17.2 kubelet-1.17.2 kubectl-1.17.2

# systemctl enable kubelet # kubelet will keep restarting at this point; that is expected, so carry on with the next steps

- Change kubelet's cgroup driver

# cat <<EOF > /etc/sysconfig/kubelet

> KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

> KUBE_PROXY_MODE="ipvs"

> EOF

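- Run the following steps on k8s-master

- Pull the images that kubeadm needs for initialization onto the server in advance, or let them be pulled automatically during initialization

1.1. Pull the required images from the default image registry

# kubeadm config images list # list the required images

# kubeadm config images pull # pull the images needed for initialization in advance

1.2. Pull the required images from a specified image registry

# kubeadm config print init-defaults > kubeadm-config.yaml # print the default kubeadm configuration as a template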

# vim kubeadm-config.yaml

...

localAPIEndpoint:

  advertiseAddress: 192.168.132.131 # IP of the master node

...

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: 192.168.132.131 # changed to the IP address here; if you use a hostname, it must be resolvable

...

etcd:

  local:

    dataDir: /var/lib/etcd # the etcd container's data directory is mounted at /var/lib/etcd on the host to prevent data loss

imageRepository: k8s.gcr.io # image registry; inside China you can change this to `registry.aliyuncs.com/google_containers`

kind: ClusterConfiguration

kubernetesVersion: v1.17.0 # k8s version

# kubeadm config images pull --config kubeadm-config.yaml # pull the images to the local host
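Instead of editing the file in vim, the `imageRepository` change described above can be scripted. The following is a minimal sketch assuming GNU sed and a top-level `imageRepository:` key as printed by `kubeadm config print init-defaults`; the function name `set_image_repository` is hypothetical, and the registry value must not contain `|` or `&`.

```shell
# Replace the value of the top-level imageRepository key in a kubeadm
# config file, keeping any trailing comment on the line.
# Hypothetical helper for illustration only (GNU sed assumed).
set_image_repository() {
  local file=$1 repo=$2
  sed -i -E "s|^(imageRepository:)[[:space:]]*[^[:space:]#]+|\1 ${repo}|" "$file"
}
```

For example: `set_image_repository kubeadm-config.yaml registry.aliyuncs.com/google_containers`, followed by the `kubeadm config images pull --config kubeadm-config.yaml` command shown above.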

- Initialize the k8s-master environment

# kubeadm init --apiserver-advertise-address=172.24.150.121 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.17.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl get no # check node status

# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml # install the CNI network plugin

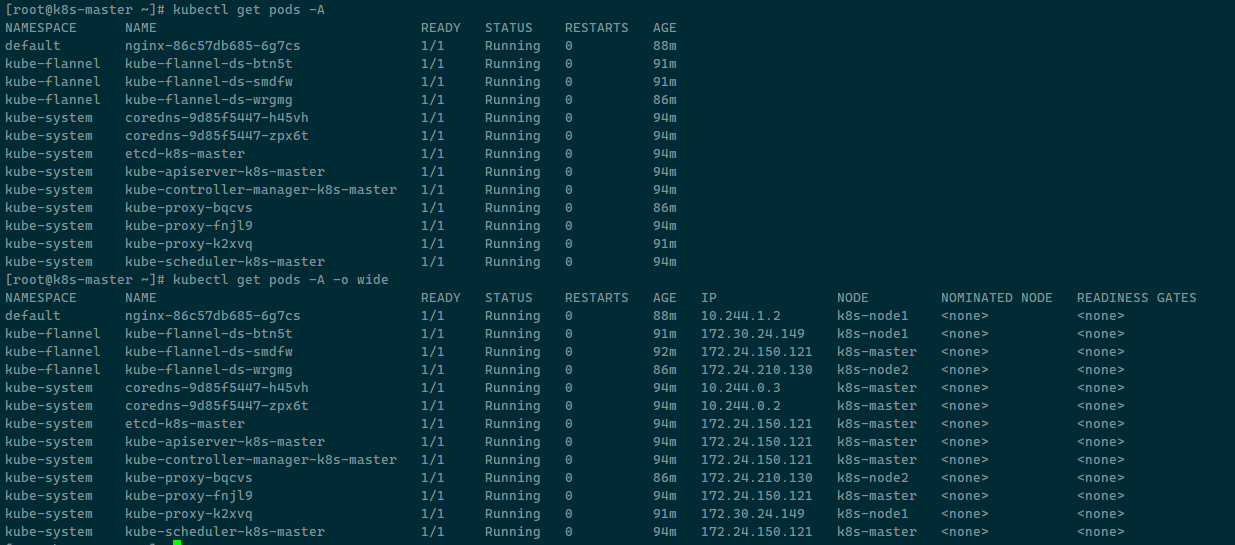

# kubectl get pods -A # check the status of all base pods

- Add a node (worker)

# kubeadm token create --print-join-command # run on the k8s-master node

W1101 17:44:05.183326 10619 validation.go:28] Cannot validate kube-proxy config - no validator is available

W1101 17:44:05.183364 10619 validation.go:28] Cannot validate kubelet config - no validator is available

kubeadm join 172.24.150.121:6443 --token pswsh0.qj4deisvnpd96579 --discovery-token-ca-cert-hash sha256:b75a1189a42b8dd43481de8ffc4bd0e704da1cfcb21b3264bc10d6b01622ac85

# kubeadm join 172.24.150.121:6443 --token pswsh0.qj4deisvnpd96579 --discovery-token-ca-cert-hash sha256:b75a1189a42b8dd43481de8ffc4bd0e704da1cfcb21b3264bc10d6b01622ac85 # run on the k8s-node1 node

Check the status of all k8s nodes

# kubectl get nodes

Check the status of all pods in the cluster; there are 3 flannel pods, one for each of the 3 nodes

# kubectl get pods -A
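When the join step is automated, the token and CA hash sometimes need to be picked out of the join command printed on the master. The sketch below shows one way to do that with plain word splitting; the function name `join_flag_value` is hypothetical, and it assumes the flags are written as `--flag value` (not `--flag=value`), as in the output shown above.

```shell
# Print the word that follows FLAG in a kubeadm join command line.
# Returns non-zero when the flag is not found.
# Hypothetical helper for illustration only.
# Usage: join_flag_value FLAG "JOIN COMMAND"
join_flag_value() {
  local flag=$1; shift
  local prev="" word
  for word in $*; do            # intentional word splitting of the command line
    if [ "$prev" = "$flag" ]; then
      echo "$word"
      return 0
    fi
    prev=$word
  done
  return 1
}
```

For example, `join_flag_value --token "$(kubeadm token create --print-join-command 2>/dev/null)"` would print only the token.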

- Add a master node

- Export the kubeadm configuration file

# kubectl -n kube-system get configmap kubeadm-config -o jsonpath='{.data.ClusterConfiguration}' > kubeadm.yaml

- Edit the configuration file

# cat kubeadm.yaml

apiServer:

  certSANs: # additional entries added here

  - api.k8s.local # required

  - k8s-master # original master node

  - k8s-master2 # hostname of the new master node

  - k8s-master3 # hostname of the new master node

  - 172.24.150.121 # IP address of the original master node

  - 172.24.210.132 # IP address of the new master node

  - 172.24.210.131 # IP address of the new master node

  extraArgs:

    authorization-mode: Node,RBAC

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: 172.24.150.121:6443 # added; if you have a VIP, put the VIP here

controllerManager: {}

- Or edit the configuration directly

# kubectl -n kube-system edit cm kubeadm-config

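- Apply the updated kubeadm.yaml configuration to the ConfigMap

# mv /etc/kubernetes/pki/apiserver.{crt,key} ~ # back up the current certificate

# kubeadm init phase certs apiserver --config kubeadm.yaml # regenerate the certificate so that it includes the new master nodes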

# docker ps | grep kube-apiserver | grep -v pause

af25aa8c6dec 41ef50a5f06a "kube-apiserver --ad…" 19 hours ago Up 19 hours k8s_kube-apiserver_kube-apiserver-k8s-master_kube-system_33e35b30f262113fa4d1c0f2529a0e4e_0

# docker kill af25aa8c6dec # restart the apiserver

- Check that the certificate was updated

# openssl x509 -in /etc/kubernetes/pki/apiserver.crt -text
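The full `-text` dump is long, and the part that matters here is the Subject Alternative Name list. A minimal sketch for checking a single name follows; the function name `cert_has_san` is hypothetical, and it assumes the SAN entries are printed as comma-separated `DNS:<name>` and `IP Address:<ip>` items, as openssl normally does.

```shell
# Read `openssl x509 -text` output on stdin and succeed if NAME appears
# among the SAN entries, either as "DNS:NAME" or "IP Address:NAME".
# Hypothetical helper for illustration only.
cert_has_san() {
  local name=$1
  tr ',' '\n' \
    | sed -E 's/^[[:space:]]+//' \
    | grep -qxF -e "DNS:${name}" -e "IP Address:${name}"
}
```

For example: `openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | cert_has_san k8s-master2 && echo "SAN present"`.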

- Update the kubeadm config

# kubeadm init phase upload-config kubeadm --config kubeadm.yaml

- Check that the update succeeded

# kubectl -n kube-system get configmap kubeadm-config -o yaml

- Point every apiserver endpoint at the virtual IP

# vim /etc/kubernetes/kubelet.conf

......

server: https://172.24.210.121:6443 # here I changed it to the current node's apiserver endpoint

name: kubernetes

......

# systemctl restart kubelet

# vim /etc/kubernetes/controller-manager.conf

......

server: https://172.24.210.121:6443

name: kubernetes

......

# docker kill $(docker ps | grep kube-controller-manager |grep -v pause | cut -d' ' -f1)

# vim /etc/kubernetes/scheduler.conf

......

server: https://172.24.210.121:6443

name: kubernetes

......

# docker kill $(docker ps | grep kube-scheduler | grep -v pause |cut -d' ' -f1)

# kubectl -n kube-system edit cm kube-proxy

......

  kubeconfig.conf: |-

    apiVersion: v1

    kind: Config

    clusters:

    - cluster:

        certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

        server: https://172.24.210.121:6443

      name: default

# kubectl -n kube-public edit cm cluster-info

......

server: https://172.24.210.121:6443

name: ""

......

# kubectl cluster-info

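- Copy the certificates from k8s-master to the new master node

# mkdir -p /etc/kubernetes/pki/etcd # create the directory on the new node

# scp /etc/kubernetes/admin.conf root@172.24.210.131:/etc/kubernetes # copy the certificates from the existing master node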

# scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@172.24.210.131:/etc/kubernetes/pki

# scp /etc/kubernetes/pki/etcd/ca.* root@172.24.210.131:/etc/kubernetes/pki/etcd

- Add the new master node to the cluster

On the existing master node:

# kubeadm token create --print-join-command

W1102 14:58:07.063150 12251 validation.go:28] Cannot validate kube-proxy config - no validator is available

W1102 14:58:07.063185 12251 validation.go:28] Cannot validate kubelet config - no validator is available

kubeadm join 172.24.150.121:6443 --token itszwu.osv5qxhypnr2lbe9 --discovery-token-ca-cert-hash sha256:b75a1189a42b8dd43481de8ffc4bd0e704da1cfcb21b3264bc10d6b01622ac85

# kubeadm init phase upload-certs --upload-certs # for kubeadm 1.16+

I1102 14:58:13.743507 12293 version.go:251] remote version is much newer: v1.25.3; falling back to: stable-1.17

W1102 14:58:14.932331 12293 validation.go:28] Cannot validate kube-proxy config - no validator is available

W1102 14:58:14.932346 12293 validation.go:28] Cannot validate kubelet config - no validator is available

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

6e35decf66119359ad2f05190e294ed36e8c2b182d91b4e9458b996c06cc94af

# kubeadm init phase upload-certs --experimental-upload-certs # for older kubeadm versions (before 1.16)

On the new master node:

# kubeadm join 172.24.150.121:6443 --token itszwu.osv5qxhypnr2lbe9 --discovery-token-ca-cert-hash sha256:b75a1189a42b8dd43481de8ffc4bd0e704da1cfcb21b3264bc10d6b01622ac85 --control-plane --certificate-key 6e35decf66119359ad2f05190e294ed36e8c2b182d91b4e9458b996c06cc94af

Output printed after running the command...

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.
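The control-plane join command used above is the worker join command plus `--control-plane` and the certificate key printed by `upload-certs`. A small sketch for assembling it is shown below; the function name `control_plane_join_cmd` is hypothetical, and the check that the key is 64 lowercase hex characters is an assumption based on the key shown in the output above.

```shell
# Print (not run) a control-plane join command built from a worker join
# command and a certificate key. Hypothetical helper for illustration only.
# Usage: control_plane_join_cmd "JOIN COMMAND" CERT_KEY
control_plane_join_cmd() {
  local join_cmd=$1 cert_key=$2
  # Assumption: the key is 64 lowercase hex characters, as in the sample output.
  case $cert_key in
    ""|*[!0-9a-f]*)
      echo "certificate key must be lowercase hex" >&2
      return 1 ;;
  esac
  if [ "${#cert_key}" -ne 64 ]; then
    echo "certificate key must be 64 characters long" >&2
    return 1
  fi
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}
```

The printed line can then be reviewed and run by hand on the new master node.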

- Check the cluster

# kubectl get nodes # check that the nodes are Ready; if a node is NotReady, check whether the network plugin pod is healthy, or look at the kubelet logs

# kubectl get pods -A # check that all pods are Running

# kubectl get pods -n kube-system

# kubectl get cs

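# kubectl cluster-info # check the cluster status

- Certificate renewal

When renewing the cluster certificates, it is best to pass the kubeadm config file explicitly; otherwise the command may finish without generating the certificate files

kubeadm config view > /tmp/cluster.yaml # export the current cluster's kubeadm configuration

cp -rp /etc/kubernetes /etc/kubernetes.bak

# Back up etcd (recommended)

docker cp $(docker ps | grep -v etcd-mirror | grep -w etcd | awk '{print $1}'):/usr/local/bin/etcdctl /usr/local/bin/ # a kubeadm install has no etcdctl on the host, so copy the binary out of the container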

etcdctl --endpoints 127.0.0.1:2379 \

--cert="/etc/kubernetes/pki/etcd/server.crt" \

--key="/etc/kubernetes/pki/etcd/server.key" \

--cacert="/etc/kubernetes/pki/etcd/ca.crt" \

snapshot save etcd_snap_save.db

# Delete the certificate files

rm -f /etc/kubernetes/pki/apiserver*

rm -f /etc/kubernetes/pki/front-proxy-client.*

rm -rf /etc/kubernetes/pki/etcd/healthcheck-client.*

rm -rf /etc/kubernetes/pki/etcd/server.*

rm -rf /etc/kubernetes/pki/etcd/peer.*

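kubeadm alpha certs renew all --config /tmp/cluster.yaml # from version 1.5+ on, renewal does not require deleting the old certificate files

ls -l /etc/kubernetes/pki # check that the certificate files were generated, and compare them with the backup

# Move the old kubeconfig files out of the way

mv /etc/kubernetes/*.conf /tmp/

# Generate new kubeconfig files

kubeadm init phase kubeconfig all --config /tmp/cluster.yaml

# Check that the new kubeconfig files were generated, and compare them with the old ones to confirm they changed

ls -l /etc/kubernetes/*.conf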

cp /etc/kubernetes/admin.conf ~/.kube/config

systemctl restart kubelet

kubeadm alpha certs check-expiration # check that the certificates were renewed

# If anything looks wrong, try restarting the k8s components

docker ps | grep -E 'k8s_kube-apiserver|k8s_kube-controller-manager|k8s_kube-scheduler|k8s_etcd_etcd' | awk -F ' ' '{print $1}' | xargs docker restart

kubectl get no # check the cluster status

kubectl get pods -A # check that all pods are running normally

Alternatively, renew the certificates used by each component one at a time:

etcd healthcheck-client certificate: kubeadm alpha certs renew etcd-healthcheck-client --config kubeadm-config.yaml

etcd peer certificate: kubeadm alpha certs renew etcd-peer --config kubeadm-config.yaml

etcd server certificate: kubeadm alpha certs renew etcd-server --config kubeadm-config.yaml

front-proxy-client certificate: kubeadm alpha certs renew front-proxy-client --config kubeadm-config.yaml

apiserver-etcd-client certificate: kubeadm alpha certs renew apiserver-etcd-client --config kubeadm-config.yaml

apiserver-kubelet-client certificate: kubeadm alpha certs renew apiserver-kubelet-client --config kubeadm-config.yaml

apiserver certificate: kubeadm alpha certs renew apiserver --config kubeadm-config.yaml
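The per-component renewals listed above all follow the same pattern, so they can be generated in a loop. The sketch below only prints the commands so they can be reviewed before anything is executed; the function name `renew_commands` is hypothetical, and the certificate names are exactly the seven listed in this section.

```shell
# Print (not run) one `kubeadm alpha certs renew` command per certificate
# named in this section. Hypothetical helper for illustration only.
# Usage: renew_commands CONFIG_FILE
renew_commands() {
  local config=$1 cert
  for cert in etcd-healthcheck-client etcd-peer etcd-server \
              front-proxy-client apiserver-etcd-client \
              apiserver-kubelet-client apiserver; do
    echo "kubeadm alpha certs renew ${cert} --config ${config}"
  done
}
```

For example, `renew_commands kubeadm-config.yaml` lists the seven commands; after checking them, the output could be piped to `sh` on a master node.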

Zhejiang Public Network Security Filing No. 33010602011771