Classic MapReduce Examples


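The core idea of MapReduce is divide and conquer: the input is split into pieces that are processed independently (map), and the partial results are then merged by key (reduce).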
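To make the divide-and-conquer idea concrete before bringing in Hadoop, here is a minimal in-memory sketch of the map, shuffle, and reduce steps in plain Java. The class `MiniMapReduce` and its method names are made up for illustration and are not part of Hadoop; the real framework distributes these steps across tasks.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory imitation of map -> shuffle -> reduce (no Hadoop involved).
class MiniMapReduce {

    // "Map": turn one line into (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // "Shuffle": group the values of identical keys; TreeMap keeps the keys sorted.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // "Reduce": merge all values of one key into a single result.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Runs the three steps over all input lines.
    static Map<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : shuffle(pairs).entrySet()) {
            result.put(e.getKey(), reduce(e.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // prints {hadoop=1, hello=2, world=1}
        System.out.println(run(Arrays.asList("hello world", "hello hadoop")));
    }
}
```

Each line is mapped on its own, which is the "divide" part; the grouping by key is what lets the reduce step work on one word at a time.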

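The examples below are meant for basic learning and use only a small amount of data, so they run locally and do not need a connection to a virtual machine.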

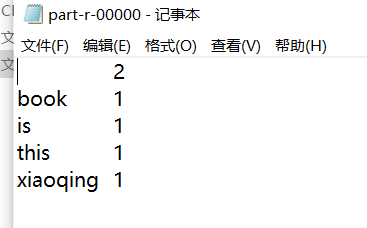

The result of every example below can be viewed in the part-r-00000 file inside the output folder that the job creates.
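With the default text output format, each line of a reducer output file such as part-r-00000 holds a key and a value separated by a tab. The following is a small sketch for loading such a file back into a map; `PartFileReader` is a hypothetical helper, and the default path in `main` is only an assumption based on the word count example's output folder.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for inspecting a job's text output; not part of Hadoop.
class PartFileReader {

    // Parses "key<TAB>value" lines; a line without a tab becomes a key with an empty value.
    static Map<String, String> parse(List<String> lines) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String line : lines) {
            if (line.isEmpty()) {
                continue;
            }
            int tab = line.indexOf('\t');
            if (tab < 0) {
                result.put(line, "");
            } else {
                result.put(line.substring(0, tab), line.substring(tab + 1));
            }
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        // Assumed location; pass another path as the first argument if needed.
        String file = args.length > 0 ? args[0] : "D:/hadooptest/ansofcount/part-r-00000";
        List<String> lines = Files.readAllLines(Paths.get(file), StandardCharsets.UTF_8);
        parse(lines).forEach((k, v) -> System.out.println(k + " -> " + v));
    }
}
```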

Classic MapReduce Example: Word Count

Put a number of space-separated words into a file, then count how many times each word occurs.

Code

package qfnu;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

// Mapper: emits (word, 1) for every word in an input line
class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    protected void map(LongWritable key, Text value,
            Mapper<LongWritable, Text, Text, IntWritable>.Context context)
            throws IOException, InterruptedException {
        // key is the byte offset of the line, value is the line itself
        String line = value.toString();
        String[] words = line.split(" ");
        for (String word : words) {
            context.write(new Text(word), new IntWritable(1));
        }
    }
}

// Reducer: sums the 1s collected for each word
class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values,
            Reducer<Text, IntWritable, Text, IntWritable>.Context context)
            throws IOException, InterruptedException {
        int count = 0;
        for (IntWritable value : values) {
            count += value.get();
        }
        context.write(key, new IntWritable(count));
    }
}

public class WordCountDriver {

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        // Use the local job runner, so no cluster is needed
        Configuration conf = new Configuration();
        conf.set("mapreduce.framework.name", "local");
        // Pass conf to the job; otherwise the setting above is ignored
        Job job = Job.getInstance(conf);
        job.setJarByClass(WordCountDriver.class);
        job.setMapperClass(WordCountMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setReducerClass(WordCountReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        // Input file to read
        FileInputFormat.setInputPaths(job, "D:/hadooptest/count.txt");
        // Output directory: Hadoop creates it, and the job fails if it already exists
        FileOutputFormat.setOutputPath(job, new Path("D:/hadooptest/ansofcount"));
        job.waitForCompletion(true);
    }
}

The result can be viewed in the part-r-00000 file under D:/hadooptest/ansofcount.
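One caveat about `line.split(" ")` in the mapper above: if a line contains consecutive or leading spaces, the split produces empty strings, and those would be counted as a "word" too. A possible way around this, sketched with a hypothetical helper named `Tokenizer`, is to trim the line, split on runs of whitespace, and skip empty tokens.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper; the mapper could call tokenize(line) instead of line.split(" ").
class Tokenizer {

    // Splits on any run of whitespace and drops empty tokens.
    static List<String> tokenize(String line) {
        List<String> words = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                words.add(word);
            }
        }
        return words;
    }

    public static void main(String[] args) {
        // Two spaces between the words yield an empty token: prints [a, , b]
        System.out.println(Arrays.toString("a  b".split(" ")));
        // prints [a, b]
        System.out.println(tokenize("a  b"));
    }
}
```

The `isEmpty` check also covers blank lines, for which `"".split("\\s+")` returns a single empty string.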

Classic MapReduce Example: Inverted Index

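An inverted index makes searching easier: it maps each word to the files that contain it, together with how often the word occurs in each file.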
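As a rough picture of the data structure itself, the following plain-Java sketch builds an inverted index in memory and prints each word with a "file:count;" list, the same shape the reducer further down writes. The class `InvertedIndexSketch`, the file names, and the sample sentences are all made up for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory inverted index; no Hadoop involved.
class InvertedIndexSketch {

    // Builds word -> (file name -> number of occurrences in that file).
    static Map<String, Map<String, Integer>> build(Map<String, String> files) {
        Map<String, Map<String, Integer>> index = new TreeMap<>();
        for (Map.Entry<String, String> file : files.entrySet()) {
            for (String word : file.getValue().trim().split("\\s+")) {
                if (word.isEmpty()) {
                    continue;
                }
                index.computeIfAbsent(word, k -> new TreeMap<>())
                     .merge(file.getKey(), 1, Integer::sum);
            }
        }
        return index;
    }

    // Formats one posting list as "file:count;file:count;".
    static String format(Map<String, Integer> postings) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, Integer> p : postings.entrySet()) {
            sb.append(p.getKey()).append(':').append(p.getValue()).append(';');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Made-up sample input: file name -> file content
        Map<String, String> files = new LinkedHashMap<>();
        files.put("file1.txt", "MapReduce is simple");
        files.put("file2.txt", "MapReduce is powerful is simple");
        build(files).forEach((word, postings) ->
                System.out.println(word + "\t" + format(postings)));
    }
}
```

Looking up a word is then a single map access, instead of scanning every file for it.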

Code

package qfnu;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

// Mapper: emits ("word:fileName", "1") for every word in a line
class InvertedIndexMapper extends Mapper<LongWritable, Text, Text, Text> {

    private final Text keyInfo = new Text();
    private final Text valueInfo = new Text("1");

    @Override
    protected void map(LongWritable key, Text value,
            Mapper<LongWritable, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        String line = value.toString();
        String[] words = line.split(" ");
        // Name of the file the current input split belongs to
        FileSplit fileSplit = (FileSplit) context.getInputSplit();
        String fileName = fileSplit.getPath().getName();
        for (String word : words) {
            keyInfo.set(word + ":" + fileName);
            context.write(keyInfo, valueInfo);
        }
    }
}

// Combiner: sums the counts of each "word:fileName" key, then rewrites the
// pair as (word, "fileName:count").
// Caveat: Hadoop does not guarantee how often a combiner runs. This design
// relies on it running exactly once per map output, which is typically the
// case for the small local inputs used here.
class InvertedIndexCombiner extends Reducer<Text, Text, Text, Text> {

    private final Text keyInfo = new Text();
    private final Text valueInfo = new Text();

    @Override
    protected void reduce(Text key, Iterable<Text> values,
            Reducer<Text, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        int count = 0;
        for (Text value : values) {
            count += Integer.parseInt(value.toString());
        }
        // Split "word:fileName" at the first colon
        int splitIndex = key.toString().indexOf(":");
        keyInfo.set(key.toString().substring(0, splitIndex));
        valueInfo.set(key.toString().substring(splitIndex + 1) + ":" + count);
        context.write(keyInfo, valueInfo);
    }
}

// Reducer: joins all "fileName:count" values of a word into one list
class InvertedIndexReducer extends Reducer<Text, Text, Text, Text> {

    private final Text valueInfo = new Text();

    @Override
    protected void reduce(Text key, Iterable<Text> values,
            Reducer<Text, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        StringBuilder fileList = new StringBuilder();
        for (Text value : values) {
            fileList.append(value.toString()).append(";");
        }
        valueInfo.set(fileList.toString());
        context.write(key, valueInfo);
    }
}

public class InvertedIndexDriver {

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        // Use the local job runner, so no cluster is needed
        Configuration conf = new Configuration();
        conf.set("mapreduce.framework.name", "local");
        // Pass conf to the job; otherwise the setting above is ignored
        Job job = Job.getInstance(conf);
        job.setJarByClass(InvertedIndexDriver.class);
        job.setMapperClass(InvertedIndexMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);
        job.setCombinerClass(InvertedIndexCombiner.class);
        job.setReducerClass(InvertedIndexReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        FileInputFormat.setInputPaths(job, new Path("D:/hadooptest/InvertedIndexReducer.txt"));
        // The output directory must not exist yet; Hadoop creates it
        FileOutputFormat.setOutputPath(job, new Path("D:/hadooptest/ansofInvertedIndexReducer"));
        job.waitForCompletion(true);
    }
}

Classic MapReduce Example: Data Deduplication

package qfnu;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

// Mapper: uses the whole line as the key, with an empty value
class DeDupMapper extends Mapper<LongWritable, Text, Text, Text> {

    @Override
    protected void map(LongWritable key, Text value,
            Mapper<LongWritable, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        context.write(value, new Text(""));
    }
}

// Reducer: identical lines arrive grouped under one key, so writing each key
// once removes the duplicates
class DeDupReducer extends Reducer<Text, Text, Text, Text> {

    @Override
    protected void reduce(Text key, Iterable<Text> values,
            Reducer<Text, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        context.write(key, new Text(""));
    }
}

public class DeDupDriver {

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        // Use the local job runner, so no cluster is needed
        Configuration conf = new Configuration();
        conf.set("mapreduce.framework.name", "local");
        // Pass conf to the job; otherwise the setting above is ignored
        Job job = Job.getInstance(conf);
        job.setJarByClass(DeDupDriver.class);
        job.setMapperClass(DeDupMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);
        job.setReducerClass(DeDupReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        FileInputFormat.setInputPaths(job, new Path("D:/hadooptest/dedup.txt"));
        // The output directory must not exist yet; Hadoop creates it
        FileOutputFormat.setOutputPath(job, new Path("D:/hadooptest/ansdedup"));
        job.waitForCompletion(true);
    }
}
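The job above never compares two lines explicitly. It works because the shuffle groups identical keys, so the reducer is called once per distinct line. The same effect can be imitated in plain Java with a sorted set, as in this small sketch; `DeDupSketch` and the sample records are invented for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeSet;

// Hypothetical in-memory imitation of the dedup job; no Hadoop involved.
class DeDupSketch {

    // A TreeSet keeps one copy of each line in sorted order, much like the
    // shuffle presents each distinct key once, in sorted order.
    static List<String> dedup(List<String> lines) {
        return new ArrayList<>(new TreeSet<>(lines));
    }

    public static void main(String[] args) {
        // Made-up sample records
        List<String> lines = Arrays.asList(
                "2018-3-2 b", "2018-3-1 a", "2018-3-2 b", "2018-3-1 a", "2018-3-3 c");
        // prints [2018-3-1 a, 2018-3-2 b, 2018-3-3 c]
        System.out.println(dedup(lines));
    }
}
```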

Classic MapReduce Example: Top-N Sorting

package qfnu;

import java.io.IOException;
import java.util.Comparator;
import java.util.TreeMap;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

// Mapper: keeps the 5 largest numbers seen by this map task
class TopNMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    // Ascending order: firstKey() is the smallest number kept so far
    private TreeMap<Integer, String> treeMap = new TreeMap<Integer, String>();

    @Override
    protected void map(LongWritable key, Text value,
            Mapper<LongWritable, Text, Text, IntWritable>.Context context)
            throws IOException, InterruptedException {
        String line = value.toString();
        String[] values = line.split(" ");
        for (String v : values) {
            treeMap.put(Integer.parseInt(v), "");
            // More than 5 entries: drop the smallest one
            if (treeMap.size() > 5) {
                treeMap.remove(treeMap.firstKey());
            }
        }
    }

    @Override
    protected void cleanup(Mapper<LongWritable, Text, Text, IntWritable>.Context context)
            throws IOException, InterruptedException {
        // Emit the local top 5 under one shared key so a single reduce call sees them all
        for (Integer key : treeMap.keySet()) {
            context.write(new Text("value"), new IntWritable(key));
        }
    }
}

// Reducer: merges the local results into the global top 5
class TopNReducer extends Reducer<Text, IntWritable, IntWritable, Text> {

    // Descending order: firstKey() is the largest number, lastKey() the smallest
    private TreeMap<Integer, String> treeMap = new TreeMap<Integer, String>(
            new Comparator<Integer>() {
                @Override
                public int compare(Integer a, Integer b) {
                    // Integer.compare avoids the overflow that b - a can cause
                    return Integer.compare(b, a);
                }
            });

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values,
            Reducer<Text, IntWritable, IntWritable, Text>.Context context)
            throws IOException, InterruptedException {
        for (IntWritable value : values) {
            treeMap.put(value.get(), "");
            if (treeMap.size() > 5) {
                // Drop the smallest number, which is lastKey() in descending order;
                // removing firstKey() here would discard the largest one
                treeMap.remove(treeMap.lastKey());
            }
        }
        // Write the top 5 from largest to smallest
        for (Integer i : treeMap.keySet()) {
            context.write(new IntWritable(i), new Text(""));
        }
    }
}

public class TopNDriver {

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        // Use the local job runner, so no cluster is needed
        Configuration conf = new Configuration();
        conf.set("mapreduce.framework.name", "local");
        // Pass conf to the job; otherwise the setting above is ignored
        Job job = Job.getInstance(conf);
        job.setJarByClass(TopNDriver.class);
        job.setMapperClass(TopNMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setReducerClass(TopNReducer.class);
        job.setOutputKeyClass(IntWritable.class);
        job.setOutputValueClass(Text.class);
        FileInputFormat.setInputPaths(job, new Path("D:/hadooptest/TopN.txt"));
        // The output directory must not exist yet; Hadoop creates it
        FileOutputFormat.setOutputPath(job, new Path("D:/hadooptest/ansofTopN"));
        job.waitForCompletion(true);
    }
}
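The mapper and the reducer above share one technique: keep a sorted collection that never grows beyond N entries, and evict the smallest entry whenever it does. Stripped of the Hadoop types, the idea might look like the sketch below. `TopNSketch` is a hypothetical name, and N is a parameter here rather than the fixed 5 used in the job.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeSet;

// Hypothetical plain-Java version of the bounded top-N idea; no Hadoop involved.
class TopNSketch {

    // Returns the n largest distinct numbers, largest first.
    static List<Integer> topN(Iterable<Integer> numbers, int n) {
        TreeSet<Integer> best = new TreeSet<>();
        for (int x : numbers) {
            best.add(x);
            if (best.size() > n) {
                best.pollFirst(); // evict the current minimum
            }
        }
        return new ArrayList<>(best.descendingSet());
    }

    public static void main(String[] args) {
        List<Integer> numbers = Arrays.asList(10, 3, 8, 7, 6, 5, 1, 2, 9, 4);
        // prints [10, 9, 8, 7, 6]
        System.out.println(topN(numbers, 5));
    }
}
```

Like the TreeMap in the job, a sorted set stores each number only once, so duplicate values in the input collapse into a single entry.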

