Notes on gevent + asyncio MCP issues

```python
from gevent import monkey

monkey.patch_all()

import grpc.experimental.gevent

grpc.experimental.gevent.init_gevent()
```

```python
def invoke_mcp(self,
               model_mode: ModelMode,
               prompt_template_entity: PromptTemplateEntity,
               tenant_id: str,
               provider: str,
               model: str,
               query: str,
               prompt_messages: list[PromptMessage],
               callback: Any,
               mcp_ids: list[str],
               credentials: dict,
               stream: bool) -> None:
    """
    Invoke MCP services synchronously.

    :param model_mode: model mode
    :param prompt_template_entity: prompt template entity
    :param tenant_id: tenant ID
    :param provider: model provider
    :param model: model name
    :param query: query text
    :param prompt_messages: list of prompt messages
    :param callback: callback function
    :param mcp_ids: list of MCP service IDs
    :param credentials: model credentials
    :param stream: whether to stream the response
    :return: None (events and the final result are delivered through callback)
    """
    try:
        features = ModelProviderService().get_model_features(tenant_id=tenant_id,
                                                             provider=provider,
                                                             model=model)
    except Exception as e:
        logger.error(f"Failed to get model features: {e}")
        features = []

    # Assemble the MCP service configs
    mcp_list: list[dict] = MCPService.assemble_mcp_configs_tools(tenant_id=tenant_id, mcp_ids=mcp_ids)

    # Main async function
    async def main_async_function():
        # System prompt
        try:
            sys_original_prompt = self.parse_system_prompt_message(
                model_mode=model_mode,
                prompt_template_entity=prompt_template_entity
            )
        except Exception as _e:
            logger.error(f"Failed to assemble the system prompt: {_e}")
            sys_original_prompt = None
        prompt = sys_original_prompt.content if sys_original_prompt else ""

        # Use an async context manager to manage resources
        async with contextlib.AsyncExitStack() as exit_stack:
            total_tools: list[BaseTool] = []
            # Convert the MCP configs into LangChain tools
            for mcp_config in mcp_list:
                if not mcp_config:
                    continue
                m_provider = mcp_config.get("provider", "")
                m_config = mcp_config.get('config', {})
                m_tools = mcp_config.get('tools', [])
                try:
                    tools, cleanup_func = await convert_mcp_to_langchain_tools(m_config)
                    # Register the cleanup function with exit_stack
                    if cleanup_func:
                        exit_stack.push_async_callback(cleanup_func)
                    for t in tools:
                        # Update the metadata
                        if t.metadata:
                            t.metadata = {
                                **t.metadata,
                                "provider": m_provider
                            }
                        else:
                            t.metadata = {
                                "provider": m_provider
                            }
                        # Add the tool only if it is enabled
                        for _tool in m_tools:
                            if _tool and 'name' in _tool and 'is_enabled' in _tool and \
                                    _tool.get('name', '') == t.name and _tool.get('is_enabled', False):
                                total_tools.append(t)
                                break
                except Exception as tool_error:
                    logger.error(f"Failed to load MCP tools: {tool_error}", exc_info=True)

            # Build a name -> tool map
            tools_map: dict[str, BaseTool] = MCPUtils.parse_langchain_tools(tools=total_tools)
            # Create the LLM client
            llm_client = self.create_llm_client(provider=provider, model=model, credentials=credentials)
            # Create the ReAct agent
            graph = create_react_agent(llm_client, tools=total_tools, prompt=prompt)
            # Prepare the input
            messages = MCPUtils.convert_prompt_messages_for_langchain(
                prompt_messages=prompt_messages, query=query)
            input_data = {"messages": messages}
            # Create the data parser
            data_parser = LCRAEventProcessor()
            # Reference (citation) info
            mcp: list = []
            # LLM usage
            usage: LLMUsage = LLMUsage.empty_usage()
            usage.provider = provider
            usage.model = model
            usage.features = features
            if stream:
                # Streaming
                async for event in graph.astream_events(input_data, version="v1"):
                    chunk: LCRAChunk = data_parser.process_stream(raw_chunk=event)
                    MCPUtils.deal_langchain_chunk(
                        chunk=chunk, tools_map=tools_map, mcp=mcp,
                        usage=usage, callback=callback)
            else:
                # Non-streaming
                _result = await graph.ainvoke(input_data)
                logger.warning("Non-streaming mode")
                # TODO: handle the non-streaming result...
            # Return the result
            return {"status": "success"}

    # Run the async code in a new thread
    def run_in_thread():
        try:
            # Use anyio.run to run the async code and avoid managing the event loop by hand
            import anyio
            # noinspection PyArgumentList, PyTypeChecker
            return anyio.run(main_async_function)
        except Exception as _err:
            logger.error(f"Error in thread execution: {_err}", exc_info=True)
            if callback:
                print(1111111111)
                callback({"event": "error", "output": str(_err)})
                print(2222222222)
            return {"status": "error", "message": str(_err)}

    # Use a thread pool executor
    with ThreadPoolExecutor() as executor:
        result = executor.submit(run_in_thread).result(timeout=20)

    # If needed, pass the result on through the callback
    print("result", result)
    if callback and result:
        print(33333333333)
        callback({"event": "final_result", "result": result})
    return None
```

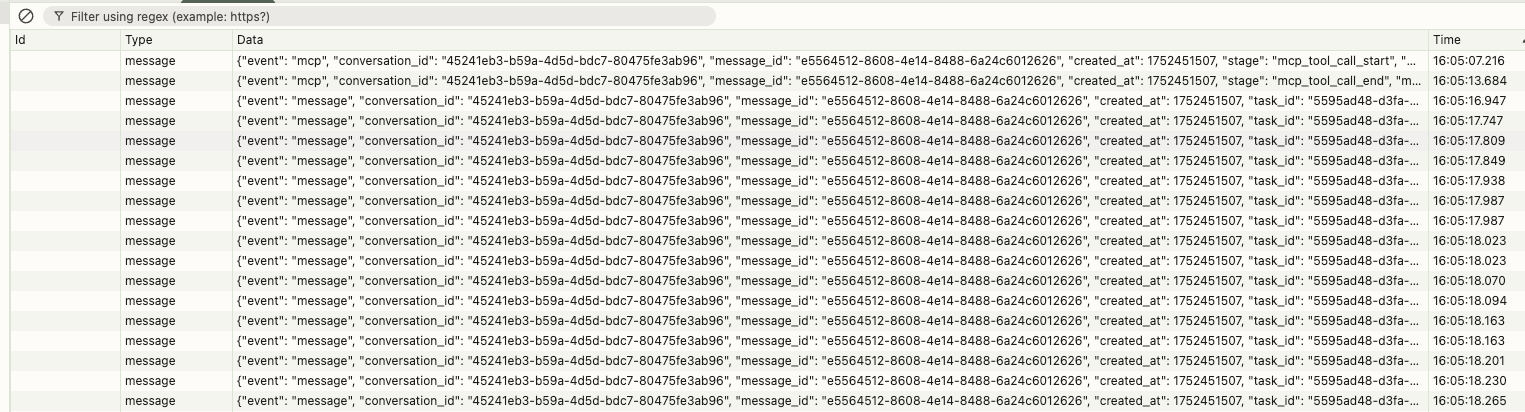

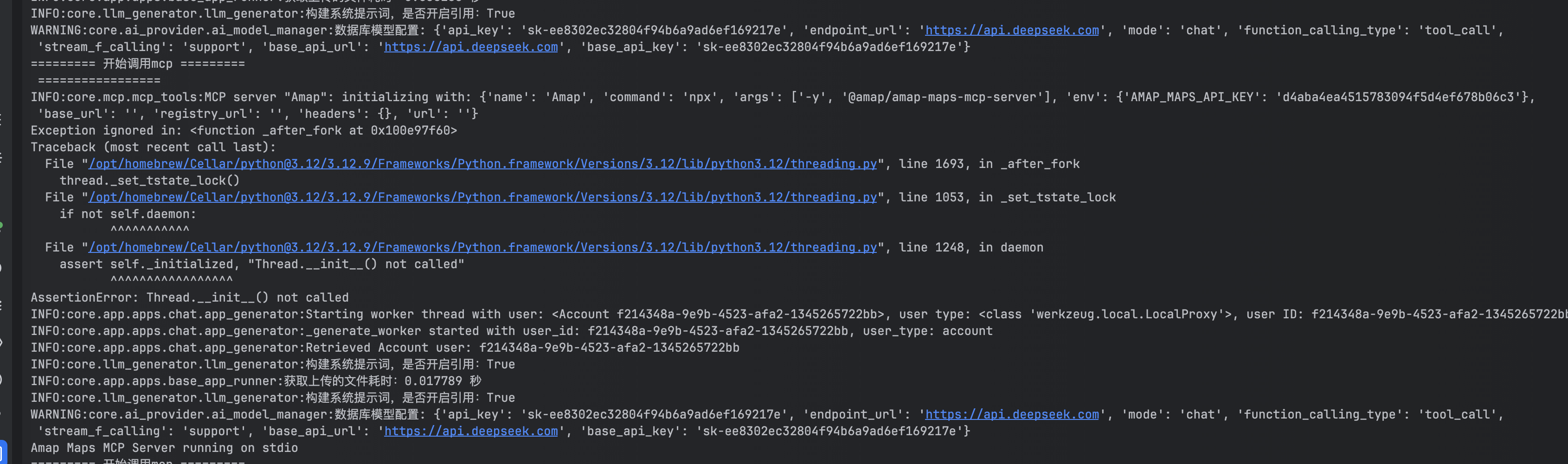

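With gevent enabled, calling the invoke_mcp-related endpoints works fine as long as they are called only once. When they are called repeatedly, the error below appears, and the request that hit the error stays in the loading state indefinitely.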

```
'stream_f_calling': 'support', 'base_api_url': 'https://api.deepseek.com', 'base_api_key': 'sk-ee8302ec32804f94b6a9ad6ef169217e'}
ERROR:core.app.apps.base_app_runner:Error in thread execution: Cannot run the event loop while another loop is running
Traceback (most recent call last):
  File "/Users/txmmy/oneai-projects/rag-api/.venv/lib/python3.12/site-packages/anyio/_backends/_asyncio.py", line 2310, in run
    return runner.run(wrapper())
           ^^^^^^^^^^^^^^^^^^^^^
  File "/opt/homebrew/Cellar/python@3.12/3.12.9/Frameworks/Python.framework/Versions/3.12/lib/python3.12/asyncio/runners.py", line 93, in run
    raise RuntimeError(
RuntimeError: Runner.run() cannot be called from a running event loop

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/Users/txmmy/oneai-projects/rag-api/core/app/apps/base_app_runner.py", line 986, in run_in_thread
    return anyio.run(main_async_function)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/Users/txmmy/oneai-projects/rag-api/.venv/lib/python3.12/site-packages/anyio/_core/_eventloop.py", line 74, in run
    return async_backend.run(func, args, {}, backend_options)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/Users/txmmy/oneai-projects/rag-api/.venv/lib/python3.12/site-packages/anyio/_backends/_asyncio.py", line 2309, in run
    with Runner(debug=debug, loop_factory=loop_factory) as runner:
         ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/opt/homebrew/Cellar/python@3.12/3.12.9/Frameworks/Python.framework/Versions/3.12/lib/python3.12/asyncio/runners.py", line 62, in __exit__
    self.close()
  File "/opt/homebrew/Cellar/python@3.12/3.12.9/Frameworks/Python.framework/Versions/3.12/lib/python3.12/asyncio/runners.py", line 71, in close
    loop.run_until_complete(loop.shutdown_asyncgens())
  File "/opt/homebrew/Cellar/python@3.12/3.12.9/Frameworks/Python.framework/Versions/3.12/lib/python3.12/asyncio/base_events.py", line 667, in run_until_complete
    self._check_running()
  File "/opt/homebrew/Cellar/python@3.12/3.12.9/Frameworks/Python.framework/Versions/3.12/lib/python3.12/asyncio/base_events.py", line 628, in _check_running
    raise RuntimeError(
RuntimeError: Cannot run the event loop while another loop is running
```
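The second error can be reproduced with plain asyncio, without gevent. `asyncio.get_running_loop()` is documented as returning the running loop of the current OS thread. A plausible reading of the trace, which is an assumption consistent with the later finding that concurrent calls end up sharing one loop, is this: after `monkey.patch_all()` the `ThreadPoolExecutor` workers are greenlets on the same OS thread, so a second `anyio.run()` sees the first call's loop as already running. The sketch below starts a fresh loop while another loop is running on the same thread:

```python
import asyncio


async def inner() -> str:
    return "done"


async def outer() -> str:
    # While outer() runs, asyncio considers a loop "running" on this OS thread,
    # which is what a second greenlet on the same thread would also observe.
    fresh_loop = asyncio.new_event_loop()
    coro = inner()
    try:
        fresh_loop.run_until_complete(coro)
        return "no error"
    except RuntimeError as exc:
        coro.close()  # never scheduled; close it to avoid a "never awaited" warning
        return str(exc)
    finally:
        fresh_loop.close()


message = asyncio.run(outer())
print(message)  # Cannot run the event loop while another loop is running
```

The first error in the trace, `Runner.run() cannot be called from a running event loop`, comes from the same running-loop check inside `asyncio.Runner`, which `anyio.run` uses on this Python 3.12 install.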

How MCP works

Config -> ClientSession -> stdio_client / HTTP / SSE transports -> the model selects a tool and executes it by calling session.call_tool

Transport layer implementation

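STDIO transport: used for inter-process communication on the local machine. The client and server exchange messages over standard input/output. stdio_client starts the server as a child process and exposes a pair of read/write streams. The client writes messages to the server's stdin, and the server reads them from stdin and writes its replies to stdout.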
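Below is a minimal sketch of the stdio idea. It assumes MCP's convention of newline-delimited JSON-RPC 2.0 messages: the client launches the server as a child process, writes one request per line to its stdin, and reads the reply line from its stdout. The toy server and `call_over_stdio` are illustrative stand-ins, not the MCP SDK:

```python
import json
import subprocess
import sys

# Toy "server": reads one JSON-RPC request per line from stdin and answers on stdout.
SERVER_CODE = r"""
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    response = {"jsonrpc": "2.0", "id": request["id"], "result": {"echo": request["method"]}}
    sys.stdout.write(json.dumps(response) + "\n")
    sys.stdout.flush()
"""


def call_over_stdio(method: str, request_id: int = 1) -> dict:
    # Launch the server as a child process, as stdio_client does with the configured command.
    proc = subprocess.Popen(
        [sys.executable, "-c", SERVER_CODE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": request_id, "method": method}
        proc.stdin.write(json.dumps(request) + "\n")  # one message per line
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        proc.stdin.close()  # EOF ends the server's read loop
        proc.wait(timeout=5)
        proc.stdout.close()


print(call_over_stdio("ping"))
```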

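HTTP + SSE transport: used for remote communication over the network. The server sends messages to the client as Server-Sent Events (SSE), and the client sends messages to the server with HTTP POST. On the server side, an HTTP server listens on a specific port and path. When a client sends a request, the server handles it according to its type and content and sends the response back to the client over SSE.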
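An SSE stream is plain text. Each event is a block of `field: value` lines, and a blank line terminates the block. In the MCP HTTP+SSE transport (spec revision 2024-11-05), the server's first event is an `endpoint` event that carries the URL the client should POST its messages to. The parser below is a simplified sketch: it handles `event` and `data` fields and comment lines, and ignores `id` and `retry`. The sample stream and its session id are made up:

```python
def parse_sse(text: str) -> list[dict]:
    """Split an SSE stream into events (simplified: only `event` and `data` fields)."""
    events = []
    event_type, data_lines = "message", []  # "message" is the default SSE event type
    for line in text.splitlines():
        if line == "":
            # A blank line dispatches the event collected so far (if it has data).
            if data_lines:
                events.append({"event": event_type, "data": "\n".join(data_lines)})
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment / keep-alive line
        else:
            field, _, value = line.partition(":")
            value = value[1:] if value.startswith(" ") else value
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)
    return events


sample = (
    "event: endpoint\n"
    "data: /messages/?session_id=abc123\n"
    "\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}\n'
    "\n"
)
for ev in parse_sse(sample):
    print(ev["event"], "->", ev["data"])
```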

Code breakdown

- Assemble the system prompt
- Convert the MCP servers into LangChain tools
- Let the ReAct agent select and execute tools
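The second step tags each MCP tool with its provider and keeps only the tools enabled in the configuration. That is plain data processing and can be sketched without LangChain. `FakeTool` and `select_enabled_tools` are hypothetical stand-ins for `BaseTool` and the loop inside `main_async_function`. The config shape (`tools` entries with `name` / `is_enabled`) mirrors what that loop reads:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FakeTool:
    """Hypothetical stand-in for a LangChain BaseTool: just a name and metadata."""
    name: str
    metadata: Optional[dict] = None


def select_enabled_tools(tools: list, provider: str, tool_configs: list) -> list:
    # Names that are explicitly enabled in the MCP config.
    enabled = {
        cfg["name"]
        for cfg in tool_configs
        if cfg and cfg.get("name") and cfg.get("is_enabled", False)
    }
    selected = []
    for tool in tools:
        # Keep existing metadata and add the provider, as the original loop does.
        tool.metadata = {**(tool.metadata or {}), "provider": provider}
        if tool.name in enabled:
            selected.append(tool)
    return selected


tools = [FakeTool("maps_weather"), FakeTool("maps_geo", {"source": "sse"})]
configs = [{"name": "maps_weather", "is_enabled": True}, {"name": "maps_geo", "is_enabled": False}]
picked = select_enabled_tools(tools, "amap-maps", configs)
print([t.name for t in picked])  # ['maps_weather']
```

A set lookup gives the same result as the original nested loop with `break`, without rescanning the config for every tool.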

Solutions

Method 1: isolate the call in a subprocess with multiprocessing

```python
def invoke_mcp(self,
               model_mode: ModelMode,
               prompt_template_entity: PromptTemplateEntity,
               tenant_id: str,
               provider: str,
               model: str,
               query: str,
               prompt_messages: list[PromptMessage],
               callback: Any,
               mcp_ids: list[str],
               credentials: dict,
               stream: bool) -> None:
    """
    Invoke MCP services synchronously (multiprocessing + asyncio + gevent compatible approach).
    """
    import multiprocessing
    import asyncio
    import logging
    import contextlib
    from core.mcp.mcp_utils import MCPUtils
    from core.mcp.mcp_tools import convert_mcp_to_langchain_tools
    from langchain_openai import ChatOpenAI
    from langgraph.prebuilt import create_react_agent
    from core.app.apps.utils.lcra_message_processor import LCRAEventProcessor, LCRAChunk, LCRAEventType, \
        LCRAEventNameEnum
    from core.ai_provider.entities.llm_entities import LLMUsage
    from services.mcp.mcp_service import MCPService
    from services.ai_provider_service import AIProviderService as ModelProviderService

    logger = logging.getLogger(__name__)

    try:
        features = ModelProviderService().get_model_features(tenant_id=tenant_id,
                                                             provider=provider,
                                                             model=model)
    except Exception as e:
        logger.error(f"Failed to get model features: {e}")
        features = []

    mcp_list: list[dict] = MCPService.assemble_mcp_configs_tools(tenant_id=tenant_id, mcp_ids=mcp_ids)

    def run_async_in_subprocess(result_queue):
        import asyncio
        import contextlib

        async def main_async_function():
            try:
                sys_original_prompt = self.parse_system_prompt_message(
                    model_mode=model_mode,
                    prompt_template_entity=prompt_template_entity
                )
            except Exception as _e:
                logger.error(f"Failed to assemble the system prompt: {_e}")
                sys_original_prompt = None
            prompt = sys_original_prompt.content if sys_original_prompt else ""

            async with contextlib.AsyncExitStack() as exit_stack:
                total_tools = []
                for mcp_config in mcp_list:
                    if not mcp_config:
                        continue
                    m_provider = mcp_config.get("provider", "")
                    m_config = mcp_config.get('config', {})
                    m_tools = mcp_config.get('tools', [])
                    try:
                        tools, cleanup_func = await convert_mcp_to_langchain_tools(m_config)
                        if cleanup_func:
                            exit_stack.push_async_callback(cleanup_func)
                        for t in tools:
                            if t.metadata:
                                t.metadata = {
                                    **t.metadata,
                                    "provider": m_provider
                                }
                            else:
                                t.metadata = {"provider": m_provider}
                            for _tool in m_tools:
                                if _tool and 'name' in _tool and 'is_enabled' in _tool and \
                                        _tool.get('name', '') == t.name and _tool.get('is_enabled', False):
                                    total_tools.append(t)
                                    break
                    except Exception as tool_error:
                        logger.error(f"Failed to load MCP tools: {tool_error}", exc_info=True)

                tools_map = MCPUtils.parse_langchain_tools(tools=total_tools)
                llm_client = self.create_llm_client(provider=provider, model=model, credentials=credentials)
                graph = create_react_agent(llm_client, tools=total_tools, prompt=prompt)
                messages = MCPUtils.convert_prompt_messages_for_langchain(
                    prompt_messages=prompt_messages, query=query)
                input_data = {"messages": messages}
                data_parser = LCRAEventProcessor()
                mcp = []
                usage: LLMUsage = LLMUsage.empty_usage()
                usage.provider = provider
                usage.model = model
                usage.features = features
                try:
                    if stream:
                        async for event in graph.astream_events(input_data, version="v1"):
                            chunk: LCRAChunk = data_parser.process_stream(raw_chunk=event)
                            MCPUtils.deal_langchain_chunk(
                                chunk=chunk, tools_map=tools_map, mcp=mcp,
                                usage=usage, callback=callback)
                    else:
                        _result = await graph.ainvoke(input_data)
                        logger.warning("Non-streaming mode")
                        # TODO: handle the non-streaming result...
                    return {"status": "success"}
                finally:
                    # exit_stack cleans up all async resources automatically
                    pass

        try:
            result = asyncio.run(main_async_function())
            result_queue.put(result)
        except RuntimeError as e:
            if "Attempted to exit cancel scope" in str(e):
                print("Ignore cancel scope error during shutdown:", e)
                result_queue.put({"status": "warning", "message": str(e)})
            else:
                raise

    result_queue = multiprocessing.Queue()
    p = multiprocessing.Process(target=run_async_in_subprocess, args=(result_queue,))
    p.start()
    # Read the result before join(): joining a child that still has unflushed
    # queue data can deadlock (see the multiprocessing programming guidelines)
    result = result_queue.get()
    p.join()
    if callback and result:
        callback({"event": "final_result", "result": result})
    return None
```

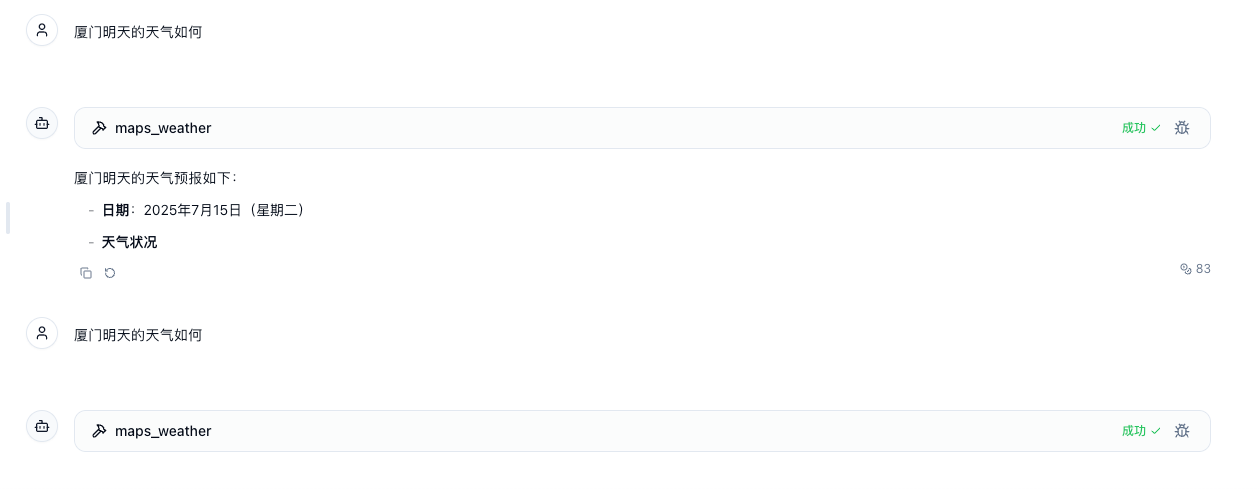

Test case 1: one session

The output is produced normally, but an error is raised.

Test case 2: two sessions

Method 2: create a new loop

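```python
try:
    loop = asyncio.get_event_loop()
except RuntimeError:
    # If there is no loop in the current context, create a new one and set it as the current loop.
    # This may be necessary in some test or script scenarios.
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
```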
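A likely reason this does not help: when a loop is already running on the current thread, `asyncio.get_event_loop()` returns that running loop. The `except` branch that would create a fresh loop is therefore never reached. A quick check with plain asyncio:

```python
import asyncio


async def probe() -> bool:
    running = asyncio.get_running_loop()
    # With a loop running, get_event_loop() returns that same loop, not a new one.
    return asyncio.get_event_loop() is running


print(asyncio.run(probe()))  # True
```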

Result: an error saying the loop is already running.

Method 2: asyncio_gevent

In invoke_mcp, comment out run_in_thread and the thread-pool code, and replace them with:

```python
future = main_async_function()
greenlet = asyncio_gevent.future_to_greenlet(future)
greenlet.run()
```

出现问题:

-

同时测试两个会话,最后一个会话会一直转圈

-

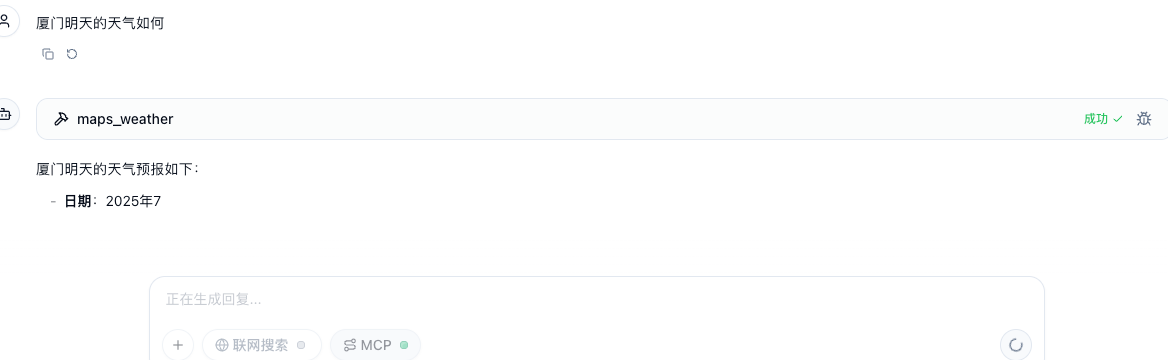

同时测试三个会话,第一个会话正常输出,其他两个会话正常调用,但模型输出会卡住,有时候总结没输出就卡住,有时候输出总结完成也卡住。如:

找出原因:

追溯源码可以发现,并发时三个mcp共享一个loop,也就是第一个会话结束,session已关闭,其他两个会话却还没执行完毕,还在回调阶段,导致出现阻塞的情况:

```python
def future_to_greenlet(
    future: asyncio.Future,
    loop: Optional[asyncio.AbstractEventLoop] = None,
    autostart_future: bool = True,
    autocancel_future: bool = True,
    autokill_greenlet: bool = True,
) -> gevent.Greenlet:
    """
    Wrap a future in a greenlet.

    The greenlet returned by this function will not start automatically, so you
    need to call `Greenlet.start()` manually.

    If `future` is a coroutine object, it will be scheduled as a task on the
    `loop` when the greenlet starts. If no `loop` argument has been passed, the
    running event loop will be used. If there is no running event loop, a new
    event loop will be started using the current event loop policy.

    If the future is not already scheduled, then it won't be scheduled for
    execution until the greenlet starts running. To prevent the future from
    being scheduled automatically, you can pass `autostart_future=False` as an
    argument to `future_to_greenlet`.

    If the greenlet gets killed, the by default the future gets cancelled. To
    prevent this from happening and having the future return the
    `GreenletExit` objct instead, you can pass `autocancel_future=False` as an
    argument to `future_to_greenlet`.

    If the future gets cancelled, the greenlet gets killed and will return a
    `GreenletExit`. This default behaviour can be circumvented by passing
    `autokill_greenlet=False` and the greenlet will raise the `CancelledError`
    instead.
    """

    def run():
        active_loop = loop

        # If not loop argument was specified, try and use the running loop
        if not active_loop:
            try:
                active_loop = asyncio.get_running_loop()
            except RuntimeError:
                pass

        ensured_future = None

        try:
            if not autostart_future:
                ensured_future = future
            elif not active_loop:
                # If there's no running loop and no loop argument was specified, get a loop and run it to completion in a spawned greenlet
                active_loop = asyncio.new_event_loop()
                asyncio.set_event_loop(active_loop)
                ensured_future = asyncio.ensure_future(future, loop=active_loop)
                run_until_complete_greenlet = gevent.spawn(
                    active_loop.run_until_complete, ensured_future
                )
                run_until_complete_greenlet.join()
            else:
                # If there's a running loop already or a loop argument was specified, then schedule the future and block until it's done
                ensured_future = asyncio.ensure_future(future, loop=active_loop)

            event = gevent.event.Event()

            def done(_):
                event.set()

            ensured_future.add_done_callback(done)
            event.wait()
            return ensured_future.result()
        except CancelledError:
            if autokill_greenlet:
                greenlet.kill()
            else:
                raise

    greenlet = gevent.Greenlet(run=run)

    def cb(gt):
        if isinstance(gt.value, gevent.GreenletExit):
            future.cancel()

    if autocancel_future:
        greenlet.link_value(cb)

    return greenlet
```
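The shared-loop hazard described above can be illustrated in a simplified form without gevent. This models the symptom, not asyncio_gevent's exact scheduling. Two sessions are scheduled as tasks on one loop, but whoever drives the loop stops as soon as its own session completes. The other session then stays pending until something runs the loop again:

```python
import asyncio


async def session(name: str, delay: float) -> str:
    await asyncio.sleep(delay)  # stands in for model / tool I/O
    return name


shared_loop = asyncio.new_event_loop()
try:
    first = shared_loop.create_task(session("first", 0.01))
    second = shared_loop.create_task(session("second", 0.05))

    # The caller of the first session drives the shared loop only until *its* task is done.
    shared_loop.run_until_complete(first)
    second_stalled = not second.done()  # nobody is running the loop at this point

    # Only when some caller drives the loop again does the second session finish.
    shared_loop.run_until_complete(second)
finally:
    shared_loop.close()

print(first.result(), second_stalled, second.result())  # first True second
```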

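- If the other two sessions are not terminated and the first session is called again, it still outputs normally.

Cause:

The other two sessions end at this point,

whereas the model callbacks of the first session work normally:

Solution: see Method 2-2.

3. An error occurs:

Method 2-2

Replace `greenlet.run()` with `greenlet.start()` followed by `greenlet.get()`: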

```python
def run_mcp(query: str):
    # Fixes the concurrent blocking issue,
    # but it still blocks once the code is moved into the service
    task_id = int(time.time())
    future = invoke_mcp(query)
    greenlet = asyncio_gevent.future_to_greenlet(future, autokill_greenlet=False)
    # greenlet.run()
    greenlet.start()
    result = greenlet.get()
    print("task_id", task_id, result)
    if result:
        print(111111)
        greenlet.kill()
        print("killed", task_id)
    return result
```

API test:

```python
# app.py
# -----------------------------------------------------------------------------
# 1. Environment initialization (must come first)
# -----------------------------------------------------------------------------
import os
import time

# Set an environment variable to avoid gevent-related warnings
os.environ.setdefault('GRPC_DNS_RESOLVER', 'native')

import gevent.monkey

gevent.monkey.patch_all()

import asyncio
import asyncio_gevent
import grpc.experimental.gevent

# After monkey patching, initialize gevent-friendly gRPC and asyncio
grpc.experimental.gevent.init_gevent()
asyncio.set_event_loop_policy(asyncio_gevent.EventLoopPolicy())

# -----------------------------------------------------------------------------
# 2. Imports and configuration
# -----------------------------------------------------------------------------
from flask import Flask, request, jsonify
from gevent.pywsgi import WSGIServer
import logging
from langchain_core.messages import SystemMessage, HumanMessage
from langchain_mcp_tools import McpServersConfig, convert_mcp_to_langchain_tools
from langchain_openai import ChatOpenAI
from my_test.mcp.create_react_agent import create_react_agent  # make sure this import path is correct

# --- Global configuration ---
API_URL = os.environ.get("API_URL", "https://api.oneai.art/v1")
API_KEY = os.environ.get("API_KEY", "sk-uBfTebOwJ2tr2hfEngsdCIpPa5GHJouoAZXHicmFRdXTF8YH")  # preferably read from an environment variable
MODEL_NAME = "qwen2.5-72b-instruct"


# --- Logging configuration ---
def init_logger() -> logging.Logger:
    logging.basicConfig(
        level=logging.INFO,
        format="\x1b[90m[%(levelname)s]\x1b[0m %(message)s"
    )
    return logging.getLogger()


logger = init_logger()


# -----------------------------------------------------------------------------
# 3. Core business logic (async function)
# -----------------------------------------------------------------------------
async def invoke_mcp(query: str):
    """
    Core async invocation logic.

    :param query: the user's query
    """
    print(" ==== invoke mcp start ====")
    cleanup = None  # initialize the cleanup function
    try:
        mcp_config: McpServersConfig = {
            "amap-maps": {
                "url": "https://mcp.amap.com/sse?key=d4aba4ea4515783094f5d4ef678b06c3",
                "headers": {}
            }
        }
        logger.info(f"Invoking MCP with query: '{query}'")

        # 1. Convert the tools
        tools, cleanup = await convert_mcp_to_langchain_tools(mcp_config, logger)

        # 2. Initialize the LLM
        llm_client = ChatOpenAI(
            base_url=API_URL,
            api_key=API_KEY,
            model=MODEL_NAME
        )

        # 3. Create and invoke the agent
        system_prompt = SystemMessage(content="You are a helpful bot named Fred.")
        agent = create_react_agent(llm_client, tools, prompt=system_prompt)
        result = await agent.ainvoke({"messages": [HumanMessage(content=query)]})

        # 4. Extract and return the result
        content = result["messages"][-1].content
        logger.info(f"Received content: {content}")
        return content
    except Exception as e:
        logger.error(f"An error occurred in invoke_mcp: {e}", exc_info=True)
        # Make sure a sensible error message is returned even on failure
        return f"An error occurred: {e}"
    finally:
        # 5. Resource cleanup
        if cleanup:
            await cleanup()
            logger.info("MCP cleanup function executed.")


# -----------------------------------------------------------------------------
# 4. Flask app and API routes
# -----------------------------------------------------------------------------
app = Flask(__name__)


def run_mcp(query: str):
    # Fixes the concurrent blocking issue,
    # but it still blocks once the code is moved into the service
    task_id = int(time.time())
    future = invoke_mcp(query)
    greenlet = asyncio_gevent.future_to_greenlet(future, autokill_greenlet=False)
    # greenlet.run()
    greenlet.start()
    result = greenlet.get()
    print("task_id", task_id, result)
    if result:
        print(111111)
        greenlet.kill()
        print("killed", task_id)
    return result


@app.route('/invoke_mcp', methods=['POST'])
def invoke_api():
    """
    Synchronous Flask API endpoint that receives the request and calls the async logic.
    """
    if not request.json or 'query' not in request.json:
        return jsonify({"error": "Missing 'query' in request body"}), 400
    query = request.json['query']
    try:
        # loop = asyncio.get_event_loop()
        # result_content = loop.run_until_complete(invoke_mcp(query))
        result = run_mcp(query)
        return jsonify({"result": result})
    except Exception as e:
        logger.error(f"Error in API endpoint: {e}", exc_info=True)
        return jsonify({"error": "An internal server error occurred."}), 500


@app.route('/health', methods=['GET'])
def health_check():
    return jsonify({"status": "ok"})


# -----------------------------------------------------------------------------
# 5. Start the server
# -----------------------------------------------------------------------------
if __name__ == '__main__':
    # Used for testing the project's endpoints
    # Run with gevent.pywsgi, the recommended way for development
    host = '0.0.0.0'
    port = 5002
    http_server = WSGIServer((host, port), app)
    logger.info(f"Server starting on http://{host}:{port}")
    http_server.serve_forever()
```

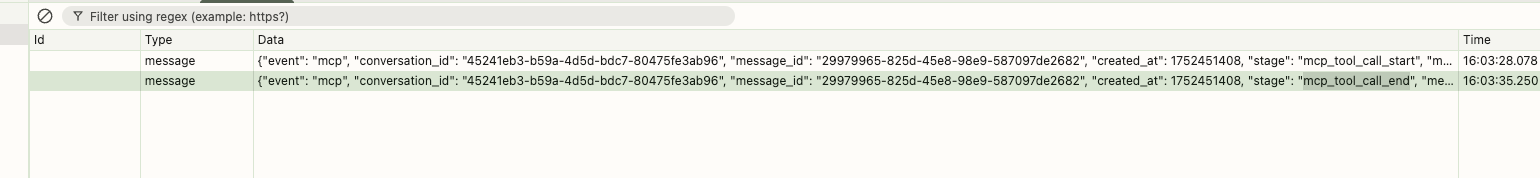

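Multiple concurrent requests return normally without blocking. After the code was migrated into the service, however, it still blocked for reasons that were not clear at first. Investigation showed it was caused by sharing one loop: the loop created by the first call is reused by later calls, so when the first call completes, the other calls are still in their callbacks.

The future_to_greenlet code contains this comment: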

If there's a running loop already or a loop argument was specified, then schedule the future and block until it's done

Method 2-3: create a new loop and isolate it in a thread/subprocess

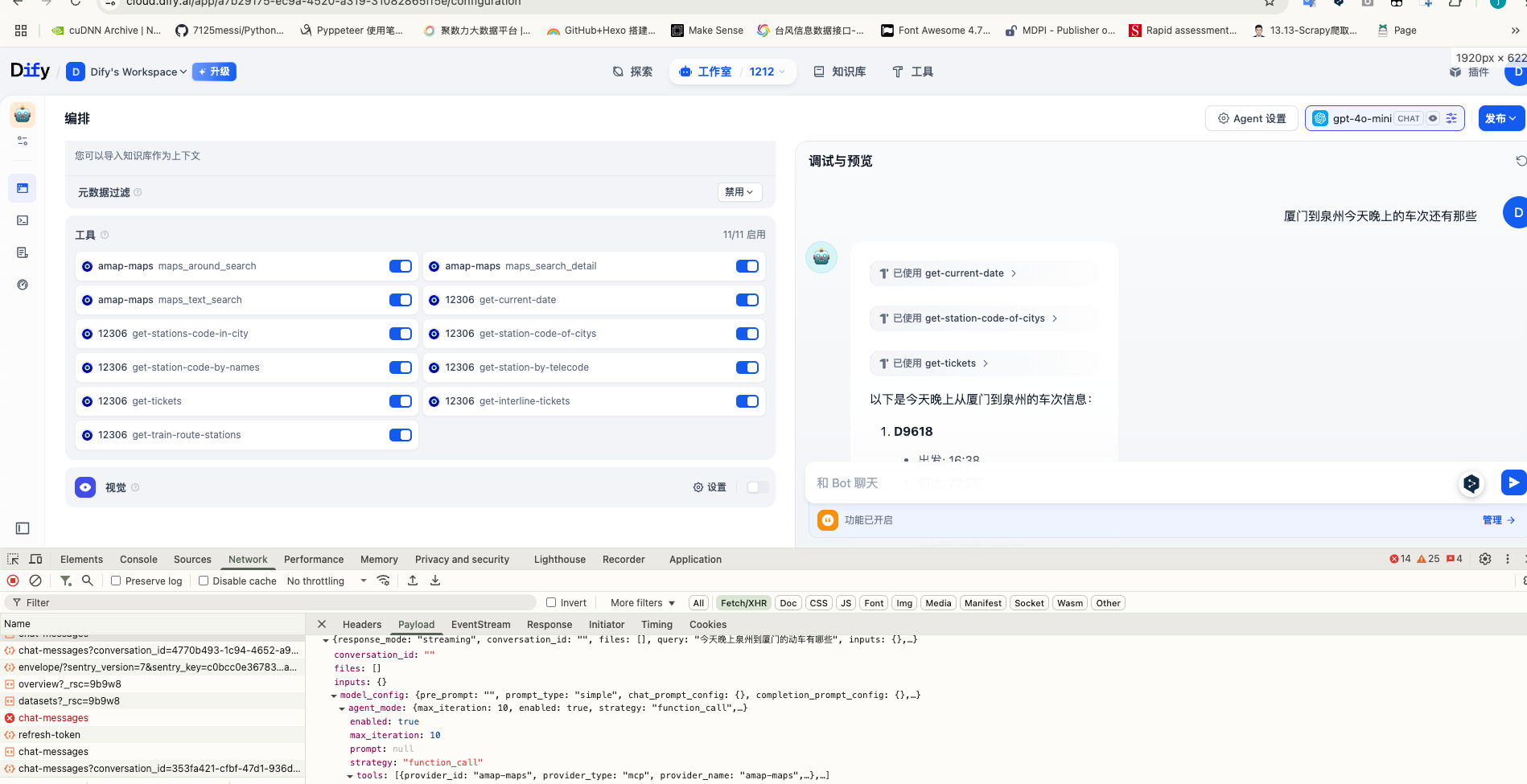
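Here is a minimal sketch of the isolation idea using only the standard library. Each call gets its own `asyncio.run()`, which creates a brand-new loop and closes it afterwards, inside its own worker thread, so no loop is ever shared. `fake_session` is a hypothetical stand-in for `main_async_function`. One caveat, stated as an assumption: under `gevent.monkey.patch_all()` a `ThreadPoolExecutor` worker is a greenlet rather than an OS thread. In the real service, the work would therefore have to run on native threads (for example gevent's own pool, `gevent.get_hub().threadpool`) for this isolation to hold.

```python
import asyncio
import threading
from concurrent.futures import ThreadPoolExecutor


async def fake_session(session_id: int) -> dict:
    # Hypothetical stand-in for main_async_function(): records which loop it ran on.
    loop = asyncio.get_running_loop()
    await asyncio.sleep(0.05)
    return {"session": session_id, "loop_id": id(loop), "thread": threading.get_ident()}


def run_isolated(session_id: int) -> dict:
    # asyncio.run() creates a new loop, runs the coroutine to completion and closes
    # the loop, so nothing is shared with other calls.
    return asyncio.run(fake_session(session_id))


def run_sessions_concurrently(n: int) -> list:
    with ThreadPoolExecutor(max_workers=n) as pool:
        futures = [pool.submit(run_isolated, i) for i in range(n)]
        return [f.result(timeout=10) for f in futures]


results = run_sessions_concurrently(3)
print(len({r["loop_id"] for r in results}))  # 3
```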

方法四,参考dify

mcp配置成工具,

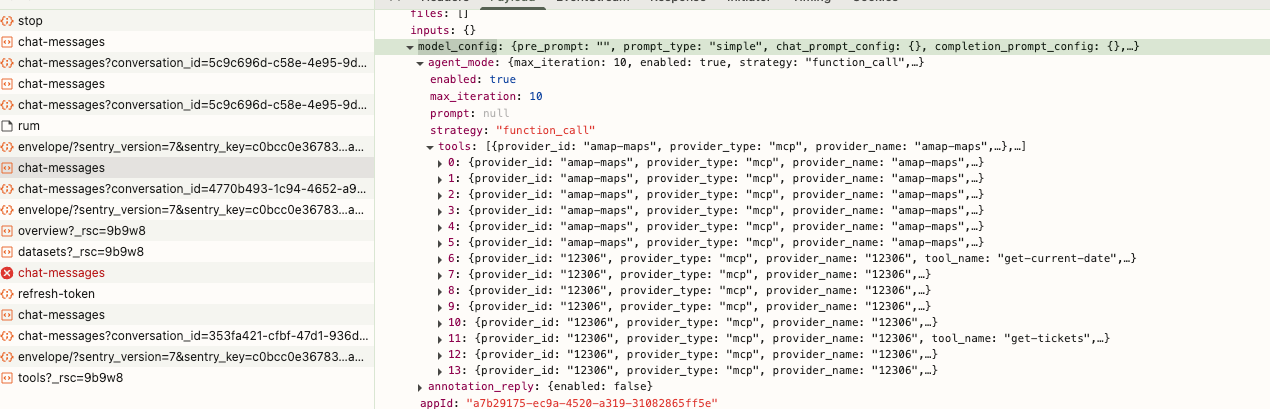

配置存储在 model_config > agent_mode > tools :

接口源码追溯:

上述参数数据转换成app_config

```python
app_model_config_dict = {
    'agent_mode': {
        'max_iteration': 10,
        'enabled': True,
        'strategy': 'function_call',
        'tools': [
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_direction_bicycling', 'tool_label': 'maps_direction_bicycling', 'tool_parameters': {'origin': '', 'destination': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_direction_driving', 'tool_label': 'maps_direction_driving', 'tool_parameters': {'origin': '', 'destination': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_direction_transit_integrated', 'tool_label': 'maps_direction_transit_integrated', 'tool_parameters': {'origin': '', 'destination': '', 'city': '', 'cityd': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_direction_walking', 'tool_label': 'maps_direction_walking', 'tool_parameters': {'origin': '', 'destination': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_distance', 'tool_label': 'maps_distance', 'tool_parameters': {'origins': '', 'destination': '', 'type': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_geo', 'tool_label': 'maps_geo', 'tool_parameters': {'address': '', 'city': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_regeocode', 'tool_label': 'maps_regeocode', 'tool_parameters': {'location': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_ip_location', 'tool_label': 'maps_ip_location', 'tool_parameters': {'ip': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_schema_personal_map', 'tool_label': 'maps_schema_personal_map', 'tool_parameters': {'orgName': '', 'lineList': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_around_search', 'tool_label': 'maps_around_search', 'tool_parameters': {'keywords': '', 'location': '', 'radius': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_search_detail', 'tool_label': 'maps_search_detail', 'tool_parameters': {'id': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_text_search', 'tool_label': 'maps_text_search', 'tool_parameters': {'keywords': '', 'city': '', 'citylimit': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_schema_navi', 'tool_label': 'maps_schema_navi', 'tool_parameters': {'lon': '', 'lat': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_schema_take_taxi', 'tool_label': 'maps_schema_take_taxi', 'tool_parameters': {'slon': '', 'slat': '', 'sname': '', 'dlon': '', 'dlat': '', 'dname': ''}, 'notAuthor': False, 'enabled': True},
            {'provider_id': 'amap-maps', 'provider_type': 'mcp', 'provider_name': 'amap-maps', 'tool_name': 'maps_weather', 'tool_label': 'maps_weather', 'tool_parameters': {'city': ''}, 'notAuthor': False, 'enabled': True}
        ],
        'prompt': None
    },
    'opening_statement': '',
    'completion_prompt_config': {},
    'retriever_resource': {'enabled': True},
    'speech_to_text': {'enabled': False},
    'file_upload': {'image': {'detail': 'high', 'enabled': False, 'number_limits': 3, 'transfer_methods': ['remote_url', 'local_file']}, 'enabled': False, 'allowed_file_types': [], 'allowed_file_extensions': ['.JPG', '.JPEG', '.PNG', '.GIF', '.WEBP', '.SVG', '.MP4', '.MOV', '.MPEG', '.WEBM'], 'allowed_file_upload_methods': ['remote_url', 'local_file'], 'number_limits': 3, 'fileUploadConfig': {'file_size_limit': 15, 'batch_count_limit': 5, 'image_file_size_limit': 10, 'video_file_size_limit': 100, 'audio_file_size_limit': 50, 'workflow_file_upload_limit': 10}},
    'chat_prompt_config': {},
    'suggested_questions': [],
    'dataset_query_variable': '',
    'model': {
        'provider': 'langgenius/tongyi/tongyi',
        'name': 'qwen2.5-72b-instruct',
        'mode': 'chat',
        'completion_params': {}
    },
    'sensitive_word_avoidance': {'enabled': False, 'type': '', 'configs': []},
    'suggested_questions_after_answer': {'enabled': False},
    'dataset_configs': {
        'retrieval_model': 'multiple',
        'top_k': 4,
        'reranking_enable': False,
        'datasets': {'datasets': []}
    },
    'external_data_tools': [],
    'prompt_type': 'simple',
    'text_to_speech': {'enabled': False, 'voice': '', 'language': ''},
    'user_input_form': [],
    'pre_prompt': ''
}
```

```
model=ModelConfigEntity(provider='langgenius/tongyi/tongyi', model='qwen2.5-72b-instruct', mode='chat', parameters={}, stop=[])
prompt_template=PromptTemplateEntity(prompt_type=<PromptType.SIMPLE: 'simple'>, simple_prompt_template='', advanced_chat_prompt_template=None, advanced_completion_prompt_template=None) dataset=None external_data_variables=[]
agent=AgentEntity(provider='langgenius/tongyi/tongyi', model='qwen2.5-72b-instruct', strategy=<Strategy.FUNCTION_CALLING: 'function-calling'>,
    prompt=AgentPromptEntity(first_prompt='Respond to the human as helpfully and accurately as possible.\n\n{{instruction}}\n\nYou have access to the following tools:\n\n{{tools}}\n\nUse a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).\nValid "action" values: "Final Answer" or {{tool_names}}\n\nProvide only ONE action per $JSON_BLOB, as shown:\n\n```\n{\n "action": $TOOL_NAME,\n "action_input": $ACTION_INPUT\n}\n```\n\nFollow this format:\n\nQuestion: input question to answer\nThought: consider previous and subsequent steps\nAction:\n```\n$JSON_BLOB\n```\nObservation: action result\n... (repeat Thought/Action/Observation N times)\nThought: I know what to respond\nAction:\n```\n{\n "action": "Final Answer",\n "action_input": "Final response to human"\n}\n```\n\nBegin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:.\n', next_iteration=''),
    tools=[
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_direction_bicycling', tool_parameters={'origin': '', 'destination': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_direction_driving', tool_parameters={'origin': '', 'destination': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_direction_transit_integrated', tool_parameters={'origin': '', 'destination': '', 'city': '', 'cityd': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_direction_walking', tool_parameters={'origin': '', 'destination': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_distance', tool_parameters={'origins': '', 'destination': '', 'type': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_geo', tool_parameters={'address': '', 'city': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_regeocode', tool_parameters={'location': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_ip_location', tool_parameters={'ip': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_schema_personal_map', tool_parameters={'orgName': '', 'lineList': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_around_search', tool_parameters={'keywords': '', 'location': '', 'radius': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_search_detail', tool_parameters={'id': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_text_search', tool_parameters={'keywords': '', 'city': '', 'citylimit': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_schema_navi', tool_parameters={'lon': '', 'lat': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_schema_take_taxi', tool_parameters={'slon': '', 'slat': '', 'sname': '', 'dlon': '', 'dlat': '', 'dname': ''}, plugin_unique_identifier=None),
        AgentToolEntity(provider_type=<ToolProviderType.MCP: 'mcp'>, provider_id='amap-maps', tool_name='maps_weather', tool_parameters={'city': ''}, plugin_unique_identifier=None)], max_iteration=10)
```
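The `agent_mode.tools` entries in `app_model_config_dict` can be reduced to "which MCP server, which tools". The sketch below keeps enabled entries whose `provider_type` is `mcp` and groups their `tool_name`s by `provider_id`. `group_mcp_tools` is a hypothetical helper, not Dify's code. It only illustrates the dictionary shape shown, and the `builtin` entry in the sample is made up:

```python
from collections import defaultdict


def group_mcp_tools(app_model_config: dict) -> dict:
    """Hypothetical helper: map each MCP provider_id to its enabled tool names."""
    agent_mode = app_model_config.get("agent_mode") or {}
    if not agent_mode.get("enabled"):
        return {}
    grouped = defaultdict(list)
    for tool in agent_mode.get("tools", []):
        if tool.get("provider_type") == "mcp" and tool.get("enabled", False):
            grouped[tool["provider_id"]].append(tool["tool_name"])
    return dict(grouped)


config = {
    "agent_mode": {
        "enabled": True,
        "tools": [
            {"provider_id": "amap-maps", "provider_type": "mcp", "tool_name": "maps_weather", "enabled": True},
            {"provider_id": "amap-maps", "provider_type": "mcp", "tool_name": "maps_geo", "enabled": False},
            {"provider_id": "time", "provider_type": "builtin", "tool_name": "current_time", "enabled": True},
        ],
    }
}
print(group_mcp_tools(config))  # {'amap-maps': ['maps_weather']}
```

Grouping by `provider_id` means each MCP server's session would be opened once per request, no matter how many of its tools are enabled.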

浙公网安备 33010602011771号
