K8S Notes (019): Kubernetes Cluster Monitoring with Prometheus

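Several options:

1 Weave Scope

2 Heapster

3 Prometheus Operator: arguably the most full-featured open-source monitoring solution available today. It can monitor nodes and ports, and it supports the cluster's management components such as the API Server, Scheduler, and Controller Manager.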

Prometheus, Alertmanager, Grafana

Versions used in this article:

Prometheus v2.2.1

Alertmanager v0.14.0

Grafana 5.0.0

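Prometheus is an excellent monitoring system. Together with Alertmanager and Grafana, it provides a complete solution covering data collection, storage, processing, visualization, and alerting.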

Installation and deployment:

1. Download the latest source code

https://github.com/coreos/prometheus-operator

git clone https://github.com/coreos/prometheus-operator.git

cd prometheus-operator

2. Create the namespace: monitoring

```
daweij@master:~/prometheus-operator$ cat monitoring-ns.yml
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring
daweij@master:~/prometheus-operator$ kubectl apply -f monitoring-ns.yml
namespace "monitoring" created
```
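If you prefer not to open an editor, the same manifest can be written from a script with a heredoc. A minimal sketch; only the file name monitoring-ns.yml is taken from the listing above:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write the Namespace manifest shown above. YAML indentation matters:
# "name" must be nested under "metadata".
cat > monitoring-ns.yml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring
EOF

# Print the file so it can be reviewed before applying it.
cat monitoring-ns.yml
```

Apply it with kubectl apply -f monitoring-ns.yml as above; kubectl create namespace monitoring is an equivalent one-liner.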

3. Install the Prometheus Operator Deployment

helm install --name prometheus-operator --set rbacEnable=true --namespace=monitoring helm/prometheus-operator

```
daweij@master:~/prometheus-operator$ helm install --name prometheus-operator --set rbacEnable=true --namespace=monitoring helm/prometheus-operator
NAME:   prometheus-operator
LAST DEPLOYED: Mon Apr 2 15:15:29 2018
NAMESPACE: monitoring
STATUS: DEPLOYED

RESOURCES:
==> v1/ConfigMap
NAME                 DATA  AGE
prometheus-operator  1     54s

==> v1/ServiceAccount
NAME                 SECRETS  AGE
prometheus-operator  1        54s

==> v1beta1/ClusterRole
NAME                 AGE
prometheus-operator  54s

==> v1beta1/ClusterRoleBinding
NAME                 AGE
prometheus-operator  54s

==> v1beta1/Deployment
NAME                 DESIRED  CURRENT  UP-TO-DATE  AVAILABLE  AGE
prometheus-operator  1        1        1           1          54s

==> v1/Pod(related)
NAME                                  READY  STATUS   RESTARTS  AGE
prometheus-operator-77fd5d856f-6f9v9  1/1    Running  0         54s


NOTES:
The Prometheus Operator has been installed. Check its status by running:
  kubectl --namespace monitoring get pods -l "app=prometheus-operator,release=prometheus-operator"

Visit https://github.com/coreos/prometheus-operator for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.
```

Check the installation:

helm list

```
daweij@master:~/prometheus-operator$ kubectl --namespace monitoring get deployment
NAME                 DESIRED  CURRENT  UP-TO-DATE  AVAILABLE  AGE
prometheus-operator  1        1        1           1          1m
daweij@master:~/prometheus-operator$ helm ls
NAME                 REVISION  UPDATED                  STATUS    CHART                      NAMESPACE
prometheus-operator  1         Mon Apr 2 15:15:29 2018  DEPLOYED  prometheus-operator-0.0.1  monitoring
```

All Prometheus Operator components are packaged as Helm charts, which makes installation and deployment very convenient.

Chart directory:

/home/daweij/prometheus-operator/helm/prometheus-operator

The two images used:

quay.io/coreos/prometheus-operator:v0.17.0

quay.io/coreos/hyperkube:v1.7.6_coreos.0

To delete everything and reinstall, run:

helm delete --purge prometheus-operator

kubectl delete job --namespace=monitoring prometheus-operator-create-sm-job
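The delete-and-reinstall sequence can be kept as a small script so it is easy to repeat. A sketch under assumptions: DRY_RUN, run and reinstall are hypothetical helpers introduced here, the commands use the Helm 2 syntax of this article, and with the default DRY_RUN=1 the script only prints the commands:

```shell
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = execute them
NS=monitoring

# Print or execute a command depending on DRY_RUN.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

reinstall() {
  # Remove the release and its history (Helm 2).
  run helm delete --purge prometheus-operator
  # The original steps also delete this Job explicitly.
  run kubectl delete job --namespace="$NS" prometheus-operator-create-sm-job
  # Reinstall with the same options as in step 3.
  run helm install --name prometheus-operator --set rbacEnable=true --namespace="$NS" helm/prometheus-operator
}

reinstall
```

Set DRY_RUN=0 to execute. In Helm 3, helm uninstall replaces helm delete --purge, and helm install no longer accepts --name.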

4. Install Prometheus, Alertmanager, and Grafana

helm install --name prometheus --set serviceMonitorsSelector.app=prometheus --set ruleSelector.app=prometheus --namespace=monitoring helm/prometheus

```
daweij@master:~/prometheus-operator$ helm install --name prometheus --set serviceMonitorsSelector.app=prometheus --set ruleSelector.app=prometheus --namespace=monitoring helm/prometheus
NAME:   prometheus
LAST DEPLOYED: Mon Apr 2 16:18:03 2018
NAMESPACE: monitoring
STATUS: DEPLOYED

RESOURCES:
==> v1/Prometheus
NAME        AGE
prometheus  42s

==> v1/ServiceMonitor
prometheus  42s

==> v1/ConfigMap
NAME        DATA  AGE
prometheus  1     42s

==> v1/ServiceAccount
NAME        SECRETS  AGE
prometheus  1        42s

==> v1beta1/ClusterRole
NAME        AGE
prometheus  42s

==> v1beta1/ClusterRoleBinding
NAME        AGE
prometheus  42s

==> v1/Service
NAME        TYPE       CLUSTER-IP    EXTERNAL-IP  PORT(S)   AGE
prometheus  ClusterIP  10.233.39.37  <none>       9090/TCP  42s


NOTES:
A new Prometheus instance has been created.
```

helm install --name alertmanager --namespace=monitoring helm/alertmanager

```
daweij@master:~/prometheus-operator$ helm install --name alertmanager --namespace=monitoring helm/alertmanager
NAME:   alertmanager
LAST DEPLOYED: Mon Apr 2 16:21:45 2018
NAMESPACE: monitoring
STATUS: DEPLOYED

RESOURCES:
==> v1/Secret
NAME                       TYPE    DATA  AGE
alertmanager-alertmanager  Opaque  1     42s

==> v1/ConfigMap
NAME          DATA  AGE
alertmanager  1     42s

==> v1/Service
NAME          TYPE       CLUSTER-IP    EXTERNAL-IP  PORT(S)   AGE
alertmanager  ClusterIP  10.233.5.170  <none>       9093/TCP  42s

==> v1/Alertmanager
NAME          AGE
alertmanager  42s

==> v1/ServiceMonitor
alertmanager  42s


NOTES:
A new Alertmanager instance has been created.
```

helm install --name grafana --namespace=monitoring helm/grafana

```
daweij@master:~/prometheus-operator$ helm install --name grafana --namespace=monitoring helm/grafana
NAME:   grafana
LAST DEPLOYED: Mon Apr 2 16:22:58 2018
NAMESPACE: monitoring
STATUS: DEPLOYED

RESOURCES:
==> v1/Pod(related)
NAME                              READY  STATUS             RESTARTS  AGE
grafana-grafana-59cdb46b78-8smjn  0/2    ContainerCreating  0         43s

==> v1/Secret
NAME             TYPE    DATA  AGE
grafana-grafana  Opaque  2     43s

==> v1/ConfigMap
NAME             DATA  AGE
grafana-grafana  10    43s

==> v1/Service
NAME             TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)  AGE
grafana-grafana  ClusterIP  10.233.13.205  <none>       80/TCP   43s

==> v1beta1/Deployment
NAME             DESIRED  CURRENT  UP-TO-DATE  AVAILABLE  AGE
grafana-grafana  1        1        1           0          43s

==> v1/ServiceMonitor
NAME     AGE
grafana  43s


NOTES:
1. Get your 'admin' user password by running:

   kubectl get secret --namespace monitoring grafana-grafana -o jsonpath="{.data.password}" | base64 --decode ; echo

2. The Grafana server can be accessed via port 80 on the following DNS name from within your cluster:

   grafana-grafana.monitoring.svc.cluster.local

   Get the Grafana URL to visit by running these commands in the same shell:

     export POD_NAME=$(kubectl get pods --namespace monitoring -l "app=grafana" -o jsonpath="{.items[0].metadata.name}")
     kubectl --namespace monitoring port-forward $POD_NAME 3000

3. Login with the password from step 1 and the username: admin

#################################################################################
######   WARNING: Persistence is disabled!!! You will lose your data when   #####
######            the Grafana pod is terminated.                            #####
#################################################################################
```
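The three installs in this step differ only in the release name and in the extra --set flags for prometheus, so they can be driven from one loop. A sketch: EXTRA, run and install_all are hypothetical names, the chart paths and flags are copied from the commands above, associative arrays assume bash 4 or later, and the default DRY_RUN=1 only prints the commands:

```shell
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = execute them

run() { if [ "$DRY_RUN" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Extra flags per release, copied from the commands above.
declare -A EXTRA=(
  [prometheus]="--set serviceMonitorsSelector.app=prometheus --set ruleSelector.app=prometheus"
  [alertmanager]=""
  [grafana]=""
)

install_all() {
  local rel
  for rel in prometheus alertmanager grafana; do
    # ${EXTRA[$rel]} is left unquoted on purpose so it splits into separate flags.
    # shellcheck disable=SC2086
    run helm install --name "$rel" ${EXTRA[$rel]} --namespace=monitoring "helm/$rel"
  done
}

install_all
```

Helm 3 dropped --name; there the release name is positional, e.g. helm install grafana helm/grafana --namespace monitoring.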

Check the Deployments, Pods, and Services in the monitoring namespace (the Prometheus custom resources themselves can be listed with kubectl get prometheus --namespace=monitoring):

```
daweij@master:~/prometheus-operator$ kubectl get deployment --namespace=monitoring
NAME                 DESIRED  CURRENT  UP-TO-DATE  AVAILABLE  AGE
grafana-grafana      1        1        1           1          2m
prometheus-operator  1        1        1           1          8m
daweij@master:~/prometheus-operator$ kubectl get pod --namespace=monitoring -o wide
NAME                                  READY  STATUS   RESTARTS  AGE  IP              NODE
alertmanager-alertmanager-0           2/2    Running  0         4m   10.233.75.36    node2
grafana-grafana-59cdb46b78-8smjn      2/2    Running  0         2m   10.233.102.149  node1
prometheus-operator-77fd5d856f-hv4z2  1/1    Running  0         8m   10.233.75.35    node2
prometheus-prometheus-0               2/2    Running  0         7m   10.233.71.37    node3
daweij@master:~/prometheus-operator$ kubectl get --namespace=monitoring service
NAME                   TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)            AGE
alertmanager           ClusterIP  10.233.5.170   <none>       9093/TCP           4m
alertmanager-operated  ClusterIP  None           <none>       9093/TCP,6783/TCP  4m
grafana-grafana        ClusterIP  10.233.13.205  <none>       80/TCP             3m
prometheus             ClusterIP  10.233.39.37   <none>       9090/TCP           8m
prometheus-operated    ClusterIP  None           <none>       9090/TCP           1h
```
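A pod whose READY column shows fewer ready containers than declared (for example 1/2) is worth a closer look before moving on. A small filter over kubectl get pod output, as a sketch: not_ready is a hypothetical helper, and the sample rows are copied from the pod listing in step 5:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print name, READY and STATUS for pods whose READY column (column 2,
# e.g. "1/2") shows fewer ready containers than declared. The header
# line of `kubectl get pod` is skipped.
not_ready() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1, $2, $3 }'
}

# Sample input taken from the pod listing in step 5.
sample='NAME READY STATUS RESTARTS AGE
alertmanager-alertmanager-0 2/2 Running 0 17h
kube-prometheus-exporter-kube-state-8f4cb89d5-47v6v 1/2 Running 0 1m
prometheus-prometheus-0 2/2 Running 0 18h'

printf '%s\n' "$sample" | not_ready
```

Against a live cluster: kubectl get pod --namespace=monitoring | not_ready.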

For easier access, change the Service type to NodePort, with these port mappings: prometheus 9090:30002, alertmanager 9093:30003, grafana 80:30004.

kubectl edit --namespace=monitoring service prometheus

kubectl edit --namespace=monitoring service alertmanager

kubectl edit --namespace=monitoring service grafana-grafana

```
daweij@master:~/prometheus-operator$ kubectl edit --namespace=monitoring service prometheus
service "prometheus" edited
daweij@master:~/prometheus-operator$ kubectl edit --namespace=monitoring service alertmanager
service "alertmanager" edited
daweij@master:~/prometheus-operator$ kubectl edit --namespace=monitoring service grafana-grafana
service "grafana-grafana" edited
daweij@master:~/prometheus-operator$ kubectl get --namespace=monitoring service
NAME                   TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)            AGE
alertmanager           NodePort   10.233.5.170   <none>       9093:30003/TCP     9m
alertmanager-operated  ClusterIP  None           <none>       9093/TCP,6783/TCP  9m
grafana-grafana        NodePort   10.233.13.205  <none>       80:30004/TCP       8m
prometheus             NodePort   10.233.39.37   <none>       9090:30002/TCP     13m
prometheus-operated    ClusterIP  None           <none>       9090/TCP           1h
```
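kubectl edit is interactive; the same change can be scripted with kubectl patch. A sketch, assuming a strategic merge patch (the kubectl patch default), under which Service ports are merged by their port field, so only the listed port gets a nodePort. nodeport_patch and DRY_RUN are hypothetical names; the services and ports are the ones chosen above:

```shell
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = execute them
NS=monitoring

# Strategic-merge patch: switch the Service to NodePort and pin the
# nodePort of the given service port.
nodeport_patch() {
  local port="$1" node_port="$2"
  printf '{"spec":{"type":"NodePort","ports":[{"port":%s,"nodePort":%s}]}}' "$port" "$node_port"
}

# service:port:nodePort, as chosen above
for entry in prometheus:9090:30002 alertmanager:9093:30003 grafana-grafana:80:30004; do
  IFS=: read -r svc port node_port <<<"$entry"
  patch="$(nodeport_patch "$port" "$node_port")"
  if [ "$DRY_RUN" = "1" ]; then
    printf "kubectl patch service %s --namespace=%s -p '%s'\n" "$svc" "$NS" "$patch"
  else
    kubectl patch service "$svc" --namespace="$NS" -p "$patch"
  fi
done
```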

Images involved (found with: grep -rE 'repository:|tag:'), and the commands used to mirror them through the private registry 172.28.2.2:4000:

```
# Images referenced by the charts:
# quay.io/coreos/prometheus-operator:v0.17.0
# quay.io/coreos/hyperkube:v1.7.6_coreos.0
# quay.io/coreos/prometheus-config-reloader:v0.0.3

# Pull upstream images, retag them for the private registry, and push
docker pull quay.io/prometheus/alertmanager:v0.14.0
docker pull grafana/grafana:5.0.0
docker pull quay.io/coreos/grafana-watcher:v0.0.8
docker pull quay.io/coreos/prometheus-config-reloader:v0.0.3
docker tag quay.io/prometheus/alertmanager:v0.14.0 172.28.2.2:4000/alertmanager:v0.14.0
docker tag grafana/grafana:5.0.0 172.28.2.2:4000/grafana:5.0.0
docker tag quay.io/coreos/grafana-watcher:v0.0.8 172.28.2.2:4000/grafana-watcher:v0.0.8
docker tag quay.io/coreos/prometheus-config-reloader:v0.0.3 172.28.2.2:4000/prometheus-config-reloader:v0.0.3
docker push 172.28.2.2:4000/prometheus-config-reloader:v0.0.3
docker push 172.28.2.2:4000/alertmanager:v0.14.0
docker push 172.28.2.2:4000/grafana:5.0.0
docker push 172.28.2.2:4000/grafana-watcher:v0.0.8

# Pull from the private registry and retag to the original names
docker pull 172.28.2.2:4000/prometheus-config-reloader:v0.0.3
docker pull 172.28.2.2:4000/alertmanager:v0.14.0
docker pull 172.28.2.2:4000/grafana:5.0.0
docker pull 172.28.2.2:4000/grafana-watcher:v0.0.8
docker tag 172.28.2.2:4000/prometheus-config-reloader:v0.0.3 quay.io/coreos/prometheus-config-reloader:v0.0.3
docker tag 172.28.2.2:4000/alertmanager:v0.14.0 quay.io/prometheus/alertmanager:v0.14.0
docker tag 172.28.2.2:4000/grafana:5.0.0 grafana/grafana:5.0.0
docker tag 172.28.2.2:4000/grafana-watcher:v0.0.8 quay.io/coreos/grafana-watcher:v0.0.8

# configmap-reload and node-exporter: same procedure
docker pull quay.io/coreos/configmap-reload:v0.0.1
docker pull quay.io/prometheus/node-exporter:v0.15.2
docker tag quay.io/coreos/configmap-reload:v0.0.1 172.28.2.2:4000/configmap-reload:v0.0.1
docker tag quay.io/prometheus/node-exporter:v0.15.2 172.28.2.2:4000/node-exporter:v0.15.2
docker push 172.28.2.2:4000/configmap-reload:v0.0.1
docker push 172.28.2.2:4000/node-exporter:v0.15.2
docker pull 172.28.2.2:4000/configmap-reload:v0.0.1
docker pull 172.28.2.2:4000/node-exporter:v0.15.2
docker tag 172.28.2.2:4000/configmap-reload:v0.0.1 quay.io/coreos/configmap-reload:v0.0.1
docker tag 172.28.2.2:4000/node-exporter:v0.15.2 quay.io/prometheus/node-exporter:v0.15.2

# gcr.io/google_containers/kube-state-metrics:v1.2.0 and
# gcr.io/google_containers/addon-resizer:1.7, pulled via the jiang7865134 repositories
docker pull jiang7865134/kube-state-metrics:v1.2.0
docker pull jiang7865134/addon-resizer:1.7
docker tag jiang7865134/kube-state-metrics:v1.2.0 172.28.2.2:4000/kube-state-metrics:v1.2.0
docker tag jiang7865134/addon-resizer:1.7 172.28.2.2:4000/addon-resizer:1.7
docker push 172.28.2.2:4000/kube-state-metrics:v1.2.0
docker push 172.28.2.2:4000/addon-resizer:1.7
docker pull 172.28.2.2:4000/kube-state-metrics:v1.2.0
docker pull 172.28.2.2:4000/addon-resizer:1.7
docker tag 172.28.2.2:4000/kube-state-metrics:v1.2.0 gcr.io/google_containers/kube-state-metrics:v1.2.0
docker tag 172.28.2.2:4000/addon-resizer:1.7 gcr.io/google_containers/addon-resizer:1.7
```
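The pull/tag/push pattern above repeats for every image, so it can be expressed as two functions. A sketch: mirror_name, push_mirror and restore_upstream are hypothetical helpers, the naming rule (keep only name:tag and prefix 172.28.2.2:4000) is inferred from the commands above, and the default DRY_RUN=1 only prints the docker commands:

```shell
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"    # 1 = print commands only, 0 = execute them
REGISTRY=172.28.2.2:4000   # private registry used in this article

run() { if [ "$DRY_RUN" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Private-registry name: keep only the last path component (name:tag).
mirror_name() {
  printf '%s/%s\n' "$REGISTRY" "${1##*/}"
}

# Pull an upstream image, retag it for the private registry, push it.
push_mirror() {
  local src="$1" dst
  dst="$(mirror_name "$src")"
  run docker pull "$src"
  run docker tag "$src" "$dst"
  run docker push "$dst"
}

# Pull from the private registry and restore the name the charts expect.
restore_upstream() {
  local upstream="$1" mirrored
  mirrored="$(mirror_name "$upstream")"
  run docker pull "$mirrored"
  run docker tag "$mirrored" "$upstream"
}

for img in quay.io/prometheus/alertmanager:v0.14.0 grafana/grafana:5.0.0 \
           quay.io/coreos/grafana-watcher:v0.0.8 quay.io/coreos/prometheus-config-reloader:v0.0.3; do
  push_mirror "$img"
done
```

It is an assumption about the setup that push_mirror runs on a host that can reach the upstream registries and restore_upstream on each node that needs the images. Because only name:tag is kept, push_mirror jiang7865134/kube-state-metrics:v1.2.0 followed by restore_upstream gcr.io/google_containers/kube-state-metrics:v1.2.0 reproduces the gcr.io workaround above.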

Images by chart:

prometheus-operator:

quay.io/prometheus/prometheus:v2.2.1

quay.io/coreos/prometheus-config-reloader:v0.0.3

quay.io/coreos/configmap-reload:v0.0.1

gcr.io/google_containers/kube-state-metrics:v1.2.0

gcr.io/google_containers/addon-resizer:1.7

quay.io/prometheus/node-exporter:v0.15.2

alertmanager:

quay.io/prometheus/alertmanager:v0.14.0

grafana:

grafana/grafana:5.0.0

quay.io/coreos/grafana-watcher:v0.0.8

docker pull jiang7865134/kube-state-metrics:v1.2.0

docker pull jiang7865134/addon-resizer:1.7

docker tag jiang7865134/kube-state-metrics:v1.2.0 gcr.io/google_containers/kube-state-metrics:v1.2.0

docker tag jiang7865134/addon-resizer:1.7 gcr.io/google_containers/addon-resizer:1.7

5. Install kube-prometheus

The first attempt failed:

```
daweij@master:~/prometheus-operator$ helm install --name kube-prometheus --namespace=monitoring helm/kube-prometheus
Error: found in requirements.yaml, but missing in charts/ directory: alertmanager, prometheus, exporter-kube-controller-manager, exporter-kube-dns, exporter-kube-etcd, exporter-kube-scheduler, exporter-kube-state, exporter-kubelets, exporter-kubernetes, exporter-node, grafana
```
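The error lists the dependencies from requirements.yaml that have no packaged archive under charts/. The same check can be run before installing. A sketch: missing_subcharts is a hypothetical helper that assumes the simple "- name: foo" layout of requirements.yaml and archive names of the form name-version.tgz, as produced by helm package later in this step:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print each dependency named in <chart>/requirements.yaml that has no
# <name>-<version>.tgz archive in <chart>/charts/.
missing_subcharts() {
  local chart_dir="$1" name
  sed -n 's/^[[:space:]]*-[[:space:]]*name:[[:space:]]*//p' "$chart_dir/requirements.yaml" |
  while read -r name; do
    # compgen -G succeeds only if the glob matches at least one file;
    # "[0-9]*" keeps "grafana" from matching e.g. "grafana-watcher-1.tgz".
    if ! compgen -G "$chart_dir/charts/$name-[0-9]*.tgz" > /dev/null; then
      echo "$name"
    fi
  done
}

if [ -f helm/kube-prometheus/requirements.yaml ]; then
  missing_subcharts helm/kube-prometheus
fi
```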

Fix: follow the steps in helm/README.md:

~~~
daweij@master:~/prometheus-operator$ pwd
/home/daweij/prometheus-operator
daweij@master:~/prometheus-operator$ cat helm/README.md
# TL;DR
```
helm repo add coreos https://s3-eu-west-1.amazonaws.com/coreos-charts/stable/
helm install coreos/prometheus-operator --name prometheus-operator --namespace monitoring
helm install coreos/kube-prometheus --name kube-prometheus --namespace monitoring
````

# How to contribue?

1. Fork the project
2. Make the changes in the helm charts
3. Bump the version in Chart.yaml for each modified chart
4. Update [kube-prometheus/requirements.yaml](kube-prometheus/requirements.yaml) file with the dependencies
5. Bump the [kube-prometheus/Chart.yaml](kube-prometheus/Chart.yaml)
6. [Test locally](#how-to-test)
7. Push the changes

# How to test?

```
helm install helm/prometheus-operator --name prometheus-operator --namespace monitoring
mkdir -p helm/kube-prometheus/charts
helm package -d helm/kube-prometheus/charts helm/alertmanager helm/grafana helm/prometheus helm/exporter-kube-dns helm/exporter-kube-scheduler helm/exporter-kubelets helm/exporter-node helm/exporter-kube-controller-manager helm/exporter-kube-etcd helm/exporter-kube-state helm/exporter-kubernetes
helm install helm/kube-prometheus --name kube-prometheus --namespace monitoring
~~~

What was actually run:

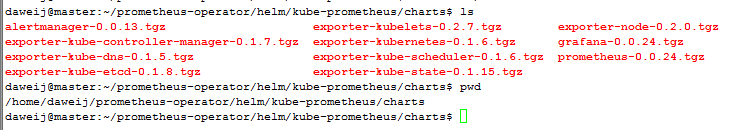

```
daweij@master:~/prometheus-operator$ mkdir -p helm/kube-prometheus/charts
daweij@master:~/prometheus-operator$ helm package -d helm/kube-prometheus/charts helm/alertmanager helm/grafana helm/prometheus helm/exporter-kube-dns helm/exporter-kube-scheduler helm/exporter-kubelets helm/exporter-node helm/exporter-kube-controller-manager helm/exporter-kube-etcd helm/exporter-kube-state helm/exporter-kubernetes
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/alertmanager-0.0.13.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/grafana-0.0.24.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/prometheus-0.0.24.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/exporter-kube-dns-0.1.5.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/exporter-kube-scheduler-0.1.6.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/exporter-kubelets-0.2.7.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/exporter-node-0.2.0.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/exporter-kube-controller-manager-0.1.7.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/exporter-kube-etcd-0.1.8.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/exporter-kube-state-0.1.15.tgz
Successfully packaged chart and saved it to: helm/kube-prometheus/charts/exporter-kubernetes-0.1.6.tgz
daweij@master:~/prometheus-operator$ helm ls -a
NAME                 REVISION  UPDATED                  STATUS    CHART                       NAMESPACE
alertmanager         1         Mon Apr 2 16:21:45 2018  DEPLOYED  alertmanager-0.0.13         monitoring
grafana              1         Mon Apr 2 16:22:58 2018  DEPLOYED  grafana-0.0.24              monitoring
prometheus           1         Mon Apr 2 16:18:03 2018  DEPLOYED  prometheus-0.0.24           monitoring
prometheus-operator  1         Mon Apr 2 16:17:24 2018  DEPLOYED  prometheus-operator-0.0.15  monitoring
daweij@master:~/prometheus-operator$ helm install --name kube-prometheus --namespace=monitoring helm/kube-prometheus
NAME:   kube-prometheus
LAST DEPLOYED: Tue Apr 3 10:17:17 2018
NAMESPACE: monitoring
STATUS: DEPLOYED

RESOURCES:
==> v1/Service
NAME                                              TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)              AGE
kube-prometheus-alertmanager                      ClusterIP  10.233.30.52   <none>       9093/TCP             44s
kube-prometheus-exporter-kube-controller-manager  ClusterIP  None           <none>       10252/TCP            44s
kube-prometheus-exporter-kube-dns                 ClusterIP  None           <none>       10054/TCP,10055/TCP  44s
kube-prometheus-exporter-kube-etcd                ClusterIP  None           <none>       4001/TCP             44s
kube-prometheus-exporter-kube-scheduler           ClusterIP  None           <none>       10251/TCP            44s
kube-prometheus-exporter-kube-state               ClusterIP  10.233.52.102  <none>       80/TCP               44s
kube-prometheus-exporter-node                     ClusterIP  10.233.38.253  <none>       9100/TCP             44s
kube-prometheus-grafana                           ClusterIP  10.233.44.224  <none>       80/TCP               44s
kube-prometheus-prometheus                        ClusterIP  10.233.22.86   <none>       9090/TCP             44s

==> v1beta1/DaemonSet
NAME                           DESIRED  CURRENT  READY  UP-TO-DATE  AVAILABLE  NODE SELECTOR  AGE
kube-prometheus-exporter-node  5        5        0      5           0          <none>         43s

==> v1beta1/Deployment
NAME                                 DESIRED  CURRENT  UP-TO-DATE  AVAILABLE  AGE
kube-prometheus-exporter-kube-state  1        1        1           0          43s
kube-prometheus-grafana              1        1        1           0          43s

==> v1/Alertmanager
NAME             AGE
kube-prometheus  44s

==> v1/Prometheus
kube-prometheus  44s

==> v1/ConfigMap
NAME                                              DATA  AGE
kube-prometheus-alertmanager                      1     46s
kube-prometheus-exporter-kube-controller-manager  1     46s
kube-prometheus-exporter-kube-etcd                1     46s
kube-prometheus-exporter-kube-scheduler           1     46s
kube-prometheus-exporter-kube-state               1     46s
kube-prometheus-exporter-kubelets                 1     46s
kube-prometheus-exporter-kubernetes               1     46s
kube-prometheus-exporter-node                     1     46s
kube-prometheus-grafana                           10    46s
kube-prometheus-prometheus                        1     46s
kube-prometheus                                   1     46s

==> v1beta1/ClusterRole
NAME                                 AGE
kube-prometheus-exporter-kube-state  46s
kube-prometheus-prometheus           46s

==> v1/ServiceMonitor
kube-prometheus-alertmanager                      44s
kube-prometheus-exporter-kube-controller-manager  44s
kube-prometheus-exporter-kube-dns                 44s
kube-prometheus-exporter-kube-etcd                44s
kube-prometheus-exporter-kube-scheduler           44s
kube-prometheus-exporter-kube-state               44s
kube-prometheus-exporter-kubelets                 44s
kube-prometheus-exporter-kubernetes               44s
kube-prometheus-exporter-node                     44s
kube-prometheus-grafana                           44s
kube-prometheus-prometheus                        44s

==> v1/ServiceAccount
NAME                                 SECRETS  AGE
kube-prometheus-exporter-kube-state  1        46s
kube-prometheus-prometheus           1        46s

==> v1beta1/ClusterRoleBinding
NAME                                 AGE
kube-prometheus-exporter-kube-state  46s
kube-prometheus-prometheus           46s

==> v1beta1/RoleBinding
NAME                                 AGE
kube-prometheus-exporter-kube-state  45s

==> v1/Pod(related)
NAME                                                  READY  STATUS             RESTARTS  AGE
kube-prometheus-exporter-node-66n6z                   0/1    ContainerCreating  0         44s
kube-prometheus-exporter-node-p8cw7                   0/1    ContainerCreating  0         44s
kube-prometheus-exporter-node-sj5d8                   0/1    ContainerCreating  0         44s
kube-prometheus-exporter-node-xflfm                   0/1    ContainerCreating  0         44s
kube-prometheus-exporter-node-zt4p9                   0/1    ContainerCreating  0         44s
kube-prometheus-exporter-kube-state-6bcffdd865-5fcrj  0/2    ContainerCreating  0         44s
kube-prometheus-grafana-6cd54fd88f-9rb6j              0/2    ContainerCreating  0         44s

==> v1/Secret
NAME                          TYPE    DATA  AGE
alertmanager-kube-prometheus  Opaque  1     46s
kube-prometheus-grafana       Opaque  2     46s

==> v1beta1/Role
NAME                                 AGE
kube-prometheus-exporter-kube-state  45s
```

The screenshot above shows the result of running this command:

helm package -d helm/kube-prometheus/charts helm/alertmanager helm/grafana helm/prometheus helm/exporter-kube-dns helm/exporter-kube-scheduler helm/exporter-kubelets helm/exporter-node helm/exporter-kube-controller-manager helm/exporter-kube-etcd helm/exporter-kube-state helm/exporter-kubernetes

```
daweij@master:~/prometheus-operator$ kubectl get --namespace=monitoring service
NAME                                 TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)            AGE
alertmanager                         NodePort   10.233.5.170   <none>       9093:30003/TCP     17h
alertmanager-operated                ClusterIP  None           <none>       9093/TCP,6783/TCP  17h
grafana-grafana                      NodePort   10.233.13.205  <none>       80:30004/TCP       17h
kube-prometheus-alertmanager         ClusterIP  10.233.30.52   <none>       9093/TCP           2m
kube-prometheus-exporter-kube-state  ClusterIP  10.233.52.102  <none>       80/TCP             2m
kube-prometheus-exporter-node        ClusterIP  10.233.38.253  <none>       9100/TCP           2m
kube-prometheus-grafana              ClusterIP  10.233.44.224  <none>       80/TCP             2m
kube-prometheus-prometheus           ClusterIP  10.233.22.86   <none>       9090/TCP           2m
prometheus                           NodePort   10.233.39.37   <none>       9090:30002/TCP     18h
prometheus-operated                  ClusterIP  None           <none>       9090/TCP           18h
daweij@master:~/prometheus-operator$ kubectl get --namespace=monitoring service
NAME                                 TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)            AGE
alertmanager                         NodePort   10.233.5.170   <none>       9093:30003/TCP     17h
alertmanager-operated                ClusterIP  None           <none>       9093/TCP,6783/TCP  17h
grafana-grafana                      NodePort   10.233.13.205  <none>       80:30004/TCP       17h
kube-prometheus-alertmanager         ClusterIP  10.233.30.52   <none>       9093/TCP           3m
kube-prometheus-exporter-kube-state  ClusterIP  10.233.52.102  <none>       80/TCP             3m
kube-prometheus-exporter-node        ClusterIP  10.233.38.253  <none>       9100/TCP           3m
kube-prometheus-grafana              ClusterIP  10.233.44.224  <none>       80/TCP             3m
kube-prometheus-prometheus           ClusterIP  10.233.22.86   <none>       9090/TCP           3m
prometheus                           NodePort   10.233.39.37   <none>       9090:30002/TCP     18h
prometheus-operated                  ClusterIP  None           <none>       9090/TCP           18h
daweij@master:~/prometheus-operator$ kubectl get --namespace=monitoring servicemonitor
NAME                                              AGE
alertmanager                                      17h
grafana                                           17h
kube-prometheus-alertmanager                      3m
kube-prometheus-exporter-kube-controller-manager  3m
kube-prometheus-exporter-kube-dns                 3m
kube-prometheus-exporter-kube-etcd                3m
kube-prometheus-exporter-kube-scheduler           3m
kube-prometheus-exporter-kube-state               3m
kube-prometheus-exporter-kubelets                 3m
kube-prometheus-exporter-kubernetes               3m
kube-prometheus-exporter-node                     3m
kube-prometheus-grafana                           3m
kube-prometheus-prometheus                        3m
prometheus                                        18h
prometheus-operator                               19h
daweij@master:~/prometheus-operator$ kubectl get --namespace=monitoring pod -o wide
NAME                                                  READY  STATUS   RESTARTS  AGE  IP              NODE
alertmanager-alertmanager-0                           2/2    Running  0         17h  10.233.75.36    node2
alertmanager-kube-prometheus-0                        2/2    Running  0         3m   10.233.102.150  node1
grafana-grafana-59cdb46b78-8smjn                      2/2    Running  0         17h  10.233.102.149  node1
kube-prometheus-exporter-kube-state-6bcffdd865-5fcrj  2/2    Running  0         3m   10.233.71.39    node3
kube-prometheus-exporter-kube-state-8f4cb89d5-47v6v   1/2    Running  0         1m   10.233.75.38    node2
kube-prometheus-exporter-node-66n6z                   1/1    Running  0         3m   172.28.2.212    node2
kube-prometheus-exporter-node-p8cw7                   1/1    Running  0         3m   172.28.2.213    node3
kube-prometheus-exporter-node-sj5d8                   1/1    Running  0         3m   172.28.2.211    node1
kube-prometheus-exporter-node-xflfm                   1/1    Running  0         3m   172.28.2.210    master
kube-prometheus-exporter-node-zt4p9                   1/1    Running  0         3m   172.28.2.214    node4
kube-prometheus-grafana-6cd54fd88f-9rb6j              2/2    Running  0         3m   10.233.71.38    node3
prometheus-kube-prometheus-0                          2/2    Running  0         3m   10.233.75.37    node2
prometheus-operator-77fd5d856f-hv4z2                  1/1    Running  0         18h  10.233.75.35    node2
prometheus-prometheus-0                               2/2    Running  0         18h  10.233.71.37    node3
```

quay.io/prometheus/alertmanager:v0.14.0

quay.io/prometheus/prometheus:v2.2.1

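6. Install the alerting rules

Prometheus Operator ships a default set of alerting rules (evaluated by Prometheus, with alerts sent to Alertmanager) in this YAML file: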

contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml

Adjust its labels and job names to match the releases installed above (GNU sed):

```
$ sed -i -e 's/prometheus: k8s/prometheus: prometheus/g' contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
$ sed -i -e 's/role: prometheus-rulefiles/app: prometheus/g' contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
$ sed -i -e 's/job=\"kube-controller-manager/job=\"kube-prometheus-exporter-kube-controller-manager/g' contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
$ sed -i -e 's/job=\"apiserver/job=\"kube-prometheus-exporter-kube-api/g' contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
$ sed -i -e 's/job=\"kube-scheduler/job=\"kube-prometheus-exporter-kube-scheduler/g' contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
$ sed -i -e 's/job=\"node-exporter/job=\"kube-prometheus-exporter-node/g' contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
$ grep -E 'prometheus: prometheus|kube-prometheus-exporter-' contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
prometheus: prometheus
expr: absent(up{job="kube-prometheus-exporter-kube-controller-manager"} == 1)
expr: absent(up{job="kube-prometheus-exporter-kube-scheduler"} == 1)
expr: absent(up{job="kube-prometheus-exporter-kube-api"} == 1)
expr: absent(up{job="kube-prometheus-exporter-node"} == 1)
```
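The six substitutions can also run in a single sed pass. A sketch assuming GNU sed; relabel_rules is a hypothetical helper. The options are written as a separate -i followed by -e because GNU sed reads -ie as -i with the backup suffix "e", which leaves a stray prometheus-k8s-rules.yamle file next to the edited one:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Apply all label and job-name renames to one rules file in place.
relabel_rules() {
  sed -i \
    -e 's/prometheus: k8s/prometheus: prometheus/g' \
    -e 's/role: prometheus-rulefiles/app: prometheus/g' \
    -e 's/job="kube-controller-manager/job="kube-prometheus-exporter-kube-controller-manager/g' \
    -e 's/job="apiserver/job="kube-prometheus-exporter-kube-api/g' \
    -e 's/job="kube-scheduler/job="kube-prometheus-exporter-kube-scheduler/g' \
    -e 's/job="node-exporter/job="kube-prometheus-exporter-node/g' \
    "$1"
}

RULES=contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
if [ -f "$RULES" ]; then
  relabel_rules "$RULES"
  # Show the changed lines for a quick review.
  grep -E 'prometheus: prometheus|kube-prometheus-exporter-' "$RULES"
fi
```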

Install them with:

```
daweij@master:~/prometheus-operator$ kubectl apply -n monitoring -f contrib/kube-prometheus/manifests/prometheus/prometheus-k8s-rules.yaml
configmap "prometheus-k8s-rules" created
```

```
daweij@master:~/prometheus-operator$ kubectl get --namespace=monitoring configmap --show-labels
NAME                                              DATA  AGE  LABELS
alertmanager                                      1     1d   app=alertmanager,chart=alertmanager-0.0.13,heritage=Tiller,prometheus=alertmanager,release=alertmanager,role=alert-rules
grafana-grafana                                   10    1d   app=grafana,chart=grafana-0.0.24,heritage=Tiller,release=grafana
kube-prometheus                                   1     1d   app=prometheus,chart=kube-prometheus-0.0.42,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-alertmanager                      1     1d   app=alertmanager,chart=alertmanager-0.0.13,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-exporter-kube-controller-manager  1     1d   app=prometheus,chart=exporter-kube-controller-manager-0.1.7,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-exporter-kube-etcd                1     1d   app=prometheus,chart=exporter-kube-etcd-0.1.8,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-exporter-kube-scheduler           1     1d   app=prometheus,chart=exporter-kube-scheduler-0.1.6,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-exporter-kube-state               1     1d   app=prometheus,chart=exporter-kube-state-0.1.15,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-exporter-kubelets                 1     1d   app=prometheus,chart=exporter-kubelets-0.2.7,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-exporter-kubernetes               1     1d   app=prometheus,chart=exporter-kubernetes-0.1.6,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-exporter-node                     1     1d   app=prometheus,chart=exporter-node-0.2.0,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
kube-prometheus-grafana                           10    1d   app=kube-prometheus-grafana,chart=grafana-0.0.24,heritage=Tiller,release=kube-prometheus
kube-prometheus-prometheus                        1     1d   app=prometheus,chart=prometheus-0.0.24,heritage=Tiller,prometheus=kube-prometheus,release=kube-prometheus,role=alert-rules
prometheus                                        1     1d   app=prometheus,chart=prometheus-0.0.24,heritage=Tiller,prometheus=prometheus,release=prometheus,role=alert-rules
prometheus-k8s-rules                              10    4m   prometheus=prometheus,role=alert-rules
prometheus-operator                               1     1d   <none>
```

kubectl edit configmap kube-prometheus -n monitoring

kubectl edit configmap prometheus -n monitoring

....
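In the operator version used here, Prometheus picks up rule ConfigMaps through the ruleSelector label selector of its Prometheus resource. The listing above shows prometheus-k8s-rules with labels prometheus=prometheus,role=alert-rules and no app=prometheus label, whereas step 4 requested ruleSelector.app=prometheus. A sketch for checking such a match offline; labels_match is a hypothetical helper that handles only equality selectors of the form k=v,k2=v2, and it is an assumption that the selector from step 4 took effect as written:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Return 0 if a comma-separated label list (as printed by --show-labels)
# contains every key=value pair of an equality-based selector.
labels_match() {
  local labels=",$1," selector="$2" pair
  local IFS=,
  for pair in $selector; do
    case "$labels" in
      *",$pair,"*) ;;
      *) return 1 ;;
    esac
  done
}

# Labels of prometheus-k8s-rules from the listing above.
rules_labels='prometheus=prometheus,role=alert-rules'
if labels_match "$rules_labels" 'app=prometheus'; then
  echo "selected by app=prometheus"
else
  echo "not selected by app=prometheus"
fi
```

If the labels do not match, one option is to add the missing label, e.g. kubectl label configmap prometheus-k8s-rules -n monitoring app=prometheus; editing the ConfigMaps directly, as done above, is another.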

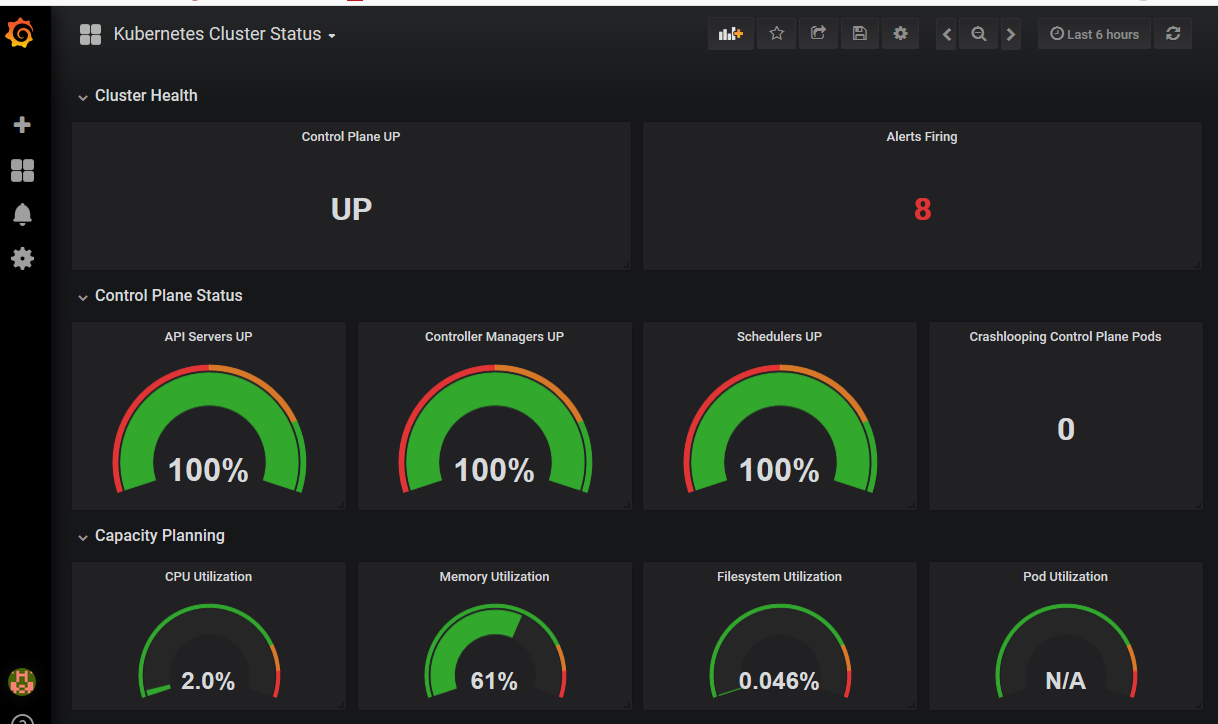

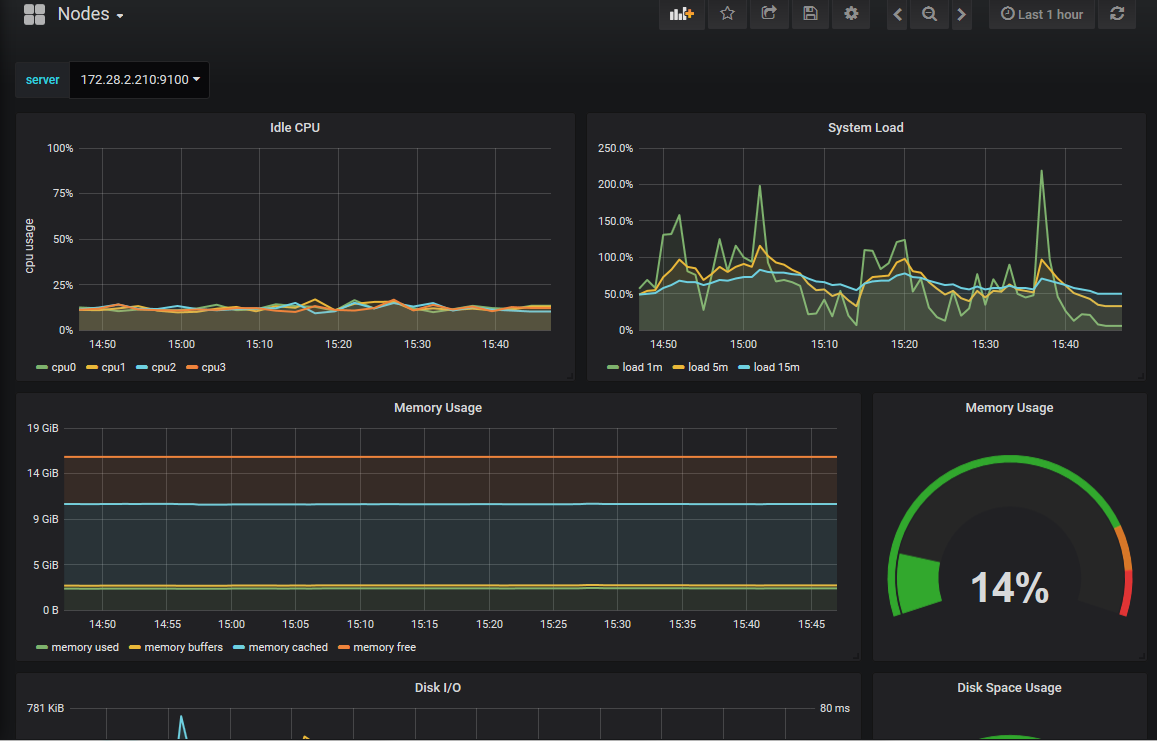

7. Install the Grafana dashboards

Prometheus Operator defines default dashboards for displaying the monitoring data.

YAML file: contrib/kube-prometheus/manifests/grafana/grafana-dashboards.yaml

Install them with:

```
daweij@master:~/prometheus-operator$ kubectl apply -n monitoring -f contrib/kube-prometheus/manifests/grafana/grafana-dashboards.yaml
configmap "grafana-dashboards" created
```

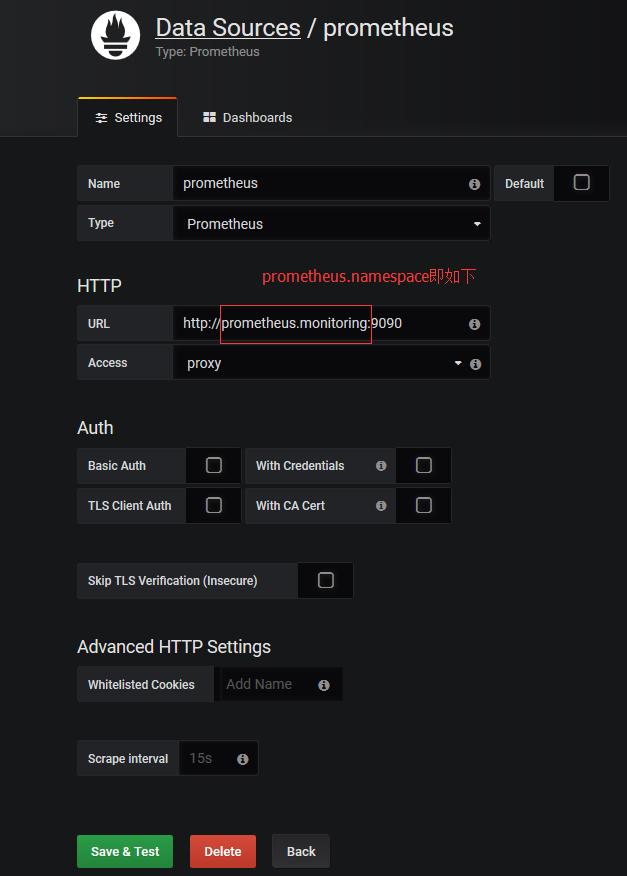

Log in to Grafana at http://172.28.2.210:30004/ with admin/admin.
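The Grafana chart's NOTES in step 4 show that the admin password is stored in the grafana-grafana Secret. Secret values are kept base64-encoded, so they have to be decoded before use. A sketch: decode_secret_value and grafana_password are hypothetical helpers, and the Secret name and key come from those NOTES:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Kubernetes stores Secret values base64-encoded; decode one value.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

# Fetch and decode the Grafana admin password (needs cluster access).
grafana_password() {
  decode_secret_value "$(kubectl get secret --namespace monitoring grafana-grafana \
    -o jsonpath='{.data.password}')"
}
```

Against the cluster, grafana_password; echo prints the password. The kube-prometheus release keeps its own Secret, kube-prometheus-grafana.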

If the dashboards report "unable to load datasource metadata", fix the data source settings under Configuration > Data Sources (see the figure below):
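The figure with the original settings is not reproduced here. One possible cause, assumed rather than confirmed by the article, is a data source URL that the Grafana server cannot reach. Inside the cluster a Service answers at service.namespace.svc.cluster.local, the same pattern as the Grafana DNS name in the chart NOTES. A sketch that builds such a URL and a request body for Grafana's POST /api/datasources HTTP API; the data source name, the choice of the prometheus Service on port 9090, and the admin/admin credentials are assumptions, and svc_url and datasource_json are hypothetical helpers:

```shell
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # 1 = print the JSON body only, 0 = send it

# In-cluster URL of a Service: http://<service>.<namespace>.svc.cluster.local:<port>
svc_url() {
  printf 'http://%s.%s.svc.cluster.local:%s\n' "$1" "$2" "$3"
}

# Body for POST /api/datasources. "proxy" access makes the Grafana server
# (inside the cluster) query Prometheus, rather than the browser.
datasource_json() {
  printf '{"name":"%s","type":"prometheus","url":"%s","access":"proxy","isDefault":true}\n' "$1" "$2"
}

GRAFANA=http://172.28.2.210:30004                # NodePort address from this article
DS_URL="$(svc_url prometheus monitoring 9090)"   # assumption: the "prometheus" Service
body="$(datasource_json prometheus "$DS_URL")"

if [ "$DRY_RUN" = "1" ]; then
  printf '%s\n' "$body"
else
  curl -sS -u admin:admin -H 'Content-Type: application/json' \
    -X POST "$GRAFANA/api/datasources" -d "$body"
fi
```

This creates a new data source; an existing one is easier to fix in the UI as described above.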

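8. Import dashboards

To import a dashboard, either enter a template ID (e.g. 315 or 3729; IDs can be looked up on the official site) to import it online, or download the corresponding JSON template file and import it locally.

The official site lists the available dashboard templates, their IDs, and download links: https://grafana.com/dashboards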
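Downloading the JSON first is handy when the cluster has no direct internet access. A sketch: the URL pattern https://grafana.com/api/dashboards/ID/revisions/REVISION/download is an assumption about grafana.com, revision 1 is only an example, and dashboard_url and fetch_dashboard are hypothetical helpers; the default DRY_RUN=1 only prints the curl command:

```shell
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # 1 = print the command only, 0 = download

# Download URL of one revision of a grafana.com dashboard (assumed pattern).
dashboard_url() {
  printf 'https://grafana.com/api/dashboards/%s/revisions/%s/download\n' "$1" "$2"
}

# Save the dashboard JSON as dashboard-<id>.json for a local import.
fetch_dashboard() {
  local id="$1" rev="$2"
  if [ "$DRY_RUN" = "1" ]; then
    echo "curl -fsSL -o dashboard-$id.json $(dashboard_url "$id" "$rev")"
  else
    curl -fsSL -o "dashboard-$id.json" "$(dashboard_url "$id" "$rev")"
  fi
}

fetch_dashboard 315 1   # 315 is one of the IDs mentioned above
```

The downloaded file can then be uploaded on Grafana's dashboard import page.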

9. Summary: externally accessible web UIs

prometheus: http://172.28.2.210:30002/graph

alertmanager: http://172.28.2.210:30003/#/alerts

grafana: http://172.28.2.210:30004/
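A quick way to list and probe the three UIs. A sketch: NodePort Services are exposed on every node, so NODE_IP can be any node address (172.28.2.210 is the master used above); list_urls and check_urls are hypothetical helpers, and check_urls prints 000 when a UI cannot be reached:

```shell
#!/usr/bin/env bash
set -euo pipefail

NODE_IP="${NODE_IP:-172.28.2.210}"

# name:nodePort:path for the three UIs above
endpoints=(prometheus:30002:/graph alertmanager:30003:/#/alerts grafana:30004:/)

# Print "<name> <url>" for each UI.
list_urls() {
  local e name port path
  for e in "${endpoints[@]}"; do
    IFS=: read -r name port path <<<"$e"
    printf '%s http://%s:%s%s\n' "$name" "$NODE_IP" "$port" "$path"
  done
}

# Print "<name> <HTTP status>" for each UI (000 = unreachable).
check_urls() {
  local name url
  list_urls | while read -r name url; do
    printf '%s %s\n' "$name" "$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url" || true)"
  done
}

list_urls
```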

Other blog posts for reference:

http://blog.51cto.com/ylw6006/2084403?cid=700864

浙公网安备 33010602011771号