Installing Kubernetes 1.15.1 with kubeadm

2019-12-06 14:47:19

Environment preparation:

| Hostname | IP address | Role |
| --- | --- | --- |
| k8s-master01 | 172.16.196.160 | master node |
| k8s-node01 | 172.16.196.136 | node1 |
| k8s-node02 | 172.16.196.137 | node2 |
| k8s-node03 | 172.16.196.161 | node3 |

The steps below use master01 as the example. Every other node is prepared the same way, and you can scale out to any number of worker nodes.

    # Set the hostname
    hostnamectl set-hostname k8s-master01
    # Install common base packages
    yum -y install conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git lrzsz
    # Stop and disable firewalld
    systemctl stop firewalld && systemctl disable firewalld
    # Enable iptables and flush its rules
    yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save
    # Turn off the swap partition
    swapoff -a && sed -i 's/.*swap.*/#&/' /etc/fstab
    # Disable SELinux
    setenforce 0 && sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

    # Append kernel parameters for Kubernetes
    cat >> /etc/sysctl.conf <<EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    net.ipv4.tcp_tw_recycle=0
    vm.swappiness = 0
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_instances=8192
    fs.inotify.max_user_watches=1048576
    fs.file-max=52706963
    fs.nr_open=52706963
    net.ipv6.conf.all.disable_ipv6=1
    net.netfilter.nf_conntrack_max=2310720
    EOF
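A misspelled key in `/etc/sysctl.conf` only shows up as an error when the file is applied, so it can help to check the keys against the running kernel first. The following is a minimal sketch, not part of the original procedure; the function name `missing_sysctl_keys` and its optional second argument are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every key in a sysctl-style file that has no
# matching entry under /proc/sys (or under an alternative root, for testing).
missing_sysctl_keys() {
    local file="$1" root="${2:-/proc/sys}" line key
    while IFS= read -r line || [ -n "$line" ]; do
        # Skip blank lines and comments.
        case "$line" in ''|'#'*|';'*) continue ;; esac
        # Keep the part before "=" and drop any whitespace around it.
        key="$(printf '%s' "${line%%=*}" | tr -d '[:space:]')"
        [ -n "$key" ] || continue
        # A key such as net.ipv4.ip_forward lives at net/ipv4/ip_forward.
        [ -e "$root/$(printf '%s' "$key" | tr '.' '/')" ] || printf '%s\n' "$key"
    done < "$file"
}
```

Running `missing_sysctl_keys /etc/sysctl.conf` prints nothing when every key exists. Keys under `net.bridge` only appear once the `br_netfilter` module is loaded, so they are expected to be reported before that step.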

Set the time zone and make the system journal persistent:

    timedatectl set-timezone Asia/Shanghai
    timedatectl set-local-rtc 0
    systemctl restart rsyslog crond
    mkdir -p /var/log/journal
    mkdir -p /etc/systemd/journald.conf.d
    # journald only reads drop-in files whose names end in .conf
    cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
    [Journal]
    Storage=persistent
    Compress=yes
    SyncIntervalSec=5m
    RateLimitInterval=30s
    RateLimitBurst=1000
    SystemMaxUse=10G
    SystemMaxFileSize=200M
    MaxRetentionSec=2week
    ForwardToSyslog=no
    EOF
    systemctl restart systemd-journald

Upgrade the kernel to 4.4:

    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
    yum --enablerepo=elrepo-kernel install -y kernel-lt
    grub2-set-default "CentOS Linux (4.4.182-1.el7.elrepo.x86_64) 7 (Core)"
    reboot
    # After the reboot, confirm the running kernel version
    uname -r
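Reading the `uname -r` output by eye works, but a scripted check is easier to repeat on every node. The following is a minimal sketch that assumes GNU `sort -V` is available (it is part of coreutils on CentOS 7); the function name `version_ge` is an illustrative assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when version $1 is greater than or equal to
# version $2, using GNU sort's version ordering.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Compare the running kernel against the 4.4 target used in this guide.
if version_ge "$(uname -r)" 4.4; then
    echo "kernel is 4.4 or newer"
else
    echo "kernel is older than 4.4"
fi
```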

Load the IPVS modules:

    modprobe br_netfilter
    cat > /etc/sysconfig/modules/ipvs.modules <<EOF
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack_ipv4
    EOF
    chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
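The `lsmod | grep` check above matches substrings, so it does not say which module is missing if one fails to load. The following is a minimal sketch of a stricter check that compares exact module names; the function name `missing_modules` is an illustrative assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read lsmod-style text on stdin and print each module
# named in the arguments that does not appear in the first column.
missing_modules() {
    local loaded mod
    loaded="$(awk '{ print $1 }')"
    for mod in "$@"; do
        # -x matches the whole line, -F treats the name as a literal string.
        printf '%s\n' "$loaded" | grep -qxF "$mod" || printf '%s\n' "$mod"
    done
}
```

A typical call would be `lsmod | missing_modules br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4`, which prints nothing when all six are loaded.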

Install Docker:

    yum -y install yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum update -y && yum -y install docker-ce
    systemctl start docker && systemctl enable docker
    cat > /etc/docker/daemon.json <<EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      }
    }
    EOF
    mkdir -p /etc/systemd/system/docker.service.d
    systemctl daemon-reload && systemctl restart docker
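The `native.cgroupdriver=systemd` option matters because the kubelet and Docker need to use the same cgroup driver. The following is a minimal sketch of a file-level check that the option is present; the function name `uses_systemd_cgroup` is an illustrative assumption, and the check only inspects the file, not the running daemon.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when the given daemon.json (default path below)
# contains the systemd cgroup driver option.
uses_systemd_cgroup() {
    local file="${1:-/etc/docker/daemon.json}"
    # -F searches for the literal string, quotes included.
    grep -qF '"native.cgroupdriver=systemd"' "$file"
}
```

After restarting Docker, `docker info --format '{{.CgroupDriver}}'` reports the driver the daemon is actually using.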

Install Kubernetes:

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
           http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    yum install kubeadm-1.15.1 kubectl-1.15.1 kubelet-1.15.1
    systemctl enable kubelet.service

List the required images (note that host names must resolve, so add them to /etc/hosts):

    kubeadm config images list
    cat >> /etc/hosts <<EOF
    172.16.196.160 k8s-master01
    172.16.196.136 k8s-node01
    172.16.196.137 k8s-node02
    172.16.196.161 k8s-node03
    EOF

Link: https://pan.baidu.com/s/1eZvMDQiRLIUASHwJN4YpVA (extraction code: 4rrk)
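Because the `/etc/hosts` step uses `>>`, running it twice leaves duplicate entries. The following is a minimal sketch of an idempotent alternative; the function name `add_host_entry` and its optional third argument are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file (default /etc/hosts)
# unless a line already maps that exact IP to that exact name.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    if ! awk -v ip="$ip" -v name="$name" '
            $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
            END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

For example, `add_host_entry 172.16.196.160 k8s-master01` can be repeated safely on every node.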

Load the images on every server:

    docker load -i k8s1.15.1-image.tar.gz

All of the steps above must be completed on every server.

On the 172.16.196.160 server:

    kubeadm config print init-defaults > kubeadm.config.yaml
    vim kubeadm.config.yaml

    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 172.16.196.160
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      name: k8s-master01
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: k8s.gcr.io
    kind: ClusterConfiguration
    kubernetesVersion: v1.15.1
    networking:
      dnsDomain: cluster.local
      podSubnet: "10.244.0.0/16"
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    featureGates:
      SupportIPVSProxyMode: true
    mode: ipvs
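The configuration above is edited by hand in `vim`, but the `advertiseAddress` value could also be set from a script. The following is a minimal sketch, assuming the generated file contains an `advertiseAddress:` line as in the listing; the function name `set_advertise_address` is an illustrative assumption, and the other changes (node name, pod subnet, the kube-proxy section) would still need to be made separately.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the value of "advertiseAddress:" in a kubeadm
# config file while keeping the line's indentation.
set_advertise_address() {
    local file="$1" ip="$2" tmp
    tmp="$(mktemp)"
    # Write to a temporary file first so a failed sed leaves the original intact.
    if sed "s/^\([[:space:]]*advertiseAddress:\).*/\1 $ip/" "$file" > "$tmp"; then
        cat "$tmp" > "$file"
    fi
    rm -f "$tmp"
}
```

For example: `set_advertise_address kubeadm.config.yaml 172.16.196.160`.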

    kubeadm init --config=kubeadm.config.yaml --upload-certs | tee kubeadm-init.log
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    kubectl get node

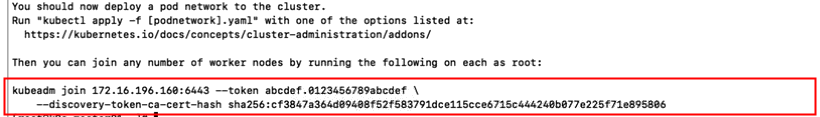

At this point the master node is initialized, and the output shows the command that worker nodes use to join it:

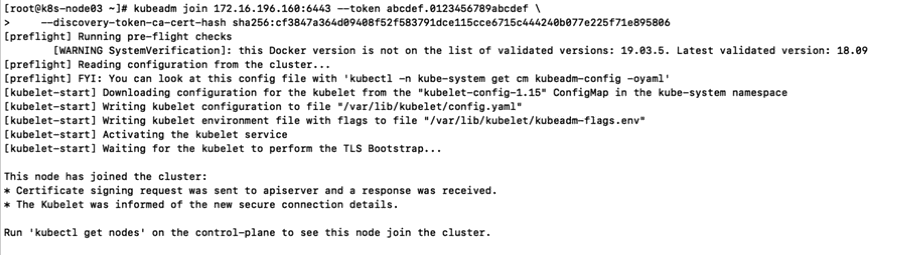

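Run the printed join command on each worker node.

On the master node:

    # All nodes show NotReady because the flannel network is not installed yet
    kubectl get nodes
    kubectl apply -f ./kube-flannel.yaml
    # Check the node status
    kubectl get nodes
    # Check the pod status; this can take some time
    kubectl get pod -n kube-system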
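Instead of re-running `kubectl get nodes` by hand while flannel starts, the wait can be scripted. The following is a minimal sketch that assumes the STATUS value is the second column of `kubectl get nodes --no-headers` output; the function names `count_not_ready` and `wait_for_nodes` are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` style text on
# stdin and print how many nodes have a STATUS other than exactly "Ready".
count_not_ready() {
    awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical helper: poll until kubectl returns at least one node and all
# of them are Ready. The optional argument is the polling interval in seconds.
wait_for_nodes() {
    local interval="${1:-5}" out
    while :; do
        out="$(kubectl get nodes --no-headers 2>/dev/null)"
        if [ -n "$out" ] && [ "$(printf '%s\n' "$out" | count_not_ready)" -eq 0 ]; then
            return 0
        fi
        sleep "$interval"
    done
}
```

Calling `wait_for_nodes 10` on the master blocks until every node reports Ready. A cordoned node shows `Ready,SchedulingDisabled` and is counted as not ready by this sketch.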

