Cats vs. Dogs: Image Recognition with ResNet Transfer Learning

Mount Google Drive to avoid downloading the dataset repeatedly

from google.colab import drive

drive.mount('/content/drive')

Import packages, select the GPU, and set the random seeds

import numpy as np

import matplotlib.pyplot as plt

import os

import torch

import torch.nn as nn

import torchvision

from torchvision import models,transforms,datasets

import time

import json

import shutil

from PIL import Image

import csv

# Use the GPU if one is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print('Using gpu: %s ' % torch.cuda.is_available())

# Set random seeds to make runs easier to reproduce
torch.manual_seed(10000)  # seed the CPU generator
torch.cuda.manual_seed(10000)  # seed the current GPU
torch.cuda.manual_seed_all(10000)  # seed all GPUs
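The notes later in this post observe that seeding PyTorch alone did not give exactly repeatable runs. A minimal sketch of a fuller seeding helper follows. The function name `set_seed` is hypothetical, and even with these settings bit-for-bit reproducibility on a GPU is not guaranteed.

```python
import random

import numpy as np
import torch


def set_seed(seed: int) -> None:
    """Seed Python, NumPy and PyTorch, and ask cuDNN for deterministic kernels."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)  # silently ignored when no GPU is present
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False  # disable autotuning, which can vary between runs


set_seed(10000)
first = torch.rand(3)
set_seed(10000)
second = torch.rand(3)
print(torch.equal(first, second))
```

Disabling cuDNN autotuning may slow training somewhat, so this is a trade-off rather than a free improvement.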

Download the dataset and sort the images by class

#! wget https://static.leiphone.com/cat_dog.rar
!unrar x "/content/drive/My Drive/catdog/cat_dog.rar" "/content/sample_data"
%cd sample_data/

# Put the cat and dog images of the training and validation sets into
# separate folders so that ImageFolder can read them
for x in ['train', 'val']:
    imgPath = "cat_dog/" + x
    pathlist = os.listdir(imgPath)
    cat_destination = 'cat_dog/' + x + '/cat/'
    dog_destination = 'cat_dog/' + x + '/dog/'
    # exist_ok=True makes it safe to re-run this cell
    os.makedirs(cat_destination, exist_ok=True)
    os.makedirs(dog_destination, exist_ok=True)
    # Classify by file name and move each file into the matching folder
    for item in pathlist:
        name, ext = os.path.splitext(item)
        if ext == '.jpg' and 'cat' in name:
            print(os.path.join(imgPath, item))
            shutil.move(os.path.join(imgPath, item), cat_destination)
        elif ext == '.jpg' and 'dog' in name:
            print(os.path.join(imgPath, item))
            shutil.move(os.path.join(imgPath, item), dog_destination)
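To see the sorting logic in isolation, here is a small sketch that runs on a throwaway temporary directory filled with empty placeholder files. The helper name `sort_by_prefix` and the file names are made up for illustration; the real dataset is only assumed to have 'cat' or 'dog' somewhere in each file name.

```python
import os
import shutil
import tempfile


def sort_by_prefix(folder, classes=("cat", "dog")):
    """Move every .jpg whose name contains a class name into folder/<class>/."""
    for cls in classes:
        os.makedirs(os.path.join(folder, cls), exist_ok=True)
    for item in os.listdir(folder):
        name, ext = os.path.splitext(item)
        if ext != ".jpg":
            continue  # skips the class sub-folders and any non-image files
        for cls in classes:
            if cls in name:
                shutil.move(os.path.join(folder, item), os.path.join(folder, cls))
                break


demo_dir = tempfile.mkdtemp()
for fname in ["cat_0.jpg", "cat_1.jpg", "dog_0.jpg", "notes.txt"]:
    open(os.path.join(demo_dir, fname), "w").close()

sort_by_prefix(demo_dir)
cats = sorted(os.listdir(os.path.join(demo_dir, "cat")))
dogs = sorted(os.listdir(os.path.join(demo_dir, "dog")))
print(cats, dogs)
```

The resulting layout, one sub-folder per class, is what `datasets.ImageFolder` expects: it derives the class labels from the sub-folder names.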

Load the dataset and preprocess the data

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

resnet_format = transforms.Compose([
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    normalize,
])

data_dir = './cat_dog'
dsets = {x: datasets.ImageFolder(os.path.join(data_dir, x), resnet_format)
         for x in ['train', 'val']}
dset_sizes = {x: len(dsets[x]) for x in ['train', 'val']}
dset_classes = dsets['train'].classes

# ResNet152 needs a lot of GPU memory, so the batch size is reduced to 48
loader_train = torch.utils.data.DataLoader(dsets['train'], batch_size=48, shuffle=True, num_workers=6)
loader_valid = torch.utils.data.DataLoader(dsets['val'], batch_size=5, shuffle=False, num_workers=6)

Load ResNet152 and replace the model's fully connected layer

model = models.resnet152(pretrained=True)

model_new = model  # same object as model; the fc layer is replaced in place
model_new.fc = nn.Linear(2048, 2, bias=True)  # two output classes: cat and dog

model_new = model_new.to(device)

print(model_new)

Selected hyperparameters

# Use the cross-entropy loss
criterion = nn.CrossEntropyLoss()

# Learning rate 0.001, multiplied by 0.1 every 10 epochs
lr = 0.001

# SGD with momentum to speed up learning and weight decay to reduce overfitting
optimizer = torch.optim.SGD(model_new.parameters(), lr=lr, momentum=0.9, weight_decay=5e-4)

Model training

def val_model(model, dataloader, size):
    model.eval()
    running_loss = 0.0
    running_corrects = 0
    with torch.no_grad():
        for inputs, classes in dataloader:
            inputs = inputs.to(device)
            classes = classes.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, classes)
            _, preds = torch.max(outputs, 1)
            # statistics (criterion returns the mean loss over the batch)
            running_loss += loss.item()
            running_corrects += torch.sum(preds == classes).item()
    epoch_loss = running_loss / size
    epoch_acc = running_corrects / size
    return epoch_loss, epoch_acc

def train_model(model, dataloader, size, epochs=1, optimizer=None):
    for epoch in range(epochs):
        model.train()
        running_loss = 0.0
        running_corrects = 0
        for inputs, classes in dataloader:
            inputs = inputs.to(device)
            classes = classes.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, classes)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            _, preds = torch.max(outputs, 1)
            # statistics (criterion returns the mean loss over the batch)
            running_loss += loss.item()
            running_corrects += torch.sum(preds == classes).item()
        epoch_loss = running_loss / size
        epoch_acc = running_corrects / size
        epoch_Valloss, epoch_Valacc = val_model(model, loader_valid, dset_sizes['val'])
        print('epoch: ', epoch, ' Loss: {:.5f} Acc: {:.5f} ValLoss: {:.5f} ValAcc: {:.5f}'.format(
            epoch_loss, epoch_acc, epoch_Valloss, epoch_Valacc))
        # scheduler is the module-level StepLR created before train_model is called
        scheduler.step()

# Learning-rate decay: multiply the learning rate by 0.1 every 10 epochs
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)

# Train the model
train_model(model_new, loader_train, size=dset_sizes['train'], epochs=20,
            optimizer=optimizer)
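To make the effect of `StepLR(step_size=10, gamma=0.1)` concrete, the following sketch records the learning rate over 20 epochs. A single dummy parameter stands in for the model, and the names `demo_optimizer` and `demo_scheduler` are used only for this illustration.

```python
import torch

# A single dummy parameter stands in for the model (assumption for illustration)
param = torch.nn.Parameter(torch.zeros(1))
demo_optimizer = torch.optim.SGD([param], lr=0.001, momentum=0.9)
demo_scheduler = torch.optim.lr_scheduler.StepLR(demo_optimizer, step_size=10, gamma=0.1)

lrs = []
for epoch in range(20):
    lrs.append(demo_optimizer.param_groups[0]["lr"])  # learning rate used in this epoch
    demo_optimizer.step()   # stands in for one epoch of training
    demo_scheduler.step()   # applies the decay once every 10 calls

print(lrs[0], lrs[9], lrs[10], lrs[19])
```

Epochs 0 to 9 run at 0.001 and epochs 10 to 19 at roughly 0.0001, so in a 20-epoch run the rate is reduced exactly once during training.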

Test the model and write the results to a CSV file

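model_new.eval()
test_root = './cat_dog/test/'
img_test = os.listdir(test_root)
# Sort numerically by file name, so that '2.jpg' comes before '10.jpg'
img_test.sort(key=lambda x: int(x[:-4]))

# newline='' is what the csv module recommends; the with block closes the file,
# and torch.no_grad() skips gradient tracking during inference
with open('csv.csv', 'w', newline='') as csvfile, torch.no_grad():
    writer = csv.writer(csvfile)
    for i in range(len(img_test)):
        img = Image.open(test_root + img_test[i])
        img = img.convert('RGB')
        inputs = resnet_format(img)
        inputs = inputs.unsqueeze(0)  # add the batch dimension
        inputs = inputs.to(device)
        output = model_new(inputs)
        _, pred = torch.max(output, 1)
        print(i, pred.tolist()[0])
        writer.writerow([i, pred.tolist()[0]])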
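The `int(x[:-4])` sort key matters because plain string sorting puts '10.jpg' before '2.jpg'. A tiny sketch on made-up file names, assuming the test files are named `<number>.jpg` as the sort key implies:

```python
names = ["10.jpg", "2.jpg", "1.jpg", "100.jpg"]

lexicographic = sorted(names)
numeric = sorted(names, key=lambda x: int(x[:-4]))  # strip '.jpg', compare as int

print(lexicographic)
print(numeric)
```

Note that the loop above writes the position `i` in the sorted list rather than the number in the file name; the two match only if the files are numbered consecutively starting from 0.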

Training and validation results:

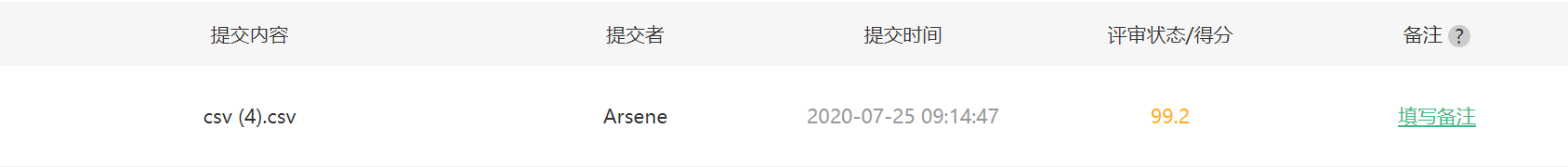

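Test results:

I initially trained with VGG16, freezing all parameters before the FC layers and switching the optimizer from SGD to Adam; after 1 epoch the test score was 98.1. Running more epochs brought only limited improvement, so I switched to ResNet.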

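Problems encountered:

- Do not keep the dataset on Google Drive while training on Colab! Drive storage is separate from the compute server, so every read is a network request to Drive and training is throttled by network speed, especially when transferring a large number of small image files.

- To make the results reproducible I tried setting PyTorch's random seeds, but the results are still not exactly identical; cuDNN, Python, and NumPy may need to be configured as well. See: PyTorch reproducibility (how to make experimental results reproducible)

- When training ResNet I also tried freezing all parameters before the FC layer, but the results were not satisfactory.

- Adam often works better than SGD, but with ResNet in this task SGD gave better results than Adam.

- The network structure can also be modified by subclassing: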

class Net(nn.Module):
    def __init__(self, model):
        super(Net, self).__init__()
        # Drop the last layer (fc) of the model
        self.resnet_layer = nn.Sequential(*list(model.children())[:-1])
        # Add a fully connected layer with the required output size
        self.Linear_layer = nn.Linear(2048, 2)

    def forward(self, x):
        x = self.resnet_layer(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 2048)
        x = self.Linear_layer(x)
        return x

model_new = Net(model)
model_new = model_new.to(device)

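- The learning rate can be adjusted dynamically. If it is too small, convergence is slow; if it is too large, the parameters oscillate around the optimum. A common approach is to start with a relatively large learning rate and decay it during training. See: Adjusting the learning rate dynamically: TORCH.OPTIM.LR_SCHEDULER

- model.eval() and with torch.no_grad() can be used together: model.eval() switches layers such as dropout and batch normalization to inference behavior, while torch.no_grad() turns off gradient tracking and so saves memory and computation. See: Understanding model.eval() and torch.no_grad() in depth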
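The difference between the two can be checked on a toy network. The sketch below uses a small made-up `nn.Sequential` with a dropout layer rather than the ResNet from this post.

```python
import torch
import torch.nn as nn

net = nn.Sequential(nn.Linear(4, 4), nn.Dropout(p=0.5))
x = torch.ones(2, 4)

net.eval()                      # dropout becomes a no-op in eval mode
out_eval = net(x)
print(out_eval.requires_grad)   # autograd still tracks this forward pass

with torch.no_grad():           # no computation graph is built inside this block
    out_nograd = net(x)
print(out_nograd.requires_grad)

same_values = torch.equal(out_eval, out_nograd)
print(same_values)
```

In other words, `model.eval()` changes what the layers compute, while `torch.no_grad()` changes only whether autograd records the computation, which is why the two are complementary.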

Open question

The training loss and the validation loss differ by roughly a factor of ten. Did I write something wrong somewhere?
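One possible explanation, offered as a hypothesis rather than a confirmed diagnosis: `nn.CrossEntropyLoss` averages over the batch by default, and the code adds up these batch means but then divides by the number of samples, so the printed value is roughly the true mean loss divided by the batch size. With a training batch size of 48 and a validation batch size of 5, that alone would give a ratio of about 48/5 = 9.6. The sketch below mimics this bookkeeping in plain Python on made-up loss values; `reported_loss` is a hypothetical helper.

```python
import random


def reported_loss(per_sample_losses, batch_size):
    """Mimic the bookkeeping above: add up batch-mean losses, divide by sample count."""
    running_loss = 0.0
    for start in range(0, len(per_sample_losses), batch_size):
        batch = per_sample_losses[start:start + batch_size]
        running_loss += sum(batch) / len(batch)  # what a mean-reduced criterion returns
    return running_loss / len(per_sample_losses)


random.seed(0)
# Hypothetical per-sample losses; 240 is divisible by both batch sizes
losses = [random.uniform(0.1, 0.9) for _ in range(240)]
true_mean = sum(losses) / len(losses)

train_style = reported_loss(losses, batch_size=48)
val_style = reported_loss(losses, batch_size=5)
print(true_mean, train_style, val_style, val_style / train_style)
```

If this is indeed the cause, accumulating `loss.item() * inputs.size(0)` instead of `loss.item()` would turn both printed numbers into per-sample means that can be compared directly.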

Gripes

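Google Drive mounting was down for maintenance for a day, and then the Colab usage limit locked me out for another day. Freebies really are not that easy to come by - -.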

