Kafka cluster + ZooKeeper cluster + KafkaOffsetMonitor

Setting up the Kafka cluster

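1. System environment: CentOS 6.5

2. Prepare the installation packages from the official download pages:

JDK download: http://www.oracle.com/technetwork/java/javase/downloads/index.html

ZooKeeper download: http://www.apache.org/dyn/closer.cgi/zookeeper/

Kafka download: http://kafka.apache.org/downloads

3. After downloading the Kafka package, extract it under /usr/local and delete the archive. The paths used in the rest of this guide assume the extracted directory is /usr/local/kafka.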

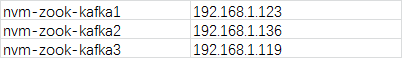

4. The cluster built here has three Kafka nodes: 192.168.1.123, 192.168.1.136, and 192.168.1.119.

5. Create the directories

    mkdir -p /usr/local/kafka/zookeeper/data          # ZooKeeper data directory
    mkdir -p /usr/local/kafka/logs/{kafka,zookeeper}  # Kafka and ZooKeeper log directories
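Because every node needs the same three directories, the step above can be wrapped in a small helper and run once per machine. This is a minimal sketch, assuming a POSIX-style shell; the function name `make_kafka_dirs` is hypothetical and not part of Kafka.

```shell
#!/bin/sh
# Hypothetical helper: create the data and log directories used in this guide
# under a configurable base directory (the guide uses /usr/local/kafka).
make_kafka_dirs() {
    local base="$1"
    mkdir -p "$base/zookeeper/data" \
             "$base/logs/kafka" \
             "$base/logs/zookeeper" || return 1
    # Report any directory that is still missing so the problem is visible
    # before ZooKeeper or Kafka is started.
    for dir in "$base/zookeeper/data" "$base/logs/kafka" "$base/logs/zookeeper"; do
        [ -d "$dir" ] || { echo "missing: $dir" >&2; return 1; }
    done
}

# Example (run on each of the three nodes):
#   make_kafka_dirs /usr/local/kafka
```

Spelling out the three paths instead of using `{kafka,zookeeper}` keeps the helper independent of bash brace expansion.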

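6. Build the ZooKeeper cluster first. This guide uses the ZooKeeper bundled with Kafka; for production, a separately installed ZooKeeper cluster is preferable. Edit the zookeeper.properties file:

The zookeeper.properties file is identical on all three machines. Pay attention to the data and log paths it names: create them by hand beforehand (step 5) rather than relying on them being generated automatically.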

    [root@nvm-zook-kafka1 config]# awk '/^[^#]/' zookeeper.properties
    dataDir=/usr/local/kafka/zookeeper/data
    dataLogDir=/usr/local/kafka/logs/zookeeper
    clientPort=2181
    maxClientCnxns=0
    tickTime=2000
    initLimit=10
    syncLimit=5
    server.1=192.168.1.123:2888:3888
    server.2=192.168.1.136:2888:3888
    server.3=192.168.1.119:2888:3888
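Since the file is the same on every node, it can be generated from the list of hosts instead of edited by hand three times. The following is a sketch under that assumption; `gen_zk_properties` is a hypothetical helper name, and the values are simply the ones shown above.

```shell
#!/bin/sh
# Hypothetical helper: print a zookeeper.properties for the given base
# directory, with one server.N line per host (N starts at 1).
gen_zk_properties() {
    local base="$1" id=1 host
    shift
    # initLimit and syncLimit are counted in ticks, so with tickTime=2000
    # they correspond to 20 s and 10 s respectively.
    cat <<EOF
dataDir=$base/zookeeper/data
dataLogDir=$base/logs/zookeeper
clientPort=2181
maxClientCnxns=0
tickTime=2000
initLimit=10
syncLimit=5
EOF
    for host in "$@"; do
        echo "server.$id=$host:2888:3888"
        id=$((id + 1))
    done
}

# Example:
#   gen_zk_properties /usr/local/kafka 192.168.1.123 192.168.1.136 192.168.1.119 \
#       > /usr/local/kafka/config/zookeeper.properties
```

The order of the hosts matters: the N assigned here must match the myid written on the corresponding machine.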

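7. Create the myid file. Go to the ZooKeeper data directory, /usr/local/kafka/zookeeper/data (the dataDir configured above), create a file named myid, and write 1, 2, and 3 into it on the three servers respectively, as shown below:

The myid value is how each node identifies itself within the ZooKeeper cluster: it must match the N of that node's server.N entry in zookeeper.properties. The file is required, and no two nodes may share the same value.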

    [root@nvm-zook-kafka1 kafka]# cd zookeeper/
    [root@nvm-zook-kafka1 zookeeper]# ls
    data
    [root@nvm-zook-kafka1 zookeeper]# cd data/
    [root@nvm-zook-kafka1 data]# cat myid
    1
    [root@nvm-zook-kafka1 data]#
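Writing the file can be scripted as well. The sketch below assumes the data directory already exists; `write_myid` is a hypothetical helper that only checks the id is a plain number before writing it.

```shell
#!/bin/sh
# Hypothetical helper: write a numeric server id into <data_dir>/myid.
write_myid() {
    local data_dir="$1" id="$2"
    case "$id" in
        ''|*[!0-9]*)
            echo "myid must be a number, got: '$id'" >&2
            return 1
            ;;
    esac
    echo "$id" > "$data_dir/myid"
}

# Example, one command per machine:
#   write_myid /usr/local/kafka/zookeeper/data 1   # on 192.168.1.123
#   write_myid /usr/local/kafka/zookeeper/data 2   # on 192.168.1.136
#   write_myid /usr/local/kafka/zookeeper/data 3   # on 192.168.1.119
```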

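8. From the Kafka directory, start ZooKeeper:

    ./bin/zookeeper-server-start.sh config/zookeeper.properties &

Run the start command on all three machines, then check the ZooKeeper log output. Connection warnings logged while the other nodes are still down are expected and stop once a quorum forms; if no errors remain after all three nodes are up, the ZooKeeper cluster has started successfully.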
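Besides reading the logs, each node can be asked for its role. The sketch below assumes `nc` is installed and that the ZooKeeper version in use answers the `srvr` four-letter command on the client port; `zk_mode` and `check_zk_cluster` are hypothetical helper names.

```shell
#!/bin/sh
# Extract the "Mode:" field (leader, follower, or standalone) from the
# output of ZooKeeper's `srvr` command, read from standard input.
zk_mode() {
    awk -F': ' '/^Mode:/ { print $2 }'
}

# Hypothetical helper: print the mode reported by each host on port 2181.
# An empty mode means the node did not answer.
check_zk_cluster() {
    local host
    for host in "$@"; do
        printf '%s: ' "$host"
        echo srvr | nc "$host" 2181 | zk_mode
        echo
    done
}

# Example:
#   check_zk_cluster 192.168.1.123 192.168.1.136 192.168.1.119
```

A healthy three-node cluster should report one leader and two followers.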

9. Build the Kafka cluster. Edit the server.properties configuration file:

    [root@nvm-zook-kafka1 config]# awk '/^[^#]/' server.properties
    broker.id=1
    port=9092
    listeners=PLAINTEXT://:9092
    host.name=192.168.1.123
    advertised.host.name=192.168.1.123
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/usr/local/kafka/logs/kafka
    num.partitions=1
    num.recovery.threads.per.data.dir=1
    offsets.topic.replication.factor=1
    transaction.state.log.replication.factor=1
    transaction.state.log.min.isr=1
    log.retention.hours=168
    log.segment.bytes=1073741824
    log.retention.check.interval.ms=300000
    zookeeper.connect=192.168.1.123:2181,192.168.1.136:2181,192.168.1.119:2181
    zookeeper.connection.timeout.ms=6000
    group.initial.rebalance.delay.ms=0

Here log.dirs is the directory where Kafka stores its log (message data) files.

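The changes to server.properties are mostly at the beginning and end of the file; the settings in between can keep their defaults. Two points need attention. First, broker.id must be different on each of the three nodes (1, 2, and 3 here), with host.name and advertised.host.name set to that node's own address. Second, make sure the directory named in log.dirs already exists (step 5) rather than relying on it being generated from the configuration file.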
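Only three values differ between nodes, so the per-node file can be derived from one template. This is a sketch assuming the template already contains the broker.id, host.name, and advertised.host.name lines shown above; `render_broker_config` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print a copy of a server.properties template with the
# node-specific values replaced. All other lines pass through unchanged.
render_broker_config() {
    local template="$1" id="$2" ip="$3"
    sed -e "s/^broker\.id=.*/broker.id=$id/" \
        -e "s/^host\.name=.*/host.name=$ip/" \
        -e "s/^advertised\.host\.name=.*/advertised.host.name=$ip/" \
        "$template"
}

# Example: produce the file for the second node.
#   render_broker_config config/server.properties 2 192.168.1.136 > server-2.properties
```

The patterns are anchored at the start of the line, so the host.name rule does not touch the advertised.host.name line.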

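10. Start the Kafka cluster. From the Kafka directory, run:

    ./bin/kafka-server-start.sh config/server.properties &

Start all three nodes. If they start without errors, the cluster is up; to confirm it, produce and consume a few messages.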
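The produce-and-consume check can be sketched with the command-line tools shipped in Kafka's bin directory. This assumes a Kafka release contemporary with the configuration above, where kafka-topics.sh accepts --zookeeper and the console producer accepts --broker-list; newer releases use --bootstrap-server for both. The topic name smoke-test and the helpers `join_hosts` and `smoke_test` are hypothetical.

```shell
#!/bin/sh
# Join hosts into "h1:port,h2:port,..." as expected by the Kafka tools.
join_hosts() {
    local port="$1" out="" host
    shift
    for host in "$@"; do
        out="${out:+$out,}$host:$port"
    done
    echo "$out"
}

# Hypothetical helper: create a topic, send one message, and read it back.
# Usage: smoke_test <kafka_home> <host>...
smoke_test() {
    local kafka_home="$1" zk brokers
    shift
    zk="$(join_hosts 2181 "$@")"
    brokers="$(join_hosts 9092 "$@")"
    "$kafka_home/bin/kafka-topics.sh" --create --zookeeper "$zk" \
        --replication-factor 3 --partitions 3 --topic smoke-test
    echo "hello kafka" | "$kafka_home/bin/kafka-console-producer.sh" \
        --broker-list "$brokers" --topic smoke-test
    "$kafka_home/bin/kafka-console-consumer.sh" --bootstrap-server "$brokers" \
        --topic smoke-test --from-beginning --max-messages 1
}

# Example:
#   smoke_test /usr/local/kafka 192.168.1.123 192.168.1.136 192.168.1.119
```

If the consumer prints the message that was sent, all three brokers are reachable and the topic was replicated across them.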

11. Next, download KafkaOffsetMonitor

Download: https://github.com/quantifind/KafkaOffsetMonitor

12. Start KafkaOffsetMonitor

    java -cp KafkaOffsetMonitor-assembly-0.2.0.jar \
        com.quantifind.kafka.offsetapp.OffsetGetterWeb \
        --zk 192.168.1.123:2181,192.168.1.136:2181,192.168.1.119:2181 \
        --port 8088 \
        --refresh 10.seconds \
        --retain 2.days &

The arguments are:

- offsetStorage: valid options are "zookeeper", "kafka" or "storm". Anything else falls back to "zookeeper".

- zk: the ZooKeeper hosts.

- port: the port on which the app will be available.

- refresh: how often the app should refresh and store a point in the DB.

- retain: how long points should be kept in the DB.

- dbName: where to store the history (default "offsetapp").

- kafkaOffsetForceFromStart: only applies to the "kafka" format. Forces KafkaOffsetMonitor to scan the commit messages from the start (see the notes in the project README).

- stormZKOffsetBase: only applies to the "storm" format. Changes the offset storage base in ZooKeeper; defaults to "/stormconsumers" (see the notes in the project README).

- pluginsArgs: additional arguments used by extensions (see the project README).

If anything is unclear, see the project on GitHub: https://github.com/quantifind/KafkaOffsetMonitor

Zhejiang Public Network Security Filing No. 33010602011771
