Exploring the XCB API Style


The API style in libxcb looks like this:

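```c
// Send the request and get a cookie back
xcb_DOSOMETHING_cookie_t cookie = xcb_DOSOMETHING(conn, ARGS...);

// ... carry on with other work ...

// When the result is needed, fetch it with the matching _reply function.
// It blocks until the reply arrives; the last argument receives an error, if any.
xcb_generic_error_t *error = NULL;
xcb_DOSOMETHING_reply_t *reply = xcb_DOSOMETHING_reply(conn, cookie, &error);
```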

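This is clearly an asynchronous API, but the result is obtained by blocking until it arrives (a kind of long poll) rather than through a callback. That is why libxcb calls can be wrapped into a "synchronous" API (in reality the value is simply retrieved later).

This is a future-style design.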
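To make the cookie/reply split concrete, here is a minimal, self-contained future in C with POSIX threads. It is only an analogy, not how libxcb is built: `future_t`, `future_start` and `future_get` are hypothetical names, and a worker thread stands in for the X server. Starting the work returns immediately (like `xcb_intern_atom` returning a cookie), while `future_get` blocks until the value is ready (like `xcb_intern_atom_reply`).

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical future type: a result plus a "ready" flag guarded by a mutex. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  ready_cond;
    int             ready;
    int             input;
    int             value;
    pthread_t       worker;
} future_t;

/* Worker thread: plays the role of the X server computing the reply. */
static void *worker_main(void *arg)
{
    future_t *f = arg;
    int result = f->input * 2;             /* the "work" */

    pthread_mutex_lock(&f->lock);
    f->value = result;
    f->ready = 1;
    pthread_cond_signal(&f->ready_cond);   /* wake a thread blocked in future_get */
    pthread_mutex_unlock(&f->lock);
    return NULL;
}

/* Like xcb_DOSOMETHING(): start the work and return at once (the "cookie"). */
static int future_start(future_t *f, int input)
{
    assert(f != NULL);
    pthread_mutex_init(&f->lock, NULL);
    pthread_cond_init(&f->ready_cond, NULL);
    f->ready = 0;
    f->input = input;
    return pthread_create(&f->worker, NULL, worker_main, f);
}

/* Like xcb_DOSOMETHING_reply(): block until the value is available. */
static int future_get(future_t *f)
{
    pthread_mutex_lock(&f->lock);
    while (!f->ready)                      /* loop guards against spurious wakeups */
        pthread_cond_wait(&f->ready_cond, &f->lock);
    int v = f->value;
    pthread_mutex_unlock(&f->lock);

    pthread_join(f->worker, NULL);         /* clean up the worker thread */
    pthread_mutex_destroy(&f->lock);
    pthread_cond_destroy(&f->ready_cond);
    return v;
}
```

Usage: `future_t f; future_start(&f, 21); /* other work */ int v = future_get(&f);` yields 42. Unlike this sketch, XCB multiplexes many outstanding requests over one socket, and the sequence number in the cookie is what ties a reply back to its request.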

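Analysis

Source generation

To see how libxcb implements this, we first need its source code.

The hand-written part of libxcb contains only some basic utility functions; the xcb API functions we normally call are generated by a Python script. Four packages are involved (on Ubuntu):

- libxcb1-dev: the library providing the xcb API; this is the one we focus on
- libxau-dev: the authentication library; not relevant here
- xcb-proto: the protocol package, consisting entirely of XML files that serve as input to the Python script in libxcb1-dev
- xutils-dev: provides xcb-macros; probably not relevant either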

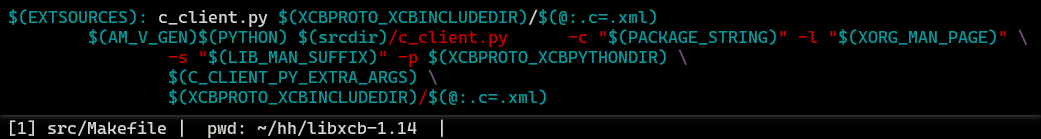

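The source is generated by the c_client.py script; the generation command is as follows:

We won't go into the details of how the generation works here.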

Call chain

Take the following example:

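```c
// only_if_exists = 0: the server creates the atom if it does not exist yet
const xcb_intern_atom_cookie_t cookie = xcb_intern_atom(conn, 0, strlen("WM_PROTOCOLS"), "WM_PROTOCOLS");
xcb_intern_atom_reply_t *reply = xcb_intern_atom_reply(conn, cookie, NULL);
xcb_atom_t wm_protocols_property = XCB_ATOM_NONE;
if (reply) {  // NULL on error
    wm_protocols_property = reply->atom;
    free(reply);  // the reply is malloc'd by libxcb; the caller frees it
}
```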

Getting the cookie

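```c
xcb_intern_atom_cookie_t
xcb_intern_atom (xcb_connection_t *c,
                 uint8_t           only_if_exists,
                 uint16_t          name_len,
                 const char       *name)
{
    // Application-level descriptor of this XCB request
    static const xcb_protocol_request_t xcb_req = {
        .count = 4,
        .ext = 0,
        .opcode = XCB_INTERN_ATOM,
        .isvoid = 0
    };

    // Gather write: the request is assembled from several iovec pieces.
    // Only parts [2]..[5] are filled in here (count = 4); the slots before them
    // are left for libxcb's internal use, e.g. xcb_send_request may step the
    // pointer back (--vector) to prepend a BIG-REQUESTS length header.
    struct iovec xcb_parts[6];
    xcb_intern_atom_cookie_t xcb_ret;
    xcb_intern_atom_request_t xcb_out;

    xcb_out.only_if_exists = only_if_exists;
    xcb_out.name_len = name_len;
    memset(xcb_out.pad0, 0, 2);

    // Fixed-size part of the request, then padding to a multiple of 4 bytes
    xcb_parts[2].iov_base = (char *) &xcb_out;
    xcb_parts[2].iov_len = sizeof(xcb_out);
    xcb_parts[3].iov_base = 0;
    xcb_parts[3].iov_len = -xcb_parts[2].iov_len & 3;
    /* char name */
    xcb_parts[4].iov_base = (char *) name;
    xcb_parts[4].iov_len = name_len * sizeof(char);
    xcb_parts[5].iov_base = 0;
    xcb_parts[5].iov_len = -xcb_parts[4].iov_len & 3;

    // Send the request and get its sequence number; the sequence number
    // later identifies the reply (or error) that belongs to this request
    xcb_ret.sequence = xcb_send_request(c, XCB_REQUEST_CHECKED, xcb_parts + 2, &xcb_req);
    return xcb_ret;
}
```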
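The `-len & 3` idiom gives the number of zero bytes needed to round `len` up to the next multiple of 4, since X11 request lengths are counted in 4-byte units. The sketch below reproduces the same gather-write pattern in isolation: a header and a variable-length name are sent with a single `writev()`, and the padding iovecs point at a static zero buffer (as `xcb_send_request_with_fds64` does when `iov_base` is NULL). The helper names `pad4` and `send_padded` and the 4-byte header contents are hypothetical and are not the real InternAtom wire format; a pipe stands in for the X connection.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/uio.h>
#include <unistd.h>

/* Bytes needed to pad len up to a multiple of 4 (same idiom as libxcb's -len & 3). */
static size_t pad4(size_t len)
{
    return -len & 3;
}

/* Hypothetical request: a 4-byte header followed by a name, each padded to 4 bytes.
 * Sends it to fd with one gather write and returns the number of bytes written. */
static ssize_t send_padded(int fd, const char *name, uint16_t name_len)
{
    static const char pad[3];              /* zero bytes used as padding */
    uint8_t header[4] = { 16, 0, 0, 0 };   /* illustrative header only */
    struct iovec parts[4];

    parts[0].iov_base = header;
    parts[0].iov_len  = sizeof(header);
    parts[1].iov_base = (void *) pad;
    parts[1].iov_len  = pad4(parts[0].iov_len);   /* 0: header is already 4 bytes */
    parts[2].iov_base = (void *) name;
    parts[2].iov_len  = name_len;
    parts[3].iov_base = (void *) pad;
    parts[3].iov_len  = pad4(parts[2].iov_len);

    size_t total = 0;
    for (int i = 0; i < 4; ++i)
        total += parts[i].iov_len;
    assert((total & 3) == 0);              /* whole request is a multiple of 4 bytes */

    return writev(fd, parts, 4);
}
```

For example, `"WM_PROTOCOLS"` (12 bytes) needs no padding, so 16 bytes are written in total, while a 3-byte name gets one padding byte (8 bytes in total).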

```c
// xcb_send_request ends up calling this function
uint64_t xcb_send_request_with_fds64(xcb_connection_t *c, int flags, struct iovec *vector,
                                     const xcb_protocol_request_t *req, unsigned int num_fds, int *fds)
{
    uint64_t request;
    uint32_t prefix[2];
    int veclen = req->count;
    enum workarounds workaround = WORKAROUND_NONE;

    if(c->has_error) {
        close_fds(fds, num_fds);
        return 0;
    }

    assert(c != 0);
    assert(vector != 0);
    assert(req->count > 0);

    if(!(flags & XCB_REQUEST_RAW))
    {
        // Fill in the X protocol request header
        static const char pad[3];
        unsigned int i;
        uint16_t shortlen = 0;
        size_t longlen = 0;
        assert(vector[0].iov_len >= 4);
        /* set the major opcode, and the minor opcode for extensions */
        if(req->ext)
        {
            const xcb_query_extension_reply_t *extension = xcb_get_extension_data(c, req->ext);
            if(!(extension && extension->present))
            {
                close_fds(fds, num_fds);
                _xcb_conn_shutdown(c, XCB_CONN_CLOSED_EXT_NOTSUPPORTED);
                return 0;
            }
            ((uint8_t *) vector[0].iov_base)[0] = extension->major_opcode;
            ((uint8_t *) vector[0].iov_base)[1] = req->opcode;
        }
        else
            ((uint8_t *) vector[0].iov_base)[0] = req->opcode;

        /* put together the length field, possibly using BIGREQUESTS */
        for(i = 0; i < req->count; ++i)
        {
            longlen += vector[i].iov_len;
            if(!vector[i].iov_base)
            {
                vector[i].iov_base = (char *) pad;
                assert(vector[i].iov_len <= sizeof(pad));
            }
        }
        assert((longlen & 3) == 0);
        longlen >>= 2;

        if(longlen <= c->setup->maximum_request_length)
        {
            /* we don't need BIGREQUESTS. */
            shortlen = longlen;
            longlen = 0;
        }
        else if(longlen > xcb_get_maximum_request_length(c))
        {
            close_fds(fds, num_fds);
            _xcb_conn_shutdown(c, XCB_CONN_CLOSED_REQ_LEN_EXCEED);
            return 0; /* server can't take this; maybe need BIGREQUESTS? */
        }

        /* set the length field. */
        ((uint16_t *) vector[0].iov_base)[1] = shortlen;
        if(!shortlen)
        {
            prefix[0] = ((uint32_t *) vector[0].iov_base)[0];
            prefix[1] = ++longlen;
            vector[0].iov_base = (uint32_t *) vector[0].iov_base + 1;
            vector[0].iov_len -= sizeof(uint32_t);
            --vector, ++veclen;
            vector[0].iov_base = prefix;
            vector[0].iov_len = sizeof(prefix);
        }
    }
    flags &= ~XCB_REQUEST_RAW;

    /* do we need to work around the X server bug described in glx.xml? */
    /* XXX: GetFBConfigs won't use BIG-REQUESTS in any sane
     * configuration, but that should be handled here anyway. */
    if(req->ext && !req->isvoid && !strcmp(req->ext->name, "GLX") &&
       ((req->opcode == 17 && ((uint32_t *) vector[0].iov_base)[1] == 0x10004) ||
        req->opcode == 21))
        workaround = WORKAROUND_GLX_GET_FB_CONFIGS_BUG;

    /* get a sequence number and arrange for delivery. */
    pthread_mutex_lock(&c->iolock);

    /* send FDs before establishing a good request number, because this might
     * call send_sync(), too
     */
    send_fds(c, fds, num_fds);

    prepare_socket_request(c);

    /* send GetInputFocus (sync_req) when 64k-2 requests have been sent without
     * a reply.
     * Also send sync_req (could use NoOp) at 32-bit wrap to avoid having
     * applications see sequence 0 as that is used to indicate
     * an error in sending the request
     */
    while ((req->isvoid && c->out.request == c->in.request_expected + (1 << 16) - 2) ||
           (unsigned int) (c->out.request + 1) == 0)
    {
        send_sync(c);
        prepare_socket_request(c);
    }

    send_request(c, req->isvoid, workaround, flags, vector, veclen);
    request = c->has_error ? 0 : c->out.request;
    pthread_mutex_unlock(&c->iolock);
    return request;
}
```
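The length handling above can be isolated into a small pure function. This is a sketch that mirrors that logic under simplifying assumptions: `max_short` stands for `c->setup->maximum_request_length` and `max_big` for `xcb_get_maximum_request_length(c)` (both in 4-byte units), and `encode_length` and `struct req_len` are hypothetical names. If the request fits, the 16-bit length field holds its length; otherwise the field is 0 and an extra 32-bit length word is inserted after the first header word. That extra word counts toward the request's own length, which is why the code above does `++longlen`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical description of how a request's length gets encoded. */
struct req_len {
    int      ok;        /* 0 if the request is too large even for BIG-REQUESTS */
    uint16_t shortlen;  /* value of the 16-bit length field (0 => BIG-REQUESTS) */
    uint32_t longlen;   /* extra 32-bit length word, only used when shortlen == 0 */
};

/* bytes:     total request size in bytes (a multiple of 4, at least 4)
 * max_short: maximum length in 4-byte units without BIG-REQUESTS
 * max_big:   maximum length in 4-byte units with BIG-REQUESTS */
static struct req_len encode_length(size_t bytes, uint16_t max_short, uint32_t max_big)
{
    struct req_len r = { 1, 0, 0 };
    assert(bytes >= 4 && (bytes & 3) == 0);
    size_t units = bytes >> 2;              /* X11 lengths count 4-byte units */

    if (units <= max_short) {
        r.shortlen = (uint16_t) units;      /* fits in the normal 16-bit field */
    } else if (units > max_big) {
        r.ok = 0;                           /* libxcb shuts the connection down here */
    } else {
        r.longlen = (uint32_t) units + 1;   /* +1 for the inserted 32-bit length word */
    }
    return r;
}
```

For instance, a 16-byte request encodes as `shortlen = 4`, while a request of 70000 units with `max_short = 65535` needs BIG-REQUESTS and gets `longlen = 70001`.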

```c
// send_request eventually calls this
int _xcb_out_send(xcb_connection_t *c, struct iovec *vector, int count)
{
    int ret = 1;
    while(ret && count)
        ret = _xcb_conn_wait(c, &c->out.cond, &vector, &count);
    c->out.request_written = c->out.request;
    pthread_cond_broadcast(&c->out.cond);
    _xcb_in_wake_up_next_reader(c);
    return ret;
}
```

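Here _xcb_conn_wait() comes into play. It builds its waiting logic out of poll() together with the iolock mutex and a condition variable (its full source is quoted further below). xcb_connection_t::out.cond is an ordinary pthread condition variable rather than something XCB implements itself; together with the c->out.writing counter it makes user threads that want to write to the XCB connection fd take turns. (Why not just use a mutex? Probably because the writing thread has to release iolock while it blocks in poll(); a plain mutex held across that blocking call would stop every other thread from queuing requests or reading replies in the meantime.)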
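The pattern behind `c->out.writing` and `c->out.cond` can be shown in isolation: one mutex protects the shared state, a flag records that some thread is currently doing the slow I/O, and other threads wait on a condition variable instead of holding the mutex for the whole duration. The sketch below uses hypothetical names (`conn_t`, `do_write`, `run_writers`) and a short `nanosleep` in place of `poll()` plus `writev()`; it records the maximum number of concurrent writers to show that the I/O section stays exclusive even though the mutex is released during it.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <time.h>

typedef struct {
    pthread_mutex_t iolock;     /* protects every field below */
    pthread_cond_t  out_cond;   /* broadcast when the current writer finishes */
    int             writing;    /* nonzero while some thread is doing the I/O */
    int             active;     /* threads currently inside the I/O section */
    int             max_active; /* highest value 'active' ever reached */
    int             written;    /* number of completed writes */
} conn_t;

static conn_t conn = {
    PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER, 0, 0, 0, 0
};

/* One "write": wait for our turn, then do the slow I/O with iolock released. */
static void do_write(conn_t *c)
{
    const struct timespec io_time = { 0, 1000000 };  /* 1 ms, stands in for poll()+writev() */

    pthread_mutex_lock(&c->iolock);
    while (c->writing)                        /* someone else is writing: wait our turn */
        pthread_cond_wait(&c->out_cond, &c->iolock);
    c->writing = 1;
    if (++c->active > c->max_active)
        c->max_active = c->active;
    pthread_mutex_unlock(&c->iolock);         /* other threads may take iolock meanwhile */

    nanosleep(&io_time, NULL);

    pthread_mutex_lock(&c->iolock);
    --c->active;
    c->writing = 0;
    ++c->written;
    pthread_cond_broadcast(&c->out_cond);     /* same role as _xcb_out_send's broadcast */
    pthread_mutex_unlock(&c->iolock);
}

static void *writer_thread(void *arg)
{
    for (int i = 0; i < 10; ++i)
        do_write(arg);
    return NULL;
}

/* Run nthreads writers at once; returns the maximum number of concurrent writers seen. */
static int run_writers(conn_t *c, int nthreads)
{
    pthread_t t[16];
    assert(nthreads > 0 && nthreads <= 16);
    for (int i = 0; i < nthreads; ++i) {
        int rc = pthread_create(&t[i], NULL, writer_thread, c);
        assert(rc == 0);
        (void) rc;
    }
    for (int i = 0; i < nthreads; ++i)
        pthread_join(t[i], NULL);
    return c->max_active;
}
```

With four writer threads, `run_writers(&conn, 4)` should report at most one writer at a time while all 40 writes complete. In libxcb a waiting thread returns from `_xcb_conn_wait` and the caller's loop retries, but the exclusion idea is the same.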

Getting the reply

```c
xcb_intern_atom_reply_t *
xcb_intern_atom_reply (xcb_connection_t         *c,
                       xcb_intern_atom_cookie_t  cookie  /**< */,
                       xcb_generic_error_t     **e)
{
    return (xcb_intern_atom_reply_t *) xcb_wait_for_reply(c, cookie.sequence, e);
}

void *xcb_wait_for_reply(xcb_connection_t *c, unsigned int request, xcb_generic_error_t **e)
{
    void *ret;
    if(e)
        *e = 0;
    if(c->has_error)
        return 0;

    pthread_mutex_lock(&c->iolock);
    ret = wait_for_reply(c, widen(c, request), e);
    pthread_mutex_unlock(&c->iolock);
    return ret;
}
```
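`xcb_wait_for_reply` receives the 32-bit sequence number stored in the cookie, while the connection counts requests with 64-bit counters, so `widen()` has to reconstruct the full number. Below is a sketch of that idea under the assumption that the cookie refers to a request less than 2^32 requests older than the newest one sent. `widen_seq` and its `last_sent` parameter are hypothetical; libxcb's own `widen()` takes the connection and reads the current request counter from it. The high 32 bits are borrowed from the newest request number, and if the result would lie in the future, it is moved back by one 2^32 epoch.

```c
#include <assert.h>
#include <stdint.h>

/* Reconstruct a 64-bit request number from its low 32 bits,
 * relative to last_sent (the newest request number issued so far). */
static uint64_t widen_seq(uint64_t last_sent, uint32_t seq32)
{
    uint64_t wide = (last_sent & ~(uint64_t) 0xFFFFFFFFu) | seq32;
    if (wide > last_sent) {                 /* cannot refer to a request not sent yet */
        assert(wide >= ((uint64_t) 1 << 32));
        wide -= (uint64_t) 1 << 32;         /* so it belongs to the previous epoch */
    }
    return wide;
}
```

For example, with `last_sent = 0x100000005`, a cookie carrying `0xFFFFFFF0` widens to `0xFFFFFFF0` (just before the wrap), while a cookie carrying `3` widens to `0x100000003`.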

```c
static void *wait_for_reply(xcb_connection_t *c, uint64_t request, xcb_generic_error_t **e)
{
    void *ret = 0;

    /* If this request has not been written yet, write it. */
    if(c->out.return_socket || _xcb_out_flush_to(c, request))
    {
        pthread_cond_t cond = PTHREAD_COND_INITIALIZER;
        reader_list reader;

        // Register a reader for this request
        insert_reader(&c->in.readers, &reader, request, &cond);

        while(!poll_for_reply(c, request, &ret, e))
            if(!_xcb_conn_wait(c, &cond, 0, 0))
                break;

        remove_reader(&c->in.readers, &reader);
        pthread_cond_destroy(&cond);
    }

    _xcb_in_wake_up_next_reader(c);
    return ret;
}
```
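`wait_for_reply` registers a reader entry holding the request number and a private condition variable, so whichever thread actually reads from the socket can wake exactly the thread waiting for that reply. The following is a simplified, self-contained model of that idea, not libxcb's code: the hypothetical `reply_arrived` plays the role of the reading thread delivering a reply, a small array stands in for libxcb's queue of pending replies, and each waiter sleeps on its own `pthread_cond_t` until its request has been answered. `wait_reply`, `waiter` and `demo` are likewise hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

#define MAX_REQ 64

/* One registered waiter, modelled on libxcb's reader_list entry. */
typedef struct reader {
    uint64_t        request;  /* request number this thread is waiting for */
    pthread_cond_t *cond;     /* the waiter's private condition variable */
    struct reader  *next;
} reader_t;

static pthread_mutex_t iolock = PTHREAD_MUTEX_INITIALIZER;
static reader_t *readers = NULL;        /* currently registered waiters */
static int replies[MAX_REQ];            /* received replies, indexed by request */
static int have_reply[MAX_REQ];         /* 1 once the reply for a request arrived */

/* Called by whichever thread reads a reply off the connection. */
static void reply_arrived(uint64_t request, int value)
{
    assert(request < MAX_REQ);
    pthread_mutex_lock(&iolock);
    replies[request] = value;
    have_reply[request] = 1;
    for (reader_t *r = readers; r; r = r->next)
        if (r->request == request)
            pthread_cond_signal(r->cond);   /* wake only the matching waiter */
    pthread_mutex_unlock(&iolock);
}

/* Block until the reply for 'request' is available (cf. wait_for_reply). */
static int wait_reply(uint64_t request)
{
    pthread_cond_t cond;
    reader_t self = { request, &cond, NULL };
    int value;

    assert(request < MAX_REQ);
    pthread_cond_init(&cond, NULL);
    pthread_mutex_lock(&iolock);
    self.next = readers;                    /* like insert_reader() */
    readers = &self;
    while (!have_reply[request])            /* like the poll_for_reply() loop */
        pthread_cond_wait(&cond, &iolock);
    value = replies[request];
    for (reader_t **p = &readers; *p; p = &(*p)->next)   /* like remove_reader() */
        if (*p == &self) {
            *p = self.next;
            break;
        }
    pthread_mutex_unlock(&iolock);
    pthread_cond_destroy(&cond);
    return value;
}

static void *waiter(void *arg)
{
    return (void *) (intptr_t) wait_reply((uint64_t) (uintptr_t) arg);
}

/* Start waiters for requests 1..3, deliver replies out of order, collect results. */
static void demo(int results[3])
{
    pthread_t t[3];
    for (uintptr_t i = 0; i < 3; ++i) {
        int rc = pthread_create(&t[i], NULL, waiter, (void *) (i + 1));
        assert(rc == 0);
        (void) rc;
    }
    reply_arrived(3, 30);
    reply_arrived(1, 10);
    reply_arrived(2, 20);
    for (int i = 0; i < 3; ++i) {
        void *res;
        pthread_join(t[i], &res);
        results[i] = (int) (intptr_t) res;
    }
}
```

Because a waiter checks the stored replies before sleeping, the result is correct regardless of whether a reply arrives before or after the waiter registers.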

```c
int _xcb_conn_wait(xcb_connection_t *c, pthread_cond_t *cond, struct iovec **vector, int *count)
{
    int ret;
    struct pollfd fd;

    /* If the thing I should be doing is already being done, wait for it. */
    if(count ? c->out.writing : c->in.reading)
    {
        pthread_cond_wait(cond, &c->iolock);
        return 1;
    }

    memset(&fd, 0, sizeof(fd));
    fd.fd = c->fd;
    fd.events = POLLIN;

    ++c->in.reading;

    if(count)
    {
        fd.events |= POLLOUT;
        ++c->out.writing;
    }

    pthread_mutex_unlock(&c->iolock);
    do {
        ret = poll(&fd, 1, -1);
        /* If poll() returns an event we didn't expect, such as POLLNVAL, treat
         * it as if it failed. */
        if(ret >= 0 && (fd.revents & ~fd.events))
        {
            ret = -1;
            break;
        }
    } while (ret == -1 && errno == EINTR);
    if(ret < 0)
    {
        _xcb_conn_shutdown(c, XCB_CONN_ERROR);
        ret = 0;
    }
    pthread_mutex_lock(&c->iolock);

    if(ret)
    {
        /* The code allows two threads to call select()/poll() at the same time.
         * First thread just wants to read, a second thread wants to write, too.
         * We have to make sure that we don't steal the reading thread's reply
         * and let it get stuck in select()/poll().
         * So a thread may read if either:
         * - There is no other thread that wants to read (the above situation
         *   did not occur).
         * - It is the reading thread (above situation occurred).
         */
        int may_read = c->in.reading == 1 || !count;
        if(may_read && (fd.revents & POLLIN) != 0)
            ret = ret && _xcb_in_read(c);

        if((fd.revents & POLLOUT) != 0)
            ret = ret && write_vec(c, vector, count);
    }

    if(count)
        --c->out.writing;
    --c->in.reading;

    return ret;
}
```
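The `poll()` loop in `_xcb_conn_wait` retries when interrupted by a signal (`EINTR`) and treats any event outside the requested set as a failure. `poll()` can report `POLLERR`, `POLLHUP` and `POLLNVAL` even when they were not requested, and `POLLNVAL` means the descriptor is not open. Here is a minimal stand-alone version of that loop, using pipes instead of the X connection; `wait_fd` is a hypothetical name, and unlike libxcb it returns on an unexpected event instead of breaking out of the loop, which has the same effect.

```c
#include <assert.h>
#include <errno.h>
#include <poll.h>
#include <unistd.h>   /* pipe(), write(), close() for the usage example */

/* Block until fd is readable (and/or writable if want_write is set),
 * retrying on EINTR like _xcb_conn_wait. Returns the revents mask,
 * or -1 on error or on an event that was not asked for. */
static int wait_fd(int fd, int want_write)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN, .revents = 0 };
    int ret;

    assert(fd >= 0);                               /* poll() ignores negative fds */
    if (want_write)
        pfd.events |= POLLOUT;
    do {
        ret = poll(&pfd, 1, -1);                   /* -1: no timeout */
        if (ret >= 0 && (pfd.revents & ~pfd.events))
            return -1;                             /* e.g. POLLNVAL, POLLERR, POLLHUP */
    } while (ret == -1 && errno == EINTR);         /* interrupted by a signal: retry */
    return ret < 0 ? -1 : pfd.revents;
}
```

A pipe with one unread byte reports `POLLIN`, while polling a descriptor that has already been closed yields `POLLNVAL`, which this loop turns into `-1` just as libxcb turns it into a connection error.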
