Artificial Intelligence: VGG16

import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # use only the first GPU (set before TensorFlow initializes the GPU)

import cv2
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, regularizers

resize = 224

path = "D:/机器学习/trains/catVSdog/dogs-vs-cats-redux-kernels-edition/train/train"

def load_data():
    # The Kaggle "train" folder contains cat.<i>.jpg and dog.<i>.jpg.
    # Odd indices load a dog (label 1), even indices a cat (label 0),
    # so both splits are balanced: images 0-2999 for training, 3000-5999 for testing.
    # uint8 is enough for 0-255 pixel values and needs 4x less memory than int32.
    train_data = np.empty((3000, resize, resize, 3), dtype="uint8")
    train_label = np.empty((3000,), dtype="int32")
    test_data = np.empty((3000, resize, resize, 3), dtype="uint8")
    test_label = np.empty((3000,), dtype="int32")

    for i in range(3000):
        if i % 2:
            train_data[i] = cv2.resize(cv2.imread(path + '/' + 'dog.' + str(i) + '.jpg'), (resize, resize))
            train_label[i] = 1
        else:
            train_data[i] = cv2.resize(cv2.imread(path + '/' + 'cat.' + str(i) + '.jpg'), (resize, resize))
            train_label[i] = 0

    for i in range(3000, 6000):
        if i % 2:
            test_data[i - 3000] = cv2.resize(cv2.imread(path + '/' + 'dog.' + str(i) + '.jpg'), (resize, resize))
            test_label[i - 3000] = 1
        else:
            test_data[i - 3000] = cv2.resize(cv2.imread(path + '/' + 'cat.' + str(i) + '.jpg'), (resize, resize))
            test_label[i - 3000] = 0

    return train_data, train_label, test_data, test_label
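To sanity-check the indexing in `load_data()` without reading any images, the short sketch below reproduces its file-name/label rule and estimates how much memory one image array needs at different dtypes. The helper `expected_file` is hypothetical (it is not part of the original code) and assumes the `cat.<i>.jpg` / `dog.<i>.jpg` naming used above.

```python
RESIZE = 224  # same value as `resize` in the script above
N = 3000      # images per split, as in load_data()


def expected_file(i):
    """Hypothetical helper: the (file name, label) pair load_data() reads for index i."""
    if i % 2:
        return f"dog.{i}.jpg", 1  # odd index -> dog, label 1
    return f"cat.{i}.jpg", 0      # even index -> cat, label 0


train = [expected_file(i) for i in range(N)]
test = [expected_file(i) for i in range(N, 2 * N)]

# Both splits are balanced and share no file.
print(sum(label for _, label in train), sum(label for _, label in test))  # 1500 1500
print(len(set(train) & set(test)))                                        # 0

# Bytes per element: uint8 = 1, int32 = 4, float32 = 4.
ITEMSIZE = {"uint8": 1, "int32": 4, "float32": 4}
for dtype, nbytes in ITEMSIZE.items():
    gib = N * RESIZE * RESIZE * 3 * nbytes / 2**30
    print(f"{dtype}: {gib:.2f} GiB per (3000, 224, 224, 3) array")
```

An int32 or float32 array of this shape takes four times the memory of a uint8 one, and `astype('float32')` makes a copy, so both versions exist briefly during the conversion.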

def vgg16():
    # VGG16-style network: 13 conv layers + 3 dense layers, with
    # BatchNormalization and Dropout, ending in a 2-way softmax (cat / dog).
    weight_decay = 0.0005  # L2 regularization factor

    # layer1
    model = keras.Sequential()
    model.add(layers.Conv2D(64, (3, 3), padding='same',
                            input_shape=(224, 224, 3), kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.3))

    # layer2
    model.add(layers.Conv2D(64, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))

    # layer3
    model.add(layers.Conv2D(128, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))

    # layer4
    model.add(layers.Conv2D(128, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))

    # layer5
    model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))

    # layer6
    model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))

    # layer7
    model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))

    # layer8
    model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))

    # layer9
    model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))

    # layer10
    model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))

    # layer11
    model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))

    # layer12
    model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))

    # layer13
    model.add(layers.Conv2D(512, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))
    model.add(layers.Dropout(0.5))

    # layer14
    model.add(layers.Flatten())
    model.add(layers.Dense(512, kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())

    # layer15
    model.add(layers.Dense(512, kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())

    # layer16
    model.add(layers.Dropout(0.5))
    model.add(layers.Dense(2))
    model.add(layers.Activation('softmax'))

    return model

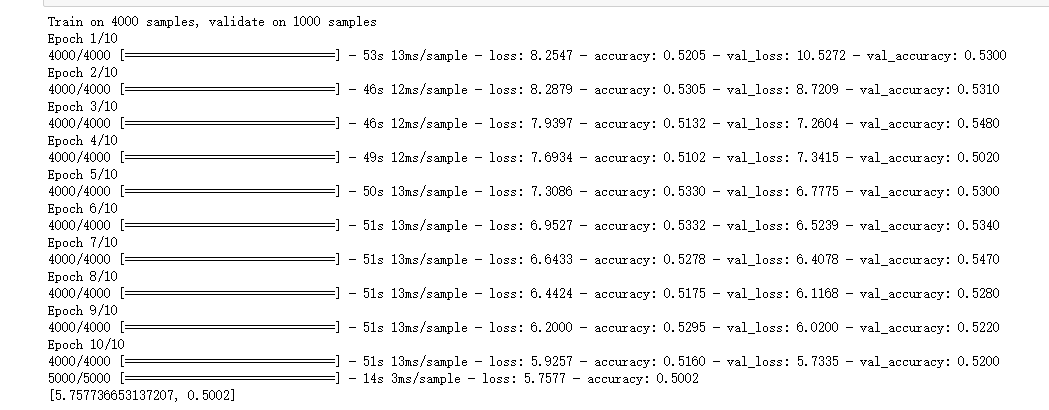
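Most of this model's parameters sit in the first Dense layer, and the reason is the feature-map size that reaches `Flatten()`. A small pure-Python sketch (no TensorFlow needed) traces it through the conv stack above: `padding='same'` keeps every 3×3 convolution size-preserving and each 2×2 `MaxPooling2D` halves height and width, so 224 becomes 7 after five pools. `VGG16_CFG` and `vgg16_shapes` are illustrative names, not part of the original code, and the counts cover Conv2D/Dense weights plus biases only (BatchNormalization parameters are left out).

```python
# Illustrative config mirroring the 13 Conv2D layers in vgg16() above:
# integers are output channels, "M" marks a 2x2 MaxPooling2D.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_shapes(input_size=224, in_channels=3, cfg=VGG16_CFG):
    """Return (final height/width, final channels, Conv2D weight+bias count)."""
    size, channels, conv_params = input_size, in_channels, 0
    for item in cfg:
        if item == "M":
            size //= 2  # 2x2 pooling with stride 2 halves height and width
        else:
            # 3x3 kernel over all input channels, plus one bias per filter;
            # padding='same' leaves the spatial size unchanged
            conv_params += 3 * 3 * channels * item + item
            channels = item
    return size, channels, conv_params


size, channels, conv_params = vgg16_shapes()
flat = size * size * channels   # length of the Flatten() output
dense1 = flat * 512 + 512       # weights + biases of the first Dense(512)
print(size, channels, flat)     # 7 512 25088
print(conv_params)              # 14714688
print(dense1)                   # 12845568
```

So the first Dense(512) alone holds about 12.8 M parameters, close to the roughly 14.7 M of all 13 convolutions together; `model.summary()` should list the same per-layer Conv2D and Dense counts.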

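if __name__ == '__main__':
    train_data, train_label, test_data, test_label = load_data()

    # Convert to float32 and scale pixel values from [0, 255] to [0, 1]
    train_data = train_data.astype('float32') / 255.0
    test_data = test_data.astype('float32') / 255.0

    # One-hot encode the labels to match the 2-unit softmax output
    train_label = keras.utils.to_categorical(train_label, 2)
    test_label = keras.utils.to_categorical(test_label, 2)

    # Define the training procedure and set the hyperparameters
    model = vgg16()

    # SGD optimizer. Newer Keras optimizers (TensorFlow 2.11+) no longer accept
    # `decay` and have deprecated `lr`; InverseTimeDecay with decay_steps=1
    # computes the same rule: lr = 0.01 / (1 + 1e-6 * step).
    lr_schedule = tf.keras.optimizers.schedules.InverseTimeDecay(
        initial_learning_rate=0.01, decay_steps=1, decay_rate=1e-6)
    sgd = tf.keras.optimizers.SGD(learning_rate=lr_schedule, momentum=0.9, nesterov=True)

    model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

    history = model.fit(train_data, train_label,
                        batch_size=20,
                        epochs=10,
                        validation_split=0.2,  # hold out the last 1/5 of the training set (taken before shuffling) for validation
                        shuffle=True)

    scores = model.evaluate(test_data, test_label, verbose=1)  # [test loss, test accuracy]

    model.save('vgg16dogcat.h5')  # single HDF5 file

    print(scores)

    model.save('./testpy')  # SavedModel directory (TF 2.x before Keras 3, which requires a .keras or .h5 extension)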
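The learning-rate decay of 1e-6 used for SGD above follows the legacy Keras rule lr_t = lr_0 / (1 + decay · t), where t counts batch updates, not epochs. A quick calculation with the arguments passed to `fit` shows how little it changes the learning rate in this run: 3000 images with `validation_split=0.2` leave 2400 for training, which at `batch_size=20` gives 120 updates per epoch, or 1200 over 10 epochs. The function name `legacy_decayed_lr` is only for illustration.

```python
import math


def legacy_decayed_lr(lr0, decay, step):
    """Learning rate after `step` batch updates under lr0 / (1 + decay * step)."""
    return lr0 / (1.0 + decay * step)


n_train = 3000 * 4 // 5                     # validation_split=0.2 keeps 4/5 for training
steps_per_epoch = math.ceil(n_train / 20)   # batch_size=20
total_steps = steps_per_epoch * 10          # epochs=10

final_lr = legacy_decayed_lr(0.01, 1e-6, total_steps)
print(n_train, steps_per_epoch, total_steps)  # 2400 120 1200
print(f"{final_lr:.6f}")                       # 0.009988
```

Over this run the rate only falls from 0.01 to about 0.00999, so with 10 epochs the decay term has almost no effect; a noticeably decaying rate would need a larger decay value or a different schedule.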

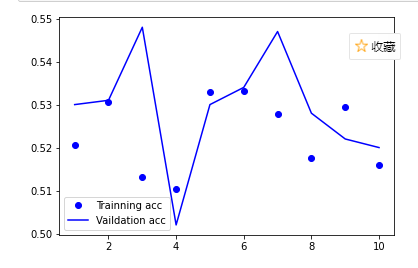

acc = history.history['accuracy']  # training accuracy per epoch
val_acc = history.history['val_accuracy']  # validation accuracy per epoch
loss = history.history['loss']  # training loss per epoch
val_loss = history.history['val_loss']  # validation loss per epoch

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'bo', label='Training acc')  # x-axis: epoch, y-axis: training accuracy (dots)
plt.plot(epochs, val_acc, 'b', label='Validation acc')  # x-axis: epoch, y-axis: validation accuracy (line)
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.legend()  # draw the legend showing what each curve represents
plt.show()
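The plotting code above reads `loss` and `val_loss` but only draws the accuracy curves. A minimal sketch that plots both metrics side by side, assuming the `History.history` dict has the four keys used above (it does when the model is compiled with `metrics=['accuracy']` and trained with `validation_split`); `plot_history` is a hypothetical helper name.

```python
import matplotlib.pyplot as plt


def plot_history(hist):
    """Plot accuracy and loss curves from a Keras `History.history` dict."""
    epochs = range(1, len(hist['accuracy']) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(10, 4))

    # Left panel: accuracy (dots = training, line = validation), same style as above
    ax_acc.plot(epochs, hist['accuracy'], 'bo', label='Training acc')
    ax_acc.plot(epochs, hist['val_accuracy'], 'b', label='Validation acc')
    ax_acc.set_xlabel('Epoch')
    ax_acc.set_title('Accuracy')
    ax_acc.legend()

    # Right panel: loss, same styling
    ax_loss.plot(epochs, hist['loss'], 'bo', label='Training loss')
    ax_loss.plot(epochs, hist['val_loss'], 'b', label='Validation loss')
    ax_loss.set_xlabel('Epoch')
    ax_loss.set_title('Loss')
    ax_loss.legend()

    fig.tight_layout()
    return fig
```

Call it as `plot_history(history.history)` followed by `plt.show()`. A validation loss that rises while the training loss keeps falling is the usual sign of overfitting, which the accuracy plot alone can hide.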

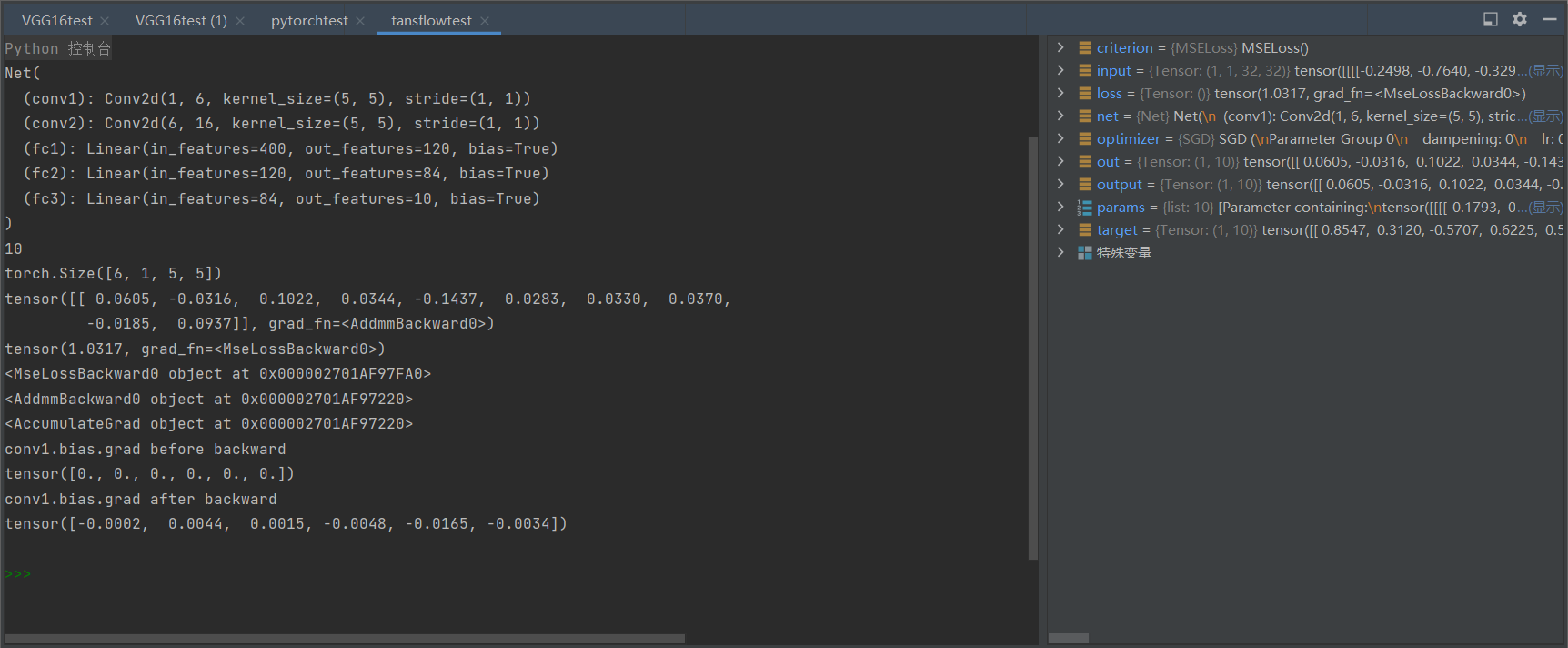

import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        # 1 input image channel, 6 output channels, 5x5 square convolution
        # kernel
        self.conv1 = nn.Conv2d(1, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        # an affine operation: y = Wx + b
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        # Max pooling over a (2, 2) window
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        # If the size is a square you can only specify a single number
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features


net = Net()
print(net)
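The `16 * 5 * 5` in `fc1` is fixed by the input size. This `Net` appears to follow the LeNet-style example from the official PyTorch tutorial, which feeds a single-channel 32×32 image. Using the standard output-size formula for convolution and pooling, out = floor((in + 2·padding − kernel) / stride) + 1, the sketch below traces 32 → 28 → 14 → 10 → 5. `conv_out` is a hypothetical helper, and the 32×32 input is an assumption.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a conv or pooling layer (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32                                  # assumed 32x32 input
size = conv_out(size, kernel=5)            # conv1, 5x5 kernel -> 28
size = conv_out(size, kernel=2, stride=2)  # 2x2 max pool      -> 14
size = conv_out(size, kernel=5)            # conv2, 5x5 kernel -> 10
size = conv_out(size, kernel=2, stride=2)  # 2x2 max pool      -> 5
print(size, 16 * size * size)              # 5 400
```

With that input the network runs as `net(torch.randn(1, 1, 32, 32))` and returns a tensor of shape `(1, 10)`; a different input size would change the flattened length and break `fc1`.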

Zhejiang Public Security Internet Registration No. 33010602011771 (浙公网安备 33010602011771号)
