102302133陈佳昕作业3

作业①:

要求:指定一个网站,爬取这个网站中的所有的所有图片,例如:中国气象网(http://www.weather.com.cn)。实现单线程和多线程的方式爬取。

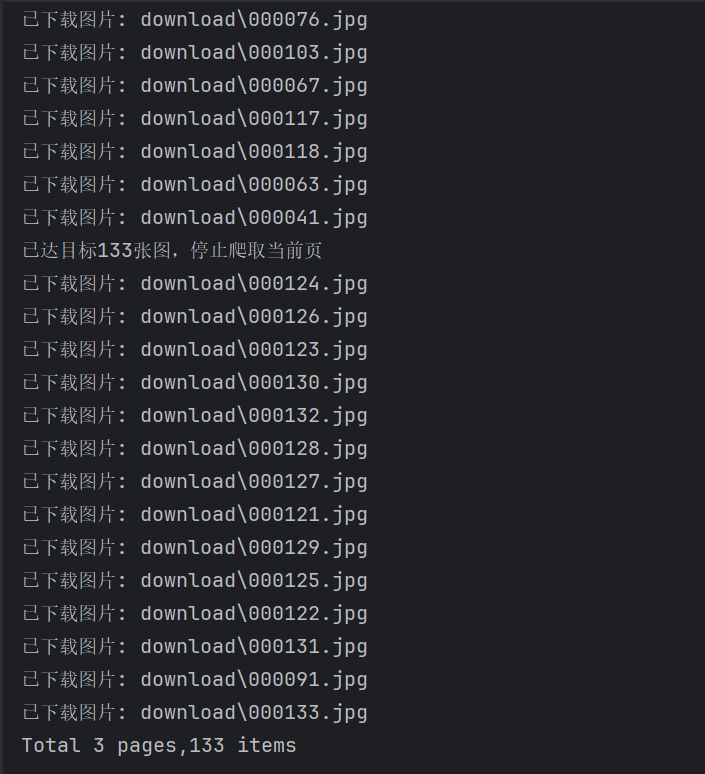

–务必控制总页数(学号尾数2位)、总下载的图片数量(尾数后3位)等限制爬取的措施。

输出信息: 将下载的Url信息在控制台输出,并将下载的图片存储在images子文件中,并给出截图。

Gitee文件夹链接:

单线程爬取:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/1/1.py

多线程爬取:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/1/2.py

1.单线程爬取

(1)代码

import urllib.request

from bs4 import BeautifulSoup

import os

def downloadImage(ID, src, tExt):

try:

imgName = 'download_bag\\' + ID + '.' + tExt

urllib.request.urlretrieve(src, imgName)

print(f"已下载图片: {imgName}")

except Exception as err:

print(err)

def initializeDownload():

if not os.path.exists('download_bag'):

os.mkdir('download_bag')

fs = os.listdir('download_bag')

if fs:

for f in fs:

os.remove('download_bag\\' + f)

def spider(url):

global page, count, max_img_count

if count >= max_img_count:

return

page = page + 1

print('Page', page, url)

try:

req = urllib.request.Request(url, headers=headers)

resp = urllib.request.urlopen(req)

html = resp.read().decode('gbk')

soup = BeautifulSoup(html, 'lxml')

lis = soup.select("ul[class='bigimg cloth_shoplist'] li[class^='line']")

for li in lis:

if count >= max_img_count:

print(f"已达目标{max_img_count}张图,停止爬取当前页")

break

img = li.select_one('img')

src = ''

tExt = ''

if img:

# 优先使用 data-original 属性,如果没有则使用 src

if img.get('data-original'):

src = urllib.request.urljoin(url, img['data-original'])

else:

src = urllib.request.urljoin(url, img['src'])

p = src.rfind('.')

if p >= 0:

tExt = src[p + 1:]

if tExt:

count = count + 1

ID = '%06d' % (count)

downloadImage(ID, src, tExt)

if count < max_img_count:

nextUrl = ''

links = soup.select("div[class='paging'] li[class='next'] a")

for link in links:

href = link['href']

nextUrl = urllib.request.urljoin(url, href)

if nextUrl:

spider(nextUrl)

except Exception as err:

print(err)

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/141.0.0.0 Safari/537.36 Edg/141.0.0.0'}

initializeDownload()

page = 0

count = 0

max_img_count = 133

spider(url='https://search.dangdang.com/?key=%CA%E9%B0%FC&category_id=10009684#J_tab')

print('Total %d pages,%d items' % (page, count))

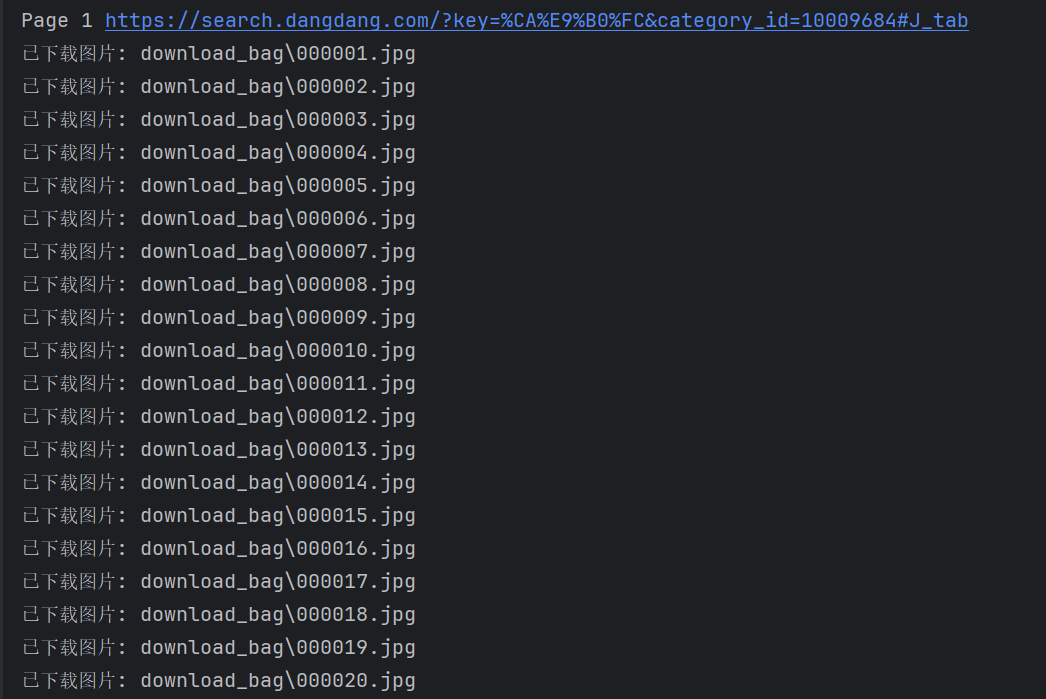

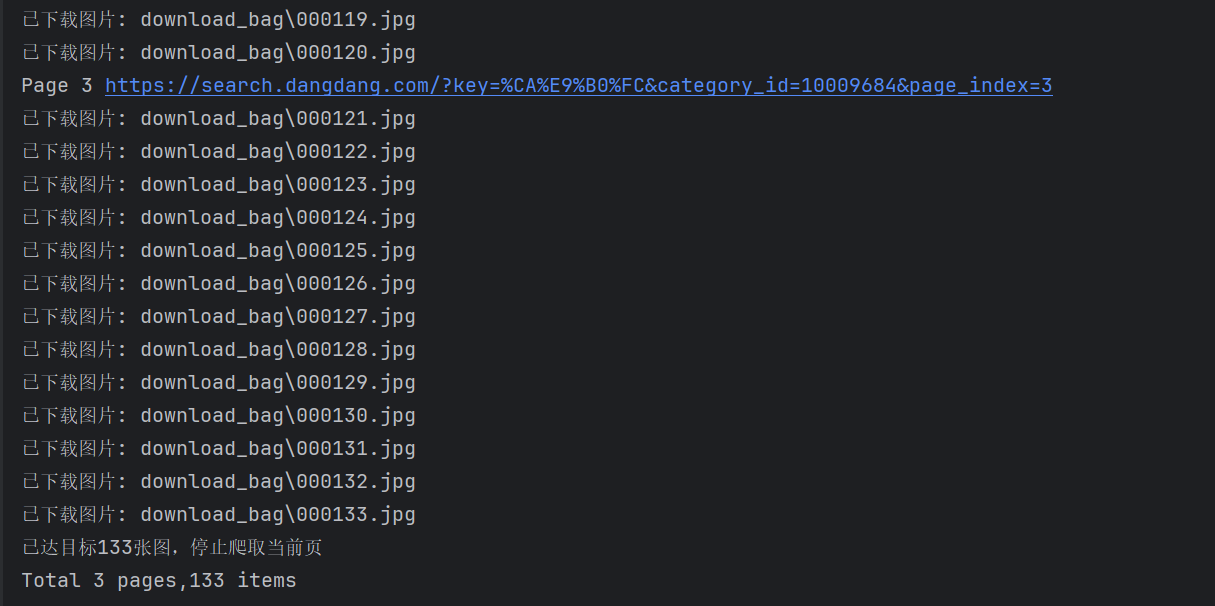

(2)结果

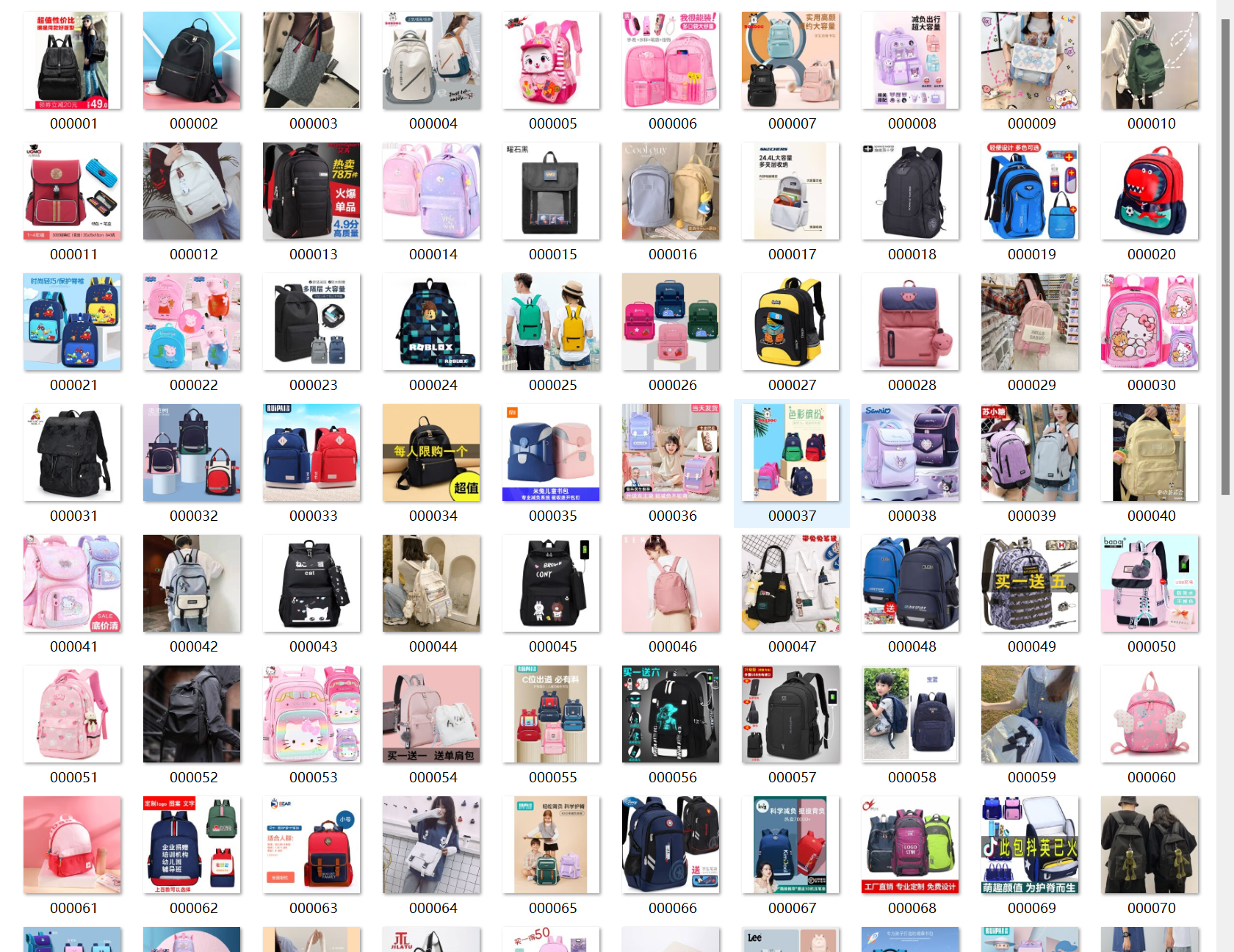

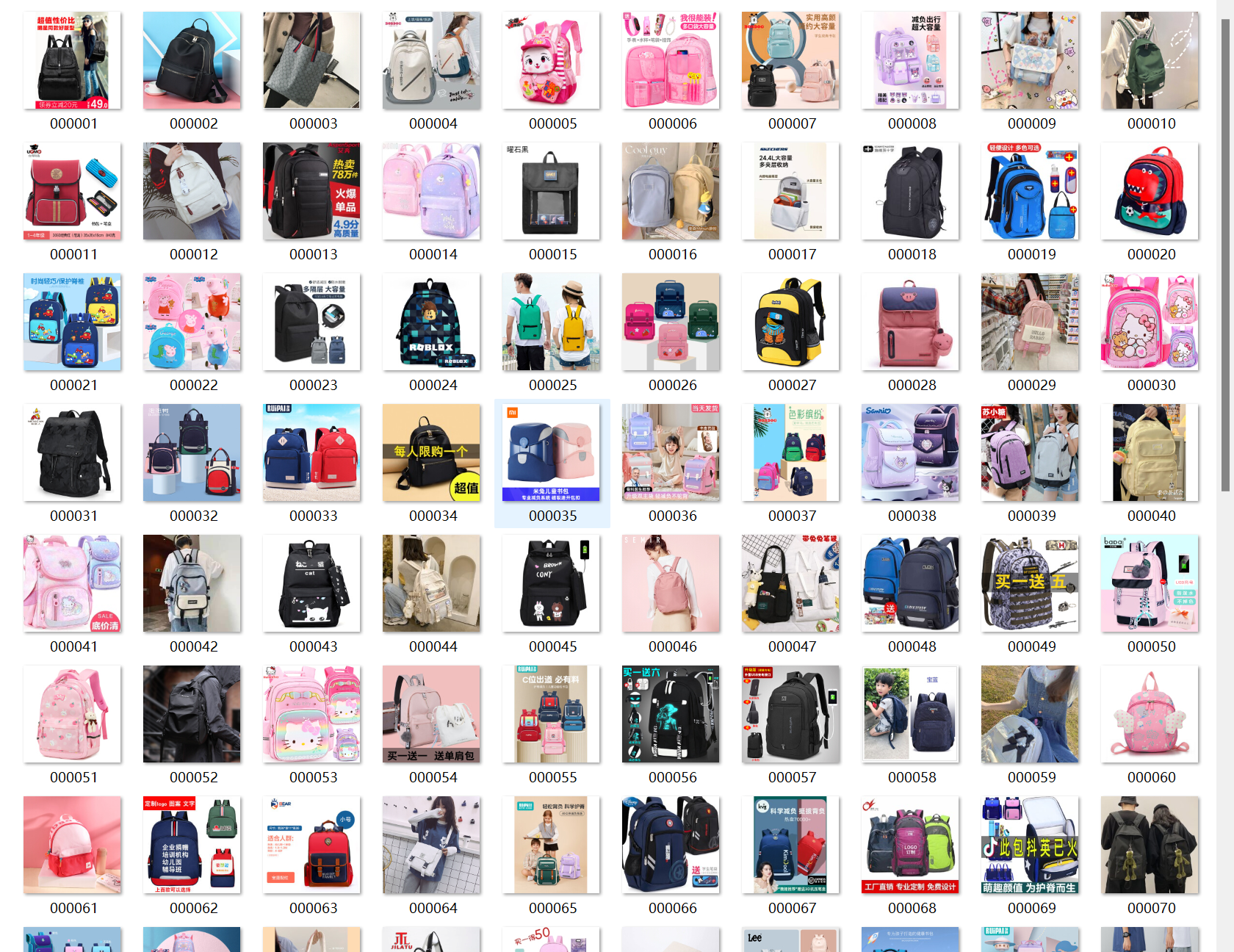

(3)爬取的图片

2.多线程爬取

(1)代码

import urllib.request

from bs4 import BeautifulSoup

import os

import threading

def downloadImage(ID, src, tExt):

try:

imgName = 'download\\' + ID + '.' + tExt

urllib.request.urlretrieve(src, imgName)

print(f"已下载图片: {imgName}")

except Exception as err:

print(err)

def initializeDownload():

if not os.path.exists('download'):

os.mkdir('download')

fs = os.listdir('download')

if fs:

for f in fs:

os.remove('download\\' + f)

def spider(url):

global page, count, threads, max_img_count

if count >= max_img_count:

return

page = page + 1

print('Page', page, url)

try:

req = urllib.request.Request(url, headers=headers)

resp = urllib.request.urlopen(req)

html = resp.read().decode('gbk')

soup = BeautifulSoup(html, 'lxml')

lis = soup.select("ul[class='bigimg cloth_shoplist'] li[class^='line']")

for li in lis:

if count >= max_img_count:

print(f"已达目标{max_img_count}张图,停止爬取当前页")

break

img = li.select_one('img')

src = ''

tExt = ''

if img:

# 优先使用 data-original 属性,如果没有则使用 src

if img.get('data-original'):

src = urllib.request.urljoin(url, img['data-original'])

else:

src = urllib.request.urljoin(url, img['src'])

p = src.rfind('.') # 找到最后一个"."的索引

if p >= 0:

tExt = src[p + 1:]

if tExt:

count = count + 1

ID = '%06d' % (count)

T = threading.Thread(target=downloadImage, args=[ID, src, tExt])

T.start()

threads.append(T)

if count < max_img_count:

nextUrl = ''

links = soup.select("div[class='paging'] li[class='next'] a")

for link in links:

href = link['href']

nextUrl = urllib.request.urljoin(url, href)

if nextUrl:

spider(nextUrl)

except Exception as err:

print(err)

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/141.0.0.0 Safari/537.36 Edg/141.0.0.0'}

initializeDownload()

threads = []

page = 0

count = 0

max_img_count = 133

spider(url='https://search.dangdang.com/?key=%CA%E9%B0%FC&category_id=10009684#J_tab')

for T in threads:

T.join()

print('Total %d pages,%d items' % (page, count))

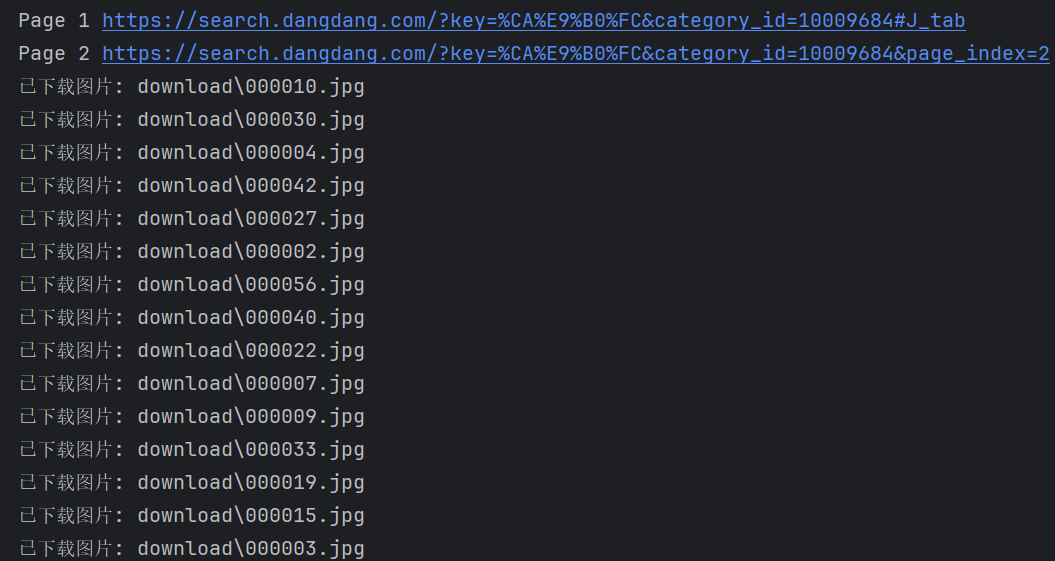

(2)结果

(3)爬取的图片

3.心得体会

通过单线程与多线程爬取图片的实践,我清晰感受到多线程爬取提高的效率。

作业②

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取股票相关信息。

候选网站:东方财富网:https://www.eastmoney.com/

输出信息:MySQL数据库存储和输出格式如下:

| 股票代码 | 股票名称 | 最新报价 | 涨跌幅 | 涨跌额 | 成交量 | 成交额 | 振幅 | 最高 | 最低 | 今开 | 昨收 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 688093 | N世华 | 28.47 | 10.92 | 26.13万 | 7.6亿 | 22.34 | 32.0 | 28.08 | 30.20 | 17.55 |

表头英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计

Gitee文件夹链接:

StockItem类:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/2/1.py

Stock_Spider类:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/2/2.py

pipelines:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/2/3.py

middlewares:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/2/4.py

settings:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/2/5.py

1.代码

(1)StockItem类

import scrapy

class StockItem(scrapy.Item):

id = scrapy.Field()

stock_code = scrapy.Field()

stock_name = scrapy.Field()

current_price = scrapy.Field()

change_percent = scrapy.Field()

change_amount = scrapy.Field()

volume = scrapy.Field()

amplitude = scrapy.Field()

highest_price = scrapy.Field()

lowest_price = scrapy.Field()

open = scrapy.Field()

previous_close = scrapy.Field()

pass

(2)Stock_Spider类

import scrapy

from demo.items import StockItem

class Stock_Spider(scrapy.Spider):

name = 'stock_spider'

allowed_domains = ['www.eastmoney.com']

start_urls = ['https://quote.eastmoney.com/center/gridlist.html#hs_a_board']

def parse(self,response):

try:

stocks = response.xpath("//tbody//tr")

for stock in stocks[1:]:

item = StockItem()

item["stock_code"] = stock.xpath(".//td[2]//text()").extract_first()

item["stock_name"] = stock.xpath(".//td[3]//text()").extract_first()

item["current_price"] = stock.xpath(".//td[5]//text()").extract_first()

item["change_percent"] = stock.xpath(".//td[6]//text()").extract_first()

item["change_amount"] = stock.xpath(".//td[7]//text()").extract_first()

item["volume"] = stock.xpath(".//td[8]//text()").extract_first()

item["amplitude"] = stock.xpath(".//td[10]//text()").extract_first()

item["highest_price"] = stock.xpath(".//td[11]//text()").extract_first()

item["lowest_price"] = stock.xpath(".//td[12]//text()").extract_first()

item["open"] = stock.xpath(".//td[13]//text()").extract_first()

item["previous_close"] = stock.xpath(".//td[14]//text()").extract_first()

yield item

except Exception as err:

print(err)

(3)pipelines.py

import sqlite3

class StockPipeline(object):

def open_spider(self, spider):

print("open_spider")

self.conn = sqlite3.connect('stocks.db')

self.cursor = self.conn.cursor()

self.cursor.execute("DROP TABLE IF EXISTS stocks")

sql = """

CREATE TABLE stocks

(

id INTEGER PRIMARY KEY AUTOINCREMENT,

stock_code VARCHAR(255),

stock_name VARCHAR(255),

current_price VARCHAR(255),

change_percent VARCHAR(255),

change_amount VARCHAR(255),

volume VARCHAR(255),

amplitude VARCHAR(255),

highest_price VARCHAR(255),

lowest_price VARCHAR(255),

open VARCHAR(255),

previous_close VARCHAR(255)

)

"""

self.cursor.execute(sql)

self.conn.commit()

print("数据库表创建成功")

def close_spider(self, spider):

print("close_spider")

self.conn.commit()

self.conn.close()

def process_item(self, item, spider):

stock_code = item.get('stock_code', '')

stock_name = item.get('stock_name', '')

current_price = item.get('current_price', '')

change_percent = item.get('change_percent', '')

change_amount = item.get('change_amount', '')

volume = item.get('volume', '')

amplitude = item.get('amplitude', '')

highest_price = item.get('highest_price', '')

lowest_price = item.get('lowest_price', '')

open_price = item.get('open', '')

previous_close = item.get('previous_close', '')

try:

sql = """INSERT INTO stocks

(stock_code, stock_name, current_price, change_percent, change_amount,

volume, amplitude, highest_price, lowest_price, open, previous_close)

VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)"""

self.cursor.execute(sql, [stock_code, stock_name, current_price, change_percent, change_amount,

volume, amplitude, highest_price, lowest_price, open_price, previous_close])

self.conn.commit()

print(f"成功插入数据: {stock_code} {stock_name}")

except Exception as err:

print(f"插入数据失败: {err}")

return item

(4)middlewares.py

import time

from selenium import webdriver

from scrapy.http import HtmlResponse

class SeleniumMiddleware:

def process_request(self, request, spider):

driver = webdriver.Edge()

try:

driver.get(request.url)

time.sleep(5)

data = driver.page_source

finally:

driver.quit()

return HtmlResponse(url=request.url, body=data.encode('utf-8'), encoding='utf-8', request=request)

(5)settings.py

ITEM_PIPELINES = {

"demo.pipelines.StockPipeline": 300,

}

DOWNLOADER_MIDDLEWARES = {

'demo.middlewares.SeleniumMiddleware': 543,

}

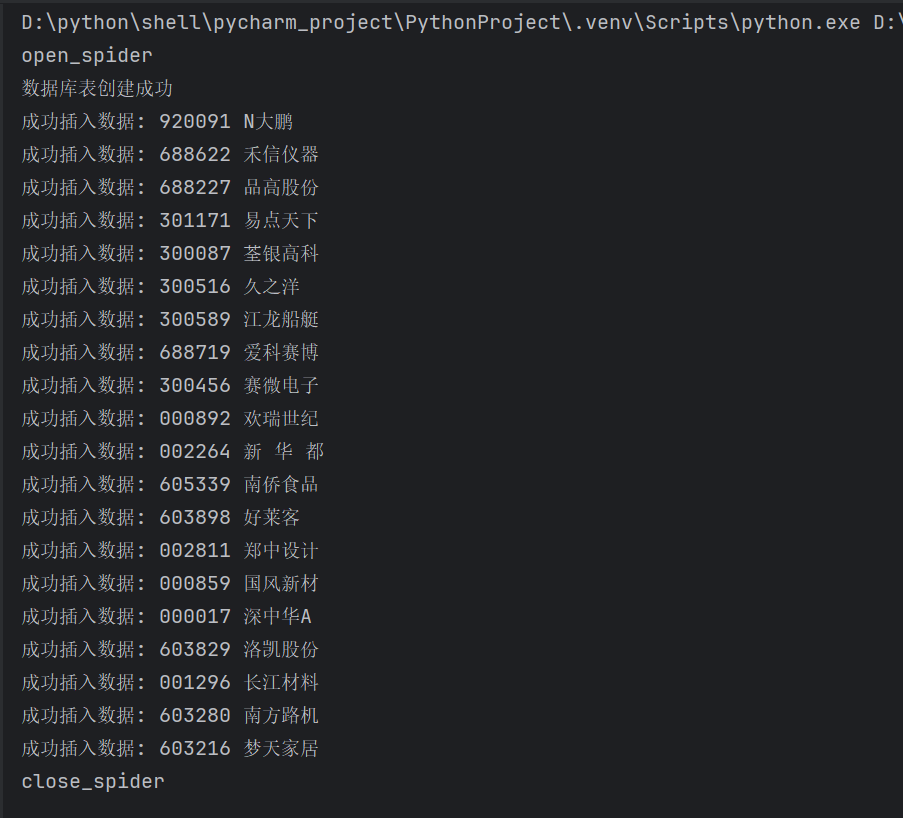

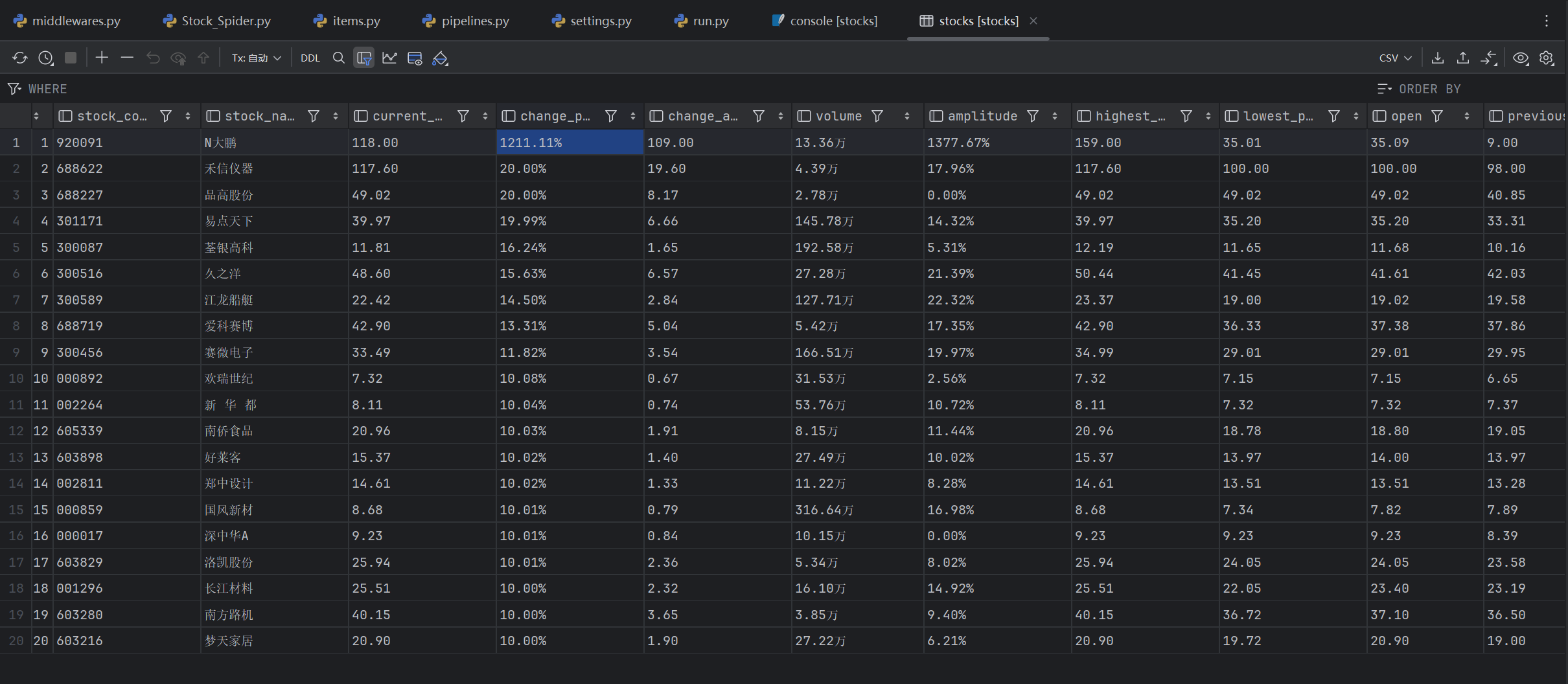

2.结果

3.心得体会

起初,爬取不到数据,后来发现股票数据是通过JavaScript动态加载的,直接访问页面获取不到数据,所以结合了Selenium来爬取。通过实验,我深刻认识到高效数据采集系统的构建不仅需要精准的页面解析能力,更需注重数据管道的完整性与稳定性。

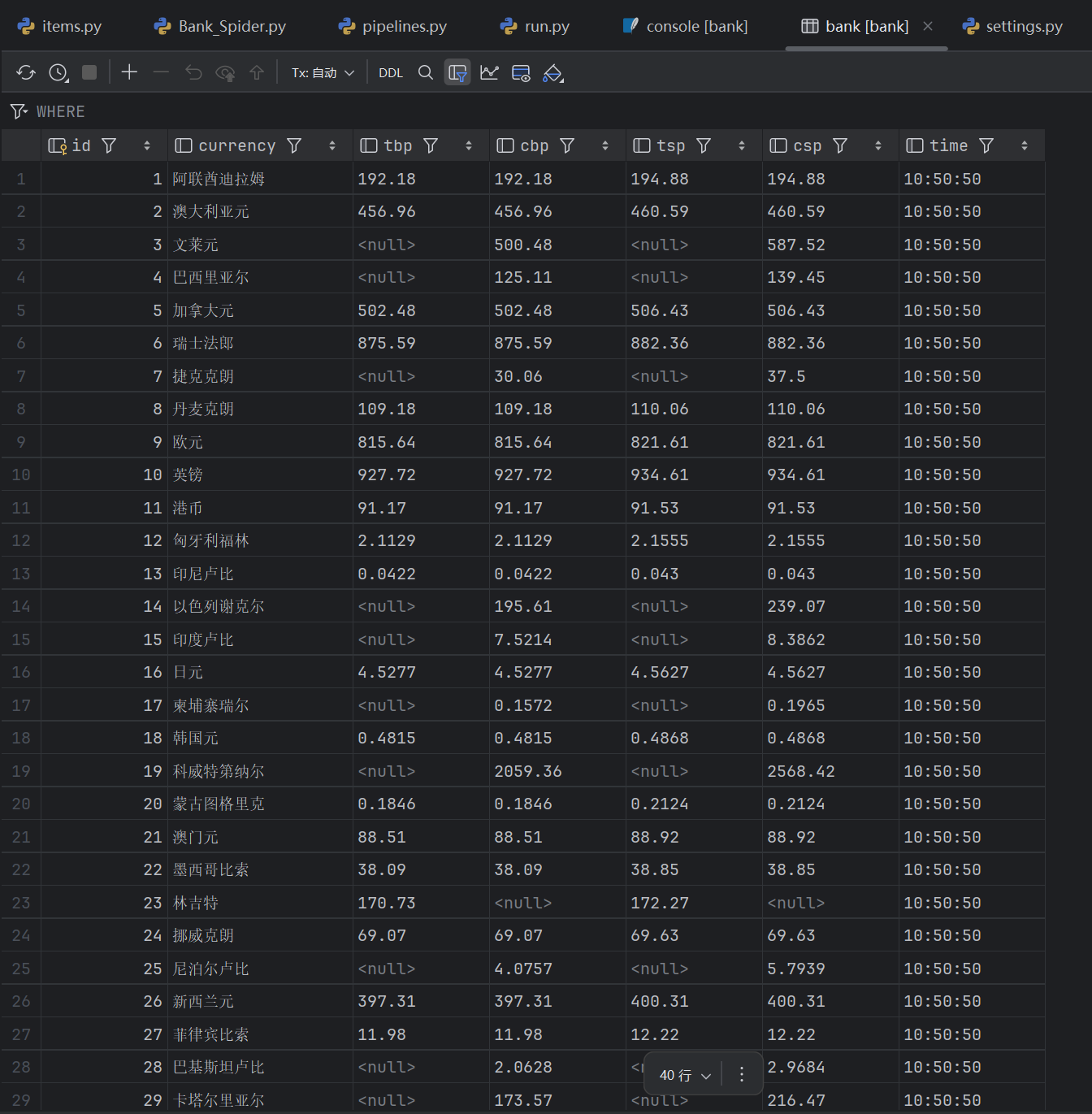

作业③:

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

候选网站:中国银行网:https://www.boc.cn/sourcedb/whpj/

输出信息:

| Currency | TBP | CBP | TSP | CSP | Time |

|---|---|---|---|---|---|

| 阿联酋迪拉姆 | 198.58 | 192.31 | 199.98 | 206.59 | 11:27:14 |

Gitee文件夹链接:

BankItem类:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/3/1.py

Bank_Spider类:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/3/2.py

pipelines:https://gitee.com/chen-jiaxin_fzu/2025_crawl_project/blob/master/作业3/3/3.py

1.代码

(1)BankItem类

import scrapy

class BankItem(scrapy.Item):

id = scrapy.Field()

currency = scrapy.Field()

tbp = scrapy.Field()

cbp = scrapy.Field()

tsp = scrapy.Field()

csp = scrapy.Field()

time = scrapy.Field()

pass

(2)Bank_Spider类

import scrapy

from demo.items import BankItem

class Bank_Spider(scrapy.Spider):

name = "bank_spider"

allowed_domains = ["www.boc.cn"]

start_urls = ["https://www.boc.cn/sourcedb/whpj/"]

def parse(self, response):

try:

rows = response.xpath('//table//tr')

for row in rows[2:-2]:

item = BankItem()

item['currency'] = row.xpath("./td[1]//text()").extract_first()

item['tbp'] = row.xpath("./td[2]//text()").extract_first()

item['cbp'] = row.xpath("./td[3]//text()").extract_first()

item['tsp'] = row.xpath("./td[4]//text()").extract_first()

item['csp'] = row.xpath("./td[5]//text()").extract_first()

item['time'] = row.xpath("./td[8]//text()").extract_first()

yield item

except Exception as err:

print(err)

(3)pipelines.py

import sqlite3

class BankPipeline(object):

def __init__(self):

self.id_counter = 1

def open_spider(self, spider):

print("open_spider")

self.conn = sqlite3.connect('bank.db')

self.cursor = self.conn.cursor()

# 如果表存在则删除

self.cursor.execute("DROP TABLE IF EXISTS bank")

# 创建表

sql = """

CREATE TABLE bank

(

id INTEGER PRIMARY KEY AUTOINCREMENT,

currency VARCHAR(255),

tbp VARCHAR(255),

cbp VARCHAR(255),

tsp VARCHAR(255),

csp VARCHAR(255),

time VARCHAR(255)

)

"""

self.cursor.execute(sql)

self.conn.commit()

print("数据库表创建成功")

def close_spider(self, spider):

print("close_spider")

self.conn.commit()

self.conn.close()

def process_item(self, item, spider):

currency = item.get('currency', '')

tbp = item.get('tbp', '')

cbp = item.get('cbp', '')

tsp = item.get('tsp', '')

csp = item.get('csp', '')

time = item.get('time', '')

try:

sql = "INSERT INTO bank (currency, tbp, cbp, tsp, csp, time) VALUES (?, ?, ?, ?, ?, ?)"

self.cursor.execute(sql, [currency, tbp, cbp, tsp, csp, time])

self.conn.commit()

print(f"成功插入数据: {currency}")

except Exception as err:

print(f"插入数据失败: {err}")

return item

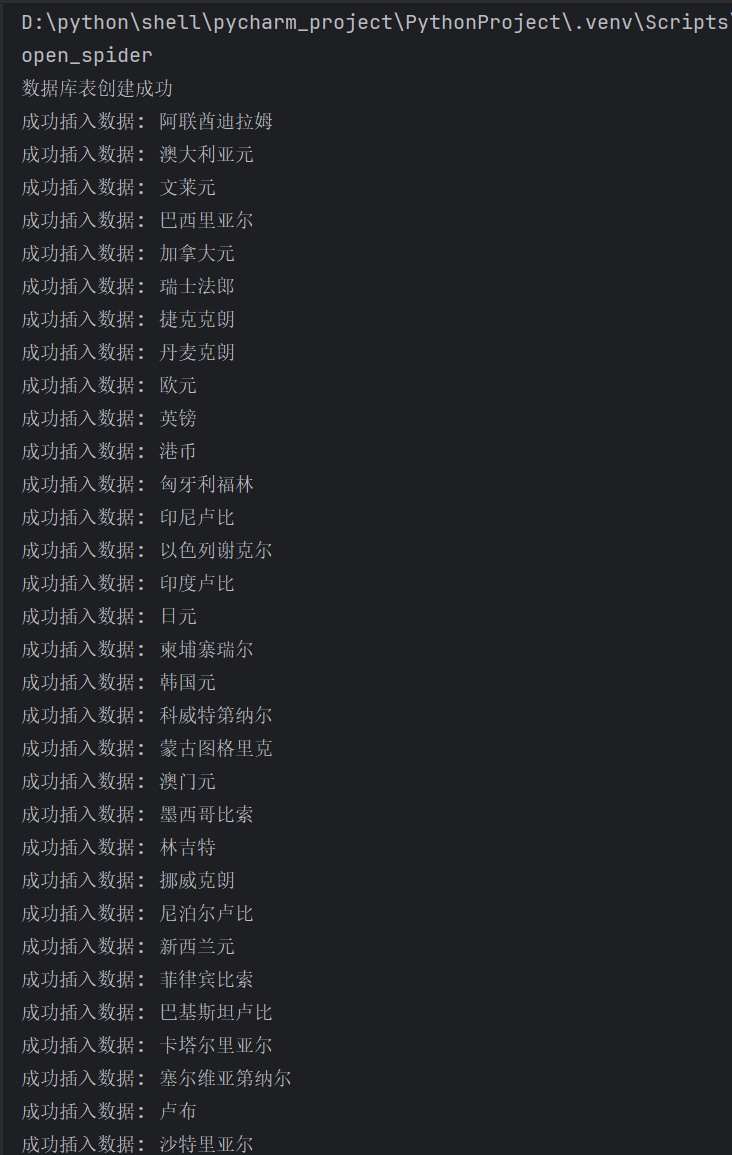

2.结果

3.心得体会

通过本次基于Scrapy框架结合XPath解析与MySQL数据库的外汇数据爬取实践,我深刻体会到数据采集系统中技术组件协同工作的重要性——Scrapy的Item类为数据字段提供了标准化容器,确保了数据结构的清晰性与可维护性;XPath选择器的精准设计直接决定了表格数据的提取效率,而Pipeline机制则通过分层式数据处理流水线实现了数据的清洗、验证与序列化输出,特别是MySQL数据库的连接管理、批量插入,显著提升了数据存储的可靠性与系统容错能力。

浙公网安备 33010602011771号

浙公网安备 33010602011771号