1. How does logistic regression prevent overfitting? Why can regularization prevent overfitting? (Explain in your own words.)

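Logistic regression prevents overfitting through regularization. Regularization adds extra information to the objective in the form of a penalty on the coefficients. This shrinkage keeps the weights from growing too large, which limits the complexity of the model. It gives up a little in-sample accuracy (slightly higher training error) in exchange for lower variance, which improves out-of-sample prediction and so prevents overfitting.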
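The shrinkage effect can be seen directly by fitting the same model with different penalty strengths. The following is a minimal sketch, not part of the original answer: it assumes scikit-learn's default L2 penalty, where `C` is the inverse of the regularization strength (smaller `C` means a stronger penalty), and it standardizes the features first so that the coefficient sizes are comparable. The values of `C` are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Load the data and standardize the features (an assumption of this sketch,
# so that the penalty treats all coefficients on the same scale).
X, y = load_breast_cancer(return_X_y=True)
X = StandardScaler().fit_transform(X)

# Fit with strong, default, and weak regularization and record the
# Euclidean norm of the learned weight vector for each setting.
norms = {}
for C in (0.01, 1.0, 100.0):
    clf = LogisticRegression(C=C, max_iter=5000).fit(X, y)
    norms[C] = float(np.linalg.norm(clf.coef_))
    print(f"C={C}: ||w|| = {norms[C]:.2f}")
```

The weight norm should grow as `C` increases: a weaker penalty lets the model fit the training data more closely, at the cost of larger, less stable coefficients.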

2. Practice with logistic regression on a dataset of your choice.

from sklearn.datasets import load_breast_cancer

from sklearn.metrics import classification_report

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

import numpy as np

cancer = load_breast_cancer()

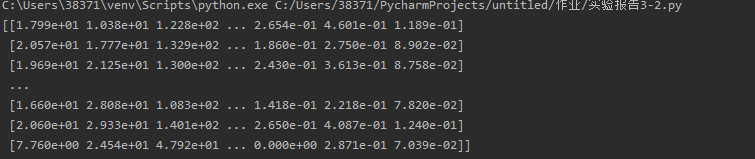

x = cancer['data']

y = cancer['target']

print(x)

print(y)

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2)

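# The features are not scaled, so the default max_iter=100 is usually too few
# iterations for the solver to converge; raise the limit.
model = LogisticRegression(max_iter=10000)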

model.fit(x_train, y_train)

y_pre = model.predict(x_test)

print(model.score(x_test, y_test))

print('matches: {0}/{1}'.format(np.equal(y_pre, y_test).sum(), y_test.shape[0]))

print(classification_report(y_test, y_pre))
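As a possible variant of the script above, the sketch below standardizes the features inside a pipeline and fixes the random seed so that the split is reproducible. These are assumptions added for illustration, not part of the original exercise: `random_state=0`, `stratify=y`, and `max_iter=1000` are arbitrary choices. Comparing training and test accuracy gives a quick check on overfitting.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Fixed seed and stratified split (assumptions of this sketch) so that
# repeated runs give the same train/test partition.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

# The scaler is fitted on the training data only and then applied to the
# test data, which avoids leaking test statistics into the model.
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
pipe.fit(X_train, y_train)

train_accuracy = pipe.score(X_train, y_train)
test_accuracy = pipe.score(X_test, y_test)
print(f"train accuracy: {train_accuracy:.3f}")
print(f"test accuracy:  {test_accuracy:.3f}")
```

A test accuracy close to the training accuracy suggests the regularized model is generalizing rather than memorizing the training set.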