Assignment 2

Task ①

1) WeatherForecast

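Requirement: Scrape the 7-day weather forecasts for a given set of cities from the China Weather Network (http://www.weather.com.cn) and save them to a database.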

Code:

from bs4 import BeautifulSoup
from bs4 import UnicodeDammit
import urllib.request
import sqlite3


class WeatherDB:  # wraps all database operations
    def openDB(self):
        self.con = sqlite3.connect('weathers.db')
        self.cursor = self.con.cursor()
        try:
            self.cursor.execute(
                'create table weathers (wCity varchar(16),wDate varchar(16),wWeather varchar(64),wTemp varchar(32),constraint pk_weather primary key(wCity,wDate))')
        except sqlite3.OperationalError:
            # The first run creates the table; on later runs it already exists, so clear it instead
            self.cursor.execute('delete from weathers')

    def closeDB(self):
        self.con.commit()
        self.con.close()

    def insert(self, city, date, weather, temp):
        try:
            # Store one day of a city's forecast in weathers.db
            self.cursor.execute('insert into weathers (wCity,wDate,wWeather,wTemp) values (?,?,?,?)',
                                (city, date, weather, temp))
        except Exception as err:
            print(err)

    def show(self):
        self.cursor.execute('select * from weathers')  # query the whole table and print it
        rows = self.cursor.fetchall()
        print('%-16s%-16s%-32s%-16s' % ('city', 'date', 'weather', 'temp'))
        for row in rows:
            print('%-16s%-16s%-32s%-16s' % (row[0], row[1], row[2], row[3]))


class WeatherForecast:
    def __init__(self):
        # Request header that makes the scraper look like a browser to the remote web server
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US;rv:1.9pre)Gecko/2019100821 Minefield/3.0.2pre'}
        self.cityCode = {'北京': '101010100', '上海': '101020100', '广州': '101280101', '深圳': '101280601'}  # cities to look up

    def forecastCity(self, city):
        if city not in self.cityCode:
            print(city + ' code cannot be found')
            return
        url = 'http://www.weather.com.cn/weather/' + self.cityCode[city] + '.shtml'
        try:
            req = urllib.request.Request(url, headers=self.headers)
            data = urllib.request.urlopen(req)
            data = data.read()
            # Try UTF-8 first, then GBK, when decoding the page
            dammit = UnicodeDammit(data, ['utf-8', 'gbk'])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, 'lxml')
            lis = soup.select("ul[class='t clearfix'] li")  # one <li> per forecast day
            for li in lis:
                try:
                    date = li.select('h1')[0].text
                    weather = li.select('p[class="wea"]')[0].text
                    # Temperature: the <span> text and the <i> text joined with '/'
                    temp = li.select('p[class="tem"] span')[0].text + '/' + li.select('p[class="tem"] i')[0].text
                    print(city, date, weather, temp)
                    self.db.insert(city, date, weather, temp)  # insert the record into the database
                except Exception as err:
                    print(err)
        except Exception as err:
            print(err)

    def process(self, cities):
        self.db = WeatherDB()
        self.db.openDB()
        for city in cities:  # crawl each city in turn
            self.forecastCity(city)
        self.db.show()
        self.db.closeDB()


ws = WeatherForecast()
ws.process(['北京', '上海', '广州', '深圳'])
print('completed')

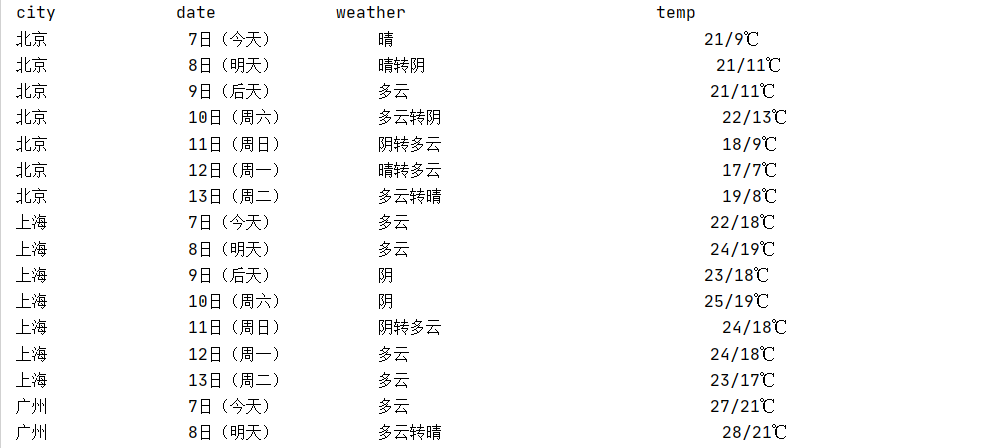

Partial screenshot of the output

2) Reflections:

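I typed this code in following the textbook and looked up anything I didn't understand on Baidu. The assignment mainly strengthened my use of BeautifulSoup and gave me a first introduction to the SQLite database.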
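The `WeatherDB` class detects an existing table by letting `create table` fail, and then wipes the table on every run. One alternative, sketched here under the assumption that keeping earlier forecasts is acceptable, uses SQLite's `CREATE TABLE IF NOT EXISTS` and `INSERT OR REPLACE`, so re-crawling the same (city, date) pair overwrites that row instead of raising a primary-key error. The helper names `open_weather_db` and `save_forecast` are hypothetical, the sample rows are made up, and `:memory:` keeps the demo from touching `weathers.db`.

```python
import sqlite3


def open_weather_db(path=":memory:"):
    """Open the database and create the weathers table only if it is missing."""
    con = sqlite3.connect(path)
    con.execute(
        "CREATE TABLE IF NOT EXISTS weathers ("
        "wCity VARCHAR(16), wDate VARCHAR(16), wWeather VARCHAR(64), wTemp VARCHAR(32), "
        "CONSTRAINT pk_weather PRIMARY KEY (wCity, wDate))"
    )
    return con


def save_forecast(con, city, date, weather, temp):
    # INSERT OR REPLACE replaces an existing row with the same (wCity, wDate)
    # key instead of failing on the primary-key constraint.
    con.execute(
        "INSERT OR REPLACE INTO weathers (wCity, wDate, wWeather, wTemp) VALUES (?, ?, ?, ?)",
        (city, date, weather, temp),
    )
    con.commit()


con = open_weather_db()
# Made-up sample values: the second call updates the same (city, date) row.
save_forecast(con, "北京", "sample-date", "sunny", "25/15")
save_forecast(con, "北京", "sample-date", "cloudy", "24/14")
rows = con.execute("SELECT * FROM weathers").fetchall()
print(rows)
```

With this design the table survives between runs and always holds the latest forecast for each city and day.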

Task ②

1)

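Requirement: Use the requests and BeautifulSoup libraries to perform targeted scraping of stock information.

Candidate sites: East Money (东方财富网) https://www.eastmoney.com/

Sina Stocks (新浪股票) http://finance.sina.com.cn/stock/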

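Tip: In Chrome, press F12 to open the developer tools and capture the network traffic. Find the URL that loads the stock list, analyze the values the API returns, and adjust the API's request parameters to match what the task asks for. The URL shows that request parameters such as f1 and f2 select different fields, so parameters can be added or removed as needed.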
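Because the captured URL carries a `cb=jQuery...` callback, the response is JSONP rather than plain JSON, which is why the code below slices it with regular expressions and relies on field positions. A minimal sketch of a key-based alternative, assuming the body has the shape `callback({... "data": {"total": N, "diff": [{...}, ...]}})`: strip the wrapper, decode it with `json`, and look fields up by name. The f-number meanings in `FIELD_NAMES` are inferred from the columns this post's code prints (for example f12 = code, f14 = name, f2 = latest price); `parse_jsonp`, `extract_rows` and the sample response are hypothetical.

```python
import json
import re

# Assumed meanings of the f-fields, inferred from the column headers used in this post.
FIELD_NAMES = {
    "f12": "code", "f14": "name", "f2": "latest", "f3": "change_pct",
    "f4": "change", "f5": "volume", "f6": "amount", "f7": "amplitude",
    "f15": "high", "f16": "low", "f17": "open", "f18": "prev_close",
}


def parse_jsonp(text):
    """Strip a `callback(...)` or `callback(...);` wrapper and decode the JSON inside."""
    match = re.match(r"^\s*[\w$.]+\((.*)\)\s*;?\s*$", text, re.S)
    payload = match.group(1) if match else text  # plain JSON passes through unchanged
    return json.loads(payload)


def extract_rows(text):
    """Return (total, rows), where each row maps readable names to field values."""
    data = parse_jsonp(text)["data"]
    rows = [{name: item.get(key) for key, name in FIELD_NAMES.items()} for item in data["diff"]]
    return data["total"], rows


# Invented response in the shape the API is assumed to return.
sample = ('jQuery123_456({"rc":0,"data":{"total":2,"diff":['
          '{"f2":10.5,"f3":1.2,"f12":"000001","f14":"demo A"},'
          '{"f2":8.0,"f3":-0.5,"f12":"600000","f14":"demo B"}]}});')
total, rows = extract_rows(sample)
print(total, rows[0]["code"], rows[0]["latest"])
```

Fields missing from a response simply come back as `None`, so the printout no longer breaks if the API changes the order of its fields.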

Approach:

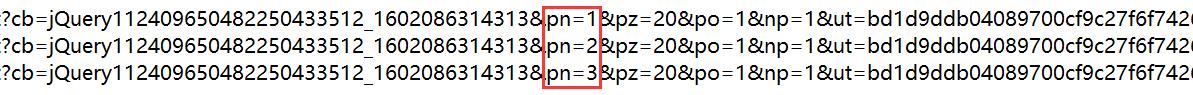

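Comparing the data URLs shows that, within one board, the page number corresponds to the pn parameter, while the same page in different boards differs in the fid and fs parameters.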
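That observation can be turned into a small URL builder: `pn` picks the page, while `fid` and `fs` pick the board. This is only a sketch. The endpoint, the `ut` token and the other parameter values are copied from the captured URL in this post. `cb` is left out on the assumption that the API then answers with plain JSON. `page_url` and `BOARDS` are hypothetical names.

```python
from urllib.parse import urlencode

BASE_URL = "http://68.push2.eastmoney.com/api/qt/clist/get"

# (fid, fs) pairs copied from the cmd dictionary in this post.
BOARDS = {
    "上证A股": ("f3", "m:1+t:2,m:1+t:23"),
    "创业板": ("f3", "m:0+t:80"),
}


def page_url(board, page, page_size=20):
    fid, fs = BOARDS[board]
    params = {
        "pn": page,          # page number within the board
        "pz": page_size,     # rows per page
        "po": 1, "np": 1,
        "ut": "bd1d9ddb04089700cf9c27f6f7426281",
        "fltt": 2, "invt": 2,
        "fid": fid,          # differs between boards
        "fs": fs,            # board filter, differs between boards
        "fields": "f2,f3,f12,f14",
    }
    # Keep ':', '+' and ',' literal so the query matches the captured URL.
    return BASE_URL + "?" + urlencode(params, safe=":+,")


print(page_url("上证A股", 3))
```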

Reference: https://zhuanlan.zhihu.com/p/50099084

Code:

import requests
import re
import math


# Request one page from the server with GET and extract the raw stock data
def getHtml(cmd, page):
    url = "http://68.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112409784442493077996_1601810442107&pn=" + str(
        page) + "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&" + cmd + "&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152"
    r = requests.get(url)
    pat = r'"diff":\[(.*?)\]'
    data = re.compile(pat, re.S).findall(r.text)[0]
    # Total number of rows in the board; divided by 20 and rounded up, this is the page count
    all_page = math.ceil(int(re.findall(r'"total":(\d+)', r.text)[0]) / 20)
    return data, all_page


# Print the stocks on a single page
def getOnePageStock(cmd, page):
    data, all_page = getHtml(cmd, page)
    datas = data.split("},")  # split into one string per stock
    global p
    for i in range(len(datas)):
        p += 1
        stocks = re.sub('["{}]', '', datas[i]).split(",")  # split one stock into its "fN:value" attributes
        # Columns: code, name, latest price, change %, change, volume, turnover, amplitude, high, low, open, previous close
        print(tplt.format(p, stocks[11].split(":")[1], stocks[13].split(":")[1], stocks[1].split(":")[1],
                          stocks[2].split(":")[1], stocks[3].split(":")[1],
                          stocks[4].split(":")[1], stocks[5].split(":")[1], stocks[6].split(":")[1],
                          stocks[14].split(":")[1], stocks[15].split(":")[1],
                          stocks[16].split(":")[1], stocks[17].split(":")[1], chr(12288)))


cmd = {
    "沪深A股": "fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23",
    "上证A股": "fid=f3&fs=m:1+t:2,m:1+t:23",
    "深证A股": "fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80",
    "新股": "fid=f26&fs=m:0+f:8,m:1+f:8",
    "中小板": "fid=f3&fs=m:0+t:13",
    "创业板": "fid=f3&fs=m:0+t:80"
}

for i in cmd.keys():
    # chr(12288) is the full-width space; passed as argument 13, it fills the name column so Chinese names line up
    tplt = "{0:^13}{1:^13}{2:{13}^13}{3:^13}{4:^13}{5:^13}{6:^13}{7:^13}{8:^13}{9:^13}{10:^13}{11:^13}{12:^13}"
    print(i)
    print("{0:^11}{1:^11}{2:{13}^12}{3:^12}{4:^12}{5:^12}{6:^10}{7:^10}{8:^12}{9:^12}{10:^12}{11:^12}{12:^12}".format(
        "序号", "股票代码", "股票名称", "最新报价", "涨跌幅", "涨跌额", "成交量", "成交额", "振幅", "最高", "最低", "今开", "昨收", chr(12288)))
    page = 1
    p = 0
    _, all_page = getHtml(cmd[i], page)  # the first request is only used to learn the page count
    while page <= all_page:  # fetch pages 1..all_page, including the first one
        getOnePageStock(cmd[i], page)
        page += 1

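Partial screenshot of the output:

2) Reflections:

This assignment was about scraping a page whose data is loaded dynamically by JavaScript. Getting the data took a long time, and so did working out the number of pages per board (in the end I borrowed a classmate's approach), but I learned a lot.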
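The page count comes from the `total` rows returned by the API split into pages of `pz=20`: pages = ceil(total / 20), and `pn` runs from 1 to that number, so the loop has to request page 1 as well (incrementing `page` before the first request would skip it). A small integer-only sketch; `page_count` and `page_numbers` are hypothetical helpers and 4043 is a made-up total.

```python
def page_count(total, page_size=20):
    """ceil(total / page_size) using integer arithmetic only."""
    return (total + page_size - 1) // page_size


def page_numbers(total, page_size=20):
    """Page numbers to request; pn starts at 1."""
    return list(range(1, page_count(total, page_size) + 1))


print(page_count(4043))   # 4043 / 20 = 202.15, rounded up to 203
print(page_numbers(45))   # [1, 2, 3]
```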

Task ③

1)

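Requirement: Select a stock whose code is three digits of your choice followed by the last three digits of your student ID, then retrieve and print its information. Capture the packets the same way as in Task ②.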
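As a worked example of the selection rule, the code 002105 searched for in this task splits into the chosen digits 002 and the student-ID tail 105. A tiny sketch of composing such a code; `make_stock_code` is hypothetical and the student ID `031804105` is made up for illustration.

```python
def make_stock_code(chosen, student_id):
    """Three self-chosen digits followed by the last three digits of the student ID."""
    if not (len(chosen) == 3 and chosen.isdigit()):
        raise ValueError("the chosen part must be exactly three digits")
    tail = student_id[-3:]
    if not (len(tail) == 3 and tail.isdigit()):
        raise ValueError("the student ID must end in three digits")
    return chosen + tail


print(make_stock_code("002", "031804105"))  # 002105
```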

Candidate sites: East Money (东方财富网) https://www.eastmoney.com/

Sina Stocks (新浪股票) http://finance.sina.com.cn/stock/

Code:

import requests
import re
import math


# Request one page from the server with GET and extract the raw stock data
def getHtml(cmd, page):
    url = "http://68.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112409784442493077996_1601810442107&pn=" + str(
        page) + "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&" + cmd + "&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152"
    r = requests.get(url)
    pat = r'"diff":\[(.*?)\]'
    data = re.compile(pat, re.S).findall(r.text)[0]
    all_page = math.ceil(int(re.findall(r'"total":(\d+)', r.text)[0]) / 20)
    return data, all_page


# Search a single page for the target stock
def getOnePageStock(cmd, page):
    global p
    data, all_page = getHtml(cmd, page)
    datas = data.split("},")
    for i in range(len(datas)):
        stocks = re.sub('["{}]', '', datas[i]).split(",")
        if stocks[11].split(":")[1] == "002105":  # chosen digits 002 + student-ID tail 105
            # Header: code, name, today's open, today's high, today's low
            print(tplt.format("股票代码号", "股票名称", "今日开", "今日最高", "今日最低", chr(12288)))
            print(tplt.format(stocks[11].split(":")[1], stocks[13].split(":")[1], stocks[16].split(":")[1],
                              stocks[14].split(":")[1],
                              stocks[15].split(":")[1], chr(12288)))
            p = 1  # found: print it, set p = 1 and leave the loop
            break


cmd = {
    "沪深A股": "fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23",
    "上证A股": "fid=f3&fs=m:1+t:2,m:1+t:23",
    "深证A股": "fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80",
    "新股": "fid=f26&fs=m:0+f:8,m:1+f:8",
    "中小板": "fid=f3&fs=m:0+t:13",
    "创业板": "fid=f3&fs=m:0+t:80"
}

p = 0  # becomes 1 once the stock has been found
for i in cmd.keys():
    tplt = "{0:^8}\t{1:{5}^8}\t{2:^8}\t{3:^8}\t{4:^8}"
    page = 1
    _, all_page = getHtml(cmd[i], page)  # the first request is only used to learn the page count
    # Crawl pages automatically; stop after the last page or as soon as the stock is found
    while page <= all_page and p == 0:
        getOnePageStock(cmd[i], page)
        page += 1
    if p == 1:
        break

if p == 0:
    print("没找到对应的股票代码")  # "no matching stock code was found"

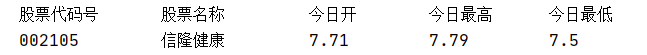

Output:

2) Reflections:

I seem to have only added an if check on top of Task ②; I feel I didn't fully understand what the task was asking for...
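Since the task only needs one stock, the search can stop requesting pages as soon as the code is found, and a function that returns the match removes the need for the global flag `p`. A sketch under the assumption that each page has already been parsed into dicts with a `code` key; `find_stock` is hypothetical, and the generator `fake_pages` stands in for real page requests, with `fetched` recording which pages were actually pulled.

```python
def find_stock(pages, code):
    """Scan pages lazily and return (page_no, row) for the first matching code, else None."""
    for page_no, rows in enumerate(pages, start=1):
        for row in rows:
            if row["code"] == code:
                return page_no, row
    return None


fetched = []


def fake_pages():
    # Hypothetical stand-in for requesting pages one at a time.
    data = [
        [{"code": "000001", "name": "demo A"}],
        [{"code": "002105", "name": "demo B"}],
        [{"code": "300001", "name": "demo C"}],
    ]
    for n, rows in enumerate(data, start=1):
        fetched.append(n)  # record that this page was "requested"
        yield rows


result = find_stock(fake_pages(), "002105")
print(result)    # (2, {'code': '002105', 'name': 'demo B'})
print(fetched)   # [1, 2]: page 3 is never requested
```

Because the pages come from a generator, returning early means later pages are simply never fetched.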

Zhejiang Public Security Network Filing No. 33010602011771 (浙公网安备 33010602011771号)
