Data Collection and Fusion Technology: Experiment 4

-

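Assignment ①:

1) Scraping book information from Dangdang

– Requirements: become proficient with serializing output data through Scrapy's Item and Pipeline; scrape book data from Dangdang using the Scrapy + XPath + MySQL storage approach.

– Candidate site: http://search.dangdang.com/?key=python&act=input

– Keyword: free choice

– Output: the MySQL output is shown below:

Procedure: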

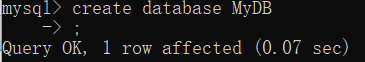

1. Create the database mydb, and in it a books table to store the data:
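The report does not reproduce the table definition. Below is a minimal sketch of one. It relies only on what the pipeline's insert statement shows: the column names bTitle, bAuthor, bPublisher, bDate, bPrice and bDetail. Everything else is an assumption: the column types and lengths, the extra auto-increment id, and the helper name create_books_table.

```python
# Sketch of the books table; only the b* column names come from the pipeline's insert.
# All data columns are text because the spider stores every field as a string.
BOOKS_DDL = """
CREATE TABLE IF NOT EXISTS books (
    id INT AUTO_INCREMENT PRIMARY KEY,
    bTitle VARCHAR(512),
    bAuthor VARCHAR(256),
    bPublisher VARCHAR(256),
    bDate VARCHAR(32),
    bPrice VARCHAR(32),
    bDetail TEXT
) DEFAULT CHARSET=utf8mb4
"""


def create_books_table(conn):
    """Run the DDL on an open DB-API connection (hypothetical helper)."""
    with conn.cursor() as cur:
        cur.execute(BOOKS_DDL)
    conn.commit()
```

With pymysql, first run CREATE DATABASE mydb. Then call create_books_table(pymysql.connect(host="localhost", user="root", password="123456", database="mydb")).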

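2. Write items.py

```python
import scrapy


class Pro4Test1Item(scrapy.Item):
    # One field per book attribute; each maps to a column of the books table
    title = scrapy.Field()
    author = scrapy.Field()
    date = scrapy.Field()
    publisher = scrapy.Field()
    detail = scrapy.Field()
    price = scrapy.Field()
```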

3. Modify settings.py

```python
# Ignore robots.txt rules
ROBOTSTXT_OBEY = False

# Enable the MySQL pipeline; 300 is its order value
ITEM_PIPELINES = {
    'pro4_test1.pipelines.Pro4Test1Pipeline': 300,
}
```
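The value 300 in ITEM_PIPELINES is an order number. Scrapy passes every item through the enabled pipelines from the lowest value to the highest, and the values are customarily kept in the 0-1000 range. An entry set to None is disabled.

The stdlib illustration below mimics that ordering rule. The second pipeline path and the helper pipeline_order are hypothetical and exist only to show the ordering:

```python
ITEM_PIPELINES = {
    'pro4_test1.pipelines.Pro4Test1Pipeline': 300,
    'pro4_test1.pipelines.HypotheticalCleanPipeline': 100,  # hypothetical, for illustration only
}


def pipeline_order(settings):
    """Return pipeline paths in the order items pass through them (lowest value first).
    Entries whose value is None are treated as disabled and left out."""
    enabled = {path: prio for path, prio in settings.items() if prio is not None}
    return sorted(enabled, key=enabled.get)


print(pipeline_order(ITEM_PIPELINES))
```

With these values the hypothetical cleaning pipeline would see each item before the MySQL pipeline does.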

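4. Write the spider

1) Set the keyword and the start URL

```python
import scrapy
from bs4 import UnicodeDammit

from pro4_test1.items import Pro4Test1Item


class BookSpider(scrapy.Spider):
    name = "book"
    key = 'python'  # search keyword
    source_url = 'http://search.dangdang.com/'

    def start_requests(self):
        # Build the first search-result URL from the keyword
        url = BookSpider.source_url + "?key=" + BookSpider.key
        yield scrapy.Request(url=url, callback=self.parse)
```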

2) Extract the text fields with XPath, and locate the next-page link to paginate

```python
    # BookSpider.parse (continued inside the class above)
    def parse(self, response):
        try:
            # Decode the page, trying UTF-8 first and then GBK
            dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            selector = scrapy.Selector(text=data)
            # Each result is an <li> with a ddt-pit attribute and a class starting with "line"
            lis = selector.xpath("//li[@ddt-pit][starts-with(@class,'line')]")
            for li in lis:
                title = li.xpath("./a[position()=1]/@title").extract_first()
                price = li.xpath("./p[@class='price']/span[@class='search_now_price']/text()").extract_first()
                author = li.xpath("./p[@class='search_book_author']/span[position()=1]/a/@title").extract_first()
                date = li.xpath("./p[@class='search_book_author']/span[position()=last()-1]/text()").extract_first()
                publisher = li.xpath("./p[@class='search_book_author']/span[position()=last()]/a/@title").extract_first()
                # detail is sometimes missing, in which case extract_first() returns None
                detail = li.xpath("./p[@class='detail']/text()").extract_first()

                item = Pro4Test1Item()
                item["title"] = title.strip() if title else ""
                item["author"] = author.strip() if author else ""
                # [1:] drops the separator character in front of the date
                item["date"] = date.strip()[1:] if date else ""
                item["publisher"] = publisher.strip() if publisher else ""
                item["price"] = price.strip() if price else ""
                item["detail"] = detail.strip() if detail else ""
                yield item

            # Follow the "next page" link until there is none
            link = selector.xpath("//div[@class='paging']/ul[@name='Fy']/li[@class='next']/a/@href").extract_first()
            if link:
                url = response.urljoin(link)
                yield scrapy.Request(url=url, callback=self.parse)
        except Exception as err:
            print(err)
```
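Three details of the spider can be checked without running Scrapy.

- The keyword is concatenated into the URL as-is. That works for python, but a Chinese keyword would need percent-encoding.
- date.strip()[1:] assumes the date text starts with a separator such as " /". This is an assumption about Dangdang's markup.
- The next-page link is relative, so it has to be resolved against the current page URL.

The stdlib sketch below covers all three. Its helper names (build_search_url, clean, clean_date, next_page_url) are hypothetical. urllib.parse.urljoin stands in for response.urljoin. The act=input parameter is taken from the candidate URL, and the page_index value in the usage is only illustrative.

```python
from urllib.parse import urlencode, urljoin

SOURCE_URL = "http://search.dangdang.com/"


def build_search_url(key):
    # Percent-encode the keyword (UTF-8) instead of concatenating it raw
    return SOURCE_URL + "?" + urlencode({"key": key, "act": "input"})


def clean(text):
    # extract_first() returns None for a missing node; map that to ""
    return text.strip() if text else ""


def clean_date(text):
    # Assumed raw form " /2020-01-01": strip whitespace, then any leading "/",
    # so a date without the separator keeps its first digit (unlike [1:])
    return clean(text).lstrip("/").strip()


def next_page_url(current_url, href):
    # Resolve a relative "next" link; None means this was the last page
    return urljoin(current_url, href) if href else None


print(build_search_url("python"))
print(next_page_url(build_search_url("python"), "/?key=python&act=input&page_index=2"))
```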

5. Write pipelines.py

```python
import pymysql


class Pro4Test1Pipeline:
    def open_spider(self, spider):
        print("opened")
        # Initialise before connecting so close_spider works even if the connection fails
        self.opened = False
        self.count = 0
        try:
            self.con = pymysql.connect(host="localhost", port=3306, user="root",
                                       password="123456", database="mydb", charset="utf8")
            self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
            # Empty the table so each run starts fresh
            self.cursor.execute("delete from books")
            self.opened = True
        except Exception as err:
            print(err)

    def close_spider(self, spider):
        if self.opened:
            # Commit all inserts at once, then release the connection
            self.con.commit()
            self.con.close()
            self.opened = False
        print("closed")
        print("Total books scraped:", self.count)

    def process_item(self, item, spider):
        try:
            print(item["title"])
            print(item["author"])
            print(item["publisher"])
            print(item["date"])
            print(item["price"])
            print(item["detail"])
            print()
            if self.opened:
                # Parameterized insert: pymysql escapes the values
                self.cursor.execute(
                    "insert into books (bTitle,bAuthor,bPublisher,bDate,bPrice,bDetail) "
                    "values(%s,%s,%s,%s,%s,%s)",
                    (item["title"], item["author"], item["publisher"],
                     item["date"], item["price"], item["detail"]))
                self.count += 1
        except Exception as err:
            print(err)
        return item
```
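The pipeline follows Scrapy's life cycle:

- open_spider runs once, when the crawl starts.
- process_item runs once per item and must return the item.
- close_spider runs once at the end and commits.

The values always travel as query parameters rather than being formatted into the SQL string.

The sketch below reproduces that flow with sqlite3 standing in for MySQL, so it runs without a server. Note that sqlite3 uses ? placeholders where pymysql uses %s. The class name SqliteBookPipeline and the in-memory database are illustrative assumptions, and items are plain dicts here.

```python
import sqlite3


class SqliteBookPipeline:
    """Illustrative stand-in for Pro4Test1Pipeline that stores items with sqlite3."""

    def __init__(self, path=":memory:"):
        self.path = path
        self.count = 0

    def open_spider(self, spider):
        # Called once when the crawl starts: connect and start from an empty table
        self.con = sqlite3.connect(self.path)
        self.con.execute("create table if not exists books "
                         "(bTitle, bAuthor, bPublisher, bDate, bPrice, bDetail)")
        self.con.execute("delete from books")

    def process_item(self, item, spider):
        # Called once per item; values go in as parameters, never via string formatting
        self.con.execute(
            "insert into books (bTitle,bAuthor,bPublisher,bDate,bPrice,bDetail) "
            "values (?,?,?,?,?,?)",
            (item["title"], item["author"], item["publisher"],
             item["date"], item["price"], item["detail"]))
        self.count += 1
        return item  # pass the item on to any later pipeline

    def close_spider(self, spider):
        # Called once at the end: a single commit for the whole crawl
        self.con.commit()
        self.con.close()
```

Because the title is passed as a parameter, a value containing a quote (for example It's Python) is stored unchanged instead of breaking the statement.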

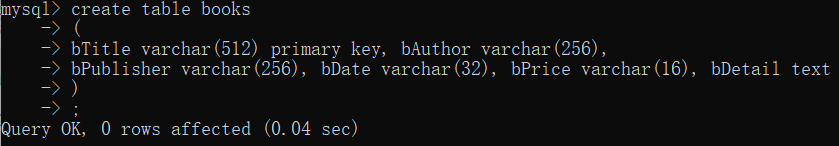

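6. Database storage result

7. Code link: https://gitee.com/huang-dunn/crawl_project/tree/master/实验四作业1

2) Reflections: reproducing the textbook code made me more familiar with applying the Scrapy framework and deepened my understanding of database operations.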

-

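Assignment ②

1)

– Requirements: become proficient with serializing output data through Scrapy's Item and Pipeline; scrape foreign-exchange data using the Scrapy + XPath + MySQL storage approach.

– Candidate site: China Merchants Bank: http://fx.cmbchina.com/hq/

– Output: MySQL storage and output format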

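| Id | Currency | TSP | CSP | TBP | CBP | Time |
|---|---|---|---|---|---|---|
| 1 | 港币 | 86.60 | 86.60 | 86.26 | 85.65 | 15:36:30 |
| 2... |

(TSP/CSP: telegraphic-transfer (spot) and cash selling prices; TBP/CBP: telegraphic-transfer and cash buying prices.)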

Procedure:

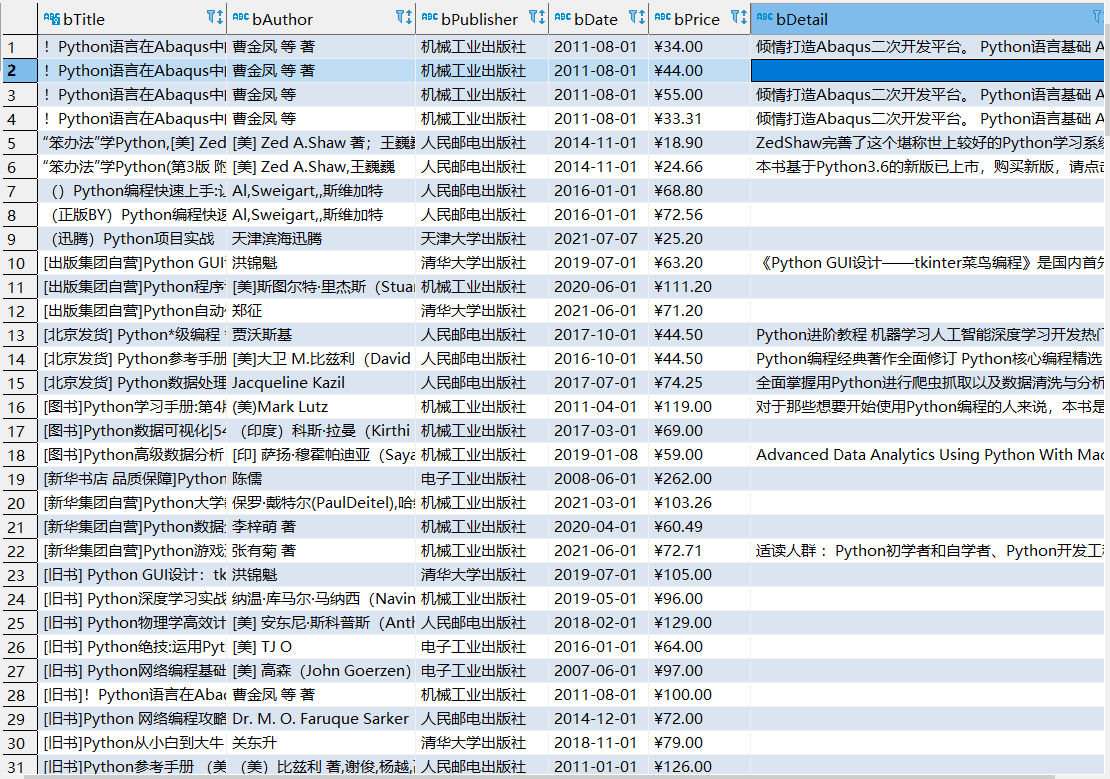

1. Create the database:
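The required output has an Id column, but the pipeline's insert only supplies name, TSP, CSP, TBP, CBP and time. An auto-increment id is one way to produce that column.

A sketch of the banks table under that assumption follows. The types, lengths and the constant name BANKS_DDL are assumptions, and only the six data column names come from the pipeline.

```python
# Sketch of the banks table; run it once with cursor.execute(BANKS_DDL) on the mydb connection.
BANKS_DDL = """
CREATE TABLE IF NOT EXISTS banks (
    id INT AUTO_INCREMENT PRIMARY KEY,  -- supplies the Id column of the required output
    name VARCHAR(64),                   -- currency name
    TSP VARCHAR(16),
    CSP VARCHAR(16),
    TBP VARCHAR(16),
    CBP VARCHAR(16),
    `time` VARCHAR(16)
) DEFAULT CHARSET=utf8mb4
"""

print(BANKS_DDL)
```

The pipeline clears the table with DELETE, which does not reset the auto-increment counter, so ids keep growing across runs. TRUNCATE TABLE banks would restart them at 1.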

2. Write items.py

```python
import scrapy


class Pro4Test2Item(scrapy.Item):
    name = scrapy.Field()  # currency
    TSP = scrapy.Field()
    CSP = scrapy.Field()
    TBP = scrapy.Field()
    CBP = scrapy.Field()
    time = scrapy.Field()
```

3. Write the spider, using XPath:

```python
    # Methods of the spider class; the module imports scrapy, UnicodeDammit (from bs4) and Pro4Test2Item
    def start_requests(self):
        url = 'http://fx.cmbchina.com/hq/'
        yield scrapy.Request(url=url, callback=self.parse)

    def parse(self, response):
        # Decode the page, trying UTF-8 first and then GBK
        dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])
        data = dammit.unicode_markup
        selector = scrapy.Selector(text=data)
        tr_list = selector.xpath('//tr')
        # Skip the first three <tr> rows, which are not currency rows
        for tr in tr_list[3:]:
            item = Pro4Test2Item()  # a new item for every row
            # td[1] currency, td[4]-td[7] TSP/CSP/TBP/CBP, td[8] time;
            # default="" avoids storing "None" for an empty cell, strip() removes spaces and newlines
            item['name'] = tr.xpath('./td[1]/text()').extract_first(default="").strip()
            item['TSP'] = tr.xpath('./td[4]/text()').extract_first(default="").strip()
            item['CSP'] = tr.xpath('./td[5]/text()').extract_first(default="").strip()
            item['TBP'] = tr.xpath('./td[6]/text()').extract_first(default="").strip()
            item['CBP'] = tr.xpath('./td[7]/text()').extract_first(default="").strip()
            item['time'] = tr.xpath('./td[8]/text()').extract_first(default="").strip()
            print(item['name'], item['TSP'], item['CSP'], item['TBP'], item['CBP'], item['time'])
            yield item
```
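The loop skips the first three <tr> rows. Doing this with del is error-prone. del (rows[0], rows[1]) deletes its targets left to right, and the second index is applied to the already-shifted list, so it removes the original first and third rows. Slicing states the intent directly.

A stdlib check, using stand-in strings for the row selectors:

```python
rows = ["r0", "r1", "r2", "r3", "r4"]  # stand-ins for the <tr> selectors

# del with a tuple target deletes left to right; the second index sees the shifted list
shifted = list(rows)
del (shifted[0], shifted[1])
print(shifted)  # ['r1', 'r3', 'r4']: r0 and r2 are gone, r1 survives

# Skipping one more element (as a flag in the loop would) leaves the fourth row onwards
after_flag = shifted[1:]
print(after_flag == rows[3:])  # True
```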

4. Write pipelines.py to insert the scraped data into the database:

```python
    # Methods of the item pipeline class in pipelines.py; the module imports pymysql
    def open_spider(self, spider):
        print("opened")
        self.opened = False
        self.count = 0
        try:
            self.con = pymysql.connect(host="localhost", port=3306, user="root",
                                       password="123456", database="mydb", charset="utf8")
            self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
            # Clear rows left over from the previous run
            self.cursor.execute("delete from banks")
            self.opened = True
        except Exception as err:
            print(err)

    def close_spider(self, spider):
        if self.opened:
            # Commit all inserts at once, then release the connection
            self.con.commit()
            self.con.close()
            self.opened = False
        print("closed")

    def process_item(self, item, spider):
        try:
            if self.opened:
                self.cursor.execute(
                    "insert into banks (name,TSP,CSP,TBP,CBP,time) values(%s,%s,%s,%s,%s,%s)",
                    (item['name'], item['TSP'], item['CSP'], item['TBP'], item['CBP'], item['time']))
                self.count += 1
        except Exception as err:
            print(err)
        return item
```
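The required output lists the stored rows with a running Id. The sketch below prints rows fetched from banks in that table layout. It assumes the rows come back as tuples in the order name, TSP, CSP, TBP, CBP, time, for example from a default (non-dict) cursor running select name,TSP,CSP,TBP,CBP,time from banks.

The helper name format_rates is hypothetical. The sample row is the one from the requirements table.

```python
HEADER = ("Id", "Currency", "TSP", "CSP", "TBP", "CBP", "Time")


def format_rates(rows):
    """Render (name, TSP, CSP, TBP, CBP, time) tuples as a pipe table, numbering rows from 1."""
    lines = ["| " + " | ".join(HEADER) + " |",
             "|" + "---|" * len(HEADER)]
    for i, row in enumerate(rows, start=1):
        lines.append("| " + " | ".join([str(i), *map(str, row)]) + " |")
    return "\n".join(lines)


print(format_rates([("港币", "86.60", "86.60", "86.26", "85.65", "15:36:30")]))
```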

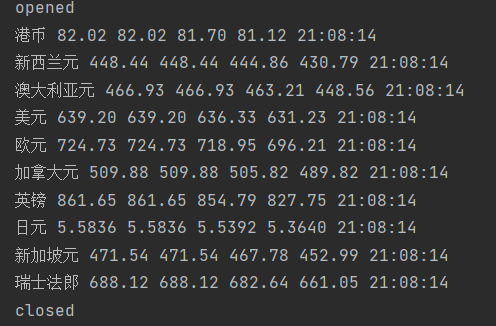

5. Output:

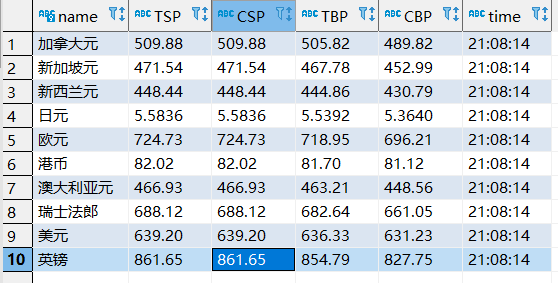

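6. Database storage:

7. Code link: https://gitee.com/huang-dunn/crawl_project/tree/master/实验四作业2

2) Reflections: I became more fluent with the Scrapy framework, understood XPath text matching more deeply, and learned more about inserting, deleting, updating and querying data in MySQL.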

-

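Assignment ③

1)

– Requirements: become proficient with finding HTML elements, scraping Ajax-loaded pages and waiting for HTML elements in Selenium. Using the Selenium + MySQL storage approach, scrape stock data for three boards: 沪深A股 (Shanghai and Shenzhen A-shares), 上证A股 (Shanghai A-shares) and 深证A股 (Shenzhen A-shares).

– Candidate site: East Money: http://quote.eastmoney.com/center/gridlist.html#hs_a_board

– Output: MySQL storage and output format as below. Column names must be in English, e.g. id for the row number and bStockNo for the stock code; the rest of the header is up to each student: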

| No. | Code | Name | Latest price | Change (%) | Change | Volume | Turnover | Amplitude | High | Low | Open | Prev. close |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 688093 | N世华 | 28.47 | 62.22% | 10.92 | 26.13万 | 7.6亿 | 22.34 | 32.0 | 28.08 | 30.2 | 17.55 |
| 2...... |

Procedure:

1. Analyze the page:

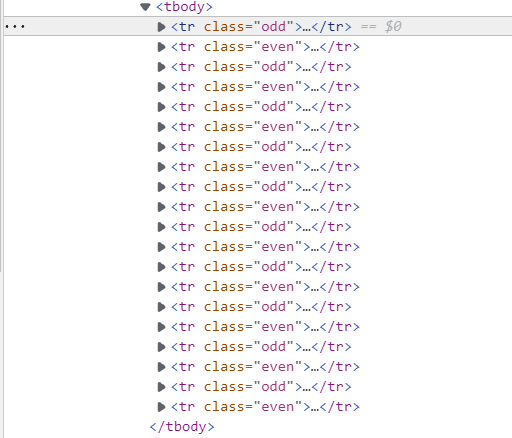

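1) Where the data is:

Each stock's data sits in its own tr element.

2) Where the pagination link is:

Clicking "next page" shows that the XPath of the pagination link is //*[@id="main-table_paginate"]/a[2]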

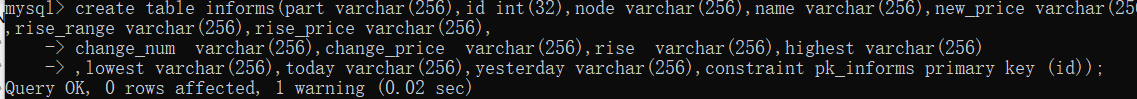

2. Create the database:
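A sketch of the informs table that matches the column list of the insert statement in the scraping function. The meaning of each column is read off the values the function inserts; for example, node receives the stock code and rise the amplitude.

Several choices are assumptions: every column except id is stored as text, since the page shows values with units such as 万 and %; id is a plain INT, since the script supplies its own running count; and the helper name informs_ddl is hypothetical.

```python
# (column, meaning) pairs in the order used by the insert statement in spider()
INFORMS_COLUMNS = [
    ("part", "board (沪深A股 / 上证A股 / 深证A股)"),
    ("id", "running row number"),
    ("node", "stock code"),
    ("name", "stock name"),
    ("new_price", "latest price"),
    ("rise_range", "change (%)"),
    ("rise_price", "change"),
    ("change_num", "volume"),
    ("change_price", "turnover"),
    ("rise", "amplitude"),
    ("highest", "high"),
    ("lowest", "low"),
    ("today", "open"),
    ("yesterday", "previous close"),
]


def informs_ddl():
    """Build CREATE TABLE for informs; id is INT, every other column VARCHAR (assumed types)."""
    cols = []
    for col, meaning in INFORMS_COLUMNS:
        sql_type = "INT" if col == "id" else "VARCHAR(32)"
        cols.append(f"    {col} {sql_type} COMMENT '{meaning}'")
    return ("CREATE TABLE IF NOT EXISTS informs (\n" + ",\n".join(cols) +
            "\n) DEFAULT CHARSET=utf8mb4")


print(informs_ddl())
```

Running cursor.execute(informs_ddl()) once on the mydb connection would create the table.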

3. Write the scraping function:

```python
from time import sleep

from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait

# driver, cursor and count are module-level globals created in the main program


def spider(url, name_):
    global count
    driver.get(url)
    sleep(3)  # give the Ajax table time to load
    temp = 1  # pages scraped so far for this board
    while True:
        tr_list = driver.find_elements(By.XPATH, '//*[@id="table_wrapper-table"]/tbody/tr')
        for tr in tr_list:
            # tr.text joins the cells with spaces; data[0] (row number) and data[3]-data[5] are not stored
            data = tr.text.split(" ")
            print(count, data[1], data[2], data[6], data[7], data[8], data[9], data[10], data[11],
                  data[12], data[13], data[14], data[15])
            try:
                cursor.execute(
                    "insert into informs (part,id,node,name,new_price,rise_range,rise_price,change_num,"
                    "change_price,rise,highest,lowest,today,yesterday) "
                    "values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",
                    (name_, count, data[1], data[2], data[6], data[7], data[8], data[9], data[10],
                     data[11], data[12], data[13], data[14], data[15]))
                count += 1
            except Exception as err:
                print(err)
        try:  # pagination: wait up to 3 s, polling every 0.2 s, for the "next page" link
            next_page = WebDriverWait(driver, 3, 0.2).until(
                lambda d: d.find_element(By.XPATH, '//*[@id="main-table_paginate"]/a[2]'))
        except Exception as e:
            print(e)
            break
        if temp >= 5:  # scrape 5 pages per board
            break
        next_page.click()
        sleep(3)  # let the next page's data load into the same table
        temp = temp + 1
```
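The function relies on tr.text joining a row's cells with single spaces, so fixed indexes pick out the fields. data[0] and data[3] to data[5] are skipped.

The stdlib sketch below gives those positions names. The helper parse_row and the field names are hypothetical. The sample text is not captured from the site: it is assembled from the example row in the requirements table, with placeholder words at indexes 3-5.

```python
# Positions in tr.text.split(" ") used by spider(); indexes 0 and 3-5 are not stored
FIELDS = {
    "code": 1, "name": 2, "latest": 6, "change_pct": 7, "change": 8,
    "volume": 9, "turnover": 10, "amplitude": 11, "high": 12,
    "low": 13, "open": 14, "prev_close": 15,
}


def parse_row(text):
    """Split one row's text on spaces and map the fixed positions to field names."""
    cells = text.split(" ")
    if len(cells) <= max(FIELDS.values()):
        raise ValueError(f"expected at least 16 cells, got {len(cells)}")
    return {field: cells[i] for field, i in FIELDS.items()}


sample = "1 688093 N世华 link1 link2 link3 28.47 62.22% 10.92 26.13万 7.6亿 22.34 32.0 28.08 30.2 17.55"
row = parse_row(sample)
print(row["code"], row["name"], row["prev_close"])
```

Raising an error on short rows is one design choice. The original would instead hit an IndexError at data[15].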

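4. Main program:

```python
import pymysql
from selenium import webdriver

driver = webdriver.Chrome()
conn = pymysql.connect(host='localhost', port=3306, user='root', password='123456', db='mydb',
                       charset='utf8')
cursor = conn.cursor()
count = 1  # running id shared by all three boards

code = ["hs_a_board", "sh_a_board", "sz_a_board"]
name = ['沪深A股', '上证A股', '深证A股']
for i in range(0, 3):
    url_ = 'http://quote.eastmoney.com/center/gridlist.html#' + code[i]
    spider(url_, name[i])
    conn.commit()  # commit after each board
    print(name[i] + ': data written to the database')

conn.close()
driver.quit()
```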
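Volume and turnover are stored exactly as displayed, e.g. 26.13万 and 7.6亿 (万 = 10^4, 亿 = 10^8). If numeric columns were preferred, the strings could be converted before the insert.

The sketch below does this with Decimal to avoid float rounding. The helper name to_number is hypothetical, and so is treating "-" as a marker for missing data.

```python
from decimal import Decimal, InvalidOperation

# Chinese magnitude suffixes shown on the page: 万 = 10^4, 亿 = 10^8
UNITS = {"万": Decimal(10) ** 4, "亿": Decimal(10) ** 8}


def to_number(text):
    """Convert strings such as '26.13万', '7.6亿', '62.22%' or '28.47' to Decimal.
    A percentage is returned as the number shown (62.22, not 0.6222).
    Returns None for anything unparsable, e.g. a '-' placeholder (assumed to mean missing)."""
    s = text.strip().rstrip("%")
    factor = Decimal(1)
    if s and s[-1] in UNITS:
        factor = UNITS[s[-1]]
        s = s[:-1]
    try:
        return Decimal(s) * factor
    except InvalidOperation:
        return None


print(to_number("26.13万"), to_number("7.6亿"), to_number("-"))
```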

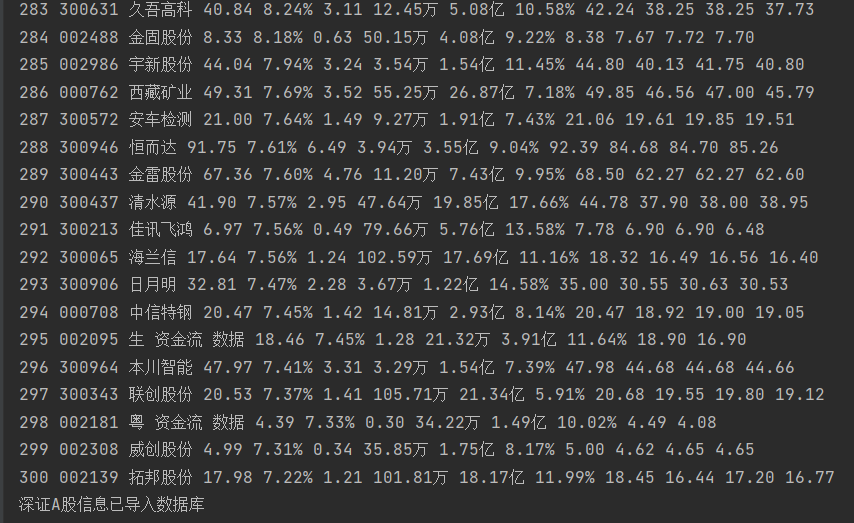

5. Output:

6. Database storage result:

7. Code link: https://gitee.com/huang-dunn/crawl_project/blob/master/实验四作业3/project_four_test3.py