102302116_田自豪_作业2

- 作业1

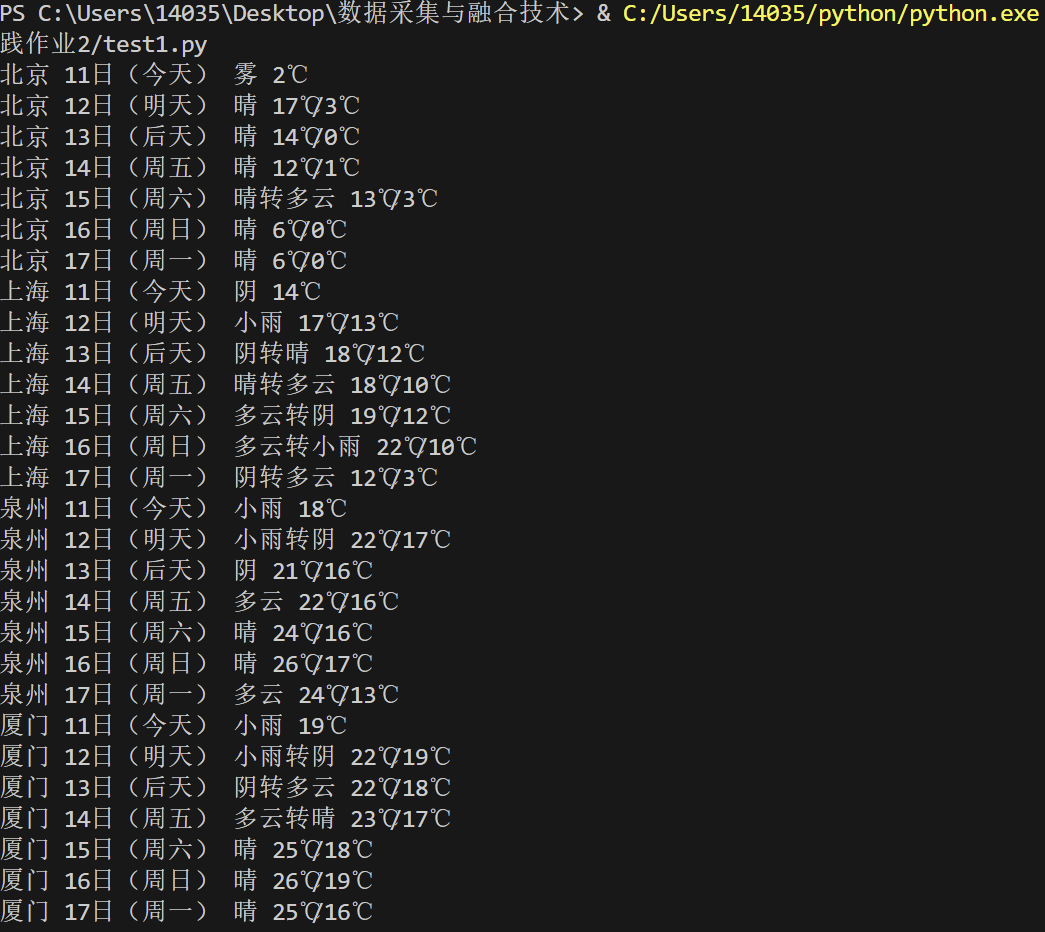

要求:在中国气象网(http://www.weather.com.cn)给定城市集的7日天气预报,并保存在数据库。

代码以及结果:

点击查看代码

from bs4 import BeautifulSoup

import urllib.request

import sqlite3

class WeatherDB:

def __init__(self):

self.con = None

self.cursor = None

def open_db(self):

"""打开数据库并初始化表结构"""

self.con = sqlite3.connect("weathers.db")

self.cursor = self.con.cursor()

self.cursor.execute("""

CREATE TABLE IF NOT EXISTS weathers (

wCity VARCHAR(16),

wDate VARCHAR(16),

wWeather VARCHAR(64),

wTemp VARCHAR(32),

PRIMARY KEY (wCity, wDate)

)

""")

self.cursor.execute("DELETE FROM weathers")

def close_db(self):

"""关闭数据库连接"""

if self.con:

self.con.commit()

self.con.close()

def insert_weather(self, city, date, weather, temp):

"""插入天气数据"""

try:

self.cursor.execute(

"INSERT INTO weathers VALUES (?, ?, ?, ?)",

(city, date, weather, temp)

)

except Exception as e:

print(f"插入数据失败: {e}")

def display_data(self):

"""显示所有天气数据"""

self.cursor.execute("SELECT * FROM weathers")

rows = self.cursor.fetchall()

header = "%-16s%-16s%-32s%-16s"

print(header % ("city", "date", "weather", "temp"))

for row in rows:

print(header % row)

class WeatherForecast:

def __init__(self):

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"

}

self.city_codes = {

"北京": "101010100",

"上海": "101020100",

"泉州": "101230501",

"厦门": "101230201"

}

self.db = WeatherDB()

def get_weather_data(self, city):

"""获取单个城市的天气数据"""

if city not in self.city_codes:

print(f"未找到城市编码: {city}")

return

url = f"http://www.weather.com.cn/weather/{self.city_codes[city]}.shtml"

try:

req = urllib.request.Request(url, headers=self.headers)

response = urllib.request.urlopen(req)

html = response.read().decode('utf-8', 'ignore')

soup = BeautifulSoup(html, 'lxml')

weather_items = soup.select("ul.t.clearfix li")

for item in weather_items:

self._parse_weather_item(city, item)

except Exception as e:

print(f"获取{city}天气数据失败: {e}")

def _parse_weather_item(self, city, item):

"""解析单个天气项"""

try:

date = item.select_one('h1').text

weather = item.select_one('p.wea').text

temp_tag = item.select_one('p.tem')

high_temp = temp_tag.select_one('span')

low_temp = temp_tag.select_one('i')

temp = f"{high_temp.text}/{low_temp.text}" if high_temp else low_temp.text

print(city, date, weather, temp)

self.db.insert_weather(city, date, weather, temp)

except Exception as e:

print(f"解析天气项失败: {e}")

def process_cities(self, cities):

"""处理多个城市的天气数据"""

self.db.open_db()

for city in cities:

self.get_weather_data(city)

self.db.display_data()

self.db.close_db()

if __name__ == "__main__":

forecast = WeatherForecast()

forecast.process_cities(["北京", "上海", "泉州", "厦门"])

print("数据获取完成")

心得体会:

通过将数据库操作与网络请求分离,不仅使逻辑更加清晰,也便于后续扩展。异常处理需要把握适度原则,既要保证程序健壮性又要避免过度包装。面对网页数据的不规则性,灵活的数据解析策略尤为重要,比如温度数据的多格式处理就体现了对真实场景的适应能力。

Gitee文件路径:https://gitee.com/tian-rongqi/tianzihao/blob/master/test2/1.py

- 作业2

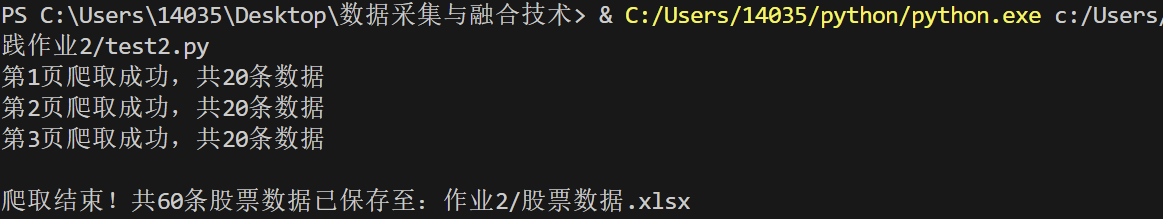

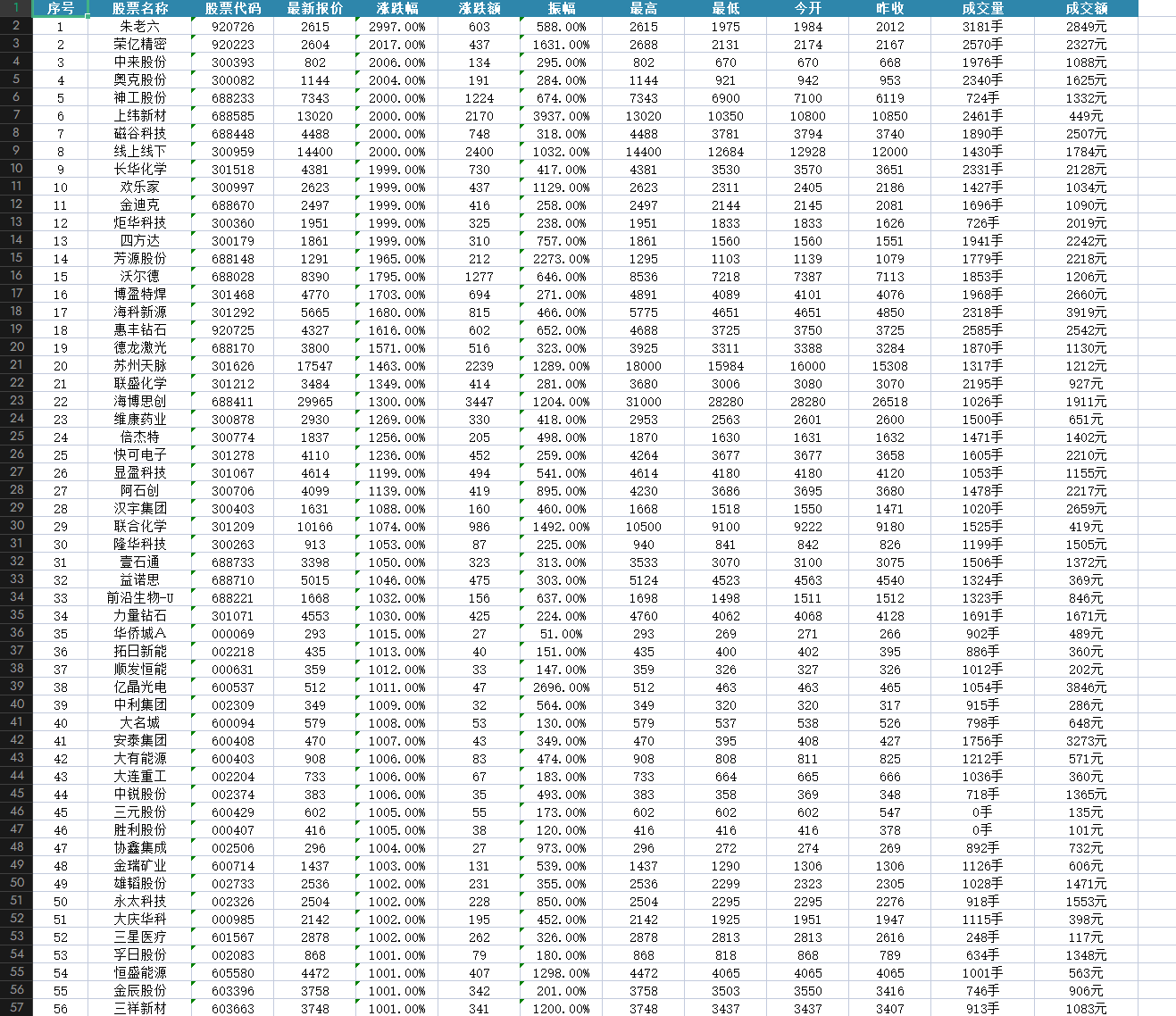

要求:用requests和json解析方法定向爬取股票相关信息,并存储在数据库中。

候选网站:东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock/

技巧:在谷歌浏览器中进入F12调试模式进行抓包,查找股票列表加载使用的url,并分析api返回的值,并根据所要求的参数可适当更改api的请求参数。根据URL可观察请求的参数f1、f2可获取不同的数值,根据情况可###删减请求的参数。

参考链接:https://zhuanlan.zhihu.com/p/50099084

代码如下:

点击查看代码

import requests

import json

import os

import time

from openpyxl import Workbook

from openpyxl.styles import Font, Alignment, PatternFill

from openpyxl.utils import get_column_letter

requests.packages.urllib3.disable_warnings()

API_URL = "https://push2.eastmoney.com/api/qt/clist/get"

EXCEL_SAVE_PATH = "作业2/股票数据.xlsx"

FIELD_MAPPING = {

"f12": "股票代码", "f14": "股票名称", "f2": "最新报价", "f3": "涨跌幅",

"f4": "涨跌额", "f7": "成交量", "f8": "成交额", "f15": "最高",

"f16": "最低", "f17": "今开", "f18": "昨收", "f23": "振幅"

}

EXCEL_HEADERS = ["序号", "股票名称", "股票代码", "最新报价", "涨跌幅", "涨跌额",

"振幅", "最高", "最低", "今开", "昨收", "成交量", "成交额"]

MY_COOKIE = "st_inirUrl=https%3A%2F%2Fwww.eastmoney.com%2F; st_asi=delete; qgqp_b_id=c82b4c52776ca917068c06efed2b0d9c; st_nvi=_6T45tlila7AZH7MkVcagf80c; rskey=DXk79VzBIWmFwUnRDUWpLK3BMeGYvRVp6QT093dgIL; gvi=91G3yXm99SxaenjvbyorK3987; st_pvi=82904229884786; st_psi=20251029152250326-113200301321-2418745882; st_sp=2025-10-29%2014%3A07%3A23; st_si=19338798169197; nid_create_time=1761718043978; gvi_create_time=1761718043978; st_sn=11; fullscreengg2=1; fullscreengg=1; nid=0e6db4cc9634e9e54ad55022890b8f8b; isoutside=0"

def format_stock_data(raw_data):

formatted = {}

for api_field, chinese_name in FIELD_MAPPING.items():

value = raw_data.get(api_field, 0)

if chinese_name == "涨跌幅":

formatted[chinese_name] = f"{value:.2f}%" if value != 0 else "0.00%"

elif chinese_name == "涨跌额":

formatted[chinese_name] = round(value, 2)

elif chinese_name == "振幅":

formatted[chinese_name] = f"{value:.2f}%" if value != 0 else "0.00%"

elif chinese_name == "成交量":

formatted[chinese_name] = f"{value/10000:.2f}万" if value >= 10000 else f"{value}手"

elif chinese_name == "成交额":

formatted[chinese_name] = f"{value/100000000:.2f}亿" if value >= 100000000 else f"{value}元"

elif chinese_name in ["最新报价", "最高", "最低", "今开", "昨收"]:

formatted[chinese_name] = round(value, 2)

else:

formatted[chinese_name] = value

return formatted

def create_excel():

wb = Workbook()

ws = wb.active

ws.title = "东方财富股票数据"

for col_idx, header in enumerate(EXCEL_HEADERS, 1):

cell = ws.cell(row=1, column=col_idx, value=header)

cell.font = Font(bold=True, color="FFFFFF")

cell.fill = PatternFill(start_color="2E86AB", end_color="2E86AB", fill_type="solid")

cell.alignment = Alignment(horizontal="center", vertical="center")

column_widths = [8, 15, 12, 12, 12, 12, 12, 12, 12, 12, 12, 15, 15]

for col_idx, width in enumerate(column_widths, 1):

ws.column_dimensions[get_column_letter(col_idx)].width = width

return wb, ws

def write_to_excel(ws, data, row_idx):

ws.cell(row=row_idx, column=1, value=row_idx - 1)

ws.cell(row=row_idx, column=2, value=data["股票名称"])

ws.cell(row=row_idx, column=3, value=data["股票代码"])

ws.cell(row=row_idx, column=4, value=data["最新报价"])

ws.cell(row=row_idx, column=5, value=data["涨跌幅"])

ws.cell(row=row_idx, column=6, value=data["涨跌额"])

ws.cell(row=row_idx, column=7, value=data["振幅"])

ws.cell(row=row_idx, column=8, value=data["最高"])

ws.cell(row=row_idx, column=9, value=data["最低"])

ws.cell(row=row_idx, column=10, value=data["今开"])

ws.cell(row=row_idx, column=11, value=data["昨收"])

ws.cell(row=row_idx, column=12, value=data["成交量"])

ws.cell(row=row_idx, column=13, value=data["成交额"])

for col_idx in range(1, len(EXCEL_HEADERS) + 1):

ws.cell(row=row_idx, column=col_idx).alignment = Alignment(horizontal="center", vertical="center")

def crawl_stock_data(page_num=1, page_size=20):

api_params = {

"np": 1,

"fltt": 1,

"invt": 2,

"fs": "m:0+t:6+f:!2,m:0+t:80+f:!2,m:1+t:2+f:!2,m:1+t:23+f:!2,m:0+t:81+s:262144+f:!2",

"fields": "f12,f13,f14,f1,f2,f4,f3,f152,f5,f6,f7,f15,f18,f16,f17,f10,f8,f9,f23",

"fid": "f3",

"pn": page_num,

"pz": page_size,

"po": 1,

"dect": 1,

"ut": "fa5fd1943c7b386f172d6893dbfba10b",

"wbp2u": "|0|0|0|web",

"_": int(time.time() * 1000)

}

basic_headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",

"Accept": "text/javascript, application/javascript, application/ecmascript, application/x-ecmascript, */*; q=0.01",

"Referer": "https://quote.eastmoney.com/",

"Cookie": MY_COOKIE

}

try:

response = requests.get(

url=API_URL,

params=api_params,

headers=basic_headers,

timeout=10,

verify=False

)

response.raise_for_status()

stock_json = response.json()

stock_list = stock_json.get("data", {}).get("diff", [])

if not stock_list:

print(f"第{page_num}页无股票数据")

return []

formatted_stocks = [format_stock_data(stock) for stock in stock_list]

print(f"第{page_num}页爬取成功,共{len(formatted_stocks)}条数据")

return formatted_stocks

except Exception as e:

print(f"第{page_num}页爬取失败:{str(e)[:80]}")

return []

def main(total_pages=3):

os.makedirs(os.path.dirname(EXCEL_SAVE_PATH), exist_ok=True)

wb, ws = create_excel()

current_row = 2

for page in range(1, total_pages + 1):

stock_data_list = crawl_stock_data(page_num=page, page_size=20)

if not stock_data_list:

break

for stock in stock_data_list:

write_to_excel(ws, stock, current_row)

current_row += 1

wb.save(EXCEL_SAVE_PATH)

total_data_count = current_row - 2

print(f"\n爬取结束!共{total_data_count}条股票数据已保存至:{EXCEL_SAVE_PATH}")

if __name__ == "__main__":

main(total_pages=3)

保存在表格内容截图:

心得体会:

通过这个股票数据爬虫项目,我深刻体会到数据获取与展示的完整流程。在对接东方财富API时,字段映射和数据处理是关键,特别是成交量和成交额的单位转换需要精准处理。使用openpyxl库进行Excel格式化输出让我学会了如何将原始数据转化为美观易读的报表,而异常处理机制则保证了爬虫的稳定性。

Gitee文件路径:https://gitee.com/tian-rongqi/tianzihao/blob/master/test2/2.py

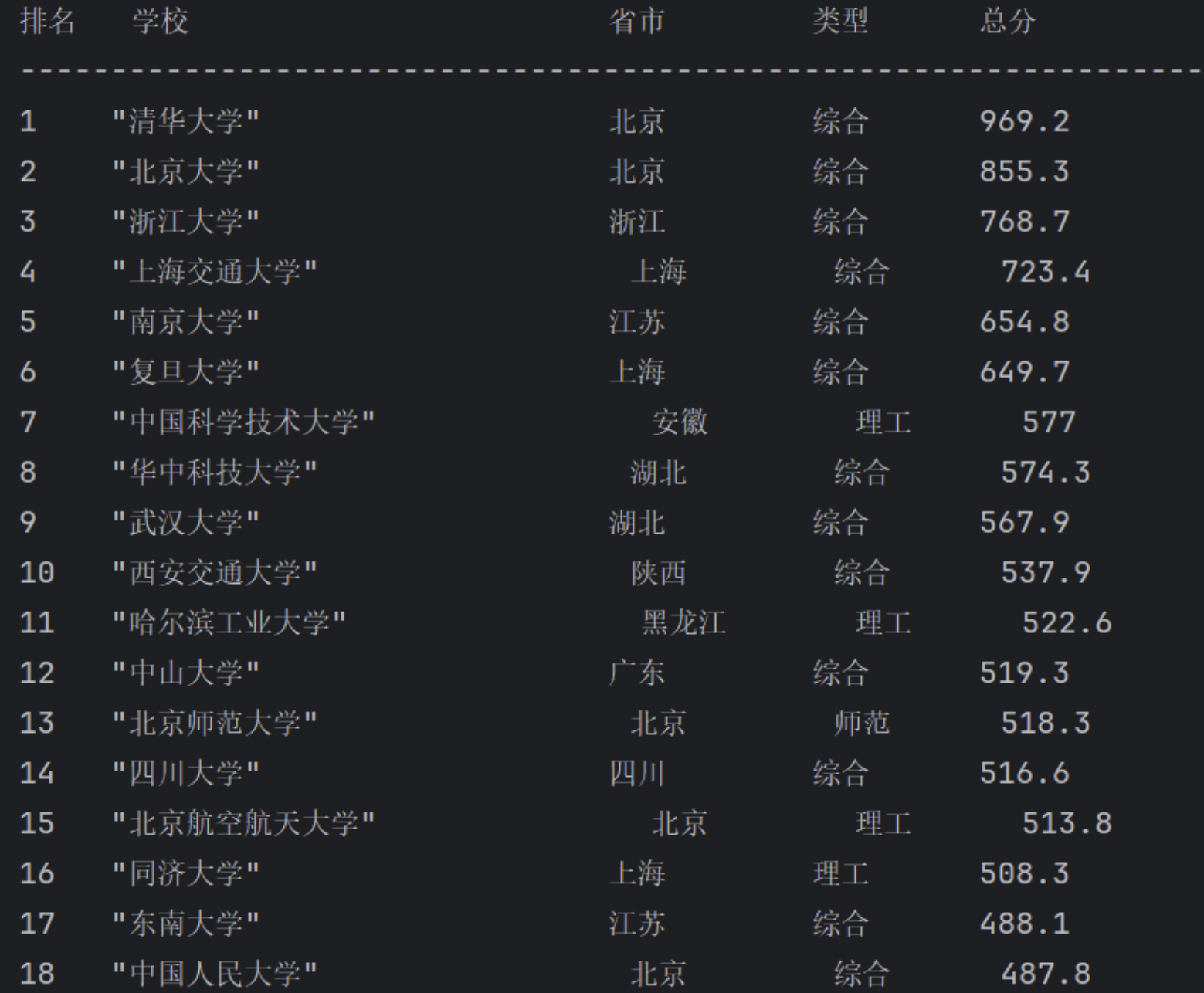

- 作业3

要求:爬取中国大学2021主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存储在数据库中,同时将浏览器F12调试分析的过程录制Gif加入至博客中。

Gif录制:

代码如下:

点击查看代码

# -*- coding: utf-8 -*-

import requests

import re

import sqlite3

class CollegeRankingCrawler:

def __init__(self):

self.url = 'https://www.shanghairanking.cn/_nuxt/static/1762224972/rankings/payload.js'

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"

}

self.keyset, self.valueset = self._init_mapping_data()

self.mapping = dict(zip(self.keyset, map(str, self.valueset)))

def _init_mapping_data(self):

"""初始化映射数据"""

keys = 'a,b,c,d,e,f,g,h,i,j,k,l,m,n,o,p,q,r,s,t,u,v,w,x,y,z,A,B,C,D,E,F,G,H,I,J,K,L,M,N,O,P,Q,R,S,T,U,V,W,X,Y,Z,_,$,aa,ab,ac,ad,ae,af,ag,ah,ai,aj,ak,al,am,an,ao,ap,aq,ar,as,at,au,av,aw,ax,ay,az,aA,aB,aC,aD,aE,aF,aG,aH,aI,aJ,aK,aL,aM,aN,aO,aP,aQ,aR,aS,aT,aU,aV,aW,aX,aY,aZ,a_,a$,ba,bb,bc,bd,be,bf,bg,bh,bi,bj,bk,bl,bm,bn,bo,bp,bq,br,bs,bt,bu,bv,bw,bx,by,bz,bA,bB,bC,bD,bE,bF,bG,bH,bI,bJ,bK,bL,bM,bN,bO,bP,bQ,bR,bS,bT,bU,bV,bW,bX,bY,bZ,b_,b$,ca,cb,cc,cd,ce,cf,cg,ch,ci,cj,ck,cl,cm,cn,co,cp,cq,cr,cs,ct,cu,cv,cw,cx,cy,cz,cA,cB,cC,cD,cE,cF,cG,cH,cI,cJ,cK,cL,cM,cN,cO,cP,cQ,cR,cS,cT,cU,cV,cW,cX,cY,cZ,c_,c$,da,db,dc,dd,de,df,dg,dh,di,dj,dk,dl,dm,dn,do0,dp,dq,dr,ds,dt,du,dv,dw,dx,dy,dz,dA,dB,dC,dD,dE,dF,dG,dH,dI,dJ,dK,dL,dM,dN,dO,dP,dQ,dR,dS,dT,dU,dV,dW,dX,dY,dZ,d_,d$,ea,eb,ec,ed,ee,ef,eg,eh,ei,ej,ek,el,em,en,eo,ep,eq,er,es,et,eu,ev,ew,ex,ey,ez,eA,eB,eC,eD,eE,eF,eG,eH,eI,eJ,eK,eL,eM,eN,eO,eP,eQ,eR,eS,eT,eU,eV,eW,eX,eY,eZ,e_,e$,fa,fb,fc,fd,fe,ff,fg,fh,fi,fj,fk,fl,fm,fn,fo,fp,fq,fr,fs,ft,fu,fv,fw,fx,fy,fz,fA,fB,fC,fD,fE,fF,fG,fH,fI,fJ,fK,fL,fM,fN,fO,fP,fQ,fR,fS,fT,fU,fV,fW,fX,fY,fZ,f_,f$,ga,gb,gc,gd,ge,gf,gg,gh,gi,gj,gk,gl,gm,gn,go,gp,gq,gr,gs,gt,gu,gv,gw,gx,gy,gz,gA,gB,gC,gD,gE,gF,gG,gH,gI,gJ,gK,gL,gM,gN,gO,gP,gQ,gR,gS,gT,gU,gV,gW,gX,gY,gZ,g_,g$,ha,hb,hc,hd,he,hf,hg,hh,hi,hj,hk,hl,hm,hn,ho,hp,hq,hr,hs,ht,hu,hv,hw,hx,hy,hz,hA,hB,hC,hD,hE,hF,hG,hH,hI,hJ,hK,hL,hM,hN,hO,hP,hQ,hR,hS,hT,hU,hV,hW,hX,hY,hZ,h_,h$,ia,ib,ic,id,ie,if0,ig,ih,ii,ij,ik,il,im,in0,io,ip,iq,ir,is,it,iu,iv,iw,ix,iy,iz,iA,iB,iC,iD,iE,iF,iG,iH,iI,iJ,iK,iL,iM,iN,iO,iP,iQ,iR,iS,iT,iU,iV,iW,iX,iY,iZ,i_,i$,ja,jb,jc,jd,je,jf,jg,jh,ji,jj,jk,jl,jm,jn,jo,jp,jq,jr,js,jt,ju,jv,jw,jx,jy,jz,jA,jB,jC,jD,jE,jF,jG,jH,jI,jJ,jK,jL,jM,jN,jO,jP,jQ,jR,jS,jT,jU,jV,jW,jX,jY,jZ,j_,j$,ka,kb,kc,kd,ke,kf,kg,kh,ki,kj,kk,kl,km,kn,ko,kp,kq,kr,ks,kt,ku,kv,kw,kx,ky,kz,kA,kB,kC,kD,kE,kF,kG,kH,kI,kJ,kK,kL,kM,kN,kO,kP,kQ,kR,kS,kT,kU,kV,kW,kX,kY,kZ,k_,k$,la,lb,lc,ld,le,lf,lg,lh,li,lj,lk,ll,lm,ln,lo,lp,lq,lr,ls,lt,lu,lv,lw,lx,ly,lz,lA,lB,lC,lD,lE,lF,lG,lH,lI,lJ,lK,lL,lM,lN,lO,lP,lQ,lR,lS,lT,lU,lV,lW,lX,lY,lZ,l_,l$,ma,mb,mc,md,me,mf,mg,mh,mi,mj,mk,ml,mm,mn,mo,mp,mq,mr,ms,mt,mu,mv,mw,mx,my,mz,mA,mB,mC,mD,mE,mF,mG,mH,mI,mJ,mK,mL,mM,mN,mO,mP,mQ,mR,mS,mT,mU,mV,mW,mX,mY,mZ,m_,m$,na,nb,nc,nd,ne,nf,ng,nh,ni,nj,nk,nl,nm,nn,no,np,nq,nr,ns,nt,nu,nv,nw,nx,ny,nz,nA,nB,nC,nD,nE,nF,nG,nH,nI,nJ,nK,nL,nM,nN,nO,nP,nQ,nR,nS,nT,nU,nV,nW,nX,nY,nZ,n_,n$,oa,ob,oc,od,oe,of,og,oh,oi,oj,ok,ol,om,on,oo,op,oq,or,os,ot,ou,ov,ow,ox,oy,oz,oA,oB,oC,oD,oE,oF,oG,oH,oI,oJ,oK,oL,oM,oN,oO,oP,oQ,oR,oS,oT,oU,oV,oW,oX,oY,oZ,o_,o$,pa,pb,pc,pd,pe,pf,pg,ph,pi,pj,pk,pl,pm,pn,po,pp,pq,pr,ps,pt,pu,pv,pw,px,py,pz,pA,pB,pC,pD,pE'.split(',')

values = ["",'false','null',0,"理工","综合",'true',"师范","双一流","211","江苏","985","农业","山东","河南","河北","北京","辽宁","陕西","四川","广东","湖北","湖南","浙江","安徽","江西",1,"黑龙江","吉林","上海",2,"福建","山西","云南","广西","贵州","甘肃","内蒙古","重庆","天津","新疆","467","496","2025,2024,2023,2022,2021,2020","林业","5.8","533","2023-01-05T00:00:00+08:00","23.1","7.3","海南","37.9","28.0","4.3","12.1","16.8","11.7","3.7","4.6","297","397","21.8","32.2","16.6","37.6","24.6","13.6","13.9","3.3","5.2","8.1","3.9","5.1","5.6","5.4","2.6","162",93.5,89.4,"宁夏","青海","西藏",7,"11.3","35.2","9.5","35.0","32.7","23.7","33.2","9.2","30.6","8.5","22.7","26.3","8.0","10.9","26.0","3.2","6.8","5.7","13.8","6.5","5.5","5.0","13.2","13.3","15.6","18.3","3.0","21.3","12.0","22.8","3.6","3.4","3.5","95","109","117","129","138","147","159","185","191","193","196","213","232","237","240","267","275","301","309","314","318","332","334","339","341","354","365","371","378","384","388","403","416","418","420","423","430","438","444","449","452","457","461","465","474","477","485","487","491","501","508","513","518","522","528",83.4,"538","555",2021,11,14,10,"12.8","42.9","18.8","36.6","4.8","40.0","37.7","11.9","45.2","31.8","10.4","40.3","11.2","30.9","37.8","16.1","19.7","11.1","23.8","29.1","0.2","24.0","27.3","24.9","39.5","20.5","23.4","9.0","4.1","25.6","12.9","6.4","18.0","24.2","7.4","29.7","26.5","22.6","29.9","28.6","10.1","16.2","19.4","19.5","18.6","27.4","17.1","16.0","27.6","7.9","28.7","19.3","29.5","38.2","8.9","3.8","15.7","13.5","1.7","16.9","33.4","132.7","15.2","8.7","20.3","5.3","0.3","4.0","17.4","2.7","160","161","164","165","166","167","168",130.6,105.5,2025,"学生、家长、高校管理人员、高教研究人员等","中国大学排名(主榜)",25,13,12,"全部","1","88.0",5,"2","36.1","25.9","3","34.3","4","35.5","21.6","39.2","5","10.8","4.9","30.4","6","46.2","7","0.8","42.1","8","32.1","22.9","31.3","9","43.0","25.7","10","34.5","10.0","26.2","46.5","11","47.0","33.5","35.8","25.8","12","46.7","13.7","31.4","33.3","13","34.8","42.3","13.4","29.4","14","30.7","15","42.6","26.7","16","12.5","17","12.4","44.5","44.8","18","10.3","15.8","19","32.3","19.2","20","21","28.8","9.6","22","45.0","23","30.8","16.7","16.3","24","25","32.4","26","9.4","27","33.7","18.5","21.9","28","30.2","31.0","16.4","29","34.4","41.2","2.9","30","38.4","6.6","31","4.4","17.0","32","26.4","33","6.1","34","38.8","17.7","35","36","38.1","11.5","14.9","37","14.3","18.9","38","13.0","39","27.8","33.8","3.1","40","41","28.9","42","28.5","38.0","34.0","1.5","43","15.1","44","31.2","120.0","14.4","45","149.8","7.5","46","47","38.6","48","49","25.2","50","19.8","51","5.9","6.7","52","4.2","53","1.6","54","55","20.0","56","39.8","18.1","57","35.6","58","10.5","14.1","59","8.2","60","140.8","12.6","61","62","17.6","63","64","1.1","65","20.9","66","67","68","2.1","69","123.9","27.1","70","25.5","37.4","71","72","73","74","75","76","27.9","7.0","77","78","79","80","81","82","83","84","1.4","85","86","87","88","89","90","91","92","93","109.0","94",235.7,"97","98","99","100","101","102","103","104","105","106","107","108",223.8,"111","112","113","114","115","116",215.5,"119","120","121","122","123","124","125","126","127","128",206.7,"131","132","133","134","135","136","137",201,"140","141","142","143","144","145","146",194.6,"149","150","151","152","153","154","155","156","157","158",183.3,"169","170","171","172","173","174","175","176","177","178","179","180","181","182","183","184",169.6,"187","188","189","190",168.1,167,"195",165.5,"198","199","200","201","202","203","204","205","206","207","208","209","210","212",160.5,"215","216","217","218","219","220","221","222","223","224","225","226","227","228","229","230","231",153.3,"234","235","236",150.8,"239",149.9,"242","243","244","245","246","247","248","249","250","251","252","253","254","255","256","257","258","259","260","261","262","263","264","265","266",139.7,"269","270","271","272","273","274",137,"277","278","279","280","281","282","283","284","285","286","287","288","289","290","291","292","293","294","295","296","300",130.2,"303","304","305","306","307","308",128.4,"311","312","313",125.9,"316","317",124.9,"320","321","Wuyi University","322","323","324","325","326","327","328","329","330","331",120.9,120.8,"Taizhou University","336","337","338",119.9,119.7,"343","344","345","346","347","348","349","350","351","352","353",115.4,"356","357","358","359","360","361","362","363","364",112.6,"367","368","369","370",111,"373","374","375","376","377",109.4,"380","381","382","383",107.6,"386","387",107.1,"390","391","392","393","394","395","396","400","401","402",104.7,"405","406","407","408","409","410","411","412","413","414","415",101.2,101.1,100.9,"422",100.3,"425","426","427","428","429",99,"432","433","434","435","436","437",97.6,"440","441","442","443",96.5,"446","447","448",95.8,"451",95.2,"454","455","456",94.8,"459","460",94.3,"463","464",93.6,"472","473",92.3,"476",91.7,"479","480","481","482","483","484",90.7,90.6,"489","490",90.2,"493","494","495",89.3,"503","504","505","506","507",87.4,"510","511","512",86.8,"515","516","517",86.2,"520","521",85.8,"524","525","526","527",84.6,"530","531","532","537",82.8,"540","541","542","543","544","545","546","547","548","549","550","551","552","553","554",78.1,"557","558","559","560","561","562","563","564","565","566","567","568","569","570","571","572","573","574","575","576","577","578","579","580","581","582",4,"2025-04-15T00:00:00+08:00","logo\u002Fannual\u002Fbcur\u002F2025.png","软科中国大学排名于2015年首次发布,多年来以专业、客观、透明的优势赢得了高等教育领域内外的广泛关注和认可,已经成为具有重要社会影响力和权威参考价值的中国大学排名领先品牌。软科中国大学排名以服务中国高等教育发展和进步为导向,采用数百项指标变量对中国大学进行全方位、分类别、监测式评价,向学生、家长和全社会提供及时、可靠、丰富的中国高校可比信息。",2024,2023,2022,15,2020,2019,2018,2017,2016,2015]

return keys, values

def init_database(self):

"""初始化数据库"""

with sqlite3.connect('college_data.db') as conn:

conn.execute('''

CREATE TABLE IF NOT EXISTS college_info (

id INTEGER PRIMARY KEY AUTOINCREMENT,

rank INTEGER NOT NULL,

name VARCHAR(50) NOT NULL,

province VARCHAR(20),

category VARCHAR(20),

score DECIMAL(10,1),

UNIQUE(name)

)

''')

def save_to_db(self, data):

"""保存数据到数据库"""

with sqlite3.connect('college_data.db') as conn:

conn.execute(

'INSERT OR IGNORE INTO college_info (rank, name, province, category, score) VALUES (?, ?, ?, ?, ?)',

data

)

def show_db_data(self):

"""显示数据库数据"""

print("存储在数据库的数据:")

with sqlite3.connect('college_data.db') as conn:

cursor = conn.cursor()

cursor.execute('SELECT rank, name, province, category, score FROM college_info ORDER BY rank')

rows = cursor.fetchall()

self._print_table(rows)

def _print_table(self, rows=None, data=None):

"""打印表格"""

header = f"{'排名':<5}{'学校':<25}{'省市':<10}{'类型':<8}{'总分':<8}"

print(header)

print("-" * 65)

if rows:

for row in rows:

print(f"{row[0]:<5}{row[1]:<25}{row[2]:<10}{row[3]:<8}{row[4]:<8}")

elif data:

for info in data:

print(f"{info['排名']:<5}{info['学校']:<25}{info['省市']:<10}{info['类型']:<8}{info['总分']:<8}")

def transform_value(self, value):

"""转换映射值"""

key = value.strip().strip('"')

return self.mapping.get(key, value)

def crawl_data(self):

"""爬取数据"""

response = requests.get(url=self.url, headers=self.headers)

page_text = response.text

# 提取数据

patterns = {

'rank': r"ranking:(.*?),rankChange:",

'name': r"univNameCn:(.*?),univNameEn:",

'province': r"province:(.*?),score:",

'category': r"univCategory:(.*?),province:",

'score': r"score:(.*?),ranking:"

}

extracted_data = {}

for key, pattern in patterns.items():

matches = re.findall(pattern, page_text)

extracted_data[key] = [self.transform_value(match) for match in matches]

return extracted_data

def process_data(self, data):

"""处理数据"""

college_info = []

for i in range(len(data['rank'])):

item = (data['rank'][i], data['name'][i], data['province'][i],

data['category'][i], data['score'][i])

college_info.append({

"排名": item[0],

"学校": item[1],

"省市": item[2],

"类型": item[3],

"总分": item[4]

})

self.save_to_db(item)

return college_info

def run(self):

"""主运行方法"""

self.init_database()

print("正在爬取大学排名数据...")

extracted_data = self.crawl_data()

college_info = self.process_data(extracted_data)

print("\n爬取结果:")

self._print_table(data=college_info)

self.show_db_data()

print(f"\n共处理{len(college_info)}条数据")

if __name__ == "__main__":

crawler = CollegeRankingCrawler()

crawler.run()

心得体会:

面对网页中经过混淆的JavaScript数据,通过建立完整的映射字典成功还原了原始信息。采用面向对象的设计模式将数据库操作、数据爬取和结果显示等功能模块化,大大提升了代码的可读性和可维护性。

Gitee文件路径:https://gitee.com/tian-rongqi/tianzihao/blob/master/test2/3.py

浙公网安备 33010602011771号

浙公网安备 33010602011771号