paddle框架下图像分割的教程

1,图像分割

我以为图像分割是个细分领域,没想到,它是一个非常笼统的概念,所谓图像分割就是把图像上的每一个像素都找到对应的类别,但是具体应用起来又有所不同,语义分割,是指你要找到图像上的路,人,车等类别,而实例分割,是要找到图像上这一个人和另一个人,这一辆车和另一辆车,跑了几个例子稍微记录一下笔记。

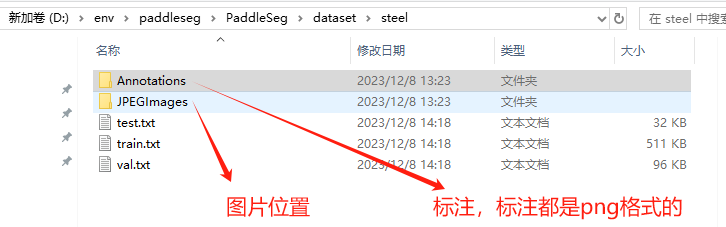

2,paddleseg中的钢板表面划痕检测

这个是语义分割哈,不是实例分割,首先这个教程我用的数据是官方给的,网友整理过的,是一个钢材表面瑕疵的数据集,既不是coco也不是voc格式的,

标注是原图上的一个mask,mask填充的是0,如果有需要分割的目标,那就分别在对应的位置上填充1,2,3等像素值,最大可以填充254,所以记录标注的那些png图片,也是黑糊糊看不清的

数据就位以后开启训练等流程简记作如下,暂时这样记录,后面还要出一个自定义数据版的教程,如果效果还不错的话:

数据集切分:

python tools/data/split_dataset_list.py dataset/steel JPEGImages Annotations --split 0.8 0.15 0.05 --format jpg png

修改配置的yml文件

开启训练

python tools/train.py --config configs\pp_liteseg\pp_liteseg_stdc1_camvid_960x720_10k.yml --save_interval 500 --do_eval --save_dir output

模型评估:

python tools/val.py --config configs\pp_liteseg\pp_liteseg_stdc1_camvid_960x720_10k.yml --model_path output/best_model/model.pdparams

这个是针对测试集进行评估的哈

模型预测看看:

python tools/predict.py --config configs\pp_liteseg\pp_liteseg_stdc1_camvid_960x720_10k.yml --model_path output/best_model/model.pdparams --image_path dataset\steel\JPEGImages\1917c9f34.jpg --save_dir output/result

模型导出:

python tools/export.py --config configs\pp_liteseg\pp_liteseg_stdc1_camvid_960x720_10k.yml --model_path output/best_model/model.pdparams --save_dir output/infer_model --input_shape 1 3 800 128

注意,inference模型需要加上尺寸信息才能成功转化为onnx

转onnx

paddle2onnx --model_dir output/infer_model --model_filename model.pdmodel --params_filename model.pdiparams --opset_version 11 --save_file output/infer_model/output.onnx

实践证明,图像分割效果还是可以的,接下来需要自己标注少量数据薅一下子了,

安装EISeg标注工具

首先安装paddleSeg然后进入eiseg文件夹,执行pip install -r requirements.txt

运行:同文件夹中执行python -m eiseg

3,paddleDetection中的solov2实例分割

solov2官方没有多少教程,全靠女装大佬的solov2教程哈,参考链接:https://aistudio.baidu.com/projectdetail/985880?channelType=0&channel=0

他跑的数据集是官方的一个数据集,这个数据集说起来就厉害了,首先它大数据集和验证集加起来将近12w张图了吧,其次它内里标了segmentation(目标边缘)和bbox(目标外框),外框至于用没用我不大清楚,但是coco里有哈,拿到数据集以后咱就要开始修改参数跑一个模型验证一下吧,,得,一看时间,3060的显卡16天!初步验证的话,咱还是想办法需要减少一些数据量吧,因为没有自行整理数据跑solov2的教程,所以咱也只能尝试分析一下数据看看里面都有些啥内容

数据分析:

##从coco数据集中解析一张标注好的图片看一看 import json import cv2 with open("./instances_train2017.json","r") as f: data = json.load(f) keys = list(data.keys()) ##看看json里的数据结构 for i in keys: print(i,len(data[i])) ## # info 6 # licenses 8 # images 2000 # annotations 13939 # categories 80 print(data['categories']) # [{'supercategory': 'person', 'id': 1, 'name': 'person'}, # {'supercategory': 'vehicle', 'id': 2, 'name': 'bicycle'}, # {'supercategory': 'vehicle', 'id': 3, 'name': 'car'}, # {'supercategory': 'vehicle', 'id': 4, 'name': 'motorcycle'}, # {'supercategory': 'vehicle', 'id': 5, 'name': 'airplane'}, # {'supercategory': 'vehicle', 'id': 6, 'name': 'bus'}, # {'supercategory': 'vehicle', 'id': 7, 'name': 'train'}, # {'supercategory': 'vehicle', 'id': 8, 'name': 'truck'}, # {'supercategory': 'vehicle', 'id': 9, 'name': 'boat'}, # {'supercategory': 'outdoor', 'id': 10, 'name': 'traffic light'}, # {'supercategory': 'outdoor', 'id': 11, 'name': 'fire hydrant'}, # {'supercategory': 'outdoor', 'id': 13, 'name': 'stop sign'}, # {'supercategory': 'outdoor', 'id': 14, 'name': 'parking meter'}, # {'supercategory': 'outdoor', 'id': 15, 'name': 'bench'}, # {'supercategory': 'animal', 'id': 16, 'name': 'bird'}, # {'supercategory': 'animal', 'id': 17, 'name': 'cat'}, # {'supercategory': 'animal', 'id': 18, 'name': 'dog'}, # {'supercategory': 'animal', 'id': 19, 'name': 'horse'}, # {'supercategory': 'animal', 'id': 20, 'name': 'sheep'}, # {'supercategory': 'animal', 'id': 21, 'name': 'cow'}, # {'supercategory': 'animal', 'id': 22, 'name': 'elephant'}, # {'supercategory': 'animal', 'id': 23, 'name': 'bear'}, # {'supercategory': 'animal', 'id': 24, 'name': 'zebra'}, # {'supercategory': 'animal', 'id': 25, 'name': 'giraffe'}, # {'supercategory': 'accessory', 'id': 27, 'name': 'backpack'}, # {'supercategory': 'accessory', 'id': 28, 'name': 'umbrella'}, # {'supercategory': 'accessory', 'id': 31, 'name': 'handbag'}, # {'supercategory': 'accessory', 'id': 32, 'name': 'tie'}, # {'supercategory': 'accessory', 'id': 33, 'name': 'suitcase'}, # {'supercategory': 'sports', 'id': 34, 'name': 'frisbee'}, # {'supercategory': 'sports', 'id': 35, 'name': 'skis'}, # {'supercategory': 'sports', 'id': 36, 'name': 'snowboard'}, # {'supercategory': 'sports', 'id': 37, 'name': 'sports ball'}, # {'supercategory': 'sports', 'id': 38, 'name': 'kite'}, # {'supercategory': 'sports', 'id': 39, 'name': 'baseball bat'}, # {'supercategory': 'sports', 'id': 40, 'name': 'baseball glove'}, # {'supercategory': 'sports', 'id': 41, 'name': 'skateboard'}, # {'supercategory': 'sports', 'id': 42, 'name': 'surfboard'}, # {'supercategory': 'sports', 'id': 43, 'name': 'tennis racket'}, ] #这之类。大约80个 ##看看图片格式啥样 example_images = data['images'][0] print(example_images) ##{'license': 3, 'file_name': '000000391895.jpg', 'coco_url': # 'http://images.cocodataset.org/train2017/000000391895.jpg', # 'height': 360, 'width': 640, 'date_captured': '2013-11-14 11:18:45', # 'flickr_url': 'http://farm9.staticflickr.com/8186/8119368305_4e622c8349_z.jpg', 'id': 391895} ##看看标注啥样 print(data['annotations'][0]) ##{'segmentation': [[321.02, 321.0, 314.25, 307.99, 307.49, 293.94, 300.2, 286.14, 290.84, 277.81, 285.11, 276.25, 267.94, 277.81, 256.49, 279.89, 244.52, 281.97, 227.35, 287.18, 192.49, 290.3, 168.55, 289.26, 142.53, 287.18, 121.72, 293.42, 105.07, 303.83, 94.14, 313.2, 86.33, 326.73, 84.25, 339.22, 76.97, 343.9, 67.6, 345.46, 61.87, 350.66, 69.16, 360.03, 77.49, 360.03, 93.62, 358.99, 105.07, 356.91, 110.27, 351.7, 117.55, 353.79, 121.2, 352.74, 132.64, 361.07, 139.41, 367.32, 145.89, 373.77, 156.05, 374.5, 160.41, 370.14, 167.67, 367.96, 168.39, 370.87, 169.84, 362.88, 166.94, 356.35, 177.83, 353.45, 190.89, 353.45, 209.54, 358.32, 224.96, 360.09, 240.82, 361.85, 258.45, 364.49, 267.43, 374.29, 275.14, 377.71, 293.14, 379.43, 300.86, 370.86, 303.43, 358.86, 312.0, 356.29, 326.57, 361.43, 341.14, 365.71, 344.57, 369.14, 358.29, 370.86, 358.29, 364.0, 355.71, 360.57, 342.86, 348.57, 334.29, 340.0, 320.57, 322.86]], # 'area': 18234.62355, 'iscrowd': 0, # 'image_id': 74, 'bbox': [61.87, 276.25, 296.42, 103.18], 'category_id': 18, 'id': 1774} ##找张图片画上瞧一瞧 example_images = data['images'][0] image_id = example_images['id'] image_name ="D:\env\paddledetection2\PaddleDetection-develop\dataset\coco\\train2017\\"+example_images['file_name'] img_data = cv2.imread(image_name) color_list = [(0, 0, 0),(255,0,0,),(0,0,255),(0,255,0)] idx= 0 for anno in data['annotations']: if anno['image_id'] == image_id: category_id = anno['category_id'] print("find seg one",category_id,anno) bbox = anno['bbox'] x1 = int(bbox[0]) y1=int(bbox[1]) w=int(bbox[2]) h=int(bbox[3]) cv2.rectangle(img_data, (x1, y1), (x1+w, y1+h), color_list[idx], 2) segmentation = anno['segmentation'][0] for i in range(0,len(segmentation),2): x = int(segmentation[i]) y= int(segmentation[i+1]) # print("image",image['id']) # imageid_list.append(image['id']) cv2.circle(img_data, (x, y), 1, color_list[idx], -1) idx+=1 cv2.imwrite("justfortest.jpg",img_data)

因为数据实在太大了,计划截取2w张出来跑个demo,这是脚本

import json with open("dataset/coco/annotations/instances_train2017.json","r") as f: data = json.load(f) keys = list(data.keys()) # for i in keys: # print(i,len(data[i])) new_images = data['images'][:2000] imageid_list = [] for image in new_images: print("image",image['id']) imageid_list.append(image['id']) ##{'license': 3, 'file_name': '000000391895.jpg', 'coco_url': # 'http://images.cocodataset.org/train2017/000000391895.jpg', # 'height': 360, 'width': 640, 'date_captured': '2013-11-14 11:18:45', # 'flickr_url': 'http://farm9.staticflickr.com/8186/8119368305_4e622c8349_z.jpg', 'id': 391895} new_seg_list = [] idx = 0 for anno in data['annotations']: if anno['image_id'] in imageid_list: print("anno id",anno['id']) new_seg_list.append(anno) idx+=1 else: continue ##{'segmentation': [[321.02, 321.0, 314.25, 307.99, 307.49, 293.94, 300.2, 286.14, 290.84, 277.81, 285.11, 276.25, 267.94, 277.81, 256.49, 279.89, 244.52, 281.97, 227.35, 287.18, 192.49, 290.3, 168.55, 289.26, 142.53, 287.18, 121.72, 293.42, 105.07, 303.83, 94.14, 313.2, 86.33, 326.73, 84.25, 339.22, 76.97, 343.9, 67.6, 345.46, 61.87, 350.66, 69.16, 360.03, 77.49, 360.03, 93.62, 358.99, 105.07, 356.91, 110.27, 351.7, 117.55, 353.79, 121.2, 352.74, 132.64, 361.07, 139.41, 367.32, 145.89, 373.77, 156.05, 374.5, 160.41, 370.14, 167.67, 367.96, 168.39, 370.87, 169.84, 362.88, 166.94, 356.35, 177.83, 353.45, 190.89, 353.45, 209.54, 358.32, 224.96, 360.09, 240.82, 361.85, 258.45, 364.49, 267.43, 374.29, 275.14, 377.71, 293.14, 379.43, 300.86, 370.86, 303.43, 358.86, 312.0, 356.29, 326.57, 361.43, 341.14, 365.71, 344.57, 369.14, 358.29, 370.86, 358.29, 364.0, 355.71, 360.57, 342.86, 348.57, 334.29, 340.0, 320.57, 322.86]], # 'area': 18234.62355, 'iscrowd': 0, # 'image_id': 74, 'bbox': [61.87, 276.25, 296.42, 103.18], 'category_id': 18, 'id': 1774} data['images'] = new_images data['annotations'] = new_seg_list json_str = json.dumps(data) with open("train_test.json","w") as f: f.write(json_str)

修改配置文件开启训练因为我要跑的是solov2_r50_fpn_3x_coco.yml,所以修改其中的base_lr: 0.005,数据集指定文件夹基本不变:coco_instance.yml,其余的改一下soloreader里面的batch_size为1,估计是比较费计算,batch_size是2的话根本跑不起来

启动训练:

python tools/train.py -c configs/solov2/solov2_r50_fpn_3x_coco.yml --eval

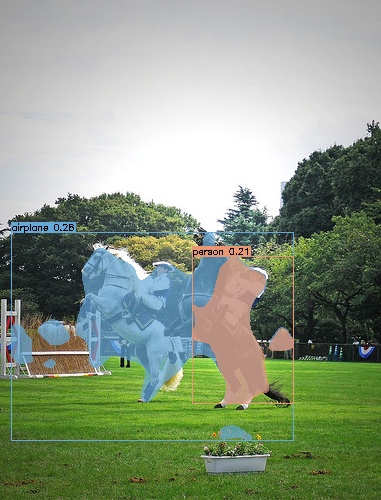

推理一张图看看:

python tools/infer.py -c configs/solov2/solov2_r50_fpn_3x_coco.yml --infer_img=dataset\coco\train2017\000000000049.jpg --output_dir=output/result --draw_threshold=0.2 -o weights=output/best_model.pdparams --use_vdl=True

效果图,感觉还行,毕竟只是我2w张图,4-5个小时的训练结果:

转inference模型

python tools/export_model.py -c configs/solov2/solov2_r50_fpn_3x_coco.yml --output_dir=./inference_model -o weights=output\best_model.pdparams

inference推理

python deploy/python/infer.py --model_dir=inference_model\solov2_r50_fpn_3x_coco --image_file="D:\env\paddledetection2\PaddleDetection-develop\dataset\coco\val_test\seg_1019.png" --device=gpu --output_dir=output/result

浙公网安备 33010602011771号

浙公网安备 33010602011771号