dynamic rendering

Summary:

1.

Google has to process JavaScript multiple times in order for it to fully understand the content in it. This process is known as rendering.

2.

Ironically, JavaScript development and SEO are often at odds with each other. JavaScript makes websites fun and interesting to use, while SEO makes them available for people to find in the first place.

Server-side rendering (SSR) was created to make them both possible.

Render Javascript With Search Engines in Mind | Prerender https://prerender.io/

Dynamic Rendering | Google Search Central | Google Developers : https://developers.google.com/search/docs/guides/dynamic-rendering

Dynamic Rendering: The Solution for Visitors and Search Engines

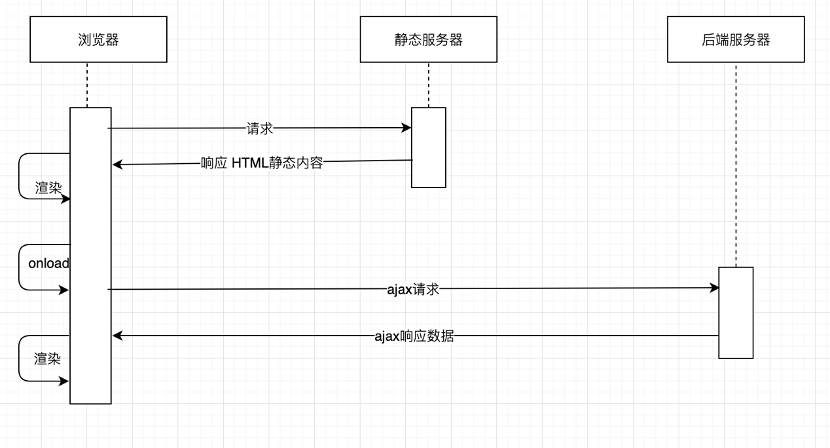

Client-Side Rendering

When a user visits a JavaScript site, their browser downloads several files and executes the code to figure out how the page should look. This is called client-side rendering because it uses the computing power of the client device.

That’s fine for most human users, but search engine crawlers move quickly, learning as much as possible without using up their computing resources. So when they find a JavaScript site, they might read only a few pages and get an incomplete picture of it.

Server-Side Rendering

Crawlers also defer rendering to a second queue, so JavaScript-dependent content can reach the index late, which can hurt rankings.

JavaScript sites can be rendered on the server side instead, but doing so is hard work for the server, can be tricky to set up, and can leave interactive elements slow or broken.

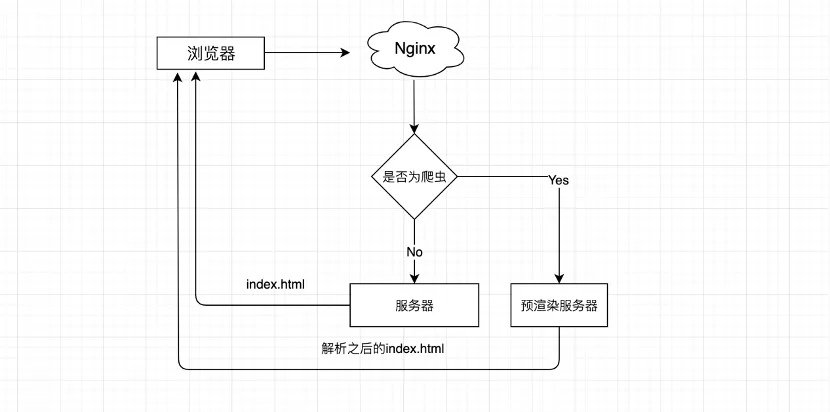

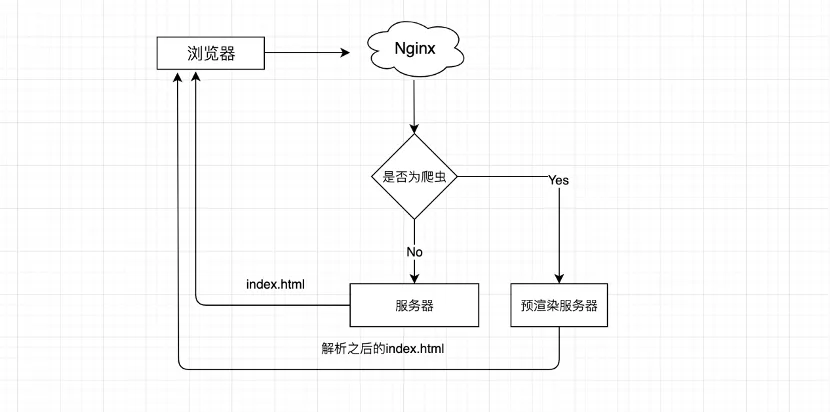

Dynamic Rendering

That’s where dynamic rendering comes in. The server can distinguish between human and robot, giving the human the full experience and the robot a lightweight HTML version.

Search engines accept dynamic rendering and do not treat it as cloaking, provided crawlers and users receive equivalent content. That makes it a practical choice for JavaScript-heavy sites.

Dynamic Rendering: How It Can Lead To SEO Success - Prerender https://prerender.io/how-to-be-successful-with-dynamic-rendering-and-seo/

How to Be Successful With Dynamic Rendering and SEO

JavaScript web pages make SEO, an already tricky field, much more complicated.

SEO is one of the more technical fields within the digital marketing space. It’s like the popular circus act where the juggler spins three plates on poles. Technical SEO is like doing that on a tightrope. JavaScript SEO is lighting the tightrope, the plates, and yourself on fire.

It’s a tricky balancing act. Not only does your website need to be formatted in a way that makes it easy for search engines to process it, but it needs to perform better and load faster than the competition.

However, the nice thing about technical SEO is it’s one of the ranking factors that you have direct control over.

How do you make your JavaScript website easy for Google to read and understand, while giving your visitors a good web experience at the same time?

The answer: Dynamic rendering.

We’ll break down what dynamic rendering is, why it’s important, why it’s beneficial for your website’s SEO health, and how to implement it.

What Happens When Google Visits Your Webpage

Google uses an automated program, known as a bot, to index and catalogue every web page on the Internet.

Google’s stated purpose is to provide the user with the best possible result for a given query. To accomplish this, it seeks to understand what content is on a given web page, and assess its relative importance to other web pages about the same topic.

Most modern web development is done with three main languages: HTML, CSS, and JavaScript.

Google processes HTML in two steps: crawl and index. First, Googlebot crawls the HTML on a page. It reads the text and outgoing links on a page, and parses out the keywords that help it determine what the web page is about. Then, Googlebot indexes the page.

Google, and other search engines, prefer content that’s rendered in static HTML.

With JavaScript, this process is more complicated. Rendering JavaScript comes in three stages:

- Crawl

- Render

- Index

Google has to process JavaScript multiple times in order for it to fully understand the content in it. This process is known as rendering. When Google encounters JavaScript on a web page, it puts it into a queue and comes back to it once it has the resources to render it.

The Problem With JavaScript SEO

HTML is standard in web development. Search engines can process HTML-based content easily. By comparison, JavaScript is more difficult for search engines to process because it is resource-intensive.

What this means is that web pages based in JavaScript eat up your crawl budget. Googlebot can render JavaScript, but rendering takes more of Google's resources than crawling and indexing plain HTML, so it may be delayed or incomplete. Other search engines, such as Bing and DuckDuckGo, have historically had more limited JavaScript support.

Because search engines have to use more resources to render your JavaScript pages, it’s likely many elements of your page won’t get indexed at all. Google and other search engines could skip over your metadata and canonical tags, for example, which are critical for SEO.

The thing is, JavaScript provides a good user experience. It’s the reason why you’re able to make flashy websites that make your users go “Wow, that was so cool!”

How do you make a modern web experience without sacrificing your SEO?

Most developers accomplish this with server-side rendering.

What’s the Difference Between Client-side and Server-side Rendering?

Most JavaScript frameworks such as Angular, Vue, and React default to client-side rendering. They wait to fully load your web page’s content until they can do so within the browser on the user’s end. In other words, they render the content for humans, rather than on the server for search engines to see it.
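To make the difference concrete, here is a minimal sketch of what a crawler that does not execute JavaScript might extract from a page. The two HTML snippets (`CSR_SHELL`, `SSR_PAGE`) and the `visible_text` helper are illustrative assumptions, not output from any real framework or crawler.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    """Return the text a non-rendering crawler could read from raw HTML."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical client-side-rendered shell: the content arrives later via JS.
CSR_SHELL = """<html><head><title>Shop</title></head>
<body><div id="root"></div><script src="/bundle.js"></script></body></html>"""

# Hypothetical server-rendered version of the same page.
SSR_PAGE = """<html><head><title>Shop</title></head>
<body><div id="root"><h1>Blue Widget</h1><p>In stock</p></div>
<script src="/bundle.js"></script></body></html>"""

print(repr(visible_text(CSR_SHELL)))
print(repr(visible_text(SSR_PAGE)))
```

With the client-side shell, only the title is readable; the product text exists only after the bundle runs in a browser.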

Client-side rendering is cheaper than other alternatives. It also reduces the strain on your servers without adding more work for your developers.

However, it carries the chance of a poor user experience. For example, it adds seconds of page load time to your web pages, which can lead to a high bounce rate.

Client-side rendering affects bots as well. Googlebot uses a two-wave indexing system. It crawls and indexes the static HTML first, then crawls the JavaScript content once it has the resources to do so. This means your JavaScript content might be missed in the indexing process.
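The two-wave behaviour can be modelled with a toy indexer. This is only a sketch of the idea described above; the `Page` and `ToyIndexer` names and the "render budget" are assumptions made for illustration and say nothing about how Googlebot is actually implemented.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Page:
    url: str
    static_text: str      # text present in the initial HTML
    js_text: str = ""     # text that only appears after JavaScript runs


@dataclass
class ToyIndexer:
    index: dict = field(default_factory=dict)
    render_queue: deque = field(default_factory=deque)

    def crawl(self, page):
        # Wave 1: index whatever the raw HTML contains right away.
        self.index[page.url] = page.static_text
        if page.js_text:
            # JavaScript content waits in a queue for a later render pass.
            self.render_queue.append(page)

    def render_some(self, budget):
        # Wave 2: render queued pages only while the budget lasts.
        while budget > 0 and self.render_queue:
            page = self.render_queue.popleft()
            self.index[page.url] = (page.static_text + " " + page.js_text).strip()
            budget -= 1


indexer = ToyIndexer()
indexer.crawl(Page("/a", "Shop", "Blue Widget"))
indexer.crawl(Page("/b", "Shop", "Red Widget"))
indexer.render_some(budget=1)  # only one page gets rendered this round
print(indexer.index)
```

After one render pass with a budget of 1, `/a` has its full content in the index while `/b` still shows only the static text, which is the "missed content" risk in miniature.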

That’s bad. You need Google to see that content if you want to rank higher than your competitors and to be found by your customers.

So what’s the alternative? For most development teams, it’s server-side rendering: configuring your JavaScript so that content is rendered on your website’s own server rather than on the client-side browser.

This renders your JavaScript content in advance, making it readable for bots. SSR has performance benefits as well. Both bots and humans get faster experiences, and there’s no risk of partial indexing or missing content.

So, Why Doesn’t Everyone Just Use Server-Side Rendering?

If server-side rendering were easy, then every website would do it and JavaScript SEO wouldn’t be a problem. But, server-side rendering isn’t easy.

SSR is expensive, time-consuming, and difficult to execute. You need a competent web development team to put it in place.

It also tends not to work with third-party JavaScript. Websites that use server-side rendering often require external JavaScript libraries or plugins that are difficult to configure.

This is the case with Angular, which requires the Angular Universal Library to enable server-side rendering. Enabling SSR with Angular requires a lot of moving parts. If just one piece is out of place, it could confuse web crawlers and lead to a drop in your search results.

React, on the other hand, commonly relies on the Next.js framework to enable server-side rendering. That means your development team has to maintain an additional server at an extra cost.

So how do you make frameworks like React SEO friendly to please your customers and search engines? The solution is dynamic rendering.

What is Dynamic Rendering?

Dynamic rendering is the process of serving content based on the user agent requesting it.

Essentially, it’s a hybrid solution that gives the best of both worlds. It provides static HTML for bots, and dynamic JavaScript for users. It gives bots a machine-readable, stripped down, text-and-link-only version of your web page that’s simple for them to scan and parse. It gives your human users the fully-rendered, fully-optimized, intended web experience that gets them to interact with your website longer.

How Do You Implement Dynamic Rendering?

Implementing dynamic rendering is a three-step process.

First, you install a dynamic renderer (let’s say Prerender), to transform your dynamic content into static HTML.

Second, you choose the user-agents you think should receive static content. In most cases, this includes search engine crawlers like Googlebot and Bingbot. There might be others, such as LinkedInbot, you also wish to include.
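Choosing user-agents usually comes down to matching the `User-Agent` request header against a list. A minimal sketch, assuming a hypothetical allow-list (`STATIC_HTML_AGENTS`) and helper name (`wants_static_html`); note that the header can be spoofed, so this only decides which variant to serve and is not a security check.

```python
import re
from typing import Optional

# Hypothetical allow-list; extend it with the crawlers you care about.
STATIC_HTML_AGENTS = re.compile(r"googlebot|bingbot|linkedinbot", re.IGNORECASE)


def wants_static_html(user_agent: Optional[str]) -> bool:
    """Return True when the User-Agent header matches a listed crawler."""
    return bool(user_agent) and STATIC_HTML_AGENTS.search(user_agent) is not None


googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
chrome = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"

print(wants_static_html(googlebot))
print(wants_static_html(chrome))
```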

If your prerendering service slows down your server or increases your HTTP requests, consider implementing a cache to store the rendered content. Next, determine whether your user-agents require desktop or mobile content. You can use dynamic serving to give each one the appropriate version.
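One way to picture the cache is a small time-to-live store in front of the renderer. The sketch below is an assumption about how such a cache could look, not Prerender's own implementation; `PrerenderCache`, the `render` callable and the one-hour default are all hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class PrerenderCache:
    """Keeps rendered HTML per URL and re-renders only after the TTL expires."""

    def __init__(self, render: Callable[[str], str], ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._render = render      # hypothetical function: URL -> static HTML
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so the cache can be tested
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, url: str) -> str:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]        # still fresh: skip the expensive render
        html = self._render(url)
        self._entries[url] = (now, html)
        return html
```

A shorter TTL suits pages that change often (for example, inventory pages); a longer one reduces load on the renderer.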

Finally, configure your servers to deliver static HTML.
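The three steps can be tied together in a small piece of server middleware. This is a sketch using the standard WSGI calling convention; `dynamic_rendering_middleware`, `get_static_html` and `is_bot` are hypothetical names, and a real setup would normally use the middleware shipped by the rendering service instead.

```python
def dynamic_rendering_middleware(app, get_static_html, is_bot):
    """Wrap a WSGI app so that listed crawlers receive prerendered HTML.

    app:             the normal JavaScript application (any WSGI callable)
    get_static_html: hypothetical callable, path -> HTML string or None
    is_bot:          hypothetical callable, User-Agent string -> bool
    """

    def wrapped(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "")
        if environ.get("REQUEST_METHOD", "GET") == "GET" and is_bot(user_agent):
            html = get_static_html(environ.get("PATH_INFO", "/"))
            if html is not None:
                body = html.encode("utf-8")
                start_response("200 OK", [
                    ("Content-Type", "text/html; charset=utf-8"),
                    ("Content-Length", str(len(body))),
                    # Tell caches that the response depends on the requester.
                    ("Vary", "User-Agent"),
                ])
                return [body]
        # Humans, and pages without a static snapshot, get the normal app.
        return app(environ, start_response)

    return wrapped
```

Falling back to the normal app when no snapshot exists keeps crawlers from receiving an error just because a page has not been prerendered yet.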

Verifying Your Configuration

Now you need to make sure that dynamic rendering is working properly. Here are a few things to check:

Mobile-Friendly Test: This is part of Google Search Console’s suite of tools. Google announced a move to mobile-first indexing for all websites, originally planned for September 2020. In other words, Google primarily uses the mobile version of your website for indexing and ranking. Therefore it’s important your website is optimized for a mobile-first experience.

URL Inspection Tool: You need to make sure your website is properly crawled and indexed. The URL Inspection Tool will do just that.

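Fetch as Google: This is what you will use to determine the effectiveness of your dynamic renderer. It shows how Googlebot fetches and renders an individual URL and lets you submit that URL for indexing. In the current Search Console, this functionality is part of the URL Inspection Tool.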

Structured Data Testing Tool: If you’re using schema markup in your website, then you’ll want to use this tool. It ensures your dynamic renderer isn’t interfering with schema markup.
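Before reaching for the online tools, a quick local check can confirm that schema markup survived prerendering. The sketch below assumes the markup is embedded as JSON-LD in `<script type="application/ld+json">` tags; `extract_json_ld` and the sample HTML are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the parsed contents of JSON-LD script blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            # json.loads raises ValueError if the markup was mangled.
            self.items.append(json.loads("".join(self._buffer)))


def extract_json_ld(html):
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.items


# Hypothetical prerendered output for a product page.
prerendered = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Blue Widget"}
</script></head><body><h1>Blue Widget</h1></body></html>"""

print(extract_json_ld(prerendered))
```

An empty list, or a `ValueError`, would suggest the renderer dropped or corrupted the markup.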

When Should You Use Dynamic Rendering?

Dynamic rendering is a practical way to fix your JavaScript SEO problems. One of its biggest benefits is that it reduces crawl-budget issues while remaining cost-effective. And it doesn’t require advanced technical knowledge to implement.

So when should you use dynamic rendering?

Dynamic rendering is a good solution if you have a large website with lots of content that changes frequently (e.g. an e-commerce store with revolving inventory). If that’s the case, then your website requires quick and frequent indexing. Dynamic rendering will make sure that all of your pages get indexed and displayed properly in the SERPs.

It’s also beneficial for websites that rely on social media sharing, such as those with embeddable social media walls or widgets.

Is Dynamic Rendering Cloaking?

Cloaking is the practice of serving markedly different content to search engine bots and humans. This is considered a black hat SEO tactic. While the short-term benefits of cloaking may be tempting, the potential risks are not worth it.

Dynamic rendering is not cloaking, as long as it serves the same end content to both crawlers and human users. It’s only cloaking if you serve completely different content to each.
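A rough parity check can help confirm that both audiences get the same end content. The sketch below compares the words in the bot-facing HTML with the words in the serialized DOM a browser ends up with; the regex-based tag stripping is deliberately crude, and `content_similarity` and the sample snippets are assumptions for illustration only.

```python
import re
from difflib import SequenceMatcher

_SCRIPT_STYLE = re.compile(r"<(script|style)\b.*?</\1\s*>", re.IGNORECASE | re.DOTALL)
_TAG = re.compile(r"<[^>]+>")


def words(html):
    """Crude text extraction: drop scripts/styles, strip tags, split on spaces."""
    text = _TAG.sub(" ", _SCRIPT_STYLE.sub(" ", html))
    return text.lower().split()


def content_similarity(bot_html, user_html):
    """Return a 0..1 ratio of how closely the two word sequences match."""
    return SequenceMatcher(None, words(bot_html), words(user_html)).ratio()


# Hypothetical static snapshot served to crawlers.
bot_html = "<h1>Blue Widget</h1><p>In stock</p>"
# Hypothetical DOM after JavaScript has run in a user's browser.
user_html = ('<div class="fancy"><h1>Blue Widget</h1><p>In stock</p>'
             "<script>track()</script></div>")

print(content_similarity(bot_html, user_html))
```

A ratio near 1 suggests the two versions carry the same content; a low ratio is a signal to investigate before a search engine does.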

Wrapping Up

JavaScript SEO is challenging. But there are things you can do to make it easier, and reduce the burden on your web development team and your budget.

If you want a dynamic renderer that solves all of your JavaScript SEO problems, look no further than Prerender. All you have to do is install our middleware. The rest takes care of itself. Get Google to finally work with you rather than against you.

What Is Server Side Rendering | SSR Pros & Cons | Prerender https://prerender.io/what-is-srr-and-why-do-you-need-to-know/

What is Server-Side Rendering, and Why Do You Need to Know?

The world of web development has changed rapidly.

Over the last fifteen years, web pages have evolved from simple HTML text to multimedia interactive experiences, elevating web development to an art. That’s like a civilization going from stone houses to space exploration in a century.

Two of the most significant advancements in web development during this period have been the adoption of JavaScript frameworks to build web pages, and the field of Search Engine Optimization.

Ironically, JavaScript development and SEO are often at odds with each other. JavaScript makes websites fun and interesting to use, while SEO makes them available for people to find in the first place.

Server-side rendering (SSR) was created to make them both possible.

Read on to learn about what SSR is, why you should care, and how you can use it for yourself.

What is SSR?

Server-side rendering (SSR) is a method of loading your website’s JavaScript on your own server. When human users or search engine web crawlers like Googlebot request a page, the content reads as a static HTML page.
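The core idea, completing the HTML on the server before the response is sent, can be sketched without any JavaScript framework. The template, the `render_product_page` function and the product fields below are hypothetical; a real SSR setup would run the framework's own components on the server.

```python
from html import escape
from string import Template

# The content is already inside #root when the response leaves the server;
# the script tag is still included so the page can become interactive later.
PAGE = Template("""<!doctype html>
<html><head><title>$title</title></head>
<body><div id="root"><h1>$title</h1><p>$description</p></div>
<script src="/bundle.js"></script></body></html>""")


def render_product_page(product):
    """Build the full HTML for a product on the server side."""
    return PAGE.substitute(
        title=escape(product["name"]),
        description=escape(product["description"]),
    )


html = render_product_page({"name": "Blue Widget", "description": "In stock & ready"})
print(html)
```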

Historically, search engines have had difficulty crawling and indexing websites made using JavaScript rather than HTML.

Google indexes JavaScript-based web pages using a two-wave indexing system. When Googlebot first encounters your website, it crawls your pages and extracts all of their HTML, CSS and links, typically within a few hours.

Google then puts the JavaScript content in a queue, rendering it when it has the resources. Sometimes that takes days or weeks. During that time, your web pages are not being indexed and, therefore, not being found on Google. That’s a lot of traffic you’re missing out on.

What’s worse, if your JavaScript pages can’t be crawled and indexed properly, Google reads them as blank screens and ranks them accordingly, which can be catastrophic to your website’s SEO health.

Googlebot is able to crawl and render JavaScript-based web pages, but the results are not always complete or timely. Other search engines such as Bing, Yandex and DuckDuckGo have historically had more limited JavaScript support.

Regardless of the search engine, JavaScript presents a problem because it needs additional processing power to crawl and index, thereby eating up more of your website’s allotted crawl budget.

SSR is designed for this problem. It renders JavaScript on your own servers rather than putting the burden on the user agent, making the content fast and easily accessible when requested.

What is Client-Side Rendering, and How is it Different From Server-Side Rendering?

Client-Side Rendering (CSR) is the increasingly popular alternative to SSR.

The difference between the two is similar to ordering a prepared meal kit from a service like Blue Apron or Green Chef, or buying all the ingredients and making the meal yourself.

Client-side rendering loads a website’s JavaScript in the user’s browser, not the website’s server. It’s ordering the prepared meal kit.

Websites built with front-end JavaScript frameworks such as Angular, React or Vue all default to CSR. This is problematic from an SEO standpoint because when web crawlers encounter a page on your website, all they see is a blank screen.

Server-side rendering, meanwhile, is the more traditional option; it’s buying the groceries and cooking the meal yourself. It loads your JavaScript content on your website’s server.

SSR dates back to the time when Java and PHP were the primary backend technologies, and JavaScript was used simply to make HTML-based websites more interactive rather than to build them from scratch.

SSR converts your HTML files into information that’s readable for the user-end browser. Googlebot can see the basic HTML content on your web page without JavaScript in the way, while the user sees the fully-rendered page in all its glory. Your website is ranked properly on Google, and your user is treated to a web experience that’s a feast for the eyes and ears.

Advantages of Server-Side Rendering

We’ve already discussed some of the SEO benefits of server-side rendering: flawlessly crawled and indexed JavaScript pages, no more wasted crawl budgets or plummeting search rankings, no sluggish two-wave indexing process; just smooth, seamless indexation and the steady stream of Google traffic that comes with it.

SSR has even more advantages than the ones above.

It optimizes web pages for social media, not just search engines. When someone shares your page on Facebook or Twitter, the post includes a preview of the page.

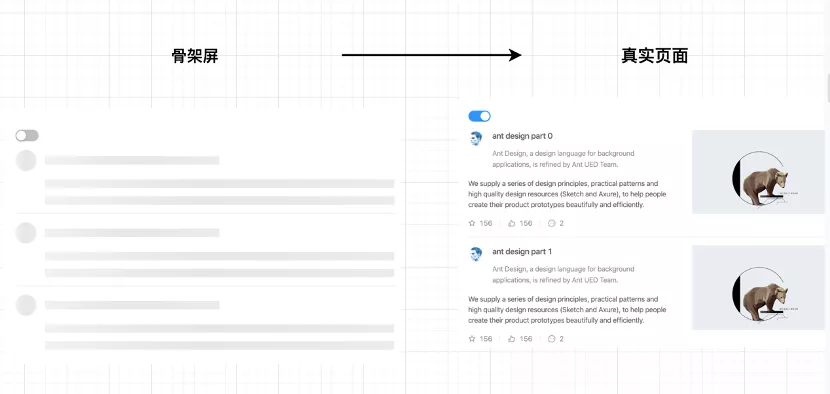
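Link previews are typically built from Open Graph `<meta>` tags in the initial HTML, and social media scrapers generally do not run JavaScript, so those tags need to be present in the server response. A minimal sketch of reading them, assuming a hypothetical `open_graph` helper and sample page:

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collects <meta property="og:..." content="..."> pairs."""

    def __init__(self):
        super().__init__()
        self.properties = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        prop = attr.get("property") or ""
        if prop.startswith("og:") and attr.get("content") is not None:
            self.properties[prop] = attr["content"]


def open_graph(html):
    parser = OpenGraphParser()
    parser.feed(html)
    parser.close()
    return parser.properties


# Hypothetical server-rendered head section.
page = """<html><head>
<meta property="og:title" content="Blue Widget" />
<meta property="og:description" content="A very blue widget.">
<meta property="og:image" content="https://example.com/widget.png">
</head><body></body></html>"""

print(open_graph(page))
```

If the tags are only injected by client-side JavaScript, this function returns an empty dictionary for the raw response, which is roughly what a scraper would see.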

It comes with a number of performance benefits that improve your website’s UX. SSR pages have much faster load time and a much faster first contentful paint, because the content is available in the browser sooner. That means less time your user has to look at a loading screen.

JavaScript is resource-heavy and code-intensive. Downloading it onto a browser using CSR contributes significantly to page weight. A single JavaScript file averages out to about 1MB, whereas web development best practice advises keeping the entire page under 5MB max.

The performance enhancements that come with SSR also have their own SEO benefits. Google gives preferential search rankings to the sites with the fastest page load speed. Faster load times improve user metrics such as session duration and bounce rate; Google algorithms look at these metrics and give you an extra SEO boost.

Faster web pages. Happy search engines. Happy user.

Server-Side Rendering Disadvantages

If SSR is so much more technically well-optimized and SEO-friendly, why don’t all websites use it?

Turns out, using SSR for your website does come with some significant drawbacks. It’s expensive, difficult to implement and requires a lot of manpower to set up.

It also puts the burden of rendering your JavaScript content on your own servers, which will rack up your server maintenance costs.

Websites that use JavaScript frameworks need additional libraries to enable SSR: Angular requires Angular Universal, React typically uses Next.js, and Vue typically uses Nuxt. All of them require additional work from your engineering team, which costs you money.

SSR pages will have a higher TTFB latency and a slower time-to-interactive. Your user will see the content sooner, but if they click on something, nothing will happen. They’ll get frustrated and leave.

SSR is not a fix-all solution. You need to assess your website’s technical needs and challenges before putting it in place.

There’s a Better Solution Still: Prerendering

SSR has a lot of benefits that compensate for the technical deficiencies and deteriorated user experience of CSR. However, it has its own limitations and may not be the best solution for your website.

Prerendering is a great option that combines improved performance and indexation with ease-of-setup and implementation. It’s cost-effective, scalable and even recommended by Google’s own documentation.

To give users and search engines a fast and well-optimized web experience, sign up for Prerender for free today.

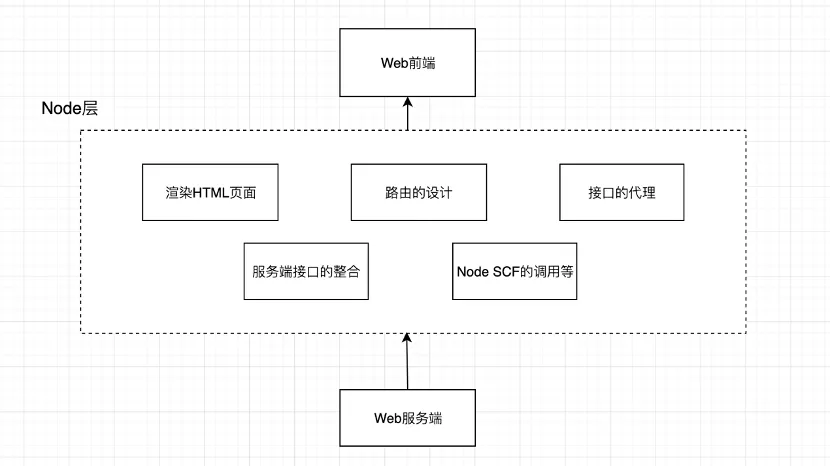

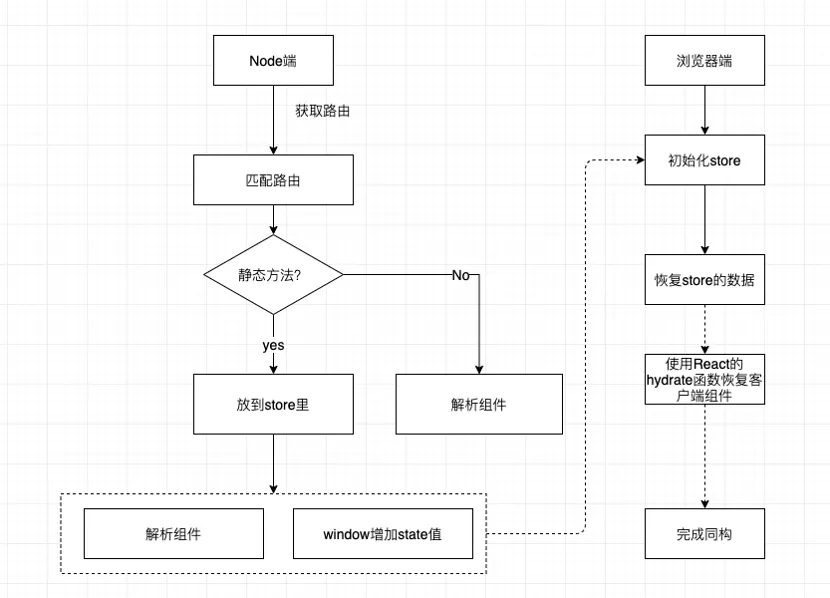

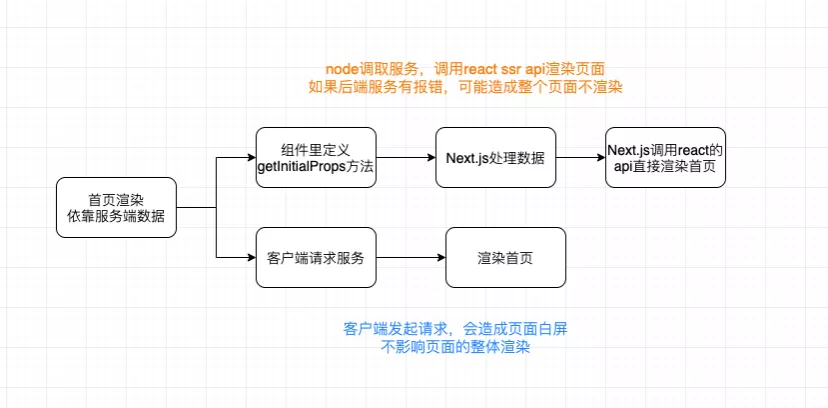

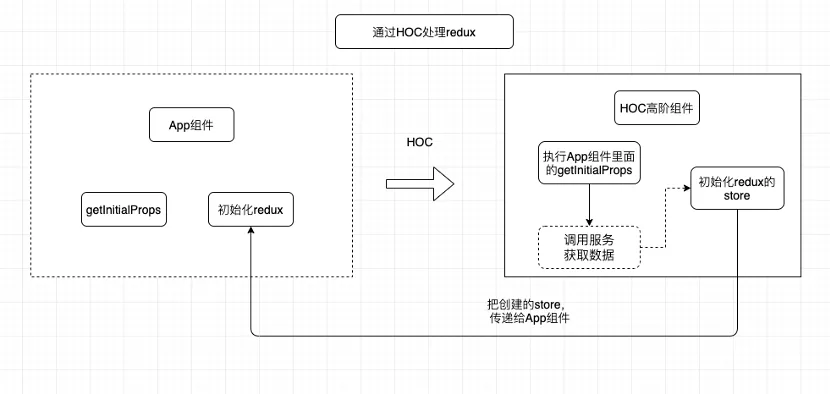

https://mp.weixin.qq.com/s/4SLlA0mJENvpPZQSi8-U2g

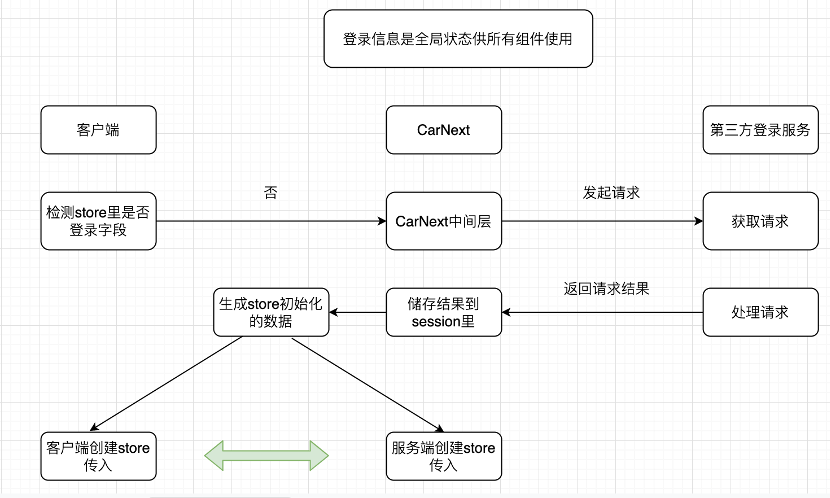

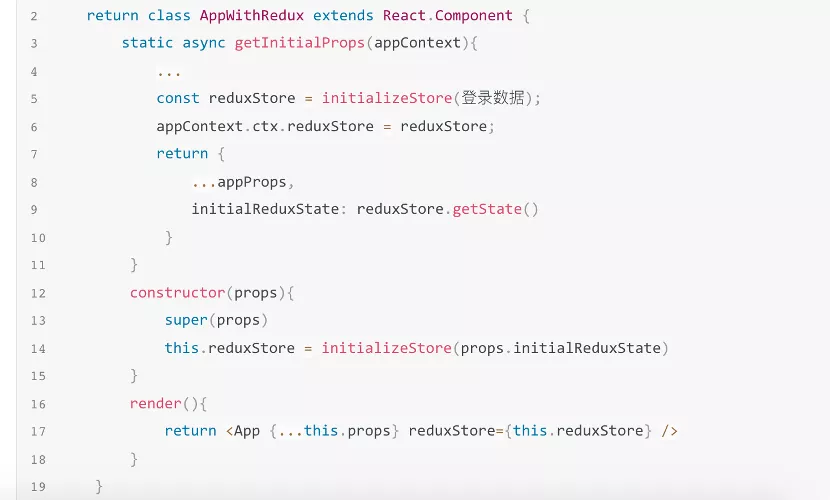

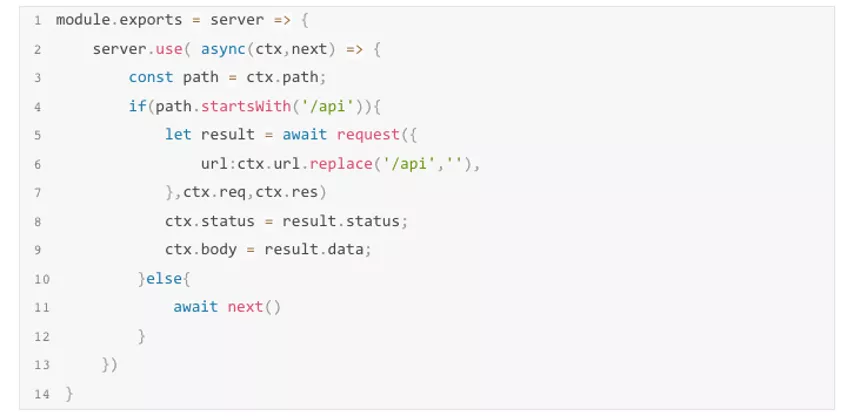

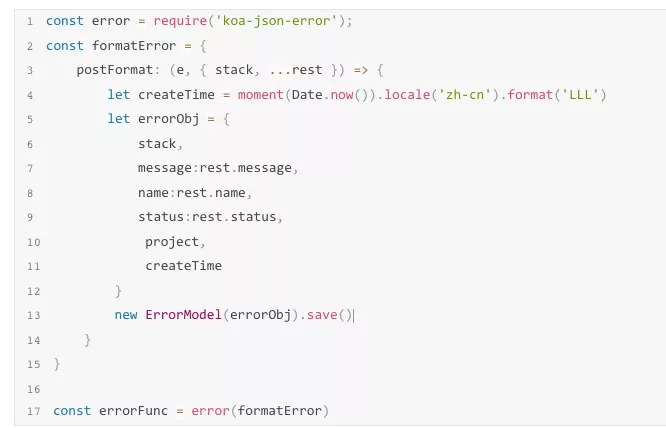

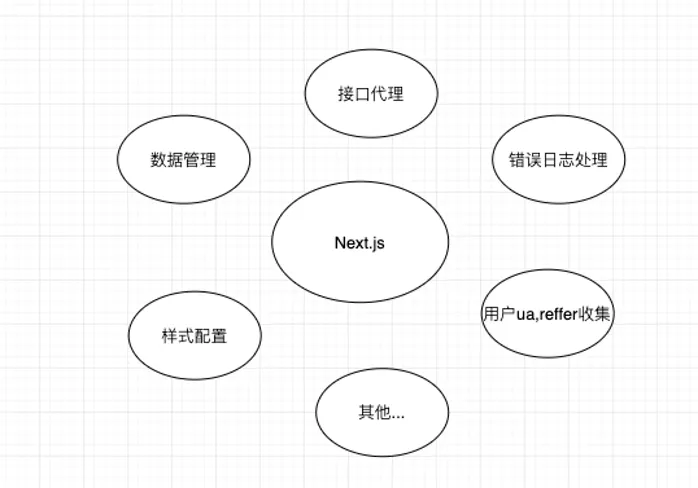

A server-side rendering solution based on Next.js

Zhejiang Public Network Security Record No. 33010602011771