Paper | Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising

Published in IEEE Transactions on Image Processing (TIP), 2017.

Abstract:

Discriminative model learning for image denoising has been recently attracting considerable attentions due to its favorable denoising performance. In this paper, we take one step forward by investigating the construction of feed-forward denoising convolutional neural networks (DnCNNs) to embrace the progress in very deep architecture, learning algorithm, and regularization method into image denoising. Specifically, residual learning and batch normalization are utilized to speed up the training process as well as boost the denoising performance. Different from the existing discriminative denoising models which usually train a specific model for additive white Gaussian noise (AWGN) at a certain noise level, our DnCNN model is able to handle Gaussian denoising with unknown noise level (i.e., blind Gaussian denoising). With the residual learning strategy, DnCNN implicitly removes the latent clean image in the hidden layers. This property motivates us to train a single DnCNN model to tackle with several general image denoising tasks such as Gaussian denoising, single image super-resolution and JPEG image deblocking. Our extensive experiments demonstrate that our DnCNN model can not only exhibit high effectiveness in several general image denoising tasks, but also be efficiently implemented by benefiting from GPU computing.

Conclusion:

In this paper, a deep convolutional neural network was proposed for image denoising, where residual learning is adopted to separating noise from noisy observation. The batch normalization and residual learning are integrated to speed up the training process as well as boost the denoising performance. Unlike traditional discriminative models which train specific models for certain noise levels, our single DnCNN model has the capacity to handle the blind Gaussian denoising with unknown noise level. Moreover, we showed the feasibility to train a single DnCNN model to handle three general image denoising tasks, including Gaussian denoising with unknown noise level, single image super-resolution with multiple upscaling factors, and JPEG image deblocking with different quality factors. Extensive experimental results demonstrated that the proposed method not only produces favorable image denoising performance quantitatively and qualitatively but also has promising run time by GPU implementation.

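Key points:

- DnCNN can handle Gaussian noise of unknown level, i.e., it performs blind Gaussian denoising.
- The authors also train a single DnCNN to handle three tasks at once: Gaussian denoising with unknown noise level, super-resolution at multiple upscaling factors, and JPEG deblocking with unknown quality factor (QF).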

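Strengths:

- As far as I can tell, this is the first work to apply a deep network to Gaussian denoising in a way that achieves blind denoising, simply by mixing noise levels in the training set. (CNNs themselves were first applied to image denoising in [23].)
- It removes the constraint that traditional methods place on the form of the model or image prior; the network learns the prior on its own.
- The authors find experimentally that BN and residual learning help each other.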

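Background

The authors first review prior work on Gaussian denoising, mostly traditional methods such as nonlocal self-similarity (NSS) models and Markov random field (MRF) models. NSS methods, including BM3D and NCSR, are regulars among the state-of-the-art results.

Even so, these methods generally solve an optimization problem at test time, which makes them slow. They also depend on hand-chosen parameters.

This motivates discriminative learning methods. For example, [14] unifies random field models and unrolled half-quadratic optimization in a single learning framework. As another example, the TNRD model of [15][16] learns a modified fields of experts image prior. These methods generally learn only one specific kind of prior, and each trained model works only for one specific noise level. (Note: in effect, the form of the prior term is specified in advance, so the type of prior is fixed.)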

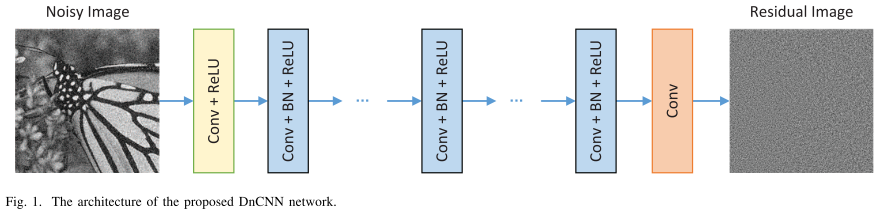

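Network architecture

Overall, the network follows the VGG design, with two modifications:

- All convolution kernels are set to \(3 \times 3\).
- All pooling layers are removed.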

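On page 3 of the paper, the authors explain how they choose the network depth from the effective receptive field; it is worth a read if you are interested. They settle on 17 layers for DnCNN with a known noise level, and on 20 layers for blind denoising and the other tasks.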
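The depth choice comes down to simple arithmetic: with stride-1 \(3 \times 3\) convolutions and no pooling, each layer widens the receptive field by 2 pixels, so a network of depth \(d\) sees \((2d+1) \times (2d+1)\) pixels. A minimal sketch of that calculation (the function name is my own, not from the paper):

```python
def receptive_field(depth: int, kernel_size: int = 3) -> int:
    """Side length of the receptive field of `depth` stacked stride-1
    convolutions with no pooling in between."""
    if depth < 0:
        raise ValueError("depth must be non-negative")
    # Each layer adds (kernel_size - 1) pixels to the side length.
    return 1 + depth * (kernel_size - 1)


for depth in (17, 20):
    side = receptive_field(depth)
    print(f"depth={depth}: receptive field {side}x{side}")
# depth=17: receptive field 35x35
# depth=20: receptive field 41x41
```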

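The authors add several CNN training techniques:

- Residual learning: the CNN models the difference between the noisy image and the latent clean image, i.e., the noise. In other words, the CNN has to strip the clean image out of the noisy input, which requires capturing the essential characteristics of natural, uncorrupted images.
- BN: speeds up and stabilizes training. Experiments show that BN and residual learning benefit each other.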
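Written out, the residual learning formulation looks roughly as follows. This is how I read the paper's notation: \(y\) is the noisy input, \(x\) the clean image, \(v\) the noise, \(\mathcal{R}\) the network with parameters \(\Theta\), and \(N\) the number of training pairs.

\[
y = x + v, \qquad \mathcal{R}(y;\Theta) \approx v, \qquad \hat{x} = y - \mathcal{R}(y;\Theta)
\]

\[
\ell(\Theta) = \frac{1}{2N} \sum_{i=1}^{N} \bigl\| \mathcal{R}(y_i;\Theta) - (y_i - x_i) \bigr\|_F^2
\]

The training target is the residual \(y_i - x_i\) rather than the clean image \(x_i\); the denoised estimate \(\hat{x}\) is obtained by subtracting the predicted residual from the input.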

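A few things to note:

- The first and last layers do not use BN.
- The last layer has no ReLU activation.
- Every convolution layer outputs 64 channels, except the last, which outputs a single-channel grayscale image. Ideally, this output is the noise image.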
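The layer layout described by these notes can be written down as a plain list of layer specifications. This is only an illustrative sketch, not the authors' code: `LayerSpec`, `dncnn_layer_specs`, and `conv_weight_count` are names I made up, and the weight count ignores biases and BN parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerSpec:
    in_channels: int
    out_channels: int
    batch_norm: bool
    relu: bool
    kernel_size: int = 3


def dncnn_layer_specs(depth=17, features=64, image_channels=1):
    """Conv+ReLU first, Conv+BN+ReLU in the middle, plain Conv last."""
    if depth < 2:
        raise ValueError("depth must be at least 2")
    specs = [LayerSpec(image_channels, features, batch_norm=False, relu=True)]
    specs += [
        LayerSpec(features, features, batch_norm=True, relu=True)
        for _ in range(depth - 2)
    ]
    # The last layer maps back to the image channels: no BN, no ReLU.
    specs.append(LayerSpec(features, image_channels, batch_norm=False, relu=False))
    return specs


def conv_weight_count(specs):
    """Number of convolution weights (biases and BN parameters excluded)."""
    return sum(s.kernel_size ** 2 * s.in_channels * s.out_channels for s in specs)


specs = dncnn_layer_specs()
print(len(specs), "layers,", conv_weight_count(specs), "conv weights")
```

Calling `dncnn_layer_specs(depth=20)` gives the deeper layout mentioned for blind denoising and the other tasks.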

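One last point worth mentioning: earlier methods need special handling at the image border (for example, symmetric padding) so that the output keeps the input size, and this can produce visible boundary artifacts. DnCNN simply pads with zeros before each convolution, and the authors find experimentally that zero padding does not produce boundary artifacts.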
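To make the zero-padding idea concrete, here is a small pure-Python sketch of a size-preserving convolution. It only shows that the output keeps the input size when out-of-image pixels are treated as zeros; it says nothing about artifacts, which is an empirical finding of the paper. The function name is my own, and an odd kernel size is assumed.

```python
def conv2d_zero_pad(image, kernel):
    """Cross-correlate a 2D list `image` with an odd-sized 2D `kernel`,
    treating pixels outside the image as zeros (output size == input size)."""
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    rr, cc = r + i - ph, c + j - pw
                    # Positions outside the image contribute zero.
                    if 0 <= rr < h and 0 <= cc < w:
                        acc += kernel[i][j] * image[rr][cc]
            out[r][c] = acc
    return out


image = [
    [1.0, 2.0, 3.0, 4.0],
    [5.0, 6.0, 7.0, 8.0],
    [9.0, 10.0, 11.0, 12.0],
]
box = [[1.0] * 3 for _ in range(3)]
summed = conv2d_zero_pad(image, box)
print(len(summed), len(summed[0]))  # 3 4, same size as the input
```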

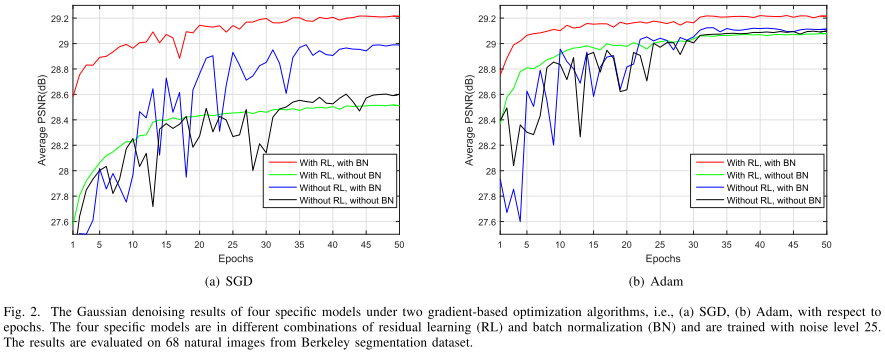

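BN and residual learning

The authors study the combined effect of BN and residual learning experimentally:

As the figure shows, with either SGD or Adam as the optimizer, combining RL and BN clearly improves performance. The authors explain it this way: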

Actually, one can notice that in Gaussian denoising the residual image and batch normalization are both associated with the Gaussian distribution. It is very likely that residual learning and batch normalization can benefit from each other for Gaussian denoising. This point can be further validated by the following analyses.

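Extension to other tasks

Blind denoising: the authors mix noisy images with noise standard deviations ranging from 0 to 55 in the training set and train a single DnCNN model on it.

JPEG deblocking: same idea, with images compressed at different quality factors mixed in the training set.

Super-resolution: upsample by interpolation first, then run the same pipeline.

I skip the experiments. Seen from today, this paper is fairly simple.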
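A minimal sketch of how such mixed-noise training pairs could be generated. Several things here are my assumptions rather than details from the paper: the noise level is drawn uniformly per patch, pixel values are on a 0 to 255 scale, noisy values are not clipped, and `make_blind_pair` is a made-up name.

```python
import random


def make_blind_pair(clean, rng, sigma_max=55.0):
    """Return (noisy, residual, sigma) for one clean patch.

    `clean` is a 2D list of floats. The residual (the noise itself)
    is what a residual-learning network would be trained to predict.
    """
    sigma = rng.uniform(0.0, sigma_max)  # assumed: uniform over [0, sigma_max]
    residual = [[rng.gauss(0.0, sigma) for _ in row] for row in clean]
    noisy = [
        [p + n for p, n in zip(clean_row, noise_row)]
        for clean_row, noise_row in zip(clean, residual)
    ]
    return noisy, residual, sigma


rng = random.Random(0)
clean = [[128.0] * 4 for _ in range(4)]
batch = [make_blind_pair(clean, rng) for _ in range(8)]
for noisy, residual, sigma in batch:
    print(f"sigma={sigma:.1f}")
```

Because every patch gets its own noise level, a single model sees the whole range during training and does not need the noise level as an input at test time.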
