HBase-TDG Introduction

Before we start looking into all the moving parts of HBase, let us pause to think about why there was a need to come up with yet another storage architecture. Relational database management systems (RDBMS) have been around since the early 1970s, and have helped countless companies and organizations to implement their solution to given problems. And they are equally helpful today. There are many use-cases for which the relational model makes perfect sense. Yet there also seem to be specific problems that do not fit this model very well.

The Dawn of Big Data

The whole story begins with the big data movement.

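Google and Amazon were pioneers of this movement. Hadoop, built on Google's published designs, is the open-source solution for big data. However, Hadoop does not support random access to data, only batch access.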

For random access, RDBMSs are of course the mainstream solution today. There are also alternatives, such as object-oriented databases, column-oriented databases, or massively parallel processing (MPP) databases.

Column-Oriented Databases

Column-oriented databases save their data grouped by columns.

Subsequent column values are stored contiguously on disk. This differs from the usual row-oriented approach of traditional databases, which store entire rows contiguously.

The reason to store values on a per-column basis instead is the assumption that specific queries do not need all of the columns.

Especially in analytical databases this is often the case and therefore they are good candidates for this different storage schema.

Reduced IO is one of the primary reasons for this new layout, but it offers additional advantages in the same category. The values of one column are often very similar in nature, or even vary only slightly between logical rows. They are therefore often much better suited for compression than the heterogeneous values of a row-oriented record structure, because most compression algorithms only look at a finite window. Specialized algorithms, for example delta and/or prefix compression, selected based on the type of the column (i.e., on the data stored), can yield huge improvements in compression ratios. Better ratios, in turn, result in more efficient bandwidth usage.

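Storing data by column has two benefits. First, it saves IO, because most queries only touch some of the columns, whereas a traditional row-oriented store has to read entire rows. Second, it suits compression: values in the same column tend to be similar, so the compression ratio is higher.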
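As a rough illustration of both benefits, here is a minimal Python sketch that assumes a toy in-memory table (the column names and values are invented for illustration). It lays the same records out row-wise and column-wise, reads one column from each layout, and applies simple delta encoding, one of the specialized techniques mentioned above, to a slowly increasing ID column. Real columnar engines operate on disk blocks, not Python lists.

```python
# Toy records: (user_id, country, login_count). Values are invented for illustration.
rows = [
    (1001, "DE", 5),
    (1002, "DE", 7),
    (1003, "US", 6),
    (1004, "DE", 8),
]
COLUMNS = ("user_id", "country", "login_count")

# Row-oriented layout: whole records are stored one after another.
row_layout = [value for record in rows for value in record]

# Column-oriented layout: all values of one column are stored contiguously.
column_layout = {name: [record[i] for record in rows] for i, name in enumerate(COLUMNS)}

def sum_column_row_store(layout, col_index, width):
    """The column's values are interleaved with the other fields (read with a stride);
    on disk this means touching every record."""
    return sum(layout[i] for i in range(col_index, len(layout), width))

def sum_column_col_store(layout, name):
    """Only the one contiguous column is read."""
    return sum(layout[name])

def delta_encode(values):
    """Keep the first value, then only the differences between neighbours."""
    return values[:1] + [b - a for a, b in zip(values, values[1:])]

def delta_decode(deltas):
    """Rebuild the original values by accumulating the differences."""
    out = []
    for d in deltas:
        out.append(d if not out else out[-1] + d)
    return out

print(sum_column_row_store(row_layout, 2, len(COLUMNS)))  # 26
print(sum_column_col_store(column_layout, "login_count"))  # 26
print(delta_encode(column_layout["user_id"]))  # [1001, 1, 1, 1]
```

The delta-encoded column consists mostly of small, repeated numbers, which is the kind of input a general-purpose compressor typically handles well.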

The Problem with Relational Database Systems

RDBMSs have typically played (and, for the foreseeable future at least, will play) an integral role when designing and implementing business applications.

However, RDBMSs scale poorly when faced with big data.

When a single RDBMS server has to serve more and more users, the typical steps are as follows:

The first step to ease the pressure is to add slave database servers that can be read from in parallel.

A common next step is to add a cache, for example Memcached.

While this may help you with the amount of reads, you have not yet addressed the writes.

Eventually you still have to scale up vertically and tune the database.

But this, too, has its limits.

Where do you go from here? You could start sharding (see Sharding) your data across many databases, but this turns into an operational nightmare, is very costly, and still does not give you a truly fitting solution.

So as data volumes keep growing, an RDBMS cannot keep up, because scaling it is very cumbersome: the more data you have, the higher the cost of scaling.

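This is not to say that big data cannot be handled with an RDBMS. Sharding and similar techniques can cope with it. The problem is that each time the data grows by another order of magnitude, the cost of scaling becomes too high, so an RDBMS is not a good fit.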
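To see why (re)partitioning is costly, here is a minimal sketch that assumes naive application-level sharding by `hash(key) % N`. This is a common but simplistic scheme chosen for illustration, not something the text prescribes. The sketch counts how many keys would have to move when a fifth shard is added to four. MD5 is used only as a stable hash, not for security.

```python
import hashlib

def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard via a stable hash modulo the shard count."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def moved_keys(keys, old_shards: int, new_shards: int) -> int:
    """Count keys whose shard changes when the number of shards changes."""
    return sum(1 for k in keys if shard_for(k, old_shards) != shard_for(k, new_shards))

keys = [f"user{i}" for i in range(10_000)]
moved = moved_keys(keys, 4, 5)
print(f"{moved} of {len(keys)} keys move ({moved / len(keys):.0%})")
```

Under this scheme a key stays put only if `h % 4 == h % 5`, which holds for 4 of every 20 hash values. Roughly four out of five keys therefore have to be copied to another database. Schemes such as consistent hashing exist to reduce this movement.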

Non-relational Database Systems, Not-only SQL or NoSQL?

We saw the advent of the so-called NoSQL solutions, a term coined by Eric Evans in response to a question from Johan Oskarsson, who was trying to find a name for an event in the then newly emerging data storage space.

Even projects like memcached are lumped into the NoSQL category, as if anything that is not an RDBMS is automatically NoSQL. This creates a kind of false dichotomy that obscures the exciting technical possibilities these systems have to offer. And there are many; within the NoSQL category, there are numerous dimensions you could use to classify where the strong points of a particular system lie.

Dimensions

Let us take a look at a handful of those dimensions here. Note that this is not a comprehensive list, or the only way to classify them.

Data model

There are many variations of how the data is stored, which include key/value stores (compare to a HashMap), semi-structured, column-oriented stores, and document-oriented stores. How is your application accessing the data? Can the schema evolve over time?

Storage model

In-memory or persistent? This is fairly easy to decide on since we are comparing with RDBMSs, which usually persist their data to permanent storage, such as physical disks. But you may explicitly need a purely in-memory solution, and there are choices for that too. As far as persistent storage is concerned, does this affect your access pattern in any way?

Consistency model

Strictly or eventually consistent? The question is, how does the storage system achieve its goals: does it have to weaken the consistency guarantees? While this seems like a cursory question, it can make all the difference in certain use-cases. It may especially affect latency, i.e., how fast the system can respond to read and write requests. This is often measured in harvest and yield.

Physical model

Distributed or single machine? What does the architecture look like - is it built from distributed machines or does it only run on single machines with the distribution handled client-side, i.e., in your own code? Maybe the distribution is only an afterthought and could cause problems once you need to scale the system.

And if it does offer scalability, does it imply specific steps to do so? The easiest would be to add one machine at a time, while sharded setups (especially those not supporting virtual shards) sometimes require each shard to be scaled up simultaneously, because each partition needs to be equally powerful.

Read/write performance

You have to understand what your application's access patterns look like. Are you designing something that is written to a few times but read much more often? Or are you expecting an equal load between reads and writes? Or are you taking in a lot of writes and just a few reads? Does the system support range scans, or is it better suited to random reads? Some of the available systems are advantageous for only one of these operations, while others may do well in all of them.

Secondary indexes

Secondary indexes allow you to sort and access tables based on different fields and sorting orders. The options here range from systems that have absolutely no secondary indexes and no guaranteed sorting order (like a HashMap, i.e., you need to know the keys) to some that weakly support them, all the way to those that offer them out-of-the-box. Can your application cope with, or emulate, a missing secondary index?
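If a store only offers lookups by primary key, one way to emulate a secondary index is to maintain a second mapping from the indexed value back to the primary keys, updated on every write. Below is a minimal sketch that assumes plain in-memory dictionaries stand in for the store; the class and method names are hypothetical.

```python
from collections import defaultdict

class IndexedStore:
    """Toy key/value store that maintains a secondary index on one field."""

    def __init__(self, indexed_field: str):
        self.indexed_field = indexed_field
        self.primary = {}               # primary key -> record (dict)
        self.index = defaultdict(set)   # indexed value -> set of primary keys

    def put(self, key, record):
        old = self.primary.get(key)
        if old is not None:
            # Remove the stale index entry before overwriting the record.
            self.index[old[self.indexed_field]].discard(key)
        self.primary[key] = record
        self.index[record[self.indexed_field]].add(key)

    def get(self, key):
        return self.primary.get(key)

    def find_by(self, value):
        """Secondary lookup: sorted primary keys whose indexed field equals value."""
        return sorted(self.index.get(value, ()))

store = IndexedStore("city")
store.put("u1", {"name": "Ann", "city": "Berlin"})
store.put("u2", {"name": "Bob", "city": "Paris"})
store.put("u3", {"name": "Cid", "city": "Berlin"})
store.put("u2", {"name": "Bob", "city": "Berlin"})  # Bob moves
print(store.find_by("Berlin"))  # ['u1', 'u2', 'u3']
print(store.find_by("Paris"))   # []
```

In a distributed store, the record write and the index update would usually land on different rows. Without multi-row transactions, the two writes are therefore not atomic, which is one reason emulating an index takes more care than using a built-in one.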

Failure handling

It is a fact that machines crash, and you need to have a mitigation plan in place that addresses machine failures (also refer to the discussion of the CAP theorem in Consistency Models). How does each data store handle server failures? Is it able to continue operating? This is related to the "Consistency model" dimension above, as losing a machine may cause holes in your data store, or even worse, make it completely unavailable. And if you are replacing the server, how easy will it be to get back to 100% operational? Another scenario is decommissioning a server in a clustered setup, which would most likely be handled the same way.

Compression

When you have to store terabytes of data, especially of the kind that consists of prose or human readable text, it is advantageous to be able to compress the data to gain substantial savings in required raw storage. Some compression algorithms can achieve a 10:1 reduction in storage space needed. Is the compression method pluggable? What types are available?
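Pluggable compression can be pictured as a registry of codecs chosen per table or column family. The minimal sketch below uses Python's standard `zlib`, `bz2`, and `lzma` modules as stand-ins for whatever codecs a real store ships. The registry and the sample text are invented for illustration, and the measured ratios depend entirely on the data.

```python
import bz2
import lzma
import zlib

# Hypothetical codec registry: name -> (compress, decompress).
CODECS = {
    "none": (lambda b: b, lambda b: b),
    "zlib": (zlib.compress, zlib.decompress),
    "bz2": (bz2.compress, bz2.decompress),
    "lzma": (lzma.compress, lzma.decompress),
}

def store_block(data: bytes, codec: str) -> bytes:
    """Compress a block with the configured codec before writing it."""
    compress, _ = CODECS[codec]
    return compress(data)

def load_block(blob: bytes, codec: str) -> bytes:
    """Decompress a block with the same codec it was written with."""
    _, decompress = CODECS[codec]
    return decompress(blob)

# Repetitive, human-readable text compresses well.
text = ("GET /index.html 200 user=alice\n" * 500).encode("utf-8")
for name in CODECS:
    blob = store_block(text, name)
    if load_block(blob, name) != text:
        raise ValueError(f"round trip failed for {name}")
    print(f"{name:5s} ratio {len(text) / len(blob):.1f}:1")
```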

Load balancing

Given that you have a high read or write rate, you may want to invest in a storage system that transparently balances itself while the load shifts over time. It may not be the full answer to your problems, but it may help you ease into a high-throughput application design.
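A self-balancing store periodically moves units of data (in HBase, regions) from busy servers to idle ones. Below is a minimal sketch of one greedy strategy. It assumes the only goal is to even out region counts per server; real balancers may weigh more factors. The function name is hypothetical.

```python
def balance(assignment: dict[str, list[str]]) -> list[tuple[str, str, str]]:
    """Move regions from the most to the least loaded server until counts differ by at most one.

    assignment maps server name -> list of region names and is modified in place.
    Returns the moves as (region, from_server, to_server).
    """
    moves = []
    while True:
        busiest = max(assignment, key=lambda s: len(assignment[s]))
        idlest = min(assignment, key=lambda s: len(assignment[s]))
        if len(assignment[busiest]) - len(assignment[idlest]) <= 1:
            return moves
        region = assignment[busiest].pop()
        assignment[idlest].append(region)
        moves.append((region, busiest, idlest))

servers = {"rs1": ["r1", "r2", "r3", "r4", "r5"], "rs2": ["r6"], "rs3": []}
for move in balance(servers):
    print(move)
print({s: len(r) for s, r in servers.items()})  # {'rs1': 2, 'rs2': 2, 'rs3': 2}
```

Each move shrinks the gap between the busiest and the idlest server, so the loop always terminates. Running it again on an already balanced assignment returns no moves.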

Atomic Read-Modify-Write

While RDBMSs offer you a lot of these operations directly (because you are talking to a central, single server), they can be more difficult to achieve in distributed systems. These operations allow you to prevent race conditions in multithreaded or shared-nothing application server designs. Having these compare-and-swap (CAS) or check-and-set operations available can reduce client-side complexity.
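A check-and-set operation only applies a write if the current value still matches what the client last read. The minimal single-process sketch below assumes a lock-protected dictionary stands in for the server; the class and function names are hypothetical and are not a real database client API. It shows how clients can build a race-free counter on top of CAS with a retry loop.

```python
import threading

class CasStore:
    """Toy server-side store offering an atomic check-and-set."""

    def __init__(self):
        self._data = {}
        self._lock = threading.Lock()

    def get(self, key):
        with self._lock:
            return self._data.get(key)

    def check_and_set(self, key, expected, new_value) -> bool:
        """Write new_value only if the current value equals expected."""
        with self._lock:
            if self._data.get(key) != expected:
                return False
            self._data[key] = new_value
            return True

def increment(store: CasStore, key: str) -> None:
    """Client-side read-modify-write that retries until its CAS succeeds."""
    while True:
        current = store.get(key)
        updated = (current or 0) + 1
        if store.check_and_set(key, current, updated):
            return

store = CasStore()

def worker():
    for _ in range(1000):
        increment(store, "hits")

threads = [threading.Thread(target=worker) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(store.get("hits"))  # 8000
```

Without the CAS check, two clients could read the same value and both write `value + 1`, losing an increment. With it, the loser of the race simply re-reads and retries.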

Locking, waits and deadlocks

It is a known fact that complex transactional processing, like 2-phase commits, can increase the possibility of multiple clients waiting for a resource to become available. In a worst-case scenario, this can lead to deadlocks, which are hard to resolve. What kind of locking model does the system you are looking at support? Can it be free of waits and therefore deadlocks?

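Faced with so many NoSQL systems, the question of which one is best cannot be answered. Just as NoSQL cannot replace SQL, the only question is whether a system is a good fit.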

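With this many dimensions, no single NoSQL system can handle all of them well. Choosing a NoSQL system therefore depends on your scenario and your application: which features do you need? Pick the NoSQL system that best matches those features.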

Scalability

While the performance of RDBMSs is well suited for transactional processing, it is less so for very large-scale analytical processing. This is referring to very large queries that scan wide ranges of records or entire tables. Analytical databases may contain hundreds or thousands of terabytes, causing queries to exceed what can be done on a single server in a reasonable amount of time. Scaling that server vertically, i.e., adding more cores or disks, is simply not good enough.

What is even worse is that with RDBMSs, waits and deadlocks increase non-linearly with the size of the transactions and with concurrency, i.e., with the square of concurrency and the third or even fifth power of the transaction size. [18] Sharding is often not a practical solution, as it has to be done within the application layer and may involve complex and costly (re)partitioning procedures.
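Read literally, the scaling claim above can be written as a proportionality. In this worked example, $W$ (rate of waits and deadlocks), $N$ (concurrency) and $T$ (transaction size) are symbols introduced here for illustration. The exponent $k$ covers the third to fifth power quoted in the text:

$$
W \propto N^{2}\,T^{k}, \qquad 3 \le k \le 5
$$

$$
\frac{W(2N,\,T)}{W(N,\,T)} = 2^{2} = 4, \qquad
\frac{W(N,\,2T)}{W(N,\,T)} = 2^{k}, \quad 2^{3} = 8 \le 2^{k} \le 2^{5} = 32
$$

Under this model, doubling both concurrency and transaction size multiplies the waits by a factor of $4 \cdot 8 = 32$ up to $4 \cdot 32 = 128$.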

There are commercial RDBMSs that solve many of these issues, but they are often specialized and only cover certain aspects. Above all they are very, very expensive. Looking at open-source alternatives in the RDBMS space, you will likely have to give up many or all relational features, such as secondary indexes, to gain some level of performance.

The question is, wouldn't it be good to trade relational features permanently for performance? You could denormalize (see below) the data model and avoid waits and deadlocks by minimizing necessary locking. How about built-in horizontal scalability without the need to repartition as your data grows? Finally, throw in fault tolerance and data availability, using the same mechanisms that allow scalability, and what you get is a NoSQL solution - more specifically, one that matches what HBase has to offer.

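This is again about scalability. RDBMSs are excellent, but with big data and distributed deployments, keeping all relational features, such as transactions, becomes very difficult. Some commercial RDBMSs solve some of these problems, but at a very high price.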

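So you might ask: do you really need all the relational features, and are they worth it? Some people chose to give up some of these features to meet ever-growing performance requirements, and that is NoSQL.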

NoSQL can therefore be understood as a compromise, a deliberate trade-off, which is why it cannot completely replace SQL.

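Each NoSQL system makes different choices and trade-offs along the many dimensions listed above, so a particular NoSQL solution should only be chosen for the specific scenarios it fits.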

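Moreover, choosing NoSQL presupposes that your scenario really justifies giving up some relational features in exchange for higher performance. An application such as bank withdrawals, for example, cannot compromise at all on consistency or transactions, and is therefore not a good fit for NoSQL.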

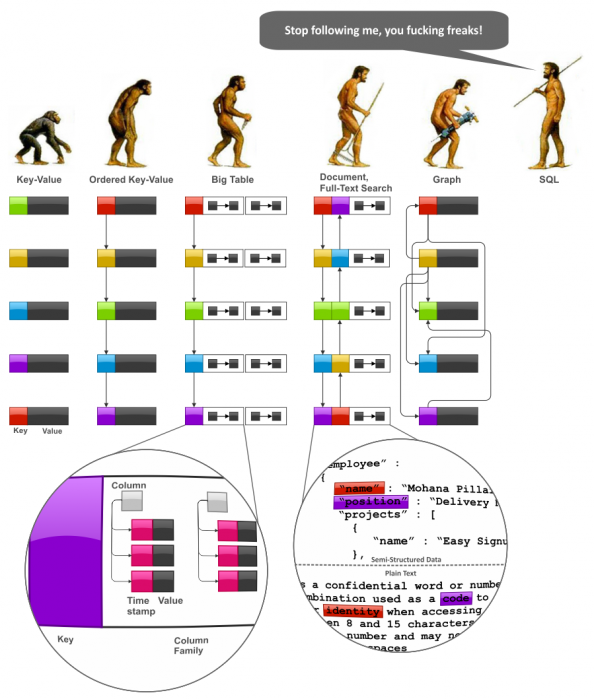

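This figure illustrates the evolution of NoSQL data models well. Key-value and SQL are the two extremes, representing scalability and rich functionality, respectively.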

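Key-value has the simplest data model but very limited functionality. It is only suitable for simple data or caches, and there are no relationships at all between data items.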

Next, ordered key-value stores appeared to support features such as range queries.

Then, to support somewhat more complex data structures, a value model was added that allows key-value pairs to be nested inside the value; in Bigtable this is the column family.

Going further, document and graph models greatly increase the complexity of NoSQL systems, which look more and more like SQL.

MongoDB supports most SQL features, which is why SQL might shout 'Stop following me'.

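So choosing a database solution is actually simple: it comes down to how much functionality you are willing to give up. In my view, NoSQL is hardly a revolution; it is merely a compromise with reality.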

Database (De-)Normalization

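Anyone who has studied RDBMSs knows that database design follows several normal forms (normalization). In theory, a design should satisfy them to prevent data redundancy and similar problems.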

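But anyone who actually uses MySQL or another RDBMS also knows that reality is not that ideal. As the data volume grows, operations such as joins become very inefficient, and the usual remedy is what is described here as denormalization.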

Duplicating data avoids joins: space is traded for time.

NoSQL uses the same approach to handle the complex one-to-many and many-to-many relationships found in SQL schemas.

Overall, denormalization requires weighing the following:

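- Query data volume / query IO vs. total data volume. With denormalization, all the data needed by a query can be combined and stored in one place. This means that the same data needed by other, different queries has to be stored in other places as well. This produces a lot of redundant data and thus increases the total data volume.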

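- Processing complexity vs. total data volume. Join queries on a normalized schema clearly increase query processing complexity, especially in distributed systems. A denormalized data model lets us structure the data in a way that suits the queries, which simplifies them.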

So denormalization is a trade-off between these competing factors.
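Here is a minimal sketch of the trade-off, assuming a toy blog schema held in Python dictionaries (all names and data are invented). The normalized form stores each user once and needs a join at read time. The denormalized form copies the author's name into every post, so a read touches only one record, but the duplicated copies must be updated in several places.

```python
# Normalized: users and posts are separate "tables"; posts refer to users by id.
users = {1: {"name": "Ann"}, 2: {"name": "Bob"}}
posts = [
    {"post_id": 10, "user_id": 1, "title": "Hello"},
    {"post_id": 11, "user_id": 1, "title": "HBase notes"},
    {"post_id": 12, "user_id": 2, "title": "Bigtable"},
]

def posts_with_author_normalized():
    """Read path needs a join: look up the user for every post."""
    return [(p["title"], users[p["user_id"]]["name"]) for p in posts]

# Denormalized: the author's name is duplicated into each post record.
posts_denorm = [dict(p, author=users[p["user_id"]]["name"]) for p in posts]

def posts_with_author_denormalized():
    """Read path touches only the post records; no join required."""
    return [(p["title"], p["author"]) for p in posts_denorm]

def rename_user(user_id, new_name):
    """Write path: every duplicated copy must be updated as well."""
    users[user_id]["name"] = new_name
    for p in posts_denorm:
        if p["user_id"] == user_id:
            p["author"] = new_name

assert posts_with_author_normalized() == posts_with_author_denormalized()
rename_user(1, "Anna")
print(posts_with_author_denormalized())
# [('Hello', 'Anna'), ('HBase notes', 'Anna'), ('Bigtable', 'Bob')]
```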

Building Blocks

This section provides you with a first overview of the architecture behind HBase. After giving you some background information on its lineage, you will be introduced to the general concepts of the data model, the storage API available, and finally be presented with a high level view on implementation details.

Backdrop

In 2003, Google published a paper called The Google File System. It uses a cluster of commodity hardware to store huge amounts of data on a distributed file system.

Shortly afterwards, another paper by Google was published, titled MapReduce: Simplified Data Processing on Large Clusters. It was the missing piece to the GFS architecture as it made use of the vast number of CPUs each commodity server in the GFS cluster provides. MapReduce plus GFS forms the backbone for processing massive amounts of data, including the entire search index Google owns.

What is missing though is the ability to access data randomly and close to real-time (meaning good enough to drive a web service, for example). Another drawback of the GFS design is that it is good with a few very, very large files, but not as good with millions of tiny files, because the data retained in memory by the master node is ultimately bound to the number of files. The more files, the higher the pressure on the memory of the master.

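The GFS+MapReduce approach has two problems. It does not support real-time random access to data, and it cannot cope with millions of tiny files; it is better suited to a moderate number of large files.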

So Google was trying to find a solution that could drive interactive applications, such as Mail or Analytics, while making use of the same infrastructure and relying on GFS for replication and data availability. The data stored should be composed of much smaller entities and the system would transparently take care of aggregating the small records into very large storage files and offer some sort of indexing that allows the user to retrieve data with a minimal amount of disk seeks. Finally, it should be able to store the entire web crawl and work with MapReduce to be able to build the entire search index in a timely manner.

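So Google needed another solution to serve interactive applications. The solution had to transparently aggregate large numbers of small records into very large storage files, and provide an index so that users could access data randomly.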

Being aware of the shortcomings of RDBMSs at scale (see the section called “Seek vs. Transfer” for a discussion of one fundamental issue), the engineers approached this problem differently: forfeit relational features and use a simple API that has basic create, read, update, delete (or CRUD) operations, plus a scan function to iterate over larger key ranges or entire tables. The culmination of these efforts was published in 2006 in the Bigtable: A Distributed Storage System for Structured Data paper.

Tables, Rows, Columns, Cells

First a quick summary: the most basic unit is a column. One or more columns form a row that is addressed uniquely by a row key. A number of rows, in turn, form a table, and there can be many of them. Each column may have multiple versions, with each distinct value contained in a separate cell. This sounds like a reasonable description for a typical database, but with the extra dimension of allowing multiple versions of each cell.

All rows are always sorted lexicographically by their row key.

Having the row keys always sorted can give you something like a primary key index known from RDBMSs. It is also always unique, i.e., you can have each row key only once, or you are updating the same row. While the original Bigtable paper only considers a single index, HBase adds support for secondary indexes (see the section called “Secondary Indexes”). The row keys can be any arbitrary array of bytes and are not necessarily human-readable.

HBase also supports secondary indexes.
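Row keys are arbitrary byte arrays compared lexicographically, so numbers encoded as text do not sort numerically. The minimal Python sketch below assumes ASCII row keys of the form `row-N` (invented for illustration). It shows the effect and two common workarounds: zero-padding the numeric part, or using fixed-width binary encoding.

```python
# Row keys are compared byte by byte, just like sorting Python bytes objects.
keys = [b"row-1", b"row-10", b"row-2", b"row-20", b"row-3"]
print(sorted(keys))
# [b'row-1', b'row-10', b'row-2', b'row-20', b'row-3']

# Zero-padding the numeric part makes lexicographic order match numeric order.
padded = [b"row-%05d" % n for n in (1, 10, 2, 20, 3)]
print(sorted(padded))
# [b'row-00001', b'row-00002', b'row-00003', b'row-00010', b'row-00020']

# Fixed-width big-endian integers also sort correctly as raw bytes
# (for non-negative values).
binary = [n.to_bytes(4, "big") for n in (1, 10, 2, 20, 3)]
print([int.from_bytes(b, "big") for b in sorted(binary)])  # [1, 2, 3, 10, 20]
```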

Rows are composed of columns, and those are in turn grouped into column families. This helps build semantic or topical boundaries between the data. It also allows certain features to be applied to them, for example compression, or denoting them to stay in-memory.

All columns in a column family are stored together in the same low-level storage files, called HFile.

Column families need to be defined when the table is created and should not be changed too often, nor should there be too many.

The name of the column family must consist of printable characters, a notable difference from all other names or values.

Columns are often referenced as family:qualifier with the qualifier being any arbitrary array of bytes. As opposed to the limit on column families there is no such thing for the number of columns: you could have millions of columns in a particular column family. There is also no type nor length boundary on the column values.

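The benefits of column orientation were described above. This goes a step further by adding the concept of the column family, mainly for ease of management: when there are many columns, they need to be managed in groups.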

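Based on this assumption, column families should neither change often nor be too numerous, since they are meant to be fairly abstract and general, whereas there is no limit on the number of columns.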

All rows and columns are defined in the context of a table, adding a few more concepts across all included column families, and which we will discuss below.

Every column value, or cell, is either timestamped implicitly by the system or can be set explicitly by the user. This can be used, for example, to save multiple versions of a value as it changes over time. Different versions of a cell are stored in decreasing timestamp order, allowing you to read the newest value first. This is an optimization aimed at read-patterns that favor more current values over historical ones.

The user can specify how many versions of a value should be kept. In addition, there is support for predicate deletions (see the section called “Log-Structured Merge-Trees” for the concepts behind them) allowing you to keep, for example, only values written in the last week. The values (or cells) are also just uninterpreted arrays of bytes, that the client needs to know how to handle.

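This design can be explained from several angles. First, it reduces CRUD operations to just create and read, because an update simply writes a new version. This greatly simplifies concurrent database operations and makes fault tolerance easier, since the older versions are still there.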

Second, it greatly improves write speed, which is one of Bigtable's strengths, and the write logic is quite simple.

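Third, it fits the GFS model. GFS itself is optimized for appends: updating a block of data in place is inefficient, and the result cannot be guaranteed to be defined (consistent).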

If you recall from the quote earlier, the Bigtable model, as implemented by HBase, is a sparse, distributed, persistent, multidimensional map, which is indexed by row key, column key, and a timestamp. Putting this together, we can express the access to data like so:

(Table, RowKey, Family, Column, Timestamp) → Value

Note that the family, column, and timestamp are stored along with each value.
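The map above can be modelled with nested dictionaries. The minimal in-memory sketch below assumes a single table, a per-table `max_versions` setting, and integer timestamps supplied by the caller. All class and method names are hypothetical and are not HBase's API. Versions are kept in decreasing timestamp order so the newest value is read first, and the oldest versions beyond the limit are dropped.

```python
import bisect
from collections import defaultdict

class VersionedTable:
    """Toy (row, family, qualifier, timestamp) -> value map for a single table."""

    def __init__(self, families, max_versions=3):
        self.families = set(families)      # column families are fixed when the table is created
        self.max_versions = max_versions
        # row key -> (family, qualifier) -> list of (-timestamp, value), newest first
        self.rows = defaultdict(lambda: defaultdict(list))

    def put(self, row, family, qualifier, value, ts):
        if family not in self.families:
            raise KeyError(f"unknown column family {family!r}")
        cell = self.rows[row][(family, qualifier)]
        cell[:] = [v for v in cell if v[0] != -ts]  # same timestamp: overwrite
        bisect.insort(cell, (-ts, value))           # negated ts keeps newest first
        del cell[self.max_versions:]                # drop versions beyond the limit

    def get(self, row, family, qualifier, ts=None):
        """Return the newest value, or the newest one written at or before ts."""
        for neg_ts, value in self.rows.get(row, {}).get((family, qualifier), []):
            if ts is None or -neg_ts <= ts:
                return value
        return None

t = VersionedTable(["info"], max_versions=2)
t.put(b"row1", "info", b"email", b"a@old.example", ts=1)
t.put(b"row1", "info", b"email", b"a@mid.example", ts=2)
t.put(b"row1", "info", b"email", b"a@new.example", ts=3)
print(t.get(b"row1", "info", b"email"))        # b'a@new.example'
print(t.get(b"row1", "info", b"email", ts=2))  # b'a@mid.example'
print(t.get(b"row1", "info", b"email", ts=1))  # None: the oldest version was dropped
```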

Access to row data is atomic and includes any number of columns being read or written to. There is no further guarantee or transactional feature that spans multiple rows or across tables. The atomic access is also a contributing factor to this architecture being strictly consistent, as each concurrent reader and writer can make safe assumptions about the state of a row.

HBase guarantees atomicity for single-row operations.

Auto Sharding

The basic unit of scalability and load balancing in HBase is called a region. These are essentially contiguous ranges of rows stored together. They are dynamically split by the system when they become too large. Alternatively, they may also be merged to reduce their number and required storage files.

Initially there is only one region for a table, and as you start adding data to it, the system is monitoring to ensure that you do not exceed a configured maximum size. If you exceed the limit, the region is split into two equal halves. Each region is served by exactly one region server, and each of these servers can serve many regions at any time.

Splitting and serving regions can be thought of as auto sharding, as offered by other systems.

Splitting is also very fast - close to instantaneous - because the split regions simply read from the original storage files until a compaction rewrites them into separate ones asynchronously.

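Automatic region splitting can be seen as a form of auto sharding. The data is only physically separated during compaction; until then the split is virtual, with both new regions reading from the original storage files.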
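The region bookkeeping can be sketched in a few lines. This minimal sketch assumes regions are identified by their start keys, uses a tiny `max_rows` threshold in place of a size-based limit, and splits at the middle row key. All names are hypothetical. Finding the region for a row key is a binary search over the sorted start keys.

```python
import bisect

class RegionMap:
    """Toy range partitioning: each region holds a contiguous, sorted key range."""

    def __init__(self, max_rows=4):
        self.max_rows = max_rows
        self.start_keys = [b""]     # initially one region covers all keys
        self.regions = {b"": []}    # start key -> sorted list of row keys

    def region_for(self, row_key: bytes) -> bytes:
        """Start key of the region whose range contains row_key."""
        i = bisect.bisect_right(self.start_keys, row_key) - 1
        return self.start_keys[i]

    def put(self, row_key: bytes) -> None:
        start = self.region_for(row_key)
        rows = self.regions[start]
        i = bisect.bisect_left(rows, row_key)
        if i == len(rows) or rows[i] != row_key:
            rows.insert(i, row_key)
        if len(rows) > self.max_rows:
            self._split(start)

    def _split(self, start: bytes) -> None:
        """Split a region into two halves at its middle row key."""
        rows = self.regions[start]
        mid = len(rows) // 2
        split_key = rows[mid]       # becomes the new region's start key
        self.regions[start] = rows[:mid]
        self.regions[split_key] = rows[mid:]
        bisect.insort(self.start_keys, split_key)

rm = RegionMap(max_rows=4)
for k in [b"a", b"c", b"e", b"g", b"i", b"k"]:
    rm.put(k)
print(rm.start_keys)        # [b'', b'e']
print(rm.region_for(b"f"))  # b'e'
```

Unlike HBase, this sketch copies the rows into the new region immediately. As described above, HBase defers the physical rewrite to a later compaction, which is what makes its splits nearly instantaneous.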

Storage API

"Bigtable does not support a full relational data model; instead, it provides clients with a simple data model that supports dynamic control over data layout and format [...]"

- The API offers operations to create and delete tables and column families. In addition, it has functions to change the table and column family metadata, such as compression or block sizes.

- A scan API allows you to efficiently iterate over ranges of rows and to limit which columns are returned or how many versions of each cell.

- The system has support for single-row transactions, and with it implements atomic read-modify-write sequences on data stored under a single row key.

- Cell values can be interpreted as counters and updated atomically. These counters can be read and modified in one operation, so that despite the distributed nature of the architecture, clients can use this mechanism to implement global, strictly consistent, sequential counters.

- There is also the option to run client-supplied code in the address space of the server. The server-side framework to support this is called Coprocessors. The code has access to the server local data and can be used to implement light-weight batch jobs, or use expressions to analyze or summarize data based on a variety of operators.

- Finally, the system is integrated with the MapReduce framework by supplying wrappers that convert tables into input source and output targets for MapReduce jobs.
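The scan bullet can be sketched over a sorted list of rows. This minimal example assumes HBase-style semantics, where the start row is inclusive and the stop row exclusive, plus an optional set of columns to return. The function name and data layout are hypothetical, not the real client API.

```python
import bisect

# Sorted rows: (row_key, {column: value}). Data is invented for illustration.
table = [
    (b"user-001", {b"info:name": b"Ann", b"info:city": b"Berlin"}),
    (b"user-002", {b"info:name": b"Bob", b"info:city": b"Paris"}),
    (b"user-003", {b"info:name": b"Cid", b"info:city": b"Rome"}),
    (b"user-004", {b"info:name": b"Dee", b"info:city": b"Oslo"}),
]
row_keys = [key for key, _ in table]

def scan(start_row=b"", stop_row=None, columns=None):
    """Yield (row_key, cells) for start_row <= key < stop_row, optionally projecting columns."""
    i = bisect.bisect_left(row_keys, start_row)  # jump straight to the start row
    for key, cells in table[i:]:
        if stop_row is not None and key >= stop_row:
            break                                # rows are sorted, so we can stop early
        if columns is not None:
            cells = {c: v for c, v in cells.items() if c in columns}
        yield key, cells

for key, cells in scan(b"user-002", b"user-004", columns={b"info:name"}):
    print(key, cells)
# b'user-002' {b'info:name': b'Bob'}
# b'user-003' {b'info:name': b'Cid'}
```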

Differences in naming

As the following comparison shows, HBase's naming is somewhat obscure.

| HBase | Bigtable |
|---|---|
| Region | Tablet |
| RegionServer | Tablet Server |
| Flush | Minor Compaction |
| Minor Compaction | Merging Compaction |
| Major Compaction | Major Compaction |
| Write-Ahead Log | Commit Log |
| HDFS | GFS |
| Hadoop MapReduce | MapReduce |
| MemStore | memtable |
| HFile | SSTable |
| ZooKeeper | Chubby |

Installation

Deployment

Script Based

A script-based approach may seem archaic compared to the more advanced approaches listed below. But scripts serve their purpose and do a good job for small and even medium-sized clusters.

Apache Whirr (an abstraction layer over the APIs of various clouds)

There is a recent increase of users wanting to run their cluster in dynamic environments, such as the public cloud offerings by Amazon's EC2, or Rackspace Cloud Servers, but also in private server farms, using open-source tools like Eucalyptus.

Since it is not trivial to program against each of the APIs providing dynamic cluster infrastructures, it would be useful to abstract the provisioning part and, once the cluster is operational, simply launch the MapReduce jobs the same way you would on a local, static cluster. This is where Apache Whirr comes in.

Whirr (available at http://incubator.apache.org/whirr/) has support for a variety of public and private cloud APIs and allows you to provision clusters running a range of services. One of those is HBase, giving you the ability to quickly deploy a fully operational HBase cluster on dynamic setups.

Puppet and Chef

Similar to Whirr, there are other deployment frameworks for dedicated machines. Puppet by Puppet Labs and Chef by Opscode are two such offerings.

Both work similarly: a central provisioning server stores all the configuration, and client software executed on each server communicates with the central server to receive updates and apply them locally.

The configuration is stored on a central server; the other servers periodically fetch new configuration from it and apply the updates.
