Some notes on MongoDB

https://www.percona.com/doc/percona-server-for-mongodb/LATEST/changed_in_34.html

The MongoRocks storage engine is now based on RocksDB 4.13.5.

RocksDB is Facebook's storage engine.

Its MySQL version is called MyRocks.

Its MongoDB version is called MongoRocks.

Version used in this article: MongoDB 3.0.7, with the MMAPv1 storage engine (the default in 3.0).

MongoDB documentation: https://docs.mongodb.com/manual/reference/method/rs.remove/

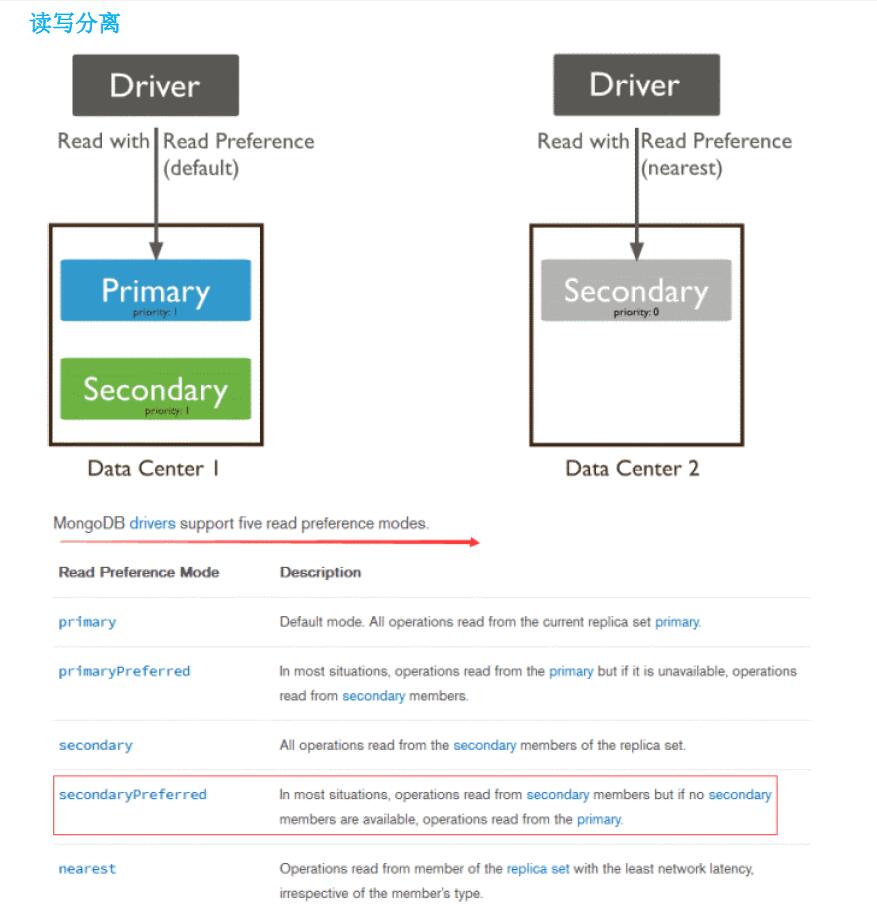

Every database on the primary except local is replicated to the secondaries, including users and authentication data.

Documentation for Percona Server for MongoDB:

https://www.percona.com/doc/percona-server-for-mongodb/LATEST/install/yum.html

https://www.percona.com/doc/percona-server-for-mongodb/LATEST/install/tarball.html

https://www.percona.com/doc/percona-server-for-mongodb/LATEST/index.html

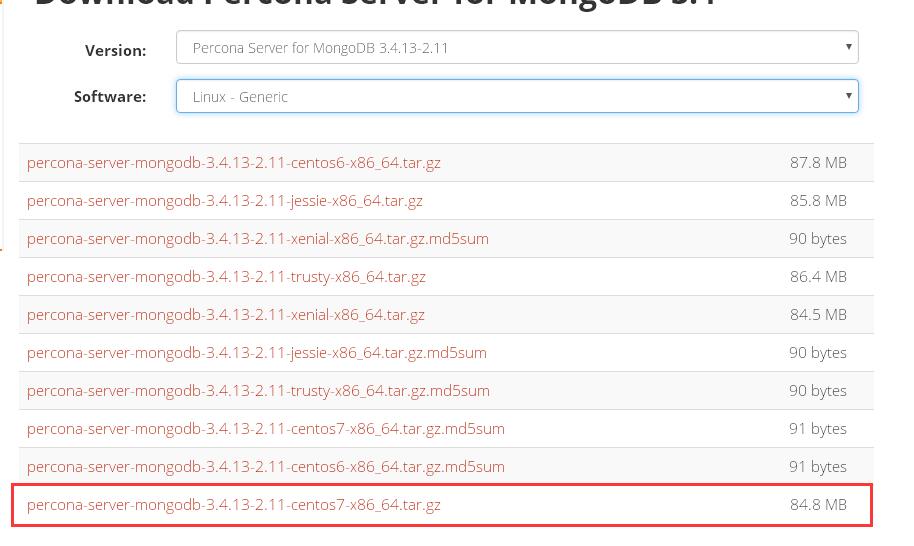

https://www.percona.com/downloads/percona-server-mongodb-3.4/

Windows clients for MongoDB:

Robo 3T, formerly Robomongo (free)

https://robomongo.org/

MongoChef (commercial)

mongochef-x64.msi, a MongoDB management tool

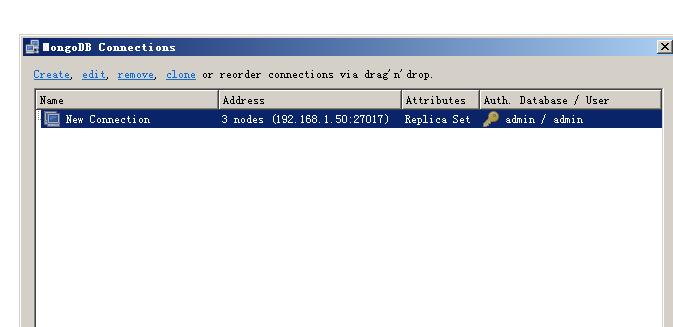

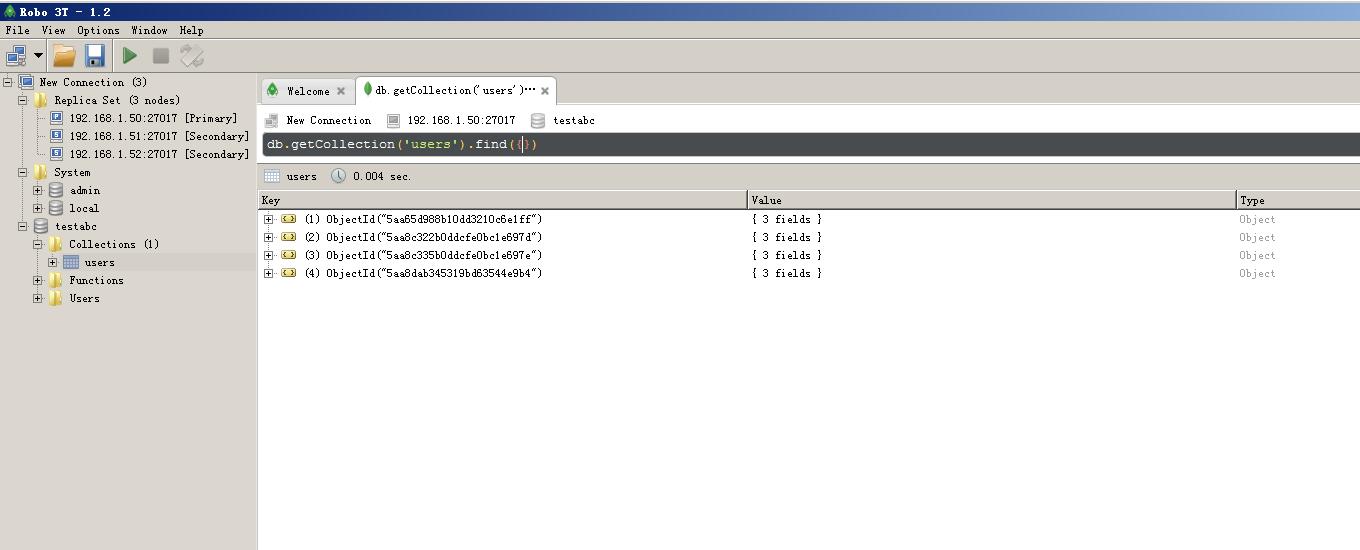

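It can connect to both replica sets and sharded clusters, and it does not crash.

It requires MongoDB to be installed on Windows, because it calls mongo.exe.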

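Built-in roles and privileges:

https://docs.mongodb.com/manual/reference/built-in-roles/

Database User Roles
- read: read-only access to data
- readWrite: read and write access to data

Database Administration Roles
- dbAdmin: perform administrative tasks in the current database
- dbOwner: perform any action in the current database
- userAdmin: manage users in the current database

Cluster Administration Roles
- clusterAdmin: the highest level of cluster-management access
- clusterManager: manage and monitor the cluster
- clusterMonitor: read-only access for monitoring tools
- hostManager: manage servers

Backup and Restoration Roles
- backup
- restore

All-Database Roles
- readAnyDatabase: read data in all databases
- readWriteAnyDatabase: read and write data in all databases
- userAdminAnyDatabase: manage users in all databases; grants no direct access to data
- dbAdminAnyDatabase: administer all databases (administrative privileges only)

Internal Roles
- __system: must not be assigned to users

Superuser Roles
- root: all privileges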
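The role list can be used to check a db.createUser() call before running it. The following is a minimal sketch in JavaScript (Node.js). The helper buildCreateUserDoc, the category groupings and the example account "appuser"/"changeme" are hypothetical illustrations, not a MongoDB API. The sketch checks two rules. First, the cluster, backup/restore, all-database and root roles exist only on the admin database. Second, __system must never be granted.

```javascript
// Role names from the built-in role list above (MongoDB 3.x).
// __system is intentionally absent: it must never be granted to a user.
const BUILT_IN_ROLES = {
  databaseUser: ["read", "readWrite"],
  databaseAdministration: ["dbAdmin", "dbOwner", "userAdmin"],
  clusterAdministration: ["clusterAdmin", "clusterManager", "clusterMonitor", "hostManager"],
  backupAndRestoration: ["backup", "restore"],
  allDatabase: ["readAnyDatabase", "readWriteAnyDatabase", "userAdminAnyDatabase", "dbAdminAnyDatabase"],
  superuser: ["root"],
};

const ASSIGNABLE_ROLES = new Set(Object.values(BUILT_IN_ROLES).flat());

// These roles are defined only on the admin database.
const ADMIN_ONLY_ROLES = new Set([
  ...BUILT_IN_ROLES.clusterAdministration,
  ...BUILT_IN_ROLES.backupAndRestoration,
  ...BUILT_IN_ROLES.allDatabase,
  ...BUILT_IN_ROLES.superuser,
]);

// Hypothetical helper: validate the roles, then return the document that
// db.createUser() expects ({ user, pwd, roles: [{ role, db }] }).
function buildCreateUserDoc(user, pwd, roles) {
  for (const { role, db } of roles) {
    if (!ASSIGNABLE_ROLES.has(role)) {
      throw new Error(`unknown or non-assignable role: ${role}`);
    }
    if (ADMIN_ONLY_ROLES.has(role) && db !== "admin") {
      throw new Error(`${role} exists only on the admin database, not on ${db}`);
    }
  }
  return { user, pwd, roles };
}

// Example (hypothetical account): an application user limited to database aaaa.
const appUser = buildCreateUserDoc("appuser", "changeme", [{ role: "readWrite", db: "aaaa" }]);
console.log(JSON.stringify(appUser));
```

Pass the returned document to db.createUser() in the mongo shell. Run the call in the database that should act as the user's authentication database.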

User access control

All users on an instance are stored in the system.users collection of the admin database.

Without the required privileges, commands are rejected:

hard0:RECOVERING> show dbs;

2015-12-15T16:35:10.504+0800 E QUERY Error: listDatabases failed:{ "ok" : 0, "errmsg" : "not authorized on admin to execute command { listDatabases: 1.0 }", "code" : 13 } at Error (<anonymous>) at Mongo.getDBs (src/mongo/shell/mongo.js:47:15) at shellHelper.show (src/mongo/shell/utils.js:630:33) at shellHelper (src/mongo/shell/utils.js:524:36) at (shellhelp2):1:1 at src/mongo/shell/mongo.js:47

rsshard0:RECOVERING> show dbs

2015-12-15T16:35:13.263+0800 E QUERY Error: listDatabases failed:{ "ok" : 0, "errmsg" : "not authorized on admin to execute command { listDatabases: 1.0 }", "code" : 13 } at Error (<anonymous>) at Mongo.getDBs (src/mongo/shell/mongo.js:47:15) at shellHelper.show (src/mongo/shell/utils.js:630:33) at shellHelper (src/mongo/shell/utils.js:524:36) at (shellhelp2):1:1 at src/mongo/shell/mongo.js:47

rsshard0:RECOVERING> exit

Edit the configuration file:

vi /data/replset0/config/rs0.conf

port=27017

dbpath=/data/mongodb/mongodb27017/data

logpath=/data/mongodb/mongodb27017/logs/mongo.log

pidfilepath=/data/mongodb/mongodb27017/logs/mongo.pid

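#profile = 1 #enable the profiler to analyze slow queries (1 = record only operations slower than slowms, 2 = record all operations)

slowms = 1000 #threshold in milliseconds above which an operation counts as slow; the default is 100 ms

fork=true #run in the background: mongod forks a child process that keeps running as a daemon

logappend=true #log write mode: true appends to the existing log file; by default mongod renames the old log file and starts a new one on restart

oplogSize=2048 #oplog size in MB; once mongod has created the oplog, changing oplogSize no longer affects its size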

directoryperdb=true #store each database in its own directory

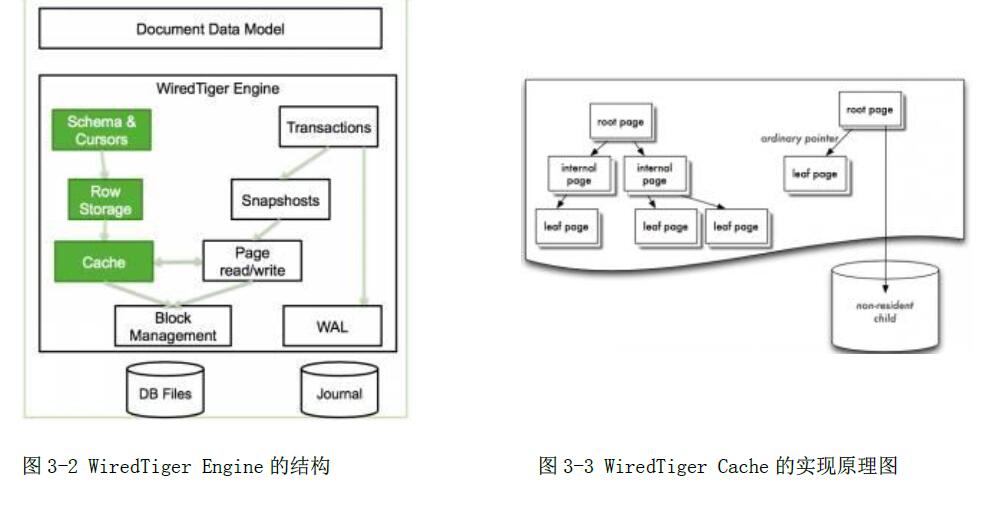

storageEngine=wiredTiger

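wiredTigerCacheSizeGB=4 #size of the WiredTiger cache, comparable to the InnoDB buffer pool

syncdelay=30 #interval in seconds at which mongod flushes data to the data files with fsync; the default is 60

wiredTigerCollectionBlockCompressor=snappy #block compression for collection data; snappy is a compression library developed by Google

journal=true #enable journaling (comparable to a redo log)

#replSet=ck1 #replica set name; all members of the same replica set must use the same name

#auth = true #require clients to authenticate

#shardsvr=true #run this mongod as a shard server in a sharded cluster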
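The options above use the legacy key=value format. Since MongoDB 2.6, the same settings can also be written as a YAML configuration file. mongod itself reports them in that nested form, as the options: { ... } line in its startup log shows. The following is a sketch of the translation in JavaScript (Node.js). The helper parseLegacyConf and its mapping table are hypothetical: they cover only the keys used in this file, and they assume "#" never appears inside a value.

```javascript
// Hypothetical mapping from the legacy option names used in this file to their
// YAML equivalents. Each entry is [dotted YAML path, optional value converter].
const LEGACY_TO_YAML = {
  port: ["net.port"],
  dbpath: ["storage.dbPath"],
  logpath: ["systemLog.path"],
  pidfilepath: ["processManagement.pidFilePath"],
  profile: ["operationProfiling.mode", (v) => ["off", "slowOp", "all"][v]],
  slowms: ["operationProfiling.slowOpThresholdMs"],
  fork: ["processManagement.fork"],
  logappend: ["systemLog.logAppend"],
  oplogSize: ["replication.oplogSizeMB"],
  directoryperdb: ["storage.directoryPerDB"],
  storageEngine: ["storage.engine"],
  wiredTigerCacheSizeGB: ["storage.wiredTiger.engineConfig.cacheSizeGB"],
  syncdelay: ["storage.syncPeriodSecs"],
  wiredTigerCollectionBlockCompressor: ["storage.wiredTiger.collectionConfig.blockCompressor"],
  journal: ["storage.journal.enabled"],
  replSet: ["replication.replSetName"],
  auth: ["security.authorization", (v) => (v ? "enabled" : "disabled")],
  shardsvr: ["sharding.clusterRole", (v) => (v ? "shardsvr" : undefined)],
};

// "true"/"false" become booleans, numeric strings become numbers.
function parseValue(raw) {
  if (raw === "true") return true;
  if (raw === "false") return false;
  if (/^-?\d+(\.\d+)?$/.test(raw)) return Number(raw);
  return raw;
}

// Create intermediate objects as needed and set obj.a.b.c = value.
function setPath(obj, dotted, value) {
  const parts = dotted.split(".");
  let node = obj;
  for (const part of parts.slice(0, -1)) {
    node = node[part] = node[part] || {};
  }
  node[parts[parts.length - 1]] = value;
}

// Everything after '#' is treated as a comment (assumption: no '#' in values).
function parseLegacyConf(text) {
  const result = {};
  for (const rawLine of text.split("\n")) {
    const line = rawLine.split("#")[0].trim();
    if (line === "") continue; // blank or fully commented-out line
    const eq = line.indexOf("=");
    if (eq < 0) throw new Error(`not a key=value line: ${rawLine}`);
    const key = line.slice(0, eq).trim();
    const mapping = LEGACY_TO_YAML[key];
    if (!mapping) throw new Error(`no YAML mapping for option: ${key}`);
    const [path, convert] = mapping;
    const value = parseValue(line.slice(eq + 1).trim());
    const converted = convert ? convert(value) : value;
    if (converted !== undefined) setPath(result, path, converted);
    if (key === "logpath") setPath(result, "systemLog.destination", "file");
  }
  return result;
}

console.log(JSON.stringify(parseLegacyConf("port=27017\nfork=true\nslowms = 1000 #slow threshold"), null, 2));
```

Commented-out options such as #replSet=ck1 are skipped entirely, just as mongod ignores them.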

Add a user with the root role:

rsshard0:PRIMARY> use admin

rsshard0:PRIMARY> db.createUser({user:"lyhabc",pwd:"123456",roles:[{role:"root",db:"admin"}]})

Successfully added user: { "user" : "lyhabc", "roles" : [ { "role" : "root", "db" : "admin" } ] }

Start mongod with the configuration file, then log in as the new user:

/usr/local/mongodb/bin/mongod --config /data/replset0/config/rs0.conf

mongo --port 4000 -u lyhabc -p 123456 --authenticationDatabase admin

The log then shows an authentication failure for lyhabc (UserNotFound):

cat /data/replset0/log/rs0.log

2015-12-15T17:07:12.388+0800 I COMMAND [conn38] command admin.$cmd command: replSetHeartbeat { replSetHeartbeat: "rsshard0", pv: 1, v: 2, from: "192.168.14.198:4000", fromId: 2, checkEmpty: false } ntoreturn:1 keyUpdates:0 writeConflicts:0 numYields:0 reslen:142 locks:{} 19ms

2015-12-15T17:07:13.595+0800 I NETWORK [conn37] end connection 192.168.14.221:43932 (1 connection now open)

2015-12-15T17:07:13.596+0800 I NETWORK [initandlisten] connection accepted from 192.168.14.221:44114 #39 (2 connections now open)

2015-12-15T17:07:14.393+0800 I NETWORK [conn38] end connection 192.168.14.198:35566 (1 connection now open)

2015-12-15T17:07:14.394+0800 I NETWORK [initandlisten] connection accepted from 192.168.14.198:35568 #40 (2 connections now open)

2015-12-15T17:07:15.277+0800 I NETWORK [initandlisten] connection accepted from 127.0.0.1:46271 #41 (3 connections now open)

2015-12-15T17:07:15.283+0800 I ACCESS [conn41] SCRAM-SHA-1 authentication failed for lyhabc on admin from client 127.0.0.1 ; UserNotFound Could not find user lyhabc@admin

2015-12-15T17:07:15.291+0800 I NETWORK [conn41] end connection 127.0.0.1:46271 (2 connections now open)

Enabling the web interface

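vi /data/replset0/config/rs0.conf

journal=true

rest=true #enable the REST API, which adds more monitoring metrics to the web interface

httpinterface=true #enable the HTTP web interface, served on the mongod port + 1000 (here 5000)

port=4000

replSet=rsshard0

dbpath = /data/replset0/data/rs0

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb0.pid

logpath = /data/replset0/log/rs0.log

logappend = true

profile = 1

slowms = 5

fork = true

The HTTP interface and the REST API are deprecated since MongoDB 3.2 and were removed in 3.6.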

Performance monitoring

Key fields in db.serverStatus():

mem.resident (86): physical memory currently used by mongod, in MB. If it approaches the machine's RAM, the system has too little memory.

mem.virtual (1477): virtual memory mapped by the mongod process, in MB.

mem.supported (true): whether the platform supports reporting extended memory information.

mem.mapped (368): memory used to map the data files, in MB. If it is larger than physical RAM, the mapped space exceeds memory and not all data can stay resident, so page faults follow.

mem.mappedWithJournal (736): memory mapped including the journal, in MB.

mem.bits (64): mongod is running as a 64-bit build.

extra_info.page_faults (63): number of page faults; it rises when memory is insufficient.

globalLock.currentQueue.total (0): operations waiting for a lock. If it stays high, there is a concurrency problem, with locks held for too long.

globalLock.activeClients.total (12): active client connections, including internal system threads (the number of open connections is connections.current).

MongoDB monitoring tools:

mongostat --port 4000

mongotop --port 4000 --locks

mongotop --port 4000

Full output on a secondary:

rsshard0:SECONDARY> db.serverStatus()

{ "host" : "steven:4000", "version" : "3.0.7", "process" : "mongod", "pid" : NumberLong(1796), "uptime" : 63231, "uptimeMillis" : NumberLong(63230882), "uptimeEstimate" : 3033, "localTime" : ISODate("2015-12-15T03:26:39.707Z"), "asserts" : { "regular" : 0, "warning" : 0, "msg" : 0, "user" : 2226, "rollovers" : 0 }, "backgroundFlushing" : { "flushes" : 57, "total_ms" : 61, "average_ms" : 1.0701754385964912, "last_ms" : 0, "last_finished" : ISODate("2015-12-15T03:26:33.960Z") }, "connections" : { "current" : 1, "available" : 818, "totalCreated" : NumberLong(15) }, "cursors" : { "note" : "deprecated, use server status metrics", "clientCursors_size" : 0, "totalOpen" : 0, "pinned" : 0, "totalNoTimeout" : 0, "timedOut" : 0 }, "dur" : { "commits" : 28, "journaledMB" : 0, "writeToDataFilesMB" : 0, "compression" : 0, "commitsInWriteLock" : 0, "earlyCommits" : 0, "timeMs" : { "dt" : 3010, "prepLogBuffer" : 0, "writeToJournal" : 0, "writeToDataFiles" : 0, "remapPrivateView" : 0, "commits" : 33, "commitsInWriteLock" : 0 } }, "extra_info" : { "note" : "fields vary by platform", "heap_usage_bytes" : 63345440, "page_faults" : 63 }, "globalLock" : { "totalTime" : NumberLong("63230887000"), "currentQueue" : { "total" : 0, "readers" : 0, "writers" : 0 }, "activeClients" : { "total" : 12, "readers" : 0, "writers" : 0 } }, "locks" : { "Global" : { "acquireCount" : { "r" : NumberLong(27371), "w" : NumberLong(21), "R" : NumberLong(1), "W" : NumberLong(5) }, "acquireWaitCount" : { "r" : NumberLong(1) }, "timeAcquiringMicros" : { "r" : NumberLong(135387) } }, "MMAPV1Journal" : { "acquireCount" : { "r" : NumberLong(13668), "w" : NumberLong(45), "R" : NumberLong(31796) }, "acquireWaitCount" : { "w" : NumberLong(4), "R" : NumberLong(5) }, "timeAcquiringMicros" : { "w" : NumberLong(892), "R" : NumberLong(1278323) } }, "Database" : { "acquireCount" : { "r" : NumberLong(13665), "R" : NumberLong(7), "W" : NumberLong(21) }, "acquireWaitCount" : { "W" : NumberLong(1) }, "timeAcquiringMicros" : { "W" : NumberLong(21272) } }, "Collection" : { "acquireCount" : { "R" : NumberLong(13490) } }, "Metadata" : { "acquireCount" : { "R" : NumberLong(1) } }, "oplog" : { "acquireCount" : { "R" : NumberLong(900) } } }, "network" : { "bytesIn" : NumberLong(7646), "bytesOut" : NumberLong(266396), "numRequests" : NumberLong(113) }, "opcounters" : { "insert" : 0, "query" : 7, "update" : 0, "delete" : 0, "getmore" : 0, "command" : 107 }, "opcountersRepl" : { "insert" : 0, "query" : 0, "update" : 0, "delete" : 0, "getmore" : 0, "command" : 0 }, "repl" : { "setName" : "rsshard0", "setVersion" : 2, "ismaster" : false, "secondary" : true, "hosts" : [ "192.168.1.155:4000", "192.168.14.221:4000", "192.168.14.198:4000" ], "me" : "192.168.1.155:4000", "rbid" : 107705010 }, "storageEngine" : { "name" : "mmapv1" }, "writeBacksQueued" : false, "mem" : { "bits" : 64, "resident" : 86, "virtual" : 1477, "supported" : true, "mapped" : 368, "mappedWithJournal" : 736 }, "metrics" : { "commands" : { "count" : { "failed" : NumberLong(0), "total" : NumberLong(6) }, "dbStats" : { "failed" : NumberLong(0), "total" : NumberLong(1) }, "getLog" : { "failed" : NumberLong(0), "total" : NumberLong(4) }, "getnonce" : { "failed" : NumberLong(0), "total" : NumberLong(11) }, "isMaster" : { "failed" : NumberLong(0), "total" : NumberLong(16) }, "listCollections" : { "failed" : NumberLong(0), "total" : NumberLong(2) }, "listDatabases" : { "failed" : NumberLong(0), "total" : NumberLong(1) }, "listIndexes" : { "failed" : NumberLong(0), "total" : NumberLong(2) }, "ping" : { "failed" : NumberLong(0), "total" : NumberLong(18) }, "replSetGetStatus" : { "failed" : NumberLong(0), "total" : NumberLong(15) }, "replSetStepDown" : { "failed" : NumberLong(1), "total" : NumberLong(1) }, "serverStatus" : { "failed" : NumberLong(0), "total" : NumberLong(21) }, "top" : { "failed" : NumberLong(0), "total" : NumberLong(5) }, "whatsmyuri" : { "failed" : NumberLong(0), "total" : NumberLong(4) } }, "cursor" : { "timedOut" : NumberLong(0), "open" : { "noTimeout" : NumberLong(0), "pinned" : NumberLong(0), "total" : NumberLong(0) } }, "document" : { "deleted" : NumberLong(0), "inserted" : NumberLong(0), "returned" : NumberLong(5), "updated" : NumberLong(0) }, "getLastError" : { "wtime" : { "num" : 0, "totalMillis" : 0 }, "wtimeouts" : NumberLong(0) }, "operation" : { "fastmod" : NumberLong(0), "idhack" : NumberLong(0), "scanAndOrder" : NumberLong(0), "writeConflicts" : NumberLong(0) }, "queryExecutor" : { "scanned" : NumberLong(2), "scannedObjects" : NumberLong(5) }, "record" : { "moves" : NumberLong(0) }, "repl" : { "apply" : { "batches" : { "num" : 0, "totalMillis" : 0 }, "ops" : NumberLong(0) }, "buffer" : { "count" : NumberLong(0), "maxSizeBytes" : 268435456, "sizeBytes" : NumberLong(0) }, "network" : { "bytes" : NumberLong(0), "getmores" : { "num" : 0, "totalMillis" : 0 }, "ops" : NumberLong(0), "readersCreated" : NumberLong(1) }, "preload" : { "docs" : { "num" : 0, "totalMillis" : 0 }, "indexes" : { "num" : 0, "totalMillis" : 0 } } }, "storage" : { "freelist" : { "search" : { "bucketExhausted" : NumberLong(0), "requests" : NumberLong(0), "scanned" : NumberLong(0) } } }, "ttl" : { "deletedDocuments" : NumberLong(0), "passes" : NumberLong(56) } }, "ok" : 1 }

rsshard0:SECONDARY> use aaaa

switched to db aaaa

rsshard0:SECONDARY> db.stats()

{ "db" : "aaaa", "collections" : 4, "objects" : 7, "avgObjSize" : 149.71428571428572, "dataSize" : 1048, "storageSize" : 1069056, "numExtents" : 4, "indexes" : 1, "indexSize" : 8176, "fileSize" : 67108864, "nsSizeMB" : 16, "extentFreeList" : { "num" : 0, "totalSize" : 0 }, "dataFileVersion" : { "major" : 4, "minor" : 22 }, "ok" : 1 }
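The following is a small sketch in JavaScript (Node.js) that applies the rules of thumb above to a serverStatus result. The function name checkServerStatus, the machine-RAM parameter (1024 MB in the example) and both thresholds (more than 10 queued operations, more than 80% of connections in use) are assumptions chosen for illustration. A high currentQueue value only means something when it stays high across repeated samples.

```javascript
// Hypothetical helper applying the rules of thumb above to a db.serverStatus()
// result from an MMAPv1 instance. physicalMemMB (the machine's RAM) and the two
// thresholds are assumptions supplied by the caller.
function checkServerStatus(status, physicalMemMB, { maxQueue = 10, maxConnRatio = 0.8 } = {}) {
  const warnings = [];
  const mem = status.mem || {};
  if (mem.resident >= physicalMemMB) {
    warnings.push(`resident memory ${mem.resident} MB has reached physical RAM (${physicalMemMB} MB)`);
  }
  if (mem.mapped > physicalMemMB) {
    warnings.push(`mapped data files (${mem.mapped} MB) exceed physical RAM; expect page faults`);
  }
  // A single high sample is not conclusive; look for a queue that stays high.
  const queue = status.globalLock && status.globalLock.currentQueue;
  const queued = queue ? queue.total : 0;
  if (queued > maxQueue) {
    warnings.push(`${queued} operations are queued waiting for locks`);
  }
  const conns = status.connections;
  if (conns) {
    const ratio = conns.current / (conns.current + conns.available);
    if (ratio > maxConnRatio) {
      warnings.push(`${Math.round(ratio * 100)}% of available connections are in use`);
    }
  }
  return warnings;
}

// Values taken from the serverStatus output above; 1024 MB of RAM is assumed.
const sample = {
  mem: { resident: 86, mapped: 368 },
  globalLock: { currentQueue: { total: 0 } },
  connections: { current: 1, available: 818 },
};
console.log(checkServerStatus(sample, 1024));
```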

Backup

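tail /data/replset1/log/rs1.log

jobs

bg %1

netstat -lnp | grep mongo

w

top

Export a text backup (mongoexport writes JSON by default; add --type=csv together with --fields to get real CSV output):

mongoexport --port 4000 --db aaaa --collection testaaa --out /tmp/aaaa.csv

sz /tmp/aaaa.csv

Export a binary backup:

mongodump --port 4000 --db aaaa --out /tmp/aaaa.bak

ls /tmp

cd /tmp/aaaa.bak/

Package the backup:

tar zcvf aaaa.tar.gz aaaa/

ls

sz aaaa.tar.gz

cd aaaa

Inspect the BSON file; -C prints each byte in hex next to its printable characters:

hexdump -C testaaa.bson

history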
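Reading hexdump -C output is easier once you know the BSON layout. A document starts with an int32 total length. Then come its elements, each made of a type byte, a NUL-terminated field name and the value. A 0x00 byte terminates the document. The following sketch in JavaScript (Node.js) uses two hypothetical helpers. encodeBson encodes only int32 and string values. hexdumpC imitates the hexdump -C layout roughly. Keep two differences in mind when comparing with a real file. The mongo shell stores plain numbers as doubles (type 0x01, 8 bytes), so {id:1} inserted from the shell looks different. mongodump files also contain each document's _id.

```javascript
// Hypothetical helper: encode a flat document whose values are int32 numbers or
// strings into BSON. Real BSON has many more types; this sketch covers two.
function encodeBson(doc) {
  const parts = [];
  for (const [key, value] of Object.entries(doc)) {
    const name = Buffer.from(key + "\0", "utf8"); // field name as a NUL-terminated cstring
    if (Number.isInteger(value) && value >= -(2 ** 31) && value < 2 ** 31) {
      const int32 = Buffer.alloc(4);
      int32.writeInt32LE(value, 0); // BSON integers are little-endian
      parts.push(Buffer.from([0x10]), name, int32); // type 0x10 = int32
    } else if (typeof value === "string") {
      const bytes = Buffer.from(value + "\0", "utf8");
      const len = Buffer.alloc(4);
      len.writeInt32LE(bytes.length, 0); // length counts the trailing NUL
      parts.push(Buffer.from([0x02]), name, len, bytes); // type 0x02 = UTF-8 string
    } else {
      throw new Error(`unsupported value for field ${key}`);
    }
  }
  const body = Buffer.concat(parts);
  const total = Buffer.alloc(4);
  total.writeInt32LE(4 + body.length + 1, 0); // length prefix + elements + terminator
  return Buffer.concat([total, body, Buffer.from([0x00])]);
}

// Rough imitation of `hexdump -C`: offset, up to 16 hex bytes, printable chars.
function hexdumpC(buf) {
  const rows = [];
  for (let off = 0; off < buf.length; off += 16) {
    const chunk = [...buf.subarray(off, off + 16)];
    const hex = chunk.map((b) => b.toString(16).padStart(2, "0")).join(" ");
    const ascii = chunk.map((b) => (b >= 0x20 && b < 0x7f ? String.fromCharCode(b) : ".")).join("");
    rows.push(`${off.toString(16).padStart(8, "0")}  ${hex.padEnd(47)}  |${ascii}|`);
  }
  return rows.join("\n");
}

console.log(hexdumpC(encodeBson({ id: 1 })));
```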

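Resyncing a member of a MongoDB replica set (a member that starts with an empty data directory performs an initial sync, copying all data from another member of the set)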

http://www.linuxidc.com/Linux/2015-06/118981.htm?utm_source=tuicool&utm_medium=referral

Stop the mongod process cleanly. Either run db.shutdownServer() in the mongo shell or, on Linux, run mongod --shutdown, which must be given the instance's dbpath:

use admin;

db.shutdownServer() ;

mongod --shutdown --dbpath /data/mongodb/data

_id and ObjectId in MongoDB

http://blog.csdn.net/magneto7/article/details/23842941?utm_source=tuicool&utm_medium=referral

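Copying a collection: db.runCommand({cloneCollection:"db.collection",from:"198.61.104.31:27017"});

Copying a database: db.copyDatabase("sourceDb","targetDb","198.61.104.31:27017");

Flushing to disk: the fsync command, run through runCommand, writes data still held in memory to disk. With lock:1 it also blocks writes (reads still work) until db.fsyncUnlock() is called.

Syntax: db.runCommand({fsync:1,async:true})

async: whether to run the flush asynchronously

lock:1 locks the instance against writes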

Query Translator

http://www.querymongo.com/

{ "_id" : ObjectId("56341908c4393e7396b20594"), "id" : 2 }

{ "_id" : ObjectId("56551e020be4a0c3355a5ba7"), "id" : 1 }

{ "_id" : 121, "age" : 22, "Attribute" : 33 }

You do not need to create a collection before the first insert; inserting data creates it automatically.

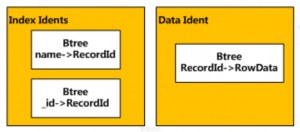

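If an inserted document has no _id field, one is generated as the primary key, of type ObjectId. ObjectIds suit distributed storage because any machine can generate them without coordination. An ObjectId is 12 bytes: a 4-byte timestamp, a 3-byte machine identifier, a 2-byte process id, and a 3-byte counter that starts at a random value. (Since MongoDB 3.4 the documentation describes the middle 5 bytes as a single per-process random value.)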

Every document must have an _id field, whether generated or set explicitly, and its value must be unique within the collection.
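The following sketch in JavaScript (Node.js) takes an ObjectId apart using the 3.0-era layout described above. It decodes the two generated _id values from the sample documents. decodeObjectId is a hypothetical helper. For ObjectIds created by 3.4+ drivers, read the machine and pid fields together as one random value. In the mongo shell, ObjectId("...").getTimestamp() returns just the time part.

```javascript
// Decode a 24-hex-character ObjectId string with the 3.0-era field layout:
// 4-byte timestamp | 3-byte machine id | 2-byte process id | 3-byte counter.
function decodeObjectId(hex) {
  if (!/^[0-9a-fA-F]{24}$/.test(hex)) {
    throw new Error("an ObjectId is 12 bytes, i.e. 24 hex characters");
  }
  const buf = Buffer.from(hex, "hex");
  const seconds = buf.readUInt32BE(0); // bytes 0-3: big-endian seconds since the Unix epoch
  return {
    seconds,
    date: new Date(seconds * 1000).toISOString(),
    machine: buf.subarray(4, 7).toString("hex"), // bytes 4-6
    pid: buf.readUInt16BE(7), // bytes 7-8
    counter: buf.readUIntBE(9, 3), // bytes 9-11, big-endian
  };
}

// The two generated _id values from the sample documents above.
const first = decodeObjectId("56341908c4393e7396b20594");
const second = decodeObjectId("56551e020be4a0c3355a5ba7");
console.log(first.date, second.date);
```

The timestamp comes first, so sorting by _id roughly follows insertion time, at one-second granularity.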

Insert

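db.users.insert({id:1, class:1}) //one document with two fields

db.users.insert([{id:1},{class:1}]) //two documents; the second argument of insert() is an options document, so insert({id:1},{class:1}) inserts only {id:1}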

Update

db.people.update({country:"JP"},{$set:{country:"DDDDDDD"}},{multi:true})
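What {multi:true} changes can be shown with an in-memory model in JavaScript (Node.js). updateWithSet is a hypothetical helper, not MongoDB's implementation. It models only exact-match filters and $set, and it counts matched documents the way nMatched does. The sample people array is made up for illustration.

```javascript
// In-memory model of update(filter, {$set: fields}, {multi}) semantics.
// It is NOT MongoDB's implementation: only exact-match filters and $set are modelled.
function updateWithSet(docs, filter, setFields, { multi = false } = {}) {
  let nMatched = 0;
  for (const doc of docs) {
    const matches = Object.entries(filter).every(([field, value]) => doc[field] === value);
    if (!matches) continue;
    Object.assign(doc, setFields); // $set overwrites (or adds) only the listed fields
    nMatched++;
    if (!multi) break; // without {multi:true}, update() touches only the first match
  }
  return { nMatched };
}

// Hypothetical sample collection.
const people = [{ name: "a", country: "JP" }, { name: "b", country: "CN" }, { name: "c", country: "JP" }];
console.log(updateWithSet(people, { country: "JP" }, { country: "DDDDDDD" }, { multi: true }));
```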

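Delete

db.people.remove({country:"DDDDDDD"}) //deletes the matching documents; indexes are kept

db.people.drop() //drops the collection: its data and its indexes

db.people.dropIndexes() //drops all indexes except the one on _id

db.people.dropIndex("name_1") //drops one index, given by name or by key pattern such as {name:1}

db.system.indexes.find() //lists the index definitions of the current database (MMAPv1 only; db.people.getIndexes() works with every storage engine)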

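{

"createdCollectionAutomatically" : false,

"numIndexesBefore" : 2,

"numIndexesAfter" : 3,

"ok" : 1

}

This is the result of an index build. numIndexesBefore is 2: the index MongoDB creates automatically on _id, plus the unique index added with db.people.ensureIndex({name:1},{unique:true}). numIndexesAfter is 3, so this call created one more index.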

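Addressing values inside a document (dot notation)

Field holding an array: field.arrayIndex

Field holding an embedded document: field.key

Field holding an array of embedded documents: field.arrayIndex.key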
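The three path forms can be shown with a small resolver in JavaScript (Node.js). getByPath and the sample order document are hypothetical illustrations, not MongoDB's query engine. In particular, MongoDB matches a non-numeric step into an array (such as items.sku) against every element, while this sketch just returns undefined.

```javascript
// Illustrative resolver for the three dot-notation forms above.
function getByPath(doc, path) {
  let node = doc;
  for (const step of path.split(".")) {
    if (Array.isArray(node)) {
      if (!/^\d+$/.test(step)) return undefined; // only numeric indexes are followed here
      node = node[Number(step)]; // field.arrayIndex
    } else if (node !== null && typeof node === "object") {
      node = node[step]; // field.key
    } else {
      return undefined; // the path goes deeper than the document
    }
  }
  return node;
}

// Hypothetical document with an array, an embedded document and an array of documents.
const order = {
  tags: ["red", "blue"],
  address: { city: "Shenzhen" },
  items: [{ sku: "A1", qty: 2 }, { sku: "B7", qty: 1 }],
};
console.log(getByPath(order, "tags.1"), getByPath(order, "address.city"), getByPath(order, "items.1.sku"));
// In a shell query the dotted path must be quoted, e.g. db.orders.find({"items.1.sku": "B7"})
```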

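The table below lists the data types commonly used in MongoDB.

| Data type | Description |
| --- | --- |
| String | String, the most commonly used type. In MongoDB only UTF-8 strings are valid. |
| Integer | Integer value. BSON has separate 32-bit and 64-bit integer types; in the mongo shell use NumberInt() or NumberLong(), because plain numbers are stored as doubles. |
| Boolean | Boolean value (true/false). |
| Double | Double-precision floating-point value. |
| Min/Max keys | Special values that compare lower (MinKey) or higher (MaxKey) than every other BSON value. |
| Array | Stores an array, a list, or multiple values under one key. |
| Timestamp | BSON timestamp (seconds plus an increment counter), used internally by MongoDB, for example in the oplog; use Date to record when a document was created or modified. |
| Object | Embedded document. |
| Null | Null value. |
| Symbol | Largely equivalent to String, but intended for languages that have a distinct symbol type; deprecated. |
| Date | Date and time, stored as a 64-bit count of milliseconds since the Unix epoch. You can specify your own by creating a Date object and passing year, month and day. |
| Object ID | Object ID, used for document IDs. |
| Binary Data | Binary data. |
| Code | JavaScript code stored in a document. |
| Regular expression | Regular expression. |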
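The types in the table are also what the $type query operator filters on. MongoDB 3.0 accepts only the numeric BSON type codes, and string aliases arrived in 3.2. The following sketch in JavaScript (Node.js) maps the table's names to those codes. The table's single "Integer" row corresponds to two codes, 16 and 18. BSON_TYPE_NUMBERS and typeQuery are hypothetical helpers.

```javascript
// BSON type numbers for the types in the table above, as used by $type.
const BSON_TYPE_NUMBERS = {
  "Double": 1,
  "String": 2,
  "Object": 3,
  "Array": 4,
  "Binary Data": 5,
  "Object ID": 7,
  "Boolean": 8,
  "Date": 9,
  "Null": 10,
  "Regular expression": 11,
  "Code": 13,
  "Symbol": 14,
  "32-bit integer": 16,
  "Timestamp": 17,
  "64-bit integer": 18,
  "Min key": -1,
  "Max key": 127,
};

// Hypothetical helper: build a {field: {$type: n}} filter from a type name.
// Note: before MongoDB 3.6, {$type: 4} matched only arrays containing a nested array.
function typeQuery(field, typeName) {
  const n = BSON_TYPE_NUMBERS[typeName];
  if (n === undefined) throw new Error(`unknown BSON type name: ${typeName}`);
  return { [field]: { $type: n } };
}

// e.g. documents whose age is stored as a double: db.people.find({ age: { $type: 1 } })
console.log(JSON.stringify(typeQuery("age", "Double")));
```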

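Two 100 ms defaults in MongoDB

1. The journal is committed to disk every 100 ms by default (MMAPv1's journalCommitInterval, when the journal and the data files are on the same device). This is a journal flush, not a checkpoint: the data files themselves are flushed every syncdelay seconds (60 by default).

2. Operations that take longer than 100 ms (the default slowms) are written to the log as slow operations; with profiling level 1 they are also recorded in the system.profile collection.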
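In the 3.0 text log format, the duration of a slow operation is the last token of its COMMAND/QUERY line, for example "locks:{} 229ms". The following sketch in JavaScript (Node.js) uses that to list operations at or above a threshold. The slowOps helper is hypothetical. It assumes one log entry per line, as mongod writes them; in the dump below the entries are run together. MongoDB 4.4+ writes structured JSON logs, which this sketch does not handle. The sample lines in the test come from the log below.

```javascript
// Pick out operations at or above slowms from a MongoDB 3.0 text log.
// Assumes one entry per line; COMMAND/QUERY/WRITE lines end with "<n>ms".
function slowOps(logText, slowms = 100) {
  const ops = [];
  for (const line of logText.split("\n")) {
    const m = line.match(/^(\S+) I (COMMAND|QUERY|WRITE)\s+\[(\w+)\].*\s(\d+)ms\s*$/);
    if (m && Number(m[4]) >= slowms) {
      ops.push({ time: m[1], component: m[2], conn: m[3], ms: Number(m[4]) });
    }
  }
  return ops;
}

const line = "2015-10-31T04:39:40.042+0800 I COMMAND [conn1] command admin.$cmd command: isMaster { isMaster: true } keyUpdates:0 writeConflicts:0 numYields:0 reslen:178 locks:{} 229ms";
console.log(slowOps(line));
```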

MongoDB's log

cat /data/mongodb/logs/mongo.log

Each database has its own directory (directoryPerDB is enabled).

2015-10-30T05:59:12.386+0800 I JOURNAL [initandlisten] journal dir=/data/mongodb/data/journal 2015-10-30T05:59:12.386+0800 I JOURNAL [initandlisten] recover : no journal files present, no recovery needed 2015-10-30T05:59:12.518+0800 I JOURNAL [durability] Durability thread started 2015-10-30T05:59:12.518+0800 I JOURNAL [journal writer] Journal writer thread started 2015-10-30T05:59:12.521+0800 I CONTROL [initandlisten] MongoDB starting : pid=4479 port=27017 dbpath=/data/mongodb/data/ 64-bit host=steven 2015-10-30T05:59:12.521+0800 I CONTROL [initandlisten] 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'. 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never' 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'. 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never' 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 1024 processes, 64000 files. Number of processes should be at least 32000 : 0.5 times number of files. 
2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] db version v3.0.7 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] git version: 6ce7cbe8c6b899552dadd907604559806aa2e9bd 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] build info: Linux ip-10-101-218-12 2.6.32-220.el6.x86_64 #1 SMP Wed Nov 9 08:03:13 EST 2011 x86_64 BOOST_LIB_VERSION=1_49 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] allocator: tcmalloc 2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] options: { config: "/etc/mongod.conf", net: { port: 27017 }, processManagement: { fork: true, pidFilePath: "/usr/local/mongodb/mongo.pid" }, replication: { oplogSizeMB: 2048 }, sharding: { clusterRole: "shardsvr" }, storage: { dbPath: "/data/mongodb/data/", directoryPerDB: true }, systemLog: { destination: "file", logAppend: true, path: "/data/mongodb/logs/mongo.log" } } 2015-10-30T05:59:12.536+0800 I INDEX [initandlisten] allocating new ns file /data/mongodb/data/local/local.ns, filling with zeroes... 2015-10-30T05:59:12.858+0800 I STORAGE [FileAllocator] allocating new datafile /data/mongodb/data/local/local.0, filling with zeroes... 
//the data file is initialized by filling it with zeroes 2015-10-30T05:59:12.858+0800 I STORAGE [FileAllocator] creating directory /data/mongodb/data/local/_tmp 2015-10-30T05:59:12.866+0800 I STORAGE [FileAllocator] done allocating datafile /data/mongodb/data/local/local.0, size: 64MB, took 0.001 secs 2015-10-30T05:59:12.876+0800 I NETWORK [initandlisten] waiting for connections on port 27017 2015-10-30T05:59:14.325+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:40766 #1 (1 connection now open) 2015-10-30T05:59:14.328+0800 I NETWORK [conn1] end connection 192.168.1.106:40766 (0 connections now open) 2015-10-30T05:59:24.339+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:40769 #2 (1 connection now open) //connection accepted from 192.168.1.106 2015-10-30T06:00:20.348+0800 I CONTROL [signalProcessingThread] got signal 15 (Terminated), will terminate after current cmd ends 2015-10-30T06:00:20.348+0800 I CONTROL [signalProcessingThread] now exiting 2015-10-30T06:00:20.348+0800 I NETWORK [signalProcessingThread] shutdown: going to close listening sockets... 2015-10-30T06:00:20.348+0800 I NETWORK [signalProcessingThread] closing listening socket: 6 2015-10-30T06:00:20.348+0800 I NETWORK [signalProcessingThread] closing listening socket: 7 2015-10-30T06:00:20.348+0800 I NETWORK [signalProcessingThread] removing socket file: /tmp/mongodb-27017.sock //communication through a Unix domain socket file 2015-10-30T06:00:20.348+0800 I NETWORK [signalProcessingThread] shutdown: going to flush diaglog... 2015-10-30T06:00:20.348+0800 I NETWORK [signalProcessingThread] shutdown: going to close sockets... 2015-10-30T06:00:20.348+0800 I STORAGE [signalProcessingThread] shutdown: waiting for fs preallocator... 2015-10-30T06:00:20.348+0800 I STORAGE [signalProcessingThread] shutdown: final commit... 2015-10-30T06:00:20.349+0800 I JOURNAL [signalProcessingThread] journalCleanup...
2015-10-30T06:00:20.349+0800 I JOURNAL [signalProcessingThread] removeJournalFiles 2015-10-30T06:00:20.349+0800 I NETWORK [conn2] end connection 192.168.1.106:40769 (0 connections now open) 2015-10-30T06:00:20.356+0800 I JOURNAL [signalProcessingThread] Terminating durability thread ... 2015-10-30T06:00:20.453+0800 I JOURNAL [journal writer] Journal writer thread stopped 2015-10-30T06:00:20.454+0800 I JOURNAL [durability] Durability thread stopped 2015-10-30T06:00:20.455+0800 I STORAGE [signalProcessingThread] shutdown: closing all files... 2015-10-30T06:00:20.457+0800 I STORAGE [signalProcessingThread] closeAllFiles() finished 2015-10-30T06:00:20.457+0800 I STORAGE [signalProcessingThread] shutdown: removing fs lock... 2015-10-30T06:00:20.457+0800 I CONTROL [signalProcessingThread] dbexit: rc: 0 2015-10-30T06:01:20.259+0800 I CONTROL ***** SERVER RESTARTED ***** 2015-10-30T06:01:20.290+0800 I JOURNAL [initandlisten] journal dir=/data/mongodb/data/journal 2015-10-30T06:01:20.291+0800 I JOURNAL [initandlisten] recover : no journal files present, no recovery needed 2015-10-30T06:01:20.439+0800 I JOURNAL [initandlisten] preallocateIsFaster=true 2.36 2015-10-30T06:01:20.544+0800 I JOURNAL [durability] Durability thread started 2015-10-30T06:01:20.546+0800 I JOURNAL [journal writer] Journal writer thread started 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] MongoDB starting : pid=4557 port=27017 dbpath=/data/mongodb/data/ 64-bit host=steven 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'. 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never' 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'. 
2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never' 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 1024 processes, 64000 files. Number of processes should be at least 32000 : 0.5 times number of files. 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] db version v3.0.7 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] git version: 6ce7cbe8c6b899552dadd907604559806aa2e9bd 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] build info: Linux ip-10-101-218-12 2.6.32-220.el6.x86_64 #1 SMP Wed Nov 9 08:03:13 EST 2011 x86_64 BOOST_LIB_VERSION=1_49 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] allocator: tcmalloc 2015-10-30T06:01:20.547+0800 I CONTROL [initandlisten] options: { config: "/etc/mongod.conf", net: { port: 27017 }, processManagement: { fork: true, pidFilePath: "/usr/local/mongodb/mongo.pid" }, replication: { oplogSizeMB: 2048 }, sharding: { clusterRole: "shardsvr" }, storage: { dbPath: "/data/mongodb/data/", directoryPerDB: true }, systemLog: { destination: "file", logAppend: true, path: "/data/mongodb/logs/mongo.log" } } 2015-10-30T06:01:20.582+0800 I NETWORK [initandlisten] waiting for connections on port 27017 2015-10-30T06:01:28.390+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:40798 #1 (1 connection now open) 2015-10-30T06:01:28.398+0800 I NETWORK [conn1] end connection 192.168.1.106:40798 (0 connections now open) 2015-10-30T06:01:38.394+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:40800 #2 (1 connection now open) 2015-10-30T07:01:39.383+0800 I NETWORK [conn2] end connection 192.168.1.106:40800 (0 connections now open) 2015-10-30T07:01:39.384+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:42327 #3 (1 connection now open) 2015-10-30T07:32:40.910+0800 I NETWORK 
[conn3] end connection 192.168.1.106:42327 (0 connections now open) 2015-10-30T07:32:40.910+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:43130 #4 (2 connections now open) 2015-10-30T08:32:43.957+0800 I NETWORK [conn4] end connection 192.168.1.106:43130 (0 connections now open) 2015-10-30T08:32:43.957+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:46481 #5 (2 connections now open) 2015-10-31T04:27:00.240+0800 I CONTROL ***** SERVER RESTARTED ***** //unclean shutdown, recovery needed: Fengsheng kicked out the machine's power plug 2015-10-31T04:27:00.703+0800 W - [initandlisten] Detected unclean shutdown - /data/mongodb/data/mongod.lock is not empty. //detected that the previous shutdown was not clean 2015-10-31T04:27:00.812+0800 I JOURNAL [initandlisten] journal dir=/data/mongodb/data/journal 2015-10-31T04:27:00.812+0800 I JOURNAL [initandlisten] recover begin //MongoDB begins recovery and records the LSN 2015-10-31T04:27:01.048+0800 I JOURNAL [initandlisten] recover lsn: 6254831 2015-10-31T04:27:01.048+0800 I JOURNAL [initandlisten] recover /data/mongodb/data/journal/j._0 2015-10-31T04:27:01.089+0800 I JOURNAL [initandlisten] recover skipping application of section seq:0 < lsn:6254831 2015-10-31T04:27:01.631+0800 I JOURNAL [initandlisten] recover cleaning up 2015-10-31T04:27:01.632+0800 I JOURNAL [initandlisten] removeJournalFiles 2015-10-31T04:27:01.680+0800 I JOURNAL [initandlisten] recover done 2015-10-31T04:27:03.006+0800 I JOURNAL [initandlisten] preallocateIsFaster=true 25.68 2015-10-31T04:27:04.076+0800 I JOURNAL [initandlisten] preallocateIsFaster=true 19.9 2015-10-31T04:27:06.896+0800 I JOURNAL [initandlisten] preallocateIsFaster=true 35.5 2015-10-31T04:27:06.896+0800 I JOURNAL [initandlisten] preallocateIsFaster check took 5.215 secs 2015-10-31T04:27:06.896+0800 I JOURNAL [initandlisten] preallocating a journal file /data/mongodb/data/journal/prealloc.0 2015-10-31T04:27:09.005+0800 I - [initandlisten] File Preallocator Progress: 325058560/1073741824 30% 2015-10-31T04:27:12.236+0800 I - [initandlisten] File
Preallocator Progress: 440401920/1073741824 41% 2015-10-31T04:27:15.006+0800 I - [initandlisten] File Preallocator Progress: 713031680/1073741824 66% 2015-10-31T04:27:18.146+0800 I - [initandlisten] File Preallocator Progress: 817889280/1073741824 76% 2015-10-31T04:27:21.130+0800 I - [initandlisten] File Preallocator Progress: 912261120/1073741824 84% 2015-10-31T04:27:24.477+0800 I - [initandlisten] File Preallocator Progress: 1017118720/1073741824 94% 2015-10-31T04:28:08.132+0800 I JOURNAL [initandlisten] preallocating a journal file /data/mongodb/data/journal/prealloc.1 2015-10-31T04:28:11.904+0800 I - [initandlisten] File Preallocator Progress: 629145600/1073741824 58% 2015-10-31T04:28:14.260+0800 I - [initandlisten] File Preallocator Progress: 692060160/1073741824 64% 2015-10-31T04:28:17.335+0800 I - [initandlisten] File Preallocator Progress: 796917760/1073741824 74% 2015-10-31T04:28:20.440+0800 I - [initandlisten] File Preallocator Progress: 859832320/1073741824 80% 2015-10-31T04:28:23.274+0800 I - [initandlisten] File Preallocator Progress: 922746880/1073741824 85% 2015-10-31T04:28:26.638+0800 I - [initandlisten] File Preallocator Progress: 1017118720/1073741824 94% 2015-10-31T04:29:01.643+0800 I JOURNAL [initandlisten] preallocating a journal file /data/mongodb/data/journal/prealloc.2 2015-10-31T04:29:04.032+0800 I - [initandlisten] File Preallocator Progress: 450887680/1073741824 41% 2015-10-31T04:29:09.015+0800 I - [initandlisten] File Preallocator Progress: 566231040/1073741824 52% 2015-10-31T04:29:12.181+0800 I - [initandlisten] File Preallocator Progress: 828375040/1073741824 77% 2015-10-31T04:29:15.125+0800 I - [initandlisten] File Preallocator Progress: 964689920/1073741824 89% 2015-10-31T04:29:34.755+0800 I JOURNAL [durability] Durability thread started 2015-10-31T04:29:34.755+0800 I JOURNAL [journal writer] Journal writer thread started 2015-10-31T04:29:35.029+0800 I CONTROL [initandlisten] MongoDB starting : pid=1672 port=27017 
dbpath=/data/mongodb/data/ 64-bit host=steven 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'. 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never' 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'. 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never' 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 1024 processes, 64000 files. Number of processes should be at least 32000 : 0.5 times number of files. 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] db version v3.0.7 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] git version: 6ce7cbe8c6b899552dadd907604559806aa2e9bd 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] build info: Linux ip-10-101-218-12 2.6.32-220.el6.x86_64 #1 SMP Wed Nov 9 08:03:13 EST 2011 x86_64 BOOST_LIB_VERSION=1_49 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] allocator: tcmalloc 2015-10-31T04:29:35.031+0800 I CONTROL [initandlisten] options: { config: "/etc/mongod.conf", net: { port: 27017 }, processManagement: { fork: true, pidFilePath: "/usr/local/mongodb/mongo.pid" }, replication: { oplogSizeMB: 2048 }, sharding: { clusterRole: "shardsvr" }, storage: { dbPath: "/data/mongodb/data/", directoryPerDB: true }, systemLog: { destination: "file", logAppend: true, path: "/data/mongodb/logs/mongo.log" } } 2015-10-31T04:29:36.869+0800 I NETWORK [initandlisten] waiting for connections on port 27017 2015-10-31T04:39:39.671+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:3134 #1 (1 connection now open) 
2015-10-31T04:39:40.042+0800 I COMMAND [conn1] command admin.$cmd command: isMaster { isMaster: true } keyUpdates:0 writeConflicts:0 numYields:0 reslen:178 locks:{} 229ms

2015-10-31T04:39:40.379+0800 I NETWORK [conn1] end connection 192.168.1.106:3134 (0 connections now open)

2015-10-31T04:40:10.117+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:3137 #2 (1 connection now open)

2015-10-31T04:40:13.357+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:3138 #3 (2 connections now open)

2015-10-31T04:40:13.805+0800 I COMMAND [conn3] command local.$cmd command: usersInfo { usersInfo: 1 } keyUpdates:0 writeConflicts:0 numYields:0 reslen:49 locks:{ Global: { acquireCount: { r: 2 } }, MMAPV1Journal: { acquireCount: { r: 1 } }, Database: { acquireCount: { r: 1 } }, Collection: { acquireCount: { R: 1 } } } 304ms

2015-10-31T04:49:30.223+0800 I NETWORK [conn2] end connection 192.168.1.106:3137 (1 connection now open)

2015-10-31T04:49:30.223+0800 I NETWORK [conn3] end connection 192.168.1.106:3138 (0 connections now open)

2015-10-31T04:56:27.271+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:4335 #4 (1 connection now open)

2015-10-31T04:56:29.449+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:4336 #5 (2 connections now open)

2015-10-31T04:58:17.514+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:4356 #6 (3 connections now open)

2015-10-31T05:02:55.219+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:4902 #7 (4 connections now open)

2015-10-31T05:03:57.954+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:4907 #8 (5 connections now open)

2015-10-31T05:10:25.905+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:5064 #9 (6 connections now open)

2015-10-31T05:16:00.026+0800 I NETWORK [conn7] end connection 192.168.1.106:4902 (5 connections now open)

2015-10-31T05:16:00.101+0800 I NETWORK [conn8] end connection 192.168.1.106:4907 (4 connections now open)

2015-10-31T05:16:00.163+0800 I NETWORK [conn9] end connection 192.168.1.106:5064 (3 connections now open)

2015-10-31T05:26:28.837+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:5654 #10 (4 connections now open)

2015-10-31T05:26:28.837+0800 I NETWORK [conn4] end connection 192.168.1.106:4335 (2 connections now open)

2015-10-31T05:26:30.969+0800 I NETWORK [conn5] end connection 192.168.1.106:4336 (2 connections now open)

2015-10-31T05:26:30.973+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:5655 #11 (3 connections now open)

2015-10-31T05:56:30.336+0800 I NETWORK [conn10] end connection 192.168.1.106:5654 (2 connections now open)

2015-10-31T05:56:30.337+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:6153 #12 (3 connections now open)

2015-10-31T05:56:32.457+0800 I NETWORK [conn11] end connection 192.168.1.106:5655 (2 connections now open)

2015-10-31T05:56:32.458+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:6154 #13 (4 connections now open)

2015-10-31T06:26:31.837+0800 I NETWORK [conn12] end connection 192.168.1.106:6153 (2 connections now open)

2015-10-31T06:26:31.838+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:6514 #14 (3 connections now open)

2015-10-31T06:26:33.961+0800 I NETWORK [conn13] end connection 192.168.1.106:6154 (2 connections now open)

2015-10-31T06:26:33.962+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:6515 #15 (4 connections now open)

2015-10-31T06:27:09.518+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:6563 #16 (4 connections now open)

2015-10-31T06:29:57.407+0800 I INDEX [conn16] allocating new ns file /data/mongodb/data/testlyh/testlyh.ns, filling with zeroes...

2015-10-31T06:29:57.846+0800 I STORAGE [FileAllocator] allocating new datafile /data/mongodb/data/testlyh/testlyh.0, filling with zeroes...

2015-10-31T06:29:57.847+0800 I STORAGE [FileAllocator] creating directory /data/mongodb/data/testlyh/_tmp

2015-10-31T06:29:57.871+0800 I STORAGE [FileAllocator] done allocating datafile /data/mongodb/data/testlyh/testlyh.0, size: 64MB, took 0.003 secs

2015-10-31T06:29:57.890+0800 I COMMAND [conn16] command testlyh.$cmd command: create { create: "temporary" } keyUpdates:0 writeConflicts:0 numYields:0 reslen:37 locks:{ Global: { acquireCount: { r: 1, w: 1 } }, MMAPV1Journal: { acquireCount: { w: 6 } }, Database: { acquireCount: { W: 1 } }, Metadata: { acquireCount: { W: 4 } } } 483ms

2015-10-31T06:29:57.894+0800 I COMMAND [conn16] CMD: drop testlyh.temporary

2015-10-31T06:45:06.955+0800 I NETWORK [conn16] end connection 192.168.1.106:6563 (3 connections now open)

2015-10-31T06:56:33.323+0800 I NETWORK [conn14] end connection 192.168.1.106:6514 (2 connections now open)

2015-10-31T06:56:33.324+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:7692 #17 (3 connections now open)

2015-10-31T06:56:35.461+0800 I NETWORK [conn15] end connection 192.168.1.106:6515 (2 connections now open)

2015-10-31T06:56:35.462+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:7693 #18 (4 connections now open)

2015-10-31T07:13:30.230+0800 I NETWORK [initandlisten] connection accepted from 127.0.0.1:51696 #19 (4 connections now open)

2015-10-31T07:21:06.715+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:8237 #20 (5 connections now open)

2015-10-31T07:21:32.193+0800 I INDEX [conn6] build index on: local.people properties: { v: 1, unique: true, key: { name: 1.0 }, name: "name_1", ns: "local.people" } //create an index

2015-10-31T07:21:32.193+0800 I INDEX [conn6] building index using bulk method //the index is built by bulk insert

2015-10-31T07:21:32.194+0800 I INDEX [conn6] build index done. scanned 36 total records. 0 secs

2015-10-31T07:26:34.826+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:8328 #21 (6 connections now open)

2015-10-31T07:26:34.827+0800 I NETWORK [conn17] end connection 192.168.1.106:7692 (4 connections now open)

2015-10-31T07:26:36.962+0800 I NETWORK [conn18] end connection 192.168.1.106:7693 (4 connections now open)

2015-10-31T07:26:36.963+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:8329 #22 (6 connections now open)

2015-10-31T07:51:08.214+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9202 #23 (6 connections now open)

2015-10-31T07:51:08.214+0800 I NETWORK [conn20] end connection 192.168.1.106:8237 (4 connections now open)

2015-10-31T07:56:36.327+0800 I NETWORK [conn21] end connection 192.168.1.106:8328 (4 connections now open)

2015-10-31T07:56:36.328+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9310 #24 (6 connections now open)

2015-10-31T07:56:38.450+0800 I NETWORK [conn22] end connection 192.168.1.106:8329 (4 connections now open)

2015-10-31T07:56:38.452+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9313 #25 (5 connections now open)

2015-10-31T08:03:56.823+0800 I NETWORK [conn25] end connection 192.168.1.106:9313 (4 connections now open)

2015-10-31T08:03:58.309+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9470 #26 (5 connections now open)

2015-10-31T08:03:58.309+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9471 #27 (6 connections now open)

2015-10-31T08:03:58.313+0800 I NETWORK [conn26] end connection 192.168.1.106:9470 (5 connections now open)

2015-10-31T08:03:58.314+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9469 #28 (6 connections now open)

2015-10-31T08:03:58.315+0800 I NETWORK [conn27] end connection 192.168.1.106:9471 (5 connections now open)

2015-10-31T08:03:58.317+0800 I NETWORK [conn28] end connection 192.168.1.106:9469 (4 connections now open)

2015-10-31T08:04:04.852+0800 I NETWORK [conn19] end connection 127.0.0.1:51696 (3 connections now open)

2015-10-31T08:04:05.944+0800 I NETWORK [conn23] end connection 192.168.1.106:9202 (2 connections now open)

2015-10-31T08:04:06.215+0800 I NETWORK [conn24] end connection 192.168.1.106:9310 (1 connection now open)

2015-10-31T08:04:09.233+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9531 #29 (2 connections now open)

2015-10-31T08:04:09.233+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9530 #30 (3 connections now open)

2015-10-31T08:04:09.233+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:9532 #31 (4 connections now open)

2015-10-31T08:34:18.767+0800 I NETWORK [conn29] end connection 192.168.1.106:9531 (3 connections now open)

2015-10-31T08:34:18.767+0800 I NETWORK [conn30] end connection 192.168.1.106:9530 (3 connections now open)

2015-10-31T08:34:18.769+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10157 #32 (3 connections now open)

2015-10-31T08:34:18.769+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10158 #33 (4 connections now open)

2015-10-31T08:34:18.771+0800 I NETWORK [conn31] end connection 192.168.1.106:9532 (3 connections now open)

2015-10-31T08:34:18.774+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10159 #34 (4 connections now open)

2015-10-31T08:36:23.662+0800 I NETWORK [conn33] end connection 192.168.1.106:10158 (3 connections now open)

2015-10-31T08:36:23.933+0800 I NETWORK [conn6] end connection 192.168.1.106:4356 (2 connections now open)

2015-10-31T08:36:24.840+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10238 #35 (3 connections now open)

2015-10-31T08:36:24.840+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10239 #36 (4 connections now open)

2015-10-31T08:36:24.844+0800 I NETWORK [conn36] end connection 192.168.1.106:10239 (3 connections now open)

2015-10-31T08:36:24.845+0800 I NETWORK [conn35] end connection 192.168.1.106:10238 (2 connections now open)

2015-10-31T08:36:28.000+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10279 #37 (3 connections now open)

2015-10-31T08:36:28.004+0800 I NETWORK [conn37] end connection 192.168.1.106:10279 (2 connections now open)

2015-10-31T08:36:32.751+0800 I NETWORK [conn32] end connection 192.168.1.106:10157 (1 connection now open)

2015-10-31T08:36:32.756+0800 I NETWORK [conn34] end connection 192.168.1.106:10159 (0 connections now open)

2015-10-31T08:36:35.835+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10339 #38 (1 connection now open)

2015-10-31T08:36:35.837+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10341 #39 (2 connections now open)

2015-10-31T08:36:35.837+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:10340 #40 (3 connections now open)

2015-10-31T09:06:45.368+0800 I NETWORK [conn39] end connection 192.168.1.106:10341 (2 connections now open)

2015-10-31T09:06:45.370+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:12600 #41 (3 connections now open)

2015-10-31T09:06:45.371+0800 I NETWORK [conn40] end connection 192.168.1.106:10340 (2 connections now open)

2015-10-31T09:06:45.371+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:12601 #42 (4 connections now open)

2015-10-31T09:06:45.380+0800 I NETWORK [conn38] end connection 192.168.1.106:10339 (2 connections now open)

2015-10-31T09:06:45.381+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:12602 #43 (4 connections now open)

2015-10-31T09:23:54.705+0800 I NETWORK [initandlisten] connection accepted from 127.0.0.1:51697 #44 (4 connections now open)

2015-10-31T09:25:07.727+0800 I INDEX [conn44] allocating new ns file /data/mongodb/data/test/test.ns, filling with zeroes...

2015-10-31T09:25:08.375+0800 I STORAGE [FileAllocator] allocating new datafile /data/mongodb/data/test/test.0, filling with zeroes...

2015-10-31T09:25:08.375+0800 I STORAGE [FileAllocator] creating directory /data/mongodb/data/test/_tmp

2015-10-31T09:25:08.378+0800 I STORAGE [FileAllocator] done allocating datafile /data/mongodb/data/test/test.0, size: 64MB, took 0.001 secs

2015-10-31T09:25:08.386+0800 I WRITE [conn44] insert test.users query: { _id: ObjectId('56341873c4393e7396b20592'), id: 1.0 } ninserted:1 keyUpdates:0 writeConflicts:0 numYields:0 locks:{ Global: { acquireCount: { r: 2, w: 2 } }, MMAPV1Journal: { acquireCount: { w: 8 } }, Database: { acquireCount: { w: 1, W: 1 } }, Collection: { acquireCount: { W: 1 } }, Metadata: { acquireCount: { W: 4 } } } 659ms

2015-10-31T09:25:08.386+0800 I COMMAND [conn44] command test.$cmd command: insert { insert: "users", documents: [ { _id: ObjectId('56341873c4393e7396b20592'), id: 1.0 } ], ordered: true } keyUpdates:0 writeConflicts:0 numYields:0 reslen:40 locks:{ Global: { acquireCount: { r: 2, w: 2 } }, MMAPV1Journal: { acquireCount: { w: 8 } }, Database: { acquireCount: { w: 1, W: 1 } }, Collection: { acquireCount: { W: 1 } }, Metadata: { acquireCount: { W: 4 } } } 660ms

2015-10-31T09:26:09.405+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:13220 #45 (5 connections now open)

2015-10-31T09:36:46.873+0800 I NETWORK [conn41] end connection 192.168.1.106:12600 (4 connections now open)

2015-10-31T09:36:46.874+0800 I NETWORK [conn42] end connection 192.168.1.106:12601 (3 connections now open)

2015-10-31T09:36:46.875+0800 I NETWORK [conn43] end connection 192.168.1.106:12602 (2 connections now open)

2015-10-31T09:36:46.875+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:13498 #46 (3 connections now open)

2015-10-31T09:36:46.876+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:13499 #47 (4 connections now open)

2015-10-31T09:36:46.876+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:13500 #48 (5 connections now open)

2015-10-31T09:43:52.490+0800 I INDEX [conn45] build index on: local.people properties: { v: 1, key: { country: 1.0 }, name: "country_1", ns: "local.people" }

2015-10-31T09:43:52.490+0800 I INDEX [conn45] building index using bulk method

2015-10-31T09:43:52.491+0800 I INDEX [conn45] build index done. scanned 36 total records. 0 secs

2015-10-31T09:51:32.977+0800 I INDEX [conn45] build index on: local.people properties: { v: 1, key: { country: 1.0, name: 1.0 }, name: "country_1_name_1", ns: "local.people" } //create a compound index

2015-10-31T09:51:32.977+0800 I INDEX [conn45] building index using bulk method

2015-10-31T09:51:32.977+0800 I INDEX [conn45] build index done. scanned 36 total records. 0 secs

2015-10-31T09:59:49.802+0800 I NETWORK [conn44] end connection 127.0.0.1:51697 (4 connections now open)

2015-10-31T10:06:48.357+0800 I NETWORK [conn47] end connection 192.168.1.106:13499 (3 connections now open)

2015-10-31T10:06:48.358+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:14438 #49 (5 connections now open)

2015-10-31T10:06:48.358+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:14439 #50 (5 connections now open)

2015-10-31T10:06:48.358+0800 I NETWORK [conn48] end connection 192.168.1.106:13500 (4 connections now open)

2015-10-31T10:06:48.358+0800 I NETWORK [conn46] end connection 192.168.1.106:13498 (4 connections now open)

2015-10-31T10:06:48.359+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:14440 #51 (5 connections now open)

2015-10-31T10:12:15.409+0800 I INDEX [conn45] build index on: local.users properties: { v: 1, key: { Attribute: 1.0 }, name: "Attribute_1", ns: "local.users" }

2015-10-31T10:12:15.409+0800 I INDEX [conn45] building index using bulk method

2015-10-31T10:12:15.409+0800 I INDEX [conn45] build index done. scanned 35 total records. 0 secs

2015-10-31T10:28:27.422+0800 I COMMAND [conn45] CMD: dropIndexes local.people //drop the indexes

2015-11-25T15:25:23.248+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:23227 #76 (4 connections now open)

2015-11-25T15:25:23.247+0800 I NETWORK [conn73] end connection 192.168.1.106:21648 (2 connections now open)

2015-11-25T15:25:36.226+0800 I NETWORK [conn75] end connection 192.168.1.106:21659 (2 connections now open)

2015-11-25T15:25:36.227+0800 I NETWORK [conn74] end connection 192.168.1.106:21658 (1 connection now open)

2015-11-25T15:25:36.227+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:23236 #77 (2 connections now open)

2015-11-25T15:25:36.227+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:23237 #78 (3 connections now open)
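The log above uses the 3.0 line layout: a timestamp, a severity (I, W, E...), a component (NETWORK, INDEX, STORAGE, COMMAND...), an optional [context] and then the message. The sketch below is plain JavaScript for Node.js. It splits a line into those fields, which makes it easy to pull out, for example, only the index builds or the open-connection counts. The layout it assumes was inferred from the lines shown here, not taken from an official grammar.

```javascript
// Parse one MongoDB 3.0-style log line into its fields.
// Assumed layout (inferred from the sample log, not an official grammar):
//   <timestamp +hhmm> <severity> <component> [<context>] <message>
// The [<context>] part is optional, e.g. "I CONTROL ***** SERVER RESTARTED *****".
const LOG_LINE = /^(\S+)\s+([FEWID]\d?)\s+(\S+)\s*(?:\[([^\]]*)\]\s*)?(.*)$/;

function parseLogLine(line) {
  const m = LOG_LINE.exec(line.trim());
  if (!m) return null; // not in the expected layout
  // "+0800" -> "+08:00" so Date receives a standard ISO 8601 string.
  const iso = m[1].replace(/([+-]\d{2})(\d{2})$/, "$1:$2");
  return {
    time: new Date(iso),
    severity: m[2],
    component: m[3],
    context: m[4] === undefined ? null : m[4],
    message: m[5],
  };
}

// Extract N from "... (N connection(s) now open)", or null if absent.
function openConnections(entry) {
  const m = /\((\d+) connections? now open\)/.exec(entry.message);
  return m ? Number(m[1]) : null;
}

// Usage: a few lines copied from the log above.
const sample = [
  "2015-10-31T07:21:32.193+0800 I INDEX [conn6] building index using bulk method",
  "2015-10-31T07:26:34.826+0800 I NETWORK [initandlisten] connection accepted from 192.168.1.106:8328 #21 (6 connections now open)",
  "2015-10-31T07:21:32.194+0800 I INDEX [conn6] build index done. scanned 36 total records. 0 secs",
];
const entries = sample.map(parseLogLine);
console.log(entries.filter((e) => e.component === "INDEX").map((e) => e.message));
console.log(entries.map(openConnections)); // [ null, 6, null ]
```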

Replica set setup steps

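1. Install MongoDB on every machine: http://www.cnblogs.com/lyhabc/p/8529966.html

2. Set up the keyfile: generate it on the primary, then copy it to every secondary.

openssl rand -base64 741 > /data/mongodb/mongodb27017/data/mongodb-keyfile

chown -R mongodb.mongodb /data/mongodb/mongodb27017/data/

chmod 600 /data/mongodb/mongodb27017/data/mongodb-keyfile

chown mongodb.mongodb /data/mongodb/mongodb27017/data/mongodb-keyfile

3. In mongod.conf on all 3 machines, set

replSet=<replica set name>

keyFile=/data/mongodb/mongodb27017/data/mongodb-keyfile

Then restart mongod on every machine.

4. Run the following on the primary. The _id ("dbset") must match the replSet value in the config file. Secondaries do not replicate from hidden or slaveDelay members, and such members must have priority 0. An arbiter holds no data, so hidden and slaveDelay do not apply to it.

use admin

config = { _id:"dbset", members:[ {_id:0,host:"192.168.1.155:27017",priority:3,votes:1}, {_id:1,host:"192.168.1.156:27017",priority:2,votes:1}, {_id:2,host:"192.168.1.157:27017",arbiterOnly:true,votes:1}] }

#initiate the replica set; the mongod instance on which this runs normally becomes the primary

rs.initiate(config);

5. By default, reads are not allowed on secondaries. To allow them, run the command below in the shell connected to each secondary. The setting applies to that shell connection only.

db.getMongo().setSlaveOk();

6. Check the state of the members:

rs.status();

rs.conf() //show the configuration

7. Test: insert data on the primary, then check that it has been replicated to the secondaries.

db.testdb.find();

8. Set the default write concern to majority:

cfg = rs.conf()

cfg.settings = {}

cfg.settings.getLastErrorDefaults = {w: "majority"}

rs.reconfig(cfg)

---------------------------------------------------------------------------------

Alternatively, run the following in the admin database on the primary:

#define the replica set config; _id "dbset" must match replSet in the config file

config = { _id:"dbset", members:[ {_id:0,host:"192.168.1.155:27017"}, {_id:1,host:"192.168.14.221:27017"}, {_id:2,host:"192.168.14.198:27017"}] }

#initiate the replica set; the mongod instance on which this runs normally becomes the primary

rs.initiate(config);

You can also build the set with rs.add()/rs.remove(). Syntax:

rs.add({

_id: <int>,

host: <string>, // required

arbiterOnly: <boolean>,

buildIndexes: <boolean>,

hidden: <boolean>,

priority: <number>,

tags: <document>,

slaveDelay: <int>, // in seconds

votes: <number>

})

rs.remove("<host>:<port>")

#setting up a replica set for the first time

rs.initiate() #run on the primary

rs.add("192.168.245.131") #run on the primary

rs.add("192.168.245.132") #run on the primary

#adding a member later, once the replica set is in production

rs.add( { _id:3, host: "192.168.1.6:27017", priority: 0, votes: 0 } )

#then use rs.reconfig() to adjust members added with rs.add()

#set priorities: a member with a non-zero priority must also have a vote (priority ≠ 0 requires votes ≠ 0)

cfg = rs.conf()

cfg.members[0].priority = 2

cfg.members[1].priority = 1

cfg.members[0].votes = 1 #members[0] keeps its vote (votes = 0 would take it away)

rs.reconfig(cfg) #this can trigger a new election, so add secondaries during a maintenance window

-------------------------------------------------------------------------

Fixing "has data already, cannot initiate set" when configuring a replica set:

1. Comment out the replSet= line in the secondary's config file.

2. Restart the mongodb service.

3. On the secondary, run db.dropDatabase() in each database until show dbs lists nothing.

4. Restore the replSet= line, restart mongod, and add the member again.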
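Several member options above constrain each other. Hidden and delayed members need priority 0, a non-voting member needs priority 0, and the number of voters is capped. The sketch below is a small offline checker written for this note; validateReplSetConfig is a hypothetical helper, not a shell command. It covers only those rules plus the documented 3.0+ limits of 50 members and 7 voters (the votes:0 ⇒ priority:0 rule is enforced from MongoDB 3.2). It catches mistakes such as a hidden or delayed member with a non-zero priority before rs.initiate() or rs.reconfig() rejects the config.

```javascript
// Offline sanity check for a replica set config document (the object passed to
// rs.initiate()/rs.reconfig()). Encodes only a handful of documented constraints.
function validateReplSetConfig(config) {
  const errors = [];
  const members = config.members || [];
  const seenIds = new Set();
  const seenHosts = new Set();
  let voters = 0;

  if (members.length > 50) errors.push("more than 50 members (limit since 3.0)");

  for (const m of members) {
    const who = `member ${m._id} (${m.host})`;
    const priority = m.priority === undefined ? 1 : m.priority; // default for data-bearing members
    const votes = m.votes === undefined ? 1 : m.votes;

    if (seenIds.has(m._id)) errors.push(`${who}: duplicate _id`);
    if (seenHosts.has(m.host)) errors.push(`${who}: duplicate host`);
    seenIds.add(m._id);
    seenHosts.add(m.host);

    if (m.hidden && priority !== 0) errors.push(`${who}: hidden members must have priority 0`);
    if (m.slaveDelay > 0 && priority !== 0) errors.push(`${who}: delayed members must have priority 0`);
    if (m.buildIndexes === false && priority !== 0) errors.push(`${who}: buildIndexes:false requires priority 0`);
    if (votes === 0 && priority !== 0) errors.push(`${who}: non-voting members must have priority 0 (3.2+)`);
    if (m.arbiterOnly && (m.hidden || m.slaveDelay > 0)) {
      errors.push(`${who}: an arbiter holds no data, so hidden/slaveDelay do not apply`);
    }
    if (votes > 0) voters += 1;
  }
  if (voters > 7) errors.push(`${voters} voting members (at most 7 allowed)`);
  return errors;
}

// Usage: the three-member "dbset" configuration from step 4.
const good = { _id: "dbset", members: [
  { _id: 0, host: "192.168.1.155:27017", priority: 3, votes: 1 },
  { _id: 1, host: "192.168.1.156:27017", priority: 2, votes: 1 },
  { _id: 2, host: "192.168.1.157:27017", arbiterOnly: true, votes: 1 },
] };
console.log(validateReplSetConfig(good)); // []
```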

Replica set member states (as reported by rs.status()):

Possible values of stateStr:

STARTUP //when a member starts, mongod loads the replica set configuration and then switches to STARTUP2

STARTUP2 //after loading the configuration, the member decides whether an initial sync is needed; if so it stays in STARTUP2, otherwise it moves to RECOVERING

RECOVERING //the member cannot serve reads or writes yet; mostly seen after an initial sync, while it catches up on incremental data

Member states:

STARTUP Not yet an active member of any set. All members start up in this state. The mongod parses the replica set configuration document while in STARTUP.

PRIMARY The member in state primary is the only member that can accept write operations. Eligible to vote.

SECONDARY A member in state secondary is replicating the data store. Eligible to vote.

RECOVERING Members either perform startup self-checks, or transition from completing a rollback or resync. Eligible to vote.

STARTUP2 The member has joined the set and is running an initial sync.

UNKNOWN The member’s state, as seen from another member of the set, is not yet known.

ARBITER Arbiters do not replicate data and exist solely to participate in elections.

DOWN The member, as seen from another member of the set, is unreachable.

ROLLBACK This member is actively performing a rollback. Data is not available for reads.

REMOVED This member was once in a replica set but was subsequently removed.
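rs.status() reports each member's state both as a number (state) and as a name (stateStr). The sketch below shows how the states above translate into who can take writes and who can serve reads. It assumes the numeric codes from the MongoDB 3.x documentation and uses a hand-written stand-in for rs.status().members. summarizeMembers is a hypothetical helper.

```javascript
// Numeric member states (rs.status().members[n].state) and their stateStr names,
// as listed in the MongoDB 3.x documentation.
const MEMBER_STATES = {
  0: "STARTUP", 1: "PRIMARY", 2: "SECONDARY", 3: "RECOVERING",
  5: "STARTUP2", 6: "UNKNOWN", 7: "ARBITER", 8: "DOWN",
  9: "ROLLBACK", 10: "REMOVED",
};

// Summarize rs.status().members: who is primary, who can serve reads
// (secondaries only after setSlaveOk()), and which data members are not serving.
function summarizeMembers(members) {
  const summary = { primary: null, readable: [], notServing: [] };
  for (const m of members) {
    const state = MEMBER_STATES[m.state] || `state ${m.state}`;
    if (state === "PRIMARY") summary.primary = m.name;
    if (state === "PRIMARY" || state === "SECONDARY") summary.readable.push(m.name);
    else if (state !== "ARBITER") summary.notServing.push(`${m.name} ${state}`);
  }
  return summary;
}

// Usage with a stand-in for rs.status().members:
console.log(summarizeMembers([
  { name: "192.168.1.155:27017", state: 1 },
  { name: "192.168.1.156:27017", state: 3 },
  { name: "192.168.1.157:27017", state: 7 },
]));
```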

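Changing the sync source

A member switches to a different sync source when:

it cannot ping its current sync source;

the role of its sync source changes;

its sync source falls more than 30 s behind any other member of the replica set.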
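The three conditions above can be written as a single check. This is a simplified sketch, not mongod's actual code. The member objects (reachable, state, optimeSecs) are a hypothetical shape, and "the role of the sync source changes" is read as the source no longer being PRIMARY or SECONDARY.

```javascript
const MAX_SOURCE_LAG_SECS = 30; // the 30-second threshold quoted above

// source / members: { name, reachable, state, optimeSecs } as seen by this node.
// Returns the reason to switch sync source, or null to keep the current one.
function syncSourceChangeReason(source, members) {
  if (!source.reachable) return "sync source unreachable";
  if (source.state !== "PRIMARY" && source.state !== "SECONDARY") {
    return `sync source is now ${source.state}`;
  }
  const newest = Math.max(...members.filter((m) => m.reachable).map((m) => m.optimeSecs));
  const lag = newest - source.optimeSecs;
  if (lag > MAX_SOURCE_LAG_SECS) return `sync source is ${lag}s behind another member`;
  return null;
}

const members = [
  { name: "n1", reachable: true, state: "PRIMARY", optimeSecs: 1045 },
  { name: "n2", reachable: true, state: "SECONDARY", optimeSecs: 1000 },
];
console.log(syncSourceChangeReason(members[1], members)); // sync source is 45s behind another member
```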

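Events that trigger a MongoDB primary/secondary switchover:

1. A new replica set is initiated.

2. A secondary cannot reach the primary (for more than 10 s by default, configurable with heartbeatTimeoutSecs), so it calls an election.

3. The primary gives up its role:

rs.stepDown() is run explicitly;

the primary cannot communicate with a majority of the members;

the replica set configuration is changed with rs.reconfig().

4. A secondary is removed.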

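Rollback is abandoned when:

the optimes of the old primary and of its sync source are more than 30 minutes apart;

a single oplog entry larger than 512 MB is found during the rollback;

the operations to roll back include a dropDatabase;

the resulting rollback data would exceed 300 MB.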
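The abort conditions above can be written as one function. This is only a sketch. The input object is hypothetical, the limits are the ones listed here for this MongoDB version (later releases changed them), and "M" is read as MiB.

```javascript
const MB = 1024 * 1024;
// Limits as listed above for this (3.0-era) version.
const ROLLBACK_LIMITS = {
  maxOptimeGapSecs: 30 * 60,
  maxOplogEntryBytes: 512 * MB,
  maxRollbackDataBytes: 300 * MB,
};

// r: hypothetical summary of a pending rollback on the old primary.
// Returns why the rollback would be abandoned, or null if it can proceed.
function rollbackAbortReason(r) {
  if (r.optimeGapSecs > ROLLBACK_LIMITS.maxOptimeGapSecs) return "optimes are more than 30 minutes apart";
  if (r.largestOplogEntryBytes > ROLLBACK_LIMITS.maxOplogEntryBytes) return "a single oplog entry exceeds 512 MB";
  if (r.hasDropDatabase) return "the operations to undo include dropDatabase";
  if (r.rollbackDataBytes > ROLLBACK_LIMITS.maxRollbackDataBytes) return "rollback data exceeds 300 MB";
  return null;
}

console.log(rollbackAbortReason({
  optimeGapSecs: 120, largestOplogEntryBytes: 2 * MB, hasDropDatabase: false, rollbackDataBytes: 10 * MB,
})); // null
```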

arbiter

dbset:PRIMARY> db.system.replset.find(); //run in the local database; rs.conf() returns this document from local.system.replset

{

"_id": "dbset",

"version": 1,

"members": [

{

"_id": 0,

"host": "192.168.1.155:27017",

"arbiterOnly": false,

"buildIndexes": true,

"hidden": false,

"priority": 1,

"tags": { },

"slaveDelay": 0,

"votes": 1

},

{

"_id": 1,

"host": "192.168.14.221:27017",

"arbiterOnly": false,

"buildIndexes": true,

"hidden": false,

"priority": 1,

"tags": { },

"slaveDelay": 0,

"votes": 1

},

{

"_id": 2,

"host": "192.168.14.198:27017",

"arbiterOnly": false,

"buildIndexes": true,

"hidden": false,

"priority": 1,

"tags": { },

"slaveDelay": 0,

"votes": 1

}

],

"settings": {

"chainingAllowed": true,

"heartbeatTimeoutSecs": 10, //heartbeat timeout: 10 seconds

"getLastErrorModes": { },

"getLastErrorDefaults": {

"w": 1,

"wtimeout": 0

}

}

}

db.oplog.rs.find(); //every replica set member has local.oplog.rs

{ "ts" : Timestamp(1448617001, 1), "h" : NumberLong(0), "v" : 2, "op" : "n", "ns" : "", "o" : { "msg" : "initiating set" } }

{ "ts" : Timestamp(1448619771, 1), "h" : NumberLong("-4910297248929153005"), "v" : 2, "op" : "c", "ns" : "foobar.$cmd", "o" : { "create" : "persons" } }

{ "ts" : Timestamp(1448619771, 2), "h" : NumberLong("-1223034904388786835"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad4811121921c"), "num" : 0 } }

{ "ts" : Timestamp(1448619771, 3), "h" : NumberLong("1509093586256204652"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad4811121921d"), "num" : 1 } }

{ "ts" : Timestamp(1448619771, 4), "h" : NumberLong("-1466302071499787062"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad4811121921e"), "num" : 2 } }

{ "ts" : Timestamp(1448619771, 5), "h" : NumberLong("-5291309432364303979"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad4811121921f"), "num" : 3 } }

{ "ts" : Timestamp(1448619771, 6), "h" : NumberLong("-1186940023830631529"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219220"), "num" : 4 } }

{ "ts" : Timestamp(1448619771, 7), "h" : NumberLong("8105416294429864718"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219221"), "num" : 5 } }

{ "ts" : Timestamp(1448619771, 8), "h" : NumberLong("4936086358438093652"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219222"), "num" : 6 } }

{ "ts" : Timestamp(1448619771, 9), "h" : NumberLong("-6505444938187353001"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219223"), "num" : 7 } }

{ "ts" : Timestamp(1448619771, 10), "h" : NumberLong("6604667343543284097"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219224"), "num" : 8 } }

{ "ts" : Timestamp(1448619771, 11), "h" : NumberLong("1628850075451893232"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219225"), "num" : 9 } }

{ "ts" : Timestamp(1448619771, 12), "h" : NumberLong("6976982335364958110"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219226"), "num" : 10 } }

{ "ts" : Timestamp(1448619771, 13), "h" : NumberLong("670853545390097497"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219227"), "num" : 11 } }

{ "ts" : Timestamp(1448619771, 14), "h" : NumberLong("-5105721635655707861"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219228"), "num" : 12 } }

{ "ts" : Timestamp(1448619771, 15), "h" : NumberLong("6288713624602787858"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad48111219229"), "num" : 13 } }

{ "ts" : Timestamp(1448619771, 16), "h" : NumberLong("-1023807204070269528"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad4811121922a"), "num" : 14 } }

{ "ts" : Timestamp(1448619771, 17), "h" : NumberLong("2467324426565008795"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad4811121922b"), "num" : 15 } }

{ "ts" : Timestamp(1448619771, 18), "h" : NumberLong("-7308533254100947819"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad4811121922c"), "num" : 16 } }

{ "ts" : Timestamp(1448619771, 19), "h" : NumberLong("7461162953794131316"), "v" : 2, "op" : "i", "ns" : "foobar.persons", "o" : { "_id" : ObjectId("56582ef8041ad4811121922d"), "num" : 17 } }
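In each oplog entry, ts is a Timestamp(seconds, increment): Unix epoch seconds plus an ordinal within that second. The op field is the operation type. The sketch below decodes the first entries shown above. Timestamp is only available inside the mongo shell, so this sketch writes ts as a plain [seconds, increment] pair.

```javascript
// Oplog "op" codes seen in local.oplog.rs.
const OP_TYPES = { i: "insert", u: "update", d: "delete", c: "command", n: "no-op" };

// ts is [seconds, increment]: seconds since the Unix epoch, and the increment
// orders operations within the same second.
function describeOplogEntry(entry) {
  const [secs, inc] = entry.ts;
  const op = OP_TYPES[entry.op] || entry.op;
  return `${new Date(secs * 1000).toISOString()} #${inc} ${op} ${entry.ns || "(none)"}`;
}

const sample = [
  { ts: [1448617001, 1], op: "n", ns: "", o: { msg: "initiating set" } },
  { ts: [1448619771, 1], op: "c", ns: "foobar.$cmd", o: { create: "persons" } },
  { ts: [1448619771, 2], op: "i", ns: "foobar.persons", o: { num: 0 } },
];
sample.forEach((e) => console.log(describeOplogEntry(e)));
// 2015-11-27T09:36:41.000Z #1 no-op (none)
// 2015-11-27T10:22:51.000Z #1 command foobar.$cmd
// 2015-11-27T10:22:51.000Z #2 insert foobar.persons
```

Times are printed in UTC. In the +0800 time zone used by the logs in this article, 10:22:51Z is 18:22:51 local time. All the inserts into foobar.persons share the same second and differ only in the increment.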

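Collections that exist only in the local database:

oplog.rs: the default size is about 50 MB on 32-bit systems and 5% of free disk space on 64-bit systems; to set it explicitly, pass --oplogSize at startup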

me

minvalid

startup_log

system.indexes

system.replset

Allowing reads on a secondary

db.getMongo().setSlaveOk();

getLastError configuration

db.system.replset.find();

"settings": {

"chainingAllowed": true,

"heartbeatTimeoutSecs": 10, //heartbeat timeout: 10 seconds

"getLastErrorModes": { },

"getLastErrorDefaults": {

"w": 1,

"wtimeout": 0

}

}

w:

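-1: the driver does not use write concern and ignores all network or socket errors

0: the driver does not use write concern and returns only network or socket errors

1: the driver uses write concern, but only for the primary; this is the default for replica sets and standalone instances

>1: the write concern covers n members of the replica set; the command returns to the client only after acknowledgements from those members have arrived

wtimeout: how long the write concern may take to return; without it, a write may block indefinitely for unforeseen reasons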

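Odd number of members: MongoDB recommends an odd number of replica set members, with at most 7 voting members. The total member limit was 12 before MongoDB 3.0 and is 50 from 3.0 on. A cap makes sense because there is no need to keep that many copies of the same data: too many copies only add network load and slow the cluster down. The 7-voter cap exists because, with too many voting members, the internal election process could fail to choose a primary within a minute. As with everything, moderation is best.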
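The recommendation for an odd number of members comes down to simple arithmetic: a primary needs votes from a strict majority of the voting members. The sketch below computes, for 1 to 7 voters, the majority and the number of failures a set can survive while still electing a primary.

```javascript
// Votes needed to elect a primary with n voting members, and how many voting
// members can fail while a majority is still reachable.
function majority(n) {
  return Math.floor(n / 2) + 1;
}
function faultTolerance(n) {
  return n - majority(n);
}

for (let n = 1; n <= 7; n++) {
  console.log(`${n} voting members: majority ${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
```

Going from 3 to 4 voting members raises the majority from 2 to 3 but still tolerates only one failure, so the fourth voter adds no availability. With 5 voters, the set tolerates 2 failures.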

Related articles

http://www.lanceyan.com/tech/mongodb/mongodb_repset1.html

http://www.lanceyan.com/tech/arch/mongodb_shard1.html

http://www.lanceyan.com/tech/mongodb_repset2.html

http://blog.nosqlfan.com/html/4139.html (Bully algorithm)

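Heartbeats

In short, the members must stay in contact to know which of them are alive and which are down. Every mongod sends a heartbeat (ping) to every other member of the replica set every two seconds. If a member does not respond within 10 seconds, it is marked as unreachable. Each member keeps an internal state map recording every member's current role, its oplog timestamp and other key information.

A primary maintains this map and also checks whether it can still communicate with a majority of the members. If it cannot, it demotes itself to a read-only secondary.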
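The primary's self-check can be sketched as follows. The sketch assumes the 10-second heartbeat timeout described above and, for simplicity, one vote per member. lastReply, the time each member last answered a heartbeat, is a hypothetical stand-in for the state map, not mongod's real data structure.

```javascript
const HEARTBEAT_TIMEOUT_MS = 10 * 1000; // heartbeatTimeoutSecs default: 10 s
// (heartbeats are sent every 2 s; the interval itself does not matter for this check)

// lastReply: member name -> time (ms) of the last heartbeat reply this node received.
function reachableMembers(self, lastReply, now) {
  const up = new Set([self]); // a node always counts itself
  for (const [name, t] of Object.entries(lastReply)) {
    if (now - t <= HEARTBEAT_TIMEOUT_MS) up.add(name);
  }
  return up;
}

// Simplified: every member is assumed to have one vote.
function primaryMustStepDown(self, allMembers, lastReply, now) {
  const needed = Math.floor(allMembers.length / 2) + 1;
  return reachableMembers(self, lastReply, now).size < needed;
}

const all = ["A", "B", "C"];
console.log(primaryMustStepDown("A", all, { B: 9000, C: 0 }, 11000)); // false: A still sees B
console.log(primaryMustStepDown("A", all, { B: 0, C: 0 }, 11000)); // true: A sees only itself
```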

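Synchronization

Replica set synchronization consists of initial sync and incremental sync. Initial sync copies all the data from the primary, which can take a long time when the data set is large. After the initial sync, ongoing replication keeps the members in step in real time, normally incrementally.

Initial sync is not triggered only the first time. There are two cases:

a secondary joins for the first time, obviously;

a secondary falls behind by more than the size of the oplog, in which case it also has to do a full copy.

So what is the oplog size? As mentioned earlier, the oplog records data operations: a secondary copies the oplog and replays its operations. The oplog is itself a MongoDB collection, local.oplog.rs, and it is a capped collection. That means it has a fixed size, and once it is full, new entries overwrite the oldest ones. For cross-IDC (cross-data-center) replication you therefore need an adequate oplogSize, so that production members do not keep falling back to full copies. The size is set with --oplogSize.

For 64-bit Linux and Windows, the default oplog size is 5% of free disk space.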
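A quick calculation shows why oplog size matters for cross-IDC replication. The sketch assumes the default described above (5% of free disk on 64-bit systems), plus the 1 GB minimum and 50 GB maximum stated in the MongoDB 3.x documentation; verify these bounds for your version. The write rate in the example is made up.

```javascript
const GB = 1024 ** 3;

// Default oplog size on 64-bit Linux/Windows: 5% of free disk space,
// clamped to [1 GB, 50 GB] per the 3.x documentation.
function defaultOplogBytes(freeDiskBytes) {
  const fivePercent = Math.floor((freeDiskBytes * 5) / 100);
  return Math.min(Math.max(fivePercent, 1 * GB), 50 * GB);
}

// Oplog window: how many hours of writes the oplog holds at a steady rate.
// A secondary that is down (or lags) longer than this falls off the oplog
// and needs a full initial sync.
function oplogWindowHours(oplogBytes, oplogBytesPerHour) {
  return oplogBytes / oplogBytesPerHour;
}

const oplog = defaultOplogBytes(500 * GB); // 500 GB free disk -> 25 GB oplog
console.log(oplog / GB, oplogWindowHours(oplog, 2 * GB)); // 25 12.5
```

With 2 GB of oplog traffic per hour, a 12.5-hour window means a secondary in the remote IDC can be cut off for about half a day before it needs a full resync.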

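Syncing is not limited to the primary either. Suppose a cluster has 3 nodes: node 1 is the primary in IDC1, and nodes 2 and 3 are in IDC2. During initial sync, nodes 2 and 3 copy data from node 1.

Afterwards, nodes 2 and 3 replicate from the nearest member, the one in their own IDC. As a result, only one of them has to pull data from node 1 in IDC1.

A few more points about syncing:

Secondaries do not replicate from delayed or hidden members. By default, MongoDB uses chained (cascading) replication, so a member does not necessarily choose the primary as its sync source.

For one member to sync from another, both must have the same buildIndexes value, whether true or false. buildIndexes controls whether the member builds indexes. It defaults to true; false is meant only for members that never serve queries, and such a member must have priority 0.

If the sync source does not respond for 30 seconds, the member picks another member to sync from.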
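The selection rules above can be combined into one sketch. It is a simplification, not mongod's algorithm. The candidate fields (state, hidden, slaveDelay, buildIndexes, optimeSecs, pingMs) are a hypothetical shape. The example mirrors the three-node IDC scenario described above, with invented ping times.

```javascript
// Simplified sync-source choice: skip hidden and delayed members, require the same
// buildIndexes value, only consider members ahead of us, then take the closest one
// by ping time (chaining). With chaining disabled only the primary qualifies.
function chooseSyncSource(self, candidates, chainingAllowed = true) {
  const eligible = candidates.filter((c) =>
    c.name !== self.name &&
    (c.state === "PRIMARY" || c.state === "SECONDARY") &&
    !c.hidden &&
    !(c.slaveDelay > 0) &&
    c.buildIndexes === self.buildIndexes &&
    c.optimeSecs > self.optimeSecs
  );
  const pool = chainingAllowed ? eligible : eligible.filter((c) => c.state === "PRIMARY");
  pool.sort((a, b) => a.pingMs - b.pingMs);
  return pool.length > 0 ? pool[0].name : null;
}

// Node 1 is the primary in IDC1; nodes 2 and 3 are in IDC2.
const node3 = { name: "node3", buildIndexes: true, optimeSecs: 90 };
const candidates = [
  { name: "node1", state: "PRIMARY", buildIndexes: true, optimeSecs: 100, pingMs: 40 },
  { name: "node2", state: "SECONDARY", buildIndexes: true, optimeSecs: 99, pingMs: 1 },
];
console.log(chooseSyncSource(node3, candidates)); // node2
console.log(chooseSyncSource(node3, candidates, false)); // node1
```

Disabling chaining corresponds to settings.chainingAllowed: false in the replica set configuration. Every secondary then pulls from the primary, which in the IDC example means both nodes in IDC2 cross the link to IDC1.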

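Adding and removing shards: two background tasks handle chunk splitting and balancing between shards.

When removing a shard, the balancer migrates all chunks from a shard to other shards. After migrating all data and updating the meta data, you can safely remove the shard. (In other words, you must wait until the migration has finished, otherwise data will be lost.)

This is due to the choice of shard key: the article uses that key only for the demo, which is not good practice. Avoid an auto-incrementing id as the shard key, because all new inserts land in the same chunk and create a hotspot. ObjectId values also increase over time, so a plain ObjectId range key has the same problem. A hashed shard key (for example { _id: "hashed" }, available since MongoDB 2.4) spreads inserts evenly.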
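The hotspot effect shows up in a small simulation. The chunk boundaries are made up, and the hash is a stand-in (FNV-1a, not MongoDB's). With a range key on an increasing id, every new insert lands in the last chunk. Hashing the same ids spreads them over all shards.

```javascript
// Range sharding: each shard owns a contiguous range of `id` (boundaries are made up).
const RANGES = [
  { shard: "rsshard0", min: -Infinity, max: 10000 },
  { shard: "rsshard1", min: 10000, max: 20000 },
  { shard: "rsshard2", min: 20000, max: Infinity },
];
const rangeShard = (id) => RANGES.find((r) => id >= r.min && id < r.max).shard;

// Hash sharding stand-in: 32-bit FNV-1a of the key, modulo the shard count.
// MongoDB's hashed shard keys use their own (MD5-based) hash and split the hashed
// values into chunks; this only illustrates the spreading effect.
function fnv1a(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  return h >>> 0;
}
const SHARDS = ["rsshard0", "rsshard1", "rsshard2"];
const hashShard = (id) => SHARDS[fnv1a(String(id)) % SHARDS.length];

function countByShard(ids, pick) {
  const counts = {};
  for (const id of ids) {
    const shard = pick(id);
    counts[shard] = (counts[shard] || 0) + 1;
  }
  return counts;
}

// The next 3000 inserts after the existing data, with an auto-incrementing id.
const newIds = Array.from({ length: 3000 }, (_, i) => 30001 + i);
console.log(countByShard(newIds, rangeShard)); // { rsshard2: 3000 }
console.log(countByShard(newIds, hashShard)); // spread over all three shards
```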

Related articles

http://www.lanceyan.com/tech/arch/mongodb_shard1.html

MongoDB transaction mechanism and data safety

Common causes of data loss

Two main parameters:

1. Write concern

w: 0 | 1 | n | majority | tag

wtimeout: millis (milliseconds)

2. Journal

j: 1

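Data safety summary

w:majority #write concern, for replica sets

j:1 #journal, for a single instance. There are two ways to set it. 1. In the connection string: every write waits for the journal to be flushed to disk, which has a large performance cost. To get the equivalent of MySQL's "double 1" setting (innodb_flush_log_at_trx_commit=1 and sync_binlog=1), set j:1 in the connection string. 2. In mongod.conf: the journal is flushed every 100 ms.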
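On the client side, the majority and journal guarantees can be requested for a whole connection instead of per operation. The sketch below builds such a connection string. w, journal, wtimeoutMS and replicaSet are standard MongoDB connection-string options. The helper name buildMongoUri and the 5000 ms timeout are assumptions of this sketch.

```javascript
// Build a connection string that requests majority + journaled acknowledgement.
// The hosts and replica set name in the usage example are the ones used earlier in this article.
function buildMongoUri(hosts, replicaSet, { w = "majority", journal = true, wtimeoutMS = 5000 } = {}) {
  const params = new URLSearchParams({
    replicaSet,
    w: String(w),
    journal: String(journal),
    wtimeoutMS: String(wtimeoutMS),
  });
  return `mongodb://${hosts.join(",")}/?${params.toString()}`;
}

console.log(buildMongoUri(["192.168.1.155:27017", "192.168.14.221:27017", "192.168.14.198:27017"], "dbset"));
// mongodb://192.168.1.155:27017,192.168.14.221:27017,192.168.14.198:27017/?replicaSet=dbset&w=majority&journal=true&wtimeoutMS=5000
```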

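Write safety level (write concern). There are currently two ways to set it:

1. Override the write concern per operation:

db.products.insert( { item: "envelopes", qty : 100, type: "Clasp" }, { writeConcern: { w: "majority", wtimeout: 5000 } } )

This overrides the write concern for the insert and sets w to "majority": the write must be acknowledged by a majority of the members, including the primary (two hosts in a three-member set).

writeConcern parameters: { w: <value>, j: <boolean>, wtimeout: <number> }

w: the number of mongod instances, or the tagged instances, that must acknowledge the write. Possible values:

0: no acknowledgement is requested;

1: acknowledged by a single mongod; in a replica set, that is the primary;

greater than 1: acknowledged by that many members; the number must not exceed the number of members in the set, otherwise the write blocks indefinitely;

majority: in v3.2, acknowledged by a majority of the members including the primary, and the write must also reach the on-disk journal before it counts as successful;

<tag set>: acknowledged by the members carrying the specified tags;

j: boolean; whether the write must have been written to the journal.

wtimeout: time limit for the acknowledgement. For example, if w is 10 but the set has only 9 members, the write would block forever; setting a timeout prevents that.

2. Change the replica set configuration:

cfg = rs.conf()

cfg.settings = {}

cfg.settings.getLastErrorDefaults = {w: "majority"}

rs.reconfig(cfg)

Journal flushing:

vim /etc/mongod.conf

journal=true

journalCommitInterval=100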
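The blocking case described above (w larger than the number of members, no wtimeout) can be caught before a write is ever issued. The sketch below is such an offline check; checkWriteConcern is a hypothetical helper. It counts every non-arbiter as a data-bearing member, treats majority as a majority of voting members, and does not check tag sets.

```javascript
// Offline check of a writeConcern document against the shape of a replica set.
// members: objects like those in rs.conf().members (arbiterOnly / votes optional).
function checkWriteConcern(wc, members) {
  const dataBearing = members.filter((m) => !m.arbiterOnly).length;
  const voting = members.filter((m) => (m.votes === undefined ? 1 : m.votes) > 0).length;
  const required = wc.w === "majority" ? Math.floor(voting / 2) + 1 : wc.w;
  const problems = [];

  if (typeof required === "number" && required > dataBearing) {
    problems.push(`w needs ${required} acknowledgements but only ${dataBearing} members hold data`);
  }
  if (typeof required === "number" && required > 1 && !wc.wtimeout) {
    problems.push("no wtimeout: the write can block indefinitely if acknowledgements never arrive");
  }
  return problems;
}

const threeNodes = [{ host: "a:27017" }, { host: "b:27017" }, { host: "c:27017" }];
console.log(checkWriteConcern({ w: "majority", wtimeout: 5000 }, threeNodes)); // []
console.log(checkWriteConcern({ w: 10 }, threeNodes).length); // 2
```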

No support for joins means almost unlimited horizontal scalability

Baidu Cloud

All workloads run on SSDs in RAID 0

Parallel oplog sync

Setting up sharding

1. Create the directories and files

#machine 1

mkdir -p /data/replset0/data/rs0

mkdir -p /data/replset0/log

mkdir -p /data/replset0/config

touch /data/replset0/config/rs0.conf

touch /data/replset0/log/rs0.log

mkdir -p /data/replset1/data/rs1

mkdir -p /data/replset1/log

mkdir -p /data/replset1/config

touch /data/replset1/config/rs1.conf

touch /data/replset1/log/rs1.log

mkdir -p /data/replset2/data/rs2

mkdir -p /data/replset2/log

mkdir -p /data/replset2/config

touch /data/replset2/config/rs2.conf

touch /data/replset2/log/rs2.log

mkdir -p /data/db_config/data/config0

mkdir -p /data/db_config/log/

mkdir -p /data/db_config/config/

touch /data/db_config/log/config0.log

touch /data/db_config/config/cfgserver0.conf

#machine 2

mkdir -p /data/replset0/data/rs0

mkdir -p /data/replset0/log

mkdir -p /data/replset0/config

touch /data/replset0/config/rs0.conf

touch /data/replset0/log/rs0.log

mkdir -p /data/replset1/data/rs1

mkdir -p /data/replset1/log

mkdir -p /data/replset1/config

touch /data/replset1/config/rs1.conf

touch /data/replset1/log/rs1.log

mkdir -p /data/replset2/data/rs2

mkdir -p /data/replset2/log

mkdir -p /data/replset2/config

touch /data/replset2/config/rs2.conf

touch /data/replset2/log/rs2.log

mkdir -p /data/db_config/data/config1

mkdir -p /data/db_config/log/

mkdir -p /data/db_config/config/

touch /data/db_config/log/config1.log

touch /data/db_config/config/cfgserver1.conf

#machine 3

mkdir -p /data/replset0/data/rs0

mkdir -p /data/replset0/log

mkdir -p /data/replset0/config

touch /data/replset0/config/rs0.conf

touch /data/replset0/log/rs0.log

mkdir -p /data/replset1/data/rs1

mkdir -p /data/replset1/log

mkdir -p /data/replset1/config

touch /data/replset1/config/rs1.conf

touch /data/replset1/log/rs1.log

mkdir -p /data/replset2/data/rs2

mkdir -p /data/replset2/log

mkdir -p /data/replset2/config

touch /data/replset2/config/rs2.conf

touch /data/replset2/log/rs2.log

mkdir -p /data/db_config/data/config2

mkdir -p /data/db_config/log/

mkdir -p /data/db_config/config/

touch /data/db_config/log/config2.log

touch /data/db_config/config/cfgserver2.conf

2. Write the replica set config files

#machine 1

vi /data/replset0/config/rs0.conf

journal=true

port=4000

replSet=rsshard0

dbpath = /data/replset0/data/rs0

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb0.pid

logpath = /data/replset0/log/rs0.log

logappend = true

profile = 1

slowms = 5

fork = true

vi /data/replset1/config/rs1.conf

journal=true

port=4001

replSet=rsshard1

dbpath = /data/replset1/data/rs1

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb1.pid

logpath = /data/replset1/log/rs1.log

logappend = true

profile = 1

slowms = 5

fork = true

vi /data/replset2/config/rs2.conf

journal=true

port=4002

replSet=rsshard2

dbpath = /data/replset2/data/rs2

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb2.pid

logpath = /data/replset2/log/rs2.log

logappend = true

profile = 1

slowms = 5

fork = true

#machine 2

vi /data/replset0/config/rs0.conf

journal=true

port=4000

replSet=rsshard0

dbpath = /data/replset0/data/rs0

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb0.pid

logpath = /data/replset0/log/rs0.log

logappend = true

profile = 1

slowms = 5

fork = true

vi /data/replset1/config/rs1.conf

journal=true

port=4001

replSet=rsshard1

dbpath = /data/replset1/data/rs1

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb1.pid

logpath = /data/replset1/log/rs1.log

logappend = true

profile = 1

slowms = 5

fork = true

vi /data/replset2/config/rs2.conf

journal=true

port=4002

replSet=rsshard2

dbpath = /data/replset2/data/rs2

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb2.pid

logpath = /data/replset2/log/rs2.log

logappend = true

profile = 1

slowms = 5

fork = true

#machine 3

vi /data/replset0/config/rs0.conf

journal=true

port=4000

replSet=rsshard0

dbpath = /data/replset0/data/rs0

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb0.pid

logpath = /data/replset0/log/rs0.log

logappend = true

profile = 1

slowms = 5

fork = true

vi /data/replset1/config/rs1.conf

journal=true

port=4001

replSet=rsshard1

dbpath = /data/replset1/data/rs1

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb1.pid

logpath = /data/replset1/log/rs1.log

logappend = true

profile = 1

slowms = 5

fork = true

vi /data/replset2/config/rs2.conf

journal=true

port=4002

replSet=rsshard2

dbpath = /data/replset2/data/rs2

shardsvr = true

oplogSize = 100

pidfilepath = /usr/local/mongodb/mongodb2.pid

logpath = /data/replset2/log/rs2.log

logappend = true

profile = 1

slowms = 5

fork = true

3. Start the replica set members

#start mongod on all three machines

#machine 1

/usr/local/mongodb/bin/mongod --config /data/replset0/config/rs0.conf

/usr/local/mongodb/bin/mongod --config /data/replset1/config/rs1.conf

/usr/local/mongodb/bin/mongod --config /data/replset2/config/rs2.conf

#machine 2

/usr/local/mongodb/bin/mongod --config /data/replset0/config/rs0.conf

/usr/local/mongodb/bin/mongod --config /data/replset1/config/rs1.conf

/usr/local/mongodb/bin/mongod --config /data/replset2/config/rs2.conf

#machine 3

/usr/local/mongodb/bin/mongod --config /data/replset0/config/rs0.conf

/usr/local/mongodb/bin/mongod --config /data/replset1/config/rs1.conf

/usr/local/mongodb/bin/mongod --config /data/replset2/config/rs2.conf

4. Configure the replica sets

#machine 1

mongo --port 4000

use admin

config = { _id:"rsshard0", members:[ {_id:0,host:"192.168.1.155:4000"}, {_id:1,host:"192.168.14.221:4000"}, {_id:2,host:"192.168.14.198:4000"}] }

rs.initiate(config);

rs.conf()

#machine 2

mongo --port 4001

use admin

config = { _id:"rsshard1", members:[ {_id:0,host:"192.168.1.155:4001"}, {_id:1,host:"192.168.14.221:4001"}, {_id:2,host:"192.168.14.198:4001"}] }

rs.initiate(config);

rs.conf()

#machine 3

mongo --port 4002

use admin

config = { _id:"rsshard2", members:[ {_id:0,host:"192.168.1.155:4002"}, {_id:1,host:"192.168.14.221:4002"}, {_id:2,host:"192.168.14.198:4002"}] }

rs.initiate(config);

rs.conf()

#raise one member's priority in each set so that each shard's primary ends up on a different machine

#machine 1

cfg = rs.conf()

cfg.members[0].priority = 2

cfg.members[1].priority = 1

cfg.members[2].priority = 1

rs.reconfig(cfg)

#machine 2

cfg = rs.conf()

cfg.members[0].priority = 1

cfg.members[1].priority = 2

cfg.members[2].priority = 1

rs.reconfig(cfg)

#machine 3

cfg = rs.conf()

cfg.members[0].priority = 1

cfg.members[1].priority = 1

cfg.members[2].priority = 2

rs.reconfig(cfg)

5. Configure the config servers

#machine 1

vi /data/db_config/config/cfgserver0.conf

journal=true

pidfilepath = /data/db_config/config/mongodb.pid

dbpath = /data/db_config/data/config0

directoryperdb = true

configsvr = true

port = 5000

logpath = /data/db_config/log/config0.log

logappend = true

fork = true

#machine 2

vi /data/db_config/config/cfgserver1.conf

journal=true

pidfilepath = /data/db_config/config/mongodb.pid

dbpath = /data/db_config/data/config1

directoryperdb = true

configsvr = true

port = 5000

logpath = /data/db_config/log/config1.log

logappend = true

fork = true

#machine 3

vi /data/db_config/config/cfgserver2.conf

journal=true

pidfilepath = /data/db_config/config/mongodb.pid

dbpath = /data/db_config/data/config2

directoryperdb = true

configsvr = true

port = 5000

logpath = /data/db_config/log/config2.log

logappend = true

fork = true

#machine 1

/usr/local/mongodb/bin/mongod --config /data/db_config/config/cfgserver0.conf

#machine 2

/usr/local/mongodb/bin/mongod --config /data/db_config/config/cfgserver1.conf

#machine 3

/usr/local/mongodb/bin/mongod --config /data/db_config/config/cfgserver2.conf

6. Configure the mongos routers (run on all three machines)

mkdir -p /data/mongos/log/

touch /data/mongos/log/mongos.log

touch /data/mongos/mongos.conf

vi /data/mongos/mongos.conf

#configdb = 192.168.1.155:5000,192.168.14.221:5000,192.168.14.198:5000

#in the end only a single config server could be used here (see the "config servers differ" and DistributedClockSkewed errors in the log under "Problems encountered" below); note that a single config server is a single point of failure

configdb = 192.168.1.155:5000

port = 6000

chunkSize = 1

logpath = /data/mongos/log/mongos.log

logappend = true

fork = true

mongos --config /data/mongos/mongos.conf

7. Add the shards

mongo 192.168.1.155:6000 #connect to the first machine

#add the shards; do not list arbiter nodes

sh.addShard("rsshard0/192.168.1.155:4000,192.168.14.221:4000,192.168.14.198:4000")

sh.addShard("rsshard1/192.168.1.155:4001,192.168.14.221:4001,192.168.14.198:4001")

sh.addShard("rsshard2/192.168.1.155:4002,192.168.14.221:4002,192.168.14.198:4002")

#check the status

sh.status();

--- Sharding Status ---

sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("565eac6d8e75f6a7d3e6e65e") }

shards:

{ "_id" : "rsshard0", "host" : "rsshard0/192.168.1.155:4000,192.168.14.198:4000,192.168.14.221:4000" }

{ "_id" : "rsshard1", "host" : "rsshard1/192.168.1.155:4001,192.168.14.198:4001,192.168.14.221:4001" }

{ "_id" : "rsshard2", "host" : "rsshard2/192.168.1.155:4002,192.168.14.198:4002,192.168.14.221:4002" }

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "admin", "partitioned" : false, "primary" : "config" }

#enable sharding for the database and the collection

mongos> use admin

mongos> db.runCommand({enablesharding:"testdb"})

mongos> db.runCommand( { shardcollection : "testdb.books", key : { id : 1 } } )

#test

use testdb

mongos> for (var i = 1; i <= 20000; i++){db.books.save({id:i,name:"ttbook",sex:"male",age:27,value:"test"})}

#view the sharding statistics

db.books.stats()

--------------------------------------------------------------------------------------------------------------------------

Problems encountered

http://www.thinksaas.cn/group/topic/344494/

Solving the mongodb "config servers not in sync" problem

tintindesign, posted 2014-11-10 00:12:58

I have a sharded MongoDB cluster with two mongods, three config servers and one mongos, and everything was working fine. The server running mongos has no public IP, but data prepared offline has to be published online, so I planned to start another mongos on a different online server that does have a public IP. It would not start. The log said "config servers not in sync", reporting that two of the three config servers were inconsistent...

I googled it and saw people suggest wiping the data on the faulty config server, dumping the data from a healthy config server and restoring it into the faulty one. The problem was that I didn't know which one was faulty. MongoDB's JIRA has an unresolved issue about this, https://jira.mongodb.org/browse/SERVER-3698, so there is currently no way to tell which config server has the problem.

So I just tried them one at a time. I stopped all three config servers and renamed the data directories on two of them. Then I used scp to copy the entire data directory of the remaining config server to those two, started all the config servers again, and finally started mongos. Everything was back in harmony~~

Personally I feel that synchronization between MongoDB config servers is not very reliable; for now, a single config server may actually be more stable.

I NETWORK [mongosMain] scoped connection to 192.168.1.155:5000,192.168.14.221:5000,192.168.14.198:5000 not being returned to the pool

2015-11-28T16:15:00.254+0800 E - [mongosMain] error upgrading config database to v6 :: caused by :: DistributedClockSkewed clock skew of the cluster 192.168.1.155:5000,192.168.14.221:5000,192.168.14.198:5000 is too far out of bounds to allow distributed locking.

[mongosMain] error upgrading config database to v6 :: caused by :: DistributedClockSkewed clock skew of the cluster 192.168.1.155:5000,192.168.14.221:5000,192.168.14.198:5000 is too far out of bounds to allow distributed locking.

2015-11-28T16:45:43.846+0800 I CONTROL ***** SERVER RESTARTED *****

2015-11-28T16:45:43.851+0800 I CONTROL ** WARNING: You are running this process as the root user, which is not recommended.

2015-11-28T16:45:43.851+0800 I CONTROL

2015-11-28T16:45:43.851+0800 I SHARDING [mongosMain] MongoS version 3.0.7 starting: pid=46938 port=6000 64-bit host=steven (--help for usage)

2015-11-28T16:45:43.851+0800 I CONTROL [mongosMain] db version v3.0.7

2015-11-28T16:45:43.851+0800 I CONTROL [mongosMain] git version: 6ce7cbe8c6b899552dadd907604559806aa2e9bd

2015-11-28T16:45:43.851+0800 I CONTROL [mongosMain] build info: Linux ip-10-101-218-12 2.6.32-220.el6.x86_64 #1 SMP Wed Nov 9 08:03:13 EST 2011 x86_64 BOOST_LIB_VERSION=1_49

2015-11-28T16:45:43.851+0800 I CONTROL [mongosMain] allocator: tcmalloc

2015-11-28T16:45:43.851+0800 I CONTROL [mongosMain] options: { config: "/data/mongos/mongos.conf", net: { port: 6000 }, processManagement: { fork: true }, sharding: { chunkSize: 1, configDB: "192.168.1.155:5000,192.168.14.221:5000,192.168.14.198:5000" }, systemLog: { destination: "file", logAppend: true, path: "/data/mongos/log/mongos.log" } }

2015-11-28T16:45:43.860+0800 W SHARDING [mongosMain] config servers 192.168.1.155:5000 and 192.168.14.221:5000 differ

2015-11-28T16:45:43.861+0800 W SHARDING [mongosMain] config servers 192.168.1.155:5000 and 192.168.14.221:5000
differ 2015-11-28T16:45:43.863+0800 W SHARDING [mongosMain] config servers 192.168.1.155:5000 and 192.168.14.221:5000 differ 2015-11-28T16:45:43.864+0800 W SHARDING [mongosMain] config servers 192.168.1.155:5000 and 192.168.14.221:5000 differ 2015-11-28T16:45:43.864+0800 E SHARDING [mongosMain] could not verify that config servers are in sync :: caused by :: config servers 192.168.1.155:5000 and 192.168.14.221:5000 differ: { chunks: "d41d8cd98f00b204e9800998ecf8427e", shards: "d41d8cd98f00b204e9800998ecf8427e", version: "8c18a7ed8908f1c2ec628d2a0af4bf3c" } vs {} 2015-11-28T16:45:43.864+0800 I - [mongosMain] configServer connection startup check failed ------------------------------------------------------------------------------------------------------------------------------------- db.books.stats() { "sharded" : true, "paddingFactorNote" : "paddingFactor is unused and unmaintained in 3.0. It remains hard coded to 1.0 for compatibility only.", "userFlags" : 1, "capped" : false, "ns" : "testdb.books", "count" : 20000, "numExtents" : 11, "size" : 2240000, "storageSize" : 5595136, "totalIndexSize" : 1267280, "indexSizes" : { "_id_" : 678608, "id_1" : 588672 }, "avgObjSize" : 112, "nindexes" : 2, "nchunks" : 5, "shards" : { "rsshard0" : { "ns" : "testdb.books", "count" : 9443, "size" : 1057616, "avgObjSize" : 112, "numExtents" : 5, "storageSize" : 2793472, "lastExtentSize" : 2097152, "paddingFactor" : 1, "paddingFactorNote" : "paddingFactor is unused and unmaintained in 3.0. 
It remains hard coded to 1.0 for compatibility only.", "userFlags" : 1, "capped" : false, "nindexes" : 2, "totalIndexSize" : 596848, "indexSizes" : { "_id_" : 318864, "id_1" : 277984 }, "ok" : 1, "$gleStats" : { "lastOpTime" : Timestamp(0, 0), "electionId" : ObjectId("565975792a5b76c2553522a5") } }, "rsshard1" : { "ns" : "testdb.books", "count" : 10549, "size" : 1181488, "avgObjSize" : 112, "numExtents" : 5, "storageSize" : 2793472, "lastExtentSize" : 2097152, "paddingFactor" : 1, "paddingFactorNote" : "paddingFactor is unused and unmaintained in 3.0. It remains hard coded to 1.0 for compatibility only.", "userFlags" : 1, "capped" : false, "nindexes" : 2, "totalIndexSize" : 654080, "indexSizes" : { "_id_" : 351568, "id_1" : 302512 }, "ok" : 1, "$gleStats" : { "lastOpTime" : Timestamp(0, 0), "electionId" : ObjectId("565eac15357442cd3ead5103") } }, "rsshard2" : { "ns" : "testdb.books", "count" : 8, "size" : 896, "avgObjSize" : 112, "numExtents" : 1, "storageSize" : 8192, "lastExtentSize" : 8192, "paddingFactor" : 1, "paddingFactorNote" : "paddingFactor is unused and unmaintained in 3.0. 
It remains hard coded to 1.0 for compatibility only.", "userFlags" : 1, "capped" : false, "nindexes" : 2, "totalIndexSize" : 16352, "indexSizes" : { "_id_" : 8176, "id_1" : 8176 }, "ok" : 1, "$gleStats" : { "lastOpTime" : Timestamp(0, 0), "electionId" : ObjectId("565eab094c148b20ecf4b442") } } }, "ok" : 1 sh.status() --- Sharding Status --- sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("565eac6d8e75f6a7d3e6e65e") } shards: { "_id" : "rsshard0", "host" : "rsshard0/192.168.1.155:4000,192.168.14.198:4000,192.168.14.221:4000" } { "_id" : "rsshard1", "host" : "rsshard1/192.168.1.155:4001,192.168.14.198:4001,192.168.14.221:4001" } { "_id" : "rsshard2", "host" : "rsshard2/192.168.1.155:4002,192.168.14.198:4002,192.168.14.221:4002" } balancer: Currently enabled: yes Currently running: no Failed balancer rounds in last 5 attempts: 0 Migration Results for the last 24 hours: No recent migrations databases: { "_id" : "admin", "partitioned" : false, "primary" : "config" } { "_id" : "test", "partitioned" : false, "primary" : "rsshard0" } { "_id" : "testdb", "partitioned" : true, "primary" : "rsshard0" } testdb.books shard key: { "id" : 1 } chunks: rsshard0 2 rsshard1 2 rsshard2 1 { "id" : { "$minKey" : 1 } } -->> { "id" : 2 } on : rsshard1 Timestamp(2, 0) { "id" : 2 } -->> { "id" : 10 } on : rsshard2 Timestamp(3, 0) { "id" : 10 } -->> { "id" : 4691 } on : rsshard0 Timestamp(4, 1) { "id" : 4691 } -->> { "id" : 9453 } on : rsshard0 Timestamp(3, 3) { "id" : 9453 } -->> { "id" : { "$maxKey" : 1 } } on : rsshard1 Timestamp(4, 0) { "_id" : "aaaa", "partitioned" : false, "primary" : "rsshard0" } -------------------------------------------------------------------------------------- 配置文件样例 rs journal=true port=4000 replset=rsshard0 dbpath = /data/mongodb/data shardsvr = true //启动分片 oplogSize = 100 //oplog大小 MB pidfilepath = /usr/local/mongodb/mongodb.pid //pid文件路径 logpath = /data/replset0/log/rs0.log //日志路径 logappend = true 
//以追加方式写入 profile = 1 //数据库分析,1表示仅记录较慢的操作 slowms = 5 //认定为慢查询的时间设置 fork = true //以守护进程的方式运行,创建服务器进程 mongos configdb = 192.168.1.155:5000,192.168.14.221:5000,192.168.14.198:5000 //监听的config服务器ip和端口,只能有1个或者3个 port = 6000 chunkSize = 1 //单位 mb 生成环境请使用 100 或删除,删除后默认是64 logpath =/data/mongos/log/mongos.log logappend = true fork = true
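The per-shard "count" values in the db.books.stats() output above can be cross-checked against the chunk ranges in the second sh.status() output. Below is a minimal sketch, assuming the test loop inserted exactly the ids 1 to 20000 and using the rule that a chunk owns the half-open shard-key range [min, max). The CHUNKS table is transcribed by hand from sh.status(), and the function names are hypothetical helpers, not a MongoDB API.

```python
# Chunk ranges of testdb.books, transcribed from the sh.status() output.
# Each entry is (min, max, shard); None stands for $minKey / $maxKey.
# A chunk owns shard-key values v with min <= v < max.
CHUNKS = [
    (None, 2, "rsshard1"),
    (2, 10, "rsshard2"),
    (10, 4691, "rsshard0"),
    (4691, 9453, "rsshard0"),
    (9453, None, "rsshard1"),
]


def shard_for(value, chunks=CHUNKS):
    """Return the shard whose chunk range contains this shard-key value."""
    for lo, hi, shard in chunks:
        if (lo is None or value >= lo) and (hi is None or value < hi):
            return shard
    raise ValueError(f"no chunk covers {value!r}")


def count_per_shard(values, chunks=CHUNKS):
    """Count how many shard-key values land on each shard."""
    counts = {}
    for v in values:
        shard = shard_for(v, chunks)
        counts[shard] = counts.get(shard, 0) + 1
    return counts


if __name__ == "__main__":
    # The test loop inserted id = 1 .. 20000.
    counts = count_per_shard(range(1, 20001))
    print(counts)  # {'rsshard1': 10549, 'rsshard2': 8, 'rsshard0': 9443}
    print(sum(counts.values()))  # 20000
```

The result matches the stats exactly, and it also explains the uneven split: with a monotonically increasing shard key such as id, new documents keep landing in the last chunk ({ "id" : 9453 } up to $maxKey), while rsshard2 only owns the small chunk [2, 10), i.e. ids 2 to 9.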

Execution plan after sharding

```
db.books.find({id:1}).explain()
{
    "queryPlanner" : {
        "mongosPlannerVersion" : 1,
        "winningPlan" : {
            "stage" : "SINGLE_SHARD",
            "shards" : [
                {
                    "shardName" : "rsshard1",  // on the second shard
                    "connectionString" : "rsshard1/192.168.1.155:4001,192.168.14.198:4001,192.168.14.221:4001",
                    "serverInfo" : {  // server info of the second shard
                        "host" : "steven2",
                        "port" : 4001,
                        "version" : "3.0.7",
                        "gitVersion" : "6ce7cbe8c6b899552dadd907604559806aa2e9bd"
                    },
                    "plannerVersion" : 1,
                    "namespace" : "testdb.books",
                    "indexFilterSet" : false,
                    "parsedQuery" : {
                        "id" : {  // id equals 1
                            "$eq" : 1
                        }
                    },
                    "winningPlan" : {
                        "stage" : "FETCH",
                        "inputStage" : {
                            "stage" : "SHARDING_FILTER",
                            "inputStage" : {
                                "stage" : "IXSCAN",
                                "keyPattern" : { "id" : 1 },
                                "indexName" : "id_1",
                                "isMultiKey" : false,
                                "direction" : "forward",
                                "indexBounds" : {  // index bounds
                                    "id" : [ "[1.0, 1.0]" ]
                                }
                            }
                        }
                    },
                    "rejectedPlans" : [ ]
                }
            ]
        }
    },
    "ok" : 1
}
```
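Reading a sharded explain() result comes down to two levels: the mongos stage (here SINGLE_SHARD, because the query has an equality on the shard key and mongos can route it to one shard; a query without the shard key is typically sent to every shard and shows a merge stage instead) and the per-shard stage tree, which is read from the top stage down through its inputStage links. Below is a minimal sketch that walks a trimmed copy of the output above as a Python dict. The helper names are hypothetical, and it only follows single inputStage links, not stages with several children (inputStages).

```python
# Trimmed copy of the explain() output above, as a plain Python dict.
EXPLAIN = {
    "queryPlanner": {
        "mongosPlannerVersion": 1,
        "winningPlan": {
            "stage": "SINGLE_SHARD",
            "shards": [
                {
                    "shardName": "rsshard1",
                    "winningPlan": {
                        "stage": "FETCH",
                        "inputStage": {
                            "stage": "SHARDING_FILTER",
                            "inputStage": {
                                "stage": "IXSCAN",
                                "indexName": "id_1",
                                "indexBounds": {"id": ["[1.0, 1.0]"]},
                            },
                        },
                    },
                }
            ],
        },
    },
    "ok": 1,
}


def stage_chain(plan):
    """Follow inputStage links from the top stage down to the leaf stage."""
    chain = []
    while plan is not None:
        chain.append(plan["stage"])
        plan = plan.get("inputStage")
    return chain


def summarize(explain):
    """Return (mongos stage, {shardName: [stages from top to leaf]})."""
    top = explain["queryPlanner"]["winningPlan"]
    per_shard = {s["shardName"]: stage_chain(s["winningPlan"]) for s in top["shards"]}
    return top["stage"], per_shard


if __name__ == "__main__":
    print(summarize(EXPLAIN))
    # ('SINGLE_SHARD', {'rsshard1': ['FETCH', 'SHARDING_FILTER', 'IXSCAN']})
```

Read bottom-up: IXSCAN uses the id_1 index with bounds [1, 1], SHARDING_FILTER discards any documents the shard holds but does not own (orphans left by chunk migrations), and FETCH loads the full documents.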


[mongosMain] warning: config servers social-11:27021 and social-11:27023 differ

config servers are not in sync [mongo]

http://m.blog.csdn.net/blog/u011321811/38372937

http://xiao9.iteye.com/blog/1395593

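Today two of our development machines suddenly went down, leaving only one running. After they came back, the config servers reported an error while I was bringing mongos back up.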

The log (note that only the chunks hash differs between the two servers):

```
ERROR: could not verify that config servers are in sync :: caused by :: config servers xx.xx.xx.xx:20000 and yy.yy.yy.yy:20000 differ: { chunks:"f0d00cf4266edb17c63538d24e51b545", collections:"331a71ef5fd89be1d4e02d0ad6ed1e55", databases:"8653e07cb59685b0e89b1fd094a30133", shards:"0a1b3f23160cd5dc731fd837cfb6d081", version:"9ec885c985db1d9fb06e6e7d61814668" } vs { chunks:"99771bf8ac9d42dfbb7332e7fa08d377", collections:"331a71ef5fd89be1d4e02d0ad6ed1e55", databases:"8653e07cb59685b0e89b1fd094a30133", shards:"0a1b3f23160cd5dc731fd837cfb6d081", version:"9ec885c985db1d9fb06e6e7d61814668" }
2014-08-04T17:03:40.232+0800 [mongosMain] configServer connection startup check failed
```

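A quick Google search showed that this happens when the config servers on two machines hold inconsistent metadata. The fix is described in MongoDB's official JIRA (https://jira.mongodb.org/browse/SERVER-10737).

I am recording it here, with a short description of the recovery procedure: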

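1. Connect to each config server (port 20000 on my machines), switch to the config database, and run db.runCommand('dbhash').
2. Compare the results from all config servers. In the ideal case, two of the three report identical md5 values.
3. Kill the mongod process whose hashes disagree with the other two, and copy all the data under dbpath from one of the consistent machines onto the faulty one.
4. Restart the config servers on the two machines named in the error log.
5. Try starting mongos again and check whether the error is gone.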
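The comparison described above is a majority vote over the md5 values. Below is a minimal sketch, assuming you have already collected the overall md5 field of each config server's dbhash result into a dict keyed by host; the helper name, host names and hash strings are placeholders, not real output.

```python
from collections import defaultdict


def split_by_majority(md5_by_host):
    """Group config servers by their dbhash md5 value.

    Returns (reference_hosts, outlier_hosts). If one md5 value is shared by
    a strict majority of the servers, those servers are the reference and
    the rest are outliers. Otherwise reference_hosts is empty and every host
    is reported as an outlier, meaning the source must be chosen by hand.
    """
    groups = defaultdict(list)
    for host, md5 in md5_by_host.items():
        groups[md5].append(host)
    majority = len(md5_by_host) // 2 + 1
    for hosts in groups.values():
        if len(hosts) >= majority:
            outliers = [h for h in md5_by_host if h not in hosts]
            return sorted(hosts), sorted(outliers)
    return [], sorted(md5_by_host)


if __name__ == "__main__":
    # Placeholder hosts and hash strings, not real dbhash output.
    print(split_by_majority({"cfg1:20000": "aaa", "cfg2:20000": "aaa", "cfg3:20000": "bbb"}))
    # (['cfg1:20000', 'cfg2:20000'], ['cfg3:20000'])
    print(split_by_majority({"cfg1:20000": "aaa", "cfg2:20000": "bbb", "cfg3:20000": "ccc"}))
    # ([], ['cfg1:20000', 'cfg2:20000', 'cfg3:20000'])
```

The second case is the situation described in the next paragraph: with no majority, there is no automatic answer and you have to decide which server's data to trust.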

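In my environment, however, the two machines had gone down one after the other, and the comparison showed that all three config servers held different metadata. So I used the config server that had stayed up the whole time as the source: I killed the processes on the other two machines, deleted their data, copied the data over, and restarted them. Since this was an offline development environment, I could afford to be casual about it; in production, make sure you choose the correct recovery method.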

http://www.mongoing.com/anspress/question/2321/%E8%AF%B7%E9%97%AE%E5%A4%8D%E5%88%B6%E9%9B%86%E5%A6%82%E4%BD%95%E6%89%8B%E5%8A%A8%E5%88%87%E6%8D%A2

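You can use rs.stepDown() to demote the primary, but there is no guarantee which secondary becomes the new primary. The other way is to raise the priority of the member you want, which triggers an election that makes it primary: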

```
conf = rs.conf();
conf.members[2].priority = 100; // suppose you want the third member to become primary; the default priority is 1
rs.reconfig(conf);
```

Note: do this during a maintenance window, not at peak time.
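The priority trick can be modeled on a plain dict shaped like the rs.conf() output. Below is a minimal sketch, assuming only the members, host and priority fields matter and that a member without an explicit priority has priority 1; the helper names are hypothetical. It only shows which member becomes *preferred*: MongoDB holds the actual election once that member has caught up with the primary.

```python
import copy

DEFAULT_PRIORITY = 1  # a member without an explicit priority has priority 1


def prefer_member(conf, index, priority=100):
    """Return a copy of conf in which members[index] has the highest priority.

    Mirrors the shell steps conf = rs.conf(); conf.members[i].priority = N;
    rs.reconfig(conf). The original conf is left unchanged.
    """
    new_conf = copy.deepcopy(conf)
    others = [
        m.get("priority", DEFAULT_PRIORITY)
        for i, m in enumerate(new_conf["members"])
        if i != index
    ]
    if others and priority <= max(others):
        raise ValueError("priority must be higher than every other member's")
    new_conf["members"][index]["priority"] = priority
    return new_conf


def preferred_primary(conf):
    """Host with the unique highest priority, or None if there is a tie."""
    members = conf["members"]
    top = max(m.get("priority", DEFAULT_PRIORITY) for m in members)
    hosts = [m["host"] for m in members if m.get("priority", DEFAULT_PRIORITY) == top]
    return hosts[0] if len(hosts) == 1 else None


if __name__ == "__main__":
    rsshard0 = {
        "_id": "rsshard0",
        "members": [
            {"_id": 0, "host": "192.168.1.155:4000"},
            {"_id": 1, "host": "192.168.14.221:4000"},
            {"_id": 2, "host": "192.168.14.198:4000"},
        ],
    }
    print(preferred_primary(rsshard0))  # None: all members tie at priority 1
    print(preferred_primary(prefer_member(rsshard0, 2)))  # 192.168.14.198:4000
    print(preferred_primary(prefer_member(rsshard0, 0, priority=2)))  # 192.168.1.155:4000
```

The last line corresponds to the 2/1/1 scheme used when setting up rsshard0: giving one member a strictly higher priority is enough, which is also how the setup spreads the three primaries over three machines.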

A replica set can fail over without an arbiter

http://www.mongoing.com/anspress/question/2310/复制集里面没有arbiter也可以故障转移

lyhabc asked this question 1 week ago

I set up a replica set on three machines. Why can it fail over even without an arbiter?

TJ answered about 1 hour ago

The name "arbiter" is a bit misleading: it suggests an authoritative referee. In fact it is just an empty node that can vote.

- Regular node: data + vote
- Arbiter: vote only

So with 3 regular nodes you already have 3 voting nodes. An arbiter, as a dedicated voting node, is only needed when you cannot get 3 data nodes, or an odd number of them.

Got it: it works like SQL Server database mirroring, where the witness is just an empty node.
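The answer can be made concrete with majority arithmetic: a primary can only be elected while a strict majority of all voting members can reach each other. Below is a minimal sketch, assuming every member has one vote (the default) and that only data-bearing nodes fail; the function names are hypothetical.

```python
def majority(voters):
    """Smallest number of votes that is a strict majority of `voters`."""
    return voters // 2 + 1


def can_elect_primary(data_nodes, arbiters, nodes_down):
    """True if the surviving members can still elect a primary.

    Assumes one vote per member and that the failed nodes are data-bearing
    (nodes_down <= data_nodes). At least one data-bearing node must survive,
    because an arbiter can vote but can never become primary.
    """
    voters = data_nodes + arbiters
    surviving_data = data_nodes - nodes_down
    surviving_voters = voters - nodes_down
    return surviving_data >= 1 and surviving_voters >= majority(voters)


if __name__ == "__main__":
    cases = [(3, 0, 1), (2, 0, 1), (2, 1, 1), (4, 0, 2)]
    for data, arb, down in cases:
        ok = can_elect_primary(data, arb, down)
        print(f"{data} data + {arb} arbiter, {down} down -> {ok}")
    # 3 data + 0 arbiter, 1 down -> True
    # 2 data + 0 arbiter, 1 down -> False
    # 2 data + 1 arbiter, 1 down -> True
    # 4 data + 0 arbiter, 2 down -> False
```

Three data nodes already survive one failure on their own. The arbiter only helps in the two-data-node case, and a fourth data node does not raise fault tolerance over three, which is why odd numbers of voters are preferred.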


The MongoDB test database on 192.168.1.8 on the company intranet


Use the table view


Link: https://files.cnblogs.com/files/MYSQLZOUQI/mongodbportaldb.7z


```
2015-10-30T05:59:12.386+0800 I JOURNAL [initandlisten] journal dir=/data/mongodb/data/journal
2015-10-30T05:59:12.386+0800 I JOURNAL [initandlisten] recover : no journal files present, no recovery needed
2015-10-30T05:59:12.518+0800 I JOURNAL [durability] Durability thread started
2015-10-30T05:59:12.518+0800 I JOURNAL [journal writer] Journal writer thread started
2015-10-30T05:59:12.521+0800 I CONTROL [initandlisten] MongoDB starting : pid=4479 port=27017 dbpath=/data/mongodb/data/ 64-bit host=steven
2015-10-30T05:59:12.521+0800 I CONTROL [initandlisten]
2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten]
2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2015-10-30T05:59:12.522+0800 I CONTROL [initandlisten]
```