Scaling Out an Apache NiFi Cluster with Ansible

1. Environment

1.1 Existing NiFi cluster

| IP | Hostname | Memory (GB) | CPU cores | Kernel | Disk | OS | Java | Python | NiFi user | NiFi version | NiFi directory |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10.116.201.63 | sy-vm-afp-oneforall01 | 16 | 8 | 4.18.0-372.9.1.el8.x86_64 | 525GB | Red Hat Enterprise Linux release 8.6 (Ootpa) | /opt/app/middles/openjdk-1.8.0 (JAVA_HOME not set) | /usr/bin/python3 (3.6.8), not set as default `python` | afp | 1.27.0 | /opt/app/middles/nifi-1.27.0 |
| 10.116.201.64 | sy-vm-afp-oneforall02 | 16 | 8 | 4.18.0-372.9.1.el8.x86_64 | 525GB | Red Hat Enterprise Linux release 8.6 (Ootpa) | /opt/app/middles/openjdk-1.8.0 (JAVA_HOME not set) | /usr/bin/python3 (3.6.8), not set as default `python` | afp | 1.27.0 | /opt/app/middles/nifi-1.27.0 |
| 10.116.201.65 | sy-vm-afp-oneforall03 | 16 | 8 | 4.18.0-372.9.1.el8.x86_64 | 525GB | Red Hat Enterprise Linux release 8.6 (Ootpa) | /opt/app/middles/openjdk-1.8.0 (JAVA_HOME not set) | /usr/bin/python3 (3.6.8), not set as default `python` | afp | 1.27.0 | /opt/app/middles/nifi-1.27.0 |

1.2 New nodes

| IP | Hostname | Memory (GB) | CPU cores | Kernel | Disk | OS | Java | Python | NiFi user | NiFi version | NiFi directory |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10.116.201.43 | sy-vm-afp-dispatchmanagement01 | 32 | 16 | 4.18.0-372.9.1.el8.x86_64 | 525GB | Red Hat Enterprise Linux release 8.6 (Ootpa) | /opt/app/middles/openjdk-1.8.0 (JAVA_HOME not set) | /usr/bin/python3 (3.6.8); default `python` is /opt/app/middles/Python-2.7.6/bin/python (2.7.6) | afp | 1.27.0 | /opt/app/middles/nifi-1.27.0 |
| 10.116.201.44 | sy-vm-afp-dispatchmanagement02 | 32 | 16 | 4.18.0-372.9.1.el8.x86_64 | 525GB | Red Hat Enterprise Linux release 8.6 (Ootpa) | /opt/app/middles/openjdk-1.8.0 (JAVA_HOME not set) | /usr/bin/python3 (3.6.8), not set as default `python` | afp | 1.27.0 | /opt/app/middles/nifi-1.27.0 |
| 10.116.201.52 | sy-vm-afp-pa01 | 32 | 16 | 4.18.0-372.9.1.el8.x86_64 | 1T | Red Hat Enterprise Linux release 8.6 (Ootpa) | /opt/app/middles/openjdk-1.8.0 (JAVA_HOME not set) | /usr/bin/python3 (3.6.8), not set as default `python` | afp | 1.27.0 | /opt/app/middles/nifi-1.27.0 |
| 10.116.201.53 | sy-vm-afp-pa02 | 32 | 16 | 4.18.0-372.9.1.el8.x86_64 | 1.1T | Red Hat Enterprise Linux release 8.6 (Ootpa) | /opt/app/middles/openjdk-1.8.0 (JAVA_HOME not set) | /usr/bin/python3 (3.6.8), not set as default `python` | afp | 1.27.0 | /opt/app/middles/nifi-1.27.0 |
| 10.116.201.54 | sy-vm-afp-pa03 | 32 | 16 | 4.18.0-372.9.1.el8.x86_64 | 1.1T | Red Hat Enterprise Linux release 8.6 (Ootpa) | /opt/app/middles/openjdk-1.8.0 (JAVA_HOME not set) | /usr/bin/python3 (3.6.8), not set as default `python` | afp | 1.27.0 | /opt/app/middles/nifi-1.27.0 |

1.3 Ansible control node

| IP | Hostname | afp user exists | Ansible version | Ansible directory |
|---|---|---|---|---|
| 10.116.148.94 | sy-afp-bigdata01 | Yes | 2.9.27 | /root/ansible |

2. Preparation

2.1 Download the packages

Apache NiFi downloads:

- https://archive.apache.org/dist/nifi/1.27.0/nifi-1.27.0-bin.zip

- https://archive.apache.org/dist/nifi/1.27.0/nifi-toolkit-1.27.0-bin.zip
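Before copying the zips to the cluster it may be worth checking their integrity. Apache release directories usually publish a checksum file next to each artifact; this sketch assumes a `nifi-1.27.0-bin.zip.sha512` file downloaded alongside the zip (check the archive listing for the exact name). `verify_sha512` is a hypothetical helper name.

```bash
# verify_sha512 FILE EXPECTED_HEX
# Hypothetical helper: succeeds when the SHA-512 digest of FILE equals EXPECTED_HEX.
verify_sha512() {
    actual=$(sha512sum "$1" | awk '{print $1}')
    if [ "$actual" = "$2" ]; then
        echo "OK: $1"
    else
        echo "MISMATCH: $1" >&2
        return 1
    fi
}

# Usage (assumes the .sha512 file sits next to the zip and its first field is the digest):
# verify_sha512 nifi-1.27.0-bin.zip "$(awk '{print $1}' nifi-1.27.0-bin.zip.sha512)"
```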

2.2 Ansible connectivity

2.2.1 Edit /etc/hosts on the control node

Run on: 10.116.148.94

```text
10.116.201.63 sy-vm-afp-oneforall01
10.116.201.64 sy-vm-afp-oneforall02
10.116.201.65 sy-vm-afp-oneforall03
10.116.201.43 sy-vm-afp-dispatchmanagement01
10.116.201.44 sy-vm-afp-dispatchmanagement02
10.116.201.52 sy-vm-afp-pa01
10.116.201.53 sy-vm-afp-pa02
10.116.201.54 sy-vm-afp-pa03
```
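A wrong IP in these lines (for instance one copied from a neighbouring row) is easy to miss. A minimal sketch, assuming a plain hosts-format file, that checks one hostname maps to exactly one expected IP in that file; `hosts_ips_for` and `check_mapping` are hypothetical names.

```bash
# hosts_ips_for FILE NAME: print every IP that FILE maps to NAME (comment lines skipped)
hosts_ips_for() {
    awk -v n="$2" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) print $1 }' "$1"
}

# check_mapping FILE IP NAME: succeed only if NAME maps to exactly IP and nothing else
check_mapping() {
    ips=$(hosts_ips_for "$1" "$3")
    if [ "$ips" = "$2" ]; then
        return 0
    fi
    echo "$3: expected $2, found: ${ips:-<none>}" >&2
    return 1
}

# Usage: check_mapping /etc/hosts 10.116.201.64 sy-vm-afp-oneforall02
```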

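2.2.2 SSH setup for root

Notes:

- The SSH setup could be done with an Ansible playbook, but the playbook I currently use for this regenerates the key pair. It would configure SSH from the control node to the NiFi cluster, but it would also break the control node's existing SSH trust with other machines, so the keys are copied manually here;

- The root password of each managed host is required.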

```bash
[root@sy-afp-bigdata01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@sy-vm-afp-oneforall01
[root@sy-afp-bigdata01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@sy-vm-afp-oneforall02
[root@sy-afp-bigdata01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@sy-vm-afp-oneforall03
[root@sy-afp-bigdata01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@sy-vm-afp-dispatchmanagement01
[root@sy-afp-bigdata01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@sy-vm-afp-dispatchmanagement02
[root@sy-afp-bigdata01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@sy-vm-afp-pa01
[root@sy-afp-bigdata01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@sy-vm-afp-pa02
[root@sy-afp-bigdata01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@sy-vm-afp-pa03
```
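The eight commands differ only in the hostname, so a loop keeps the list in one place. A minimal sketch; `copy_root_keys` and the `DRY_RUN` switch are hypothetical, and `ssh-copy-id` still prompts for each root password.

```bash
# copy_root_keys HOST...: push ~/.ssh/id_rsa.pub to root@HOST for each HOST.
# With DRY_RUN set to a non-empty value, only print the commands that would run.
copy_root_keys() {
    for h in "$@"; do
        if [ -n "${DRY_RUN:-}" ]; then
            echo "ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@$h"
        elif ! ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$h"; then
            echo "failed: $h" >&2
        fi
    done
}

# Usage: copy_root_keys sy-vm-afp-oneforall01 sy-vm-afp-oneforall02 sy-vm-afp-pa03
```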

2.2.3 Create the `python` symlink

Notes:

Ansible needs a `python` command on the target hosts. On the 7 machines sy-vm-afp-oneforall01 to sy-vm-afp-oneforall03, sy-vm-afp-dispatchmanagement02, and sy-vm-afp-pa01 to sy-vm-afp-pa03, the system provides /usr/bin/python3 (version 3.6.8) but no `python` command, so on these 7 machines run: `ln -s /usr/bin/python3 /usr/bin/python`

On sy-vm-afp-dispatchmanagement01, however, a default `python` command already exists as the symlink /usr/bin/python -> /opt/app/middles/Python-2.7.6/bin/python. With that interpreter, Ansible commands against this host failed:

```text
An exception occurred during task execution. To see the full traceback, use -vvv.
The error was: zipimport.ZipImportError: can't decompress data; zlib not available
sy-vm-afp-dispatchmanagement01 | FAILED! => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "module_stderr": "Shared connection to sy-vm-afp-dispatchmanagement01 closed.\r\n",
    "module_stdout": "Traceback (most recent call last):\r\n File \"/root/.ansible/tmp/ansible-tmp-1762860095.83-9201-116763673824206/AnsiballZ_command.py\", line 102, in <module>\r\n _ansiballz_main()\r\n File \"/root/.ansible/tmp/ansible-tmp-1762860095.83-9201-116763673824206/AnsiballZ_command.py\", line 94, in _ansiballz_main\r\n invoke_module(zipped_mod, temp_path, ANSIBALLZ_PARAMS)\r\n File \"/root/.ansible/tmp/ansible-tmp-1762860095.83-9201-116763673824206/AnsiballZ_command.py\", line 37, in invoke_module\r\n from ansible.module_utils import basic\r\nzipimport.ZipImportError: can't decompress data, zlib not available\r\n",
    "msg": "MODULE FAILURE\nSee stdout/stderr for the exact error",
    "rc": 1
}
```

Going by the message, I assumed the zlib package was missing and installed the following packages:

- zlib-1.2.11-18.el8_5.x86_64.rpm

- zlib-devel-1.2.11-18.el8_5.x86_64.rpm

- perl-IO-Zlib-1.10-421.el8.noarch.rpm

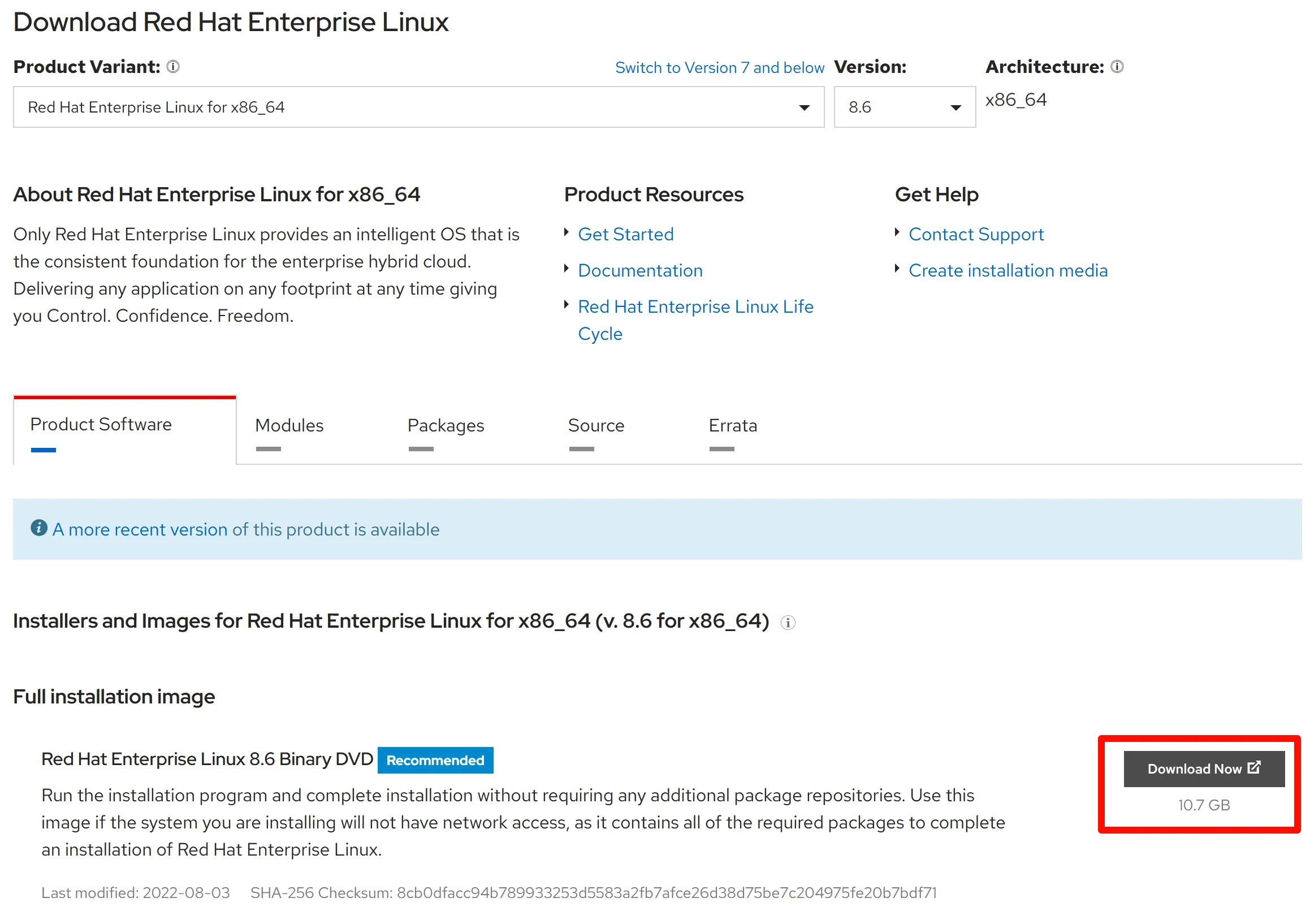

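I obtained these packages from the RHEL 8.6 installation image: download rhel-8.6-x86_64-dvd.iso from https://access.redhat.com/downloads/content/479/ver=/rhel---8/8.6/x86_64/product-software, extract it, and install the packages from the rhel-8.6-x86_64-dvd\BaseOS\Packages directory. This did not solve the problem. In hindsight that is expected: the error is raised by the self-built Python 2.7.6 interpreter, which was most likely compiled without its zlib module, and installing zlib RPMs afterwards does not add that module to an interpreter that is already built.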

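I then pointed the `python` symlink on sy-vm-afp-dispatchmanagement01 at Python 3 instead, which fixed the problem:

```bash
unlink /usr/bin/python
ln -s /usr/bin/python3 /usr/bin/python
```

After the scale-out was finished, I restored the original symlink so that other components on this machine are not affected:

```bash
unlink /usr/bin/python
ln -s /opt/app/middles/Python-2.7.6/bin/python /usr/bin/python
```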
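Two checks would have shortened this detour: test whether an interpreter can import `zlib` before pointing Ansible at it (AnsiballZ ships each module as a zip payload, which is why the missing zlib module surfaced as `zipimport.ZipImportError`), and only create the symlink where `python` does not already exist. A minimal sketch with hypothetical helper names; the bin directory is a parameter so it can be tried in a scratch directory first. An option that leaves the system symlink alone entirely is Ansible's `ansible_python_interpreter` inventory variable, e.g. `sy-vm-afp-dispatchmanagement01 ansible_python_interpreter=/usr/bin/python3`.

```bash
# python_has_zlib INTERPRETER: succeed if INTERPRETER can import zlib
python_has_zlib() {
    "$1" -c 'import zlib' >/dev/null 2>&1
}

# ensure_python_link BIN_DIR TARGET: create BIN_DIR/python -> TARGET
# only when BIN_DIR/python does not exist yet (dangling symlinks count as existing)
ensure_python_link() {
    bin_dir=$1
    target=$2
    if [ -e "$bin_dir/python" ] || [ -L "$bin_dir/python" ]; then
        echo "exists: $(readlink "$bin_dir/python" || echo "$bin_dir/python")"
        return 0
    fi
    ln -s "$target" "$bin_dir/python"
    echo "created: $bin_dir/python -> $target"
}

# Usage on a target host (as root):
# python_has_zlib /usr/bin/python3 && ensure_python_link /usr/bin /usr/bin/python3
```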

2.2.4 Upload files

Upload the Ansible configuration, the playbooks, and the NiFi packages to /root/ansible:

```text
$ tree /root/ansible
/root/ansible
├── ansible.cfg
├── inventory
└── nifi
    ├── backup.yml
    ├── expand.yml
    ├── limits.yml
    ├── nifi-1.27.0-bin.zip
    ├── nifi-toolkit-1.27.0-bin.zip
    ├── rollback.yml
    ├── sysctl.yml
    └── update_hosts.yml
```

2.2.5 ansible.cfg

```ini
[defaults]
host_key_checking=False
inventory=./inventory
```

2.2.6 inventory

```ini
[nifi]
sy-vm-afp-oneforall01
sy-vm-afp-oneforall02
sy-vm-afp-oneforall03
sy-vm-afp-dispatchmanagement01
sy-vm-afp-dispatchmanagement02
sy-vm-afp-pa01
sy-vm-afp-pa02
sy-vm-afp-pa03

[nifi_old]
sy-vm-afp-oneforall01
sy-vm-afp-oneforall02
sy-vm-afp-oneforall03

[nifi_new]
sy-vm-afp-dispatchmanagement01
sy-vm-afp-dispatchmanagement02
sy-vm-afp-pa01
sy-vm-afp-pa02
sy-vm-afp-pa03
```

2.2.7 Connectivity test as root

Run on: 10.116.148.94

```text
$ cd /root/ansible
$ ansible nifi -m shell -a "whoami"
sy-vm-afp-oneforall01 | CHANGED | rc=0 >>
root
sy-vm-afp-oneforall02 | CHANGED | rc=0 >>
root
sy-vm-afp-oneforall03 | CHANGED | rc=0 >>
root
sy-vm-afp-dispatchmanagement01 | CHANGED | rc=0 >>
root
sy-vm-afp-dispatchmanagement02 | CHANGED | rc=0 >>
root
sy-vm-afp-pa01 | CHANGED | rc=0 >>
root
sy-vm-afp-pa02 | CHANGED | rc=0 >>
root
sy-vm-afp-pa03 | CHANGED | rc=0 >>
root
```
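With eight hosts scrolling past, a duplicated or missing host in this output is easy to overlook. A minimal sketch, assuming the output was saved to a file and follows the ad-hoc format shown above (`host | CHANGED | ...`); `missing_hosts` is a hypothetical helper that prints every expected host without a successful result line.

```bash
# missing_hosts OUTPUT_FILE HOST...: print each HOST that has no
# "HOST | CHANGED ..." or "HOST | SUCCESS ..." line in OUTPUT_FILE
missing_hosts() {
    out_file=$1
    shift
    for h in "$@"; do
        grep -Eq "^$h [|] (CHANGED|SUCCESS) " "$out_file" || echo "$h"
    done
}

# Usage:
# ansible nifi -m shell -a "whoami" > /tmp/whoami.out
# missing_hosts /tmp/whoami.out $(ansible nifi --list-hosts | tail -n +2)
```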

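2.2.8 SSH setup for afp

Note: root access is only granted on the go-live day and has to be requested, whereas the NiFi cluster is deployed under the afp user. To manage cluster start/stop with a single script, password-less SSH from the control node to the NiFi cluster is configured for the afp user.

As the afp user, upload the following files to /home/afp/ansible:

```text
$ tree /home/afp/ansible
/home/afp/ansible
├── ansible.cfg
├── inventory
└── nifi
    └── ssh_key.yml
```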

ansible.cfg

```ini
[defaults]
host_key_checking=False
inventory=./inventory
```

inventory (request each machine's afp password in advance; password-based SSH from Ansible also needs `sshpass` installed on the control node):

```ini
[nifi]
sy-vm-afp-oneforall01 ansible_password='123456'
sy-vm-afp-oneforall02 ansible_password='123456'
sy-vm-afp-oneforall03 ansible_password='123456'
sy-vm-afp-dispatchmanagement01 ansible_password='123456'
sy-vm-afp-dispatchmanagement02 ansible_password='123456'
sy-vm-afp-pa01 ansible_password='123456'
sy-vm-afp-pa02 ansible_password='123456'
sy-vm-afp-pa03 ansible_password='123456'
```

ssh_key.yml

```yaml
---
- name: Setup SSH key authentication
  hosts: nifi
  gather_facts: false
  vars:
    admin_user: "afp"
    ssh_key_file: "~/.ssh/id_rsa"
  tasks:
    - name: Ensure .ssh directory exists
      file:
        path: "~/.ssh"
        state: directory
        owner: "{{ admin_user }}"
        group: "{{ admin_user }}"
        mode: '0700'

    - name: Generate SSH key on management node
      openssh_keypair:
        path: "{{ ssh_key_file }}"
        type: rsa
        size: 4096
        owner: "{{ admin_user }}"
        group: "{{ admin_user }}"
        mode: '0600'
      delegate_to: localhost
      run_once: true

    - name: Fetch public key from management node
      slurp:
        src: "{{ ssh_key_file }}.pub"
      delegate_to: localhost
      run_once: true
      register: pubkey

    - name: Authorize SSH key on all nodes
      authorized_key:
        user: "{{ admin_user }}"
        state: present
        key: "{{ pubkey['content'] | b64decode }}"

    - shell: whoami
      register: result

    - debug:
        msg: "{{ result.stdout }}"
```

Log in to 10.116.148.94 as afp and run:

```bash
$ cd ~/ansible
# Set up the SSH keys
$ ansible-playbook nifi/ssh_key.yml
# Remove the password from every host line
$ cat > /home/afp/ansible/inventory <<EOF
[nifi]
sy-vm-afp-oneforall01
sy-vm-afp-oneforall02
sy-vm-afp-oneforall03
sy-vm-afp-dispatchmanagement01
sy-vm-afp-dispatchmanagement02
sy-vm-afp-pa01
sy-vm-afp-pa02
sy-vm-afp-pa03
EOF
# Test
$ ansible nifi -m shell -a "whoami"
sy-vm-afp-oneforall01 | CHANGED | rc=0 >>
afp
sy-vm-afp-oneforall02 | CHANGED | rc=0 >>
afp
sy-vm-afp-oneforall03 | CHANGED | rc=0 >>
afp
sy-vm-afp-dispatchmanagement01 | CHANGED | rc=0 >>
afp
sy-vm-afp-dispatchmanagement02 | CHANGED | rc=0 >>
afp
sy-vm-afp-pa01 | CHANGED | rc=0 >>
afp
sy-vm-afp-pa02 | CHANGED | rc=0 >>
afp
sy-vm-afp-pa03 | CHANGED | rc=0 >>
afp
```
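Rewriting the whole inventory with a heredoc works, but it is easy to drop or mistype a host that way. A sketch of an alternative that only removes the password fields, assuming each appears as `ansible_password=` followed by a single-quoted, double-quoted, or bare value; `strip_passwords` is a hypothetical helper that prints the cleaned inventory and leaves the original file untouched.

```bash
# strip_passwords FILE: print FILE with every " ansible_password=VALUE" removed
strip_passwords() {
    sed -E "s/[[:space:]]+ansible_password=('[^']*'|\"[^\"]*\"|[^[:space:]'\"][^[:space:]]*)//" "$1"
}

# Usage: strip_passwords inventory > inventory.new && mv inventory.new inventory
```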

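3. Scale-out

All steps in this section are run as root on the control node, from the /root/ansible directory.

3.1 Edit /etc/hosts on the target hosts

On each target host, add mappings for all NiFi cluster IPs and hostnames to /etc/hosts, using the update_hosts.yml playbook: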

```yaml
---
- name: Ensure expected hosts entries exist on target machine
  hosts: nifi
  vars:
    hosts_map:
      - { ip: "10.116.201.63", name: "sy-vm-afp-oneforall01" }
      - { ip: "10.116.201.64", name: "sy-vm-afp-oneforall02" }
      - { ip: "10.116.201.65", name: "sy-vm-afp-oneforall03" }
      - { ip: "10.116.201.43", name: "sy-vm-afp-dispatchmanagement01" }
      - { ip: "10.116.201.44", name: "sy-vm-afp-dispatchmanagement02" }
      - { ip: "10.116.201.52", name: "sy-vm-afp-pa01" }
      - { ip: "10.116.201.53", name: "sy-vm-afp-pa02" }
      - { ip: "10.116.201.54", name: "sy-vm-afp-pa03" }
  tasks:
    # One backup per run instead of one per loop item
    - name: Back up /etc/hosts
      shell: cp /etc/hosts /etc/hosts.backup.$(date +"%Y%m%d%H%M%S")

    - name: Ensure each IP has its corresponding hostname
      shell: |
        # Match the IP followed by whitespace so that e.g. .6 does not match .63
        OL=$(grep -E "^{{ item.ip }}[[:space:]]" /etc/hosts)
        if [ "x${OL}" == "x" ]; then
          # No line for this IP yet: append "IP NAME"
          NL="{{ item.ip }} {{ item.name }}"
          echo "$NL" >> /etc/hosts
        else
          # A line exists: keep its names and append NAME if it is missing
          NL="{{ item.ip }}"
          echo "$OL" | while IFS= read -r line; do
            change="true"
            arr=(${line})
            for i in "${!arr[@]}"; do
              if [[ "${arr[$i]}" != "{{ item.ip }}" ]]; then
                if [[ "${arr[$i]}" == "{{ item.name }}" ]]; then
                  NL="${OL}"
                  change="false"
                  break
                else
                  NL="${NL} ${arr[$i]}"
                fi
              fi
            done
            if [[ "${change}" == "true" ]]; then
              NL="${NL} {{ item.name }}"
              sed -i "/^{{ item.ip }}[[:space:]]/c\\${NL}" /etc/hosts
            fi
          done
        fi
      args:
        executable: /bin/bash   # the script uses bash arrays and [[ ]]
      loop: "{{ hosts_map }}"
```

What the playbook does: it makes sure /etc/hosts on every machine in the nifi group contains these entries:

```text
10.116.201.63 sy-vm-afp-oneforall01
10.116.201.64 sy-vm-afp-oneforall02
10.116.201.65 sy-vm-afp-oneforall03
10.116.201.43 sy-vm-afp-dispatchmanagement01
10.116.201.44 sy-vm-afp-dispatchmanagement02
10.116.201.52 sy-vm-afp-pa01
10.116.201.53 sy-vm-afp-pa02
10.116.201.54 sy-vm-afp-pa03
```

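The script inspects existing entries instead of overwriting them. For example, if an existing line reads `10.116.201.63 oneforall.com`, it is not replaced but extended to `10.116.201.63 oneforall.com sy-vm-afp-oneforall01`. In short, the required entries are added without breaking existing ones.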
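The same merge rule can be expressed without bash arrays. A minimal sketch, assuming whitespace-separated hosts entries: append NAME to the line whose first field is exactly IP, or add a new `IP NAME` line if there is none. Comparing the first field exactly also avoids the prefix matches a bare `^IP` regex allows (`^10.116.201.6` would match `10.116.201.63`). `ensure_host` is a hypothetical name; the result is written back with `cat` so the file keeps its ownership and permissions.

```bash
# ensure_host IP NAME FILE: make sure FILE maps IP to NAME without dropping other names
ensure_host() {
    ip=$1
    name=$2
    file=$3
    tmp="$file.tmp.$$"
    awk -v ip="$ip" -v name="$name" '
        $1 == ip {
            found = 1
            has = 0
            for (i = 2; i <= NF; i++) if ($i == name) has = 1
            if (!has) $0 = $0 " " name    # keep existing names, append ours
        }
        { print }
        END { if (!found) print ip " " name }
    ' "$file" > "$tmp" && cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage: ensure_host 10.116.201.63 sy-vm-afp-oneforall01 /etc/hosts
```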

Run:

```bash
[root@sy-afp-bigdata01 ansible]# ansible-playbook nifi/update_hosts.yml
```

3.2 System tuning

Configuration best practices recommended in the official Administration Guide: https://nifi.apache.org/nifi-docs/administration-guide.html#configuration-best-practices

limits.yml

```yaml
---
- hosts: nifi
  gather_facts: false
  vars:
    target_user: afp
    limits_file: "/etc/security/limits.d/99-limits-nifi.conf"
    limits_config:
      - { domain: "{{ target_user }}", type: "soft", item: "nofile", value: "50000" }
      - { domain: "{{ target_user }}", type: "hard", item: "nofile", value: "50000" }
      - { domain: "{{ target_user }}", type: "soft", item: "nproc", value: "10000" }
      - { domain: "{{ target_user }}", type: "hard", item: "nproc", value: "10000" }
  tasks:
    - name: Ensure limits file exists
      file:
        path: "{{ limits_file }}"
        state: touch
        mode: '0644'

    # The file is emptied first, so it always contains exactly the entries below
    - name: Truncate limits file
      copy:
        content: ""
        dest: "{{ limits_file }}"
        owner: root
        group: root
        mode: '0644'

    - name: Configure limits (idempotent replace or append)
      lineinfile:
        path: "{{ limits_file }}"
        regexp: "^{{ item.domain }}\\s+{{ item.type }}\\s+{{ item.item }}\\s+.*$"
        line: "{{ item.domain }} {{ item.type }} {{ item.item }} {{ item.value }}"
        state: present
      loop: "{{ limits_config }}"

    - name: Show the contents of {{ limits_file }}
      command: cat {{ limits_file }}
      register: limits_content
      changed_when: false

    - name: Display {{ limits_file }} content
      debug:
        msg: "{{ limits_content.stdout_lines }}"

    # A fresh login shell for the user picks up the new limits
    - name: Verify ulimit for {{ target_user }}
      shell: su - {{ target_user }} -c "ulimit -a | egrep '\-n|\-u|\-f|\-v|\-l'"
      register: ulimit_output
      changed_when: false

    - name: Display ulimit for {{ target_user }}
      debug:
        msg: "{{ ulimit_output.stdout_lines }}"
```
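To check a limits file without logging in as afp, a small sketch that reads a limits.conf-style file and succeeds when USER has at least the given value for TYPE/ITEM. It treats a `-` type as covering both soft and hard (as limits.conf does) and `unlimited` as always sufficient; letting the last matching line win is a simplification of this sketch. `has_limit` is a hypothetical name.

```bash
# has_limit FILE USER TYPE ITEM MIN: succeed if FILE grants USER a TYPE ITEM limit >= MIN
has_limit() {
    awk -v u="$2" -v t="$3" -v it="$4" -v min="$5" '
        $1 == u && ($2 == t || $2 == "-") && $3 == it {
            ok = ($4 == "unlimited" || $4 + 0 >= min + 0)
        }
        END { exit (ok ? 0 : 1) }
    ' "$1"
}

# Usage: has_limit /etc/security/limits.d/99-limits-nifi.conf afp soft nofile 50000 && echo ok
```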

sysctl.yml

```yaml
---
- hosts: nifi
  gather_facts: false
  become: yes
  vars:
    sysctl_config_file: /etc/sysctl.conf
    sysctl_params:
      net.ipv4.ip_local_port_range: "10000 65000"
      # Use this key for kernel versions >= 3.0
      net.netfilter.nf_conntrack_tcp_timeout_time_wait: 1
      # Use this key for kernel version 2.6
      # net.ipv4.netfilter.ip_conntrack_tcp_timeout_time_wait: 1
      vm.swappiness: 0
  tasks:
    - name: Load nf_conntrack module
      shell: |
        # RHEL 7.x needs two modules: nf_conntrack and nf_conntrack_ipv4
        # RHEL 8.x only needs nf_conntrack
        modprobe nf_conntrack
        # modprobe nf_conntrack_ipv4

    - name: Ensure sysctl.conf parameters
      lineinfile:
        path: '{{ sysctl_config_file }}'
        regexp: '^{{ item.key }}\s*='
        line: '{{ item.key }}={{ item.value }}'
        state: present
      loop: "{{ sysctl_params | dict2items }}"

    - name: Apply sysctl params
      command: sysctl -p

    - name: Show modified sysctl.conf lines
      shell: "grep -E '^({{ sysctl_params.keys() | join('|') }})' {{ sysctl_config_file }}"
      register: sysctl_conf_check
      changed_when: false

    - name: Print modified sysctl.conf lines
      debug:
        msg: "{{ sysctl_conf_check.stdout_lines }}"
```

Run:

```bash
[root@sy-afp-bigdata01 ansible]# ansible-playbook nifi/sysctl.yml
[root@sy-afp-bigdata01 ansible]# ansible-playbook nifi/limits.yml
```
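After `sysctl -p`, the live kernel values can be compared with the file. A sketch that prints the effective `key = value` entry for one key from a sysctl-style file (comment lines skipped, last occurrence wins, matching the order in which `sysctl -p` applies them); comparing it with `sysctl -n KEY` on a node shows whether the setting is active. `conf_value` is a hypothetical name.

```bash
# conf_value FILE KEY: print the value of the last "KEY = VALUE" line in FILE
conf_value() {
    awk -v k="$2" '
        /^[ \t]*[#;]/ { next }
        {
            eq = index($0, "=")
            if (eq == 0) next
            key = substr($0, 1, eq - 1)
            val = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", key)   # trim spaces around key and value
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (key == k) { last = val; seen = 1 }
        }
        END { if (seen) print last }
    ' "$1"
}

# Usage: [ "$(conf_value /etc/sysctl.conf vm.swappiness)" = "$(sysctl -n vm.swappiness)" ] && echo "in sync"
```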

3.3 Back up the existing cluster's configuration

backup.yml

```yaml
---
- hosts: nifi_old
  gather_facts: false
  become: yes
  vars:
    nifi_home: /opt/app/middles/nifi-1.27.0
  tasks:
    - name: Backup nifi conf
      shell: |
        cd {{ nifi_home }}
        # Note: this deletes every earlier conf_backup* directory first
        rm -rf conf_backup*
        cp -ar conf conf_backup_$(date +'%Y%m%d')

    - name: Check backup
      shell: ls -l {{ nifi_home }}/conf_backup_$(date +'%Y%m%d')/
      register: backup_check
      changed_when: false

    - debug:
        msg: "{{ backup_check.stdout_lines }}"
```

Run:

```bash
[root@sy-afp-bigdata01 ansible]# ansible-playbook nifi/backup.yml
```
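Because backup.yml deletes every earlier `conf_backup*` directory before copying, rerunning it after expand.yml has changed the configuration would replace the pre-expansion backup with the post-expansion one. A sketch of a variant that keeps earlier copies by adding the time of day to the name; `backup_conf` is a hypothetical helper and the naming scheme is an assumption (it still matches the `conf_backup_*` pattern that rollback.yml looks for).

```bash
# backup_conf NIFI_HOME: copy NIFI_HOME/conf to NIFI_HOME/conf_backup_<timestamp>
# without removing older backups, and print the new directory
backup_conf() {
    dest="$1/conf_backup_$(date +%Y%m%d%H%M%S)"
    cp -a "$1/conf" "$dest" && echo "$dest"
}

# Usage: backup_conf /opt/app/middles/nifi-1.27.0
```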

3.4 Scale out

expand.yml

```yaml
---
- name: Nifi cluster expand
  hosts: nifi
  become: true
  gather_facts: false
  vars:
    nifi_owner: afp
    nifi_group: afp
    https_port: 9443
    nifi_base_dir: /opt/app/middles
    nifi_home: "{{ nifi_base_dir }}/nifi-1.27.0"
    nifi_toolkit_base_dir: "{{ nifi_base_dir }}"
    nifi_certs_dir: "{{ nifi_toolkit_base_dir }}/certs"
    nifi_heap_size: 8g
    NIFI_ZK_CONNECT_STRING: 10.116.148.111:2181,10.116.148.112:2181,10.116.148.113:2181
    SINGLE_USER_CREDENTIALS_PASSWORD: Afp@20240820
    NIFI_SENSITIVE_PROPS_KEY: afp@20240820
  tasks:
    # 1. Install NiFi on the new nodes
    - name: Create nifi directories
      shell: |
        mkdir -p {{ nifi_base_dir }}
      when: "'nifi_new' in group_names"

    - name: Copy nifi package
      copy:
        src: nifi-1.27.0-bin.zip
        dest: "{{ nifi_base_dir }}"
        owner: "{{ nifi_owner }}"
        group: "{{ nifi_group }}"
        mode: "0644"
      when: "'nifi_new' in group_names"

    - name: Unzip nifi package
      unarchive:
        src: "{{ nifi_base_dir }}/nifi-1.27.0-bin.zip"
        dest: "{{ nifi_base_dir }}"
        remote_src: yes
        mode: "0755"
        owner: "{{ nifi_owner }}"
        group: "{{ nifi_group }}"
        extra_opts:
          - -o
      when: "'nifi_new' in group_names"

    # 2. Generate certificates for all 8 nodes on the control node
    - name: Create nifi-toolkit directory on the control node
      shell: mkdir -p {{ nifi_toolkit_base_dir }}
      delegate_to: localhost
      run_once: true

    - name: Unzip nifi-toolkit package on the control node
      unarchive:
        # playbook_dir is /root/ansible/nifi, where the toolkit zip was uploaded
        src: "{{ playbook_dir }}/nifi-toolkit-1.27.0-bin.zip"
        dest: "{{ nifi_toolkit_base_dir }}"
        remote_src: yes
        extra_opts:
          - -o
      delegate_to: localhost
      run_once: true

    - name: TLS config
      shell: |
        rm -rf {{ nifi_certs_dir }}
        mkdir -p {{ nifi_certs_dir }}
        {{ nifi_toolkit_base_dir }}/nifi-toolkit-1.27.0/bin/tls-toolkit.sh standalone \
          --clientCertDn 'CN=NIFI, OU=NIFI' \
          --hostnames {{ ansible_play_hosts | join(',') }} \
          --keyAlgorithm RSA \
          --keySize 2048 \
          --days 36500 \
          --keyPassword keyPassword@123456 \
          --keyStorePassword keyStorePassword@123456 \
          --trustStorePassword trustStorePassword@123456 \
          --outputDirectory {{ nifi_certs_dir }}
      delegate_to: localhost
      run_once: true

    # 3. Distribute certificates and configure every node (old and new)
    - name: Copy TLS file from control node to all nifi nodes
      copy:
        src: "{{ nifi_certs_dir }}"
        dest: "{{ nifi_home }}/conf"
        owner: "{{ nifi_owner }}"
        group: "{{ nifi_group }}"
        mode: preserve

    - name: Copy certs and keys
      shell: |
        rm -f {{ nifi_home }}/conf/*.p12 {{ nifi_home }}/conf/*.password
        remote_certs_dir={{ nifi_home }}/conf/$(basename {{ nifi_certs_dir }})
        # The per-host directory holds the keystore, truststore and a generated
        # nifi.properties, which replaces conf/nifi.properties
        cp ${remote_certs_dir}/{{ inventory_hostname }}/* {{ nifi_home }}/conf/
        cp ${remote_certs_dir}/*.p12 {{ nifi_home }}/conf/
        cp ${remote_certs_dir}/*.password {{ nifi_home }}/conf/
        cp ${remote_certs_dir}/*.pem {{ nifi_home }}/conf/
        cp ${remote_certs_dir}/*.key {{ nifi_home }}/conf/

    - name: Modify nifi config
      shell: |
        sed -i -e 's|^nifi.remote.input.host=.*|nifi.remote.input.host={{ inventory_hostname }}|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.web.https.host=.*|nifi.web.https.host={{ inventory_hostname }}|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.cluster.node.address=.*|nifi.cluster.node.address={{ inventory_hostname }}|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.web.https.port=.*|nifi.web.https.port={{ https_port }}|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.zookeeper.connect.string=.*|nifi.zookeeper.connect.string={{ NIFI_ZK_CONNECT_STRING }}|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.state.management.embedded.zookeeper.start=.*|nifi.state.management.embedded.zookeeper.start=false|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.cluster.is.node=.*|nifi.cluster.is.node=true|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.sensitive.props.key=.*|nifi.sensitive.props.key={{ NIFI_SENSITIVE_PROPS_KEY }}|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.security.keystorePasswd=.*|nifi.security.keystorePasswd=keyStorePassword@123456|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.security.keyPasswd=.*|nifi.security.keyPasswd=keyPassword@123456|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.security.truststorePasswd=.*|nifi.security.truststorePasswd=trustStorePassword@123456|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.security.user.oidc.connect.timeout=.*|nifi.security.user.oidc.connect.timeout=60 secs|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|^nifi.security.user.oidc.read.timeout=.*|nifi.security.user.oidc.read.timeout=60 secs|' {{ nifi_home }}/conf/nifi.properties
        sed -i -e 's|<property name="Connect String">.*</property>|<property name="Connect String">{{ NIFI_ZK_CONNECT_STRING }}</property>|' {{ nifi_home }}/conf/state-management.xml
        sed -i -e 's|^java.arg.2=.*|java.arg.2=-Xms{{ nifi_heap_size }}|' {{ nifi_home }}/conf/bootstrap.conf
        sed -i -e 's|^java.arg.3=.*|java.arg.3=-Xmx{{ nifi_heap_size }}|' {{ nifi_home }}/conf/bootstrap.conf
        sed -i -e 's|^run.as=.*|run.as={{ nifi_owner }}|' {{ nifi_home }}/conf/bootstrap.conf
        # Lines 33/34 of logback.xml are edited by line number; check them against your file first
        sed -i -e '33s/nifi-app_%d{yyyy-MM-dd_HH}.%i.log/nifi-app_%d.%i.log/' {{ nifi_home }}/conf/logback.xml
        sed -i -e '34s/100MB/200MB/' {{ nifi_home }}/conf/logback.xml
        chown -R {{ nifi_owner }}:{{ nifi_group }} {{ nifi_home }}

    # 4. Copy custom lib/ and extensions/ from the first old node to the new nodes
    - name: Fetch directory from old cluster
      shell: |
        cd {{ nifi_home }}
        tar -zcf extensions.tar.gz extensions
        tar -zcf lib.tar.gz lib
      delegate_to: "{{ groups['nifi_old'][0] }}"
      run_once: true

    - fetch:
        src: "{{ nifi_home }}/extensions.tar.gz"
        dest: /root/ansible/nifi/
        flat: yes
      delegate_to: "{{ groups['nifi_old'][0] }}"
      run_once: true

    - fetch:
        src: "{{ nifi_home }}/lib.tar.gz"
        dest: /root/ansible/nifi/
        flat: yes
      delegate_to: "{{ groups['nifi_old'][0] }}"
      run_once: true

    - name: Extract lib and extensions package
      unarchive:
        src: extensions.tar.gz
        dest: "{{ nifi_home }}/extensions"
        remote_src: no
        owner: "{{ nifi_owner }}"
        group: "{{ nifi_group }}"
        extra_opts:
          - --strip-components=1
      when: "'nifi_new' in group_names"

    - unarchive:
        src: lib.tar.gz
        dest: "{{ nifi_home }}/lib"
        remote_src: no
        owner: "{{ nifi_owner }}"
        group: "{{ nifi_group }}"
        extra_opts:
          - --strip-components=1
      when: "'nifi_new' in group_names"

    # 5. Same single-user login on the new nodes as on the old ones
    - name: Set login password
      shell: "{{ nifi_home }}/bin/nifi.sh set-single-user-credentials admin {{ SINGLE_USER_CREDENTIALS_PASSWORD }}"
      when: "'nifi_new' in group_names"

    - name: Clean lib and extensions package
      shell: rm -rf /root/ansible/nifi/extensions.tar.gz /root/ansible/nifi/lib.tar.gz {{ nifi_certs_dir }} {{ nifi_toolkit_base_dir }}/nifi-toolkit-1.27.0
      delegate_to: localhost
      run_once: true
```

Run:

```bash
[root@sy-afp-bigdata01 ansible]# ansible-playbook nifi/expand.yml
```
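The `sed 's|^key=.*|...|'` edits in expand.yml only change keys that already exist; if a key is missing from nifi.properties, sed silently does nothing. A sketch of a replace-or-append helper, assuming a plain `key=value` properties file; the key and value are passed through the environment rather than `awk -v`, because `-v` would reinterpret backslashes in the value. `set_prop` is a hypothetical name.

```bash
# set_prop FILE KEY VALUE: rewrite every "KEY=..." line as KEY=VALUE,
# or append KEY=VALUE if FILE has no such line
set_prop() {
    tmp="$1.tmp.$$"
    K="$2" V="$3" awk '
        BEGIN { k = ENVIRON["K"]; v = ENVIRON["V"] }
        index($0, k "=") == 1 { print k "=" v; done = 1; next }
        { print }
        END { if (!done) print k "=" v }
    ' "$1" > "$tmp" && cat "$tmp" > "$1" && rm -f "$tmp"
}

# Usage: set_prop /opt/app/middles/nifi-1.27.0/conf/nifi.properties nifi.web.https.port 9443
```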

3.5 Restart the cluster

As root, upload the afp-nifi.sh script to /usr/local/bin on the control node, then run:

```bash
[root@sy-afp-bigdata01 ansible]# chmod 755 /usr/local/bin/afp-nifi.sh
[root@sy-afp-bigdata01 ansible]# chown afp:afp /usr/local/bin/afp-nifi.sh
```

The script:

```bash
#!/bin/bash

NIFI_NODES="sy-vm-afp-oneforall01 sy-vm-afp-oneforall02 sy-vm-afp-oneforall03 sy-vm-afp-dispatchmanagement01 sy-vm-afp-dispatchmanagement02 sy-vm-afp-pa01 sy-vm-afp-pa02 sy-vm-afp-pa03"
NIFI_HOME=/opt/app/middles/nifi-1.27.0
dest_java_home=/opt/app/middles/openjdk-1.8.0

source /etc/profile

operations="start stop restart jps status"

# Require exactly one argument that is one of the words in $operations
if [[ $# -ne 1 || ! " $operations " =~ " $1 " ]]; then
    echo "
Usage: afp-nifi.sh operations

The following operations are supported:
$operations

Your arg is: $1
"
    exit 1
fi

line="-------------------------------------------------------------"

# In each ssh "..." argument below, ${...} is expanded locally before sending,
# while \$ is left for the remote shell to expand.

function restart_nifi() {
    echo
    for node in $NIFI_NODES; do
        echo "Restart nifi in $node"
        ssh $node "
            echo $line
            source /etc/profile
            process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
            if [ \"\$process_num\" == \"0\" ]; then
                echo \"The NiFi service has not been started and does not need to be stopped\"
            else
                while [ \"\$process_num\" != \"0\" ]
                do
                    $NIFI_HOME/bin/nifi.sh stop
                    sleep 5
                    process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
                done
                rm -rf $NIFI_HOME/run/*
                echo \"The NiFi service has been successfully stopped\"
            fi
            $NIFI_HOME/bin/nifi.sh start
            process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
            # NiFi runs two JVMs (bootstrap RunNiFi and NiFi); wait until both are up
            while [ \"\$process_num\" != \"2\" ]
            do
                sleep 5
                process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
            done
            echo \"The NiFi service has been successfully started\"
            echo \$(${dest_java_home}/bin/jps | grep -i nifi)
        "
    done
}

function stop_nifi() {
    echo
    for node in $NIFI_NODES; do
        echo "Stop nifi in $node"
        ssh $node "
            echo $line
            source /etc/profile
            process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
            if [ \"\$process_num\" == \"0\" ]; then
                echo \"The NiFi service has not been started and does not need to be stopped\"
            else
                while [ \"\$process_num\" != \"0\" ]
                do
                    $NIFI_HOME/bin/nifi.sh stop
                    sleep 5
                    process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
                done
                rm -rf $NIFI_HOME/run/*
                echo \"The NiFi service has been successfully stopped\"
            fi
        "
    done
}

function jps_nifi() {
    echo
    for node in $NIFI_NODES; do
        echo "nifi java process in $node"
        ssh $node "
            echo $line
            source /etc/profile
            ${dest_java_home}/bin/jps | grep -i nifi
        "
        echo
    done
}

function status_nifi() {
    echo
    for node in $NIFI_NODES; do
        echo "nifi status in $node"
        ssh $node "
            echo $line
            source /etc/profile
            ${NIFI_HOME}/bin/nifi.sh status
        "
        echo
    done
}

function start_nifi() {
    echo
    for node in $NIFI_NODES; do
        echo "Start nifi in $node"
        ssh $node "
            echo $line
            source /etc/profile
            process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
            if [ \"\$process_num\" == \"2\" ]; then
                echo \"The NiFi service has been started\"
            else
                # Kill any half-started NiFi JVM, then start cleanly
                while [ \"\$process_num\" != \"0\" ]
                do
                    ${dest_java_home}/bin/jps | grep -i nifi | awk '{print \$1}' | xargs kill -9
                    sleep 5
                    process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
                done
                rm -rf $NIFI_HOME/run/*
                $NIFI_HOME/bin/nifi.sh start
                process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
                while [ \"\$process_num\" != \"2\" ]
                do
                    sleep 5
                    process_num=\$(${dest_java_home}/bin/jps 2>/dev/null | grep -i nifi | wc -l)
                done
                echo \"The NiFi service has been successfully started\"
                echo \$(${dest_java_home}/bin/jps | grep -i nifi)
            fi
        "
    done
}

case $1 in
    "start")
        start_nifi
        ;;
    "stop")
        stop_nifi
        ;;
    "restart")
        restart_nifi
        ;;
    "jps")
        jps_nifi
        ;;
    "status")
        status_nifi
        ;;
    *)
        ;;
esac
```

Log in to the control node as afp and restart the cluster:

```bash
# Restart
afp-nifi.sh restart
# Check
afp-nifi.sh jps
```
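The `while` loops in afp-nifi.sh wait forever: if a node never reaches two NiFi JVMs (for example because of a configuration error on a new node), `restart` hangs on that node and never reaches the remaining ones. A sketch of a bounded wait that could replace those loops; `wait_until` is a hypothetical helper, and the timeout and interval values are assumptions to tune.

```bash
# wait_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-run COMMAND every INTERVAL seconds until it succeeds; give up after about TIMEOUT seconds.
wait_until() {
    timeout=$1
    interval=$2
    shift 2
    waited=0
    until "$@"; do
        if [ "$waited" -ge "$timeout" ]; then
            echo "timed out after ${waited}s: $*" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
}

# Example on one node (uses the jps path from afp-nifi.sh):
# wait_until 300 5 sh -c '[ "$(/opt/app/middles/openjdk-1.8.0/bin/jps | grep -ci nifi)" -eq 2 ]'
```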

3.6 Rollback

If something goes wrong, roll back with the rollback.yml playbook:

```yaml
---
- name: Nifi cluster expand rollback
  hosts: nifi
  become: true
  gather_facts: false
  vars:
    nifi_owner: afp
    nifi_group: afp
    nifi_base_dir: /opt/app/middles
    nifi_home: "{{ nifi_base_dir }}/nifi-1.27.0"
  tasks:
    - name: Recovery config dir
      shell: |
        cd {{ nifi_home }}
        backup_conf_dir=$(ls -td conf_backup_* 2>/dev/null | head -n 1)
        # Abort instead of deleting conf when no backup exists
        [ -n "$backup_conf_dir" ] || { echo "no conf_backup_* found" >&2; exit 1; }
        rm -rf conf
        mv "$backup_conf_dir" conf
        chown -R {{ nifi_owner }}:{{ nifi_group }} {{ nifi_home }}
      when: "'nifi_old' in group_names"

    - name: Delete nifi dir on new node
      shell: rm -rf {{ nifi_home }}
      when: "'nifi_new' in group_names"
```

Run:

```bash
[root@sy-afp-bigdata01 ansible]# ansible-playbook nifi/rollback.yml
```

4. Verification

Open the following pages:

- https://10.116.201.63:9443/nifi/

- https://10.116.201.64:9443/nifi/

- https://10.116.201.65:9443/nifi/

- https://10.116.201.43:9443/nifi/

- https://10.116.201.44:9443/nifi/

- https://10.116.201.52:9443/nifi/

- https://10.116.201.53:9443/nifi/

- https://10.116.201.54:9443/nifi/

Make sure you can log in on every page with the same credentials (admin/Afp@20240820).

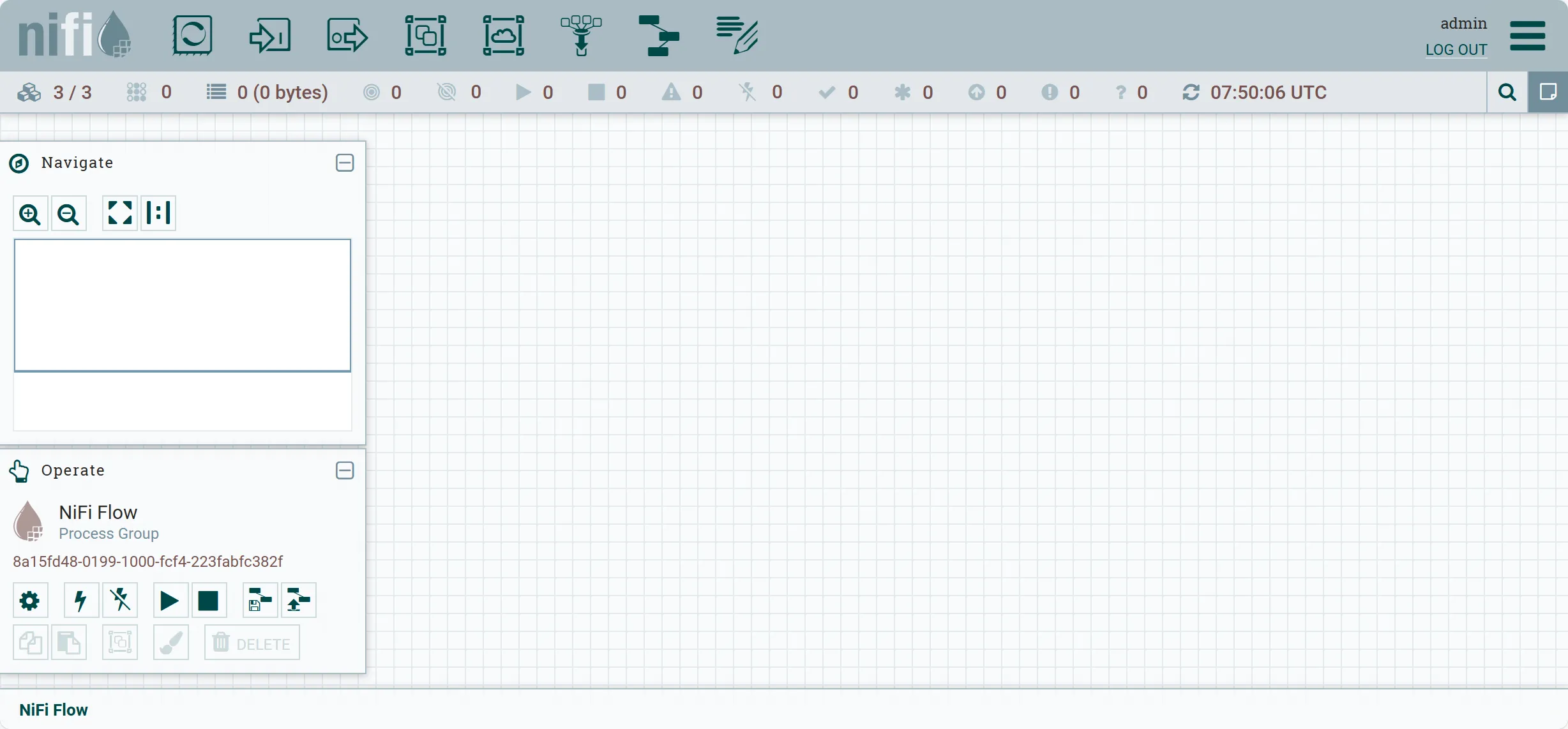

Note: the screenshot is from the test environment; in production the node count in the top-left corner reads 8/8.
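Besides opening each page, the node count can be read from the REST API. A hedged sketch: it assumes the NiFi 1.x endpoints `POST /nifi-api/access/token` (form fields `username` and `password`, returning a bearer token) and `GET /nifi-api/flow/cluster/summary`, whose JSON, as I understand it, carries the same `connectedNodes` string (e.g. `8 / 8`) the UI shows; confirm the field names against the REST API documentation for your version. `-k` skips certificate verification because the certificates come from the self-signed tls-toolkit CA. Helper names and the `NIFI_PASSWORD` variable are hypothetical.

```bash
# connected_nodes: read cluster-summary JSON on stdin, print the "connectedNodes" value
connected_nodes() {
    sed -n 's/.*"connectedNodes"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# cluster_summary URL USER PASSWORD: obtain a token, then print the cluster summary JSON
cluster_summary() {
    token=$(curl -sk -X POST "$1/nifi-api/access/token" \
        --data-urlencode "username=$2" --data-urlencode "password=$3") || return 1
    curl -sk -H "Authorization: Bearer $token" "$1/nifi-api/flow/cluster/summary"
}

# Usage:
# cluster_summary https://10.116.201.63:9443 admin "$NIFI_PASSWORD" | connected_nodes
```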

Zhejiang Public Security Network Record No. 33010602011771
