Lightweight Logging System Notes

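Part 0. Unified Logging Platform Options

1. Option 1: ELK

- ElasticSearch: storage
- Logstash: agent
- Kibana: visualization

2. Option 2: LAG

- Loki: storage
- Alloy/Promtail: agent
- Grafana: visualization

3. Option 3: Big Data

- Hadoop/ClickHouse: storage
- Flume: agent
- Superset: visualization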

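Part 1. Loki

1. Introduction

Loki is a horizontally scalable, highly available, multi-tenant log aggregation system inspired by Prometheus. It differs from Prometheus in that it focuses on logs rather than metrics, and it collects logs via push rather than pull.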

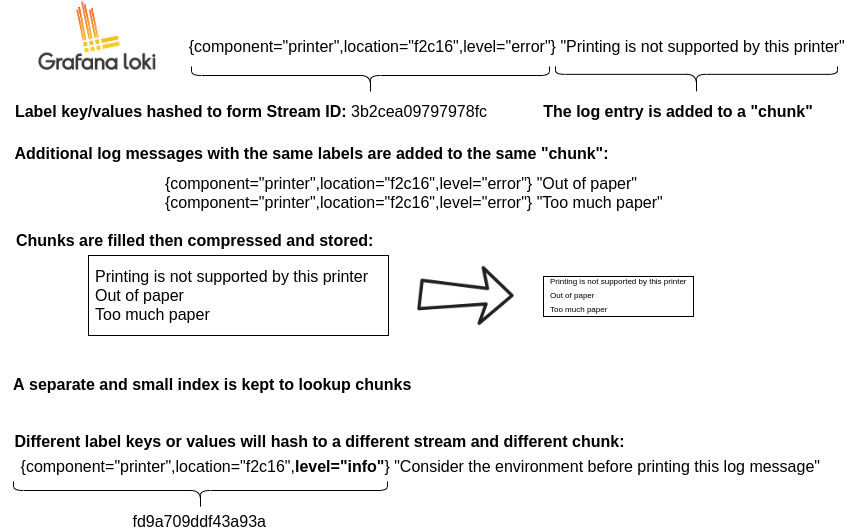

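Loki is designed to be cost-effective and highly scalable. Unlike other logging systems, Loki does not index the contents of the logs. It indexes only metadata about the logs, stored as a set of labels for each log stream.

A log stream is a set of logs that share the same labels. Labels help Loki find a log stream in your data store, so a high-quality set of labels is key to efficient query execution.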
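
To make the notion of a stream concrete, here is a minimal Python sketch. It is only a toy model, not Loki's implementation: the function name `group_into_streams` and the sample entries are hypothetical. It shows that two entries belong to the same stream exactly when their full label sets are equal, regardless of the order in which the labels were written.

```python
from collections import defaultdict


def group_into_streams(entries):
    """Group (labels, line) pairs into streams keyed by their full label set."""
    streams = defaultdict(list)
    for labels, line in entries:
        # A stream is identified by the complete set of label pairs,
        # so the key must not depend on the order of the labels.
        key = tuple(sorted(labels.items()))
        streams[key].append(line)
    return dict(streams)


# Hypothetical sample entries: the first two share the same label set.
entries = [
    ({"container": "flog", "level": "info"}, "GET /index.html 200"),
    ({"level": "info", "container": "flog"}, "GET /about.html 200"),
    ({"container": "flog", "level": "error"}, "GET /missing 404"),
]

streams = group_into_streams(entries)
for key, lines in streams.items():
    print(dict(key), len(lines))
```

Every distinct label combination creates a new stream, which is why label values with many possible values multiply the number of streams.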

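The log data is then compressed and stored in chunks in an object store such as Amazon Simple Storage Service (S3) or Google Cloud Storage (GCS), or, for development and proof-of-concept work, on the filesystem. A small index and highly compressed chunks simplify operation and significantly lower the cost of running Loki.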

2. Log Structure

Loki stores data in two parts:

- index: stores the Loki labels, such as log level, source, and group

- chunk: stores the log entries themselves
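
The split between index and chunks can be sketched with a toy in-memory store. This is an illustration under stated assumptions, not Loki's on-disk format: the class `ToyStore` and its methods are hypothetical, and gzip merely stands in for whatever compression the real chunks use. The point is that a query consults only the small label index and then decompresses just the chunks it points to.

```python
import gzip


class ToyStore:
    """Toy model: a small label index pointing at compressed chunks."""

    def __init__(self):
        self.index = {}   # frozenset of label pairs -> list of chunk ids
        self.chunks = []  # compressed blobs holding the log lines

    def write_chunk(self, labels, lines):
        blob = gzip.compress("\n".join(lines).encode("utf-8"))
        self.chunks.append(blob)
        key = frozenset(labels.items())
        self.index.setdefault(key, []).append(len(self.chunks) - 1)

    def read(self, selector):
        """Return the lines of every stream whose labels include the selector."""
        wanted = set(selector.items())
        lines = []
        for key, chunk_ids in self.index.items():
            if wanted <= key:  # the log content itself is never searched here
                for chunk_id in chunk_ids:
                    text = gzip.decompress(self.chunks[chunk_id]).decode("utf-8")
                    lines.extend(text.split("\n"))
        return lines


store = ToyStore()
store.write_chunk({"container": "flog", "level": "info"}, ["started", "listening"])
store.write_chunk({"container": "flog", "level": "error"}, ["disk full"])
print(store.read({"level": "error"}))
```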

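3. Architecture

Agent:

An agent or client, for example Grafana Alloy, or Promtail, which is distributed with Loki. The agent scrapes logs, turns them into streams by adding labels, and pushes the streams to Loki through an HTTP API.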
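
As a rough illustration of what an agent sends, the sketch below builds a JSON body in the shape accepted by Loki's push endpoint (`/loki/api/v1/push`): a list of streams, each with a label set and `[timestamp in nanoseconds as a string, line]` pairs. The helper name `build_push_payload` and the sample labels are hypothetical. The `X-Scope-OrgID` header and the value `tenant1` are the ones used by the deployment later in these notes. Nothing is actually sent here.

```python
import json
import time


def build_push_payload(labels, lines, now_ns=None):
    """Build a push request body: one stream carrying the given lines."""
    if now_ns is None:
        now_ns = time.time_ns()
    # Each value is [<unix epoch in nanoseconds, as a string>, <log line>].
    values = [[str(now_ns + i), line] for i, line in enumerate(lines)]
    return {"streams": [{"stream": dict(labels), "values": values}]}


payload = build_push_payload(
    {"container": "demo"},  # hypothetical label set
    ["hello", "world"],
    now_ns=1_700_000_000_000_000_000,
)
body = json.dumps(payload)

# Headers an agent would attach when POSTing `body` to the push endpoint;
# X-Scope-OrgID selects the tenant in a multi-tenant setup.
headers = {"Content-Type": "application/json", "X-Scope-OrgID": "tenant1"}
print(body)
```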

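Loki main server:

Responsible for ingesting and storing logs and for processing queries. It can be deployed in three different configurations; see the deployment modes documentation for details.

Grafana:

Used to query and display log data. You can also query logs from the command line with LogCLI or by calling the Loki API directly.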
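
For the "Loki API directly" route, a query is an HTTP GET against `/loki/api/v1/query_range` with the LogQL expression and a time range as query parameters. The sketch below only builds such a URL. The function name, the base URL `http://localhost:3100`, and the sample selector are assumptions made for illustration, and a multi-tenant deployment would additionally need the `X-Scope-OrgID` header on the request.

```python
from urllib.parse import urlencode


def build_query_range_url(base_url, logql, start_ns, end_ns, limit=100):
    """Build a URL for a range query against the Loki HTTP API."""
    params = urlencode({
        "query": logql,     # the LogQL expression, URL-encoded
        "start": start_ns,  # start of the range, nanosecond epoch
        "end": end_ns,      # end of the range, nanosecond epoch
        "limit": limit,     # maximum number of entries to return
    })
    return f"{base_url}/loki/api/v1/query_range?{params}"


url = build_query_range_url(
    "http://localhost:3100",  # assumed address of the Loki endpoint
    '{container="flog"}',
    1_700_000_000_000_000_000,
    1_700_000_060_000_000_000,
)
print(url)
```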

Part 2. Alloy

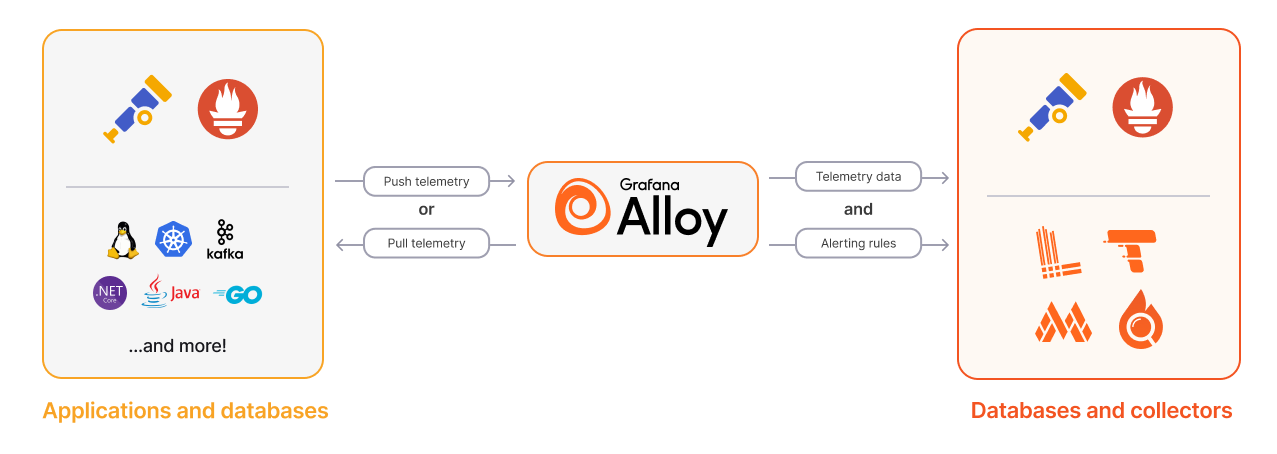

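1. Architecture

Alloy is a versatile observability collector that can ingest logs in a variety of formats and send them to Loki. Alloy is the recommended primary method for sending logs to Loki, because it offers a more robust and feature-rich solution for building highly scalable and reliable observability pipelines.

2. Components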

| Type | Component |
|---|---|
| Collector | loki.source.api |
| Collector | loki.source.awsfirehose |
| Collector | loki.source.azure_event_hubs |
| Collector | loki.source.cloudflare |
| Collector | loki.source.docker |
| Collector | loki.source.file |
| Collector | loki.source.gcplog |
| Collector | loki.source.gelf |
| Collector | loki.source.heroku |
| Collector | loki.source.journal |
| Collector | loki.source.kafka |
| Collector | loki.source.kubernetes |
| Collector | loki.source.kubernetes_events |
| Collector | loki.source.podlogs |
| Collector | loki.source.syslog |
| Collector | loki.source.windowsevent |
| Collector | otelcol.receiver.loki |
| Transformer | loki.relabel |
| Transformer | loki.process |
| Writer | loki.write |
| Writer | otelcol.exporter.loki |
| Writer | otelcol.exporter.logging |
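
The three component types in the table chain together: a collector produces entries, a transformer rewrites or drops them, and a writer ships them. The Python sketch below is a toy model of that wiring and not Alloy's API. All class and function names are hypothetical, with `forward_to` borrowed from the configuration shown later in these notes.

```python
class Writer:
    """Stands in for a writer such as loki.write: the end of the pipeline."""

    def __init__(self):
        self.sent = []

    def receive(self, entry):
        self.sent.append(entry)


class Transformer:
    """Stands in for a transformer such as loki.process."""

    def __init__(self, fn, forward_to):
        self.fn = fn
        self.forward_to = forward_to

    def receive(self, entry):
        result = self.fn(entry)
        if result is not None:  # returning None drops the entry
            for component in self.forward_to:
                component.receive(result)


class Collector:
    """Stands in for a collector such as loki.source.file."""

    def __init__(self, labels, forward_to):
        self.labels = labels
        self.forward_to = forward_to

    def emit(self, line):
        entry = {"labels": dict(self.labels), "line": line}
        for component in self.forward_to:
            component.receive(entry)


def drop_debug(entry):
    """Example transformation: discard lines containing DEBUG."""
    return None if "DEBUG" in entry["line"] else entry


writer = Writer()
process = Transformer(drop_debug, forward_to=[writer])
source = Collector({"container": "demo"}, forward_to=[process])

for line in ["INFO started", "DEBUG tick", "ERROR failed"]:
    source.emit(line)

print([entry["line"] for entry in writer.sent])
```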

Part 3. Deployment Practice

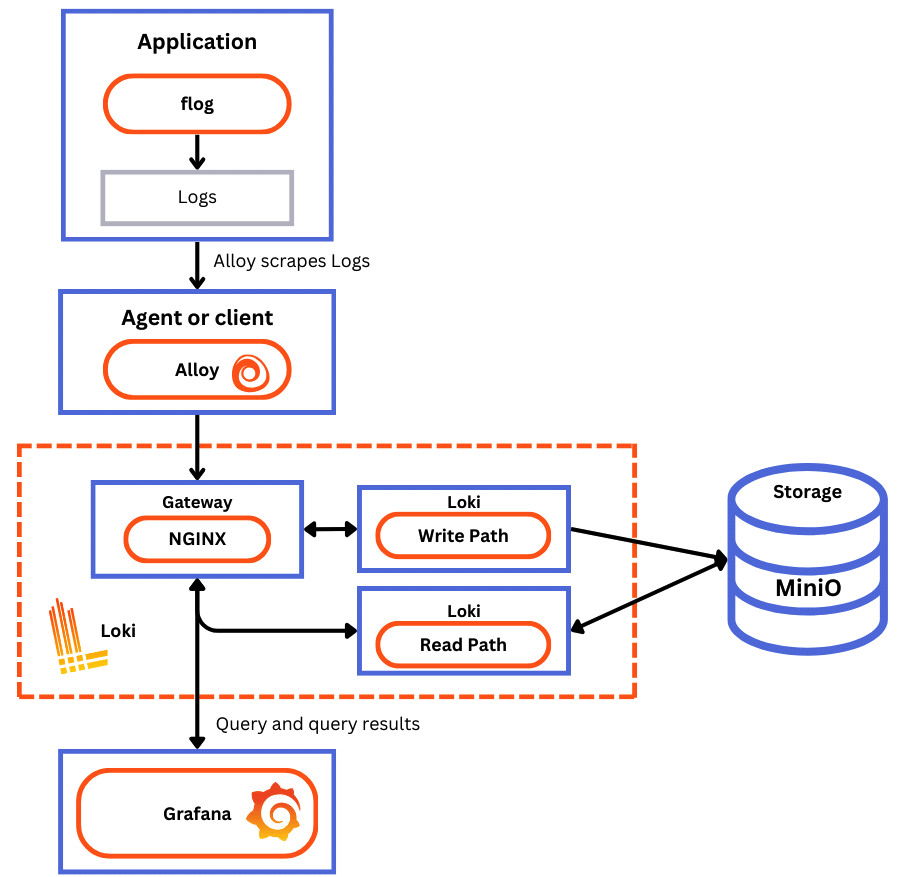

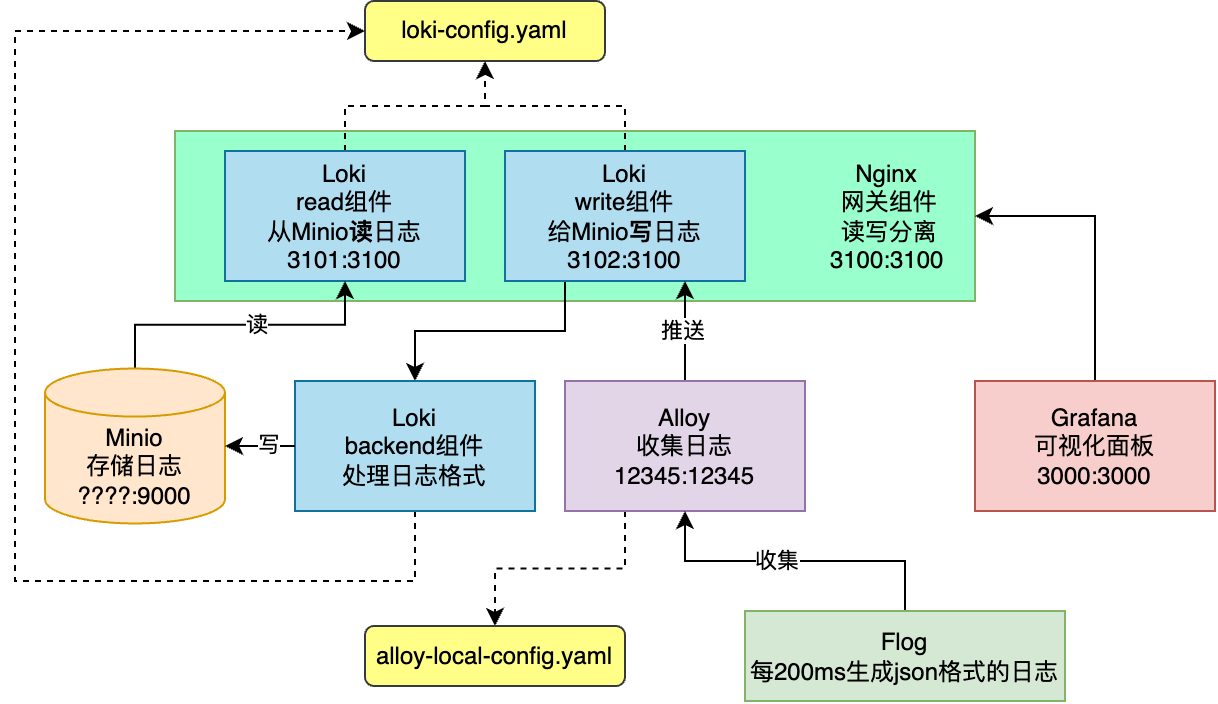

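0. Architecture Design

Deploy the following services with Docker Compose to quickly try out the Loki ecosystem:

- flog: generates log lines. flog is a log generator for common log formats.

- Grafana Alloy: scrapes the log lines from flog and pushes them to Loki through the gateway.

- Gateway (nginx): receives requests and forwards them to the appropriate container based on the request URL.

- Loki read component: runs a query frontend and a querier.

- Loki write component: runs a distributor and an ingester.

- Loki backend component: runs an index gateway, compactor, ruler, Bloom compactor (experimental), and Bloom gateway (experimental).

- Minio: used by Loki to store its index and chunks.

- Grafana: provides visualization of the log lines captured in Loki.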

1. Preparation

# 1. Create a working directory
mkdir evaluate-loki
cd evaluate-loki

# 2. Download the default configuration files
wget https://raw.githubusercontent.com/grafana/loki/main/examples/getting-started/loki-config.yaml -O loki-config.yaml
wget https://raw.githubusercontent.com/grafana/loki/main/examples/getting-started/alloy-local-config.yaml -O alloy-local-config.yaml
wget https://raw.githubusercontent.com/grafana/loki/main/examples/getting-started/docker-compose.yaml -O docker-compose.yaml

If the configuration files cannot be downloaded, copy them from the listings below:

4.1 loki-config.yaml

---
server:
  http_listen_address: 0.0.0.0
  http_listen_port: 3100

memberlist:
  join_members: ["read", "write", "backend"]
  dead_node_reclaim_time: 30s
  gossip_to_dead_nodes_time: 15s
  left_ingesters_timeout: 30s
  bind_addr: ['0.0.0.0']
  bind_port: 7946
  gossip_interval: 2s

schema_config:
  configs:
    - from: 2023-01-01
      store: tsdb
      object_store: s3
      schema: v13
      index:
        prefix: index_
        period: 24h

common:
  path_prefix: /loki
  replication_factor: 1
  compactor_address: http://backend:3100
  storage:
    s3:
      endpoint: minio:9000
      insecure: true
      bucketnames: loki-data
      access_key_id: loki
      secret_access_key: supersecret
      s3forcepathstyle: true
  ring:
    kvstore:
      store: memberlist

ruler:
  storage:
    s3:
      bucketnames: loki-ruler

compactor:
  working_directory: /tmp/compactor

4.2 alloy-local-config.yaml

// Discover Docker containers, refreshing every 5s
discovery.docker "flog_scrape" {
  host             = "unix:///var/run/docker.sock"
  refresh_interval = "5s"
}

// Relabeling rules applied to the Docker logs
discovery.relabel "flog_scrape" {
  targets = []

  rule {
    // Relabel __meta_docker_container_name as container
    // Example: __meta_docker_container_name="/xxxxx"
    // After relabeling: container="xxxxx"
    source_labels = ["__meta_docker_container_name"]
    regex         = "/(.*)"
    target_label  = "container"
  }
}

loki.source.docker "flog_scrape" {
  host             = "unix:///var/run/docker.sock"        // the source is Docker
  targets          = discovery.docker.flog_scrape.targets // targets to collect from
  forward_to       = [loki.write.default.receiver]        // where entries are forwarded
  relabel_rules    = discovery.relabel.flog_scrape.rules  // rules applied to the logs
  refresh_interval = "5s"                                 // refresh every 5s
}

loki.write "default" {
  endpoint {
    url       = "http://gateway:3100/loki/api/v1/push"
    tenant_id = "tenant1"
  }
  external_labels = {}
}
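
The relabel rule in this configuration can be checked by hand. The Python sketch below mimics a Prometheus-style `replace` rule under two assumptions that match the usual relabeling semantics: the regex must match the whole source value, and the default replacement is the first capture group. The function name `relabel` is hypothetical.

```python
import re


def relabel(labels, source_label, regex, target_label, replacement=r"\1"):
    """Apply one replace-style relabel rule and return the new label set."""
    value = labels.get(source_label, "")
    match = re.fullmatch(regex, value)  # the regex is anchored at both ends
    if match is None:
        return dict(labels)  # no match: labels are left unchanged
    relabeled = dict(labels)
    relabeled[target_label] = match.expand(replacement)
    return relabeled


discovered = {"__meta_docker_container_name": "/evaluate-loki-flog-1"}
result = relabel(discovered, "__meta_docker_container_name", "/(.*)", "container")
print(result["container"])
```

The leading slash that Docker puts in front of container names is what the `/(.*)` pattern strips, which is why the queries later in these notes use `container="evaluate-loki-flog-1"` without it.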

4.3 docker-compose.yaml

---
version: "3"

networks:
  loki:

services:
  # -------------------- Loki read component --------------------
  read:
    image: grafana/loki:3.1.0
    command: "-config.file=/etc/loki/config.yaml -target=read"
    ports:
      - 3101:3100
      - 7946
      - 9095
    volumes:
      - ./loki-config.yaml:/etc/loki/config.yaml
    depends_on:
      - minio
    healthcheck:
      test: [ "CMD-SHELL", "wget --no-verbose --tries=1 --spider http://localhost:3100/ready || exit 1" ]
      interval: 10s
      timeout: 5s
      retries: 5
    networks: &loki-dns
      loki:
        aliases:
          - loki

  # -------------------- Loki write component --------------------
  write:
    image: grafana/loki:3.1.0
    command: "-config.file=/etc/loki/config.yaml -target=write"
    ports:
      - 3102:3100
      - 7946
      - 9095
    volumes:
      - ./loki-config.yaml:/etc/loki/config.yaml
    healthcheck:
      test: [ "CMD-SHELL", "wget --no-verbose --tries=1 --spider http://localhost:3100/ready || exit 1" ]
      interval: 10s
      timeout: 5s
      retries: 5
    depends_on:
      - minio
    networks:
      <<: *loki-dns

  # -------------------- Alloy --------------------
  alloy:
    image: grafana/alloy:latest
    volumes:
      - ./alloy-local-config.yaml:/etc/alloy/config.alloy:ro
      - /var/run/docker.sock:/var/run/docker.sock
    command: run --server.http.listen-addr=0.0.0.0:12345 --storage.path=/var/lib/alloy/data /etc/alloy/config.alloy
    ports:
      - 12345:12345
    depends_on:
      - gateway
    networks:
      - loki

  # -------------------- Minio --------------------
  minio:
    image: minio/minio
    entrypoint:
      - sh
      - -euc
      - |
        mkdir -p /data/loki-data && \
        mkdir -p /data/loki-ruler && \
        minio server /data
    environment:
      - MINIO_ROOT_USER=loki
      - MINIO_ROOT_PASSWORD=supersecret
      - MINIO_PROMETHEUS_AUTH_TYPE=public
      - MINIO_UPDATE=off
    ports:
      - 9000
    volumes:
      - ./.data/minio:/data
    healthcheck:
      test: [ "CMD", "curl", "-f", "http://localhost:9000/minio/health/live" ]
      interval: 15s
      timeout: 20s
      retries: 5
    networks:
      - loki

  # -------------------- Grafana --------------------
  grafana:
    image: grafana/grafana:latest
    environment:
      - GF_PATHS_PROVISIONING=/etc/grafana/provisioning
      - GF_AUTH_ANONYMOUS_ENABLED=true
      - GF_AUTH_ANONYMOUS_ORG_ROLE=Admin
    depends_on:
      - gateway
    entrypoint:
      - sh
      - -euc
      - |
        mkdir -p /etc/grafana/provisioning/datasources
        cat <<EOF > /etc/grafana/provisioning/datasources/ds.yaml
        apiVersion: 1
        datasources:
          - name: Loki
            type: loki
            access: proxy
            url: http://gateway:3100
            jsonData:
              httpHeaderName1: "X-Scope-OrgID"
            secureJsonData:
              httpHeaderValue1: "tenant1"
        EOF
        /run.sh
    ports:
      - "3000:3000"
    healthcheck:
      test: [ "CMD-SHELL", "wget --no-verbose --tries=1 --spider http://localhost:3000/api/health || exit 1" ]
      interval: 10s
      timeout: 5s
      retries: 5
    networks:
      - loki

  # -------------------- Loki backend component --------------------
  backend:
    image: grafana/loki:3.1.0
    volumes:
      - ./loki-config.yaml:/etc/loki/config.yaml
    ports:
      - "3100"
      - "7946"
    command: "-config.file=/etc/loki/config.yaml -target=backend -legacy-read-mode=false"
    depends_on:
      - gateway
    networks:
      - loki

  # -------------------- Nginx gateway --------------------
  gateway:
    image: nginx:latest
    depends_on:
      - read
      - write
    entrypoint:
      - sh
      - -euc
      - |
        cat <<EOF > /etc/nginx/nginx.conf
        user nginx;
        worker_processes 5; ## Default: 1

        events {
          worker_connections 1000;
        }

        http {
          resolver 127.0.0.11;

          server {
            listen 3100;

            location = / {
              return 200 'OK';
              auth_basic off;
            }

            location = /api/prom/push {
              proxy_pass http://write:3100\$$request_uri;
            }

            location = /api/prom/tail {
              proxy_pass http://read:3100\$$request_uri;
              proxy_set_header Upgrade \$$http_upgrade;
              proxy_set_header Connection "upgrade";
            }

            location ~ /api/prom/.* {
              proxy_pass http://read:3100\$$request_uri;
            }

            location = /loki/api/v1/push {
              proxy_pass http://write:3100\$$request_uri;
            }

            location = /loki/api/v1/tail {
              proxy_pass http://read:3100\$$request_uri;
              proxy_set_header Upgrade \$$http_upgrade;
              proxy_set_header Connection "upgrade";
            }

            location ~ /loki/api/.* {
              proxy_pass http://read:3100\$$request_uri;
            }
          }
        }
        EOF
        /docker-entrypoint.sh nginx -g "daemon off;"
    ports:
      - "3100:3100"
    healthcheck:
      test: ["CMD", "service", "nginx", "status"]
      interval: 10s
      timeout: 5s
      retries: 5
    networks:
      - loki

  # -------------------- Flog --------------------
  flog:
    image: mingrammer/flog
    command: -f json -d 200ms -l
    networks:
      - loki

Taken together, the docker-compose file implements the deployment architecture described under "0. Architecture Design".
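
The gateway is the piece that ties the read and write paths together, so its routing is worth spelling out. The sketch below re-expresses the nginx `location` rules from the gateway service as a Python function, assuming nginx's usual precedence: exact (`=`) locations first, then regex (`~`) locations in the order they are written. The function name `route` and the return values are hypothetical labels for the target containers.

```python
import re

# Exact-match locations (nginx "location = ...") are checked first.
EXACT_ROUTES = {
    "/": "gateway",  # answered by nginx itself with 200 OK
    "/api/prom/push": "write",
    "/api/prom/tail": "read",
    "/loki/api/v1/push": "write",
    "/loki/api/v1/tail": "read",
}

# Regex locations (nginx "location ~ ...") are tried in order afterwards.
REGEX_ROUTES = [
    (re.compile(r"/api/prom/.*"), "read"),
    (re.compile(r"/loki/api/.*"), "read"),
]


def route(path):
    """Return which container handles a request path, or None if no rule matches."""
    if path in EXACT_ROUTES:
        return EXACT_ROUTES[path]
    for pattern, backend in REGEX_ROUTES:
        if pattern.search(path):
            return backend
    return None


for path in ["/loki/api/v1/push", "/loki/api/v1/query_range", "/loki/api/v1/tail"]:
    print(path, "->", route(path))
```

In other words, only the two push endpoints reach the write component. Every other API path, including queries and tailing, is served by the read component.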

2. Deployment

docker compose up -d
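
After the stack starts, the containers take a while to become ready. The compose file's health checks poll each Loki component's `/ready` endpoint, and the same check can be run from the host through the published ports 3101 (read) and 3102 (write). The Python sketch below is one possible way to wait for that. The function names are hypothetical and `localhost` is an assumption about where Docker is running.

```python
import time
import urllib.error
import urllib.request


def http_ok(url, timeout=2.0):
    """Return True if the URL answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False  # refused connection, timeout, or non-2xx status


def wait_until_ready(urls, check=http_ok, attempts=5, delay=10.0, sleep=time.sleep):
    """Poll the URLs until all pass the check or the attempts run out."""
    pending = list(urls)
    for _ in range(attempts):
        pending = [url for url in pending if not check(url)]
        if not pending:
            return True
        sleep(delay)
    return False


# Example call (not executed here, since it needs the running stack):
# wait_until_ready(["http://localhost:3101/ready", "http://localhost:3102/ready"])
```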

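3. Viewing Logs

Logs can be viewed with LogCLI or through the Grafana UI.

To query the Loki data source from Grafana:

- Open Grafana at http://<ip>:3000; the Loki data source is already provisioned

- Click Explore

- Switch to Code mode and write a LogQL query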

Part 4. Grafana

Grafana: http://<ip>:3000

Official examples: https://grafana.com/docs/loki/latest/query/query_examples/

1. Label Matching

# Show logs whose container label is evaluate-loki-flog-1

{container="evaluate-loki-flog-1"}

{container="evaluate-loki-grafana-1"}

2. Filtering on a Value

# Show logs whose container label is evaluate-loki-flog-1 and whose JSON status field is 404

{container="evaluate-loki-flog-1"} | json | status=`404`
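
What the `| json | status=...` stages do to each line can be approximated in Python: parse the line as JSON, then keep it only if the extracted `status` value, compared as a string, equals `404`. This is a rough emulation for intuition, not LogQL's implementation. The function name is hypothetical and the sample lines are made up, shaped loosely like JSON access logs.

```python
import json


def json_label_filter(lines, label, expected):
    """Keep lines whose JSON field `label`, as a string, equals `expected`."""
    matched = []
    for line in lines:
        try:
            fields = json.loads(line)
        except json.JSONDecodeError:
            continue  # unparsable lines can never satisfy the label filter
        if isinstance(fields, dict) and str(fields.get(label)) == expected:
            matched.append(line)
    return matched


# Hypothetical sample lines.
sample = [
    '{"method": "GET", "request": "/a", "status": 200}',
    '{"method": "POST", "request": "/b", "status": 404}',
    'not json at all',
]
print(json_label_filter(sample, "status", "404"))
```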

3. Aggregation

sum by(container) (rate({container="evaluate-loki-flog-1"} | json | status=`404` [$__auto]))
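
The query above counts the matching entries per second over a time window and then sums the result per `container`. In Grafana, `$__auto` is filled in with an automatically chosen window. The Python sketch below reproduces the arithmetic for a single window: number of entries inside the window divided by the window length in seconds, grouped by container. The function name and the sample timestamps are hypothetical.

```python
from collections import defaultdict


def rate_by_container(entries, window_start, window_end):
    """Entries per second inside (window_start, window_end], per container."""
    seconds = window_end - window_start
    counts = defaultdict(int)
    for container, timestamp in entries:
        if window_start < timestamp <= window_end:
            counts[container] += 1
    return {container: count / seconds for container, count in counts.items()}


# Hypothetical (container, timestamp in seconds) pairs for matching 404 lines.
matching = [
    ("evaluate-loki-flog-1", 1),
    ("evaluate-loki-flog-1", 3),
    ("evaluate-loki-flog-1", 4),
    ("evaluate-loki-flog-1", 7),
    ("evaluate-loki-flog-1", 9),
    ("evaluate-loki-flog-1", 15),  # outside the window, not counted
]
rates = rate_by_container(matching, window_start=0, window_end=10)
print(rates)
```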

4. Other Examples

{container="evaluate-loki-flog-1"}

{container="evaluate-loki-flog-1"} |= "GET"

{container="evaluate-loki-flog-1"} |= "POST"

{container="evaluate-loki-flog-1"} | json | status="401"

{container="evaluate-loki-flog-1"} != "401"
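
The `|=` and `!=` operators in these examples are plain substring filters on the raw line, which differs from the parsed `status="401"` label filter. A minimal Python sketch of the difference, with hypothetical function names and made-up sample lines:

```python
def line_contains(lines, needle):
    """Emulates `|= "needle"`: keep lines containing the substring."""
    return [line for line in lines if needle in line]


def line_not_contains(lines, needle):
    """Emulates `!= "needle"`: keep lines that do not contain the substring."""
    return [line for line in lines if needle not in line]


# Hypothetical sample lines.
sample = [
    '{"method": "GET", "status": 200, "bytes": 4010}',
    '{"method": "POST", "status": 401, "bytes": 12}',
    '{"method": "GET", "status": 404, "bytes": 77}',
]
print(line_contains(sample, "GET"))
print(line_not_contains(sample, "401"))
```

Because the match is on the whole line, `!= "401"` also drops the first sample line, whose `bytes` value happens to contain `401`. When the intent is to match a specific field, parsing with `| json` and filtering on the label is the more precise choice.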

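Glad this article was useful to you (*^_^*). If you repost it, please remember to credit the source (☆▽☆):

This article is from cnblogs (博客园), author: 雪与锄, original link: https://www.cnblogs.com/corianderfiend/articles/18696225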
