Creating a StorageClass on an NFS Service to Provision PVs Automatically

Preface

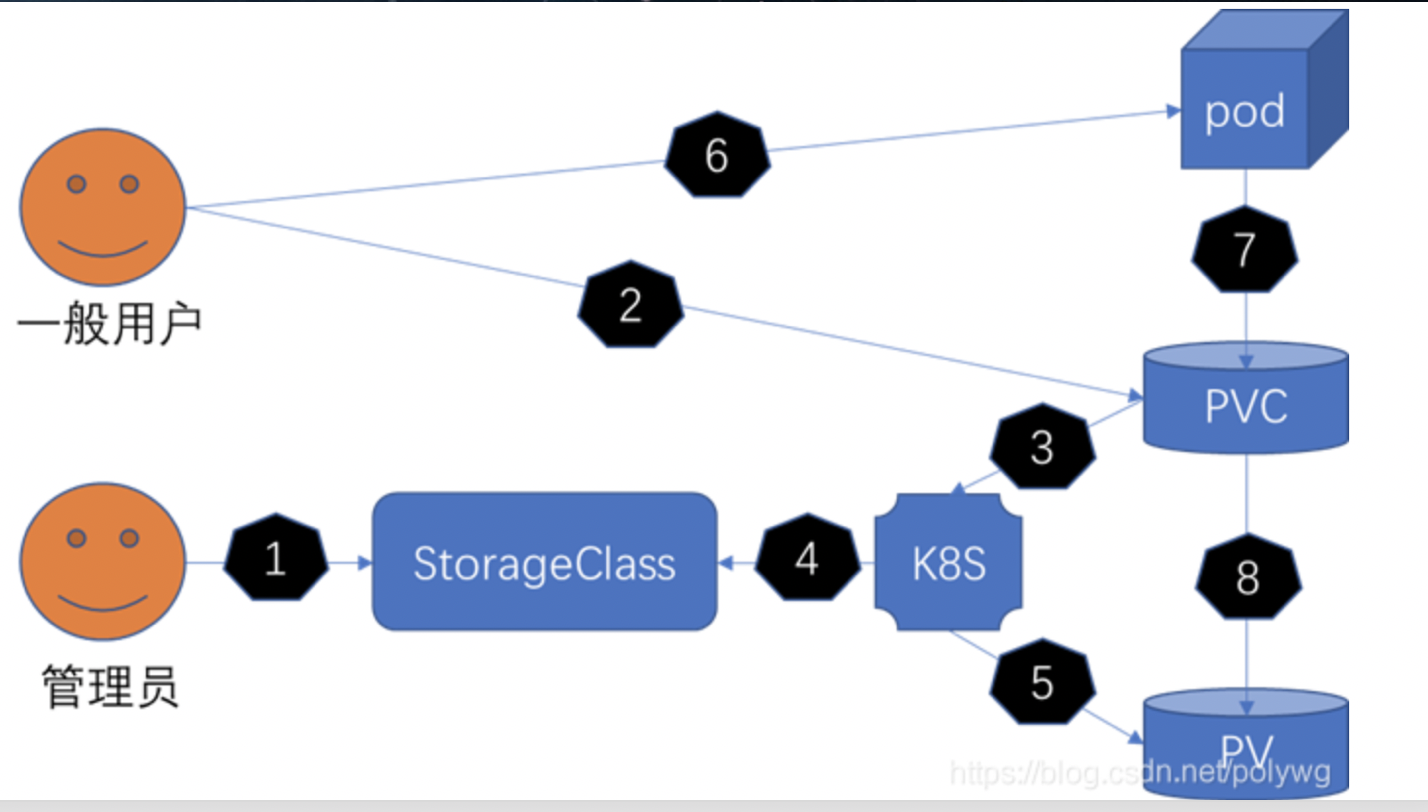

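StorageClass removes the need to create PVs by hand. Without it, every time a PVC is created to request storage, a matching PV has to be created manually to satisfy that PVC.

Instead, a mechanism can create the persistent volume (PV) dynamically, based on the amount of storage the user declares in the PVC. In k8s, StorageClass is what implements this dynamic provisioning of persistent storage.

How it works:

The administrator first creates a StorageClass. Business users then create PVCs that reference it according to the needs of their own workloads, and the matching PVs are provisioned for them.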

I. Set up the NFS service

Here I use the k8s master01 node as the NFS server.

[root@k8s-master01 ~]# yum install -y nfs-utils    # install on every master node

[root@k8s-master01 ~]# mkdir -p /data/volumes/v1

[root@k8s-master01 ~]# systemctl start rpcbind

[root@k8s-master01 ~]# systemctl status rpcbind

[root@k8s-master01 ~]# systemctl enable --now nfs-server

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.ser

Create the shared directories on the master node:

#mkdir -p /data/volumes/{v1,v2,v3}

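Edit /etc/exports on the master node to share the directory with the 192.168.126.0/24 subnet (adjust the subnet to your own environment; the exports file must be configured on every master node):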

#vim /etc/exports

/data/volumes/v1 192.168.126.0/24(rw,no_root_squash,no_all_squash)

Publish the exports:

#exportfs -arv

exporting 192.168.126.0/24:/data/volumes/v1

Verify:

#showmount -e

Export list for k8s-master01:

/data/volumes/v1 192.168.126.0/24
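The steps above create three directories (v1, v2, v3) but export only v1. If the other directories should be shared as well, the entries could be generated rather than typed by hand. The following is a minimal sketch; the function names `exports_entry` and `exports_entries` are made up for this illustration, and the default options simply repeat the ones used in this article.

```shell
# Build one /etc/exports entry for a directory and a client network.
# The default option string mirrors the one used in this article.
exports_entry() (
    dir=$1
    network=$2
    options=${3:-rw,no_root_squash,no_all_squash}
    printf '%s %s(%s)\n' "$dir" "$network" "$options"
)

# Print one entry per subdirectory of a common base path.
exports_entries() (
    base=$1
    network=$2
    shift 2
    for name in "$@"; do
        exports_entry "$base/$name" "$network"
    done
)
```

One possible use, as root on the NFS server, is `exports_entries /data/volumes 192.168.126.0/24 v2 v3 >> /etc/exports` followed by `exportfs -arv` to republish.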

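II. Create the StorageClass

Create the StorageClass. When a PVC references it, the StorageClass calls the NFS provisioner to provision a volume, and the resulting PV is bound to that PVC.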

#vim class.yaml

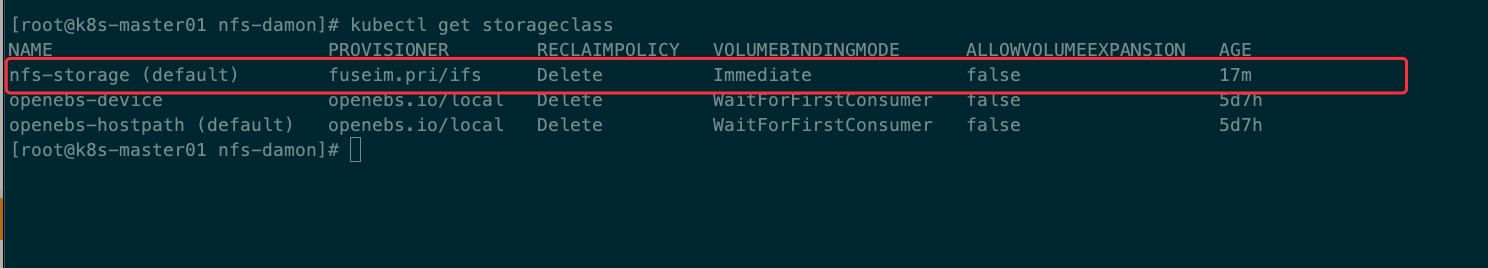

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: nfs-storage
      annotations:
        storageclass.beta.kubernetes.io/is-default-class: 'true'
        storageclass.kubernetes.io/is-default-class: 'true'
    # Name of the storage provisioner that serves this class.
    provisioner: fuseim.pri/ifs
    # Reclaim policy. With Delete, the backend storage attached to the PV
    # removes the volume when the PV is released.
    reclaimPolicy: Delete
    # Binding mode. Immediate creates the volume and binds it to the claim
    # as soon as the claim is created. The alternative, WaitForFirstConsumer,
    # creates and binds the volume only when a Pod first uses the claim.
    volumeBindingMode: Immediate

#kubectl apply -f class.yaml

Note: if the StorageClass is not the default one, you can mark it as the default (substitute the name of your own StorageClass):

#kubectl patch storageclass nfs-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

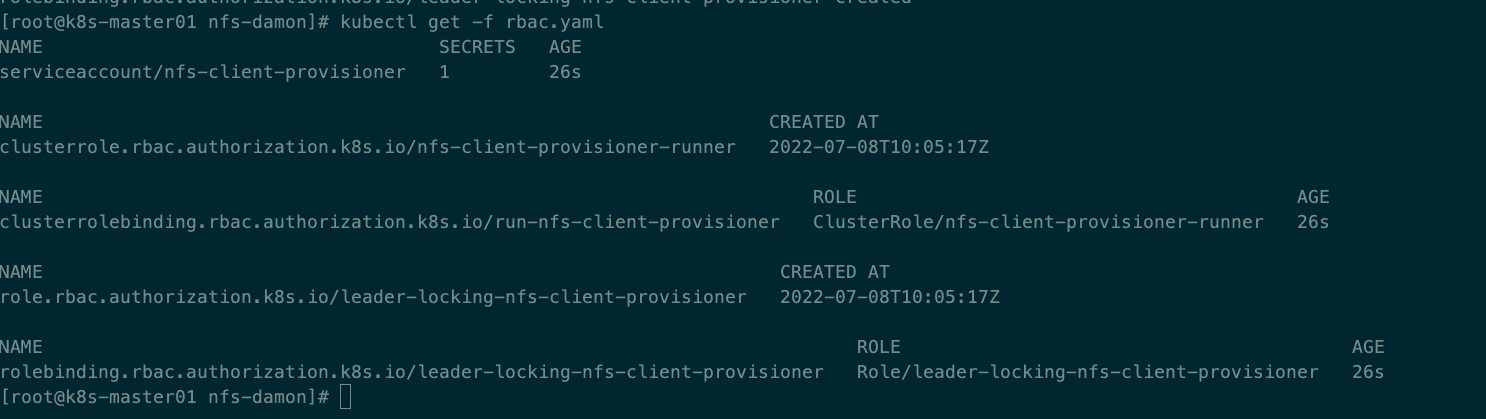
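To confirm which StorageClass currently carries the default annotation, the annotation can be read back with kubectl's JSONPath output. This is a sketch only: the function name `default_storageclasses` is hypothetical, and it assumes `kubectl` is already configured for the cluster.

```shell
# List the StorageClasses whose is-default-class annotation is "true".
# Dots inside the annotation key are escaped for kubectl's JSONPath syntax.
default_storageclasses() (
    kubectl get storageclass \
        -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.annotations.storageclass\.kubernetes\.io/is-default-class}{"\n"}{end}' |
        awk -F '\t' '$2 == "true" { print $1 }'
)
```

Running `default_storageclasses` should print one name per line. More than one name means several classes are marked as default, which is worth cleaning up.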

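III. Create the RBAC permissions

Create a Service Account to control the permissions the NFS provisioner runs with inside the k8s cluster.

RBAC (role-based access control) associates users with permissions through roles. A request passes through authentication, then authorization, then admission control.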

#vim rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

Apply the YAML file written above:

[root@k8s-master01 nfs-damon]# kubectl apply -f rbac.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

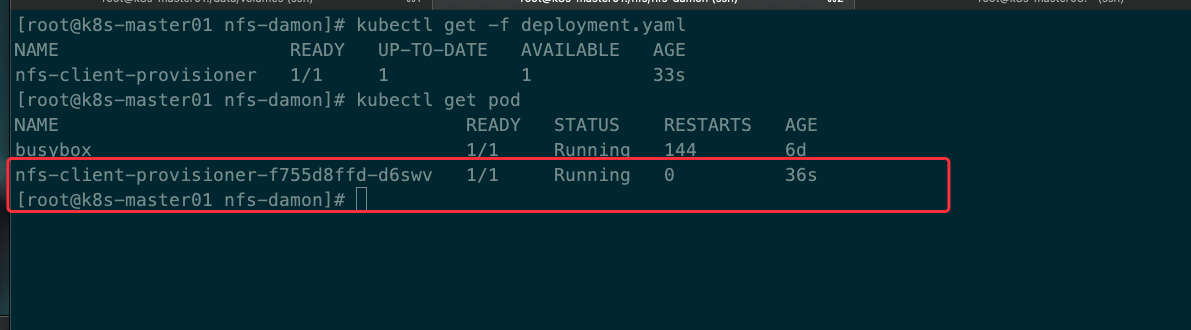
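Once the RBAC objects exist, one way to check that the binding took effect is to ask the API server whether the Service Account may perform the verbs granted on persistentvolumes. The sketch below relies on `kubectl auth can-i` with `--as` impersonation; the function name `check_provisioner_rbac` is invented for this example, and the caller needs permission to impersonate service accounts.

```shell
# Ask the API server whether the provisioner's Service Account may perform
# each verb the ClusterRole grants on persistentvolumes.
check_provisioner_rbac() (
    namespace=${1:-default}
    account=${2:-nfs-client-provisioner}
    subject="system:serviceaccount:${namespace}:${account}"
    status=0
    for verb in get list watch create delete; do
        # "kubectl auth can-i" exits 0 when allowed and non-zero when denied.
        if kubectl auth can-i "$verb" persistentvolumes --as="$subject" >/dev/null 2>&1; then
            echo "ok: $verb persistentvolumes"
        else
            echo "DENIED: $verb persistentvolumes"
            status=1
        fi
    done
    exit "$status"
)
```

Calling `check_provisioner_rbac default nfs-client-provisioner` prints one line per verb and returns non-zero if any verb is denied.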

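IV. Create the PV provisioner (storage plugin)

Create the PV provisioner so that PVs can be created automatically. It does two things: first, it creates a mount point (volume) under the NFS shared directory; second, it creates the PV and associates that PV with the NFS mount.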

#vim deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              #image: quay.io/external_storage/nfs-client-provisioner:latest
              #image: gcr.io/k8s-staging-sig-storage/nfs-subdir-external-provisioner:latest
              # The NFS client image must match the k8s version; if they are
              # incompatible, claims cannot be bound.
              image: gmoney23/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs     # must match the provisioner field of the StorageClass
                - name: NFS_SERVER
                  value: 192.168.126.131    # IP address of the NFS server
                - name: NFS_PATH
                  value: /data/volumes/v1   # shared directory on the NFS server
          volumes:
            - name: nfs-client-root         # must match the volumeMounts name above
              nfs:
                server: 192.168.126.131     # IP address of the NFS server
                path: /data/volumes/v1      # shared directory on the NFS server

[root@k8s-master01 nfs-damon]# kubectl apply -f deployment.yaml

deployment.apps/nfs-client-provisioner created

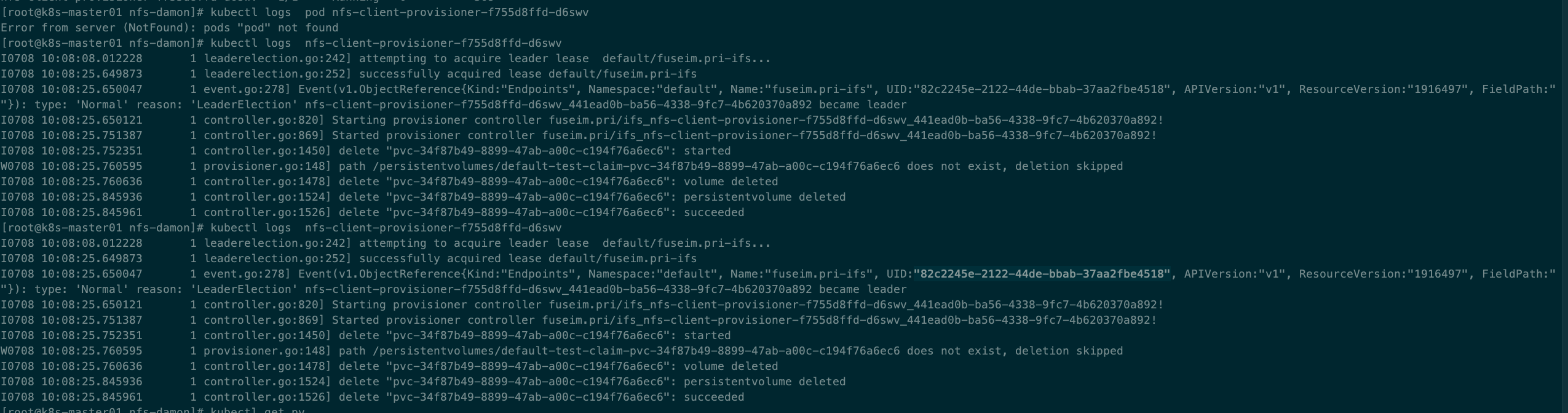
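Because PROVISIONER_NAME in the Deployment has to equal the provisioner field of the StorageClass, a small check can catch a mismatch before anything is applied. This is a rough sketch with hypothetical function names; it uses plain text matching rather than a YAML parser, so it assumes unquoted values written the way they are in class.yaml and deployment.yaml.

```shell
# Read the top-level "provisioner:" value from a StorageClass manifest.
class_provisioner() (
    awk '/^provisioner:/ { print $2; exit }' "$1"
)

# Read the "value:" that follows "- name: PROVISIONER_NAME" in a Deployment manifest.
deployment_provisioner() (
    awk '/name: *PROVISIONER_NAME/ { found = 1; next }
         found && /value:/ { print $2; exit }' "$1"
)

# Compare the two values and fail when they differ or are missing.
check_provisioner_match() (
    class_value=$(class_provisioner "$1")
    deploy_value=$(deployment_provisioner "$2")
    if [ -n "$class_value" ] && [ "$class_value" = "$deploy_value" ]; then
        echo "match: $class_value"
    else
        echo "MISMATCH: class='$class_value' deployment='$deploy_value'"
        exit 1
    fi
)
```

For the files in this article, `check_provisioner_match class.yaml deployment.yaml` should report a match on `fuseim.pri/ifs`.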

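Check the container logs to confirm that the NFS plugin started correctly.

PS: if the logs show errors or similar messages, investigate them carefully; otherwise the NFS client (and the PVCs that depend on it) can stay stuck in the Pending state.

https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner/issues/25

#kubectl logs nfs-client-provisioner-f755d8ffd-d6swv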
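Reading the whole log by eye is easy to get wrong, so a filter that surfaces only suspicious lines can help. The sketch below is generic: the function name `scan_provisioner_log` and the patterns `error` and `failed` are assumptions chosen for illustration, not a list of messages the provisioner is known to emit.

```shell
# Read a log on stdin, print lines that look like problems, and
# return non-zero when at least one such line was found.
scan_provisioner_log() (
    if grep -i -E 'error|failed'; then
        exit 1   # grep printed at least one suspicious line
    fi
)
```

It could be fed from the Deployment directly, for example `kubectl logs deployment/nfs-client-provisioner | scan_provisioner_log`, which avoids having to look up the generated Pod name first.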

V. Create the PVC

#vim test-claim.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: test-claim
      namespace: default
    spec:
      storageClassName: nfs-storage
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 256Mi

#kubectl apply -f test-claim.yaml

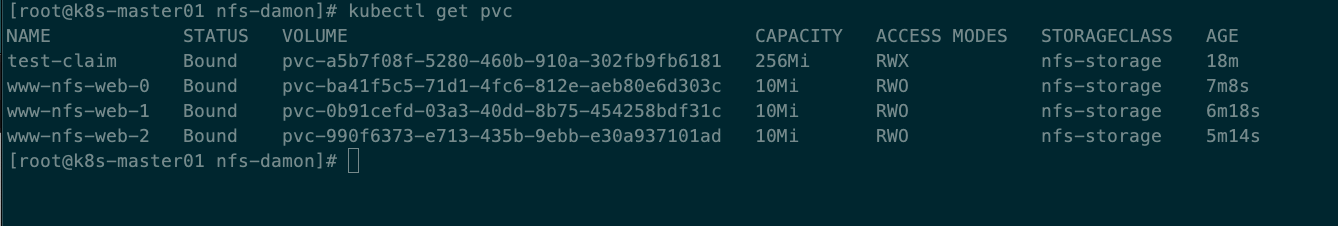

Check whether the PVC has been bound to a PV through the StorageClass:

#kubectl get pvc
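Binding is not always instantaneous, so a single `kubectl get pvc` may still show Pending. A polling loop along the following lines waits for the claim to reach the Bound phase. It is a sketch under assumptions: the function name `wait_for_pvc_bound` and the retry defaults (30 checks, 2 seconds apart) are arbitrary choices for this example.

```shell
# Poll a PVC until its phase is Bound, or give up after a number of checks.
wait_for_pvc_bound() (
    claim=$1
    namespace=${2:-default}
    attempts=${3:-30}
    delay=${4:-2}
    phase=""
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # An empty phase means the claim was not found or kubectl failed.
        phase=$(kubectl get pvc "$claim" -n "$namespace" \
            -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
        if [ "$phase" = "Bound" ]; then
            echo "$claim is Bound"
            exit 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "$claim is still '${phase:-unknown}' after $attempts checks"
    exit 1
)
```

For the claim created above, `wait_for_pvc_bound test-claim default` returns 0 once the claim is bound. If it times out, the provisioner logs are the next place to look.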

VI. Create a test workload

#vim statefulset-nfs.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
        - port: 80
          name: web
      clusterIP: None
      selector:
        app: nfs-web   # must match the Pod labels below so the headless Service selects the Pods
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nfs-web
    spec:
      serviceName: "nginx"
      replicas: 3
      selector:
        matchLabels:
          app: nfs-web   # has to match .spec.template.metadata.labels
      template:
        metadata:
          labels:
            app: nfs-web
        spec:
          terminationGracePeriodSeconds: 10
          containers:
            - name: nginx
              image: nginx:1.7.9
              ports:
                - containerPort: 80
                  name: web
              volumeMounts:
                - name: www
                  mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
        - metadata:
            name: www
            annotations:
              volume.beta.kubernetes.io/storage-class: nfs-storage
          spec:
            accessModes: [ "ReadWriteOnce" ]
            resources:
              requests:
                storage: 10Mi

#kubectl apply -f statefulset-nfs.yaml

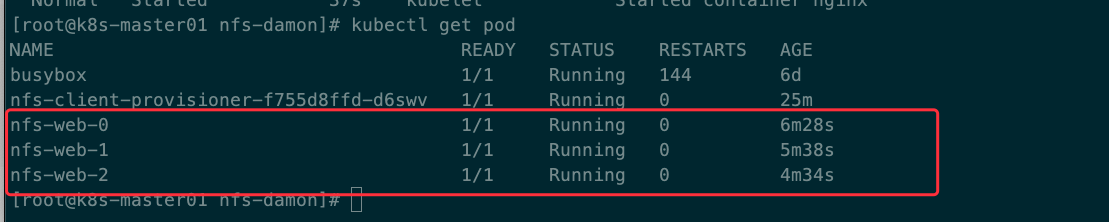

Verify that the PVs were created automatically.

View the PVCs:

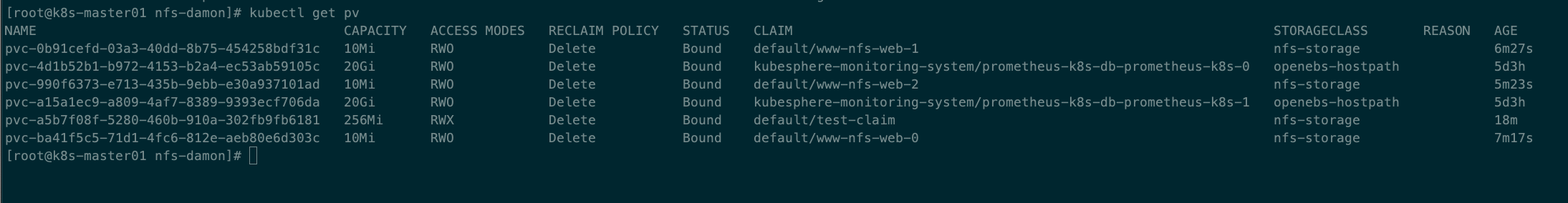

View the PVs:

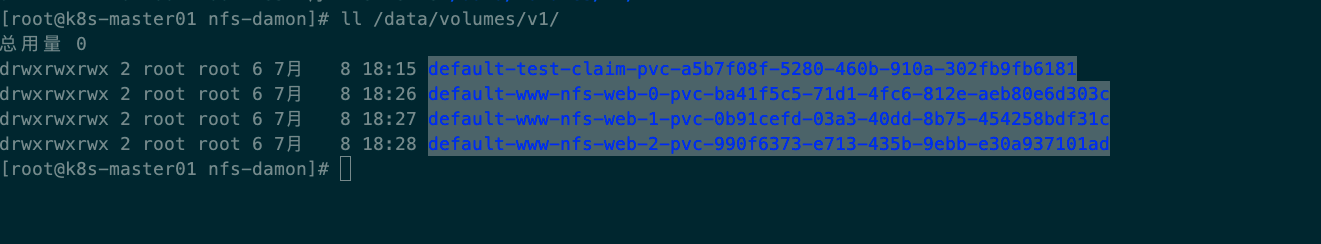

View the data on the NFS server:
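When checking the NFS server, it helps to know what to look for. A StatefulSet names each claim `<template>-<statefulset>-<ordinal>`, and dynamically provisioned PVs are named `pvc-<uid>`. The sketch below additionally assumes that the provisioner image used here follows the upstream nfs-client-provisioner convention of naming each directory `${namespace}-${pvcName}-${pvName}`; that has not been verified for this particular image. Both function names are hypothetical.

```shell
# Print the PVC names a StatefulSet generates from one volumeClaimTemplate.
statefulset_pvc_names() (
    template=$1
    statefulset=$2
    replicas=$3
    i=0
    while [ "$i" -lt "$replicas" ]; do
        echo "${template}-${statefulset}-${i}"
        i=$((i + 1))
    done
)

# Print a glob per claim for the directory expected on the NFS export,
# assuming the ${namespace}-${pvcName}-${pvName} naming convention.
expected_nfs_dir_globs() (
    namespace=$1
    shift
    statefulset_pvc_names "$@" | while read -r claim; do
        echo "${namespace}-${claim}-pvc-*"
    done
)
```

For the StatefulSet above, `expected_nfs_dir_globs default www nfs-web 3` prints three patterns, and on the NFS server a listing such as `ls -d /data/volumes/v1/default-www-nfs-web-*` should show the corresponding directories if the assumption holds.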

