Jonathan Kehayias's Extended Events series: An XEvent a Day (31 Days of Extended Events)

URL: http://www.sqlskills.com/blogs/jonathan/an-xevent-a-day-31-days-of-extended-events/

Querying the Extended Events-related DMVs

Querying the Extended Events Metadata

http://www.sqlskills.com/blogs/jonathan/querying-extended-events-metadata/

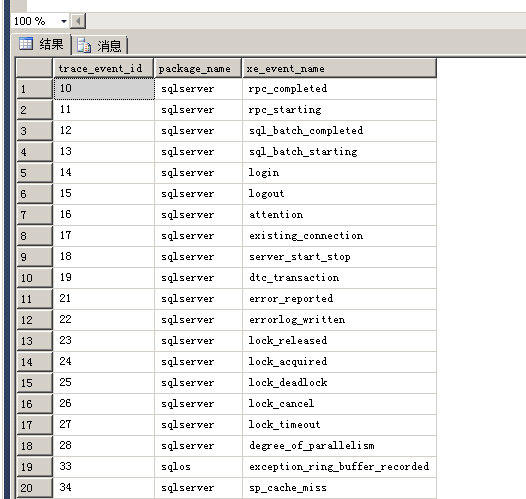

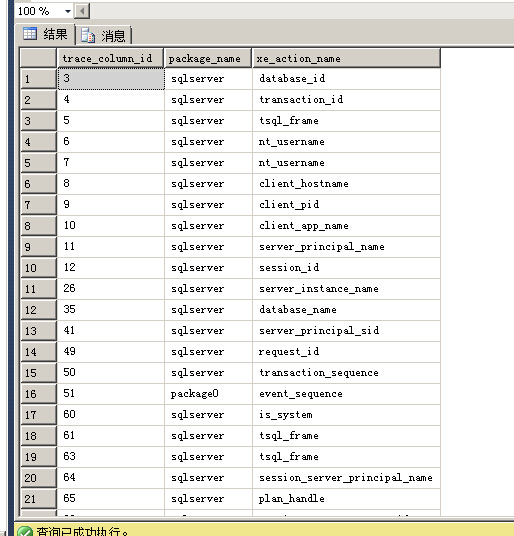

sys.dm_xe_packages

    -- Extended Event Packages
    SELECT name, guid, description
    FROM sys.dm_xe_packages AS p
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0);

    -- Packages and the modules that loaded them
    SELECT p.name AS package_name,
           p.description,
           lm.name AS module_name
    FROM sys.dm_xe_packages AS p
    JOIN sys.dm_os_loaded_modules AS lm
        ON p.module_address = lm.base_address
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0);

Events

    -- Event objects
    SELECT p.name AS package_name,
           o.name AS event_name,
           o.description
    FROM sys.dm_xe_packages AS p
    JOIN sys.dm_xe_objects AS o
        ON p.guid = o.package_guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = 'event';

Actions

    -- Actions
    SELECT p.name AS package_name,
           o.name AS action_name,
           o.description
    FROM sys.dm_xe_packages AS p
    JOIN sys.dm_xe_objects AS o
        ON p.guid = o.package_guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = 'action';

Targets

    -- Targets
    SELECT p.name AS package_name,
           o.name AS target_name,
           o.description
    FROM sys.dm_xe_packages AS p
    JOIN sys.dm_xe_objects AS o
        ON p.guid = o.package_guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = 'target';

Predicate Sources

    -- State Data Predicates
    SELECT p.name AS package_name,
           o.name AS source_name,
           o.description
    FROM sys.dm_xe_objects AS o
    JOIN sys.dm_xe_packages AS p
        ON o.package_guid = p.guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = 'pred_source';

Predicate Comparators

    -- Comparison Predicates
    SELECT p.name AS package_name,
           o.name AS comparator_name,
           o.description
    FROM sys.dm_xe_objects AS o
    JOIN sys.dm_xe_packages AS p
        ON o.package_guid = p.guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = 'pred_compare';

Maps

    -- Maps
    SELECT p.name AS package_name,
           o.name AS map_name,
           o.description
    FROM sys.dm_xe_objects AS o
    JOIN sys.dm_xe_packages AS p
        ON o.package_guid = p.guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = 'map';

Types

    -- Types
    SELECT p.name AS package_name,
           o.name AS type_name,
           o.description
    FROM sys.dm_xe_objects AS o
    JOIN sys.dm_xe_packages AS p
        ON o.package_guid = p.guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = 'type';

    -- Event Columns (wait_info)
    SELECT oc.name AS column_name,
           oc.column_type AS column_type,
           oc.column_value AS column_value,
           oc.description AS column_description
    FROM sys.dm_xe_packages AS p
    JOIN sys.dm_xe_objects AS o
        ON p.guid = o.package_guid
    JOIN sys.dm_xe_object_columns AS oc
        ON o.name = oc.object_name
       AND o.package_guid = oc.object_package_guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND (oc.capabilities IS NULL OR oc.capabilities & 1 = 0)
      AND o.object_type = 'event'
      AND o.name = 'wait_info';

    -- Configurable Event Columns (file_write_completed)
    SELECT oc.name AS column_name,
           oc.column_type AS column_type,
           oc.column_value AS column_value,
           oc.description AS column_description
    FROM sys.dm_xe_packages AS p
    JOIN sys.dm_xe_objects AS o
        ON p.guid = o.package_guid
    JOIN sys.dm_xe_object_columns AS oc
        ON o.name = oc.object_name
       AND o.package_guid = oc.object_package_guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND (oc.capabilities IS NULL OR oc.capabilities & 1 = 0)
      AND o.object_type = 'event'
      AND o.name = 'file_write_completed'
      AND oc.column_type = 'customizable';

    -- Target Configurable Fields (asynchronous_file_target)
    SELECT oc.name AS column_name,
           oc.column_id,
           oc.type_name,
           oc.capabilities_desc,
           oc.description
    FROM sys.dm_xe_packages AS p
    JOIN sys.dm_xe_objects AS o
        ON p.guid = o.package_guid
    JOIN sys.dm_xe_object_columns AS oc
        ON o.name = oc.object_name
       AND o.package_guid = oc.object_package_guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = 'target'
      AND o.name = 'asynchronous_file_target';

    -- Map Values
    SELECT name, map_key, map_value
    FROM sys.dm_xe_map_values
    WHERE name = 'wait_types';
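Every metadata query above repeats the filter (capabilities IS NULL OR capabilities & 1 = 0). The Python sketch below mirrors that predicate on rows already fetched from sys.dm_xe_objects to show what it keeps: a row passes when the bitmask is NULL or its lowest bit is clear. It makes two assumptions. First, bit 0x1 is the flag SQL Server uses to mark private objects. Second, the sample rows (dicts with name, object_type and capabilities keys) are hypothetical, not real metadata. The explicit IS NULL branch matters in T-SQL because NULL & 1 is NULL, and NULL = 0 is never true.

```python
# Sketch: the "(capabilities IS NULL OR capabilities & 1 = 0)" filter applied
# in Python to rows assumed to be fetched from sys.dm_xe_objects.
# Assumption: bit 0x1 marks private objects; the sample rows are hypothetical.
from typing import Iterable, List, Mapping, Optional

PRIVATE_BIT = 0x1  # assumed meaning of the lowest capabilities bit


def is_visible(capabilities: Optional[int]) -> bool:
    """Mirror of: capabilities IS NULL OR capabilities & 1 = 0."""
    return capabilities is None or (capabilities & PRIVATE_BIT) == 0


def visible_objects(rows: Iterable[Mapping], object_type: str) -> List[str]:
    """Names of rows of one object_type that pass the capabilities filter."""
    return [
        row["name"]
        for row in rows
        if row["object_type"] == object_type and is_visible(row.get("capabilities"))
    ]


if __name__ == "__main__":
    sample_rows = [  # hypothetical rows, not real SQL Server metadata
        {"name": "wait_info", "object_type": "event", "capabilities": None},
        {"name": "hidden_event", "object_type": "event", "capabilities": 1},
        {"name": "ring_buffer", "object_type": "target", "capabilities": 0},
        {"name": "error_reported", "object_type": "event", "capabilities": 256},
    ]
    # 256 has bit 0x1 clear, so error_reported is kept; hidden_event is not.
    print(visible_objects(sample_rows, "event"))  # ['wait_info', 'error_reported']
```

Only the lowest bit is tested, so other capability bits (such as 256 in the sample) never exclude a row.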

Example: creating and managing an Extended Events session

Managing Event Sessions

http://www.sqlskills.com/blogs/jonathan/managing-extended-events-sessions/

    -- Create the Event Session
    CREATE EVENT SESSION [TrackTempdbFileWrites] ON SERVER
    ADD EVENT sqlserver.file_write_completed(
        SET collect_path = 1
        ACTION (sqlserver.sql_text)
        WHERE database_id = 2),
    ADD EVENT sqlserver.file_written(
        WHERE database_id = 2)
    ADD TARGET package0.ring_buffer,
    ADD TARGET package0.asynchronous_bucketizer(
        SET filtering_event_name = 'sqlserver.file_write_completed',
            source_type = 0,
            source = 'file_id')
    WITH (MAX_MEMORY = 4096 KB,
          EVENT_RETENTION_MODE = ALLOW_SINGLE_EVENT_LOSS,
          MAX_DISPATCH_LATENCY = 30 SECONDS,
          MAX_EVENT_SIZE = 0 KB,
          MEMORY_PARTITION_MODE = NONE,
          TRACK_CAUSALITY = OFF,
          STARTUP_STATE = OFF);
    GO

    -- ALTER the Event Session to Start it
    ALTER EVENT SESSION [TrackTempdbFileWrites] ON SERVER STATE = START;
    GO

    -- Generate writes in tempdb
    USE [tempdb];
    GO
    IF OBJECT_ID('Test') IS NOT NULL
        DROP TABLE Test;
    CREATE TABLE Test (rowid INT IDENTITY PRIMARY KEY, exampledata VARCHAR(4000));
    GO
    INSERT INTO Test (exampledata) VALUES (REPLICATE('abcd', 1000));
    GO 100

    -- Drop both Events; the session and its Targets keep running
    ALTER EVENT SESSION [TrackTempdbFileWrites] ON SERVER
    DROP EVENT sqlserver.file_write_completed,
    DROP EVENT sqlserver.file_written;
    GO

    -- Read the Target data
    SELECT CAST(target_data AS XML)
    FROM sys.dm_xe_session_targets AS st
    JOIN sys.dm_xe_sessions AS s
        ON st.event_session_address = s.address
    WHERE s.name = 'TrackTempdbFileWrites';
    GO

    -- Repeat the workload: with no Events in the session, nothing new is captured
    USE [tempdb];
    GO
    IF OBJECT_ID('Test') IS NOT NULL
        DROP TABLE Test;
    CREATE TABLE Test (rowid INT IDENTITY PRIMARY KEY, exampledata VARCHAR(4000));
    GO
    INSERT INTO Test (exampledata) VALUES (REPLICATE('abcd', 1000));
    GO 100
    SELECT CAST(target_data AS XML)
    FROM sys.dm_xe_session_targets AS st
    JOIN sys.dm_xe_sessions AS s
        ON st.event_session_address = s.address
    WHERE s.name = 'TrackTempdbFileWrites';
    GO

    -- Add the sqlserver.file_write_completed Event back with new predicates
    ALTER EVENT SESSION [TrackTempdbFileWrites] ON SERVER
    ADD EVENT sqlserver.file_write_completed(
        SET collect_path = 1
        ACTION (sqlserver.sql_text)
        WHERE database_id = 2 AND file_id = 1);
    GO
    USE [tempdb];
    GO
    IF OBJECT_ID('Test') IS NOT NULL
        DROP TABLE Test;
    CREATE TABLE Test (rowid INT IDENTITY PRIMARY KEY, exampledata VARCHAR(4000));
    GO
    INSERT INTO Test (exampledata) VALUES (REPLICATE('abcd', 1000));
    GO 100
    SELECT CAST(target_data AS XML)
    FROM sys.dm_xe_session_targets AS st
    JOIN sys.dm_xe_sessions AS s
        ON st.event_session_address = s.address
    WHERE s.name = 'TrackTempdbFileWrites';
    GO

    -- Add a file target
    ALTER EVENT SESSION [TrackTempdbFileWrites] ON SERVER
    ADD TARGET package0.asynchronous_file_target(
        SET filename = 'C:\SQLBlog\TrackTempdbFileWrites.xel',
            metadatafile = 'C:\SQLBlog\TrackTempdbFileWrites.mta');
    GO

    -- Drop the ring_buffer target
    ALTER EVENT SESSION [TrackTempdbFileWrites] ON SERVER
    DROP TARGET package0.ring_buffer;
    GO

    -- Changing a session option such as EVENT_RETENTION_MODE while the
    -- session is running fails with:
    -- Msg 25707, Level 16, State 1, Line 2
    -- Event session option "event_retention_mode" cannot be changed while the session is running.
    -- Stop the event session before changing this session option.

    -- Stop the Event Session first
    ALTER EVENT SESSION [TrackTempdbFileWrites] ON SERVER STATE = STOP;
    GO
    -- Change Event Retention Mode
    ALTER EVENT SESSION [TrackTempdbFileWrites] ON SERVER
    WITH (EVENT_RETENTION_MODE = NO_EVENT_LOSS);
    GO
    -- Start the Event Session after the change
    ALTER EVENT SESSION [TrackTempdbFileWrites] ON SERVER STATE = START;
    GO
    -- Clean up
    DROP EVENT SESSION [TrackTempdbFileWrites] ON SERVER;
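The session above pairs the ring_buffer with an asynchronous_bucketizer configured with filtering_event_name = 'sqlserver.file_write_completed', source_type = 0 and source = 'file_id'. The Python sketch below is a rough mental model of what that target accumulates, not SQL Server's implementation. It keeps one counter per distinct value of the source column and counts only the filtering Event. It assumes source_type = 0 means the source is an Event data column rather than an Action. The event dicts are hypothetical stand-ins for fired Events.

```python
# Conceptual model of the asynchronous_bucketizer in TrackTempdbFileWrites:
# count events named filtering_event_name, grouped by the value of `source`.
# Not SQL Server's implementation; the event dicts are hypothetical.
from collections import Counter
from typing import Iterable, Mapping


def bucketize(events: Iterable[Mapping], filtering_event_name: str, source: str) -> Counter:
    buckets: Counter = Counter()
    for event in events:
        # Other events in the session (e.g. sqlserver.file_written) are ignored.
        if event["name"] != filtering_event_name:
            continue
        # source_type = 0 is assumed to mean "read source from the event's data columns".
        buckets[event["data"][source]] += 1
    return buckets


if __name__ == "__main__":
    fired = [
        {"name": "sqlserver.file_write_completed", "data": {"file_id": 1}},
        {"name": "sqlserver.file_write_completed", "data": {"file_id": 2}},
        {"name": "sqlserver.file_written", "data": {"file_id": 1}},
        {"name": "sqlserver.file_write_completed", "data": {"file_id": 1}},
    ]
    print(dict(bucketize(fired, "sqlserver.file_write_completed", "file_id")))
    # {1: 2, 2: 1}
```

Under this model, once the Event is re-added with the file_id = 1 predicate, only bucket 1 can keep growing.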

DMVs related to Extended Events session definitions

Querying the Session Definition and Active Session DMVs

http://www.sqlskills.com/blogs/jonathan/extended-events-dmvs/

sys.server_event_sessions

The sys.server_event_sessions DMV provides information about the Event Sessions that exist inside the Extended Events Engine. The session-level options for each Event Session can be retrieved from this DMV to determine how the Event Session is configured.

    -- Session level information for current Event Sessions
    SELECT s.name,
           s.max_memory,
           s.event_retention_mode_desc,
           s.max_dispatch_latency,
           s.max_event_size,
           s.memory_partition_mode_desc,
           s.track_causality,
           s.startup_state
    FROM sys.server_event_sessions AS s;

sys.server_event_session_events

The sys.server_event_session_events DMV provides information about the specific Events defined in the Event Sessions maintained by the Extended Events Engine. It also returns the Predicates defined for the Events that are included for collection in Event Sessions on the server. The event_session_id column joins this DMV to sys.server_event_sessions, as shown below.

    -- Get events in a session
    SELECT ses.name AS session_name,
           sese.package AS event_package,
           sese.name AS event_name,
           sese.predicate AS event_predicate
    FROM sys.server_event_sessions AS ses
    INNER JOIN sys.server_event_session_events AS sese
        ON ses.event_session_id = sese.event_session_id;

sys.server_event_session_actions

The sys.server_event_session_actions DMV contains one row for each Action that has been added to an Event in an Event Session. If the same Action is added to multiple Events, there is a separate row for each Event and Action pair in the Event Session. The event_session_id and event_id columns join this DMV to the sys.server_event_session_events DMV.

    -- Get actions
    SELECT ses.name AS session_name,
           sese.package AS event_package,
           sese.name AS event_name,
           sese.predicate AS event_predicate,
           sesa.package AS action_package,
           sesa.name AS action_name
    FROM sys.server_event_sessions AS ses
    INNER JOIN sys.server_event_session_events AS sese
        ON ses.event_session_id = sese.event_session_id
    INNER JOIN sys.server_event_session_actions AS sesa
        ON sese.event_session_id = sesa.event_session_id
       AND sese.event_id = sesa.event_id;

sys.server_event_session_targets

The sys.server_event_session_targets DMV contains one row for each Target defined for an Event Session. The event_session_id column joins this DMV to the sys.server_event_sessions DMV.

    -- Get target information
    SELECT ses.name AS session_name,
           sest.name AS target_name
    FROM sys.server_event_sessions AS ses
    INNER JOIN sys.server_event_session_targets AS sest
        ON ses.event_session_id = sest.event_session_id;

sys.server_event_session_fields

The sys.server_event_session_fields DMV contains one row for each configured option of each Target defined for an Event Session. It is joined to the sys.server_event_session_targets DMV on event_session_id, and on target_id, which matches the object_id column of sys.server_event_session_fields.

    -- Get target option information
    SELECT ses.name AS session_name,
           sest.name AS target_name,
           sesf.name AS option_name,
           sesf.value AS option_value
    FROM sys.server_event_sessions AS ses
    INNER JOIN sys.server_event_session_targets AS sest
        ON ses.event_session_id = sest.event_session_id
    INNER JOIN sys.server_event_session_fields AS sesf
        ON sest.event_session_id = sesf.event_session_id
       AND sest.target_id = sesf.object_id;

Extended Events Active Session DMVs

sys.dm_xe_sessions

The sys.dm_xe_sessions DMV contains one row for each active Event Session (STATE=START) in the SQL Server instance and provides information about the configuration of the session buffers. It returns the size and number of buffers for both the regular-sized and the large-sized buffers associated with the Event Session. An Event Session has large-sized buffers when its configured MAX_EVENT_SIZE is larger than the regular buffer size; in general, most Events are buffered in the regular buffers. This DMV also reports Events lost because the buffers were full, as well as buffers that are full and pending dispatch.

    -- Look at Active Session Information
    SELECT s.name,
           s.pending_buffers,
           s.total_regular_buffers,
           s.regular_buffer_size,
           s.total_large_buffers,
           s.large_buffer_size,
           s.total_buffer_size,
           s.buffer_policy_flags,
           s.buffer_policy_desc,
           s.flags,
           s.flag_desc,
           s.dropped_event_count,
           s.dropped_buffer_count,
           s.blocked_event_fire_time,
           s.create_time,
           s.largest_event_dropped_size
    FROM sys.dm_xe_sessions AS s;

sys.dm_xe_session_targets

The sys.dm_xe_session_targets DMV contains one row for each Target of an active Event Session. It returns information about the Target, such as the Target name (ring_buffer, pair_matching, etc.), along with Target execution statistics. For memory resident Targets, the target_data column returns an XML document containing the Events that have been dispatched to the Target and are still available. For persisted Targets, the target_data column still contains an XML document, but it only holds statistics about the Target. The target_data column is covered in more detail next week, when we look at each Target individually. The event_session_address column joins this DMV to the address column of the sys.dm_xe_sessions DMV.

    -- Target information for a running session
    SELECT s.name AS session_name,
           t.target_name AS target_name,
           t.execution_count AS execution_count,
           t.execution_duration_ms AS execution_duration,
           CAST(t.target_data AS XML) AS target_data
    FROM sys.dm_xe_sessions AS s
    INNER JOIN sys.dm_xe_session_targets AS t
        ON s.address = t.event_session_address;

sys.dm_xe_session_events

The sys.dm_xe_session_events DMV contains one row for each Event defined in an active Event Session. The predicate definition for each Event, if one is defined, is included in the output of this DMV. However, if standard logical operators were used in the Event definition, this predicate differs from the one returned by sys.server_event_session_events: the Predicates are converted to use Predicate Comparators in text form, and for complex Predicates the text can exceed the allowable output length. When this occurs, the DMV returns "Predicate too large for display". The event_session_address column joins this DMV to the address column of the sys.dm_xe_sessions DMV.

    -- Event Information for a running session
    SELECT s.name AS session_name,
           e.event_name AS event_name,
           e.event_predicate AS event_predicate
    FROM sys.dm_xe_sessions AS s
    INNER JOIN sys.dm_xe_session_events AS e
        ON s.address = e.event_session_address;

sys.dm_xe_session_event_actions

The sys.dm_xe_session_event_actions DMV contains one row for each Action defined on an Event in an active Event Session. If the same Action is defined on multiple Events in the Event Session, one row is returned for each Event/Action pair. The event_session_address and event_name columns join this DMV to the event_session_address and event_name columns of the sys.dm_xe_session_events DMV.

    -- Event Information with Actions for a running session
    SELECT s.name AS session_name,
           e.event_name AS event_name,
           e.event_predicate AS event_predicate,
           ea.action_name AS action_name
    FROM sys.dm_xe_sessions AS s
    INNER JOIN sys.dm_xe_session_events AS e
        ON s.address = e.event_session_address
    INNER JOIN sys.dm_xe_session_event_actions AS ea
        ON e.event_session_address = ea.event_session_address
       AND e.event_name = ea.event_name;

sys.dm_xe_session_object_columns

The sys.dm_xe_session_object_columns DMV contains one row for each configured option of a Target defined in an active Event Session, and one row for each customizable Data Element of an Event defined in an active Event Session. For Event rows (object_type = 'event'), its event_session_address and object_name columns join it to the event_session_address and event_name columns of the sys.dm_xe_session_events DMV. For Target rows (object_type = 'target'), the same two columns join it to the event_session_address and target_name columns of the sys.dm_xe_session_targets DMV.

    -- Configurable event and target column information
    SELECT DISTINCT s.name AS session_name,
           oc.object_name,
           oc.object_type,
           oc.column_name,
           oc.column_value
    FROM sys.dm_xe_sessions AS s
    INNER JOIN sys.dm_xe_session_targets AS t
        ON s.address = t.event_session_address
    INNER JOIN sys.dm_xe_session_events AS e
        ON s.address = e.event_session_address
    INNER JOIN sys.dm_xe_session_object_columns AS oc
        ON s.address = oc.event_session_address
       AND ((oc.object_type = 'target' AND t.target_name = oc.object_name)
         OR (oc.object_type = 'event' AND e.event_name = oc.object_name));
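The action queries above join on two columns because event_id is only unique within a single event_session_id. Action rows therefore have to be matched on the (event_session_id, event_id) pair. The Python sketch below performs that INNER JOIN over hypothetical in-memory rows that stand in for sys.server_event_session_events and sys.server_event_session_actions. It returns one row per Event/Action pair, so sql_text attached to two Events produces two rows.

```python
# Sketch of the composite-key INNER JOIN between session events and actions.
# The rows are hypothetical stand-ins for sys.server_event_session_events and
# sys.server_event_session_actions; event_id is unique per session only.
from typing import Dict, List, Mapping, Tuple

Key = Tuple[int, int]  # (event_session_id, event_id)


def join_event_actions(events: List[Mapping], actions: List[Mapping]) -> List[Tuple[str, str]]:
    by_key: Dict[Key, Mapping] = {
        (e["event_session_id"], e["event_id"]): e for e in events
    }
    pairs = []
    for a in actions:
        event = by_key.get((a["event_session_id"], a["event_id"]))
        if event is not None:  # INNER JOIN: unmatched actions are dropped
            pairs.append((event["name"], a["name"]))
    return pairs


events = [
    {"event_session_id": 1, "event_id": 0, "name": "file_write_completed"},
    {"event_session_id": 1, "event_id": 1, "name": "wait_info"},
    {"event_session_id": 2, "event_id": 0, "name": "error_reported"},
]
actions = [
    {"event_session_id": 1, "event_id": 0, "name": "sql_text"},
    {"event_session_id": 1, "event_id": 1, "name": "sql_text"},
    {"event_session_id": 2, "event_id": 0, "name": "session_id"},
]

if __name__ == "__main__":
    for pair in join_event_actions(events, actions):
        print(pair)
    # ('file_write_completed', 'sql_text')
    # ('wait_info', 'sql_text')
    # ('error_reported', 'session_id')
```

Joining on event_id alone would also pair session 2's session_id Action with file_write_completed, because both Events have event_id 0 in their own sessions.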

What is the ring_buffer? The ring_buffer target

Targets Week – ring_buffer

http://www.sqlskills.com/blogs/jonathan/extended-events-ring_buffer/

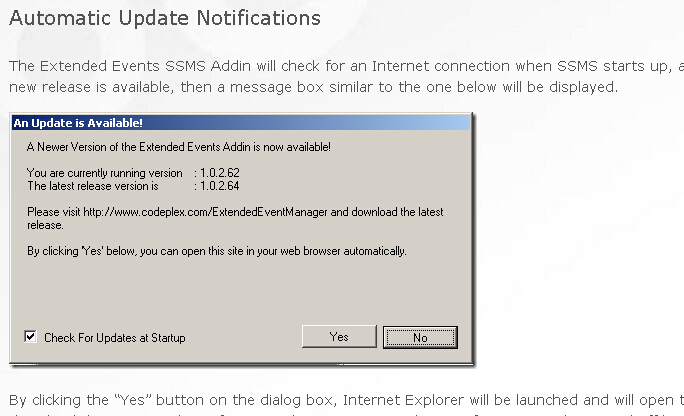

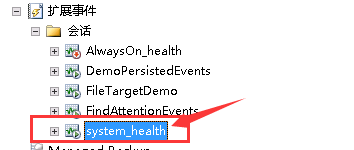

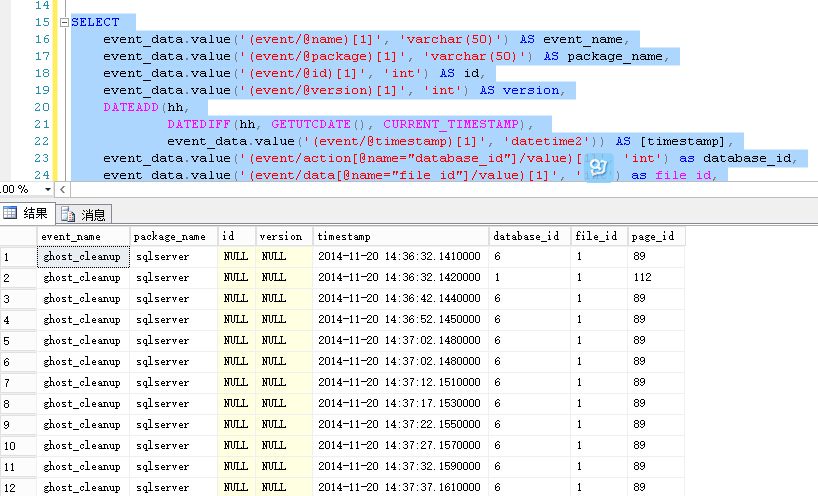

What is the ring_buffer?

The ring_buffer is one of two targets available in Extended Events that capture event data in its raw format. It is a memory resident target that holds event data in a single XML document while the Event Session is active on the SQL Server. When the Event Session is stopped, the memory buffers allocated to the ring_buffer target are freed and all data contained in the target disappears. The ring_buffer collects events in a First In First Out (FIFO) manner that can be configured in one of two ways. In strict mode, the oldest event is removed when the memory allocated to the target becomes full and new events arrive. In per-event mode, you specify the maximum number of occurrences that will be retained for each event defined in the event session. The default configuration for event flushing is strict FIFO.

Configuration Options

The ring_buffer, like most of the targets, has configuration options that can be found in the sys.dm_xe_object_columns DMV.

    -- Target Configurable Fields
    SELECT oc.name AS column_name,
           oc.column_id,
           oc.type_name,
           oc.capabilities_desc,
           oc.description
    FROM sys.dm_xe_packages AS p
    INNER JOIN sys.dm_xe_objects AS o
        ON p.guid = o.package_guid
    INNER JOIN sys.dm_xe_object_columns AS oc
        ON o.name = oc.object_name
       AND o.package_guid = oc.object_package_guid
    WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
      AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
      AND o.object_type = N'target'
      AND o.name = N'ring_buffer';

In SQL Server 2008, 2008 R2, and SQL Server Denali CTP1, the ring_buffer has two configuration options. max_memory sets the maximum amount of memory the target buffers use to hold Event data. occurrence_number configures per-Event FIFO operation by specifying how many events of each type the target will retain.
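The difference between strict FIFO and per-event FIFO is easiest to see on a burst of a single Event type. The Python sketch below is a toy model under stated assumptions. Capacity is counted in Events rather than bytes, a simplification of max_memory. Per-event mode keeps only the newest occurrence_number Events of each name; how the real target combines occurrence_number with its memory limit is not modelled. The event stream is made up.

```python
# Toy model of the two ring_buffer retention modes described above.
# Simplifications (assumptions): capacity is a count of events instead of
# max_memory bytes, and per-event mode ignores the overall memory limit.
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple

Event = Tuple[str, int]  # (event name, arrival sequence number)


def strict_fifo(stream: Iterable[Event], max_events: int) -> List[Event]:
    """occurrence_number = 0: the oldest event is evicted when the buffer is full."""
    buffer: Deque[Event] = deque(maxlen=max_events)
    for event in stream:
        buffer.append(event)  # deque(maxlen=...) drops the oldest entry itself
    return list(buffer)


def per_event_fifo(stream: Iterable[Event], occurrence_number: int) -> List[Event]:
    """occurrence_number > 0: keep the newest N events of each event name."""
    if occurrence_number <= 0:
        raise ValueError("use strict_fifo when occurrence_number is 0")
    per_name: Dict[str, Deque[Event]] = defaultdict(
        lambda: deque(maxlen=occurrence_number)
    )
    for event in stream:
        per_name[event[0]].append(event)
    # Merge the per-name queues back into arrival order for display.
    return sorted((e for q in per_name.values() for e in q), key=lambda e: e[1])


if __name__ == "__main__":
    stream = [("error_reported", 0)] + [("wait_info", i) for i in range(1, 6)]
    print(strict_fifo(stream, max_events=3))
    # [('wait_info', 3), ('wait_info', 4), ('wait_info', 5)]
    print(per_event_fifo(stream, occurrence_number=2))
    # [('error_reported', 0), ('wait_info', 4), ('wait_info', 5)]
```

In strict mode, the single error_reported Event is pushed out by the wait_info burst. In per-event mode, it survives because wait_info can only consume its own quota.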
Both configurable options for the ring_buffer target are optional. When they are not specified in the Event Session definition, they take their default values. As previously stated, the ring_buffer uses strict FIFO by default if no value is specified for occurrence_number. The default max_memory value for the ring_buffer is 4MB, which is covered in slightly more detail in the Considerations for Usage section of this post.

Understanding the Target Data Format

As previously mentioned, the ring_buffer stores Event data in its raw format. Inside the Extended Events Engine, Event data is kept in a binary format that minimizes the memory needed to store the Events, maximizing the number of Events that fit in the Target's memory buffers. The Event data is materialized into an XML document when the Target information is queried through the sys.dm_xe_session_targets DMV, allowing it to be used for Event analysis.

The ring_buffer XML document has a parent <RingBufferTarget> node. Its attributes describe the Target's operation since the Event Session was started: the number of Events processed per second, the amount of time the Target has spent processing Events, the total number of Events processed by the Target, the current number of Events in the Target, the number of Events dropped due to full buffers, and the amount of memory used by the Target.

    <RingBufferTarget eventsPerSec="" processingTime="" totalEventsProcessed="" eventCount="" droppedCount="" memoryUsed="" />

Inside the <RingBufferTarget> parent node are the Event data XML nodes, which contain the information returned by the Events defined in the Event Session. While the XML returned for the Event data does not conform to any published XML Schema, it does have a predictable format based on the Event Session definition. Each <event> node has attributes for the Event name, the Package that loaded the Event metadata and fired the Event for the Event Session, an id associated with the Event, a version associated with the Event, and the timestamp (date and time in GMT) at which the Event fired on the server. Each <event> node has one or more <data> nodes containing the information for each of the Data Elements in the Event's default payload. If Actions have been defined for the Event in the Event Session, the <event> node also has an <action> node for each of those Actions.

The <data> and <action> nodes share a common XML layout, with one exception. A <data> node has a single attribute containing the name of its Data Element, whereas an <action> node has two attributes: one for the name of the Action and one for its Package. Each <data> or <action> node has a <type> child node with two attributes: the name of the Type (the data type of the value being returned) and the Package for the Type. It also has two further child nodes. The <value> node holds the value for the Data Element. The <text> node holds the Map lookup text for Data Elements that correspond to Maps in the Extended Events Metadata.
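The timestamp attribute is written in GMT (UTC), for example timestamp="2010-12-03T15:29:00.578Z". The queries later in this post convert it to local time with DATEADD(hh, DATEDIFF(hh, GETUTCDATE(), CURRENT_TIMESTAMP), ...). DATEDIFF(hh, ...) counts hour boundaries crossed, not elapsed hours. In a time zone with a fractional offset, the computed shift can therefore be either of two whole-hour values depending on when the query runs. The Python sketch below reproduces that arithmetic. It assumes both clocks are read at the same instant, and uses a +05:30 offset purely as an example.

```python
# Sketch of the XEvent timestamp -> local time conversion used later in the post.
# Assumptions: GETUTCDATE() and CURRENT_TIMESTAMP are read at the same instant,
# and a +05:30 zone is used purely as an example of a fractional offset.
from datetime import datetime, timedelta


def parse_xe_timestamp(ts: str) -> datetime:
    """Parse '2010-12-03T15:29:00.578Z' into a naive UTC datetime."""
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S.%fZ")


def _floor_hour(d: datetime) -> datetime:
    return d.replace(minute=0, second=0, microsecond=0)


def datediff_hours(start: datetime, end: datetime) -> int:
    """Like DATEDIFF(hh, start, end): counts hour boundaries crossed."""
    return int((_floor_hour(end) - _floor_hour(start)).total_seconds() // 3600)


def to_local_like_tsql(event_utc: datetime, utc_now: datetime, local_now: datetime) -> datetime:
    """DATEADD(hh, DATEDIFF(hh, GETUTCDATE(), CURRENT_TIMESTAMP), timestamp)."""
    return event_utc + timedelta(hours=datediff_hours(utc_now, local_now))


if __name__ == "__main__":
    event = parse_xe_timestamp("2010-12-03T15:29:00.578Z")
    offset = timedelta(hours=5, minutes=30)
    for utc_now in (datetime(2010, 12, 3, 10, 0), datetime(2010, 12, 3, 10, 45)):
        local_now = utc_now + offset
        # Prints a 5-hour shift for the first query time and 6 for the second;
        # the true local time of the Event would be event + offset.
        print(datediff_hours(utc_now, local_now), to_local_like_tsql(event, utc_now, local_now))
```

In whole-hour time zones both methods agree. The discrepancy only matters where the UTC offset includes minutes.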
The basic XML layout of an <event> node is:

    <event name="" package="" id="" version="" timestamp="">
      <data name="">
        <type name="" package="" />
        <value />
        <text />
      </data>
      <action name="" package="">
        <type name="" package="" />
        <value />
        <text />
      </action>
    </event>

Below is an example wait_info Event from the default system_health Event Session, which runs on every installation of SQL Server 2008, 2008 R2, and SQL Server Denali CTP1.

    <event name="wait_info" package="sqlos" id="48" version="1" timestamp="2010-12-03T15:29:00.578Z">
      <data name="wait_type">
        <type name="wait_types" package="sqlos" />
        <value>98</value>
        <text>ASYNC_IO_COMPLETION</text>
      </data>
      <data name="opcode">
        <type name="event_opcode" package="sqlos" />
        <value>1</value>
        <text>End</text>
      </data>
      <data name="duration">
        <type name="uint64" package="package0" />
        <value>44598</value>
        <text />
      </data>
      <data name="max_duration">
        <type name="uint64" package="package0" />
        <value>44598</value>
        <text />
      </data>
      <data name="total_duration">
        <type name="uint64" package="package0" />
        <value>44598</value>
        <text />
      </data>
      <data name="signal_duration">
        <type name="uint64" package="package0" />
        <value>0</value>
        <text />
      </data>
      <data name="completed_count">
        <type name="uint64" package="package0" />
        <value>1</value>
        <text />
      </data>
      <action name="callstack" package="package0">
        <type name="callstack" package="package0" />
        <value>0x0000000001829555 0x0000000000CEA584 0x000000000233FA28 0x0000000002E2FA0C 0x0000000002F10CB9 0x0000000002F112D7 0x0000000002F1B90B 0x0000000002D8C59A 0x0000000000B0F6D2 0x000000000065C59B 0x000000000065C25A 0x000000000065BF35 0x0000000000BE6410 0x0000000000BE64E0 0x0000000000BD87A0 0x0000000000BE5F9F</value>
        <text />
      </action>
      <action name="session_id" package="sqlserver">
        <type name="uint16" package="package0" />
        <value>87</value>
        <text />
      </action>
      <action name="sql_text" package="sqlserver">
        <type name="unicode_string" package="package0" />
        <value>Unable to retrieve SQL text</value>
        <text />
      </action>
    </event>

In this Event, the wait_type and opcode Data Elements correspond to the wait_types and event_opcode Maps respectively. The <text> node holds the Map text that corresponds to the value in the <value> node. Although it is not necessary in this example, the Maps can also be looked up separately in the DMVs:

    SELECT p.name AS package_name,
           mv.name AS map_name,
           mv.map_key,
           mv.map_value
    FROM sys.dm_xe_packages AS p
    JOIN sys.dm_xe_map_values AS mv
        ON p.guid = mv.object_package_guid
    WHERE (p.name = 'sqlos' AND mv.name = 'wait_types')
       OR (p.name = 'sqlos' AND mv.name = 'event_opcode');

Querying/Parsing the Target Data

Since the ring_buffer target returns the Event data as XML, we have to do a little work to shred the XML into actionable data using XQuery. For those new to XQuery, the best I can recommend is to jump over to my good friend Jacob Sebastian's blog and work your way through his series of XQuery Labs, which have been an amazing resource along my way to learning XQuery.

If you are not interested in learning XQuery but still want to work with Extended Events, all is not lost. Adam Machanic wrote the Extended Events Code Generator, which generates a T-SQL statement that parses out the target data for the ring_buffer, as well as for tomorrow's topic, the asynchronous_file_target. It also includes a SQLCLR helper TVF, which you can optionally deploy in your environment, that speeds up shredding the XML by leveraging the power of .NET. Another option is the Extended Events SSMS Addin for SQL Server 2008. It includes a TargetDataViewer that shreds the XML for every target available in Extended Events and displays the Event data in a SQL Profiler-like GridView inside SQL Server Management Studio. Beyond these two tools, you can also use the code available in this blog series.
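To check the node layout described above outside SQL Server, here is a minimal Python sketch using the standard library's xml.etree.ElementTree. It reads the <RingBufferTarget> header attributes and flattens each <event> into a dict. For each column it prefers the Map text in <text> when that is non-empty and otherwise takes the raw <value>. The T-SQL queries in this post make the same choice, column by column. SAMPLE is a trimmed copy of the wait_info Event shown above, and its header attribute values are invented for illustration.

```python
# Sketch: shred ring_buffer target_data XML with the standard library.
# SAMPLE is a trimmed copy of the wait_info example above; the header
# attribute values are invented for illustration.
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional

SAMPLE = """<RingBufferTarget eventsPerSec="0" processingTime="0"
  totalEventsProcessed="1" eventCount="1" droppedCount="0" memoryUsed="512">
<event name="wait_info" package="sqlos" id="48" version="1" timestamp="2010-12-03T15:29:00.578Z">
  <data name="wait_type"><type name="wait_types" package="sqlos" /><value>98</value><text>ASYNC_IO_COMPLETION</text></data>
  <data name="duration"><type name="uint64" package="package0" /><value>44598</value><text /></data>
  <action name="session_id" package="sqlserver"><type name="uint16" package="package0" /><value>87</value><text /></action>
</event>
</RingBufferTarget>"""


def target_header(root: ET.Element) -> Dict[str, int]:
    """The <RingBufferTarget> attributes (all numeric) as integers."""
    return {name: int(value) for name, value in root.attrib.items()}


def shred_events(root: ET.Element, event_name: Optional[str] = None) -> List[Dict[str, str]]:
    rows = []
    for event in root.findall("event"):
        if event_name is not None and event.get("name") != event_name:
            continue  # same effect as the XPath filter event[@name='...']
        row = {"event_name": event.get("name"), "timestamp": event.get("timestamp")}
        for node in event:
            if node.tag in ("data", "action"):
                # Map-backed columns carry <text>; for the rest <text /> is empty.
                row[node.get("name")] = node.findtext("text") or node.findtext("value")
        rows.append(row)
    return rows


if __name__ == "__main__":
    root = ET.fromstring(SAMPLE)
    print(target_header(root)["eventCount"])  # 1
    print(shred_events(root, "wait_info"))
```

All values come back as strings. Converting them to numbers is left to the caller, just as the T-SQL queries pick a type for each column in .value().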
One of the nuances of working with XML inside of SQL Server, especially with Extended Events, is that sometimes it is better for performance to use a XML variable to hold the XML data for shredding, rather than attempting to shred the XML directly from the DMV’s. I’ve never quite figured out why this is the case, but it tends to make a bigger impact on larger XML documents, specifically those in the 2MB+ size range. Since every server running SQL Server 2008, 2008R2, or Denali CTP1 has the system_health session running by default in it, I am going to use that event session to demonstrate how to query the information from the ring_buffer target. To get the Target data into an XML variable, we’ll need to query the target_data column of the sys.dm_xe_session_targets DMV and CAST the value returned to the XML data type. DECLARE @target_data XML; SELECT @target_data = CAST(target_data AS XML) FROM sys.dm_xe_sessions AS s JOIN sys.dm_xe_session_targets AS t ON t.event_session_address = s.address WHERE s.name = N'system_health'; With that variable, we can then parse out the Targets header information from the <RingBufferTarget> node attributes: SELECT @target_data.value('(RingBufferTarget/@eventsPerSec)[1]', 'int') AS eventsPerSec, @target_data.value('(RingBufferTarget/@processingTime)[1]', 'int') AS processingTime, @target_data.value('(RingBufferTarget/@totalEventsProcessed)[1]', 'int') AS totalEventsProcessed, @target_data.value('(RingBufferTarget/@eventCount)[1]', 'int') AS eventCount, @target_data.value('(RingBufferTarget/@droppedCount)[1]', 'int') AS droppedCount, @target_data.value('(RingBufferTarget/@memoryUsed)[1]', 'int') AS memoryUsed; We can also parse out the individual <event> data nodes from the Target data by using .nodes() method and specifying the XPath to the event nodes and then using .query() method to materialize each node returned by .nodes() as a separate XML document for output. 
SELECT n.query('.') AS event_data FROM @target_data.nodes('RingBufferTarget/event') AS q(n); We can also use an XPath filter in the .nodes() method to query specific Events only (Note: if your server hasn’t encountered waits that exceed the predicates for the system_health session, this query will not return results). SELECT n.query('.') AS event_data FROM @target_data.nodes('RingBufferTarget/event[@name=''wait_info'']') AS q(n); Building on this, we can shred the <event> nodes for the wait_info Events based on the Event definition in the Event Session to turn the XML data into a tabular output that is easier to read. SELECT n.value('(@name)[1]', 'varchar(50)') AS event_name, n.value('(@package)[1]', 'varchar(50)') AS package_name, n.value('(@id)[1]', 'int') AS id, n.value('(@version)[1]', 'int') AS version, DATEADD(hh, DATEDIFF(hh, GETUTCDATE(), CURRENT_TIMESTAMP), n.value('(@timestamp)[1]', 'datetime2')) AS [timestamp], n.value('(data[@name="wait_type"]/text)[1]', 'varchar(250)') as wait_type, n.value('(data[@name="opcode"]/text)[1]', 'varchar(250)') as opcode, n.value('(data[@name="duration"]/value)[1]', 'bigint') as duration, n.value('(data[@name="max_duration"]/value)[1]', 'bigint') as max_duration, n.value('(data[@name="total_duration"]/value)[1]', 'bigint') as total_duration, n.value('(data[@name="completed_count"]/value)[1]', 'bigint') as completed_count, n.value('(action[@name="callstack"]/value)[1]', 'varchar(max)') as callstack, n.value('(action[@name="session_id"]/value)[1]', 'int') as session_id, n.value('(action[@name="sql_text"]/value)[1]', 'varchar(max)') as sql_text FROM @target_data.nodes('RingBufferTarget/event[@name=''wait_info'']') AS q(n); Now we could do a lot more actionable work with this data by turning this query into a derived table, or changing it to be a SELECT INTO a temporary table that we query a number of other ways after shredding the XML information. 
I’ll leave the possibilities for how to consume this data after shredding the XML up to your imagination, and for another post in this series.

Considerations for Usage

While the ring_buffer target may seem like the ideal Target for short-term analysis, several considerations must be weighed before deciding whether it is the correct Target for an Event Session. The first is the number of Events that the Target can actually hold given its max_memory setting. If the Event Session is expected to generate a large quantity of Events, the ring_buffer will probably not meet your needs, depending on the Event definitions in the Event Session.

There is also a known issue with the ring_buffer Target returning XML through the sys.dm_xe_session_targets DMV. Bob Ward discusses it in his blog post You may not see the data you expect in Extended Event Ring Buffer Targets…. Essentially, the DMV can only return 4MB of materialized XML. This becomes a problem for a Target that is defined to retain 4MB of Event data in binary form, because that binary data can easily exceed 4MB once it is materialized as XML for the DMV to output. When this occurs, the DMV returns a malformed XML document, as detailed in the Connect item referenced in Bob’s blog post. According to the Connect item, this problem has been addressed in SQL Server 2008 Service Pack 2.

Adam Machanic also filed a slightly different Connect item about the ring_buffer target failing to return all of the Events captured. According to the feedback comments, that issue is also fixed in SQL Server 2008 Service Pack 2 and SQL Server 2008 R2 Cumulative Update 1.

Another consideration for the ring_buffer Target is that the information captured by an Event Session is memory resident only. If you are capturing critical Events that require persistence and the SQL Server instance crashes, the information captured by the target will not be available when the instance restarts. However, when you are analyzing a specific workload while the server is online and available, the ring_buffer can still be useful for capturing Events specific to that workload. To capture Events and keep them static without dropping the Event Session, remove the Events from the Event Session with ALTER EVENT SESSION and the DROP EVENT clause. To demonstrate this, we can create an Event Session that captures the error_reported Event.

```sql
-- Create an Event Session to capture Errors Reported
CREATE EVENT SESSION DemoPersistedEvents
ON SERVER
ADD EVENT sqlserver.error_reported
ADD TARGET package0.ring_buffer
WITH (MAX_DISPATCH_LATENCY = 1 SECONDS);
GO
-- Alter the Event Session and Start it.
ALTER EVENT SESSION DemoPersistedEvents
ON SERVER
STATE=START;
GO
-- SELECT from a non-existent table to create Event
SELECT * FROM master.schema_doesnt_exist.table_doesnt_exist;
GO
-- Drop the Event to halt Event collection
ALTER EVENT SESSION DemoPersistedEvents
ON SERVER
DROP EVENT sqlserver.error_reported;
GO
-- Wait for Event buffering to Target
WAITFOR DELAY '00:00:01';
GO
-- Create XML variable to hold Target Data
DECLARE @target_data XML;
SELECT @target_data = CAST(target_data AS XML)
FROM sys.dm_xe_sessions AS s
JOIN sys.dm_xe_session_targets AS t
    ON t.event_session_address = s.address
WHERE s.name = N'DemoPersistedEvents'
  AND t.target_name = N'ring_buffer';

-- Query XML variable to get the Target header information
SELECT
    @target_data.value('(RingBufferTarget/@eventsPerSec)[1]', 'int') AS eventsPerSec,
    @target_data.value('(RingBufferTarget/@processingTime)[1]', 'int') AS processingTime,
    @target_data.value('(RingBufferTarget/@totalEventsProcessed)[1]', 'int') AS totalEventsProcessed,
    @target_data.value('(RingBufferTarget/@eventCount)[1]', 'int') AS eventCount,
    @target_data.value('(RingBufferTarget/@droppedCount)[1]', 'int') AS droppedCount,
    @target_data.value('(RingBufferTarget/@memoryUsed)[1]', 'int') AS memoryUsed;

-- Shred each <event> node to get the Event Data
SELECT
    n.value('(@name)[1]', 'varchar(50)') AS event_name,
    n.value('(@package)[1]', 'varchar(50)') AS package_name,
    n.value('(@id)[1]', 'int') AS id,
    n.value('(@version)[1]', 'int') AS version,
    DATEADD(hh, DATEDIFF(hh, GETUTCDATE(), CURRENT_TIMESTAMP),
            n.value('(@timestamp)[1]', 'datetime2')) AS [timestamp],
    n.value('(data[@name="error"]/value)[1]', 'int') AS error,
    n.value('(data[@name="severity"]/value)[1]', 'int') AS severity,
    n.value('(data[@name="state"]/value)[1]', 'int') AS state,
    n.value('(data[@name="user_defined"]/value)[1]', 'varchar(5)') AS user_defined,
    n.value('(data[@name="message"]/value)[1]', 'varchar(max)') AS message
FROM @target_data.nodes('RingBufferTarget/event') AS q(n);
GO
-- Drop the Event Session to cleanup Demo
DROP EVENT SESSION DemoPersistedEvents
ON SERVER;
```

The ring_buffer Target may provide the functionality your implementation needs if two conditions hold: your requirements tolerate the loss of Events due to the ring_buffer's FIFO nature, and you have applied the patches that prevent sys.dm_xe_session_targets from returning invalid XML. It works well with a short-term Event Session, or with an Event Session whose Predicates minimize the number of Events that actually fire. In those cases the ring_buffer is a maintenance-free way to collect raw Event data for further analysis, provided the Events are dropped from the Event Session afterward so that unnecessary Events are not captured.
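The XQuery above shreds the ring_buffer XML inside SQL Server. As an offline illustration of the same shredding logic, here is a minimal Python sketch using xml.etree.ElementTree against a hand-written sample document. The sample is an assumption: it only uses the element and attribute names referenced by the T-SQL (RingBufferTarget, event, data/@name, value), its values are made up, and real target_data carries more attributes and elements.

```python
import xml.etree.ElementTree as ET

# Hand-written sample using only names the T-SQL queries reference; values are made up.
SAMPLE = """<RingBufferTarget eventsPerSec="0" processingTime="0" totalEventsProcessed="1"
                  eventCount="1" droppedCount="0" memoryUsed="512">
  <event name="error_reported" package="sqlserver">
    <data name="error"><value>208</value></data>
    <data name="severity"><value>16</value></data>
    <data name="state"><value>1</value></data>
    <data name="user_defined"><value>false</value></data>
    <data name="message"><value>Invalid object name.</value></data>
  </event>
</RingBufferTarget>"""


def data_value(event, name):
    """Text of <data name=...>/<value>, like '(data[@name="x"]/value)[1]' in XQuery."""
    node = event.find(f"data[@name='{name}']/value")
    return None if node is None else node.text


def shred_ring_buffer(xml_text):
    root = ET.fromstring(xml_text)
    # Header attributes, like the first SELECT against RingBufferTarget/@...
    header = {k: int(root.get(k)) for k in ("eventCount", "droppedCount", "memoryUsed")}
    rows = []
    # Same path as .nodes('RingBufferTarget/event'), relative to the root element.
    for ev in root.findall("event"):
        rows.append({
            "event_name": ev.get("name"),
            "package_name": ev.get("package"),
            "error": int(data_value(ev, "error")),
            "severity": int(data_value(ev, "severity")),
            "state": int(data_value(ev, "state")),
            "message": data_value(ev, "message"),
        })
    return header, rows


header, rows = shred_ring_buffer(SAMPLE)
print(header["eventCount"], rows[0]["event_name"], rows[0]["error"])
```

The `state` column reads `data[@name="state"]`. Each output column should point at the data element of the same name.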

The default max_memory value is 4MB for the ring_buffer
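The FIFO loss behind the 4MB default can be illustrated with a small model. This is a minimal Python sketch that assumes a simplified buffer evicting the oldest events once a byte budget is exceeded. The class and its byte accounting are hypothetical, since the real ring_buffer manages its memory internally. The sketch only shows why the oldest Events disappear first.

```python
from collections import deque


class RingBufferModel:
    """Hypothetical FIFO model: keeps only the newest events that fit in max_bytes."""

    def __init__(self, max_bytes):
        self.max_bytes = max_bytes
        self.events = deque()
        self.used = 0
        self.dropped = 0

    def add(self, payload: bytes):
        self.events.append(payload)
        self.used += len(payload)
        # Evict from the front (oldest first) until we are back under budget.
        while self.used > self.max_bytes:
            oldest = self.events.popleft()
            self.used -= len(oldest)
            self.dropped += 1


rb = RingBufferModel(max_bytes=10)
for i in range(5):
    rb.add(bytes([i]) * 4)  # five 4-byte events, 20 bytes in total
print(len(rb.events), rb.dropped, [e[0] for e in rb.events])
```

Only the last two events survive. This is why an unfiltered, high-volume Event Session can silently lose its earliest Events in this target.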

asynchronous_file_target: asynchronous file target

Targets Week – asynchronous_file_target

--http://www.sqlskills.com/blogs/jonathan/an-xevent-a-day-6-of-31-targets-week-asynchronous_file_target/

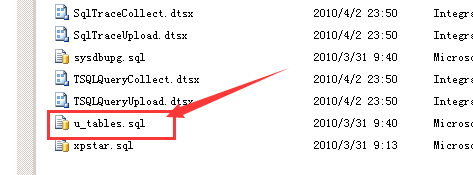

What is the asynchronous_file_target?

The asynchronous_file_target holds the raw Event data in a proprietary binary file format. The files persist beyond server restarts and can be handed to another person via FTP or email for remote, disconnected analysis of the events. Two types of files are associated with the asynchronous_file_target:

- the log files, which contain the Event data;
- the metadata file, which describes the Events contained in the log files, so that the log files and the Events and Actions within them can be parsed correctly.

Depending on the options configured for the asynchronous_file_target, a started Event Session may have multiple log files. Only one metadata file is created for the duration of that Event Session's collection. Each subsequent collection by the same Event Session (for example, stopping it and starting it again later) creates a new metadata file for that collection. These files exist as a set and must be kept together for the log files to be read.

Configuration Options

The asynchronous_file_target, like the ring_buffer, has configuration options that can be found in the sys.dm_xe_object_columns DMV.

```sql
-- Target Configurable Fields
SELECT
    oc.name AS column_name,
    oc.column_id,
    oc.type_name,
    oc.capabilities_desc,
    oc.description
FROM sys.dm_xe_packages AS p
JOIN sys.dm_xe_objects AS o
    ON p.guid = o.package_guid
JOIN sys.dm_xe_object_columns AS oc
    ON o.name = oc.object_name
   AND o.package_guid = oc.object_package_guid
WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
  AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
  AND o.object_type = 'target'
  AND o.name = 'asynchronous_file_target';
```

In SQL Server 2008, 2008R2, and SQL Server Denali CTP1, there are five configuration options for the asynchronous_file_target.
- filename specifies the path and name of the log files, and is required to add the asynchronous_file_target to an Event Session.
- max_file_size works like the SQL Trace maxfilesize option, limiting the size of each file before rollover occurs.
- max_rollover_files works like the SQL Trace maxrolloverfiles option, specifying how many rollover files to keep in the file system. Used together with max_file_size, it can prevent SQL Server from running out of disk space during Event collection.
- increment is similar to a database's AutoGrowth setting in SQL Server. It specifies the size in megabytes by which the log files grow, reducing the number of times a log file has to grow while Events are dispatched and buffered to the Target.
- metadatafile specifies the path and name of the metadata file for the target.

Notice that the only mandatory option for the file target is the filename for the log files. If the metadatafile option is not explicitly set, the asynchronous_file_target automatically names the metadata file after the filename option, using the same path and name with a .xem extension.

Understanding the Target Data Format

Like the ring_buffer, the asynchronous_file_target stores Event data in its raw format. Inside the log files, the Event data is kept in a compact binary format, which maximizes the number of Events the log files can hold. Unlike the ring_buffer target, however, the asynchronous_file_target is not queried through the sys.dm_xe_session_targets DMV. It is queried through the sys.fn_xe_file_target_read_file() DMF.
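The metadata-file defaulting rule described above (same path and name as the filename option, but with a .xem extension) is simple string manipulation. This is a minimal Python sketch that assumes the rule only swaps the extension of the last path component. The helper name is hypothetical and is not an Extended Events API.

```python
from pathlib import PureWindowsPath
from typing import Optional


def default_metadata_file(filename: str, metadatafile: Optional[str] = None) -> str:
    """Hypothetical helper: an explicit metadatafile wins; otherwise swap the extension to .xem."""
    if metadatafile:
        return metadatafile
    # Only the last component's suffix changes; dotted folder names are untouched.
    return str(PureWindowsPath(filename).with_suffix(".xem"))


print(default_metadata_file(r"D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\DemoPersistedEvents.xel"))
```

PureWindowsPath is used so the sketch handles backslash paths the same way on any operating system.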
The sys.fn_xe_file_target_read_file() DMF takes four input parameters:

- @path: the path, filename, and extension mask for the log files.
- @mdpath: the path, filename, and extension mask for the metadata file.
- @initial_file_name: the exact path and filename of a file to start reading from.
- @initial_offset: the offset inside that file from which to begin reading events. It is required whenever @initial_file_name is specified.

The DMF returns a single row for each Event contained in the log files being read. Each Event is materialized as an XML document in the event_data column, so it can be used for Event analysis. As with the ring_buffer Target, this XML is not schema bound. However, it has exactly the same format as an individual <event> node in the ring_buffer Target's output, so Events from either target can be parsed with very similar XQueries.

Querying/Parsing the Target Data

Since the asynchronous_file_target returns the Event data as XML, we need the same kind of XQuery work as with the ring_buffer target yesterday. After reading yesterday’s post, Adam Machanic (Blog|Twitter) pointed out on Twitter that the slow XML parsing is caused by an optimizer bug. His Extended Events Code Generator works around it with a derived table. Adam is absolutely correct. If you attended my Extended Events session at PASS this year, you’ll recall that querying the Target data in my demos had no performance issues. I used a very different parsing method in my PASS demos than I showed yesterday, and I already plan to cover that method in a later post in this series.
In the meantime, to see the gist of the workaround, take a look at the code output by Adam’s code generator. For simplicity, I am going to reuse yesterday’s demo as the basis for looking at the asynchronous_file_target. This also shows how similar the XQuery for the Event data is. The basic Event Session captures the error_reported Event, and triggers an error by running a SELECT against a non-existent table.

```sql
-- Create an Event Session to capture Errors Reported
CREATE EVENT SESSION DemoPersistedEvents
ON SERVER
ADD EVENT sqlserver.error_reported
ADD TARGET package0.ring_buffer,
ADD TARGET package0.asynchronous_file_target(
    SET filename='D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\DemoPersistedEvents.xel')
WITH (MAX_DISPATCH_LATENCY = 1 SECONDS);
GO
-- Alter the Event Session and Start it.
ALTER EVENT SESSION DemoPersistedEvents
ON SERVER
STATE=START;
GO
-- SELECT from a non-existent table to create Event
SELECT * FROM master.schema_doesnt_exist.table_doesnt_exist;
GO
-- Drop the Event to halt Event collection
ALTER EVENT SESSION DemoPersistedEvents
ON SERVER
DROP EVENT sqlserver.error_reported;
GO
```

To query our asynchronous_file_target, we first need the filename and metadatafile values for the files we want to read. If the Event Session is active and running, we can get this information from the Active Session DMVs.

```sql
SELECT
    soc.column_name,
    soc.column_value
FROM sys.dm_xe_sessions AS s
JOIN sys.dm_xe_session_object_columns AS soc
    ON s.address = soc.event_session_address
WHERE s.name = 'DemoPersistedEvents'
  AND soc.object_name = 'asynchronous_file_target';
```

Notice that the metadatafile option is NULL. We were lazy and didn’t explicitly define the metadata file in our Event Session, so now we have to work out its name before we can query the target data from the log files.
One way to find this information is to browse to the log file path that was specified, on the server itself. The Extended Events Engine has automatically created a metadata file with the same name as the log file, but with the extension .xem. The file names of both the log file and the metadata file have also changed from what was defined in the Event Session. The Engine appends _0_ and a long integer value that encodes the date and time the file was generated. This value appears to be a Windows FILETIME, the number of 100-nanosecond intervals since January 1, 1601. Subsequent files get a larger value, so you can easily sort the log files from oldest to newest or vice versa. To query the data in the log files, you have two options: provide the full filenames explicitly, or use wildcards in the names and let the engine find the matching files and begin reading them.
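The file-naming behavior above can be checked offline. The Python sketch below assumes the long integer in a name such as FileTargetDemo_0_129360796797990000.xel (the example file name used later in this post) is a Windows FILETIME, that is, 100-nanosecond ticks since 1601-01-01 UTC. Under that assumption, the example decodes to December 6, 2010. The sketch also mirrors the LEFT/REVERSE/CHARINDEX wildcard substitution that the TSQL in this post performs. Both helper names are hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone

# Windows FILETIME epoch (assumption: the suffix counts 100 ns ticks from here).
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def xel_file_timestamp(file_name: str) -> datetime:
    """Decode the _0_<n> suffix, assuming <n> is a FILETIME tick count."""
    match = re.search(r"_0_(\d+)\.xel$", file_name)
    if match is None:
        raise ValueError(f"unexpected file name: {file_name}")
    ticks = int(match.group(1))
    # One tick is 100 ns, so ten ticks make one microsecond.
    return FILETIME_EPOCH + timedelta(microseconds=ticks // 10)


def wildcard_pattern(path: str) -> str:
    """Replace the last '.' with '*', as the TSQL LEFT/REVERSE/CHARINDEX expression does."""
    stem, dot, ext = path.rpartition(".")
    return f"{stem}*{ext}" if dot else path


name = r"D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\FileTargetDemo_0_129360796797990000.xel"
print(xel_file_timestamp(name))
print(wildcard_pattern(r"D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\DemoPersistedEvents.xel"))
```

The wildcard form (DemoPersistedEvents*xel) matches both the name defined in the Event Session and the _0_<n> names that the engine actually writes.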
```sql
DECLARE @path nvarchar(260), @mdpath nvarchar(260);

-- Get the log file name and substitute * wildcard in
SELECT @path = LEFT(column_value, LEN(column_value) - CHARINDEX('.', REVERSE(column_value)))
             + '*'
             + RIGHT(column_value, CHARINDEX('.', REVERSE(column_value)) - 1)
FROM sys.dm_xe_sessions AS s
JOIN sys.dm_xe_session_object_columns AS soc
    ON s.address = soc.event_session_address
WHERE s.name = 'DemoPersistedEvents'
  AND soc.object_name = 'asynchronous_file_target'
  AND soc.column_name = 'filename';

-- Get the metadata file name and substitute * wildcard in
SELECT @mdpath = LEFT(column_value, LEN(column_value) - CHARINDEX('.', REVERSE(column_value)))
               + '*'
               + RIGHT(column_value, CHARINDEX('.', REVERSE(column_value)) - 1)
FROM sys.dm_xe_sessions AS s
JOIN sys.dm_xe_session_object_columns AS soc
    ON s.address = soc.event_session_address
WHERE s.name = 'DemoPersistedEvents'
  AND soc.object_name = 'asynchronous_file_target'
  AND soc.column_name = 'metadatafile';

-- If the metadata filename is NULL, derive it from the log file name with the xem extension
SELECT @mdpath = ISNULL(@mdpath,
                        LEFT(@path, LEN(@path) - CHARINDEX('*', REVERSE(@path))) + '*xem');

-- Query the Event data from the Target.
SELECT module_guid, package_guid, object_name, event_data, file_name, file_offset
FROM sys.fn_xe_file_target_read_file(@path, @mdpath, NULL, NULL);
```

The DMF returns the following columns:

- module_guid, package_guid, and object_name for the Event;
- event_data, an XML document returned as a string, which must be converted to XML with CAST or CONVERT before parsing;
- file_name, the log file the Event was read from;
- file_offset, the Event's offset within that file.

With a CAST to XML, a CROSS APPLY of the <event> nodes, and the same XQueries as in yesterday’s post, we can query the Event data from the asynchronous_file_target.

```sql
-- Query the Event data from the Target.
-- (Run in the same batch as the DECLARE above so that @path and @mdpath are in scope.)
SELECT
    n.value('(@name)[1]', 'varchar(50)') AS event_name,
    n.value('(@package)[1]', 'varchar(50)') AS package_name,
    n.value('(@id)[1]', 'int') AS id,
    n.value('(@version)[1]', 'int') AS version,
    DATEADD(hh, DATEDIFF(hh, GETUTCDATE(), CURRENT_TIMESTAMP),
            n.value('(@timestamp)[1]', 'datetime2')) AS [timestamp],
    n.value('(data[@name="error"]/value)[1]', 'int') AS error,
    n.value('(data[@name="severity"]/value)[1]', 'int') AS severity,
    n.value('(data[@name="state"]/value)[1]', 'int') AS state,
    n.value('(data[@name="user_defined"]/value)[1]', 'varchar(5)') AS user_defined,
    n.value('(data[@name="message"]/value)[1]', 'varchar(max)') AS message
FROM (SELECT CAST(event_data AS XML) AS event_data
      FROM sys.fn_xe_file_target_read_file(@path, @mdpath, NULL, NULL)
     ) AS tab
CROSS APPLY event_data.nodes('event') AS q(n);
```

Like the ring_buffer Target, the asynchronous_file_target also has an entry in sys.dm_xe_session_targets. Instead of the Event data, that entry returns information about the target's operation.

```sql
SELECT
    target_data.value('(FileTarget/@truncated)[1]', 'int') AS truncated,
    target_data.value('(FileTarget/Buffers/@logged)[1]', 'int') AS logged,
    target_data.value('(FileTarget/Buffers/@dropped)[1]', 'int') AS dropped
FROM (SELECT CAST(target_data AS XML) AS target_data
      FROM sys.dm_xe_sessions AS s
      JOIN sys.dm_xe_session_targets AS t
          ON t.event_session_address = s.address
      WHERE s.name = 'DemoPersistedEvents'
        AND t.target_name = 'asynchronous_file_target'
     ) AS tab;
```

The file_name and file_offset columns in the sys.fn_xe_file_target_read_file output can be used to perform differential reads from the asynchronous_file_target. To demonstrate this, we can create an Event Session that captures a lot of Events in a short period of time. (Note: I wouldn’t create an unfiltered Event Session on the starting and completed events like this on a production server without first evaluating its potential impact. While this should be safe, if it causes you a problem, it's your server, not mine.)

```sql
IF EXISTS(SELECT * FROM sys.server_event_sessions WHERE name='FileTargetDemo')
    DROP EVENT SESSION [FileTargetDemo] ON SERVER;
CREATE EVENT SESSION [FileTargetDemo]
ON SERVER
ADD EVENT sqlserver.sql_statement_starting,
ADD EVENT sqlserver.sql_statement_completed,
ADD EVENT sqlserver.sp_statement_starting,
ADD EVENT sqlserver.sp_statement_completed,
ADD EVENT sqlserver.rpc_starting,
ADD EVENT sqlserver.rpc_completed,
ADD EVENT sqlserver.module_start,
ADD EVENT sqlserver.module_end
ADD TARGET package0.asynchronous_file_target(
    SET filename='D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\FileTargetDemo.xel',
        metadatafile='D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\FileTargetDemo.xem',
        max_file_size = 5,
        max_rollover_files = 5)
WITH (MAX_DISPATCH_LATENCY = 5 SECONDS);
GO
-- Start the Event Collection
ALTER EVENT SESSION [FileTargetDemo]
ON SERVER
STATE=START;
GO
-- Take a pause and allow events to be generated

-- Query the target data from the files.
SELECT object_name, CAST(event_data AS xml) AS event_data, file_name, file_offset
FROM sys.fn_xe_file_target_read_file(
    'D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\FileTargetDemo*xel',
    'D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\FileTargetDemo*xem',
    NULL, NULL);
```

Scroll through the output to the point where the file_offset value changes. Note the file_name and file_offset of the last event in the first offset block. Then requery the target, passing that file_name and file_offset into the @initial_file_name and @initial_offset parameters of sys.fn_xe_file_target_read_file. The DMF then begins reading from that offset forward.

```sql
-- Query the target data from the files, starting from a specific file and offset.
SELECT object_name, CAST(event_data AS xml) AS event_data, file_name, file_offset
FROM sys.fn_xe_file_target_read_file(
    'D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\FileTargetDemo*xel',
    'D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\FileTargetDemo*xem',
    'D:\SQLData\MSSQL10.MSSQLSERVER\MSSQL\Log\FileTargetDemo_0_129360796797990000.xel',
    0);
```

If you’ve run the demos in this blog post to this point, don’t forget to clean up the system.

```sql
IF EXISTS(SELECT * FROM sys.server_event_sessions WHERE name='FileTargetDemo')
    DROP EVENT SESSION [FileTargetDemo] ON SERVER;
GO
IF EXISTS(SELECT * FROM sys.server_event_sessions WHERE name='DemoPersistedEvents')
    DROP EVENT SESSION [DemoPersistedEvents] ON SERVER;
GO
```

Considerations for Usage

The asynchronous_file_target will probably be the preferred target for most people doing long-term analysis of collected Events. It also suits short-term analysis with an Event Session expected to generate many Events, when losing Events to the ring_buffer's FIFO behavior is not acceptable. However, there are two considerations with this target:

- The log files and the metadata file are a set and must be kept together. If you send someone a log file without its metadata file, they won’t be able to read the information in the log file.
- As of the date this blog post was published, the only way to read the log files is to copy them to a system running SQL Server 2008 or 2008R2 and query them with the sys.fn_xe_file_target_read_file() DMF using TSQL.
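The differential-read technique above works by remembering the last file_name and file_offset you processed and restarting from there. It can be sketched independently of SQL Server. This minimal Python sketch assumes rows shaped like the DMF output, (file_name, file_offset, event_data), where several events can share one offset (one buffer), and models resuming strictly after a saved position. The rows and the function name are hypothetical. The sketch approximates the idea, not the DMF's exact resume semantics.

```python
from typing import Iterable, List, Optional, Tuple

Row = Tuple[str, int, str]  # (file_name, file_offset, event_data)


def read_since(rows: Iterable[Row], last: Optional[Tuple[str, int]]) -> List[Row]:
    """Return rows positioned after `last` (file_name, file_offset).

    Assumes file names sort chronologically (true when they differ only in an
    equal-length timestamp suffix) and offsets increase within a file.
    """
    if last is None:
        return list(rows)
    return [r for r in rows if (r[0], r[1]) > last]


rows = [
    ("Demo_0_100.xel", 0, "e1"),
    ("Demo_0_100.xel", 0, "e2"),     # same buffer offset as e1
    ("Demo_0_100.xel", 4096, "e3"),
    ("Demo_0_200.xel", 0, "e4"),     # next rollover file
]
first = read_since(rows, None)
checkpoint = (first[1][0], first[1][1])  # last row of the first offset block
print([r[2] for r in read_since(rows, checkpoint)])
```

Persisting the checkpoint between polls lets a collector read only the Events written since the previous read.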

```sql
-- Use sys.dm_xe_session_targets to get the data held in the ring_buffer
DECLARE @target_data XML;
SELECT @target_data = CAST(t.target_data AS XML)
FROM sys.dm_xe_sessions AS s
JOIN sys.dm_xe_session_targets AS t
    ON t.event_session_address = s.address
WHERE s.name = N'DemoPersistedEvents'
  AND t.target_name = N'ring_buffer';

-- For the asynchronous file target, read the .xel file data with the sys.fn_xe_file_target_read_file() DMF
```

Target: bucketizer (in memory)

Targets Week – bucketizers

--http://www.sqlskills.com/blogs/jonathan/an-xevent-a-day-7-of-31-targets-week-bucketizers/

The bucketizer groups Events into buckets as the target processes them, based on the Event data and the Target's configuration. Extended Events has two bucketizer targets: the synchronous_bucketizer and the asynchronous_bucketizer. They differ only in how the Event data is processed:

- the synchronous_bucketizer processes it on the connection that generated the Event;
- the asynchronous_bucketizer processes it after it is dispatched to the target, which happens based on the Event Session's MAX_DISPATCH_LATENCY or when the dispatch buffer fills.

Since the two bucketizers are otherwise identical, the rest of this post refers only to the asynchronous_bucketizer.

Like the ring_buffer, the bucketizers are memory-resident targets and only contain grouped Event data while the Event Session is active. When the Event Session is stopped, the memory buffers allocated to the bucketizer target are freed and all of its data disappears. The bucketizer targets simplify troubleshooting by identifying which events occur most often, so that later Event collection can be more focused. That further analysis could use the ring_buffer or the asynchronous_file_target to look at the actual Event data. Alternatively, you could change the bucketizer Target's configuration to group event occurrences by different criteria.

Configuration Options

Like most of the targets, the asynchronous_bucketizer has configuration options that can be found in the sys.dm_xe_object_columns DMV.
```sql
-- Target Configurable Fields
SELECT
    oc.name AS column_name,
    oc.column_id,
    oc.type_name,
    oc.capabilities_desc,
    oc.description
FROM sys.dm_xe_packages AS p
JOIN sys.dm_xe_objects AS o
    ON p.guid = o.package_guid
JOIN sys.dm_xe_object_columns AS oc
    ON o.name = oc.object_name
   AND o.package_guid = oc.object_package_guid
WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
  AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
  AND o.object_type = 'target'
  AND o.name = 'asynchronous_bucketizer';
```

In SQL Server 2008, 2008R2, and SQL Server Denali CTP1, the asynchronous_bucketizer Target has four configuration options:

- slots sets the maximum number of buckets the target will collect. Once this number is reached, new events that do not belong to an existing bucket are dropped by the target and not grouped.
- filtering_event_name sets the name of the specific Event in the Event Session to filter on.
- source_type specifies whether the bucketing source is part of the Event data or an Action added to the Events in the Event Session.
- source specifies the source used to generate the buckets for grouping in the target.

As the configurable-fields query shows, source is the only required option for the asynchronous_bucketizer Target. However, when the source is an Action, source_type is also required to mark it as an Action. When the source is an Event data element, only the data element name (also known as a column name) needs to be provided. When the source is an Action, the package name must be given with the Action name, in the format packagename.actionname. Likewise, a filtering_event_name must include the package name, in the format packagename.eventname.
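The grouping, slots, and filtering_event_name behavior described above can be modeled in a few lines. This is a minimal Python sketch that assumes a bucketizer counting events per source value and dropping events for new values once `slots` distinct buckets exist. The class is hypothetical, the event data is made up, and the real target's internal storage differs.

```python
class BucketizerModel:
    """Hypothetical model of grouping by one source value with a fixed slot limit."""

    def __init__(self, source, slots, filtering_event_name=None):
        self.source = source
        self.slots = slots
        self.filtering_event_name = filtering_event_name
        self.buckets = {}   # source value -> count (dicts keep insertion order)
        self.dropped = 0

    def process(self, event_name, fields):
        # When filtering_event_name is set, only that Event is bucketed.
        if self.filtering_event_name and event_name != self.filtering_event_name:
            return
        key = fields[self.source]
        if key in self.buckets:
            self.buckets[key] += 1
        elif len(self.buckets) < self.slots:
            self.buckets[key] = 1
        else:
            self.dropped += 1  # no free slot for a new bucket


b = BucketizerModel(source="sqlserver.database_id", slots=2)
for db in [5, 5, 7, 9, 5, 7]:
    b.process("sqlserver.sql_statement_starting", {"sqlserver.database_id": db})
print(b.buckets, b.dropped)
```

Database 9 arrives after both slots are taken, so its event is dropped rather than grouped. This is why slots should be sized for the expected number of distinct source values.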
Understanding the Target Data Format

Like the other Targets, the bucketizer Targets output their data as XML. The XML is not schema bound, but it has a predictable format. Inside the Extended Events Engine, the bucketing data is kept in a binary format that minimizes the memory needed for the Target's buffers. It is materialized into an XML document when the Target information is queried through the sys.dm_xe_session_targets DMV, so it can be used for analysis. The XML document has the following structure:

- A parent <BucketizerTarget> node whose attributes describe the Target's operation since the Event Session started, including the number of truncated Events and the maximum number of buckets in the Target.
- One <Slot> node per bucket group, with two attributes: count, the number of events that have occurred, and trunc, the number of bytes that have been truncated.
- Inside each <Slot>, a <value> node holding the source value the bucket belongs to.

A simplified representation of the XML document for the asynchronous_bucketizer target is:

```xml
<BucketizerTarget truncated="" buckets="">
  <Slot count="" trunc="">
    <value></value>
  </Slot>
</BucketizerTarget>
```

Querying/Parsing the Target Data

The asynchronous_bucketizer target's simple XML output makes it relatively easy to query compared to the targets we’ve already looked at this week. However, unlike the ring_buffer and asynchronous_file_target, the asynchronous_bucketizer cannot be parsed with Adam Machanic’s Extended Events Code Generator. Because the XML is simple and has a standard shape, no specialized code is really needed to turn it into an easily usable table.
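Because every bucket is a <Slot> with a count attribute and a <value> child, shredding the document is a single loop. Here is a minimal Python sketch using xml.etree.ElementTree. It uses a hand-written sample with made-up numbers that follows the simplified format above, and returns rows sorted by count so the most frequent buckets come first.

```python
import xml.etree.ElementTree as ET

# Hand-written sample in the simplified <BucketizerTarget> format; values are made up.
SAMPLE = """<BucketizerTarget truncated="0" buckets="256">
  <Slot count="12" trunc="0"><value>5</value></Slot>
  <Slot count="40" trunc="0"><value>7</value></Slot>
  <Slot count="3" trunc="0"><value>1</value></Slot>
</BucketizerTarget>"""


def shred_buckets(xml_text):
    """Mirror of CROSS APPLY target_data.nodes('BucketizerTarget/Slot')."""
    root = ET.fromstring(xml_text)
    rows = [
        {
            "value": slot.findtext("value"),
            "count": int(slot.get("count")),
            "trunc": int(slot.get("trunc")),
        }
        for slot in root.findall("Slot")
    ]
    # Most frequent buckets first, the usual starting point for troubleshooting.
    return sorted(rows, key=lambda r: r["count"], reverse=True)


for row in shred_buckets(SAMPLE):
    print(row["value"], row["count"])
```

The <value> text is kept as a string here. Interpreting it (as a database_id, a map_key, and so on) depends on what the session bucketed on.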
The TargetDataViewer in the Extended Events SSMS Addin for SQL Server 2008 will shred the XML. For this particular target, however, it isn't worth using: the XQuery is very simple, and TSQL lets you do much more, depending on the Event Session you create. To demonstrate the asynchronous_bucketizer, we’ll look at a couple of examples. The first tracks recompiles by database_id to find the databases with the most recompiles.

```sql
-- Create an Event Session to Track Recompiles
IF EXISTS(SELECT * FROM sys.server_event_sessions WHERE name='BucketizerTargetDemoRecompiles')
    DROP EVENT SESSION [BucketizerTargetDemoRecompiles] ON SERVER;
CREATE EVENT SESSION [BucketizerTargetDemoRecompiles]
ON SERVER
ADD EVENT sqlserver.sql_statement_starting
(   ACTION (sqlserver.database_id) -- database_id to bucket on
    WHERE (state=1) -- recompile state from dm_xe_map_values
),
ADD EVENT sqlserver.sp_statement_starting
(   ACTION (sqlserver.database_id) -- database_id to bucket on
    WHERE (state=1) -- recompile state from dm_xe_map_values
)
ADD TARGET package0.asynchronous_bucketizer
(   SET source_type=1, -- specifies bucketing on Action
        source='sqlserver.database_id' -- Action to bucket on
)
WITH (MAX_DISPATCH_LATENCY = 5 SECONDS);
GO
ALTER EVENT SESSION [BucketizerTargetDemoRecompiles]
ON SERVER
STATE=START;
```

The session above does three things:

- collects the sql_statement_starting and sp_statement_starting Events;
- adds the database_id Action to them so that we can bucket on it;
- filters them so they only fire when the Event's state matches the Recompile map_key in sys.dm_xe_map_values.

If the test server doesn’t have a high recompile rate, an easy way to trigger recompiles is to update the statistics on the tables in a database.

```sql
EXECUTE sp_MSforeachtable 'UPDATE STATISTICS ?';
```
To view the bucketized data, we query sys.dm_xe_session_targets for our session and target. A derived table uses CAST to convert target_data to XML, and a CROSS APPLY of the .nodes() method splits the document on the <Slot> nodes.

```sql
SELECT
    DB_NAME(n.value('(value)[1]', 'int')) AS DatabaseName,
    n.value('(@count)[1]', 'int') AS EventCount,
    n.value('(@trunc)[1]', 'int') AS EventsTrunc
FROM (SELECT CAST(target_data AS XML) AS target_data
      FROM sys.dm_xe_sessions AS s
      JOIN sys.dm_xe_session_targets AS t
          ON s.address = t.event_session_address
      WHERE s.name = 'BucketizerTargetDemoRecompiles'
        AND t.target_name = 'asynchronous_bucketizer') AS tab
CROSS APPLY target_data.nodes('BucketizerTarget/Slot') AS q(n);
```

Once the <Slot> nodes are split, pulling out the <value> node and the attributes is very simple. Since we bucketed on database_id, DB_NAME() returns the database name for the database_id in each <value> node.

Considerations for Usage

The bucketizer targets are great for simplifying the analysis of Event data to decide how best to proceed with troubleshooting. However, SQL Server 2008 and 2008R2 have a bug that produces incorrect bucketizer output when bucketing on the wait_type data element of the wait_info event. This was fixed in SQL Server 2008 Service Pack 2 (http://support.microsoft.com/kb/2285068), and it is not a problem in SQL Server Denali CTP1. As of this writing, it has not yet been corrected in SQL Server 2008 R2, at least in the CUs I have tested. There may be a newer CU that I have missed, but I didn’t find one in a search.
To demonstrate this problem, the following Event Session can be used:

```sql
IF EXISTS(SELECT * FROM sys.server_event_sessions WHERE name='BucketizerTargetDemoWaits')
    DROP EVENT SESSION [BucketizerTargetDemoWaits] ON SERVER;
CREATE EVENT SESSION [BucketizerTargetDemoWaits]
ON SERVER
ADD EVENT sqlos.wait_info
(   ACTION (sqlserver.database_id)
    WHERE (duration > 0))
ADD TARGET package0.asynchronous_bucketizer(
    SET filtering_event_name='sqlos.wait_info',
        source_type=0,
        source='wait_type')
WITH (MAX_DISPATCH_LATENCY = 5 SECONDS);
GO
ALTER EVENT SESSION [BucketizerTargetDemoWaits]
ON SERVER
STATE=START;
```

On SQL Server 2008 Service Pack 2 and SQL Server Denali CTP1, the above Event Session returns valid map_key values for the wait_types Map in sys.dm_xe_map_values. On SQL Server 2008 RTM and SP1, and on SQL Server 2008 R2, the <value> node contains erroneous information. To query the bucketed waits from the target, use the following query:

```sql
SELECT
    mv.map_value AS WaitType,
    n.value('(@count)[1]', 'int') AS EventCount,
    n.value('(@trunc)[1]', 'int') AS EventsTrunc,
    n.value('(value)[1]', 'int') AS MapKey
FROM (SELECT CAST(target_data AS XML) AS target_data
      FROM sys.dm_xe_sessions AS s
      JOIN sys.dm_xe_session_targets AS t
          ON s.address = t.event_session_address
      WHERE s.name = 'BucketizerTargetDemoWaits'
        AND t.target_name = 'asynchronous_bucketizer') AS tab
CROSS APPLY target_data.nodes('BucketizerTarget/Slot') AS q(n)
JOIN sys.dm_xe_map_values AS mv
    ON mv.map_key = n.value('(value)[1]', 'int')
WHERE mv.name = 'wait_types';
```
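The query above joins each bucket's <value> (a map_key) to sys.dm_xe_map_values with an inner join, which silently drops keys that have no map entry. Because the bug shows up as erroneous <value> contents, one heuristic is to report unmatched keys instead of dropping them. An erroneous value can still collide with a real key, so this only catches some cases. Here is a minimal Python sketch of that join. The map rows and bucket output are hypothetical stand-ins, not real wait_types map_key/map_value pairs.

```python
def join_wait_types(slots, map_rows):
    """Inner-join bucket keys to map values, and also report keys with no match.

    slots: list of (map_key, count); map_rows: dict of map_key -> map_value.
    """
    matched, unmatched = [], []
    for key, count in slots:
        if key in map_rows:
            matched.append((map_rows[key], count, key))
        else:
            unmatched.append(key)  # the TSQL INNER JOIN would silently drop these
    return matched, unmatched


# Hypothetical map rows and bucket output, for illustration only.
wait_types = {1: "WAIT_TYPE_A", 2: "WAIT_TYPE_B"}
slots = [(1, 30), (2, 5), (987654, 2)]
matched, unmatched = join_wait_types(slots, wait_types)
print(matched, unmatched)
```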

Update the statistics on every table so that all SQL statements recompile

EXECUTE sp_MSforeachtable 'UPDATE STATISTICS ?'

synchronous_event_counter: synchronous event counter (in memory)

Targets Week – synchronous_event_counter

--http://www.sqlskills.com/blogs/jonathan/an-xevent-a-day-8-of-31-targets-week-synchronous_event_counter/

Counts how many times each event fires; the counts are kept in memory

Yesterday’s post, Targets Week – Bucketizers, looked at the bucketizer Targets in Extended Events and how their output can simplify analysis and make it more targeted. Today’s post is fairly short compared to the previous ones. It looks at the synchronous_event_counter target, which can be used to test the impact of an Event Session without incurring the cost of Event collection.

What is the synchronous_event_counter?

Simply put, the synchronous_event_counter is a Target that counts the number of Events that fire for a given Event Session. You can use it to test whether the Predicates defined on an Event Session's Events filter as much as expected. It avoids full Event collection with one of the raw Event data targets, such as the ring_buffer or asynchronous_file_target. The Target is synchronous, but because it only counts how many times each Event fires, its impact is minimal compared to the other synchronous targets. Like the ring_buffer and bucketizer, the synchronous_event_counter Target is memory resident and holds its data in memory while the Event Session is active on the SQL Server. When the Event Session is stopped, the memory buffers allocated to the target are freed and any information in it is lost.

Configuration Options

There are no configuration options for the synchronous_event_counter Target (see, I said this was going to be a short post comparatively).
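The counting-only behavior described above can be modeled to show how a Predicate changes the count without any event payload being kept. This is a minimal Python sketch. It assumes made-up (package, event, fields) tuples and a predicate like WHERE (duration > 0) applied only to wait_info, as in the CounterTargetDemo session in this post. The function is hypothetical and says nothing about the target's real overhead.

```python
from collections import Counter


def count_events(stream, predicates):
    """Count (package, event) pairs whose predicate passes; keep nothing else."""
    counts = Counter()
    for package, event, fields in stream:
        predicate = predicates.get(event, lambda f: True)  # no predicate: always fires
        if predicate(fields):
            counts[(package, event)] += 1
    return counts


predicates = {"wait_info": lambda f: f["duration"] > 0}  # like WHERE (duration > 0)
stream = [
    ("sqlos", "wait_info", {"duration": 0}),
    ("sqlos", "wait_info", {"duration": 3}),
    ("sqlserver", "sql_statement_starting", {}),
    ("sqlos", "wait_info", {"duration": 1}),
]
print(count_events(stream, predicates))
```

Comparing counts with and without a predicate shows how much filtering the Predicate achieves before any raw Event data is collected.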
```sql
-- Target Configurable Fields
SELECT oc.name AS column_name,
       oc.column_id,
       oc.type_name,
       oc.capabilities_desc,
       oc.description
FROM sys.dm_xe_packages AS p
JOIN sys.dm_xe_objects AS o
  ON p.guid = o.package_guid
JOIN sys.dm_xe_object_columns AS oc
  ON o.name = oc.object_name
 AND o.package_guid = oc.object_package_guid
WHERE (p.capabilities IS NULL OR p.capabilities & 1 = 0)
  AND (o.capabilities IS NULL OR o.capabilities & 1 = 0)
  AND o.object_type = 'target'
  AND o.name = 'synchronous_event_counter';
```

[Figure: result of the Target Configurable Fields query for synchronous_event_counter]

Understanding the Target Data Format

Like the ring_buffer and bucketizer Targets, the synchronous_event_counter Target exposes its information through the sys.dm_xe_session_targets DMV, returning the Target data as XML that is not schema-bound but follows a standardized format. As with the bucketizer Targets, the XML document is very simple. The root node is `<CounterTarget>`, which has a child `<Packages>` node; that node has one `<Package>` child per package, identified by its @name attribute. Each `<Package>` node contains one or more `<Event>` nodes, one for each Event from that package defined in the Event Session. Each `<Event>` node has two attributes: the @name of the Event and the @count of its occurrences since the Event Session started. A simplified representation of the XML output by the synchronous_event_counter Target is below:

```xml
<CounterTarget truncated="">
  <Packages>
    <Package name="">
      <Event name="" count="" />
    </Package>
  </Packages>
</CounterTarget>
```

Querying/Parsing the Target Data

Like the other memory-resident Targets in Extended Events, the synchronous_event_counter Target data is only exposed by querying the sys.dm_xe_session_targets DMV.
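Because the format is so small, the shredding logic can be tried without a running session. The sketch below assigns a hand-written document in the `<CounterTarget>` format to an xml variable and applies the nodes()/value() pattern used against sys.dm_xe_session_targets. The package and event names come from this post; the counts are made-up placeholders, not real output.

```sql
-- Hand-written sample in the CounterTarget format (counts are placeholders)
DECLARE @target_data xml;
SET @target_data = N'
<CounterTarget truncated="0">
  <Packages>
    <Package name="sqlserver">
      <Event name="sql_statement_starting" count="10" />
    </Package>
    <Package name="sqlos">
      <Event name="wait_info" count="25" />
    </Package>
  </Packages>
</CounterTarget>';

-- One row per <Event>; the package name is read from the parent <Package> node
SELECT n.value('(../@name)[1]', 'varchar(50)') AS PackageName,
       n.value('(@name)[1]', 'varchar(50)')    AS EventName,
       n.value('(@count)[1]', 'int')           AS EventCount
FROM @target_data.nodes('CounterTarget/Packages/Package/Event') AS q(n);
```

Wrapping each path in `( )[1]` guarantees value() receives a singleton, which it requires.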
The following example demonstrates how the synchronous_event_counter can be used to test the number of Events that an Event Session will generate:

```sql
-- Create an Event Session that counts statement starts and waits
IF EXISTS (SELECT * FROM sys.server_event_sessions WHERE name = 'CounterTargetDemo')
    DROP EVENT SESSION [CounterTargetDemo] ON SERVER;
CREATE EVENT SESSION [CounterTargetDemo] ON SERVER
ADD EVENT sqlserver.sql_statement_starting,
ADD EVENT sqlos.wait_info
(
    WHERE (duration > 0)
)
ADD TARGET package0.synchronous_event_counter;
GO

-- Start the Event Session
ALTER EVENT SESSION [CounterTargetDemo] ON SERVER STATE = START;
GO

-- Wait for Events to be generated, then query the Target
SELECT n.value('(../@name)[1]', 'varchar(50)') AS PackageName,
       n.value('(@name)[1]', 'varchar(50)') AS EventName,
       n.value('(@count)[1]', 'int') AS Occurrence
FROM (SELECT CAST(target_data AS XML) AS target_data
      FROM sys.dm_xe_sessions AS s
      JOIN sys.dm_xe_session_targets AS t
        ON t.event_session_address = s.address
      WHERE s.name = 'CounterTargetDemo'
        AND t.target_name = 'synchronous_event_counter') AS tab
CROSS APPLY target_data.nodes('CounterTarget/Packages/Package/Event') AS q(n);
GO

-- Drop the Event Session
IF EXISTS (SELECT * FROM sys.server_event_sessions WHERE name = 'CounterTargetDemo')
    DROP EVENT SESSION [CounterTargetDemo] ON SERVER;
```

This session, on one of my test servers, generated the following output while running for less than five seconds:

[Figure: synchronous_event_counter query output]

Based on the number of Events fired, you may decide that the session's Predicates need to filter the Events further. Alternatively, if the Predicates are filtering the Events as intended, the counts at least show that looking at the raw Event data at this volume will require the asynchronous_file_target.
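If the counts show that the Predicates are too loose, the session can be adjusted and measured again before the final DROP step. The sketch below is an assumption of how that might look, not code from the original post: it stops CounterTargetDemo, replaces wait_info with a more selective Predicate, and restarts the session. The threshold of 100 is arbitrary and assumes wait_info reports duration in milliseconds.

```sql
-- Stop the session so its Event definitions can be changed
ALTER EVENT SESSION [CounterTargetDemo] ON SERVER STATE = STOP;
GO
-- Replace wait_info with a more selective Predicate (threshold is illustrative)
ALTER EVENT SESSION [CounterTargetDemo] ON SERVER
DROP EVENT sqlos.wait_info;
GO
ALTER EVENT SESSION [CounterTargetDemo] ON SERVER
ADD EVENT sqlos.wait_info
(
    WHERE (duration > 100)
);
GO
-- Restart; the stopped session's counts were freed, so counting begins from zero
ALTER EVENT SESSION [CounterTargetDemo] ON SERVER STATE = START;
GO
```

After the restart, rerunning the Target query shows whether the new Predicate brings the Event volume down to an acceptable level.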
Considerations for Usage

The only real consideration associated with the synchronous_event_counter Target is that it is a synchronous Target. However, since it only counts the occurrences of the Events defined in the Event Session and does not buffer the data for dispatch, its impact is generally not a concern.

Target: pair_matching (in memory)

Targets Week – pair_matching

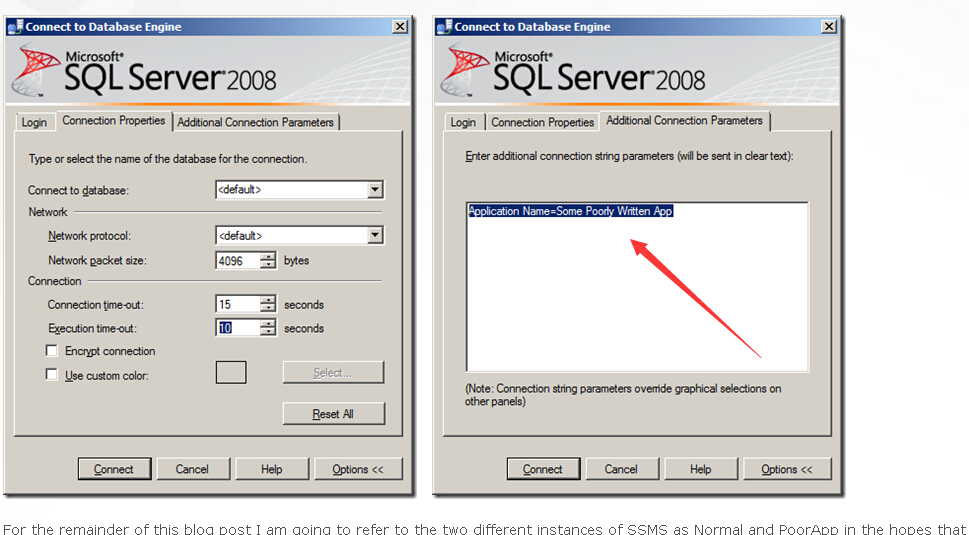

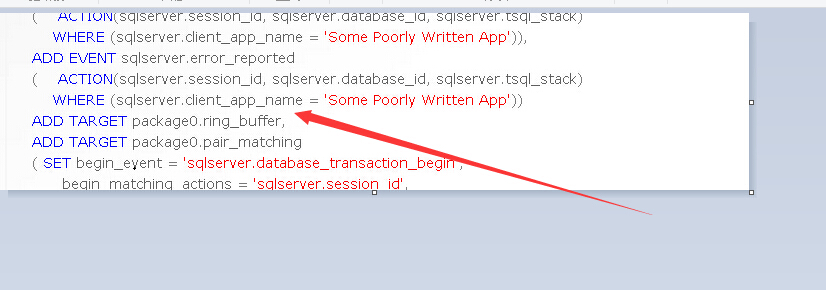

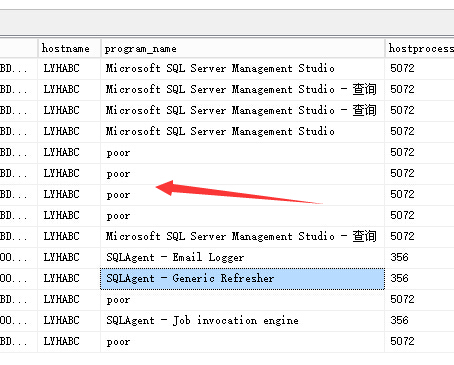

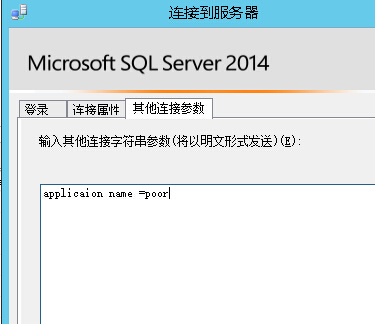

--http://www.sqlskills.com/blogs/jonathan/an-xevent-a-day-9-of-31-targets-week-pair_matching/