[Big Data Series] Data Processing with MapReduce: SequenceFile Serialized Files

SequenceFile provides a persistent, binary data structure for key-value pairs.

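1. Plain-text (txt) format: a sequence of records, one per line

2. SequenceFile

Key-value format: a sequence of records, each one a key-value pair, similar to the entries of a map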

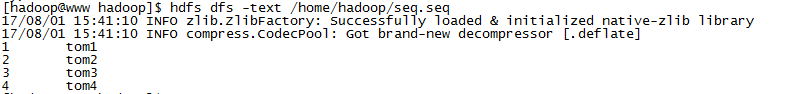
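To make the contrast with a plain-text file concrete, here is a minimal sketch of storing key-value records in binary form and reading them back in order. It uses only the JDK. The class name `KeyValueRecordsSketch` and the record layout (a 4-byte int key followed by a length-prefixed string value) are assumptions made for illustration. This is not the actual on-disk layout of a SequenceFile, which also carries a header, sync markers, and optional compression.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustration only: a hypothetical layout, NOT the real SequenceFile format.
final class KeyValueRecordsSketch {

    // Serialize each (int key, String value) pair as one binary record, in insertion order.
    static byte[] write(Map<Integer, String> records) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            for (Map.Entry<Integer, String> entry : records.entrySet()) {
                out.writeInt(entry.getKey());   // 4-byte key
                out.writeUTF(entry.getValue()); // 2-byte length prefix + string bytes
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Read the records back sequentially until all bytes are consumed.
    static List<String> read(byte[] bytes) {
        List<String> lines = new ArrayList<>();
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(bytes))) {
            while (in.available() > 0) {
                int key = in.readInt();
                String value = in.readUTF();
                lines.add(key + "=" + value);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    public static void main(String[] args) {
        Map<Integer, String> records = new LinkedHashMap<>();
        records.put(1, "tom1");
        records.put(2, "tom2");
        // Prints 1=tom1 and 2=tom2, one per line.
        read(write(records)).forEach(System.out::println);
    }
}
```

Unlike a text file, the reader here never scans for line breaks: it knows the type of each key and value and decodes them directly, which is the same idea behind reading a SequenceFile with typed `Writable` keys and values.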

3. Writing the code that writes and reads the file

    package com.slp;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.SequenceFile;
    import org.apache.hadoop.io.SequenceFile.Reader;
    import org.apache.hadoop.io.SequenceFile.Writer;
    import org.apache.hadoop.io.Text;
    import org.junit.Test;

    public class TestSequenceFile {

        // Writes four (IntWritable, Text) records to a SequenceFile on HDFS.
        @Test
        public void write() throws IOException {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://www.node1.com:9000/");
            FileSystem fs = FileSystem.get(conf);
            Path path = new Path("hdfs://www.node1.com:9000/home/hadoop/seq.seq");
            // This createWriter overload is deprecated in Hadoop 2.x and later, but still works.
            // try-with-resources closes the writer even if an append fails.
            try (Writer writer = SequenceFile.createWriter(fs, conf, path, IntWritable.class, Text.class)) {
                writer.append(new IntWritable(1), new Text("tom1"));
                writer.append(new IntWritable(2), new Text("tom2"));
                writer.append(new IntWritable(3), new Text("tom3"));
                writer.append(new IntWritable(4), new Text("tom4"));
            }
            System.out.println("over");
        }

        // Reads the records back in order and prints each one as key=value.
        @Test
        public void readSeq() throws IOException {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://www.node1.com:9000/");
            FileSystem fs = FileSystem.get(conf);
            Path path = new Path("hdfs://www.node1.com:9000/home/hadoop/seq.seq");
            // This Reader constructor is likewise deprecated in Hadoop 2.x and later.
            try (Reader reader = new SequenceFile.Reader(fs, path, conf)) {
                // The key and value objects are reused for every record.
                IntWritable key = new IntWritable();
                Text value = new Text();
                while (reader.next(key, value)) {
                    System.out.println(key + "=" + value);
                }
            }
        }
    }

The readSeq test method prints:

    1=tom1
    2=tom2
    3=tom3
    4=tom4