Concurrency != Parallelism

前段时间在公司给大家分享GO语言的一些特性,然后讲到了并发概念,大家表示很迷茫,然后分享过程中我拿来了Rob Pike大神的Slides 《Concurrency is not Parallelism》,反而搞的大家更迷茫了,看来大家丢了很多以前的基本知识。后来我就把Pike大神的slide和网上的一些牛人关于Cocurrency和Parallelism的观点做了整理,最终写了本文。

在Rob Pike的《Concurrency is not Parallelism》(http://talks.golang.org/2012/waza.slide)中说到,我们的世界是Parallelism,比如网络,比如大量独立的个人。但是需要协作的。所以就有了并发。很多人认为并发很COOL,认为并发就是并行。但是这种观念在ROB PIKE看来是错误的。比如之前有个人写了一个质数筛选的程序,然后这个运行在4核的平台上,但是运行结果很慢。这个程序员错误的以为GO提供的并发就是并行计算。

在Rob的这个slide中举了一个地鼠烧书的例子,在我和小伙伴们一起看的时候表示不太理解,所以做了一些功课来理解。

为了更容易的搞懂Rob提的并发不是并行的概念,就需要搞懂并发和并行到底有什么区别。

在Rob看来,Concurrency是一种把一些独立的执行过程组合起来的程序设计方法。

而Parallelism是同时执行一些可能相关(结果相关而不是依赖耦合关系的相关)或者独立计算过程。

Rob总结到:

Concurrency is about dealing with lots of things at one.

Parallelism is about doing lots of things at one. Not the same, but related.

Concurrency is about structure, parallelism is about execution.

Concurrency provides a way to structure a solution to solve a problem that may(but not necessarily) be parallelizable.

Rob还提到:

Concurrency is a way to structure a program by breaking it into pieces that can be executed independently.

Communication is the means to coordinate the independent executions.

This is the GO model and it's based on CSP.

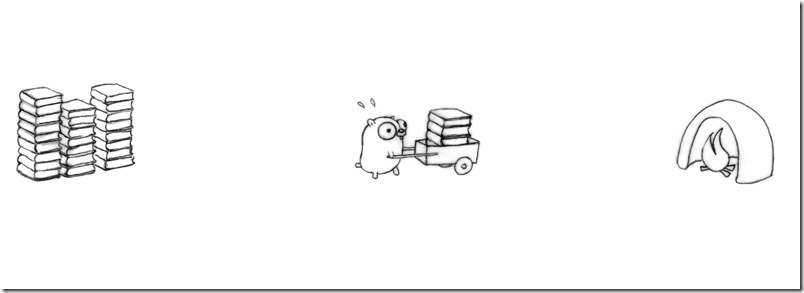

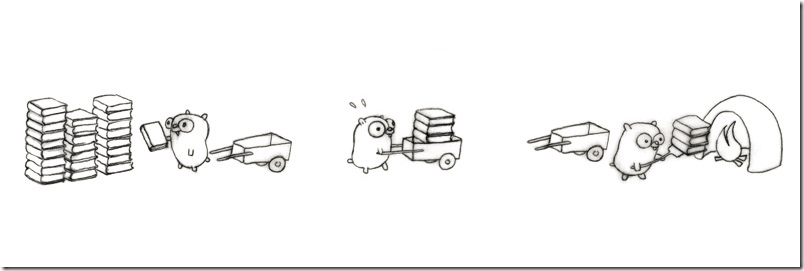

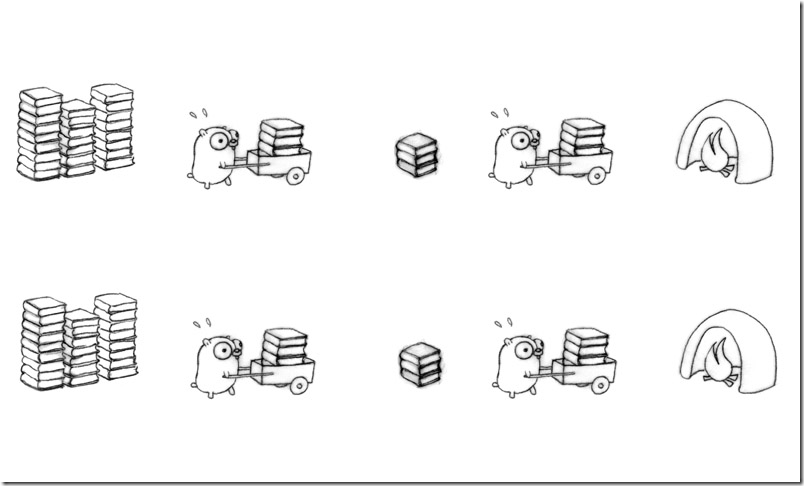

上面的只有一只地鼠推着小车把一堆语言手册运送到焚烧炉中烧掉。如果手册很多,运送距离很远,那么整个过程就要花费很多时间。

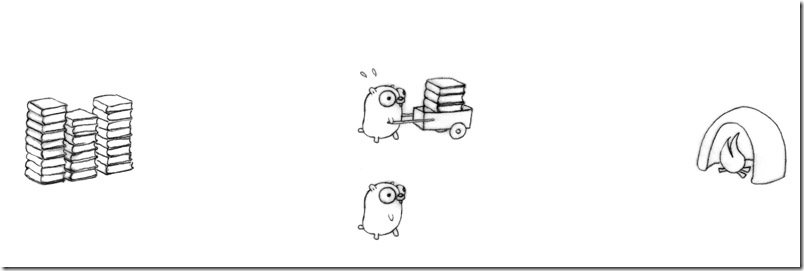

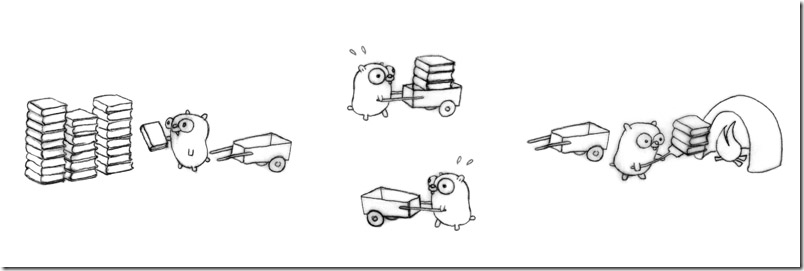

然后增加一只地鼠来一起帮忙做这个事情,但是两个人来做,只有一辆小推车是不够的,所以需要更多的车子

虽然车子增加了,但是有可能手册不够少了,或者炉子不够用了,而且两只地鼠需要做工作的时候协商搬书到车里,或者协商谁先用炉子。工作效率也不是太高。

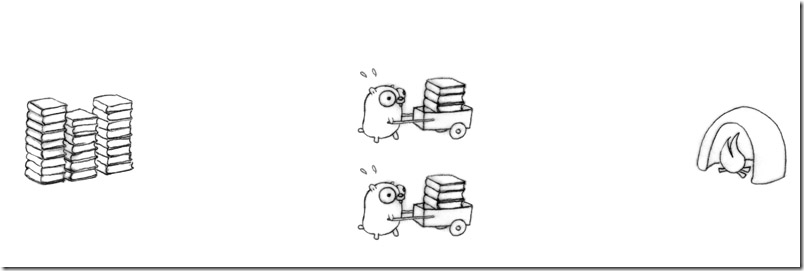

因此我们把它们真正的独立开。它们之间就不需要协商了,也都有足够的手册可以烧了。

虽然上面两只地鼠都分开独立的运行了,但是它们之间有并发成分(Concurrent composition)存在。

假设在一个单位时间内,只有一只地鼠在工作,它们就不是并行(Parallel),它们还是并发的。

这个设计不是为了有意并行而设计,但是可以自然地转化为可并行的(The design is not automatically parallel. However, it's automatically parallelizable)。

而且这个并发成分还暗示了有其他的模型。

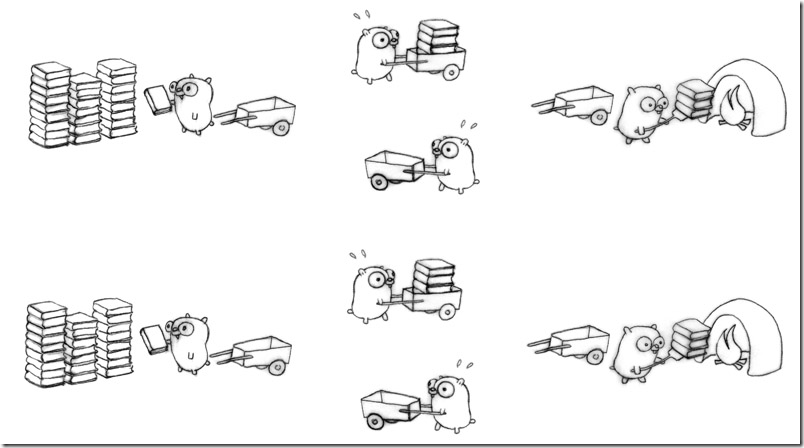

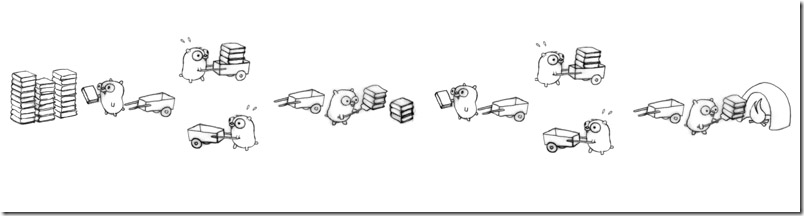

这里三只地鼠活动,可能有些延迟。每个地鼠都是一个独立的执行过程。它们协作交流。

这里新增加了一只地鼠用于推送空车子到装书处。每只地鼠都只做一件事情。这个并发的粒度比以前的更细小了。

如果我们把一切正确的安排好(虽然可能令人难以置信,但是并非不可能),这个设计会比最开始的那一只地鼠的效率要快4倍。

我们在原有的设计里增加了一个并发的执行过程提升了性能。

Different concurrent designs enable different ways to parallelize.

这种并发的设计可以很容易的使这个流程并行的执行。比如下面这样:8只地鼠繁忙的工作。

需要记住的是,即使在单位时间内只有一只地鼠在活动,此时虽然不是并行的,但是这个设计还是一个正确的并发的解决方案。

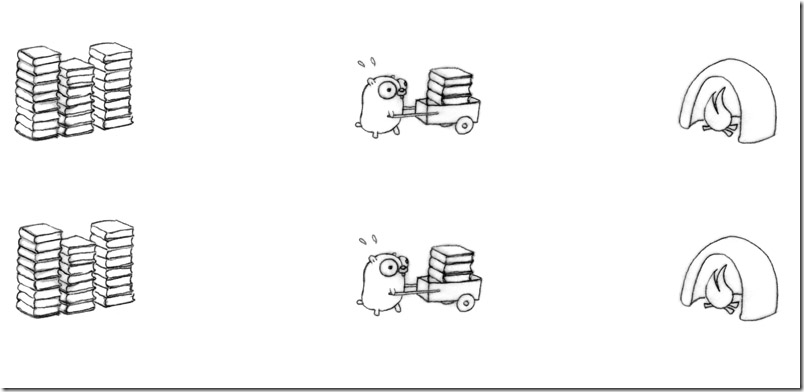

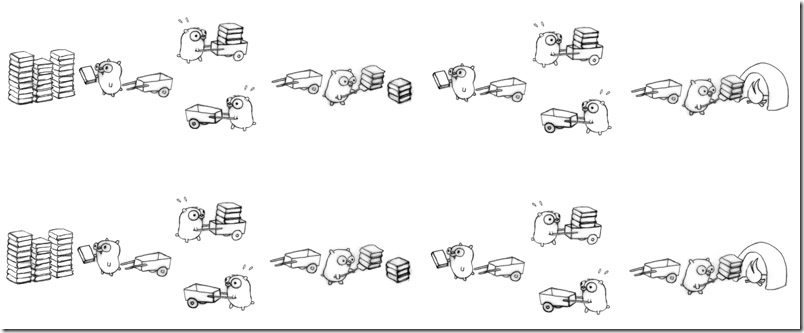

还有另外一种结构来组织两只地鼠的并发因素.即在两只地鼠中间再增加一堆手册。

还有一个组织方式:

然后再并行它:

现在有16只地鼠在工作了!

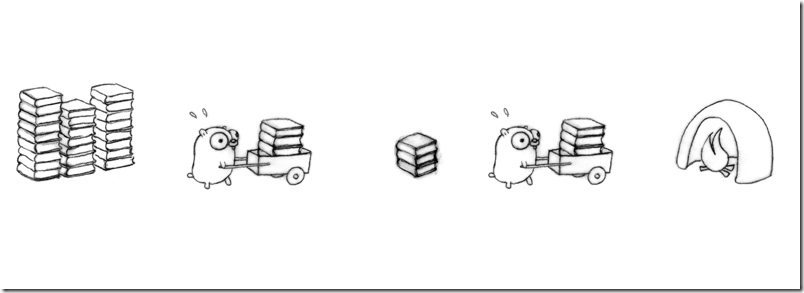

有很多方式可以分解一个过程。这个分解的过程就是并发的设计。一旦我们做好分解,并行化和正确性就会变的容易。

There are many ways to break the processing down. That's concurrent design. Once we have the breakdown, parallelization can fall out and correctness is easy.

一个复杂的问题可以分解为多个可以简单易懂的部分,然后把它们并发地(concurrently)组合在一起。最后得到一个简单易懂,高效的,可伸缩的而且正确的设计。甚至可以并行。

并发是非常强大的,虽然不是并行,但是可以做到并行,而且可以容易的做到并行,甚至是可伸缩性或者其他任何的东西。

把上面地鼠的例子转换到我们计算机中,书堆就好比网页内容,地鼠好比CPU,小推车好比序列化器或者渲染过程,或者网络,而火炉就是最终的消费者,比如浏览器。

就是说浏览器发起请求,地鼠开始工作,把需要的网页内容渲染好,然后通过网络再发回浏览器。这也就变成了一个针对可伸缩的WEB服务做的并发的设计。

在Golang中,Goroutine就是一个与其他goroutine在同一地址空间但是是独立执行的函数。Goroutine不是线程,虽然有点像,但是比线程更轻量。

Goroutine会被多路复用到需要的系统线程中。当一个goroutine阻塞了,其相关的线程也阻塞了,但是其他的goroutine没有阻塞(注:这种阻塞是指一些系统调用,而像网络操作,CHANNEL操作之类的,goroutine会被放入等待队列中,等运行条件成立,比如网络操作完毕,或者从CHANNEL中处理好收发,那么就会被切换到运行队列中运行)

Golang还提供了Channel用于goroutine之间的同步和数据交换。Select语句类似于switch,只是是用于判断哪个channel可以通信。

Golang是真的支持并发,一个Golang可以创建非常多的goroutine,比如一个测试程序创建了130W个goroutine,而且每个goroutine的栈开始的时候都比较小,但是会需扩大或收缩。但是Goroutine不是免费的,但是是轻量的。

总结

用于区别Concurrency和Parallelism的最好的例子是哲学家进餐问题。经典哲学家进餐问题中,如果刀或者叉不够的时候,每个哲学家就需要协商来确保总有一个人能够吃到饭。而如果餐具足够了,那么就需要协商了,大家可以很嗨皮的各吃各的了。

Concurrency与Parallelism最大的区别是要满足Concurrency,每个独立的执行体之间必须有协作,协作工具比如锁或者信号量,或者队列。

而Parallelism虽然也有很多独立的执行体,但是它们不是需要协作的,只需要自己运行,计算出结果,最后被汇总起来就好了。

Concurrency是一种问题分解和组织方法,而Parallelism一种执行方式。两者不同,但是有一定的关系。

我们大部分的问题都可以切割成细小的问题,每个问题作为一个独立的执行体执行,相互间有协作,它们就是Concurrency。

这种设计方式并不是为了Parallelism,但是这种设计可以自然而然的转化为可并行的。而且这种设计方式还能够确保正确性。

Concurrency不管是单核机器还是多核机器,还是网络或者其他平台,都具有更好的伸缩性;

而Parallelism在单核机器上就做不到真正的Parallelism。但是Concurrency在多核机器上反而有可能自然而然的进化为Parallelism。

Concurrency的系统的结果是不确定的(indeterminate),为了保证确定性,需要用锁或者其他方式解决。而Parallelism的结果是确定的。

Golang是支持并发的,可以做到并行的执行Goroutine(即通过runtime.GOMAXPROC设置P的数量)。基于CSP提供了Goroutine,channel和select语句,提倡大家通过Message-Passing进行协作,而不是通过锁等工具。这种协作方式可以带来更好的可扩展性。

在维基百科中,关于Concurrent的定义如下:

Concurrent computing is a form of computing in which several computations are executing during overlapping time periods – concurrently – instead of sequentially (one completing before the next starts). This is a property of a system – this may be an individual program, a computer, or a network – and there is a separate execution point or "thread of control" for each computation ("process"). A concurrent system is one where a computation can make progress without waiting for all other computations to complete – where more than one computation can make progress at "the same time"

Concurrent computing is related to but distinct from parallel computing, though these concepts are frequently confused, and both can be described as "multiple processes executing at the same time". In parallel computing, execution literally occurs at the same instant, for example on separate processors of a multi-processor machine – parallel computing is impossible on a (single-core) single processor, as only one computation can occur at any instant (during any single clock cycle).(This is discounting parallelism internal to a processor core, such as pipelining or vectorized instructions. A single-core, single-processor machine may be capable of some parallelism, such as with a coprocessor, but the processor itself is not.) By contrast, concurrent computing consists of process lifetimes overlapping, but execution need not happen at the same instant.

For example, concurrent processes can be executed on a single core by interleaving the execution steps of each process via time slices: only one process runs at a time, and if it does not complete during its time slice, it is paused, another process begins or resumes, and then later the original process is resumed. In this way multiple processes are part-way through execution at a single instant, but only one process is being executed at that instant.

Concurrent computations may be executed in parallel, for example by assigning each process to a separate processor or processor core, or distributing a computation across a network. This is known as task parallelism, and this type of parallel computing is a form of concurrent computing.

The exact timing of when tasks in a concurrent system are executed depend on the scheduling, and tasks need not always be executed concurrently. For example, given two tasks, T1 and T2:

- T1 may be executed and finished before T2

- T2 may be executed and finished before T1

- T1 and T2 may be executed alternatively (time-slicing)

- T1 and T2 may be executed simultaneously at the same instant of time (parallelism)

The word "sequential" is used as an antonym for both "concurrent" and "parallel"; when these are explicitly distinguished, concurrent/sequential and parallel/serial are used as opposing pairs

Concurrency的定义如下:

In computer science, concurrency is a property of systems in which several computations are executing simultaneously, and potentially interacting with each other.

The computations may be executing on multiple cores in the same chip, preemptively time-shared threads on the same processor, or executed on physically separated processors.

A number of mathematical models have been developed for general concurrent computation including Petri nets,process calculi, the Parallel Random Access Machine model, the Actor model and the Reo Coordination Language.

Because computations in a concurrent system can interact with each other while they are executing, the number of possible execution paths in the system can be extremely large, and the resulting outcome can be indeterminate. Concurrent use of shared resources can be a source of indeterminacy leading to issues such as deadlock, and starvation.

The design of concurrent systems often entails finding reliable techniques for coordinating their execution, data exchange, memory allocation, and execution scheduling to minimize response time and maximize throughput.

关于Parallelism的定义如下:

Parallel computing is a form of computation in which many calculations are carried out simultaneously,

operating on the principle that large problems can often be divided into smaller ones, which are then solved concurrently (“in parallel”).

By contrast, parallel computing by data parallelism may or may not be concurrent computing – a single process may control all computations, in which case it is not concurrent, or the computations may be spread across several processes, in which case this is concurrent. For example, SIMD (single instruction, multiple data) processing is (data) parallel but not concurrent – multiple computations are happening at the same instant (in parallel), but there is only a single process. Examples of this include vector processors and graphics processing units (GPUs). By contrast, MIMD (multiple instruction, multiple data) processing is both data parallel and task parallel, and is concurrent; this is commonly implemented as SPMD (single program, multiple data), where multiple programs execute concurrently and in parallel on different data.

Concurrency有Parallelism所不具备的特性:interacting。并发的程序中,每个执行体之间都是可以相互作用的。